Author Archives: Yves

This post discusses design considerations when interconnecting two tightly coupled fabrics using dark fibers or DWDM, without being limited to Metro distances. Over very long distances, the point-to-point links can also be established using a virtual overlay such as EoMPLS port x-connect; nonetheless the debate remains the same.

Note that this discussion is not limited to one type of network fabric transport: any solution that uses multi-pathing is concerned, such as FabricPath, VxLAN or ACI.

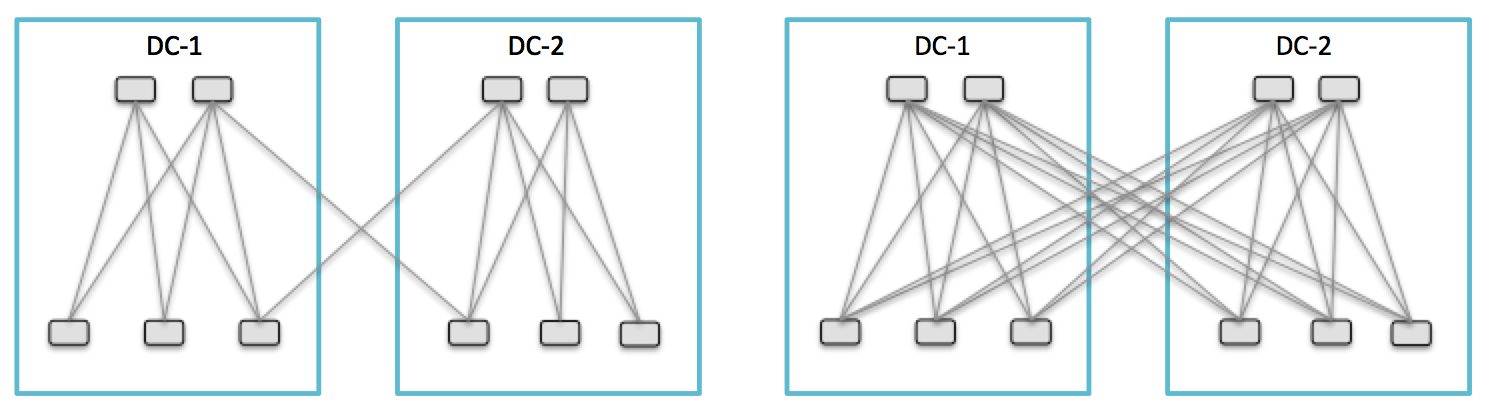

Assume the distance between DC-1 and DC-2 is about 100 km. With the following two design options, it may seem easy to guess which one is the most efficient, but it is not as obvious as it looks, and a bad choice may have a huge impact on some applications. I have met several networkers discussing the best choice between full-mesh and partial-mesh for interconnecting two fabrics. Some folks think that full-mesh is the best solution. Actually, although it depends on the distance between the network fabrics, this is certainly not the most efficient design option for interconnecting them.
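To get a feel for why distance matters here, the following minimal sketch estimates the propagation delay over the inter-DC link. It assumes the common rule of thumb of roughly 5 microseconds per kilometre of fiber (one way); the function names are hypothetical, and real links add equipment and serialization delay on top.

```python
# Rough propagation-delay estimate for an inter-DC fiber link.
# Assumption: about 5 microseconds per km, one way (rule of thumb for
# light in glass). Equipment and queuing delays are ignored.
US_PER_KM = 5.0  # assumed rule-of-thumb value


def one_way_delay_us(distance_km: float) -> float:
    """Propagation delay in microseconds for a single crossing."""
    return distance_km * US_PER_KM


def path_delay_us(inter_dc_crossings: int, distance_km: float) -> float:
    """Delay of a path that crosses the inter-DC link several times."""
    return inter_dc_crossings * one_way_delay_us(distance_km)


if __name__ == "__main__":
    distance = 100  # km, as in the example above
    print(one_way_delay_us(distance))   # 500.0
    print(path_delay_us(2, distance))   # 1000.0
```

Under this assumption, every crossing of a 100 km link costs about 0.5 ms, so any path that crosses the link more often than strictly necessary pays that cost each time.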

Partial-Mesh with transit leafs design (left) Continue reading

A fantastic overview of the Elastic Cloud project from Luca Relandini

http://lucarelandini.blogspot.com/2015/03/the-elastic-cloud-project-porting-to.html?

And don’t miss this excellent recent post which explains how to invoke UCS Director workflows via the northbound REST API.

http://lucarelandini.blogspot.com/2015/03/invoking-ucs-director-workflows-via.html

Since I posted the article “Is VxLAN a DCI solution for LAN extension ?”, clarifying why Multicast-based VxLAN was not suitable as a viable DCI solution, the DCI (Data Center Interconnect) market has become a buzz of activity around the evolution of VxLAN based on a Control Plane (CP).

In this network overlay context, the Control Plane objective is to leverage Unicast transport while processing VTEP and host discovery and distribution processes. This method significantly reduces flooding for Unknown Unicast traffic within and across the fabrics.

The VxLAN protocol (RFC 7348) is aimed at carrying a virtualized Layer 2 network over a tunnel established across an IP network. Hence, from a network overlay point of view, there is no restriction on transporting a Layer 2 frame over an IP network, because that is what network overlays offer.
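As an illustration of the encapsulation itself, here is a minimal sketch of the 8-byte VxLAN header defined in RFC 7348: a flags byte with the I bit set, 24 reserved bits, the 24-bit VNI and 8 more reserved bits. The function names are hypothetical, and the outer Ethernet/IP/UDP headers (UDP destination port 4789) are left out.

```python
import struct
from typing import Tuple

VXLAN_UDP_PORT = 4789        # IANA-assigned destination port (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VxLAN header for a given 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 0: flags in the top byte, then 24 reserved bits.
    # Word 1: VNI in the top 24 bits, then 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VxLAN header to an inner Ethernet frame."""
    return vxlan_header(vni) + inner_frame


def decapsulate(payload: bytes) -> Tuple[int, bytes]:
    """Return (vni, inner_frame) from a VxLAN UDP payload."""
    word0, word1 = struct.unpack("!II", payload[:8])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return word1 >> 8, payload[8:]


if __name__ == "__main__":
    packet = encapsulate(b"inner-ethernet-frame", 5000)
    print(decapsulate(packet))
```

Nothing in this header depends on where the two VTEPs sit, which is why the overlay by itself places no restriction on carrying Layer 2 frames between sites.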

Consequently, the question previously discussed for MCAST-only transport, regarding a new alternative DCI solution, comes up again;

Consequently, this noise requires a clarification on how reliable a DCI solution can be when based on VxLAN Unicast transport using a Control Continue reading

Back to the recent comments on what is “officially” supported or not ?

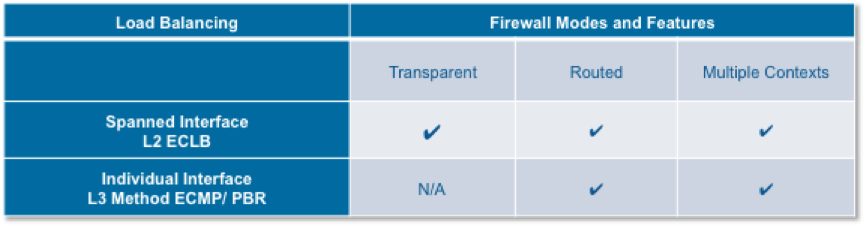

First of all, let’s review the different Firewall forwarding modes officially supported

ASA cluster deployed inside a single data center:

Fig.1 Firewall forwarding mode within a single DC. Please note the firewall routed mode supported with the Layer 2 load balancing (LACP) Spanned Interface mode.

When configured in Routed mode (e.g. default gateway for the machines), the same ASA identifiers (IP/MAC) are distributed among all ASA members of the cluster. When the ASA cluster is stretched across different locations, the Layer 2 distribution mechanism facing the ASA devices is achieved locally using a pair of switches (usually leveraging a Multi-chassis EtherChannel technique such as VSS or vPC).

Subsequently the same virtual MAC address (ASA vMAC) of the ASA cluster is duplicated on both sites and, as a result, it hits the upstream switch from different interfaces.

Fig.2 ASA and duplicate vMAC address
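The effect of a duplicate vMAC on a learning switch can be sketched with a toy model. This is not a real switch API: the class, the port names and the MAC address (taken from the documentation range) are all hypothetical. The switch relearns the same address on a different interface each time a frame arrives from the other site, which is the classic MAC move (flapping) symptom.

```python
class MacTable:
    """Toy model of a switch MAC address table (illustrative only)."""

    def __init__(self) -> None:
        self.entries = {}  # MAC address -> port where it was last seen
        self.moves = 0     # number of times a MAC changed port

    def learn(self, mac: str, port: str) -> None:
        """Record the source MAC of a frame received on a port."""
        previous = self.entries.get(mac)
        if previous is not None and previous != port:
            self.moves += 1  # same MAC seen on another interface
        self.entries[mac] = port


if __name__ == "__main__":
    table = MacTable()
    vmac = "00:00:5e:00:53:01"  # hypothetical ASA vMAC
    # Frames sourced from the same vMAC arrive alternately from the
    # local port-channel and from the DCI-facing interface.
    for port in ["Po1-local", "Po2-dci", "Po1-local", "Po2-dci"]:
        table.learn(vmac, port)
    print(table.moves)  # 3
```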

When the ASA cluster runs the firewall routed mode with Spanned interface method, it breaks the Ethernet rules due to the duplicate MAC address, with risks of affecting the whole network operation. Consequently Continue reading

How can we talk about security service extension across multiple locations without elaborating on path optimisation?

In the previous post, 27 – Active/Active Firewall spanned across multiple sites – Part 1, we demonstrated the integration of ASA clustering in a DCI environment.

We discussed the need to keep the active sessions stateful while the machines migrate to a new location. However, we saw that, after the move, the original DC still receives new requests from outside and then sends them across the broadcast domain (via the extended layer 2) to reach the final destination endpoint in a distant location. This is the expected behavior, and it is due to the fact that the same IP broadcast domain is extended across all sites of concern. Hence the IP network (WAN) is natively unaware of the physical location of the end-node. Routing follows the best path, at the lowest cost, via the most specific route. However, that behavior requires the requested traffic flow to “ping-pong” from site to site, adding pointless latency that may have some performance impact on applications distributed across long distances.

With the increasing demand for dynamic workload mobility Continue reading

Having dual sites or multiple sites in Active/Active mode aims to offer elasticity of resources across different locations, just as with a single logical data center. This solution also brings business continuity with disaster avoidance. This is achieved by manually or dynamically moving the applications and software framework to where resources are available. When “hot”-moving virtual machines from one DC to another, there are some important requirements to take into consideration:

As with several other network and security services, the Continue reading

Just a short note to clarify some VxLAN deployments for a hybrid network (Intra-DC).

As discussed in the previous post, with the software-based VxLAN, only one single VTEP L2 Gateway can be active for the same VxLAN instance.

This means that all end-systems connected to the VLAN mapped to a particular VNID must be confined to the same leaf switch where the VTEP GW is attached. Other end-systems connected to the same VLAN but on different leaf switches, isolated by the layer 3 fabric, cannot communicate with the VTEP L2 GW. This may be a concern with hybrid networks where servers supporting the same application are spread over multiple racks.

Allowing bridging between VNID and VLAN implies that the L2 network domain is spanned between the active VTEP L2 Gateway and all servers of interest that share the same VLAN ID. Among other improvements, VxLAN also aims to contain the layer 2 failure domain to its smallest diameter, leveraging layer 3 for the transport instead, not necessarily both. Although it is certainly a bit antithetical to the purpose of VxLAN, if all leafs are concerned by the same mapping of VNID to VLAN ID, it is Continue reading

One of the questions that many network managers are asking is “Can I use VxLAN stretched across different locations to interconnect two or more physical DCs and form a single logical DC fabric?”

The answer is that the current standard implementation of VxLAN was designed for an intra-DC fabric infrastructure and would require additional tools, as well as a control plane learning process, to fully address the DCI requirements. Consequently, as of today it is not considered a DCI solution.

To understand this statement, we first need to review the main requirements to deploy a solid and efficient DC interconnect solution and dissect the workflow of VxLAN to see how it behaves against these needs. All of the following requirements for a valid DCI LAN extension have already been discussed throughout previous posts, so the following serves as a brief reminder.

The data center network architecture is evolving from the traditional multi-tier architecture, where security and network services are usually placed at the aggregation layer, into a wider, flatter spine-and-leaf network also known as a fabric network (‘Clos’ type), where the network services are distributed to the border leafs.

This evolution aims to improve the following:

While most of the qualifiers above have already been successfully addressed with the traditional multi-tier architecture, today’s data centres are experiencing an increase in East-West data traffic that is the result of: