App Highlight: Hardenize

Hardenize is a comprehensive security tool that continuously monitors the security and configuration of your domain name, email, and website. Ivan Ristić, the author of Hardenize, gave a demo of his app at our Cloudflare London HQ.

Do you know how secure your site is? View a Hardenize report on your website by clicking this button:

Interested in sharing a demo of your app at a meetup? We can help coordinate. Drop a line to [email protected].

Broken packets: IP fragmentation is flawed

As opposed to the public telephone network, the internet has a Packet Switched design. But just how big can these packets be?

CC BY 2.0 image by ajmexico, inspired by

This is an old question and the IPv4 RFCs answer it pretty clearly. The idea was to split the problem into two separate concerns:

What is the maximum packet size that can be handled by operating systems on both ends?

What is the maximum permitted datagram size that can be safely pushed through the physical connections between the hosts?

When a packet is too big for a physical link, an intermediate router might chop it into multiple smaller datagrams in order to make it fit. This process is called "forward" IP fragmentation and the smaller datagrams are called IP fragments.

Image by Geoff Huston, reproduced with permission
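To make the splitting step concrete, here is a minimal sketch of how a router could compute fragment boundaries. It assumes a 20-octet IPv4 header with no options, and the names `Fragment` and `fragment_payload` are hypothetical, chosen only for illustration. It relies on the RFC 791 rule that fragment offsets are expressed in units of 8 octets, so every fragment except the last must carry a multiple of 8 octets of data.

```python
from dataclasses import dataclass

IPV4_HEADER_LEN = 20  # assumption: header without IP options


@dataclass
class Fragment:
    offset_units: int     # fragment offset field, in 8-octet units
    length: int           # octets of payload carried by this fragment
    more_fragments: bool  # value of the MF flag


def fragment_payload(payload_len, mtu, header_len=IPV4_HEADER_LEN):
    """Split a payload of payload_len octets so each fragment fits in mtu."""
    # Largest data size per fragment, rounded down to a multiple of 8 octets.
    max_data = (mtu - header_len) // 8 * 8
    if max_data <= 0:
        raise ValueError("MTU too small to carry any payload")
    fragments = []
    offset = 0
    while offset < payload_len:
        length = min(max_data, payload_len - offset)
        more = offset + length < payload_len
        fragments.append(Fragment(offset // 8, length, more))
        offset += length
    return fragments


for frag in fragment_payload(4000, 1500):
    print(frag)
```

With a 4000-octet payload and a 1500-octet MTU this yields three fragments carrying 1480, 1480 and 1040 octets, at offsets 0, 185 and 370 (in 8-octet units), with the MF flag cleared only on the last one.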

The IPv4 specification defines the minimal requirements. From RFC 791:

Every internet destination must be able to receive a datagram of 576 octets either in one piece or in fragments to be reassembled. [...]

Every internet module must be able to forward a datagram of 68 octets without further fragmentation. [...]

The first value - Continue reading

Why We Terminated Daily Stormer

Earlier today, Cloudflare terminated the account of the Daily Stormer. We've stopped proxying their traffic and stopped answering DNS requests for their sites. We've taken measures to ensure that they cannot sign up for Cloudflare's services ever again.

Our terms of service reserve the right for us to terminate users of our network at our sole discretion. The tipping point for us making this decision was that the team behind Daily Stormer made the claim that we were secretly supporters of their ideology.

Our team has been thorough and has had thoughtful discussions for years about what the right policy was on censoring. Like a lot of people, we’ve felt angry at these hateful people for a long time but we have followed the law and remained content neutral as a network. We could not remain neutral after these claims of secret support by Cloudflare.

Now, having made that decision, let me explain why it's so dangerous.

Where Do You Regulate Content on the Internet?

There are a number of different organizations that work in concert to bring you the Internet. They include:

- Content creators, who author the actual content online.

- Platforms (e.g., Facebook, Wordpress, etc.), where Continue reading

Power outage hits the island of Taiwan. Here’s what we learned.

At approximately 4:50pm local time (8:50am UTC) August 15, a major unexpected power outage hit the island of Taiwan with a significant amount of its power generation facilities going down.

Blackout!

Most of the island was hit with power outages, shortages and rolling blackouts. Street lights stopped functioning, and many of Taipei’s shopping malls and much other infrastructure lost power.

Blackouts of this scale are very rare. Usually, during an outage of this scale, it would be expected that Internet traffic would greatly drop, as houses and businesses lose power and are unable to connect to the Internet. I’ve experienced this in the past, working at consumer ISPs. As households and businesses lose power, so do their modems or routers which connect them to the Internet.

However, during yesterday's outage, something different happened. I'd like to share some insights from yesterday's outage.

Photo: Taipei 101 Dark during the Blackout -

Source: David Chang/EPA

Even when the power is out, the Internet still operates

Most Telecom and Data Center facilities are built with redundancy in mind and have backup power generation. Our Data Center partner, Chief, was able to switch to backup power generation without any service interruption, allowing Continue reading

Recap: How to make a Cloudflare App workshop in Austin

Cloudflare hosted a developer preview workshop in Austin for Cloudflare Apps, taught by Zack Bloom, tech lead of Cloudflare Apps. By popular request, we are making the video from the workshop available.

Want some ideas on what to start with? Check out the idea suggestion list on our Cloudflare Community page. It's a great idea to review our Apps documentation available here.

Want to request a Cloudflare Apps workshop in your city? Please drop a line to [email protected]

Share your works in progress and compare notes with other developers on the community forum.

Moving Forward with Path Forward

In February, I blogged about our first rotation of Path Forward returnships and the awesome people we’ve hired as a result of the program. As a refresher, Path Forward is a nonprofit organization that aims to empower people who’ve taken time away from their careers to focus on caregiving to return to the workforce.

Cloudflare started partnering with Path Forward this past year as a way to expand our talent pool to include the best and the brightest, regardless of any gaps in their career journeys. We truly believe in a diverse group of employees, and that includes those who can bring different perspectives from their time away from the workforce.

We’ve been lucky to have three amazing candidates go through the Path Forward program this time around across our People & Places, Solutions Engineering, and Marketing teams.

Christine Winston (director of partnerships, Path Forward), Me, and Tami Forman (executive director, Path Forward)

The Cloudflare Path Forward Experience

Gigi Chiu did a returnship with the Solutions Engineering team here at Cloudflare after taking some time off to raise her children. Before taking time off, she worked at Motorola and previously worked for a telco in Canada that was helping to Continue reading

The Languages Which Almost Became CSS

This was adapted from a post which originally appeared on the Eager blog. Eager has now become the new Cloudflare Apps.

In fact, it has been a constant source of delight for me over the past year to get to continually tell hordes (literally) of people who want to – strap yourselves in, here it comes – control what their documents look like in ways that would be trivial in TeX, Microsoft Word, and every other common text processing environment: “Sorry, you’re screwed.”

— Marc Andreessen

1994

When Tim Berners-Lee announced HTML in 1991 there was no method of styling pages. How a given HTML tag was rendered was determined by the browser, often with significant input from the user’s preferences. To Continue reading

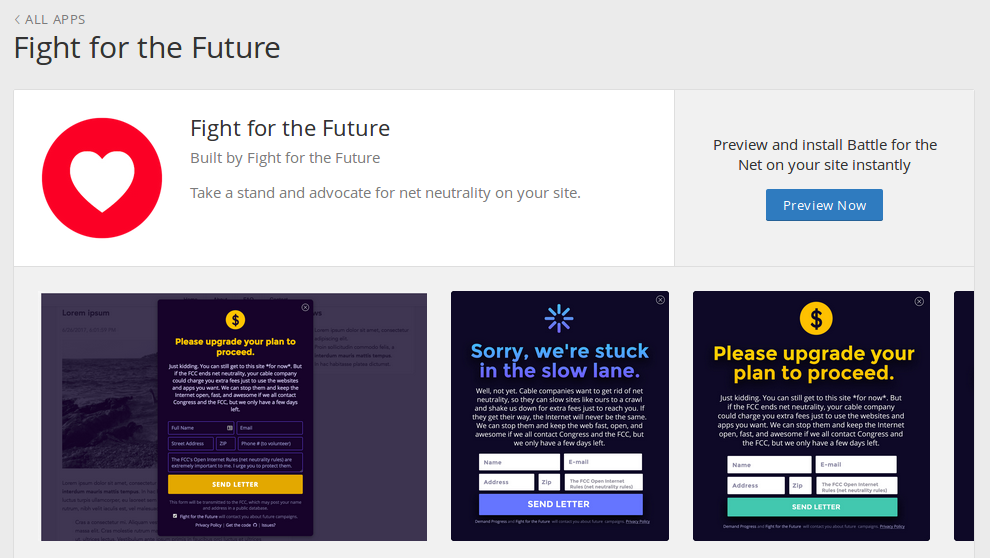

Net Neutrality Day: Cloudflare + Fight for the Future

For Net Neutrality Day on July 12, Fight for the Future (FFTF) launched a Cloudflare App installable for websites all over the world. Sites with it installed saw as many as 178 million page views prompting the users to write to their local congressional representative on the importance of Net Neutrality. All told, the FCC received over 2 million comments and Congress received millions of emails and phone calls.

Screenshot of App Page for FFTF’s Battle for the Net app. Source code for this app.

When our co-founders launched Cloudflare in 2011, it was with a firm belief that the Internet is a place where all voices should be heard. The ability for either an ISP or government to censor the Internet based on their opinions or a profit motive rather than law could pose a huge threat to free speech on the Internet.

Cloudflare is a staunch supporter of Net Neutrality and the work done by Fight for the Future, which shows how effective Internet civic campaigns can be.

To get a heads up on Fight for the Future campaigns in the future, sign up for their mailing list.

See source code for FFTF’s Battle for the Net Cloudflare Continue reading

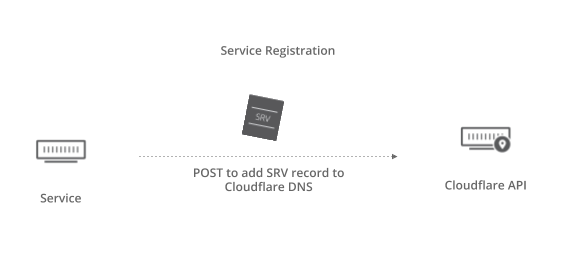

How to use Cloudflare for Service Discovery

Cloudflare runs 3,588 containers, making up 1,264 apps and services that all need to be able to find and discover each other in order to communicate -- a problem solved with service discovery.

You can use Cloudflare for service discovery. By deploying microservices behind Cloudflare, microservices’ origins are masked, secured from DDoS and L7 exploits and authenticated, and service discovery is natively built in. Cloudflare is also cloud platform agnostic, which means that if you have distributed infrastructure deployed across cloud platforms, you still get a holistic view of your services and the ability to manage your security and authentication policies in one place, independent of where services are actually deployed.

How it works

Service locations and metadata are stored in a distributed KV store deployed in all 100+ Cloudflare edge locations (the service registry).

Services register themselves to the service registry when they start up and deregister themselves when they spin down via a POST to Cloudflare’s API. Services provide data in the form of a DNS record, either by giving Cloudflare the address of the service in an A (IPv4) or AAAA (IPv6) record, or by providing more metadata like transport protocol and port in an SRV record.
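As a rough illustration of the registration step, the sketch below builds (but does not send) a POST request that creates an A or AAAA record. It assumes the public Cloudflare v4 DNS records endpoint and bearer-token authentication; whether the service registry described above uses exactly this endpoint is an assumption, and `build_registration`, the zone ID, the token and the hostname are hypothetical placeholders.

```python
import ipaddress
import json
import urllib.request

API_BASE = "https://api.cloudflare.com/client/v4"


def build_registration(zone_id, api_token, name, address, ttl=120):
    """Build a POST request that registers a service address as a DNS record."""
    ip = ipaddress.ip_address(address)  # raises ValueError on a bad address
    # IPv4 addresses go in an A record, IPv6 addresses in an AAAA record.
    record_type = "A" if ip.version == 4 else "AAAA"
    payload = {"type": record_type, "name": name, "content": str(ip), "ttl": ttl}
    return urllib.request.Request(
        f"{API_BASE}/zones/{zone_id}/dns_records",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_registration("ZONE_ID", "API_TOKEN", "billing.example.com", "2001:db8::10")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

A service would send this request with `urllib.request.urlopen(request)` on startup, and issue a matching delete call when it spins down. SRV records, which carry the transport protocol and port, need a different payload shape and are left out of this sketch.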

Aquele Abraço Rio de Janeiro: Cloudflare’s 116th Data Center!

Cloudflare is excited to announce our newest data center in Rio de Janeiro, Brazil. This is our eighth data center in South America, and expands the Cloudflare network to 116 cities across 57 countries. Our newest deployment will improve the performance and security of over six million Internet applications across Brazil, while providing redundancy to our existing São Paulo data center. As additional ISPs peer with us at the local internet exchange (IX.br), we’ll be able to provide even closer coverage to a growing share of Brazil’s Internet users.

A Cloudflare está muito feliz de anunciar o nosso mais recente data center: Rio de Janeiro, Brasil. Este é o nosso oitavo data center na América do Sul, e com ele a rede da Cloudflare se expande por 116 cidades em 57 países. Este lançamento vai acelerar e proteger mais de seis milhões de sites e aplicações web pelo Brasil, também provendo redundância para o nosso data center em São Paulo. Provendo acesso à nossa rede para mais parceiros através do Ponto de Troca de Tráfego (IX-RJ), nós estamos chegando mais perto dos usuários da Internet em todo o Brasil.

History

Rio de Janeiro plays a great role in the Continue reading

Ninth Circuit Rules on National Security Letter Gag Orders

As we’ve previously discussed on this blog, Cloudflare has for years been challenging the constitutionality of the FBI’s use of national security letters (NSLs) to demand user data on a confidential basis. On Monday morning, a three-judge panel of the U.S. Ninth Circuit Court of Appeals released the latest decision in our lawsuit, and endorsed the use of gag orders that severely restrict a company's disclosures related to NSLs.

CC-BY 2.0 image by a200/a77Wells

This is the latest chapter in a court proceeding that dates back to 2013, when Cloudflare initiated a challenge to the previous form of the NSL statute with the help of our friends at EFF. Our efforts regarding NSLs have already seen considerable success. After a federal district court agreed with some of our arguments, Congress passed a new law that addressed transparency, the USA FREEDOM Act. Under the new law, companies were finally permitted to disclose the number of NSLs they receive in aggregate bands of 250. But there were still other concerns about judicial review or limitation of gag orders that remained.

Today’s outcome is disappointing for Cloudflare. NSLs are “administrative subpoenas” that fall short of a warrant, and are frequently accompanied Continue reading

Getting started with Cloudflare Apps

We recently launched our new Cloudflare Apps platform, and we love seeing the community it is building. If you run web services such as websites or APIs, we would like to help make them faster, safer and more reliable with the new Apps platform, leveraging our 115 points of presence around the world. (Skip ahead to the fun part if you already know how Cloudflare Apps works.)

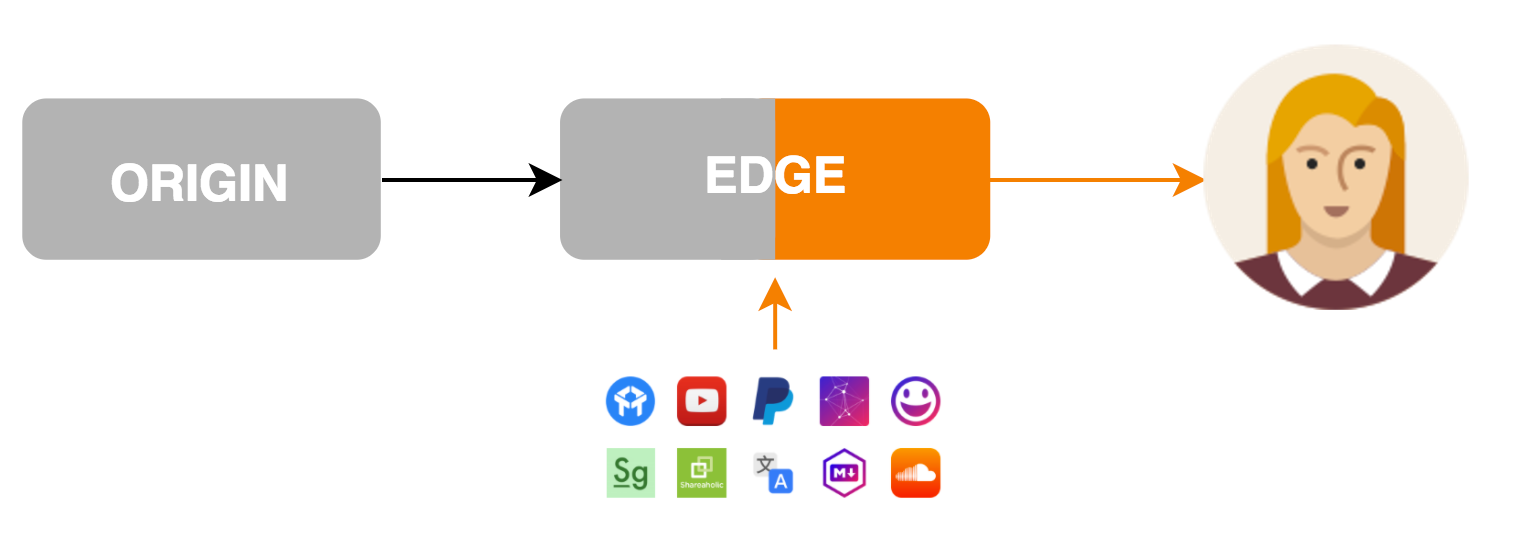

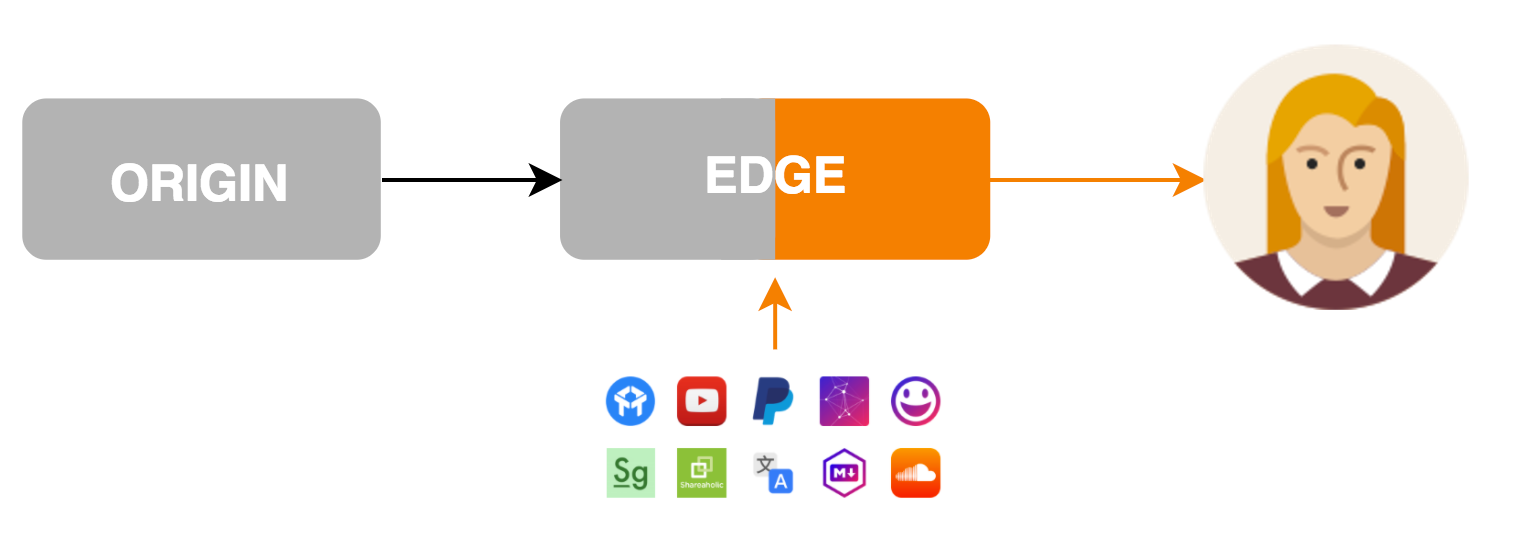

How Cloudflare apps work

Here is a quick diagram of how Cloudflare apps work:

The “Origin” is the server that is providing your services, such as your website or API. The “Edge” represents a point of presence that is closest to your visitors. Cloudflare uses a routing method known as Anycast to ensure the end user, pictured on the far right, is routed through the best network path to our points of presence closest to them around the world.

Historically, to make changes or additions to your site at the edge, you needed to be a Cloudflare employee. Now with apps, anyone can quickly make changes to the pages rendered to their users via JavaScript and CSS. Today, you Continue reading

High-reliability OCSP stapling and why it matters

At Cloudflare our focus is making the internet faster and more secure. Today we are announcing a new enhancement to our HTTPS service: High-Reliability OCSP stapling. This feature is a step towards enabling an important security feature on the web: certificate revocation checking. Reliable OCSP stapling also improves connection times by up to 30% in some cases. In this post, we’ll explore the importance of certificate revocation checking in HTTPS, the challenges involved in making it reliable, and how we built a robust OCSP stapling service.

Why revocation is hard

Digital certificates are the cornerstone of trust on the web. A digital certificate is like an identification card for a website. It contains identity information including the website’s hostname along with a cryptographic public key. In public key cryptography, each public key has an associated private key. This private key is kept secret by the site owner. For a browser to trust an HTTPS site, the site’s server must provide a certificate that is valid for the site’s hostname and a proof of control of the certificate’s private key. If someone gets access to a certificate’s private key, they can impersonate the site. Private key compromise is a serious risk Continue reading

Participate in the Net Neutrality Day of Action

We at Cloudflare strongly believe in network neutrality, the principle that networks should not discriminate against content that passes through them. We’ve previously posted on our views on net neutrality and the role of the FCC here and here.

In May, the FCC took a first step toward revoking bright-line rules it put in place in 2015 to require ISPs to treat all web content equally. The FCC is seeking public comment on its proposal to eliminate the legal underpinning of the 2015 rules, revoking the FCC's authority to implement and enforce net neutrality protections. Public comments are also requested on whether any rules are needed to prevent ISPs from blocking or throttling web traffic, or creating “fast lanes” for some internet traffic.

To raise awareness about the FCC's efforts, July 12th will be “Internet-Wide Day of Action to save Net Neutrality.” Led by the group Battle for the Net, participating websites will show the world what the web would look like without net neutrality by displaying an alert on their homepage. Website users will be encouraged to contact Congress and the FCC in support of net neutrality.

We wanted to make sure our users had an opportunity to participate in this Continue reading

How to make your site HTTPS-only

The Internet is getting more secure every day as people enable HTTPS, the secure version of HTTP, on their sites and services. Last year, Mozilla reported that the percentage of requests made by Firefox using encrypted HTTPS passed 50% for the first time. HTTPS has numerous benefits that are not available over unencrypted HTTP, including improved performance with HTTP/2, SEO benefits for search engines like Google and the reassuring lock icon in the address bar.

So how do you add HTTPS to your site or service? That’s simple: Cloudflare offers free and automatic HTTPS support for all customers with no configuration. Sign up for any plan and Cloudflare will issue an SSL certificate for you and serve your site over HTTPS.

HTTPS-only

Enabling HTTPS does not mean that all visitors are protected. If a visitor types your website’s name into the address bar of a browser or follows an HTTP link, it will bring them to the insecure HTTP version of your website. In order to make your site HTTPS-only, you need to redirect visitors from the HTTP to the HTTPS version of your site.
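For readers who want to see what such a redirect looks like at the origin, here is a minimal sketch using Python's standard `http.server` module. It is not how Cloudflare implements the redirect; `https_location` and `RedirectHandler` are hypothetical names, and the sketch assumes the `Host` header holds a plain hostname with an optional port (IPv6 literals are not handled).

```python
from http.server import BaseHTTPRequestHandler


def https_location(host, path):
    """Return the HTTPS URL equivalent to an HTTP request for host and path."""
    hostname = host.split(":", 1)[0]  # drop an explicit port such as ":80"
    return f"https://{hostname}{path}"


class RedirectHandler(BaseHTTPRequestHandler):
    """Answer every plain-HTTP GET or HEAD with a permanent redirect."""

    def do_GET(self):
        host = self.headers.get("Host")
        if not host:
            # Without a Host header there is no target to redirect to.
            self.send_error(400, "Missing Host header")
            return
        self.send_response(301)
        self.send_header("Location", https_location(host, self.path))
        self.end_headers()

    do_HEAD = do_GET


print(https_location("example.com:80", "/blog?page=2"))
```

To try it locally, pass `RedirectHandler` to `http.server.HTTPServer(("", 8080), RedirectHandler)` and call `serve_forever()`. The path and query string are preserved so that deep links keep working after the redirect.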

Going HTTPS-only should be as easy as a click of a button, so we Continue reading

Three little tools: mmsum, mmwatch, mmhistogram

In a recent blog post, my colleague Marek talked about some SSDP-based DDoS activity we'd been seeing recently. In that blog post he used a tool called mmhistogram to output an ASCII histogram.

That tool is part of a small suite of command-line tools that can be handy when messing with data. Since a reader asked for them to be open sourced... here they are.

mmhistogram

Suppose you have the following CSV of the ages of major Star Wars characters at the time of Episode IV:

Anakin Skywalker (Darth Vader),42

Boba Fett,32

C-3PO,32

Chewbacca,200

Count Dooku,102

Darth Maul,54

Han Solo,29

Jabba the Hutt,600

Jango Fett,66

Jar Jar Binks,52

Lando Calrissian,31

Leia Organa (Princess Leia),19

Luke Skywalker,19

Mace Windu,72

Obi-Wan Kenobi,57

Palpatine,82

Qui-Gon Jinn,92

R2-D2,32

Shmi Skywalker,72

Wedge Antilles,21

Yoda,896

You can get an ASCII histogram of the ages as follows using the mmhistogram tool.

$ cut -d, -f2 epiv | mmhistogram -t "Age"

Age min:19.00 avg:123.90 med=54.00 max:896.00 dev:211.28 count:21

Age:

value |-------------------------------------------------- count

0 | 0

1 | 0

2 | 0

4 | 0

8 | 0

16 |************************************************** 8

32 | ************************* 4

64 | ************************************* 6

128 | ****** 1

256 Continue reading

A container identity bootstrapping tool

Everybody has secrets. Software developers have many. Often these secrets—API tokens, TLS private keys, database passwords, SSH keys, and other sensitive data—are needed to make a service run properly and interact securely with other services. Today we’re sharing a tool that we built at Cloudflare to securely distribute secrets to our Dockerized production applications: PAL.

PAL is available on Github: https://github.com/cloudflare/pal.

Although PAL is not currently under active development, we have found it a useful tool and we think the community will benefit from its source being available. We believe that it's better to open source this tool and allow others to use the code than leave it hidden from view and unmaintained.

Secrets in production

CC BY 2.0 image by Personal Creations

How do you get these secrets to your services? If you’re the only developer, or one of a few on a project, you might put the secrets with your source code in your version control system. But if you just store the secrets in plain text with your code, everyone with access to your source repository can read them and use them for nefarious purposes (for example, stealing an API token and pretending to be Continue reading

Stupidly Simple DDoS Protocol (SSDP) generates 100 Gbps DDoS

Last month we shared statistics on some popular reflection attacks. Back then the average SSDP attack size was ~12 Gbps and the largest SSDP reflection we recorded was:

- 30 Mpps (millions of packets per second)

- 80 Gbps (billions of bits per second)

- using 940k reflector IPs

This changed a couple of days ago when we noticed an unusually large SSDP amplification. It's worth deeper investigation since it crossed the symbolic threshold of 100 Gbps.

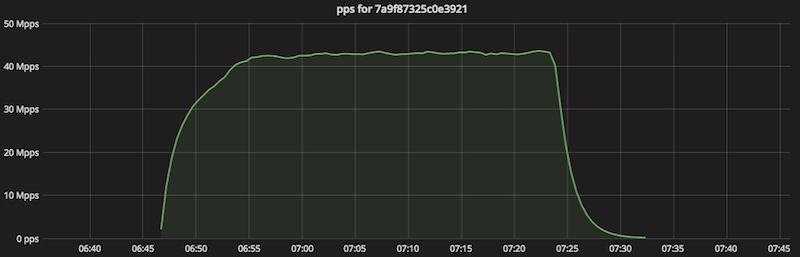

The packets per second chart during the attack looked like this:

The bandwidth usage:

This packet flood lasted 38 minutes. According to our sampled netflow data it utilized 930k reflector servers. We estimate that during the 38 minutes of the attack each reflector sent 112k packets to Cloudflare.
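As a back-of-the-envelope check, the rounded figures above imply a total packet count and an average packet rate over the whole attack. This is only a sketch: the inputs are the rounded estimates quoted in the text, so the result is a rough average and says nothing about the peak.

```python
REFLECTORS = 930_000             # reflector servers seen in sampled netflow
PACKETS_PER_REFLECTOR = 112_000  # estimated packets sent by each reflector
DURATION_SECONDS = 38 * 60       # the flood lasted 38 minutes

total_packets = REFLECTORS * PACKETS_PER_REFLECTOR
average_pps = total_packets / DURATION_SECONDS

print(f"total packets: {total_packets:.3e}")
print(f"average rate:  {average_pps / 1e6:.1f} Mpps")
```

That works out to roughly 104 billion packets, or about 45.7 million packets per second averaged over the 38 minutes.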

The reflector servers are across the globe, with a large presence in Argentina, Russia and China. Here are the unique IPs per country:

$ cat ips-nf-ct.txt|uniq|cut -f 2|sort|uniq -c|sort -nr|head

439126 CN

135783 RU

74825 AR

51222 US

41353 TW

32850 CA

19558 MY

18962 CO

14234 BR

10824 KR

10334 UA

9103 IT

...
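The same tally can be sketched in Python. This assumes the input file is tab-separated with the country code in the second column, which is what `cut -f 2` implies; `top_countries` is a hypothetical helper name.

```python
from collections import Counter
from itertools import groupby


def top_countries(lines, n=10):
    """Count lines per country code after collapsing adjacent duplicates."""
    counts = Counter()
    # groupby on the raw lines mirrors `uniq`: only adjacent duplicates merge.
    for line, _group in groupby(raw.rstrip("\n") for raw in lines):
        fields = line.split("\t")
        if len(fields) >= 2:  # unlike cut, lines without a tab are skipped
            counts[fields[1]] += 1
    # most_common mirrors `sort | uniq -c | sort -nr | head`.
    return counts.most_common(n)


sample = ["192.0.2.1\tCN\n", "192.0.2.1\tCN\n", "192.0.2.2\tCN\n", "198.51.100.7\tRU\n"]
print(top_countries(sample))
```

Like the shell pipeline, this only removes duplicate lines that are adjacent, so the input is assumed to be grouped by IP already.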

The reflector IP distribution across ASNs is typical. It pretty much follows the world’s largest residential ISPs:

$ cat ips-nf-asn.txt |uniq|cut -f 2|sort|uniq Continue reading

Announcing the Cloudflare Apps Platform and Developer Fund

When we started Cloudflare we had no idea if anyone would validate our core idea. Our idea was that everyone should have the ability to be as fast and secure as the Internet giants like Google, Facebook, and Microsoft. Six years later, it's incredible how far that core idea has taken us.

CC BY-SA 2.0 image by Mobilus In Mobili

Today, Cloudflare runs one of the largest global networks. We have data centers in 115 cities around the world and continue to expand. We've built a core service that delivers performance, security, availability, and insight to more than 6 million users.

Democratizing the Internet

From the beginning, our goal has been to democratize the Internet. Today we're taking another step toward that goal with the launch of the Cloudflare Apps Platform and the Cloudflare Developer Fund. To understand that, you have to understand where we started.

When we started Cloudflare we needed two things: a collection of users for the service, and finances to help us fund our development. In both cases, people were taking a risk on Cloudflare. Our first users came from Project Honey Pot, which Lee Holloway and I created back in 2004. Members Continue reading

Announcing the New Cloudflare Apps

Today we’re excited to announce the next generation of Cloudflare Apps. Cloudflare Apps is an open platform of tools to build a high-quality website. It’s a place where every website owner can select from a vast catalog of Apps which can improve their websites and internet properties in every way imaginable. Selected apps can be previewed and installed instantly with just a few clicks, giving every website owner the power of technical expertise, and every developer the platform only Cloudflare can provide.

Apps can modify content and layout on the page they’re installed on, communicate with external services and dramatically improve websites. Imagine Google Analytics, YouTube videos, in-page chat tools, widgets, themes and every other business which can be built by improving websites. All of these and more can be done with Cloudflare Apps.

Cloudflare Apps makes it possible for a developer in her basement to build the next great new tool and get it on a million websites overnight. With Cloudflare Apps, even the smallest teams can get massive distribution for their apps on the web so that the best products win. With your help we will make it possible for developers like you to build a new Continue reading