Author Archives: Vincent Bernat


I have unearthed a few old articles typed during my adolescence, between 1996 and 1998. Unremarkable at the time, these pages now compose, three decades later, the chronicle of a vanished era.1

The word “blog” does not exist yet. Wikipedia is yet to come. Google has not been born. AltaVista reigns over searches, while already struggling to embrace the nascent immensity of the web2. To meet someone, you have to arrange it in advance and plan your route on paper maps. 🗺️

The web is taking off. The CSS specification has just emerged, and HTML tables still serve for page layout. Cookies and advertising banners are making their appearance. Pages are adorned with music and videos, forcing browsers to arm themselves with plugins. Netscape Navigator sits on 86% of the territory, but Windows 95 now bundles Internet Explorer to catch up quickly. Facing this offensive, Netscape open-sources its browser.

France falls behind. Outside universities, Internet access remains expensive and laborious. Minitel still reigns, offering phone directory, train tickets, remote shopping. This was not yet possible with the Internet: buying a CD online was a pipe dream. Encryption suffers from inappropriate regulation: the DES algorithm is capped at 40 bits and Continue reading

Standard RAID solutions waste space when disks have different sizes. Linux software RAID with LVM uses the full capacity of each disk and lets you grow storage by replacing one or two disks at a time.

We start with four disks of equal size:

```
$ lsblk -Mo NAME,TYPE,SIZE
NAME TYPE SIZE
vda  disk 101M
vdb  disk 101M
vdc  disk 101M
vdd  disk 101M
```

We create one partition on each of them:

```
$ sgdisk --zap-all --new=0:0:0 -t 0:fd00 /dev/vda
$ sgdisk --zap-all --new=0:0:0 -t 0:fd00 /dev/vdb
$ sgdisk --zap-all --new=0:0:0 -t 0:fd00 /dev/vdc
$ sgdisk --zap-all --new=0:0:0 -t 0:fd00 /dev/vdd
$ lsblk -Mo NAME,TYPE,SIZE
NAME   TYPE SIZE
vda    disk 101M
└─vda1 part 100M
vdb    disk 101M
└─vdb1 part 100M
vdc    disk 101M
└─vdc1 part 100M
vdd    disk 101M
└─vdd1 part 100M
```

We set up a RAID 5 device by assembling the four partitions:1

```
$ mdadm --create /dev/md0 --level=raid5 --bitmap=internal --raid-devices=4 \
>   /dev/vda1 /dev/vdb1 /dev/vdc1 /dev/vdd1
$ lsblk -Mo NAME,TYPE,SIZE
    NAME   TYPE SIZE
    vda    disk 101M
┌┈▶ └─vda1 part 100M
┆   vdb    disk 101M
├┈▶ └─vdb1 part 100M
┆
```

Continue reading
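The excerpt stops before the mixed-size layout, so here is a rough Go sketch of the capacity arithmetic behind the usual approach: cut every disk at each distinct disk size, build a RAID 5 array on each horizontal layer spanning at least three disks (RAID 1 if only two), and let LVM concatenate the arrays. The layering rule and the `usableCapacity` name are assumptions for illustration, not necessarily the exact layout used in the article; sizes are in MB and partition overhead is ignored.

```go
package main

import (
	"fmt"
	"sort"
)

// usableCapacity estimates the redundant capacity obtained by slicing disks
// into horizontal layers at each distinct disk size. A layer spanning three or
// more disks becomes a RAID 5 array, a layer spanning two disks becomes a
// RAID 1 array, and a layer present on a single disk is left unused because it
// cannot be made redundant. LVM would then concatenate the arrays.
func usableCapacity(sizes []int) int {
	s := append([]int(nil), sizes...) // do not reorder the caller's slice
	sort.Ints(s)
	total, prev := 0, 0
	for i, size := range s {
		height := size - prev
		if height == 0 {
			continue
		}
		disks := len(s) - i // number of disks at least this large
		switch {
		case disks >= 3:
			total += (disks - 1) * height // RAID 5: one disk's worth of parity
		case disks == 2:
			total += height // RAID 1: mirror
		}
		prev = size
	}
	return total
}

func main() {
	fmt.Println(usableCapacity([]int{100, 100, 100, 100}))
	fmt.Println(usableCapacity([]int{100, 100, 200, 200}))
	fmt.Println(usableCapacity([]int{100, 200, 300, 400}))
}
```

With four equal 100 MB disks, it returns 300, like a plain RAID 5 over the four partitions. With disks of 100, 200, 300, and 400 MB, it returns 600, whereas a single RAID 5 limited by the smallest disk would only yield 300.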

Akvorado collects sFlow and IPFIX flows over UDP. Because UDP does not retransmit lost packets, Akvorado needs to process them quickly. It runs several workers listening to the same port. The kernel should load-balance received packets fairly between these workers. However, this does not work as expected. A couple of workers exhibit high packet loss:

```
$ curl -s 127.0.0.1:8080/api/v0/inlet/metrics \
>   | sed -n s/akvorado_inlet_flow_input_udp_in_dropped//p
packets_total{listener="0.0.0.0:2055",worker="0"} 0
packets_total{listener="0.0.0.0:2055",worker="1"} 0
packets_total{listener="0.0.0.0:2055",worker="2"} 0
packets_total{listener="0.0.0.0:2055",worker="3"} 1.614933572278264e+15
packets_total{listener="0.0.0.0:2055",worker="4"} 0
packets_total{listener="0.0.0.0:2055",worker="5"} 0
packets_total{listener="0.0.0.0:2055",worker="6"} 9.59964121598348e+14
packets_total{listener="0.0.0.0:2055",worker="7"} 0
```

eBPF can help by implementing an alternate balancing algorithm. 🐝

There are three methods to load-balance UDP packets across workers:

SO_REUSEPORT socket option.

SO_REUSEPORT option

Tom Herbert added the SO_REUSEPORT socket Continue reading
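Why would a couple of workers take all the losses? One plausible mechanism, sketched here in Go, is that SO_REUSEPORT spreads UDP packets by hashing the source and destination addresses and ports, so every datagram from a given exporter, sent from a fixed source port, lands on the same worker. The FNV hash and the `pickWorker`, `distribute`, and `sampleSetup` names are stand-ins chosen for this illustration; the kernel uses its own hash function.

```go
package main

import (
	"fmt"
	"hash/fnv"
	"net/netip"
)

// pickWorker imitates, in spirit only, how SO_REUSEPORT selects a socket: hash
// the source and destination address/port pairs and reduce the result modulo
// the number of sockets. FNV-1a is a stand-in for the kernel's internal hash.
func pickWorker(src, dst netip.AddrPort, workers int) int {
	h := fnv.New32a()
	h.Write([]byte(src.String()))
	h.Write([]byte(dst.String()))
	return int(h.Sum32() % uint32(workers))
}

// distribute counts how many packets each worker receives when every exporter
// sends the given number of packets from a fixed source port.
func distribute(exporters []netip.AddrPort, dst netip.AddrPort, workers, packets int) []int {
	load := make([]int, workers)
	for i := 0; i < packets; i++ {
		for _, src := range exporters {
			load[pickWorker(src, dst, workers)]++
		}
	}
	return load
}

// sampleSetup returns two hypothetical exporters and the collector address.
func sampleSetup() ([]netip.AddrPort, netip.AddrPort) {
	exporters := []netip.AddrPort{
		netip.MustParseAddrPort("198.51.100.1:50000"),
		netip.MustParseAddrPort("198.51.100.2:50000"),
	}
	return exporters, netip.MustParseAddrPort("192.0.2.1:2055")
}

func main() {
	exporters, dst := sampleSetup()
	fmt.Println(distribute(exporters, dst, 8, 1000))
}
```

However many packets the two exporters send, at most two of the eight workers see any traffic. This is the kind of imbalance an alternate balancing algorithm in eBPF is meant to address.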

Go’s embed feature lets you bundle static assets into an executable, but it stores them uncompressed. This wastes space: a web interface with documentation can bloat your binary by dozens of megabytes. A proposal to optionally enable compression was declined because it is difficult to handle all use cases. One solution? Put all the assets into a ZIP archive! 🗜️

The Go standard library includes a package to read and write ZIP archives. It contains a function that turns a ZIP archive into an io/fs.FS structure that can replace embed.FS in most contexts.1

```go
package embed

import (
	"archive/zip"
	"bytes"
	_ "embed"
	"fmt"
	"io/fs"
	"sync"
)

//go:embed data/embed.zip
var embeddedZip []byte

var dataOnce = sync.OnceValue(func() *zip.Reader {
	r, err := zip.NewReader(bytes.NewReader(embeddedZip), int64(len(embeddedZip)))
	if err != nil {
		panic(fmt.Sprintf("cannot read embedded archive: %s", err))
	}
	return r
})

func Data() fs.FS {
	return dataOnce()
}
```

We can build the embed.zip archive with a rule in a Makefile. We specify the files Continue reading
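The trick works because `*zip.Reader` already implements `fs.FS`, so code written against `embed.FS` keeps working. The self-contained Go sketch below builds a small archive in memory (standing in for the `data/embed.zip` file produced by make) and reads a file back through `fs.ReadFile`. The helper names `buildZip` and `readAsset` are hypothetical.

```go
package main

import (
	"archive/zip"
	"bytes"
	"fmt"
	"io/fs"
)

// buildZip creates an in-memory ZIP archive from name → content pairs.
// zip.Writer.Create compresses each entry with Deflate. In a real project,
// the archive would come from a build step and be embedded with //go:embed.
func buildZip(files map[string]string) ([]byte, error) {
	var buf bytes.Buffer
	w := zip.NewWriter(&buf)
	for name, content := range files {
		f, err := w.Create(name)
		if err != nil {
			return nil, err
		}
		if _, err := f.Write([]byte(content)); err != nil {
			return nil, err
		}
	}
	if err := w.Close(); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

// readAsset opens the archive as an fs.FS and reads one file from it, the
// same way code written against embed.FS would.
func readAsset(archive []byte, name string) (string, error) {
	r, err := zip.NewReader(bytes.NewReader(archive), int64(len(archive)))
	if err != nil {
		return "", err
	}
	var fsys fs.FS = r // *zip.Reader implements fs.FS
	b, err := fs.ReadFile(fsys, name)
	if err != nil {
		return "", err
	}
	return string(b), nil
}

func main() {
	archive, err := buildZip(map[string]string{
		"index.html":    "<h1>Hello</h1>",
		"docs/intro.md": "# Introduction",
	})
	if err != nil {
		panic(err)
	}
	content, err := readAsset(archive, "docs/intro.md")
	if err != nil {
		panic(err)
	}
	fmt.Println(content) // prints "# Introduction"
}
```

Since the result is an `fs.FS`, it can also be handed to `http.FileServer(http.FS(...))` to serve the assets.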

Akvorado 2.0 was released today! Akvorado collects network flows with IPFIX and sFlow. It enriches flows and stores them in a ClickHouse database. Users can browse the data through a web console. This release introduces an important architectural change and other smaller improvements. Let’s dive in! 🤿

```
$ git diff --shortstat v1.11.5
 493 files changed, 25015 insertions(+), 21135 deletions(-)
```

The major change in Akvorado 2.0 is splitting the inlet service into two parts: the inlet and the outlet. Previously, the inlet handled all flow processing: receiving, decoding, and enrichment. Flows were then sent to Kafka for storage in ClickHouse:

Network flows reach the inlet service using UDP, an unreliable protocol. The inlet must process them fast enough to avoid losing packets. To handle a high number of flows, the inlet spawns several sets of workers to receive flows, fetch metadata, and assemble enriched flows for Kafka. Many configuration options existed for scaling, which increased complexity for users. The code needed to avoid blocking at any cost, making the processing pipeline complex and sometimes unreliable, particularly the BMP receiver.1 Adding new features became difficult Continue reading
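To make the split concrete, here is a toy Go pipeline, assuming, as the excerpt suggests, that the new inlet only receives flows and forwards them without decoding, while the outlet performs the slow decoding and enrichment. A buffered channel stands in for Kafka, and the `rawFlow` type and the functions are hypothetical; this is not Akvorado's code.

```go
package main

import "fmt"

// rawFlow is a hypothetical envelope: the inlet tags each UDP payload with
// the exporter address and forwards it without decoding it.
type rawFlow struct {
	Exporter string
	Payload  []byte
}

// inlet does the minimum amount of work per datagram so it can keep up with
// UDP. The channel stands in for Kafka.
func inlet(datagrams []rawFlow, queue chan<- rawFlow) {
	for _, d := range datagrams {
		queue <- d
	}
	close(queue)
}

// outlet consumes the queue at its own pace and does the slow part. Here,
// "decoding and enrichment" is reduced to summing payload sizes per exporter.
func outlet(queue <-chan rawFlow) map[string]int {
	bytesPerExporter := make(map[string]int)
	for f := range queue {
		bytesPerExporter[f.Exporter] += len(f.Payload)
	}
	return bytesPerExporter
}

// run wires both stages together with a queue of the given capacity.
func run(datagrams []rawFlow, capacity int) map[string]int {
	queue := make(chan rawFlow, capacity)
	go inlet(datagrams, queue)
	return outlet(queue)
}

func main() {
	datagrams := []rawFlow{
		{"192.0.2.1", make([]byte, 1400)},
		{"192.0.2.2", make([]byte, 600)},
		{"192.0.2.1", make([]byte, 1400)},
	}
	fmt.Println(run(datagrams, 16))
}
```

The queue decouples the two stages: a slow enrichment step no longer blocks the receiving side, which only has to copy datagrams.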

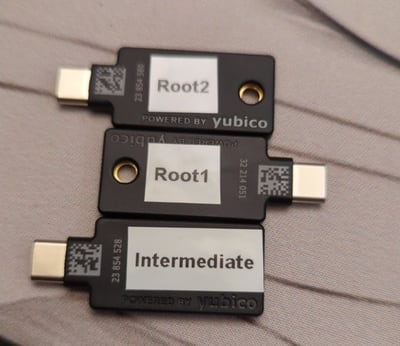

An offline PKI enhances security by physically isolating the certificate authority from network threats. A YubiKey is a low-cost solution to store a root certificate. You also need an air-gapped environment to operate the root CA.

This post describes an offline PKI system using the following components:

You can add more YubiKeys as backups of the root CA if needed. This is unnecessary for the intermediate CA: if its YubiKey gets destroyed, you can generate a new intermediate CA.

offline-pki is a small Python application to manage an offline PKI. It relies on yubikey-manager to manage YubiKeys and cryptography for cryptographic operations not executed on the YubiKeys. The application has some opinionated design choices. Notably, the cryptography is hard-coded to use the NIST P-384 elliptic curve.

The first step is to reset all your YubiKeys:

```
$ offline-pki yubikey reset
This will reset the connected YubiKey. Are you sure? [y/N]: y
New PIN code:
Repeat for confirmation:
```

Continue reading
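offline-pki itself is written in Python and keeps private keys on YubiKeys. As a rough illustration of the certificate profile such a root CA typically uses (a P-384 key, CA basic constraints, and certificate-signing key usage), here is a Go sketch relying only on the standard library. The key is generated in memory, and both the path length of 1 (room for one intermediate CA) and the `newRootCA` name are assumptions; nothing here reflects how offline-pki stores keys.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/x509"
	"crypto/x509/pkix"
	"fmt"
	"math/big"
	"time"
)

// newRootCA creates a self-signed root CA certificate with a P-384 key.
// Unlike offline-pki, the private key lives in memory instead of on a
// YubiKey: this only illustrates the certificate profile.
func newRootCA(commonName string, validity time.Duration) (*x509.Certificate, *ecdsa.PrivateKey, error) {
	key, err := ecdsa.GenerateKey(elliptic.P384(), rand.Reader)
	if err != nil {
		return nil, nil, err
	}
	// Random serial number below 2^127.
	serial, err := rand.Int(rand.Reader, new(big.Int).Lsh(big.NewInt(1), 127))
	if err != nil {
		return nil, nil, err
	}
	now := time.Now()
	template := &x509.Certificate{
		SerialNumber:          serial,
		Subject:               pkix.Name{CommonName: commonName},
		NotBefore:             now,
		NotAfter:              now.Add(validity),
		IsCA:                  true,
		BasicConstraintsValid: true,
		MaxPathLen:            1, // allow one intermediate CA below the root
		KeyUsage:              x509.KeyUsageCertSign | x509.KeyUsageCRLSign,
	}
	// Self-signed: the template is both the subject and the issuer.
	der, err := x509.CreateCertificate(rand.Reader, template, template, &key.PublicKey, key)
	if err != nil {
		return nil, nil, err
	}
	cert, err := x509.ParseCertificate(der)
	if err != nil {
		return nil, nil, err
	}
	return cert, key, nil
}

func main() {
	cert, _, err := newRootCA("Example Root CA", 20*365*24*time.Hour)
	if err != nil {
		panic(err)
	}
	fmt.Println(cert.Subject.CommonName, cert.SignatureAlgorithm)
}
```

With a P-384 key, Go's x509 package signs with ECDSA and SHA-384.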

To avoid needless typing, the fish shell features command abbreviations to expand some words after pressing space. We can emulate such a feature with Zsh:

```zsh
# Definition of abbrev-alias for auto-expanding aliases
typeset -ga _vbe_abbrevations
abbrev-alias() {
    alias $1
    _vbe_abbrevations+=(${1%%\=*})
}
_vbe_zle-autoexpand() {
    local -a words; words=(${(z)LBUFFER})
    if (( ${#_vbe_abbrevations[(r)${words[-1]}]} )); then
        zle _expand_alias
    fi
    zle magic-space
}
zle -N _vbe_zle-autoexpand
bindkey -M emacs " " _vbe_zle-autoexpand
bindkey -M isearch " " magic-space

# Correct common typos
(( $+commands[git] )) && abbrev-alias gti=git
(( $+commands[grep] )) && abbrev-alias grpe=grep
(( $+commands[sudo] )) && abbrev-alias suod=sudo
(( $+commands[ssh] )) && abbrev-alias shs=ssh

# Save a few keystrokes
(( $+commands[git] )) && abbrev-alias gls="git ls-files"
(( $+commands[ip] )) && {
    abbrev-alias ip6='ip -6'
    abbrev-alias ipb='ip -brief'
}

# Hard to remember options
(( $+commands[mtr] )) && abbrev-alias mtrr='mtr -wzbe'
```

Here is a demo where gls is expanded to git ls-files after pressing space:

I don’t auto-expand all aliases. I keep using regular aliases when slightly modifying the behavior of a command or for well-known abbreviations:

```zsh
alias df='df -h'
alias du='du -h'
alias rm='rm -i'
alias mv='mv -i'
alias ll='ls -ltrhA'
```
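The decision the widget makes on each space press can be modeled in a few lines of Go. This is only a sketch: `expandOnSpace` is a hypothetical name, and it treats a single-word buffer as "command position" and splits on blanks, whereas Zsh splits words with shell rules and lets `_expand_alias` decide whether the alias applies.

```go
package main

import (
	"fmt"
	"strings"
)

// expandOnSpace models what happens when space is pressed: if the buffer
// holds a single word (a rough stand-in for command position) that is a
// registered abbreviation, it is replaced by its expansion. In every case,
// the typed space is appended.
func expandOnSpace(buffer string, abbrevs map[string]string) string {
	word := strings.TrimLeft(buffer, " \t")
	if word != "" && !strings.ContainsAny(word, " \t") {
		if expansion, ok := abbrevs[word]; ok {
			return buffer[:len(buffer)-len(word)] + expansion + " "
		}
	}
	return buffer + " "
}

func main() {
	abbrevs := map[string]string{"gls": "git ls-files", "gti": "git"}
	fmt.Printf("%q\n", expandOnSpace("gls", abbrevs))      // "git ls-files "
	fmt.Printf("%q\n", expandOnSpace("echo gls", abbrevs)) // "echo gls "
}
```

Regular aliases stay out of the abbreviation map, so they are never expanded on screen and keep their usual behavior.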

Caddy is an open-source web server written in Go. It handles TLS certificates automatically and comes with a simple configuration syntax. Users can extend its functionality through plugins1 to add features like rate limiting, caching, and Docker integration.

While Caddy is available in Nixpkgs, adding extra plugins is not simple.2 The compilation process needs Internet access, which Nix denies during builds to ensure reproducibility. When trying to build the following derivation using xcaddy, a tool for building Caddy with plugins, it fails with this error: dial tcp: lookup proxy.golang.org on [::1]:53: connection refused.

```nix
{ pkgs }:
pkgs.stdenv.mkDerivation {
  name = "caddy-with-xcaddy";
  nativeBuildInputs = with pkgs; [ go xcaddy cacert ];
  unpackPhase = "true";
  buildPhase = ''
    xcaddy build --with github.com/caddy-dns/[email protected]
  '';
  installPhase = ''
    mkdir -p $out/bin
    cp caddy $out/bin
  '';
}
```

Fixed-output derivations are an exception to this rule and get network access during build. They need to specify their output hash. For example, the fetchurl function produces a fixed-output derivation:

```nix
{ stdenv, fetchurl }:
stdenv.mkDerivation rec {
  pname = "hello";
  version = "2.12.1";
  src
```

Continue reading
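Nix can grant network access to fixed-output derivations without losing reproducibility because the result is checked against the hash declared in advance. Here is a Go sketch of that check for a single file, using the SRI `sha256-<base64>` notation Nix accepts. The function names are hypothetical, and in recursive mode Nix hashes a NAR serialization of the output rather than raw bytes.

```go
package main

import (
	"crypto/sha256"
	"encoding/base64"
	"fmt"
)

// sriSHA256 formats the SHA-256 digest of data as an SRI string,
// "sha256-" followed by the standard base64 encoding of the digest.
func sriSHA256(data []byte) string {
	sum := sha256.Sum256(data)
	return "sha256-" + base64.StdEncoding.EncodeToString(sum[:])
}

// checkFixedOutput accepts a downloaded output only if its digest matches the
// hash declared in advance. This flat-file check is a simplification.
func checkFixedOutput(output []byte, declared string) error {
	if got := sriSHA256(output); got != declared {
		return fmt.Errorf("hash mismatch: specified %s, got %s", declared, got)
	}
	return nil
}

func main() {
	tarball := []byte("pretend this is hello-2.12.1.tar.gz")
	declared := sriSHA256(tarball)
	fmt.Println(declared)
	fmt.Println(checkFixedOutput(tarball, declared))                 // <nil>
	fmt.Println(checkFixedOutput([]byte("tampered"), declared) != nil) // true
}
```

Whatever happens over the network, the build either produces exactly the declared bytes or fails, so the output stays reproducible.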

In 2020, Google introduced Core Web Vitals metrics to measure some aspects of real-world user experience on the web. This blog has consistently achieved good scores for two of these metrics: Largest Contentful Paint and Interaction to Next Paint. However, optimizing the third metric, Cumulative Layout Shift, which measures unexpected layout changes, has been more challenging. Let’s face it: optimizing for this metric is not really useful for a site like this one. But getting a better score is always a good distraction. 💯

To prevent the “flash of invisible text” when using web fonts, developers should set the font-display property to swap in @font-face rules. This method allows browsers to initially render text using a fallback font, then replace it with the web font after loading. While this improves the LCP score, it causes content reflow and layout shifts if the fallback and web fonts are not metrically compatible. These shifts negatively affect the CLS score. CSS provides properties to address this issue by overriding font metrics when using fallback fonts: size-adjust, ascent-override, descent-override, and line-gap-override.

Two comprehensive articles explain each property and their computation methods in detail: Creating Perfect Font Fallbacks in CSS and Improved Continue reading
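A common formulation, sketched here in Go, scales the fallback so its average character width matches the web font (`size-adjust`), then divides the web font's ascent, descent, and line gap (relative to units per em) by that factor. Using the average character width as the width reference is an assumption (some tools weigh letters by frequency instead), the type and function names are hypothetical, and the metric values in `main` are made up.

```go
package main

import "fmt"

// FontMetrics holds values read from a font's tables, in font units. Descent
// is negative, as stored in the hhea table. AvgCharWidth is an average glyph
// width, such as OS/2 xAvgCharWidth.
type FontMetrics struct {
	UnitsPerEm   float64
	Ascent       float64
	Descent      float64
	LineGap      float64
	AvgCharWidth float64
}

// Overrides are ratios; multiply by 100 to get CSS percentages.
type Overrides struct {
	SizeAdjust, Ascent, Descent, LineGap float64
}

// computeOverrides scales the fallback so its average width matches the web
// font, then expresses the web font's vertical metrics relative to the
// adjusted fallback size. Only the fallback's width and units per em matter.
func computeOverrides(web, fallback FontMetrics) Overrides {
	sizeAdjust := (web.AvgCharWidth / web.UnitsPerEm) / (fallback.AvgCharWidth / fallback.UnitsPerEm)
	return Overrides{
		SizeAdjust: sizeAdjust,
		Ascent:     web.Ascent / web.UnitsPerEm / sizeAdjust,
		Descent:    -web.Descent / web.UnitsPerEm / sizeAdjust,
		LineGap:    web.LineGap / web.UnitsPerEm / sizeAdjust,
	}
}

// fontFaceCSS renders a fallback @font-face rule using a local font.
func fontFaceCSS(family, local string, o Overrides) string {
	return fmt.Sprintf(`@font-face {
  font-family: %q;
  src: local(%q);
  size-adjust: %.2f%%;
  ascent-override: %.2f%%;
  descent-override: %.2f%%;
  line-gap-override: %.2f%%;
}`, family, local, o.SizeAdjust*100, o.Ascent*100, o.Descent*100, o.LineGap*100)
}

func main() {
	// Made-up metrics, not those of any real font.
	web := FontMetrics{UnitsPerEm: 1000, Ascent: 950, Descent: -250, AvgCharWidth: 520}
	fallback := FontMetrics{UnitsPerEm: 2048, AvgCharWidth: 1000}
	fmt.Println(fontFaceCSS("Web Font Fallback", "Arial", computeOverrides(web, fallback)))
}
```

The page then lists this fallback family right after the web font in `font-family`, so the swap happens between two fonts occupying the same space.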

Combining BGP confederations and AS override can potentially create a BGP routing loop, resulting in an indefinitely expanding AS path.

BGP confederation is a technique used to reduce the number of iBGP sessions and improve scalability in large autonomous systems (AS). It divides an AS into sub-ASes. Most eBGP rules apply between sub-ASes, except that next-hop, MED, and local preference remain unchanged. The AS path length ignores contributions from confederation sub-ASes. BGP confederation is rarely used, and BGP route reflection is typically preferred for scaling.

AS override is a feature that allows a router to replace the ASN of a neighbor in the AS path of outgoing BGP routes with its own. It’s useful when two distinct autonomous systems share the same ASN. However, it interferes with BGP’s loop prevention mechanism and should be used cautiously. A safer alternative is the allowas-in directive.1

In the example below, we have four routers in a single confederation, each in its own sub-AS. R0 originates the 2001:db8::1/128 prefix. R1, R2, and R3 forward this prefix to the next router in the loop.

The router configurations are available in a Continue reading
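The loop can be reproduced with a toy model in Go. It flattens the AS path into a plain list (a real confederation records sub-ASes in `AS_CONFED_SEQUENCE` segments), uses hypothetical sub-AS numbers 64500 to 64503 for R0 to R3, and applies AS override as described: before prepending its own ASN, the sender replaces the receiver's ASN with its own. The receiver then never finds itself in the path, so the usual loop check never fires.

```go
package main

import "fmt"

// Hypothetical sub-AS numbers for R0, R1, R2, and R3.
var subAS = []int{64500, 64501, 64502, 64503}

func contains(path []int, asn int) bool {
	for _, a := range path {
		if a == asn {
			return true
		}
	}
	return false
}

// propagate sends the prefix originated by R0 around the ring R0 → R1 → R2 →
// R3 → R0 → … for at most maxHops hops. Each sender optionally applies AS
// override (replace the receiver's ASN with its own), then prepends its ASN.
// A receiver rejects a path containing its own ASN, the usual loop check. It
// returns how many hops were accepted and the last accepted path.
func propagate(asOverride bool, maxHops int) (int, []int) {
	var path []int // empty: locally originated by R0
	sender := 0
	for hop := 0; hop < maxHops; hop++ {
		receiver := (sender + 1) % len(subAS)
		next := make([]int, 0, len(path)+1)
		next = append(next, subAS[sender])
		for _, a := range path {
			if asOverride && a == subAS[receiver] {
				a = subAS[sender]
			}
			next = append(next, a)
		}
		if contains(next, subAS[receiver]) {
			return hop, path // loop detected, route dropped
		}
		path = next
		sender = receiver
	}
	return maxHops, path
}

func main() {
	hops, path := propagate(false, 20)
	fmt.Println("without AS override:", hops, path)
	hops, path = propagate(true, 20)
	fmt.Println("with AS override:", hops, len(path))
}
```

Without AS override, R0 drops the route when R3 sends it back, after three accepted hops. With AS override, every hop is accepted and the path grows by one ASN per hop until the arbitrary 20-hop limit of the simulation.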

IPv4 is an expensive resource. However, many content providers are still IPv4-only. The most common reason is that IPv4 is here to stay and IPv6 is an additional complexity.1 This mindset may seem selfish, but there are compelling reasons for a content provider to enable IPv6, even when they have enough IPv4 addresses available for their needs.

Disclaimer

This article has been in my drafts for a while. I started it when I was working at Shadow, a content provider; I now work for Free, an internet service provider.

Providing a public IPv4 address to each customer is quite costly when each IP address costs US$40 on the market. For fixed access, some consumer ISPs are still providing one IPv4 address per customer.2 Other ISPs provide, by default, an IPv4 address shared among several customers. For mobile access, most ISPs distribute a shared IPv4 address.

There are several methods to share an IPv4 address:3

SSH offers several forms of authentication, such as passwords and public keys. The latter are considered more secure. However, password authentication remains prevalent, particularly with network equipment.1

A classic solution to avoid typing a password for each connection is sshpass, or its more correct variant passh. Here is a wrapper for Zsh, getting the password from pass, a simple password manager:2

```zsh
pssh() {
    passh -p <(pass show network/ssh/password | head -1) ssh "$@"
}
compdef pssh=ssh
```

This approach is a bit brittle as it requires parsing the output of the ssh command to look for a password prompt. Moreover, if no password is required, the password manager is still invoked. Since OpenSSH 8.4, we can use SSH_ASKPASS and SSH_ASKPASS_REQUIRE instead:

```zsh
ssh() {
    set -o localoptions -o localtraps
    local passname=network/ssh/password
    local helper=$(mktemp)
    trap "command rm -f $helper" EXIT INT
    > $helper <<EOF
#!$SHELL
pass show $passname | head -1
EOF
    chmod u+x $helper
    SSH_ASKPASS=$helper SSH_ASKPASS_REQUIRE=force command ssh "$@"
}
```

If the password is incorrect, we can display a prompt on the Continue reading
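The same mechanism is available from any program that spawns ssh. Here is a Go sketch that sets both variables on the child process; the helper path and host in `main` are placeholders, and `sshWithAskpass` is a hypothetical name. With `SSH_ASKPASS_REQUIRE=force`, ssh runs the helper whenever it needs to prompt, passes the prompt as the helper's argument, and reads the answer from its standard output, so the password manager is only queried when a password is actually requested.

```go
package main

import (
	"fmt"
	"os"
	"os/exec"
)

// sshWithAskpass prepares an ssh invocation that obtains passwords from the
// given helper program instead of the terminal (OpenSSH 8.4 or later).
func sshWithAskpass(helper string, args ...string) *exec.Cmd {
	cmd := exec.Command("ssh", args...)
	cmd.Env = append(os.Environ(),
		"SSH_ASKPASS="+helper,
		"SSH_ASKPASS_REQUIRE=force",
	)
	cmd.Stdin, cmd.Stdout, cmd.Stderr = os.Stdin, os.Stdout, os.Stderr
	return cmd
}

func main() {
	// Placeholder helper and host; the command is only built, not run.
	cmd := sshWithAskpass("/usr/local/bin/pass-askpass", "admin@192.0.2.10")
	fmt.Println(cmd.Args) // [ssh admin@192.0.2.10]
}
```

Calling `cmd.Run()` would start the session; the helper itself can be any executable that prints the password on its first line of output.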

Akvorado collects sFlow and IPFIX flows, stores them in a ClickHouse database, and presents them in a web console. Although it lacks built-in DDoS detection, it’s possible to create one by crafting custom ClickHouse queries.

Let’s assume we want to detect DDoS targeting our customers. As an example, we consider a DDoS attack as a collection of flows over one minute targeting a single customer IP address, from a single source port and matching one of these conditions:

Here is the SQL query to detect such attacks over the last 5 minutes:

```sql
SELECT *
FROM (
  SELECT
    toStartOfMinute(TimeReceived) AS TimeReceived,
    DstAddr,
    SrcPort,
    dictGetOrDefault('protocols', 'name', Proto, '???') AS Proto,
    SUM(((((Bytes * SamplingRate) * 8) / 1000) / 1000) / 1000) / 60 AS Gbps,
    uniq(SrcAddr) AS sources,
    uniq
```

Continue reading
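To see what the inner query computes, here is a Go sketch of the same aggregation on in-memory flows: group by minute, destination address, source port, and protocol, convert sampled bytes to gigabits per second averaged over the minute, and count distinct sources. The flow data and type names are made up, and the attack conditions listed in the article are not reproduced.

```go
package main

import (
	"fmt"
	"time"
)

// Flow holds the columns the query uses.
type Flow struct {
	TimeReceived     time.Time
	SrcAddr, DstAddr string
	SrcPort          uint16
	Proto            string
	Bytes            uint64
	SamplingRate     uint64
}

// groupKey mirrors the query's grouping: minute, target, source port, protocol.
type groupKey struct {
	Minute  time.Time
	DstAddr string
	SrcPort uint16
	Proto   string
}

type stats struct {
	Gbps    float64
	Sources int // exact count; ClickHouse's uniq() is approximate
}

// aggregate computes Bytes × SamplingRate × 8 / 10⁹ / 60 per group, like the
// query, and counts distinct source addresses.
func aggregate(flows []Flow) map[groupKey]stats {
	gbps := make(map[groupKey]float64)
	sources := make(map[groupKey]map[string]struct{})
	for _, f := range flows {
		k := groupKey{f.TimeReceived.Truncate(time.Minute), f.DstAddr, f.SrcPort, f.Proto}
		gbps[k] += float64(f.Bytes*f.SamplingRate) * 8 / 1e9 / 60
		if sources[k] == nil {
			sources[k] = make(map[string]struct{})
		}
		sources[k][f.SrcAddr] = struct{}{}
	}
	out := make(map[groupKey]stats, len(gbps))
	for k, v := range gbps {
		out[k] = stats{Gbps: v, Sources: len(sources[k])}
	}
	return out
}

// sampleFlows returns two sampled flows from different sources hitting the
// same target from UDP port 123 within the same minute (made-up data).
func sampleFlows() []Flow {
	t := time.Date(2024, 1, 1, 12, 0, 10, 0, time.UTC)
	return []Flow{
		{t, "198.51.100.1", "203.0.113.5", 123, "UDP", 375_000, 1000},
		{t.Add(20 * time.Second), "198.51.100.2", "203.0.113.5", 123, "UDP", 375_000, 1000},
	}
}

func main() {
	for k, s := range aggregate(sampleFlows()) {
		fmt.Printf("%s %s:%d %s %.2f Gbps from %d sources\n",
			k.Minute.Format("15:04"), k.DstAddr, k.SrcPort, k.Proto, s.Gbps, s.Sources)
	}
}
```

Each flow represents 375 MB once the sampling rate is applied, so the two flows together amount to 6 Gb over one minute, or 0.1 Gbps.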

Akvorado collects network flows using IPFIX or sFlow. It stores them in a ClickHouse database. A web console allows a user to query the data and plot some graphs. A nice aspect of this console is how we can filter flows with a SQL-like language:

Often, web interfaces expose a query builder to build such filters. I think combining a SQL-like language with an editor supporting completion, syntax highlighting, and linting is a better approach.1

The language parser is built with pigeon (Go) from a parsing expression grammar—or PEG. The editor component is CodeMirror (TypeScript).

PEG grammars are relatively recent2 and are an alternative to context-free grammars. They are easier to write and they can generate better error messages. Python switched from an LL(1)-based parser to a PEG-based parser in Python 3.9.

pigeon generates a parser for Go. A grammar is a set of rules. Each rule is an identifier, with an optional user-friendly label for error messages, an expression, and an action in Go to be executed on match. You can find the complete grammar in parser.peg. Here is Continue reading
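pigeon generates the real parser from parser.peg. To show what PEG semantics mean in practice, in particular ordered choice, where alternatives are tried in order and the first match wins, here is a hand-written recursive-descent parser in Go for a tiny subset of a filter language. The grammar in the comment is invented for this sketch and is much smaller than Akvorado's.

```go
package main

import (
	"fmt"
	"strings"
	"unicode"
)

// Cond is one comparison, such as SrcPort <= 1024.
type Cond struct{ Field, Op, Value string }

// parser implements this hypothetical PEG:
//
//	Filter <- _ Cond (_ "AND" _ Cond)* _ EOF
//	Cond   <- Field _ Op _ Value
//	Op     <- "<=" / ">=" / "!=" / "=" / "<" / ">"
//	Field  <- [A-Za-z]+
//	Value  <- [A-Za-z0-9.:]+
type parser struct {
	in  string
	pos int
}

func (p *parser) skipSpaces() {
	for p.pos < len(p.in) && p.in[p.pos] == ' ' {
		p.pos++
	}
}

// literal matches s at the current position. On failure, it consumes
// nothing, so the caller can try the next alternative of an ordered choice.
func (p *parser) literal(s string) bool {
	if strings.HasPrefix(p.in[p.pos:], s) {
		p.pos += len(s)
		return true
	}
	return false
}

// span consumes bytes while ok holds (ASCII input assumed).
func (p *parser) span(ok func(rune) bool) string {
	start := p.pos
	for p.pos < len(p.in) && ok(rune(p.in[p.pos])) {
		p.pos++
	}
	return p.in[start:p.pos]
}

func isValueChar(r rune) bool {
	return unicode.IsLetter(r) || unicode.IsDigit(r) || r == '.' || r == ':'
}

func (p *parser) cond() (Cond, error) {
	field := p.span(unicode.IsLetter)
	if field == "" {
		return Cond{}, fmt.Errorf("offset %d: expected field name", p.pos)
	}
	p.skipSpaces()
	op := ""
	// Ordered choice: "<=" must be tried before "<", otherwise "<= 1024"
	// would match "<" and then fail on "=".
	for _, alt := range []string{"<=", ">=", "!=", "=", "<", ">"} {
		if p.literal(alt) {
			op = alt
			break
		}
	}
	if op == "" {
		return Cond{}, fmt.Errorf("offset %d: expected operator", p.pos)
	}
	p.skipSpaces()
	value := p.span(isValueChar)
	if value == "" {
		return Cond{}, fmt.Errorf("offset %d: expected value", p.pos)
	}
	return Cond{field, op, value}, nil
}

// Parse parses a whole filter expression.
func Parse(in string) ([]Cond, error) {
	p := &parser{in: in}
	var conds []Cond
	for {
		p.skipSpaces()
		c, err := p.cond()
		if err != nil {
			return nil, err
		}
		conds = append(conds, c)
		p.skipSpaces()
		if p.pos == len(p.in) {
			return conds, nil
		}
		if !p.literal("AND") {
			return nil, fmt.Errorf("offset %d: expected AND or end of input", p.pos)
		}
	}
}

func main() {
	fmt.Println(Parse("SrcPort <= 1024 AND Proto = TCP"))
}
```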

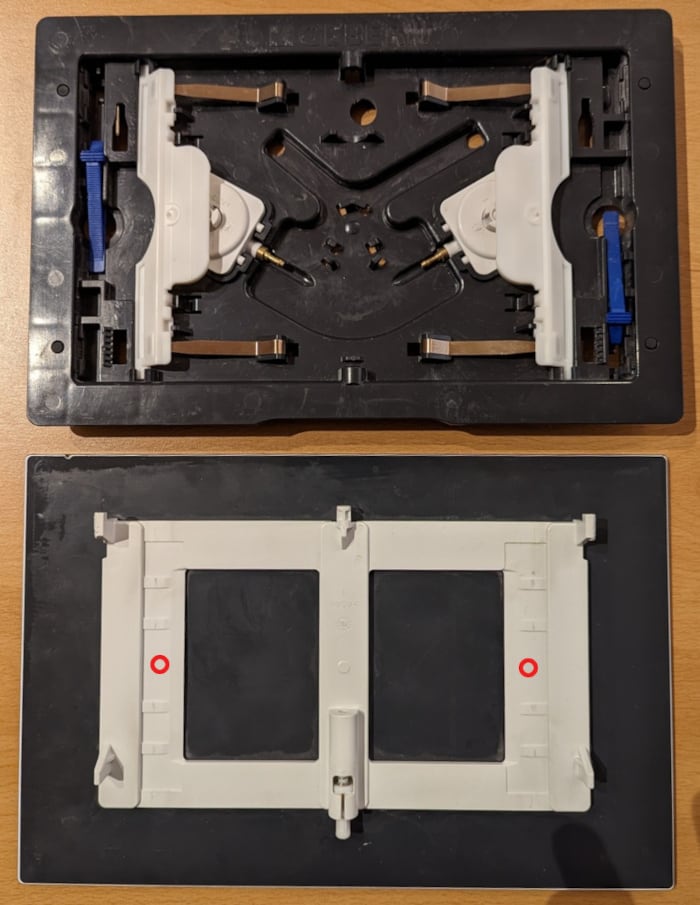

My toilet is equipped with a Geberit Sigma 70 flush plate. The sales pitch for this hydraulic-assisted device praises the “ingenious mount that acts like a rocker switch.” In practice, the flush is very capricious and has a very high failure rate. Avoid this type of mechanism! Prefer a fully mechanical version like the Geberit Sigma 20.

After several plumbers, exchanges with Geberit’s technical department, and the expensive replacement of the entire mechanism, I was still getting a failure rate of over 50% for the small flush. I finally managed to decrease this rate to 5% by applying two 8 mm silicone bumpers on the back of the plate. Their locations are indicated by red circles on the picture below:

Expect to pay about 5 € and as many minutes for this operation.

Protocol Buffers are a popular choice for serializing structured data due to their compact size, fast processing speed, language independence, and backward and forward compatibility. There exist other alternatives, including Cap’n Proto, CBOR, and Avro.

Usually, data structures are described in a proto definition file (.proto). The protoc compiler and a language-specific plugin convert it into code:

```
$ head flow-4.proto
syntax = "proto3";
package decoder;
option go_package = "akvorado/inlet/flow/decoder";

message FlowMessagev4 {
  uint64 TimeReceived = 2;
  uint32 SequenceNum = 3;
  uint64 SamplingRate = 4;
  uint32 FlowDirection = 5;
$ protoc -I=. --plugin=protoc-gen-go --go_out=module=akvorado:. flow-4.proto
$ head inlet/flow/decoder/flow-4.pb.go
// Code generated by protoc-gen-go. DO NOT EDIT.
// versions:
// 	protoc-gen-go v1.28.0
// 	protoc        v3.21.12
// source: inlet/flow/data/schemas/flow-4.proto

package decoder

import (
	protoreflect "google.golang.org/protobuf/reflect/protoreflect"
```
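Part of the compactness comes from the wire format: each integer field is encoded as a key (the field number shifted left by three bits, combined with the wire type, 0 for varints) followed by the value as a base-128 varint. The Go sketch below encodes two fields of a message like `FlowMessagev4` by hand; `appendVarintField` is a hypothetical helper, and code generated by protoc-gen-go should be used in practice.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// appendVarintField encodes one integer field in the Protocol Buffers wire
// format. binary.AppendUvarint uses the same base-128 encoding: seven bits
// per byte, least significant group first, high bit set on all but the last.
func appendVarintField(buf []byte, field int, value uint64) []byte {
	buf = binary.AppendUvarint(buf, uint64(field)<<3|0) // wire type 0: VARINT
	return binary.AppendUvarint(buf, value)
}

func main() {
	var msg []byte
	msg = appendVarintField(msg, 4, 1000) // SamplingRate = 1000
	msg = appendVarintField(msg, 5, 1)    // FlowDirection = 1
	fmt.Printf("% x\n", msg)              // prints "20 e8 07 28 01"
}
```

A sampling rate of 1000 takes three bytes on the wire, key included, instead of eight for a fixed-size 64-bit integer.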

Akvorado collects network flows using IPFIX or sFlow, decodes them with GoFlow2, encodes them to Protocol Buffers, and sends them to Kafka to be stored in a ClickHouse database. Collecting a new field, such as source and destination MAC addresses, requires modifications in multiple places, including the proto definition file and the ClickHouse migration code. Moreover, Continue reading

A few years ago, I downsized my personal infrastructure. Until 2018, there were a dozen containers running on a single Hetzner server.1 I migrated my emails to Fastmail and my DNS zones to Gandi. This left me with only my blog to self-host. As of today, my low-scale infrastructure is composed of 4 virtual machines running NixOS on Hetzner Cloud and Vultr, a handful of DNS zones on Gandi and Route 53, and a couple of CloudFront distributions. It is managed by CDK for Terraform (CDKTF), while NixOS deployments are handled by NixOps.

In this article, I provide a brief introduction to Terraform, CDKTF, and the Nix ecosystem. I also explain how to use Nix to access these tools within your shell, so you can quickly start using them.

Terraform is an “infrastructure-as-code” tool. You can define your infrastructure by declaring resources with the HCL language. This language has some additional features like Continue reading

Earlier this year, we released Akvorado, a flow collector, enricher, and visualizer. It receives network flows from your routers using either NetFlow v9, IPFIX, or sFlow. Several pieces of information are added, like GeoIP and interface names. The flows are exported to Apache Kafka, a distributed queue, then stored inside ClickHouse, a column-oriented database. A web frontend is provided to run queries. A live version is available for you to play with.

Several alternatives exist:

Akvorado differentiates itself from these solutions because:

The proposed deployment solution relies on Docker Compose to set up Akvorado, Zookeeper, Kafka, and ClickHouse. Continue reading

TL;DR

Never trust show commit changes diff on Cisco IOS XR.

Cisco IOS XR is the operating system running on Cisco ASR, NCS, and 8000 routers. Compared to Cisco IOS, it features a candidate configuration and a running configuration. In configuration mode, you can modify the first one and issue the commit command to apply it to the running configuration.1 This is a common concept for many NOS.

Before committing the candidate configuration to the running configuration, you may want to check the changes that have accumulated until now. That’s where the show commit changes diff command2 comes in. Its goal is to show the difference between the running configuration (show running-configuration) and the candidate configuration (show configuration merge). How hard can it be?

Let’s put an interface down on IOS XR 7.6.2 (released in August 2022):

```
RP/0/RP0/CPU0:router(config)#int Hu0/1/0/1 shut
RP/0/RP0/CPU0:router(config)#show commit changes diff
Wed Nov 23 11:08:30.275 CET
Building configuration...
!! IOS XR Configuration 7.6.2
+  interface HundredGigE0/1/0/1
+   shutdown
   !
end
```

The + sign before interface HundredGigE0/1/0/1 makes it look like you did create a new interface. Maybe there was a typo? No, the diff is just broken. If you Continue reading
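For comparison, here is what a diff that only marks truly new lines could look like. This Go sketch handles a single nesting level, ignores removals, and uses a made-up configuration; `addedLines` is a hypothetical helper, not how IOS XR computes its diff.

```go
package main

import (
	"fmt"
	"strings"
)

// section is a top-level configuration line with its indented children.
type section struct {
	header   string
	children []string
}

// parse splits a configuration into sections, skipping blank lines and "!"
// separators. Only one level of nesting is handled.
func parse(cfg string) []section {
	var out []section
	for _, line := range strings.Split(cfg, "\n") {
		trimmed := strings.TrimSpace(line)
		switch {
		case trimmed == "" || trimmed == "!":
			continue
		case strings.HasPrefix(line, " "):
			if len(out) > 0 {
				last := &out[len(out)-1]
				last.children = append(last.children, trimmed)
			}
		default:
			out = append(out, section{header: trimmed})
		}
	}
	return out
}

// addedLines lists what the candidate adds to the running configuration. A
// section header gets "+" only when the whole section is new; otherwise, it
// is printed without a sign as context for the added children.
func addedLines(running, candidate string) []string {
	existing := make(map[string]map[string]bool)
	for _, s := range parse(running) {
		set := make(map[string]bool)
		for _, c := range s.children {
			set[c] = true
		}
		existing[s.header] = set
	}
	var out []string
	for _, s := range parse(candidate) {
		known, found := existing[s.header]
		var added []string
		for _, c := range s.children {
			if !known[c] {
				added = append(added, "+  "+c)
			}
		}
		switch {
		case !found:
			out = append(out, "+ "+s.header)
			out = append(out, added...)
		case len(added) > 0:
			out = append(out, "  "+s.header)
			out = append(out, added...)
		}
	}
	return out
}

func main() {
	running := "interface HundredGigE0/1/0/1\n description uplink\n!\n"
	candidate := "interface HundredGigE0/1/0/1\n description uplink\n shutdown\n!\n"
	fmt.Println(strings.Join(addedLines(running, candidate), "\n"))
}
```

For an interface shutdown like the one in the session above, it prints the interface header without a `+` and only `shutdown` as added.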

Here are the slides I presented at FRnOG #36 in September 2022. They are about Akvorado, a tool to collect network flows and visualize them. It was developed by Free. I haven’t had time to publish a blog post yet, but it should happen soon!

The presentation, in French, was recorded. I have added English subtitles.