Nexus Dashboard Fabric Controller 12

Good day everyone,

As you certainly know, DCNM 11 is being rebranded as Nexus Dashboard Fabric Controller. Consequently, NDFC 12 is the new acronym for the platform that automates and operates NX-OS based fabrics.

It is NOT just a name change; NDFC 12 brings several significant changes. To list a few of them:

- Enhanced Topology View

- Ability to modify switch discovery IP

- Flexible CLI option – config profile or native NXOS CLI

- Performance Programmable reports

- Granular RBAC

- Secure POAP User

- Simplified and flexible Image Management

- Server Smart Licensing

- Automate IOS-XR configuration

- Automate VXLAN EVPN fabric deployment with Cat9k

One of the key evolutions with NDFC is that it now joins the ecosystem of services that run natively on top of the common Nexus Dashboard platform.

One of the key advantages of this evolution for network operators is a single, consistent experience: whatever application you run, the installation process and the Web GUI are common. All applications and services look alike and apply the same logic behind every request.

You have a single pane of glass which is the Nexus dashboard platform through which the end-user can consume different applications for the Continue reading

44 – The Essentials of DCNM 11

Good day everyone,

I have recorded 6 different modules aimed at giving you a good understanding of the key functions that DCNM 11 offers to manage, automate and operate your data center network.

While waiting for the new version 12, coming soon, this series of modules covers the Essentials of Data Center Network Manager (DCNM) version 11.

By “Essentials” I mean that it covers the features most needed by all enterprises, from a small legacy Data Center network to multiple modern Fabrics, or a mix of both. This series of modules on DCNM 11 emphasizes Classic LAN as well as VXLAN EVPN Fabric environments. Indeed, any network team can benefit from using one and the same platform to operate a classic data center network, a modern VXLAN EVPN based fabric, or a mixture of both.

I have developed the content for the lectures on DCNM 11 and 10.1(n) environment.

If you are not yet familiar with DCNM, you can read previous posts. In short, DCNM is the leading Intent-Based Networking solution for Standalone NX-OS based Data Centers. It encompasses the comprehensive management solution for all NX-OS network deployments Continue reading

42 – LISP Network Deployment and Troubleshooting

Good day Network experts

It has been a great pleasure and an honor working with Tarique Shakil and Vinit Jain on this book below, deep-diving on this amazing LISP protocol.

I would also like to take this opportunity to thank Max Ardica, Victor Moreno and Marc Portoles Comeras for their invaluable help. I wrote the section on LISP Mobility deployment with traditional and modern data center fabrics (VXLAN EVPN based as well as ACI Multi-Pod/Multi-Site); however, this could not have been done without the amazing support of these guys.

Available from Cisco Press

or from Safari Books Online

Implement flexible, efficient LISP-based overlays for cloud, data center, and enterprise

The LISP overlay network helps organizations provide seamless connectivity to devices and workloads wherever they move, enabling open and highly scalable networks with unprecedented flexibility and agility.

LISP Network Deployment and Troubleshooting is the definitive resource for all network engineers who want to understand, configure, and troubleshoot LISP on Cisco IOS-XE, IOS-XR and NX-OS platforms. It brings together comprehensive coverage of how LISP works, how it integrates with leading Cisco platforms, how to configure it for maximum efficiency, and how to address key issues such Continue reading

41 – Interconnecting Traditional DCs with DCNM using VXLAN EVPN Multi-site

Good day,

The same question often arises: how can DCNM be leveraged to deploy VXLAN EVPN Multi-site between traditional Data Centers? To clarify, DCNM can definitely help interconnect two or more Classical Ethernet-based DC networks in a short time.

As a reminder (post 37), the VXLAN EVPN Multi-site overlay is initiated from the Border Gateway (BGW) nodes. The BGW function can run in two different modes: either Anycast BGW mode (up to 6 BGW devices per site), traditionally deployed to interconnect VXLAN EVPN based fabrics, or vPC BGW mode (up to 2 BGWs per site), essentially designed to interconnect traditional data centers, but not limited to that. vPC BGW can also be leveraged to interconnect multiple VXLAN EVPN fabrics where endpoints can be locally dual-attached at Layer 2. Several designations are used to describe Classic Ethernet-based data center networks, such as traditional or legacy data center networks. All these terms mean that these networks are non-VXLAN EVPN-based fabrics. The vPC BGW node is agnostic about the data center network types, models or switches that make up the data center infrastructure, as long as it offers Layer 2 (dot1Q) connectivity for Layer 2 extension, and if Continue reading
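The per-site limits quoted above can be expressed as a small sanity check. This is only a minimal Python sketch, not DCNM code: the names `BGW_LIMITS` and `validate_site` are hypothetical, and the limits are simply the figures stated in this post (up to 6 Anycast BGWs and up to 2 vPC BGWs per site).

```python
# Per-site Border Gateway (BGW) limits as quoted in the post above.
# BGW_LIMITS and validate_site are hypothetical names, not a DCNM API.
BGW_LIMITS = {"anycast": 6, "vpc": 2}


def validate_site(mode: str, bgw_count: int) -> bool:
    """Return True when bgw_count is allowed for the given BGW mode."""
    if mode not in BGW_LIMITS:
        raise ValueError(f"unknown BGW mode: {mode!r}")
    return 1 <= bgw_count <= BGW_LIMITS[mode]


if __name__ == "__main__":
    for mode, count in [("anycast", 4), ("vpc", 2), ("vpc", 3)]:
        verdict = "within limit" if validate_site(mode, count) else "outside limit"
        print(f"{mode:8s} x{count}: {verdict}")
```

A check of this kind could sit in front of any planning spreadsheet or inventory script, before the design is entered in the fabric controller.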

40 – DCNM 11.1 and VRF-Lite connection to an external Layer 3 Network

Another great feature supported by DCNM concerns the extension of Layer 3 connection across an external Layer 3 network using VRF-Lite hand-off from the Border leaf node toward the external Edge router.

There are different options for deploying a VRF-Lite connection to the outside of the VXLAN fabric: either a manual deployment, or the auto-configuration process that automatically configures VRF-Lite toward the Layer 3 network.

One of the key reasons for configuring the interfaces manually is when the Layer 3 network is managed by an external service provider; the network team then has no control over the configuration, which is imposed by the Layer 3 service operator.

The first demo illustrates an end-to-end manual configuration of VRF-Lite connections from the Border leaf node to an external Edge router.

Because the Border leaf nodes form a vPC domain, the recommendation is to configure one interface per vPC peer device connecting to the external Layer 3 network. As a result, I configured 1 “inter-fabric” type link per Border Gateway.

Prior to deploying the external VRF-Lite, an external fabric must be created into which the Edge router concerned is imported. For this particular scenario, because the Network team is not Continue reading

39 – DCNM 11.1 and VXLAN EVPN Multi-site Update

Dear Network experts,

It took a while to post this update on DCNM 11.1 due to other priorities, and I must admit that is a shame given all the great features that came with it. As mentioned in the previous post, DCNM 11.1 brings a lot of great improvements.

Below is a summary of the top enhancements that come with DCNM 11.1 for LAN Fabric.

Feel free to look at the Release-notes for an exhaustive list of New Features and Enhancements in Cisco DCNM, Release 11.1(1).

Fabric Builder, fabric devices and fabric underlay networks

- Configuration Compliance displays the existing and pending configurations side by side before deployment.

- vPC support for BGWs (VXLAN EVPN Multi-site) and standalone fabrics.

Brownfield Migration

- Transition the management of an existing VXLAN fabric into DCNM.

Interfaces

- Port-channel, vPC, subinterface, and loopback interfaces can be added and edited with external fabric devices.

- Cisco DCNM 11.1(1) specific template enhancements are made for interfaces.

Overlay Network/VRF provisioning

- Networks and VRFs can be deployed automatically at the Multi-site Domain level, from Site to Site, in one single action.

External Fabric

- Switches can be added to the external fabric. Inter-Fabric Connections (IFCs) can be created Continue reading

38 – DCNM 11 and VXLAN EVPN Multi-site

Hot networks served chilled, DCNM style

When I started this blog for Data Center Interconnection purposes some time ago, I was not planning to talk about network management tools. Nevertheless, I recently tested DCNM 11 to deploy an end-to-end VXLAN EVPN Multi-site architecture, hence, I thought about sharing with you my recent experience with this software engine. What pushed me to publish this post is that I’ve been surprisingly impressed with how efficient and time-saving DCNM 11 is in deploying a complex VXLAN EVPN fabric-based infrastructure, including the multi-site interconnection, while greatly reducing the risk of human errors caused by several hundred required CLI commands. Hence, I sought to demonstrate the power of this fabric management tool using a little series of tiny videos, even though I’m usually not a fan of GUI tools.

To cut a long story short, if you are not familiar with DCNM (Data Center Network Manager), DCNM is a software management platform that can run from a vCenter VM, a KVM machine, or a Bare metal server. It focuses on Cisco Data Center infrastructure, supporting a large set of devices, services, and architecture solutions. It covers multiple types of Data Center Fabrics; from the Storage Continue reading

37 – DCI is dead, long live to DCI

Some may find the title a bit strange, but, actually, it’s not 100% wrong. It just depends on what the acronym “DCI” stands for. And, actually, a new definition for DCI may come shortly, disrupting the way we used to interconnect multiple Data Centres together.

For many years, the DCI acronym has conventionally stood for Data Centre Interconnect.

Soon, the “Data Centre Interconnect” naming convention may essentially be used to describe solutions for interconnecting traditional DC networks, which have been used for many years. I am not denigrating any traditional DCI solution per se, but Data Centre networking is evolving very quickly from the traditional hierarchical network architecture to the emerging VXLAN-based fabric model, which is gaining momentum as enterprises adopt it to optimize modern applications and computing resources, save costs and gain operational benefits. Consequently, these independent DCI technologies will continue to be deployed primarily for extending Layer 2 and Layer 3 networks between traditional DC networks. However, for the interconnection of modern VXLAN EVPN standalone (1) Fabrics, a new innovative solution called “VXLAN EVPN Multi-site” – which integrates in a single device the extension of the Layer 2 and Layer 3 services across multiple sites – has been created for a Continue reading

36 – New White Paper that describes OTV to interconnect Multiple VXLAN EVPN Fabrics

Good day,

While this long series of sub-posts is being turned into a white paper, a new document written by Lukas Krattiger is available on CCO; it covers the Layer 2 and Layer 3 interconnection of multiple VXLAN fabrics. I feel this document complements this series of Post 36, describing from a different angle (using a human language approach) how to achieve a VXLAN EVPN Multi-Fabric design in conjunction with OTV.

Optimizing Layer 2 DCI with OTV between Multiple VXLAN EVPN Fabrics (Multifabric) White Paper

Good reading, yves

36 – VXLAN EVPN Multi-Fabrics – Path Optimisation (part 5)

Ingress/Egress Traffic Path Optimization

In the VXLAN Multi-fabric design discussed in this post, each data center normally represents a separate BGP autonomous system (AS) and is assigned a unique BGP autonomous system number (ASN).

Three types of BGP peering are usually established as part of the VXLAN Multi-fabric solution:

- MP internal BGP (MP-iBGP) EVPN peering sessions are established in each VXLAN EVPN fabric between all the deployed leaf nodes. As previously discussed, EVPN is the intrafabric control plane used to exchange reachability information for all the endpoints connected to the fabric and for external destinations.

- Layer 3 peering sessions are established between the border nodes of separate fabrics to exchange IP reachability information (host routes) for the endpoints connected to the different VXLAN fabrics and the IP subnets that are not stretched (east-west communication). Often, a dedicated Layer 3 DCI network connection is used for this purpose. In a multitenant VXLAN fabric deployment, a separate Layer 3 logical connection is required for each VRF instance defined in the fabric (VRF-Lite model). Although either eBGP or IGP routing protocols can be used to establish interfabric Layer 3 connectivity, the eBGP scenario is the most common and is the one discussed in Continue reading
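The peering model described above (one ASN per fabric, iBGP inside a fabric, eBGP between fabrics in the most common case) can be illustrated with a short Python sketch. The `Node` class, the `session_type` function, the node names and the private ASNs 65001 and 65002 are all assumptions chosen for illustration; none of them come from the original post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    asn: int  # ASN of the fabric this node belongs to


def session_type(a: Node, b: Node) -> str:
    """Classify a BGP session: iBGP if both peers share an ASN, else eBGP."""
    return "iBGP" if a.asn == b.asn else "eBGP"


# Hypothetical example: fabric 1 uses ASN 65001, fabric 2 uses ASN 65002.
leaf_a = Node("fabric1-leaf", 65001)
border_1 = Node("fabric1-border", 65001)
border_2 = Node("fabric2-border", 65002)

if __name__ == "__main__":
    print(session_type(leaf_a, border_1))    # intra-fabric EVPN peering
    print(session_type(border_1, border_2))  # inter-fabric Layer 3 peering
```

The sketch only classifies sessions by ASN; it does not model address families, VRFs or the per-VRF logical connections mentioned above.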

36 – VXLAN EVPN Multi-Fabrics – Host Mobility (part 4)

Host Mobility across Fabrics

This section discusses support for host mobility when a distributed Layer 3 Anycast gateway is configured across multiple VXLAN EVPN fabrics.

In this scenario, VM1 belonging to VLAN 100 (subnet_100) is hosted by H2 in fabric 1, and VM2 on VLAN 200 (subnet_200) initially is hosted by H3 in the same fabric 1. Destination IP subnet_100 and subnet_200 are locally configured on leaf nodes L12 and L13 as well as on L14 and L15.

This example assumes that the virtual machines (endpoints) have been previously discovered, and that Layer 2 and 3 reachability information has been announced across both sites as discussed in the previous sections.

Figure 1 highlights the content of the forwarding tables on different leaf nodes in both fabrics before virtual machine VM2 is migrated to fabric 2.

The following steps show the process for maintaining communication between the virtual machines in a host mobility scenario, as depicted in Figure 2:

- For operational purposes, virtual machine VM2 moves to host H4 located in fabric 2 and connected to leaf nodes L21 and L22.

- After Continue reading

36 – VXLAN EVPN Multi-Fabrics with Anycast L3 gateway (part 3)

VXLAN EVPN Multi-Fabric with Distributed Anycast Layer 3 Gateway

Layer 2 and Layer 3 DCI interconnecting multiple VXLAN EVPN Fabrics

A distributed anycast Layer 3 gateway provides significant added value to VXLAN EVPN deployments for several reasons:

- It offers the same default gateway to all edge switches. Each endpoint can use its local VTEP as a default gateway to route traffic outside its IP subnet. The endpoints can do so, not only within a fabric but across independent VXLAN EVPN fabrics (even when fabrics are geographically dispersed), removing suboptimal interfabric traffic paths. Additionally, routed flows between endpoints connected to the same leaf node can be directly routed at the local leaf layer.

- In conjunction with ARP suppression, it reduces the flooding domain to its smallest diameter (the leaf or edge device), and consequently confines the failure domain to that switch.

- It allows transparent host mobility, with the virtual machines continuing to use their respective default gateways (on the local VTEP), within each VXLAN EVPN fabric and across multiple VXLAN EVPN fabrics.

- It does not require you to create any interfabric FHRP filtering, because no protocol exchange is required between Layer 3 anycast gateways.

- It allows better distribution of state (ARP, Continue reading
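The idea behind the distributed anycast gateway, as listed above, is that every leaf presents the same default gateway for a given subnet, so an endpoint keeps the same gateway wherever it attaches. The following Python sketch models only that property. The `Leaf` class and both functions are hypothetical, the leaf names are borrowed from the host mobility example for illustration, and the IP and MAC values are documentation-range placeholders rather than recommended settings.

```python
from dataclasses import dataclass, field


@dataclass
class Leaf:
    name: str
    fabric: int
    # VLAN ID -> (gateway IP, gateway MAC) configured on this leaf
    gateways: dict = field(default_factory=dict)


def provision_anycast_gateway(leaves, vlan, gw_ip, gw_mac):
    """Configure the same gateway IP and MAC for a VLAN on every leaf."""
    for leaf in leaves:
        leaf.gateways[vlan] = (gw_ip, gw_mac)


def default_gateway(leaf, vlan):
    """Gateway an endpoint in `vlan` sees when attached to `leaf`."""
    return leaf.gateways[vlan]


# Hypothetical leaves in two fabrics; addresses are illustrative only.
l12 = Leaf("L12", fabric=1)
l21 = Leaf("L21", fabric=2)
provision_anycast_gateway([l12, l21], 100, "192.0.2.1", "00:00:5e:00:53:01")

if __name__ == "__main__":
    # A VM in VLAN 100 sees the same gateway before and after moving fabrics.
    print(default_gateway(l12, 100) == default_gateway(l21, 100))
```

Because the gateway identity is identical on both leaves, nothing in the endpoint's configuration or ARP cache has to change after a move, which is the point of the transparent host mobility bullet.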

36 – VXLAN EVPN Multi-Fabrics with External Routing Block (part 2)

VXLAN EVPN Multi-Fabric with External Active/Active Gateways

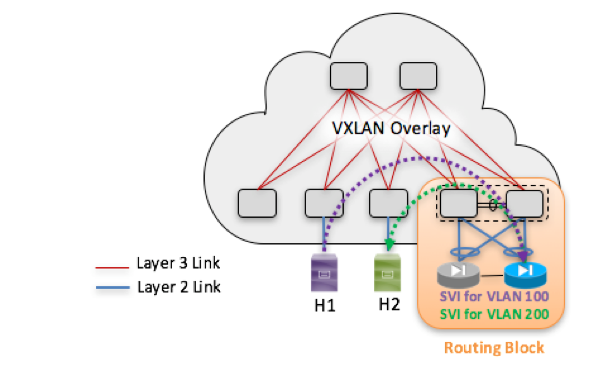

The first use case is simple. Each VXLAN fabric behaves like a traditional Layer 2 network with a centralized routing block. External devices (such as routers and firewalls) provide default gateway functions, as shown in Figure 1.

In the Layer 2–based VXLAN EVPN fabric deployment, the external routing block is used to perform routing functions between Layer 2 segments. The same routing block can be connected to the WAN advertising the public networks from each data center to the outside and to propagate external routes to each fabric.

The routing block consists of a “router-on-a-stick” design (from the fabric’s point of view) built with a pair of traditional routers, Layer 3 switches, or firewalls that serve as the IP gateway. These IP gateways are attached to a pair of vPC border nodes that initiate and terminate the VXLAN EVPN tunnels.

Connectivity between the IP gateways and the border nodes is achieved through a Layer 2 trunk carrying all the VLANs that require routing services.

To improve performance with active default gateways in each data center, reducing the hairpinning of east-west traffic for Continue reading

36 – VXLAN EVPN Multi-Fabrics Design Considerations (part 1)

Notices

Together with my friend and respected colleague Max Ardica, we have tested and qualified the current solution to interconnect multiple VXLAN EVPN fabrics. We have developed this technical material to clarify the network design requirements when the Layer 3 Anycast gateway function is distributed among all server node platforms and all VXLAN EVPN Fabrics. The whole article is organised in 5 different posts.

- This 1st one elaborates the design considerations to interconnect two VXLAN EVPN based fabrics.

- The 2nd post discusses the Layer 2 DCI requirements for interconnecting Layer-2-based VXLAN EVPN fabrics with an external routing block.

- The 3rd covers the Layer 2 and Layer 3 DCI requirements for interconnecting VXLAN EVPN fabrics with a distributed Layer 3 Anycast Gateway.

- The 4th post examines host mobility across two VXLAN EVPN Fabrics.

- Finally, the last post covers inbound and outbound path optimisation with geographically dispersed VXLAN EVPN fabrics.

Introduction

Recently, fabric architecture has become a common and popular design option for building new-generation data center networks. Virtual Extensible LAN (VXLAN) with Multiprotocol Border Gateway Protocol (MP-BGP) Ethernet VPN (EVPN) is essentially becoming the standard technology used for deploying network virtualization overlays in data center fabrics.

Data center networks usually require the interconnection of separate network fabrics, which may also be deployed across geographically dispersed Continue reading

35 – East-West Endpoint localization with LISP IGP Assist

East-West Communication Intra and Inter-sites

For the following scenario, subnets are stretched across multiple locations using a Layer 2 DCI solution. Several use cases require LAN extension between multiple sites, such as live migration, health-check probing for HA clusters (heartbeat), and operational cost containment such as the migration of mainframes. It is assumed that, due to the long distances between sites, the network services are duplicated and active on each site. This option allows the use of local network services such as default gateways, load balancers and security engines distributed across each location, and helps reduce server-to-server communication latency (East-West workflows).

Traditionally, an IP address uses a unique identifier assigned to a specific network entity such as physical system, virtual machine or firewall, default gateway, etc. The routed WAN uses the identifier to also determine the network entity’s location in the IP subnet. When a Virtual Machine migrates from one data center to another, the traditional IP address schema retains its original unique identifier and location, although the physical location has actually changed. As a result, the extended VLAN must share the same subnet so that the TCP/IP parameters of the VM remain the same from site Continue reading
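The problem described above is that a single IP address carries both identity and location. LISP addresses this by separating the endpoint identifier (EID) from the routing locator (RLOC). The Python sketch below is a toy model of that separation, not a LISP implementation: the `MappingTable` class is hypothetical and the addresses come from the documentation ranges.

```python
import ipaddress


class MappingTable:
    """Toy identifier-to-locator table (EID -> RLOC), not a LISP implementation."""

    def __init__(self):
        self._eid_to_rloc = {}

    def register(self, eid: str, rloc: str) -> None:
        """Record (or update) the locator behind which an endpoint sits."""
        key = ipaddress.ip_address(eid)
        self._eid_to_rloc[key] = ipaddress.ip_address(rloc)

    def lookup(self, eid: str) -> str:
        """Return the current locator for an endpoint identifier."""
        return str(self._eid_to_rloc[ipaddress.ip_address(eid)])


# Illustrative addresses from the documentation ranges (RFC 5737).
VM_EID = "192.0.2.10"
DC1_RLOC = "198.51.100.1"
DC2_RLOC = "203.0.113.1"

table = MappingTable()
table.register(VM_EID, DC1_RLOC)      # VM starts in data center 1
before = table.lookup(VM_EID)
table.register(VM_EID, DC2_RLOC)      # VM migrates; its own IP is unchanged
after = table.lookup(VM_EID)

if __name__ == "__main__":
    print(f"{VM_EID}: {before} -> {after}")
```

The VM keeps its identifier through the move while only the locator entry changes, which is what lets the TCP/IP parameters of the VM stay the same from site to site.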

34 – Q-in-VNI and EFP for Hosting Providers

Dear Network and DCI Experts!

While this post is a little outside the DCI focus, and assuming many of you already know Q-in-Q, the question is: are you familiar with Q-in-VNI yet? For those who are not, I think this is a good opportunity to introduce Q-in-VNI deployment with VXLAN EVPN for intra- and inter-VXLAN-based Fabrics, to understand its added value, and to see how one or multiple Client VLANs from the double encapsulation can be selected for further actions.

Although it’s not an unavoidable rule per se, some readers already using Dot1Q tunneling may not necessarily fit into the following use case. Nonetheless, I think it’s safe to say that most Q-in-Q deployments have been used by Hosting Providers for co-location requirements for multiple Clients; hence the choice of the Hosting Provider use case elaborated in this article.

For many years now, Hosting Services have physically sheltered thousands of independent clients’ infrastructures within the Provider’s Data Centers. The Hosting Service Provider is responsible for supporting each and every Client’s data network in its shared network infrastructure. This must be achieved without changing any Tenant’s Layer 2 or Layer 3 parameter, and must also be done as quickly as possible. For many years, the co-location Continue reading
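The requirement above, carrying each Client's traffic without touching its own Layer 2 parameters, is what Q-in-VNI is meant to address. As a rough Python sketch, assuming the usual behaviour where the provider-facing port maps to a VNI and the Client's own VLAN tag is carried unchanged inside the VXLAN payload: the `Frame` and `VxlanPacket` classes, the `q_in_vni_encap` function, and the port names and VNI values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    c_vlan: int   # Client (inner) VLAN tag, left untouched by the provider
    payload: str


@dataclass(frozen=True)
class VxlanPacket:
    vni: int      # provider-assigned segment for this Client
    inner: Frame  # original Client frame, C-tag preserved


# Hypothetical mapping of Client-facing ports to VNIs.
PORT_TO_VNI = {"Eth1/1": 30001, "Eth1/2": 30002}


def q_in_vni_encap(port: str, frame: Frame) -> VxlanPacket:
    """Wrap a tagged Client frame in the VNI assigned to its ingress port."""
    if not 1 <= frame.c_vlan <= 4094:
        raise ValueError(f"invalid VLAN ID: {frame.c_vlan}")
    return VxlanPacket(vni=PORT_TO_VNI[port], inner=frame)


if __name__ == "__main__":
    # Two Clients both use VLAN 10; the VNI keeps them apart.
    a = q_in_vni_encap("Eth1/1", Frame(10, "client-A"))
    b = q_in_vni_encap("Eth1/2", Frame(10, "client-B"))
    print(a.vni, a.inner.c_vlan, b.vni, b.inner.c_vlan)
```

Overlapping Client VLAN IDs therefore do not collide, because separation is carried by the VNI rather than by the Client's own tag.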

Cisco ACI Multipod

Since 2.0, Multipod for ACI enables provisioning a more fault tolerant fabric comprised of multiple pods with isolated control plane protocols. Also, multipod provides more flexibility with regard to the full mesh cabling between leaf and spine switches. When leaf switches are spread across different floors or different buildings, multipod enables provisioning multiple pods per floor or building and providing connectivity between pods through spine switches.

A new White Paper on ACI Multipod is now available

http://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-737855.html?cachemode=refresh

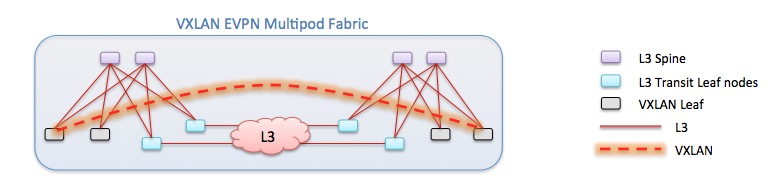

32 – VXLAN Multipod stretched across geographically dispersed datacenters

This article focuses on the single stretched Fabric across multiple locations as mentioned in the previous post (31) through the 1st option.

I have been working with my friends Patrice and Max for several months on building an efficient and resilient solution to stretch a VXLAN Multipod fabric across two sites. The whole technical white paper is now available and can be accessed here:

One of the key use cases for this scenario is an enterprise selecting VXLAN EVPN as the technology of choice for building multiple greenfield data center pods. It therefore becomes logical to extend VXLAN between distant PoDs that are managed and operated as a single administrative domain. This choice makes sense, as a multipod fabric is functionally and operationally a single logical VXLAN fabric, and its deployment is a continuation of the work performed to roll out the pod, simplifying the provisioning of end-to-end Layer 2 and Layer 3 connectivity.

Technically speaking and thanks to the flexibility of VXLAN, we could deploy the overlay network on top of any Layer 3 architecture within a datacenter.

- [Q] However, can we afford to stretch the VXLAN fabric as a single fabric without taking into consideration the risks of losing the whole resources Continue reading

31 – Multiple approaches interconnecting VXLAN Fabrics

As discussed in previous articles, VXLAN data plane encapsulation in conjunction with its control plane MP-BGP AF EVPN is becoming the foremost technology to support the modern network Fabric.

It is therefore interesting to clarify how to interconnect multiple VXLAN/EVPN fabrics geographically dispersed across different locations.

Three approaches can be considered:

- The first option is the extension of multiple sites as one large single stretched VXLAN Fabric. There is no network overlay boundary per se, nor VLAN hand-off at the interconnection, which simplifies operations. This option is also known as geographically dispersed VXLAN Multipod. However, we should not consider this solution a DCI solution, as there is no demarcation or separation between locations. Nonetheless, it is very interesting for its simplicity and flexibility. Consequently, we have tested and validated this design in depth.

- The second option to consider is multiple VXLAN/EVPN-based Fabrics interconnected using a DCI Layer 2 and Layer 3 extension. Each greenfield DC, located at a different site, is deployed as an independent fabric, increasing the autonomy of each site and reinforcing global resiliency. This is often called Multisite. A Data Center Interconnect technology (OTV, VPLS, PBB-EVPN, or even VXLAN/EVPN) is therefore used to extend Layer 2 and Layer Continue reading