Author Archives: Ivan Pepelnjak

One of my readers sent me a question along these lines:

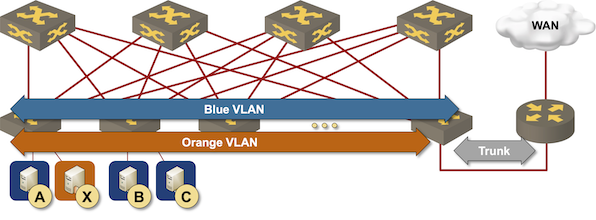

Do I have to have an IBGP session between Customer Edge (CE) routers in a multihomed site if they run EBGP with the upstream provider(s)?

Let’s start with a simple diagram and a refactoring of the question:

Javier Antich, the author of the fantastic AI/ML in Networking webinar, spent years writing the Machine Learning for Network and Cloud Engineers book that is now available in paperback and Kindle format.

I’ve seen a final draft of the book and it’s definitely worth reading. You should also invest some time into testing the scenarios Javier created. Here’s what I wrote in the foreword:

Artificial Intelligence (AI) has been around for decades. It was one of the exciting emerging (and overhyped) topics when I attended university in the late 1980s. Like today, the hype failed to deliver, resulting in a long, long AI winter.

It’s incredible how few CPU resources some network devices consume in a steady state – a netlab user managed to run almost 100 Mikrotik routers on a 24-core server. Starting them simultaneously (like vagrant up tries to do when used with the vagrant-libvirt plugin) is a different story. The router virtual machines are configured with two CPU cores for a good reason, and if they don’t get enough CPU cycles while booting, they get sluggish, Vagrant gives up, and the lab start procedure fails.

One could use a nasty workaround:

Stuart Charlton started the Kubernetes Networking Deep Dive webinar with an overview of basic concepts including the networking model and services. After covering the fundamentals, it was time for The Real Stuff: Container Networking Interface, starting with an overview of Kubernetes SDN architecture.

I get several emails every week from people I’ve never heard of telling me what a wonderful job they could do writing guest blog posts on a range of topics of interest to my audience.

I’m positive you must be pretty intelligent to be a successful scammer, so I’m sure the good ones are using ChatGPT to generate the “unique” content they’re promising. I felt it was high time to return the favor.

Dmitry Perets left a thoughtful comment on my Nothing Works blog post describing why enterprise IT might be even worse than the consumer world.

I think another reason for the “Nothing Works” world is that the only true Management Plane separation that exists in our industry is that of the real “human” management. In the medium/large enterprises they (and their interests, KPIs and so on) are very much separated from the technical workforce. And increasingly so, because today the technical workforce might not even be the employees of the same enterprise. They are likely to come from some IT consultancy outsource – degree of separation which makes a true SDN evangelist envious.

I like using Cisco IOS for my routing protocol virtual labs. It uses a trivial amount of memory and boots relatively fast. There was just one thing that kept annoying me: Cisco IOS release 15.x takes forever to install local routes in the BGP table and even longer to select the best routes and propagate them.

I finally found the culprit: the bgp update-delay nerd knob. Here’s what the documentation has to say about it:

Maybe it’s just me, but I always need a few extra devices in my virtual labs to have endpoints I could ping to/from or to have external routing information sources. We used VRF and VLAN tricks in the days when we had to use physical devices to carve a dozen hosts out of a single Cisco 2501, and life became much easier when you could spin up a few additional virtual machines in a virtual lab instead.

Unfortunately, those virtual machines eat precious resources. For example, netlab allocates 1GB to every Linux virtual machine when you only need bash and ping. Wouldn’t it be great if you could start that ping in a busybox container instead?

Robert Graham published a blog post describing how his IDS/IPS system handled 2 Mpps on a Pentium III CPU 20 years ago… and yet some people keep claiming that “Driving a 100 Gbps network at 80% utilization in both directions consumes 10–20 cores just in the networking stack” (in 2023). I guess a suboptimal-enough implementation can still consume all the CPU cycles it can get and then some.
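
To put those two numbers side by side, here is a back-of-the-envelope sketch of the packet rate behind the quoted claim. The 1500-byte packet size is my assumption (neither figure above comes with a packet size, and smaller packets would raise the rate considerably), and Ethernet framing overhead is ignored, so treat the result as a rough order of magnitude rather than a benchmark.

```python
def packets_per_second(link_gbps, utilization, directions, packet_bytes):
    """Aggregate packets per second for a link at the given load."""
    bits_per_second = link_gbps * 1e9 * utilization * directions
    return bits_per_second / (packet_bytes * 8)


LINK_GBPS = 100       # from the quoted claim
UTILIZATION = 0.8     # from the quoted claim
DIRECTIONS = 2        # "in both directions"
PACKET_BYTES = 1500   # assumption: full-size packets, no framing overhead

total_pps = packets_per_second(LINK_GBPS, UTILIZATION, DIRECTIONS, PACKET_BYTES)
print(f"Total: {total_pps / 1e6:.2f} Mpps")

# Spread the load across the 10-20 cores mentioned in the claim
for cores in (10, 20):
    print(f"{cores} cores: {total_pps / cores / 1e6:.2f} Mpps per core")
```

Under these assumptions, the total is about 13.33 Mpps, or roughly 0.67 to 1.33 Mpps per core, which is in the same ballpark as the 2 Mpps figure quoted for a single Pentium III.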

Matthias Luft concluded his part of the Introduction to Cloud Computing webinar with a case study: how can you migrate an existing workload into a cloud environment?

The simplest way to implement layer-3 forwarding in a network fabric is to offload it to an external device, be it a WAN edge router, a firewall, a load balancer, or any other network appliance.

Routing at the (outer) edge of the fabric