Author Archives: Ivan Pepelnjak

The “we should give different applications different paths across the network” idea never dies (even though in many places the residential Internet gives you enough bandwidth to watch 4K videos), and the “Leveraging Intent-Based Networking and SRv6 for Dynamic End-to-End Traffic Steering” presentation (video) by Severin Dellsperger was an interesting new riff on that ancient grailhunt.

Their solution uses SRv6 for traffic steering, an Intent-Based System that figures out paths across the network, and eBPF on client hosts to add per-application SRv6 headers to outgoing traffic.

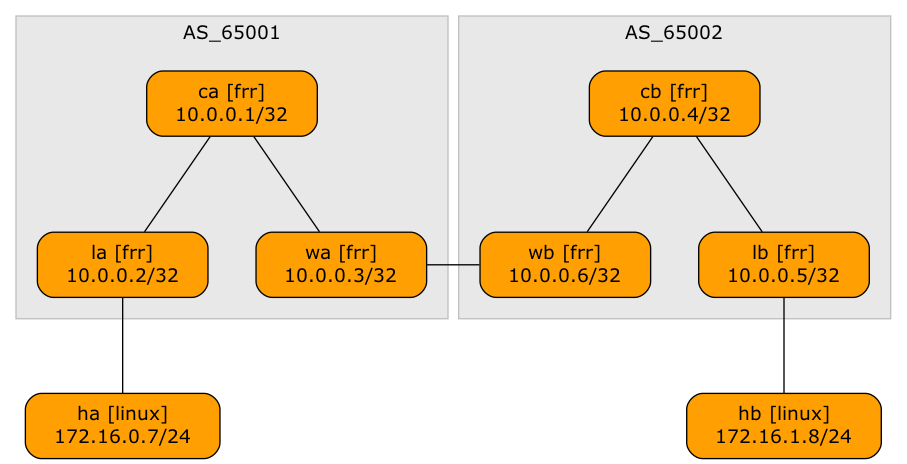

A netlab user wanted to explore a multi-site design where every site runs an independent EVPN fabric, and the inter-site link is either a layer-2 or a layer-3 interconnect (DCI). Let’s start with the easiest scenario: a layer-3 DCI with a separate (virtual) link for every tenant (in the MPLS/VPN world, we’d call that Inter-AS Option A).

Lab topology

A networking engineer (let’s call him Joe) sent me an interesting challenge: they built a data center network with Cisco switches, and the switches flood LLDP packets between servers.

That would be interesting by itself (the whole network would appear as a single hub), but they’re also using DCBX (which is riding in LLDP TLVs), and the DCBX parameters are negotiated between servers (not between servers and adjacent switches), sometimes resulting in NIC resets.

When I was cleaning the “set BGP MED” integration test, I decided that once a BGP prefix is in the BGP table of the BGP peer, there’s no need for a further wait before checking its MED value. After all:

That approach failed miserably with ArubaCX; it was time to investigate the details.

A large number of vendors claim to use industry-standard CLI, which means “something that looks like Cisco IOS, but we can’t say that in public.” The implementations of that “standard” are full of quirks; as I was making fun of Cisco IOS last week, it’s only fair to look at how others deal with BGP community propagation.

netlab has BGP configuration templates for 14 different platforms, including these implementations that look like Cisco IOS from a distance if you squint just right: Arista EOS, Aruba CX, and FRRouting. You can check the configuration templates if you wish; here’s the TC&DB overview:

The SwiNOG 40 event started with an interesting presentation on Building Trustworthy Network Automation (video) by Damien Garros (now CEO @ OpsMill) who discussed the principles one can use to build a trustworthy network automation solution, including idempotency, dry runs, and transactional changes. He also covered the crucial roles of the declarative approach, version control, and testing.

If you have ever watched any of my network automation materials, you won’t be surprised by anything he said, but if you’re just starting your network automation journey, you MUST watch this presentation to get your bearings straight.
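Two of the principles mentioned above, idempotency and dry runs, can be illustrated with a minimal sketch. It is not taken from the presentation: `Device`, `plan_changes`, and `apply_desired_state` are hypothetical names, and the device is just an in-memory dictionary standing in for a real configuration.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """Hypothetical in-memory stand-in for a device configuration."""
    name: str
    config: dict = field(default_factory=dict)


def plan_changes(device: Device, desired: dict) -> dict:
    """Return only the settings that differ from the desired state."""
    return {k: v for k, v in desired.items() if device.config.get(k) != v}


def apply_desired_state(device: Device, desired: dict, dry_run: bool = False) -> dict:
    """Apply the desired state; with dry_run=True, only report the diff."""
    changes = plan_changes(device, desired)
    if not dry_run:
        device.config.update(changes)
    return changes


if __name__ == "__main__":
    sw = Device("sw1", {"hostname": "sw1", "mtu": 1500})
    desired = {"hostname": "sw1", "mtu": 9000}
    print(apply_desired_state(sw, desired, dry_run=True))  # reports the diff, changes nothing
    print(apply_desired_state(sw, desired))                # applies the diff
    print(apply_desired_state(sw, desired))                # second run finds nothing to change
```

The function describes the desired end state instead of a list of commands, which is what makes a repeated run a no-op and lets the dry run reuse the same diff logic.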

Rodney Brooks republished an article on great AI expectations that he wrote 37 years ago. Not surprisingly, apart from a few technical details triggered by four decades of exponential growth in silicon capabilities, the article could have been written yesterday.

Side note: I’m a bit younger than Rodney, but I also went through at least three waves of AI hype cycles, starting with Prolog and 4GL, then expert systems, and finally neural networks. Around that time, I stopped caring and focused on networking, but I have enough battle scars to remain skeptical.

Just when I thought no vendor ~~stupidity~~ peculiarity could surprise me, Cisco IOS/XE proved me wrong.

I was improving a completely unrelated BGP functionality. I ran BGP integration tests on Cisco IOL (because it’s the fastest one to boot), and the BGP community propagation test failed. After verifying that I did not change the template and that the data structures had not changed, I checked the IOL release I was using.

Surprise 🎉🎉: the `neighbor send-community` configurations that worked since (at least) the IOS Classic release 15.x stopped working in Cisco IOS/XE release 17.16.01a.

PlanetScale published a great article describing the high-level principles of how storage devices work and covering everything from tape drives to SSDs and network-attached storage — a must-read for anyone even remotely interested in how their data is stored.

Is an LLM a stubborn donkey, a genie, or a slot machine (and why)? Find out in the “Who is LLM?” article by Martin Fowler.

Changing an existing BGP routing policy is always tricky on platforms that apply line-by-line changes to device configurations (Cisco IOS and most other platforms claiming to have industry-standard CLI, with the notable exception of Arista EOS). The safest approach seems to be:

One should never trust the technical details published by the industry press, but assuming the Tomahawk Ultra puff piece isn’t too far off the mark, the new Broadcom ASIC (supposedly loosely based on emerging Ultra Ethernet specs):

If you’re ancient enough, you might recognize #3 as part of Fibre Channel, #2 and #3 as part of IEEE 802.1 LLC2 (used by IBM to implement SNA over Token Ring and Ethernet), and all three as the fundamental ideas of X.25 that Broadcom obviously reinvented at 800 Gbps speeds, proving (yet again) RFC 1925 Rule 11.

A while ago, I published a blog post proudly describing the netlab integration test that should check for incorrect OSPF network types in netlab-generated device configurations. Almost immediately, Erik Auerswald pointed out that my test wouldn’t detect that error (it might detect other errors, though) as the OSPF network adjacency is always established even when the adjacent routers have mismatching OSPF network types.

I made one of the oldest testing mistakes: I checked whether my test would work under the correct conditions but not whether it would detect an incorrect condition.
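One way to guard against that mistake is to run every check against a known-bad case as well as a known-good one. The following is a minimal sketch, not the actual netlab test: the router dictionaries, `adjacency_check`, `network_type_check`, and `detects_fault` are all hypothetical, and the "bad" data simply encodes the behavior described above (adjacency up despite mismatching network types).

```python
def adjacency_check(r1: dict, r2: dict) -> bool:
    """Naive check: passes whenever both routers report the adjacency as up."""
    return r1["adjacency_up"] and r2["adjacency_up"]


def network_type_check(r1: dict, r2: dict) -> bool:
    """Direct check: passes only when both ends use the same network type."""
    return r1["network_type"] == r2["network_type"]


def detects_fault(check, good: tuple, bad: tuple) -> bool:
    """A useful check accepts the good case AND rejects the bad one."""
    return check(*good) and not check(*bad)


# Hypothetical data: the adjacency comes up even with mismatching types
GOOD = (
    {"network_type": "point-to-point", "adjacency_up": True},
    {"network_type": "point-to-point", "adjacency_up": True},
)
BAD = (
    {"network_type": "point-to-point", "adjacency_up": True},
    {"network_type": "broadcast", "adjacency_up": True},
)

if __name__ == "__main__":
    print(detects_fault(adjacency_check, GOOD, BAD))     # False: the check cannot see the error
    print(detects_fault(network_type_check, GOOD, BAD))  # True: the check catches the mismatch
```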

Writing tests that check the correctness of network device configurations is hard (overview, more details). It’s also an interesting exercise in getting the timing just right:

And just when you think you nailed it, you encounter a device that blows your assumptions out of the water.
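A common way to deal with timing in such tests is to poll for a condition with a deadline instead of sleeping for a fixed time. The sketch below is a generic illustration and an assumption on my part, not how netlab implements its validation; `wait_for` and its parameters are hypothetical.

```python
import time
from typing import Callable


def wait_for(condition: Callable[[], bool],
             timeout: float = 10.0,
             interval: float = 0.5) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True          # condition met, no need to wait any longer
        if time.monotonic() >= deadline:
            return False         # gave up: the caller decides how to report it
        time.sleep(interval)
```

The condition is always evaluated at least once, so a check that is already true returns immediately, while a slow device gets the full timeout before the test fails.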

netlab release 25.07 was published yesterday. The major new features include:

But wait, there’s much more:

As I was running the netlab pre-release integration tests, I noticed that ArubaCX failed the IPv6 Common Services test (it worked before). Here’s the gist of what that test does:

Here’s the relevant part of the netlab lab topology:

Did you ever wonder why pressing an up-arrow in a (Linux) terminal window sometimes recalls the previous command but other times creates `^[[A`?

Julia Evans did, and spent months exploring the quirks of the Linux terminal (and writing blog posts describing what she found), finally resulting in The Secret Rules of the Terminal (including the various shells, terminal emulators, escape codes, and TTY driver). A must-read if you’re a newbie who wants to understand why things happen the way they do.
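As a small illustration of where `^[[A` comes from: terminals commonly send the up-arrow key as the escape character followed by `[A`, and a program that does not interpret that sequence may echo the escape character in caret notation (`^[`). The helper below is a hypothetical sketch, not code from the article.

```python
ESC = "\x1b"            # the escape control character (decimal 27)
UP_ARROW = ESC + "[A"   # sequence commonly sent for the up-arrow key


def caret_notation(text: str) -> str:
    """Render control characters (0x00-0x1F) in caret notation, e.g. ESC as ^[."""
    return "".join(
        "^" + chr(ord(ch) + 64) if ord(ch) < 32 else ch
        for ch in text
    )


print(caret_notation(UP_ARROW))  # ^[[A
```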

One of the happy netlab users sent me an interesting challenge:

Unfortunately, you cannot add new devices to an already-running lab. You must shut down the lab, change the topology description, and start a new lab. However, there are things you can do to preserve the extra work you already did:

Martin Fowler published an interesting article about Expert Generalists. Straight from the abstract:

As computer systems get more sophisticated we’ve seen a growing trend to value deep specialists. But we’ve found that our most effective colleagues have a skill in spanning many specialties.

Also:

There are two sides to real expertise. The first is the familiar depth: a detailed command of one domain’s inner workings. The second, crucial in our fast-moving field is the ability to learn quickly, spot the fundamentals that run beneath shifting tools and trends, and apply them wherever we land.

Remember how I told you to focus on the fundamentals? 😎

Have you ever managed to type `reload` in the wrong terminal window and bring down a core switch (I probably did)? I managed to do the Ubuntu equivalent of that stupidity: I told my main Ubuntu server to `sudo poweroff` instead of doing that to a Vagrant VM.

Fortunately, the open-source world doesn’t have to rely on the roadmaps created by networking vendors’ product managers; if there’s a big enough pain, someone will solve it.