Author Archives: Ivan Pepelnjak

We have school holidays this week, so I’m reposting wonderful comments that would otherwise be lost somewhere in the page margins. Today: Dmitry Perets on the interactions between BFD and Graceful Restart (GR).

Well, assuming that the C-bit is set honestly (will be funny if not) and assuming that the Helper is using this bit correctly (and I think it’s pretty well defined what “correctly” means - see section 4.3 in RFC 5882), the answer is pretty clear.
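To make that reasoning a bit more tangible, here is a minimal sketch of how a GR helper might react when a BFD session goes down. It is a simplified reading of RFC 5882 section 4.3, not any vendor's implementation; the names `Action` and `helper_reaction` are hypothetical, and the special handling of planned restarts is left out.

```python
from enum import Enum


class Action(Enum):
    TOPOLOGY_CHANGE = "signal a topology change"
    ABORT_GR = "abort graceful restart and signal a topology change"
    KEEP_GR = "keep graceful restart running"


def helper_reaction(local_c_bit: bool, remote_c_bit: bool, gr_in_progress: bool) -> Action:
    """Hypothetical helper-side decision taken when a BFD session fails.

    local_c_bit / remote_c_bit: C-bit (Control Plane Independent) as sent
    in BFD Control packets in each direction.
    """
    if not gr_in_progress:
        # No restart to protect: treat the BFD failure as an ordinary failure.
        return Action.TOPOLOGY_CHANGE
    if local_c_bit and remote_c_bit:
        # BFD does not share fate with the control plane on either system,
        # so the failure implies a forwarding-plane problem: abort GR to
        # avoid black holes.
        return Action.ABORT_GR
    # BFD shares fate with the control plane on at least one system; the
    # failure is likely a side effect of the restart, so keep GR going.
    return Action.KEEP_GR


if __name__ == "__main__":
    for bits in [(True, True), (True, False), (False, False)]:
        print(bits, "->", helper_reaction(*bits, gr_in_progress=True).value)
```

The sketch assumes the C-bit is "set honestly": if a box claims control-plane independence it does not have, the helper would abort a perfectly healthy graceful restart.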

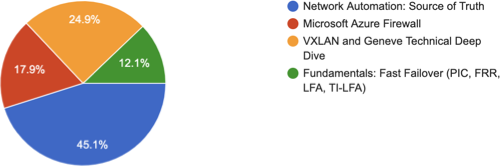

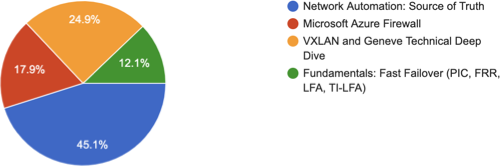

A few weeks ago I asked my subscribers which webinar they’d like to see in November (thanks a million to everyone who replied!). Not surprisingly, network automation got the top spot, but I was a bit sad to see my long-term pet project at the bottom of the list:

In case you’re ever asked to justify an investment in network automation, read How to Make the Case for Automation Architecture first. Not surprisingly, it includes the evergreen “what problem are you trying to solve?”

Network validation is becoming another overhyped buzzword with many opinionated pundits talking about it and few environments using it in practice (why am I not surprised?)

As always, there are exceptions. They don’t have to be members of the FAANG club, and some of them get the job done with open-source tools regardless of what vendor marketers would like you to believe. For example, Donatas Abraitis described how the Hostinger networking team gradually implemented network validation using Cumulus VX, Vagrant, SuzieQ, PyTest and Test Kitchen. Enjoy!

In May 2021, Javier Antich ran a great webinar explaining the principles of Artificial Intelligence and Machine learning and how they apply (or not) to networking.

He started with a brief overview of AI/ML hype that should help you understand why there’s a bit of a difference between self-driving cars (not that we got there) and self-driving networks.

A while ago, my friend Nicola Modena sent me another intriguing curveball:

Imagine a CTO who has invested millions in a super-secure data center and wants to consolidate all compute workloads. If you were asked to run a BGP Route Reflector as a VM in that environment, and would like to bring OSPF or ISIS to that box to enable BGP ORR, would you use a GRE tunnel to avoid a dedicated VLAN or boring other hosts with routing protocol hello messages?

While there might be good reasons for doing that, my first knee-jerk reaction was:

I was happily munching popcorn while watching the latest season of the “Lack of DHCPv6 on Android” soap opera on the v6ops mailing list when one of the lead actors, trying to justify the current state of affairs with a technical argument, quoted an RFC to prove his rightful indignation with DHCPv6 and the decision not to implement it in Android:

[…not having multiple IPv6 addresses per interface…] is also harmful for a variety of reasons, and for general purpose devices, it’s not recommended by the IETF. That’s exactly what RFC 7934 is about - explaining why it’s harmful.

The whole High Availability Switching series started with a question along the lines of “does it make sense to run BFD together with Graceful Restart?” After Non-Stop Forwarding 101, Graceful Restart 101, and Graceful Restart and Convergence Speed, we finally have enough information to answer that question.

TL&DR: Most probably not.

A more nuanced answer depends (as always) on a gazillion implementation details.

In mid-October I finally found time to add the icing to the netsim-tools cake: the netlab up command takes a lab topology and does everything needed to bring up a running virtual lab:

Every other blue moon someone writes (yet another) article along the lines of “professional liability would solve so many broken things in the IT industry”. This time it’s Poul-Henning Kamp of FreeBSD and Varnish fame with The Software Industry IS STILL the Problem. Unfortunately, it’s just another stab at the windmills considering how much money that industry pours into lobbying.

Decades ago, there was a trick question on the CCIE exam exploring the intricate relationships between the MAC and ARP tables. I always understood the explanation for about 10 minutes, and then I was back to “I knew why that’s true, but now I lost it.”

Fast forward 20 years, and we’re still seeing the same challenges, this time in EVPN networks using in-subnet proxy ARP. For more details, read the excellent ARP problems in EVPN article by Dmytro Shypovalov (I understood the problem after reading the article, and now it’s all a blur 🤷‍♂️).