Author Archives: Michelle Chen

In recent months, we’ve seen a leap forward for closed-source image generation models with the rise of Google’s Nano Banana and OpenAI’s image generation models. Today, we’re happy to share that an open-weight contender is back with the launch of Black Forest Labs’ FLUX.2 [dev], now available to run on Cloudflare’s inference platform, Workers AI. You can read more about the model in BFL’s launch blog post.

We have been huge fans of Black Forest Labs’ FLUX image models since their earliest versions. Our hosted version of FLUX.1 [schnell] is one of the most popular models in our catalog for its photorealistic outputs and high-fidelity generations. When the time came to host the licensed version of their new model, we jumped at the opportunity. The FLUX.2 model takes all the best features of FLUX.1 and amps them up, generating even more realistic, grounded images with added customization support like JSON prompting.

Our Workers AI hosted version of FLUX.2 has some specific patterns, like using multipart form data to support input images (up to four 512x512 images) and output images of up to 4 megapixels. The multipart form Continue reading

Getting the observability you need is challenging enough when the code is deterministic, but AI presents a new challenge: a core part of your users’ experience now relies on a non-deterministic engine that produces unpredictable outputs. Many factors can influence the results, including the model and the system prompt. On top of that, you still have to worry about performance, reliability, and costs.

Solving performance, reliability and observability challenges is exactly what Cloudflare was built for, and two years ago, with the introduction of AI Gateway, we wanted to extend to our users the same levels of control in the age of AI.

Today, we’re excited to announce several features to make building AI applications easier and more manageable: unified billing, secure key storage, dynamic routing, and security controls with Data Loss Prevention (DLP). This means that AI Gateway becomes your go-to place to control costs and API keys, route between different models and providers, and manage your AI traffic. Check out our new AI Gateway landing page for more information at a glance.

When using an AI provider, you typically have to sign up for Continue reading

When we first launched Workers AI, we made a bet that AI models would get faster and smaller. We built our infrastructure around this hypothesis, adding specialized GPUs to our datacenters around the world that can serve inference to users as fast as possible. We created our platform to be as general as possible, but we also identified niche use cases that fit our infrastructure well, such as low-latency image generation or real-time audio voice agents. To lean in on those use cases, we’re bringing on some new models that will help make it easier to develop for these applications.

Today, we’re excited to announce that we are expanding our model catalog to include closed-source partner models that fit this use case. We’ve partnered with Leonardo.Ai and Deepgram to bring their latest and greatest models to Workers AI, hosted on Cloudflare’s infrastructure. Leonardo and Deepgram both have models with a great speed-to-performance ratio that suit the infrastructure of Workers AI. We’re starting off with these great partners — but expect to expand our catalog to other partner models as well.

The benefit of using these models on Workers AI is that we don’t only have a standalone inference Continue reading

OpenAI has just announced their latest open-weight models — and we are excited to share that we are working with them as a Day 0 launch partner to make these models available in Cloudflare's Workers AI. Cloudflare developers can now access OpenAI's first open model, leveraging these powerful new capabilities on our platform. The new models are available starting today at @cf/openai/gpt-oss-120b and @cf/openai/gpt-oss-20b.

Workers AI has always been a champion for open models and we’re thrilled to bring OpenAI's new open models to our platform today. Developers who want transparency, customizability, and deployment flexibility can rely on Workers AI as a place to deliver AI services. Enterprises that need the ability to run open models to ensure complete data security and privacy can also deploy with Workers AI. We are excited to join OpenAI in fulfilling their mission of making the benefits of AI broadly accessible to builders of any size.

The OpenAI models have been released in two sizes: a 120 billion parameter model and a 20 billion parameter model. Both of them are Mixture-of-Experts models – a popular architecture for recent model releases – that allow relevant experts to be called for a Continue reading

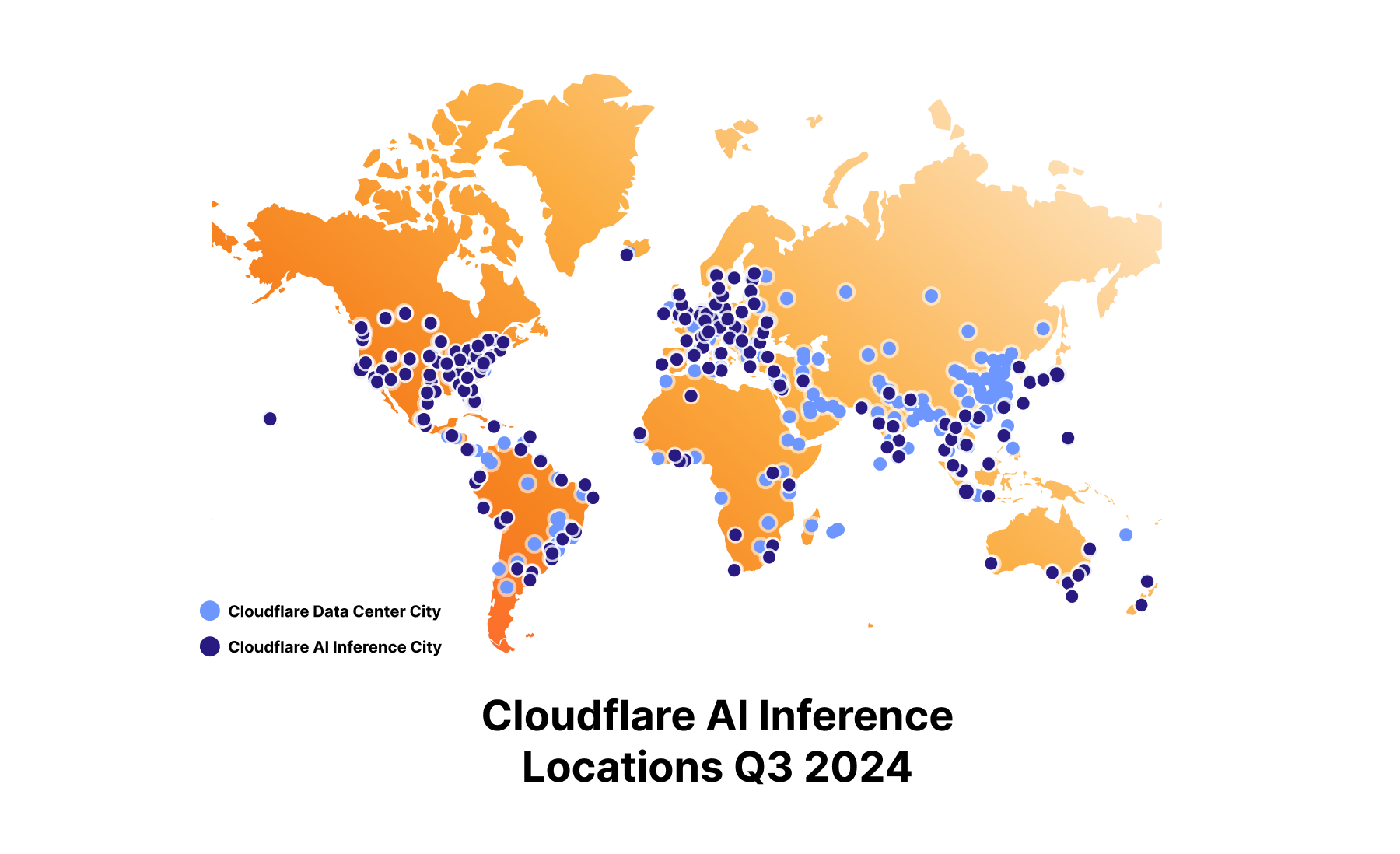

Since the launch of Workers AI in September 2023, our mission has been to make inference accessible to everyone.

Over the last few quarters, our Workers AI team has been heads down on improving the quality of our platform, working on various routing improvements, GPU optimizations, and capacity management improvements. Managing a distributed inference platform is not a simple task, but distributed systems are also what we do best. You’ll notice a recurring theme from all these announcements that has always been part of the core Cloudflare ethos — we try to solve problems through clever engineering so that we are able to do more with less.

Today, we’re excited to introduce speculative decoding to bring you faster inference, an asynchronous batch API for large workloads, and expanded LoRA support for more customized responses. Lastly, we’ll be recapping some of our newly added models, updated pricing, and unveiling a new dashboard to round out the usability of the platform.
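To make the idea of speculative decoding concrete, here is a minimal toy sketch of the greedy variant: a cheap draft model proposes a few tokens, and the larger target model verifies them, keeping the longest agreeing prefix. Everything here is an assumption for illustration, including the `NextToken` type, the function names, and the deterministic stand-in "models"; it is not how Workers AI implements the feature. In a real system the verification step is a single batched forward pass, which this sketch only counts rather than performs.

```typescript
// A "model" here is just a function from context to its next greedy token.
type NextToken = (context: readonly string[]) => string;

interface DecodeResult {
  tokens: string[];
  targetPasses: number; // conceptual forward passes of the large model
}

// Baseline: one target pass per generated token.
function greedyDecode(target: NextToken, prompt: readonly string[], maxNewTokens: number): DecodeResult {
  const tokens = [...prompt];
  for (let i = 0; i < maxNewTokens; i++) tokens.push(target(tokens));
  return { tokens, targetPasses: maxNewTokens };
}

// Speculative variant: the draft guesses `draftLength` tokens, the target checks them.
function speculativeDecode(
  target: NextToken,
  draft: NextToken,
  prompt: readonly string[],
  maxNewTokens: number,
  draftLength: number,
): DecodeResult {
  const tokens = [...prompt];
  const limit = prompt.length + maxNewTokens;
  let targetPasses = 0;

  while (tokens.length < limit) {
    // 1. Draft model proposes a short continuation.
    const scratch = [...tokens];
    const proposal: string[] = [];
    for (let i = 0; i < draftLength; i++) {
      const guess = draft(scratch);
      proposal.push(guess);
      scratch.push(guess);
    }

    // 2. Target verifies the proposal (counted as one batched pass).
    targetPasses++;
    let allAccepted = true;
    for (const guess of proposal) {
      if (tokens.length >= limit) break;
      const expected = target(tokens);
      tokens.push(expected); // always keep the target's own token
      if (expected !== guess) {
        allAccepted = false; // first disagreement ends this round
        break;
      }
    }

    // 3. If every guess matched, the same pass also yields one extra token.
    if (allAccepted && tokens.length < limit) tokens.push(target(tokens));
  }
  return { tokens, targetPasses };
}

// Toy stand-ins: the draft agrees with the target except at a few positions.
const toyTarget: NextToken = (ctx) => `t${ctx.length % 5}`;
const toyDraft: NextToken = (ctx) => (ctx.length % 7 === 6 ? "?" : `t${ctx.length % 5}`);

const baseline = greedyDecode(toyTarget, [], 12);
const speculative = speculativeDecode(toyTarget, toyDraft, [], 12, 4);
console.log(baseline.targetPasses, speculative.targetPasses);
```

Because the target's own token is always the one kept, the output matches plain greedy decoding; the speed-up comes only from needing fewer target passes when the draft guesses well.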

We’re excited to roll out speed improvements to models in our catalog, starting with the Llama 3.3 70b model. These improvements include speculative decoding, prefix caching, an updated inference backend, Continue reading

As one of Meta’s launch partners, we are excited to make Meta’s latest and most powerful model, Llama 4, available on the Cloudflare Workers AI platform starting today. Check out the Workers AI Developer Docs to begin using Llama 4 now.

Llama 4 is an industry-leading release that pushes forward the frontiers of open-source generative Artificial Intelligence (AI) models. Llama 4 relies on a novel design that combines a Mixture of Experts architecture with an early-fusion backbone that allows it to be natively multimodal.

The Llama 4 “herd” is made up of two models: Llama 4 Scout (109B total parameters, 17B active parameters) with 16 experts, and Llama 4 Maverick (400B total parameters, 17B active parameters) with 128 experts. The Llama 4 Scout model is available on Workers AI today.
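As a quick worked example of what "active parameters" means in a Mixture of Experts model, the sketch below computes the share of each model's weights that is used for a given token, using only the figures quoted above. The `MoeModel` interface and `activeFraction` helper are illustrative names, not part of any Llama or Workers AI API.

```typescript
// Parameter counts are in billions, taken from the figures quoted in the post.
interface MoeModel {
  name: string;
  totalParamsB: number;
  activeParamsB: number;
  experts: number;
}

const llama4: MoeModel[] = [
  { name: "Llama 4 Scout", totalParamsB: 109, activeParamsB: 17, experts: 16 },
  { name: "Llama 4 Maverick", totalParamsB: 400, activeParamsB: 17, experts: 128 },
];

// Fraction of the model's weights that participate in processing one token.
function activeFraction(model: MoeModel): number {
  return model.activeParamsB / model.totalParamsB;
}

for (const model of llama4) {
  const percent = (activeFraction(model) * 100).toFixed(2);
  console.log(`${model.name}: ${percent}% of parameters active`);
}
```

Scout activates roughly 15.6% of its weights per token and Maverick roughly 4.25%, which is why both can have the same per-token compute footprint despite very different total sizes.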

Llama 4 Scout has a context window of up to 10 million (10,000,000) tokens, which makes it one of the first open-source models to support a window of that size. A larger context window makes it possible to hold longer conversations, deliver more personalized responses, and support better Retrieval Augmented Generation (RAG). For example, users can take advantage of that increase to summarize multiple documents or Continue reading

Birthday Week 2024 marks the first anniversary of Cloudflare’s AI developer products: Workers AI, AI Gateway, and Vectorize. For this first birthday, we’re excited to announce powerful new features to elevate the way you build with AI on Cloudflare.

Workers AI is getting a big upgrade, with more powerful GPUs that enable faster inference and bigger models. We’re also expanding our model catalog to be able to dynamically support models that you want to run on us. Finally, we’re saying goodbye to neurons and revamping our pricing model to be simpler and cheaper. On AI Gateway, we’re moving forward on our vision of becoming an ML Ops platform by introducing more powerful logs and human evaluations. Lastly, Vectorize is going GA, with expanded index sizes and faster queries.

Whether you want the fastest inference at the edge, optimized AI workflows, or vector database-powered RAG, we’re excited to help you harness the full potential of AI and get started on building with Cloudflare.

The first thing that you notice about an application is how fast, or in many cases, how slow it is. This is especially true of AI applications, Continue reading

At Cloudflare, we’re big supporters of the open-source community – and that extends to our approach for Workers AI models as well. Our strategy for our Cloudflare AI products is to provide a top-notch developer experience and toolkit that can help people build applications with open-source models.

We’re excited to be one of Meta’s launch partners to make their newest Llama 3.1 8B model available to all Workers AI users on Day 1. You can run their latest model by simply swapping out your model ID to @cf/meta/llama-3.1-8b-instruct or test out the model on our Workers AI Playground. Llama 3.1 8B is free to use on Workers AI until the model graduates out of beta.
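As a minimal sketch of what swapping the model ID looks like in practice, the helper below passes the model ID as a plain string argument, so moving to Llama 3.1 8B is a one-constant change. The `AiBinding` interface is a simplified stand-in for the Workers AI binding written for this example, and the `ask` helper and system prompt are assumptions rather than anything from the post.

```typescript
// Simplified stand-in for the Workers AI binding: only the shape used below.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface AiBinding {
  run(model: string, inputs: { messages: ChatMessage[] }): Promise<unknown>;
}

// The model ID quoted in the post; swap this constant to change models.
const LLAMA_3_1_8B = "@cf/meta/llama-3.1-8b-instruct";

// Hypothetical helper: sends one user question to whichever model ID is given.
async function ask(ai: AiBinding, model: string, question: string): Promise<unknown> {
  const messages: ChatMessage[] = [
    { role: "system", content: "You are a helpful assistant." },
    { role: "user", content: question },
  ];
  return ai.run(model, { messages });
}
```

Inside a Worker, this would be called as `ask(env.AI, LLAMA_3_1_8B, "...")`, assuming the binding is configured under the name `AI`.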

Meta’s Llama collection of models has consistently shown high-quality performance in areas like general knowledge, steerability, math, tool use, and multilingual translation. Workers AI is excited to continue to distribute and serve the Llama collection of models on our serverless inference platform, powered by our globally distributed GPUs.

The Llama 3.1 model is particularly exciting, as it is released in a higher precision (bfloat16), incorporates function calling, and adds support across 8 languages. Having multilingual support built-in means that you can Continue reading

We are thrilled to give developers around the world the ability to build AI applications with Meta Llama 3 using Workers AI. We are proud to be a launch partner with Meta for their newest 8B Llama 3 model, and excited to continue our partnership to bring the best of open-source models to our inference platform.

Workers AI’s initial launch in beta included support for Llama 2, as it was one of the most requested open source models from the developer community. Since that initial launch, we’ve seen developers build all kinds of innovative applications including knowledge sharing chatbots, creative content generation, and automation for various workflows.

At Cloudflare, we know developers want simplicity and flexibility, with the ability to build with multiple AI models while optimizing for accuracy, performance, and cost, among other factors. Our goal is to make it as easy as possible for developers to use their models of choice without having to worry about the complexities of hosting or deploying models.

As soon as we learned about the development of Llama 3 from our partners at Meta, we knew developers would want to start building with it as quickly as possible. Continue reading

This post is also available in 简体中文, 繁體中文, 日本語, 한국어, Deutsch, Français and Español.

Welcome to Tuesday – our AI day of Developer Week 2024! In this blog post, we’re excited to share an overview of our new AI announcements and vision, including news about Workers AI officially going GA with improved pricing, a GPU hardware momentum update, an expansion of our Hugging Face partnership, Bring Your Own LoRA fine-tuned inference, Python support in Workers, more providers in AI Gateway, and Vectorize metadata filtering.

Today, we’re excited to announce that our Workers AI inference platform is now Generally Available. After months of being in open beta, we’ve improved our service with greater reliability and performance, unveiled pricing, and added many more models to our catalog.

With Workers AI, our goal is to make AI inference as reliable and easy to use as the rest of Cloudflare’s network. Under the hood, we’ve upgraded the load balancing that is built into Workers AI. Requests can now be routed to more GPUs in more cities, and each city is aware of the total available capacity for AI inference. If the request Continue reading

This post is also available in 简体中文, 繁體中文, 日本語, 한국어, Deutsch, Français and Español.

Today, we’re excited to announce that you can now run fine-tuned inference with LoRAs on Workers AI. This feature is in open beta and available for pre-trained LoRA adapters to be used with Mistral, Gemma, or Llama 2, with some limitations. Take a look at our product announcements blog post to get a high-level overview of our Bring Your Own (BYO) LoRAs feature.

In this post, we’ll do a deep dive into what fine-tuning and LoRAs are, show you how to use them on Workers AI, and then delve into the technical details of how we implemented the feature on our platform.

Fine-tuning is a general term for modifying an AI model by continuing to train it with additional data. The goal of fine-tuning is to increase the probability that a generation is similar to your dataset. Training a model from scratch is not practical for many use cases given how expensive and time-consuming it can be. By fine-tuning an existing pre-trained model, Continue reading

On February 6th, 2024, we announced eight new models that we added to our catalog for text generation, classification, and code generation use cases. Today, we’re back with seventeen (17!) more models, focused on enabling new types of tasks and use cases with Workers AI. Our catalog is now nearing 40 models, so we also decided to revamp our developer documentation so that users can easily search and discover new models.

The new models are listed below, and the full Workers AI catalog can be found on our new developer documentation.

Text generation

Summarization

Text-to-image

Image-to-text

Today’s catalog update includes a number of new language models so that developers can pick and choose the best LLMs for their use cases. Although most LLMs can be generalized to work in any instance, there are many benefits to choosing models that are tailored for a specific use case. We are excited to bring you some new large language models (LLMs), small language models (SLMs), Continue reading

Today, we’re excited to announce our beta of AI Gateway – the portal to making your AI applications more observable, reliable, and scalable.

AI Gateway sits between your application and the AI APIs that your application makes requests to (like OpenAI) – so that we can cache responses, limit and retry requests, and provide analytics to help you monitor and track usage. AI Gateway handles the things that nearly all AI applications need, saving you engineering time, so you can focus on what you're building.

It only takes one line of code for developers to get started with Cloudflare’s AI Gateway. All you need to do is replace the URL in your API calls with your unique AI Gateway endpoint. For example, with OpenAI you would define your baseURL as "https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/openai" instead of "https://api.openai.com/v1" – and that’s it. You can keep your tokens in your code environment, and we’ll log the request through AI Gateway before letting it pass through to the final API with your token.

// configuring AI gateway with the dedicated OpenAI endpoint

const openai = new OpenAI({

apiKey: env.OPENAI_API_KEY,

baseURL: "https://gateway.ai. Continue reading
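As a minimal sketch of the URL swap described above, a small helper could assemble the gateway endpoint from its parts. The function name `gatewayBaseUrl` and its parameters are illustrative assumptions; only the URL pattern itself comes from the post.

```typescript
// Builds an AI Gateway base URL following the pattern quoted in the post:
// https://gateway.ai.cloudflare.com/v1/ACCOUNT_TAG/GATEWAY/openai
function gatewayBaseUrl(accountTag: string, gateway: string, provider: string): string {
  // Encode each path segment so unexpected characters cannot break the URL.
  const segments = [accountTag, gateway, provider].map((part) => encodeURIComponent(part));
  return `https://gateway.ai.cloudflare.com/v1/${segments.join("/")}`;
}

// Placeholder values; substitute your own account tag and gateway name.
const baseURL = gatewayBaseUrl("ACCOUNT_TAG", "GATEWAY", "openai");
console.log(baseURL);
```

The resulting string is what you would pass as `baseURL` in place of "https://api.openai.com/v1" when constructing the client, while the API key stays in your own environment.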