Helping keep customers safe with leaked password notification

Password reuse is a real problem. When people use the same password across multiple services, it creates a risk that a breach of one service will give attackers access to a different, apparently unrelated, service. Attackers know people reuse passwords and build giant lists of known passwords and known usernames or email addresses.

If you got to the end of that paragraph and realized you’ve reused the same password multiple places, stop reading and go change those passwords. We’ll wait.

To help protect Cloudflare customers who have used a password attackers know about, we are releasing a feature to improve the security of the Cloudflare dashboard for all our customers by automatically checking whether their Cloudflare user password has appeared in an attacker's list. Cloudflare will securely check a customer’s password against threat intelligence sources that monitor data breaches in other services.

If a customer logs in to Cloudflare with a password that was leaked in a breach elsewhere on the Internet, Cloudflare will alert them and ask them to choose a new password.

For some customers, the news that their password was known to hackers will come as a surprise – no one wants to intentionally use passwords that Continue reading

Using machine learning to detect bot attacks that leverage residential proxies

Bots using residential proxies are a major source of frustration for security engineers trying to fight online abuse. These engineers often see a similar pattern of abuse when well-funded, modern botnets target their applications. Advanced bots bypass country blocks, ASN blocks, and rate-limiting. Every time, the bot operator moves to a new IP address space until they blend in perfectly with the “good” traffic, mimicking real users’ behavior and request patterns. Our new Bot Management machine learning model (v8) identifies residential proxy abuse without resorting to IP blocking, which can cause false positives for legitimate users.

Background

One of the main sources of Cloudflare’s bot score is our bot detection machine learning model which analyzes, on average, over 46 million HTTP requests per second in real time. Since our first Bot Management ML model was released in 2019, we have continuously evolved and improved the model. Nowadays, our models leverage features based on request fingerprints, behavioral signals, and global statistics and trends that we see across our network.

Each iteration of the model focuses on certain areas of improvement. This process starts with a rigorous R&D phase to identify the emerging patterns of bot attacks by reviewing feedback from Continue reading

How the UEFA Euro 2024 football games are impacting local Internet traffic

Football (“soccer” in the US) is considered the most popular sport in the world, with around 3.5 billion fans spread across the world. European football is central to its popularity. The UEFA Euro 2024 (the European Football Championship) started on June 14 and will run until July 14, 2024. But how much do these games impact Internet traffic in countries where national teams are playing? That’s what we aim to explore in this blog post. We found that, on average, traffic dropped 6% during games in European countries with national teams playing in the tournament.

Cloudflare has a global presence with data centers in over 320 cities, which helps provide a global view of what’s happening on the Internet. This is helpful for security, privacy, efficiency, and speed purposes, but also for observing Internet disruptions and traffic trends.

In the past, we’ve seen how Internet traffic and HTTP requests are impacted by events such as total solar eclipses, the Super Bowl, and elections. 2024 is the year of elections, and we’ve been sharing our observations in blog posts and our new 2024 Election Insights report on Cloudflare Radar.

However, football games are different from elections. Related trends Continue reading

Exam-ining recent Internet shutdowns in Syria, Iraq, and Algeria

The practice of cheating on exams (or at least attempting to) is presumably as old as the concept of exams itself, especially when the results of the exam can have significant consequences for one’s academic future or career. As access to the Internet became more ubiquitous with the growth of mobile connectivity, and communication easier with an assortment of social media and messaging apps, a new avenue for cheating on exams emerged, potentially facilitating the sharing of test materials or answers. Over the last decade, some governments have reacted to this perceived risk by taking aggressive action to prevent cheating, ranging from targeted DNS-based blocking/filtering to multi-hour nationwide shutdowns across multi-week exam periods.

Syria and Iraq are well-known practitioners of the latter approach, and we have covered past exam-related Internet shutdowns in Syria (2021, 2022, 2023) and Iraq (2022, 2023) here on the Cloudflare blog. It is now mid-June 2024, and exams in both countries took place over the last several weeks, and with those exams, regular nationwide Internet shutdowns. In addition, Baccalaureate exams also took place in Algeria, and we have written about related Internet disruptions there in the past ( Continue reading

Patrick Finn: why I joined Cloudflare as VP Sales for the Americas

I’m delighted to be joining Cloudflare as Vice President of Sales in the US, Canada, and Latin America.

I’ve had the privilege of leading sales for some of the world’s most iconic tech companies, including IBM and Cisco. During my career I’ve led international teams numbering in the thousands and driving revenue in the billions of dollars while serving some of the world's largest enterprise customers. I’ve seen first-hand the evolution of technology and what it can achieve for businesses, from robotics, automation, and data analytics, to cloud computing, cybersecurity, and AI.

I firmly believe Cloudflare is well on its way to being one of the next iconic tech companies.

Why Cloudflare

Cloudflare has a unique opportunity to help businesses navigate an enduring wave of technological change. There are few companies in the world that operate in the three most exciting fields of innovation that will continue to shape our world in the coming years: cloud computing, AI, and cybersecurity. Cloudflare is one of those companies. When I was approached for this role, I spoke to a wide range of connections across the financial sector, private companies, and government. The feedback was unanimous that Cloudflare is poised on the edge Continue reading

Introducing Stream Generated Captions, powered by Workers AI

With one click, customers can now generate video captions effortlessly using Stream’s newest feature: AI-generated captions for on-demand videos and recordings of live streams. As part of Cloudflare’s mission to help build a better Internet, this feature is available to all Stream customers at no additional cost.

This solution is designed for simplicity, eliminating the need for third-party transcription services and complex workflows. For videos lacking accessibility features like captions, manual transcription can be time-consuming and impractical, especially for large video libraries. Traditionally, it has involved specialized services, sometimes even dedicated teams, to transcribe audio and deliver the text along with video, so it can be displayed during playback. As captions become more widely expected for a variety of reasons, including ethical obligation, legal compliance, and changing audience preferences, we wanted to relieve this burden.

With Stream’s integrated solution, the caption generation process is seamlessly integrated into your existing video management workflow, saving time and resources. Regardless of when you uploaded a video, you can easily add automatic captions to enhance accessibility. Captions can now be generated within the Cloudflare Dashboard or via an API request, all within the familiar and unified Stream platform.

This feature is designed with Continue reading

Celebrating 10 years of Project Galileo

One of the great benefits of the Internet has been its ability to empower activists and journalists in repressive societies to organize, communicate, and simply find each other. Ten years ago today, Cloudflare launched Project Galileo, a program which today provides security services, at no cost, to more than 2,600 independent journalists and nonprofit organizations around the world supporting human rights, democracy, and local communities. You can read last week’s blog and Radar dashboard that provide a snapshot of what public interest organizations experience on a daily basis when it comes to keeping their websites online.

Origins of Project Galileo

We’ve admitted before that Project Galileo was born out of a mistake, but it's worth reminding ourselves. In 2014, when Cloudflare was a much smaller company with a smaller network, our free service did not include DDoS mitigation. If a free customer came under a withering attack, we would stop proxying traffic to protect our own network. It just made sense.

One evening, a site that was using us came under a significant DDoS attack, exhausting Cloudflare resources. After pulling up the site and seeing Cyrillic writing and pictures of men with guns, the young engineer on call followed the Continue reading

Heeding the call to support Australia’s most at-risk entities

When Australia unveiled its 2023-2030 Australian Cyber Security Strategy in November 2023, we enthusiastically announced Cloudflare’s support, especially for the call for the private sector to work together to protect Australia’s smaller, at-risk entities. Today, we are extremely pleased to announce that Cloudflare and the Critical Infrastructure - Information Sharing and Analysis Centre (CI-ISAC), a member-driven organization helping to defend Australia's critical infrastructure from cyber attacks, are teaming up to protect some of Australia’s most at-risk organizations – General Practitioner (GP) clinics.

Cloudflare helps a broad range of organizations -– from multinational organizations, to entrepreneurs and small businesses, to nonprofits, humanitarian groups, and governments across the globe — to secure their employees, applications and networks. We support a multitude of organizations in Australia, including some of Australia’s largest banks and digital natives, with our world-leading security products and services.

When it comes to protecting entities at high risk of cyber attack who might not have significant resources, we at Cloudflare believe we have a lot to offer. Our mission is to help build a better Internet. A key part of that mission is democratizing cybersecurity – making a range of tools readily available for all, including small and medium enterprises Continue reading

Exploring the 2024 EU Election: Internet traffic trends and cybersecurity insights

The 2024 European Parliament election took place June 6-9, 2024, with hundreds of millions of Europeans from the 27 countries of the European Union electing 720 members of the European Parliament. This was the first election after Brexit and without the UK, and it had an impact on the Internet. In this post, we will review some of the Internet traffic trends observed during the election days, as well as providing insight into cyberattack activity.

Elections matter, and as we have mentioned before (1, 2), 2024 is considered “the year of elections”, with voters going to the polls in at least 60 countries, as well as the 27 EU member states. That’s why we’re publishing a regularly updated election report on Cloudflare Radar. We’ve already included our analysis of recent elections in South Africa, India, Iceland, and Mexico, and provided a policy view on the EU elections.

The European Parliament election coincided with several other national or local elections in European Union member states, leading to direct consequences. For example, in Belgium, the prime minister announced his resignation, resulting in a drop in Internet traffic during the speech followed by a clear increase after the speech was Continue reading

Internet insights on 2024 elections in the Netherlands, South Africa, Iceland, India, and Mexico

2024 is being called by the media “the” year of elections. More voters than ever are going to the polls in at least 60 countries for national elections, plus the 27 member states of the European Union. This includes eight of the world’s 10 most populous nations, impacting around half of the world’s population.

To track and analyze these significant global events, we’ve created the 2024 Election Insights report on Cloudflare Radar, which will be regularly updated as elections take place.

Our data shows that during elections, there is often a decrease in Internet traffic during polling hours, followed by an increase as results are announced. This trend has been observed before in countries like France and Brazil, and more recently in Mexico and India — where elections were held between April 19 and June 1 in seven phases. Some regions, like Comoros and Pakistan, have experienced government-directed Internet disruptions around election time.

Below, you’ll find a review of the trends we saw in elections in South Africa (May 29), to Mexico (June 2), India (April 19 - June 1) and Iceland (June 1). This includes election-related shifts in traffic, as well at attacks. For example, during the Continue reading

Dutch political websites hit by cyber attacks as EU voting starts

The 2024 European Parliament election started in the Netherlands today, June 6, 2024, and will continue through June 9 in the other 26 countries that are part of the European Union. Cloudflare observed DDoS attacks targeting multiple election or politically-related Internet properties on election day in the Netherlands, as well as the preceding day.

These elections are highly anticipated. It’s also the first European election without the UK after Brexit.

According to news reports, several websites of political parties in the Netherlands suffered cyberattacks on Thursday, with a pro-Russian hacker group called HackNeT claiming responsibility.

On June 5 and 6, 2024, Cloudflare systems automatically detected and mitigated DDoS attacks that targeted at least three politically-related Dutch websites. Significant attack activity targeted two of them, and is described below.

A DDoS attack, short for Distributed Denial of Service attack, is a type of cyber attack that aims to take down or disrupt Internet services such as websites or mobile apps and make them unavailable for users. DDoS attacks are usually done by flooding the victim's server with more traffic than it can handle. To learn more about DDoS attacks and other types of attacks, visit our Learning Center.

Attackers Continue reading

Protecting vulnerable communities for 10 years with Project Galileo

In celebration of Project Galileo's 10th anniversary, we want to give you a snapshot of what organizations that work in the public interest experience on an everyday basis when it comes to keeping their websites online. With this, we are publishing the Project Galileo 10th anniversary Radar dashboard with the aim of providing valuable insights to researchers, civil society members, and targeted organizations, equipping them with effective strategies for protecting both internal information and their public online presence.

Key Statistics

- Under Project Galileo, we protect more than 2,600 Internet properties in 111 countries.

- Between May 1, 2023, and March 31, 2024, Cloudflare blocked 31.93 billion cyber threats against organizations protected under Project Galileo. This is an average of nearly 95.89 million cyber attacks per day over the 11-month period.

- When looking at the different organizational categories, journalism and media organizations were the most attacked, accounting for 34% of all attacks targeting the Internet properties protected under the Project in the last year, followed by human rights organizations at 17%.

- On October 11, 2023, Cloudflare detected one of the largest attacks we’ve seen against an organization under Project Galileo, targeting a prominent independent journalism website covering stories in Russia Continue reading

European Union elections 2024: securing democratic processes in light of new threats

Between June 6-9 2024, hundreds of millions of European Union (EU) citizens will be voting to elect their members of the European Parliament (MEPs). The European elections, held every five years, are one of the biggest democratic exercises in the world. Voters in each of the 27 EU countries will elect a different number of MEPs according to population size and based on a proportional system, and the 720 newly elected MEPs will take their seats in July. All EU member states have different election processes, institutions, and methods, and the security risks are significant, both in terms of cyber attacks but also with regard to influencing voters through disinformation. This makes the task of securing the European elections a particularly complex one, which requires collaboration between many different institutions and stakeholders, including the private sector. Cloudflare is well positioned to support governments and political campaigns in managing large-scale cyber attacks. We have also helped election entities around the world by providing tools and expertise to protect them from attack. Moreover, through the Athenian Project, Cloudflare works with state and local governments in the United States, as well as governments around the world through international nonprofit partners, to provide Continue reading

Adopting OpenTelemetry for our logging pipeline

Cloudflare’s logging pipeline is one of the largest data pipelines that Cloudflare has, serving millions of log events per second globally, from every server we run. Recently, we undertook a project to migrate the underlying systems of our logging pipeline from syslog-ng to OpenTelemetry Collector and in this post we want to share how we managed to swap out such a significant piece of our infrastructure, why we did it, what went well, what went wrong, and how we plan to improve the pipeline even more going forward.

Background

A full breakdown of our existing infrastructure can be found in our previous post An overview of Cloudflare's logging pipeline, but to quickly summarize here:

- We run a syslog-ng daemon on every server, reading from the local systemd-journald journal, and a set of named pipes.

- We forward those logs to a set of centralized “log-x receivers”, in one of our core data centers.

- We have a dead letter queue destination in another core data center, which receives messages that could not be sent to the primary receiver, and which get mirrored across to the primary receivers when possible.

The goal of this project was to replace those syslog-ng instances as Continue reading

Extending local traffic management load balancing to Layer 4 with Spectrum

In 2023, Cloudflare introduced a new load balancing solution, supporting Local Traffic Management (LTM). This gives organizations a way to balance HTTP(S) traffic between private or internal servers within a region-specific data center. Today, we are thrilled to be able to extend those same LTM capabilities to non-HTTP(S) traffic. This new feature is enabled by the integration of Cloudflare Spectrum, Cloudflare Tunnels, and Cloudflare load balancers and is available to enterprise customers. Our customers can now use Cloudflare load balancers for all TCP and UDP traffic destined for private IP addresses, eliminating the need for expensive on-premise load balancers.

A quick primer

In this blog post, we will be referring to load balancers at either layer 4 or layer 7. This is, of course, referring to layers of the OSI model but more specifically, the ingress path that is being used to reach the load balancer. Layer 7, also known as the Application Layer, is where the HTTP(S) protocol exists. Cloudflare is well known for our layer 7 capabilities, which are built around speeding up and protecting websites which run over HTTP(S). When we refer to layer 7 load balancers, we are referring to HTTP(S)-based services. Our layer Continue reading

Disrupting FlyingYeti’s campaign targeting Ukraine

Cloudforce One is publishing the results of our investigation and real-time effort to detect, deny, degrade, disrupt, and delay threat activity by the Russia-aligned threat actor FlyingYeti during their latest phishing campaign targeting Ukraine. At the onset of Russia’s invasion of Ukraine on February 24, 2022, Ukraine introduced a moratorium on evictions and termination of utility services for unpaid debt. The moratorium ended in January 2024, resulting in significant debt liability and increased financial stress for Ukrainian citizens. The FlyingYeti campaign capitalized on anxiety over the potential loss of access to housing and utilities by enticing targets to open malicious files via debt-themed lures. If opened, the files would result in infection with the PowerShell malware known as COOKBOX, allowing FlyingYeti to support follow-on objectives, such as installation of additional payloads and control over the victim’s system.

Since April 26, 2024, Cloudforce One has taken measures to prevent FlyingYeti from launching their phishing campaign – a campaign involving the use of Cloudflare Workers and GitHub, as well as exploitation of the WinRAR vulnerability CVE-2023-38831. Our countermeasures included internal actions, such as detections and code takedowns, as well as external collaboration with third parties to remove the actor’s cloud-hosted malware. Continue reading

Cloudflare acquires BastionZero to extend Zero Trust access to IT infrastructure

We’re excited to announce that BastionZero, a Zero Trust infrastructure access platform, has joined Cloudflare. This acquisition extends our Zero Trust Network Access (ZTNA) flows with native access management for infrastructure like servers, Kubernetes clusters, and databases.

Security teams often prioritize application and Internet access because these are the primary vectors through which users interact with corporate resources and external threats infiltrate networks. Applications are typically the most visible and accessible part of an organization's digital footprint, making them frequent targets for cyberattacks. Securing application access through methods like Single Sign-On (SSO) and Multi-Factor Authentication (MFA) can yield immediate and tangible improvements in user security.

However, infrastructure access is equally critical and many teams still rely on castle-and-moat style network controls and local resource permissions to protect infrastructure like servers, databases, Kubernetes clusters, and more. This is difficult and fraught with risk because the security controls are fragmented across hundreds or thousands of targets. Bad actors are increasingly focusing on targeting infrastructure resources as a way to take down huge swaths of applications at once or steal sensitive data. We are excited to extend Cloudflare One’s Zero Trust Network Access to natively protect infrastructure with user- and device-based policies Continue reading

Expanding Regional Services configuration flexibility for customers

This post is also available in Français, Español, Nederlands.

When we launched Regional Services in June 2020, the concept of data locality and data sovereignty were very much rooted in European regulations. Fast-forward to today, and the pressure to localize data persists: Several countries have laws requiring data localization in some form, public-sector contracting requirements in many countries require their vendors to restrict the location of data processing, and some customers are reacting to geopolitical developments by seeking to exclude data processing from certain jurisdictions.

That’s why today we're happy to announce expanded capabilities that will allow you to configure Regional Services for an increased set of defined regions to help you meet your specific requirements for being able to control where your traffic is handled. These new regions are available for early access starting in late May 2024, and we plan to have them generally available in June 2024.

It has always been our goal to provide you with the toolbox of solutions you need to not only address your security and performance concerns, but also to help you meet your legal obligations. And when it comes to data localization, we know that some of you need Continue reading

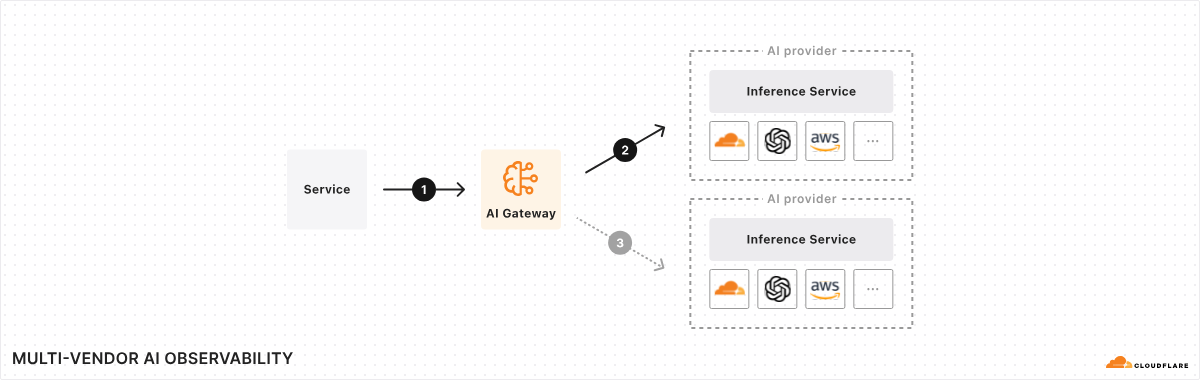

AI Gateway is generally available: a unified interface for managing and scaling your generative AI workloads

During Developer Week in April 2024, we announced General Availability of Workers AI, and today, we are excited to announce that AI Gateway is Generally Available as well. Since its launch to beta in September 2023 during Birthday Week, we’ve proxied over 500 million requests and are now prepared for you to use it in production.

AI Gateway is an AI ops platform that offers a unified interface for managing and scaling your generative AI workloads. At its core, it acts as a proxy between your service and your inference provider(s), regardless of where your model runs. With a single line of code, you can unlock a set of powerful features focused on performance, security, reliability, and observability – think of it as your control plane for your AI ops. And this is just the beginning – we have a roadmap full of exciting features planned for the near future, making AI Gateway the tool for any organization looking to get more out of their AI workloads.

Why add a proxy and why Cloudflare?

The AI space moves fast, and it seems like every day there is a new model, provider, or framework. Given this high rate of Continue reading

New Consent and Bot Management features for Cloudflare Zaraz

Managing consent online can be challenging. After you’ve figured out the necessary regulations, you usually need to configure some Consent Management Platform (CMP) to load all third-party tools and scripts on your website in a way that respects these demands. Cloudflare Zaraz manages the loading of all of these third-party tools, so it was only natural that in April 2023 we announced the Cloudflare Zaraz CMP: the simplest way to manage consent in a way that seamlessly integrates with your third-party tools manager.

As more and more third-party tool vendors are required to handle consent properly, our CMP has evolved to integrate with these new technologies and standardization efforts. Today, we’re happy to announce that the Cloudflare Zaraz CMP is now compatible with the Interactive Advertising Bureau Transparency and Consent Framework (IAB TCF) requirements, and fully supports Google’s Consent Mode v2 signals. Separately, we’ve taken efforts to improve the way Cloudflare Zaraz handles traffic coming from online bots.

IAB TCF Compatibility

Earlier this year, Google announced that websites that would like to use AdSense and other advertising solutions in the European Economic Area (EEA), the UK, and Switzerland, will be required to use a CMP that is approved by Continue reading