A return to US net neutrality rules?

For nearly 15 years, the Federal Communications Commission (FCC) in the United States has gone back and forth on open Internet rules – promulgating and then repealing, with some court battles thrown in for good measure. Last week was the deadline for Internet stakeholders to submit comments to the FCC about the agency’s recently proposed net neutrality rules for Internet Service Providers (ISPs), which would introduce considerable protections for consumers and codify ISPs’ responsibilities.

For anyone who has worked to help build a better Internet, as Cloudflare has for the past 13 years, the reemergence of net neutrality is déjà vu all over again. Cloudflare has long supported the open Internet principles that are behind net neutrality, and we still do today. That’s why we filed comments with the FCC expressing our support for these principles, and concurring with many of the technical definitions and proposals that would largely reinstitute the net neutrality rules that were previously in place.

But let’s back up and talk about net neutrality. Net neutrality is the principle that ISPs should not discriminate against the traffic that flows through them. Specifically, when these rules were adopted by the FCC in 2015, there …

Don’t Let the Cyber Grinch Ruin your Winter Break: Project Cybersafe Schools protects small school districts in the US

As the last school bell rings before winter break, school districts should keep in mind that the break can leave them particularly vulnerable to cyberattacks: reduced staff presence and extended downtime create an environment conducive to security lapses. Criminal actors make their move when organizations are most vulnerable: on weekends and holiday breaks. With fewer personnel on-site, routine monitoring and response to potential threats may be delayed, giving cybercriminals a window of opportunity. Schools store sensitive student and staff data, including personally identifiable information, financial records, and confidential academic information, so the consequences of a successful cyberattack can be severe. It is imperative that educational institutions implement robust cybersecurity measures to safeguard their digital infrastructure.

If you are a small public school district in the United States, Project Cybersafe Schools is here to help. Don’t let the Cyber Grinch ruin your winter break.

The impact of Project Cybersafe Schools thus far

In August of this year, as part of the White House Back to School Safely: K-12 Cybersecurity Summit, Cloudflare announced Project Cybersafe Schools to support eligible K-12 public school districts with a package of Zero Trust cybersecurity solutions …

Australia’s cybersecurity strategy is here and Cloudflare is all in

We are thrilled about Australia’s strategic direction to build a world-leading cyber nation by 2030. As a world-leading cybersecurity company whose mission is to help build a better Internet, we think we can help.

Cloudflare empowers organizations to make their employees, applications and networks faster and more secure everywhere, while reducing complexity and cost. Cloudflare is trusted by millions of organizations – from the largest brands to entrepreneurs and small businesses to nonprofits, humanitarian groups, and governments across the globe.

Cloudflare first established a footprint in Australia in 2012 when we launched our 15th data center in Sydney (our network has since grown to span over 310 cities in 120 countries/regions). We support a multitude of customers in Australia and New Zealand, including some of Australia’s largest banks and digital natives, with our world-leading security products and services. For example, Australia’s leading tech company Canva, whose service is used by over 35 million people worldwide each month, uses a broad array of Cloudflare’s products for use cases ranging from remote application access to serverless development, and even bot management to help protect its network from attacks.

In support of the Australian Cyber Security Strategy …

Integrating Turnstile with the Cloudflare WAF to challenge fetch requests

Two months ago, we made Cloudflare Turnstile generally available — giving website owners everywhere an easy way to fend off bots, without ever issuing a CAPTCHA. Turnstile allows any website owner to embed a frustration-free Cloudflare challenge on their website with a simple code snippet, making it easy to help ensure that only human traffic makes it through. In addition to protecting a website’s frontend, Turnstile also empowers web administrators to harden browser-initiated (AJAX) API calls running under the hood. These APIs are commonly used by dynamic single-page web apps, like those created with React, Angular, and Vue.js.

Today, we’re excited to announce that we have integrated Turnstile with the Cloudflare Web Application Firewall (WAF). This means that web admins can add the Turnstile code snippet to their websites, and then configure the Cloudflare WAF to manage these requests. This is completely customizable using WAF Rules; for instance, you can allow a user authenticated by Turnstile to interact with all of an application’s API endpoints without facing any further challenges, or you can configure certain sensitive endpoints, like Login, to always issue a challenge.
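The rule logic described above can be modeled in a few lines. This is a minimal Python sketch of the decision, not Cloudflare’s WAF rule syntax or engine: the `Request` type, its `turnstile_cleared` flag (standing in for “this visitor already passed a Turnstile challenge”), and the `/api/` and `/api/login` paths are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical view of an incoming request, as seen by the rule logic."""
    path: str
    turnstile_cleared: bool  # assumed flag: visitor already passed Turnstile


# Hypothetical sensitive endpoints that should be challenged on every request.
ALWAYS_CHALLENGE = {"/api/login"}


def decide(req: Request) -> str:
    """Return "challenge" or "allow" for a request."""
    if req.path in ALWAYS_CHALLENGE:
        return "challenge"  # e.g. Login: always issue a challenge
    if req.path.startswith("/api/"):
        # Other API endpoints: no further challenges once Turnstile has passed.
        return "allow" if req.turnstile_cleared else "challenge"
    return "allow"  # non-API pages are out of scope for this sketch


print(decide(Request("/api/items", turnstile_cleared=True)))  # allow
print(decide(Request("/api/login", turnstile_cleared=True)))  # challenge
```

Checking the always-challenge list first is what keeps a sensitive endpoint protected even for visitors who have already been cleared.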

Challenging fetch requests in the Cloudflare WAF

Millions of websites protected by Cloudflare’s WAF leverage our …

Using DNS to estimate the worldwide state of IPv6 adoption

In order for one device to talk to other devices on the Internet using the aptly named Internet Protocol (IP), it must first be assigned a unique numerical address. What this address looks like depends on the version of IP being used: IPv4 or IPv6.

IPv4 was first deployed in 1983. It’s the IP version that gave birth to the modern Internet and still remains dominant today. IPv6 can be traced back to as early as 1998, but only in the last decade did it start to gain significant traction — rising from less than 1% to somewhere between 30 and 40%, depending on who’s reporting and what and how they’re measuring.

With the growth in connected devices far exceeding the number of available IPv4 addresses, and the cost of those addresses rising, the much larger address space provided by IPv6 should have made it the dominant protocol by now. However, as we’ll see, this is not the case.
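To put “much larger address space” into numbers, Python’s standard `ipaddress` module can count every address in the full IPv4 and IPv6 ranges. This is an illustrative sketch only and not part of the DNS-based measurement this post describes.

```python
import ipaddress

# The entire IPv4 and IPv6 address spaces, written as CIDR prefixes.
ipv4_space = ipaddress.ip_network("0.0.0.0/0")
ipv6_space = ipaddress.ip_network("::/0")

# IPv4 addresses are 32 bits long; IPv6 addresses are 128 bits long.
print(ipv4_space.num_addresses)            # 4294967296
print(ipv6_space.num_addresses == 2**128)  # True

# How many times larger the IPv6 space is than the whole IPv4 space.
ratio = ipv6_space.num_addresses // ipv4_space.num_addresses
print(ratio == 2**96)  # True
```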

Cloudflare has been a strong advocate of IPv6 for many years and, through Cloudflare Radar, we’ve been closely following IPv6 adoption across the Internet. At three years old, Radar is still a relatively recent platform. To go further back in time, we …

From Google to Generative AI: Ranking top Internet services in 2023

Ask nearly any Internet user, and they are bound to have their own personal list of favorite sites, applications, and Internet services for news, messaging, video, AI chatbots, music, and more. Aggregate the answers across many users in many different countries, and you end up with a sense of the most popular websites and services in the world. In a nutshell, that’s what this blog post is about: how humans interacted with the online world in 2023, as observed by Cloudflare.

Building on similar reports we’ve done over the past two years, we have compiled a ranking of the top Internet properties of 2023. In addition to our overall ranking, we chose 9 categories to focus on. One of these is a new addition in 2023: Generative AI. Here are the 9 categories we’ll be digging into:

1. Generative AI

2. Social Media

3. E-commerce

4. Video Streaming

5. News

6. Messaging

7. Metaverse & Gaming

8. Financial Services

9. Cryptocurrency Services

Our method for calculating the results is the same as in 2022: we analyze anonymized DNS query data from our 1.1.1.1 public DNS resolver, used by millions of …
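As a rough illustration of how a ranking can be derived from DNS queries, the Python sketch below maps each queried hostname to a service by suffix and counts queries per service. The domain-to-service table, the sample queries, and the use of raw query counts as the ranking metric are all assumptions for illustration; the excerpt above does not spell out how Cloudflare groups domains or weights the data.

```python
from collections import Counter
from typing import List, Optional, Tuple

# Hypothetical mapping from registered domains to the service they belong to.
SERVICE_DOMAINS = {
    "google.com": "Google",
    "googlevideo.com": "Google",
    "facebook.com": "Facebook",
    "fbcdn.net": "Facebook",
    "tiktok.com": "TikTok",
}


def service_for(qname: str) -> Optional[str]:
    """Map a queried hostname to a service by checking its suffixes."""
    labels = qname.lower().rstrip(".").split(".")
    # Try www.google.com, then google.com, then com.
    for i in range(len(labels)):
        suffix = ".".join(labels[i:])
        if suffix in SERVICE_DOMAINS:
            return SERVICE_DOMAINS[suffix]
    return None  # not one of the tracked services


def rank_services(query_names: List[str]) -> List[Tuple[str, int]]:
    """Count queries per service, most-queried first."""
    counts = Counter(s for s in map(service_for, query_names) if s is not None)
    return counts.most_common()


sample = ["www.google.com", "rr1.googlevideo.com", "www.tiktok.com",
          "static.xx.fbcdn.net", "www.google.com", "example.org"]
print(rank_services(sample)[0])  # ('Google', 3)
```

Grouping several domains under one service (for example, a video CDN hostname under its parent service) is the step that turns raw query counts into a per-service ranking.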

Cloudflare 2023 Year in Review

The 2023 Cloudflare Radar Year in Review is our fourth annual review of Internet trends and patterns observed throughout the year at both a global and country/region level across a variety of metrics. Below, we present a summary of key findings, and then explore them in more detail in subsequent sections.

Key findings

Traffic Insights & Trends

- Global Internet traffic grew 25%, in line with peak 2022 growth. Major holidays, severe weather, and intentional shutdowns clearly impacted Internet traffic.

- Google was again the most popular general Internet service, with 2021 leader TikTok falling to fourth place. OpenAI was the most popular service in the emerging Generative AI category, and Binance remained the most popular Cryptocurrency service.

- Globally, over two-thirds of mobile device traffic was from Android devices. Android had a >90% share of mobile device traffic in over 25 countries/regions; peak iOS mobile device traffic share was 66%.

- Global traffic from Starlink nearly tripled in 2023. After initiating service in Brazil in mid-2022, Starlink traffic from that country was up over 17x in 2023.

- Google Analytics, React, and HubSpot were among the most popular technologies found on top websites.

- Globally, nearly half of web requests …

ML Ops Platform at Cloudflare

We've been relying on ML and AI for our core services like Web Application Firewall (WAF) since the early days of Cloudflare. Through this journey, we've learned many lessons about running AI deployments at scale and about the tooling and processes they require. We recently launched Workers AI to help abstract a lot of that away for inference, giving developers an easy way to leverage powerful models with just a few lines of code. In this post, we’re going to explore some of the lessons we’ve learned on the other side of the ML equation: training.

Cloudflare has extensive experience training models and using them to improve our products. A constantly evolving ML model drives the WAF attack score that helps protect our customers from malicious payloads. Another evolving model powers our bot management product to catch and prevent bot attacks on our customers. Our customer support is augmented by data science. We build machine learning models to identify threats across our global network. To top it all off, Cloudflare is delivering machine learning at unprecedented scale across our network.

Each of these products, along with many others, has elevated ML models (including experimentation, training, and deployment) to a crucial position within …

How we used OpenBMC to support AI inference on GPUs around the world

Cloudflare recently announced Workers AI, giving developers the ability to run serverless GPU-powered AI inference on Cloudflare’s global network. One key area of focus in enabling this across our network was updating our Baseboard Management Controllers (BMCs). The BMC is an embedded microprocessor that sits on most servers and is responsible for remote power management, sensors, serial console, and other features such as virtual media.

To efficiently manage our BMCs, Cloudflare leverages OpenBMC, an open-source firmware stack from the Open Compute Project (OCP). For Cloudflare, OpenBMC provides transparent, auditable firmware. Below, we describe some of what Cloudflare has been able to do so far with OpenBMC on our GPU-equipped servers.

Ouch! That’s HOT!

For this project, we needed a way to adjust our BMC firmware to accommodate new GPUs while maintaining operational efficiency with respect to thermals and power consumption. OpenBMC was a powerful tool in meeting this objective.

OpenBMC allows us to change the hardware of our existing servers without depending on our Original Design Manufacturers (ODMs), which lets our product teams get started on products quickly. To physically support this effort, our servers need to be able to supply enough power and keep …

Latest copyright decision in Germany rejects blocking through global DNS resolvers

This post is also available in Deutsch.

A recent decision from the Higher Regional Court of Cologne in Germany marked important progress for Cloudflare and the Internet in pushing back against misguided attempts to address online copyright infringement through the DNS system. In early November, the Court in Universal v. Cloudflare issued its decision rejecting a request to require public DNS resolvers like Cloudflare’s 1.1.1.1 to block websites based on allegations of online copyright infringement. That’s a position we’ve long advocated, because blocking through public resolvers is ineffective and disproportionate, and it does not allow for much-needed transparency as to what is blocked and why.

What is a DNS resolver?

To see why the Universal decision matters, it’s important to understand what a public DNS resolver is, and why it’s not a good place to try to moderate content on the Internet.

The DNS system translates website names to IP addresses, so that Internet requests can be routed to the correct location. At a high level, the DNS system consists of two parts. On one side sit a series of nameservers (Root, TLD, and Authoritative) that together store information mapping domain names to IP addresses; on the other …
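The nameserver hierarchy described above can be illustrated with a toy model: each nameserver either answers a name or refers the asker to a server closer to the answer, and a resolver follows those referrals from the root, through the TLD, to the authoritative nameserver, caching what it learns. This Python sketch works on in-memory dictionaries rather than the DNS wire protocol; all zones, server names, and the address (taken from the 192.0.2.0/24 documentation range) are made up.

```python
# Toy zone data. Server names, zones, and the address are made up for
# illustration; 192.0.2.0/24 is a range reserved for documentation.
ROOT = {"referrals": {"com.": "tld-com"}}
SERVERS = {
    "tld-com": {"referrals": {"example.com.": "ns-example"}},
    "ns-example": {"answers": {"www.example.com.": "192.0.2.10"}},
}


def in_zone(name: str, zone: str) -> bool:
    """True if `name` is `zone` itself or a subdomain of it (label-aligned)."""
    return name == zone or name.endswith("." + zone)


class ToyResolver:
    """A caching resolver that walks root -> TLD -> authoritative nameservers."""

    def __init__(self) -> None:
        self.cache = {}            # fully qualified name -> IP address
        self.upstream_queries = 0  # how many nameservers have been contacted

    def resolve(self, name: str) -> str:
        name = name if name.endswith(".") else name + "."
        if name in self.cache:
            return self.cache[name]  # answered from cache, no nameserver contacted
        server = ROOT
        while True:
            self.upstream_queries += 1
            answers = server.get("answers", {})
            if name in answers:
                self.cache[name] = answers[name]
                return answers[name]
            # Follow the most specific referral that covers the queried name.
            referrals = server.get("referrals", {})
            zones = [z for z in referrals if in_zone(name, z)]
            if not zones:
                raise LookupError(f"no referral for {name}")
            server = SERVERS[referrals[max(zones, key=len)]]


resolver = ToyResolver()
print(resolver.resolve("www.example.com"))  # 192.0.2.10
```

The first lookup contacts three nameservers (root, TLD, authoritative); repeating it is served from the resolver’s cache without contacting any of them.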

Cloudflare Gen 12 Server: Bigger, Better, Cooler in a 2U1N form factor

Two years ago, Cloudflare undertook a significant upgrade to our compute server hardware as we deployed our cutting-edge 11th Generation server fleet, based on AMD EPYC Milan x86 processors. It's nearly time for another refresh to our x86 infrastructure, with deployment planned for 2024. This involves upgrading not only the processor itself but many of the server's other components. The new server must be able to accommodate the GPUs that drive inference on Workers AI, and leverage the latest advances in memory, storage, and security. Every aspect of the server is rigorously evaluated — including the server form factor itself.

One crucial variable we always consider is temperature. The latest generations of x86 processors have yielded significant leaps forward in performance, with the tradeoff of higher power draw and heat output. In this post we will explore this trend, and how it informed our decision to adopt a new physical footprint for our next-generation fleet of servers.

In preparation for the upcoming refresh, we conducted an extensive survey of the x86 CPU landscape. AMD recently introduced its latest offerings: Genoa, Bergamo, and Genoa-X, featuring the power of its innovative Zen 4 architecture. At the same time, Intel unveiled Sapphire Rapids as …

Cyber Week: Analyzing Internet traffic and e-commerce trends

Throughout the year, special events lead to changes in Internet traffic. We observed this with Thanksgiving in the US last week, where traffic dipped, and during periods like Black Friday (November 24, 2023) and Cyber Monday (November 27, 2023), where traffic spiked.

But how significant are these Cyber Week days on the Internet? Is it a global phenomenon? Does e-commerce interest peak on Black Friday or Cyber Monday, and are attacks increasing during this time? These questions are important to retailers and stakeholders around the world. At Cloudflare, we manage substantial traffic for our customers, which gives us a unique vantage point from which to analyze traffic and attack patterns across large swaths of the Internet.

As we'll explore next, we observed varying trends. From a global perspective, there was a clear Internet traffic winner: Cyber Monday was the highest overall traffic day of 2023 (as it was for 2022), followed by Black Friday, and then Monday, November 21 from the same week. But zooming in, this pattern didn’t hold in some countries.

For this analysis, we examined anonymized samples of HTTP requests crossing our network, as well as DNS queries. Cloudflare's global data shows that peak request traffic occurred on …

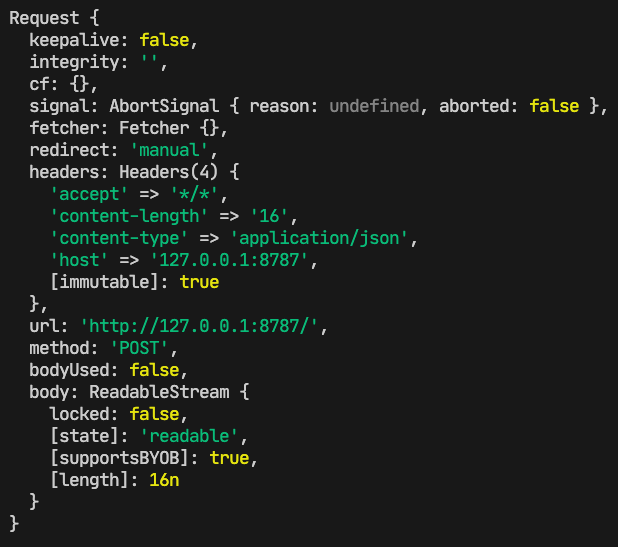

Better debugging for Cloudflare Workers, now with breakpoints

As developers, we’ve all experienced times when our code doesn’t work like we expect it to. Whatever the root cause is, being able to quickly dive in, diagnose the problem, and ship a fix is invaluable.

If you’re developing with Cloudflare Workers, we provide many tools to help you debug your applications, from your local environment all the way into production. This additional insight helps save you time and resources and provides visibility into how your application actually works — which can help you optimize and refactor code even before it goes live.

In this post, we’ll explore some of the tools we currently offer, and do a deep dive into one specific area — breakpoint debugging — looking at not only how to use it, but how we recently implemented it in our runtime, workerd.

Available Debugging Tools

Logs

console.log. It might be the simplest debugging tool a developer has, but don’t underestimate it. Built into the Cloudflare runtime is Node-like logging, which provides detailed, color-coded logs. Locally, you can view these logs in a terminal window.

Outside local development, once your Worker is deployed, console.log statements are visible via …

Steve Bray: Why I joined Cloudflare

I am excited to announce that I joined Cloudflare last month as Head of Australia & New Zealand, to continue to build on Cloudflare’s success in the region through extending our valuable relationships with our customers and partners.

My journey to Cloudflare

I’ve been fortunate over my 25-year career in the IT industry to have worked for some of the most recognised and innovative organisations such as Oracle, Salesforce, and Zendesk. It’s been exciting to be inside these businesses as they’ve taken new ideas about how software can be developed and delivered to solve real-world problems for any organisation’s customers. I’ve learned a lot by being a part of the industry, but probably more importantly, I’ve learned the most from the smart, experienced, diverse groups of talented people that I’ve had the pleasure to work with in ANZ and across Asia Pacific. I have always been interested in the problems that organisations are trying to solve through technology — for example, responding to strategic challenges, reducing cost, improving revenue, reducing risk — and joining Cloudflare is an opportunity to stay focussed on addressing those critical issues with our customers and partners using Cloudflare’s innovative solutions.

So why Cloudflare?

Cloudflare’s …

Cloudflare named a leader in Forrester Edge Development Platforms Wave, Q4 2023

This post is also available in Español, Deutsch, Português, 日本語, 한국어.

Forrester has recognized Cloudflare as a leader in The Forrester Wave™: Edge Development Platforms, Q4 2023 with the top score in the current offering category.

According to the report by Principal Analyst Devin Dickerson, “Cloudflare’s edge development platform provides the building blocks enterprises need to create full stack distributed applications and enables developers to take advantage of a globally distributed network of compute, storage and programmable security without being experts on CAP theorem.”

Over one million developers are building applications using the Developer Platform products including Workers, Pages, R2, KV, Queues, Durable Objects, D1, Stream, Images, and more. Developers can easily deploy highly distributed, full-stack applications using Cloudflare’s full suite of compute, storage, and developer services.

Workers make Cloudflare’s network programmable

“A key strength of the platform is the interoperability with Cloudflare’s programmable global CDN combined with a deployment model that leverages intelligent workload placement.”

– The Forrester Wave™: Edge Development Platforms, Q4 2023

Workers run across Cloudflare’s global network, provide APIs to read from and write directly to the local cache, …

Do hackers eat turkey? And other Thanksgiving Internet trends

Thanksgiving is a tradition celebrated by millions of Americans across six time zones and 50 states, usually involving travel and bringing families together. This year, it was celebrated yesterday, on November 23, 2023. With the Internet so deeply enmeshed in our daily lives, anything that changes how so many people behave is going to also have an impact on online traffic. But how big an impact, exactly?

At a high level: a 10% daily decrease in Internet traffic in the US (compared to the previous week). That happens to be the exact same percentage decrease we observed in 2022. So, Thanksgiving in the US, at least in the realm of Internet traffic, seems consistent with last year.
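The 10% figure is a week-over-week comparison. A minimal Python sketch of that arithmetic, using made-up request totals rather than Radar data, looks like this; the same percentage change can also be computed hour by hour to find when the dip is deepest. The function names are hypothetical.

```python
from typing import Sequence


def percent_change(current: float, baseline: float) -> float:
    """Percentage change of `current` relative to `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100


def largest_drop_hour(current: Sequence[float], baseline: Sequence[float]) -> int:
    """Hour index at which `current` falls furthest below `baseline`."""
    changes = [percent_change(c, b) for c, b in zip(current, baseline)]
    return min(range(len(changes)), key=lambda h: changes[h])


# Hypothetical daily request totals (arbitrary units), not real measurements.
previous_thursday = 1_000
thanksgiving_day = 900
print(f"{percent_change(thanksgiving_day, previous_thursday):.1f}%")  # -10.0%
```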

Let’s dig into more details about how people deal with cooking (or online ordering!) and whether family gatherings are less online, according to our Cloudflare Radar data. We’ll also touch on whether hackers stop for turkey, too.

The Thanksgiving hour: around 15:00 (local time)

While we can see a 10% overall daily drop in US traffic due to Thanksgiving, the drop is even more noticeable when examining traffic on an hour-by-hour basis. Internet activity began to decrease significantly after 12:00 EST, persisting …

Workers AI Update: Stable Diffusion, Code Llama + Workers AI in 100 cities

Thanksgiving might be a US holiday (and one of our favorites — we have many things to be thankful for!). Many people get excited about the food or deals, but for me as a developer, it’s also always been a nice quiet holiday to hack around and play with new tech. So in that spirit, we're thrilled to announce that Stable Diffusion and Code Llama are now available as part of Workers AI, running in over 100 cities across Cloudflare’s global network.

As many AI fans are aware, Stable Diffusion is the groundbreaking image-generation model that can conjure images based on text input. Code Llama is a powerful language model optimized for generating programming code.
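For readers who want to try the image model over HTTP, here is a hedged Python sketch that builds, but does not send, a request to the Workers AI REST API using the `/accounts/{account-id}/ai/run/{model}` URL pattern that also appears in the Mistral `curl` example. The model identifier `@cf/stabilityai/stable-diffusion-xl-base-1.0`, the single `prompt` field, and the assumption that the model responds with raw image bytes should all be checked against the Workers AI developer docs; the account ID and token are placeholders.

```python
import json
import urllib.request

API_BASE = "https://api.cloudflare.com/client/v4/accounts/{account_id}/ai/run/{model}"

# Assumed model identifier for Stable Diffusion XL on Workers AI; verify in the docs.
SDXL_MODEL = "@cf/stabilityai/stable-diffusion-xl-base-1.0"


def build_run_request(account_id: str, api_token: str, model: str,
                      prompt: str) -> urllib.request.Request:
    """Build a POST request asking Workers AI to run `model` on `prompt`."""
    url = API_BASE.format(account_id=account_id, model=model)
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
    )


req = build_run_request("YOUR_ACCOUNT_ID", "YOUR_API_TOKEN", SDXL_MODEL,
                        "a cyberpunk lizard")
# Sending it requires real credentials, for example:
# with urllib.request.urlopen(req) as resp:
#     png_bytes = resp.read()  # assumed: the image model returns raw image bytes
```

Keeping request construction separate from sending makes it easy to inspect the exact URL and payload before spending any inference time.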

For more of the fun details, read on, or head over to the developer docs to get started!

Generating images with Stable Diffusion

Stability AI launched Stable Diffusion XL 1.0 (SDXL) this past summer. You can read more about it here, but we’ll briefly mention some really cool aspects.

First off, “Distinct images can be prompted without having any particular ‘feel’ imparted by the model, ensuring absolute freedom of …

Workers AI Update: Hello Mistral 7B

This post is also available in Deutsch.

Today we’re excited to announce that we’ve added Mistral-7B-v0.1-instruct to Workers AI. Mistral 7B is a 7.3 billion parameter language model with a number of unique advantages. With some help from the founders of Mistral AI, we’ll look at some of the highlights of the Mistral 7B model, and use the opportunity to dive deeper into “attention” and its variations such as multi-query attention and grouped-query attention.
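Grouped-query attention (GQA) sits between standard multi-head attention and multi-query attention (MQA): several query heads share one key/value head. The pure-Python sketch below shows only the core idea, with no batching, masking, or learned projections, and with illustrative head counts and vectors rather than Mistral 7B’s actual configuration: query head `h` reads from key/value head `h // group_size`. With as many KV heads as query heads it is ordinary multi-head attention; with a single KV head it is MQA.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]  # one row per sequence position


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention for a single head."""
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v))
                    for c in range(len(v[0]))])
    return out


def grouped_query_attention(qs: List[Matrix], ks: List[Matrix],
                            vs: List[Matrix]) -> List[Matrix]:
    """qs has one matrix per query head; ks and vs have one per KV head."""
    n_q, n_kv = len(qs), len(ks)
    if n_q % n_kv != 0:
        raise ValueError("number of query heads must be a multiple of KV heads")
    group_size = n_q // n_kv
    # Each query head h reuses the key/value head of its group.
    return [attend(qs[h], ks[h // group_size], vs[h // group_size])
            for h in range(n_q)]
```

Only `len(ks)` key/value heads have to be stored in the KV cache, which is where GQA and MQA save memory compared with multi-head attention.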

Mistral 7B tl;dr:

Mistral 7B is a 7.3 billion parameter model that puts up impressive numbers on benchmarks. The model:

- Outperforms Llama 2 13B on all benchmarks,

- Outperforms Llama 1 34B on many benchmarks,

- Approaches CodeLlama 7B performance on code, while remaining good at English tasks, and

- The chat fine-tuned version we’ve deployed outperforms Llama 2 13B chat in the benchmarks provided by Mistral.

Here’s an example of using streaming with the REST API:

curl -X POST \
  "https://api.cloudflare.com/client/v4/accounts/{account-id}/ai/run/@cf/mistral/mistral-7b-instruct-v0.1" \
  -H "Authorization: Bearer {api-token}" \
  -H "Content-Type: application/json" \
  -d '{ "prompt": "What is grouped query attention", "stream": true }'

API Response: { response: "Grouped query attention is a technique used in natural language processing (NLP) and machine learning …

2024, the year of elections

2024 is a year of elections, with more than 70 elections scheduled in 40 countries around the world. One of the key pillars of democracy is trust. To that end, ensuring that the Internet is trusted, secure, reliable, and accessible for the public and those working in the election space is critical to any free and fair election.

Cloudflare has considerable experience in gearing up for elections and identifying how our cybersecurity tools can be used to help vulnerable groups in the election space. In December 2022, we expanded our product set to include Zero Trust products to assist these groups against new and emerging threats. Over the last few years, we’ve reported on our work protecting a range of election entities. As we prepare for the 2024 elections, we want to provide insight into the attack trends we’ve seen against these groups to help understand what to expect in the next year.

For this blog post, we identified cyber attack trends for a variety of groups in the elections space based in the United States, as many of our Cloudflare Impact projects provide services to these groups. These include U.S. state and local government websites protected under …

How to execute an object file: Part 4, AArch64 edition

Translating source code written in a high-level programming language into an executable binary typically involves a series of steps, namely compiling and assembling the code into object files, and then linking those object files into the final executable. However, there are certain scenarios where it can be useful to apply an alternate approach that involves executing object files directly, bypassing the linker. For example, we might use it for malware analysis or when part of the code requires an incompatible compiler. We’ll be focusing on the latter scenario: when one of our libraries needed to be compiled differently from the rest of the code. Learning how to execute an object file directly will give you a much better sense of how code is compiled and linked together.

To demonstrate how this is done, we previously published a series of posts on executing an object file:

- How to execute an object file: Part 1

- How to execute an object file: Part 2

- How to execute an object file: Part 3

The initial posts are dedicated to the x86 architecture. Since then, our fleet of working machines has expanded to include a large and growing number of ARM CPUs. This …
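Before loading an object file by hand, it helps to confirm that the file really is a relocatable ELF object built for the CPU you are running on. The Python sketch below parses the 64-bit ELF header with the standard `struct` module and reports whether the object targets x86-64 or AArch64. It is a minimal sketch, not code from this series: it assumes a little-endian ELF64 file and performs no section loading or relocation.

```python
import struct

ELF_MAGIC = b"\x7fELF"
ELFCLASS64 = 2      # e_ident[EI_CLASS] for 64-bit objects
ELFDATA2LSB = 1     # e_ident[EI_DATA] for little-endian objects
ET_REL = 1          # e_type for relocatable (object) files
MACHINES = {62: "x86-64", 183: "AArch64"}  # EM_X86_64, EM_AARCH64

# ELF64 header: e_ident[16], then e_type, e_machine, e_version, e_entry,
# e_phoff, e_shoff, e_flags, e_ehsize, e_phentsize, e_phnum, e_shentsize,
# e_shnum, e_shstrndx (64 bytes in total, no padding with "<").
ELF64_HEADER = struct.Struct("<16sHHIQQQIHHHHHH")


def describe_object(data: bytes) -> str:
    """Return the target architecture of a little-endian ELF64 relocatable object."""
    if len(data) < ELF64_HEADER.size:
        raise ValueError("file too short for an ELF64 header")
    fields = ELF64_HEADER.unpack_from(data)
    e_ident, e_type, e_machine = fields[0], fields[1], fields[2]
    if e_ident[:4] != ELF_MAGIC:
        raise ValueError("not an ELF file")
    if e_ident[4] != ELFCLASS64 or e_ident[5] != ELFDATA2LSB:
        raise ValueError("only little-endian ELF64 is handled in this sketch")
    if e_type != ET_REL:
        raise ValueError("not a relocatable object file (e_type != ET_REL)")
    return MACHINES.get(e_machine, f"unknown machine {e_machine}")
```

For example, reading an object produced by `gcc -c` on an ARM64 Linux machine (say, a hypothetical `add.o`) and passing its bytes to `describe_object` should report `AArch64`.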