Bringing the best live video experience to Cloudflare Stream with AV1

Consumer hardware is pushing the limits of consumers’ bandwidth.

VR headsets support 5760 x 3840 resolution — 22.1 million pixels per frame of video. Nearly all new TVs and smartphones sold today now support 4K — 8.8 million pixels per frame. It’s now normal for most people on a subway to be casually streaming video on their phone, even as they pass through a tunnel. People expect all of this to just work, and get frustrated when it doesn’t.

Consumer Internet bandwidth hasn’t kept up. Even advanced mobile carriers still limit streaming video resolution to prevent network congestion. Many mobile users still have to monitor and limit their mobile data usage. Higher Internet speeds require expensive infrastructure upgrades, and 30% of Americans still say they often have problems simply connecting to the Internet at home.

We talk to developers every day who are pushing up against these limits, trying to deliver the highest quality streaming video without buffering or jitter, challenged by viewers’ expectations and bandwidth. Developers building live video experiences hit these limits the hardest — buffering doesn’t just delay video playback, it can cause the viewer to get out of sync with the live event. Buffering Continue reading

What we served up for the last Birthday Week before we’re a teenager

Almost a teen. With Cloudflare’s 12th birthday last Tuesday, we’re officially into our thirteenth year. And what a birthday we had!

36 announcements ranging from SIM cards to post-quantum encryption via hardware keys and so much more. Here’s a review of everything we announced this week.

Monday

| What | In a sentence… |
|---|---|
| The First Zero Trust SIM | We’re bringing Zero Trust security controls to the humble SIM card, rethinking how mobile device security is done, with the Cloudflare SIM: the world’s first Zero Trust SIM. |
| Securing the Internet of Things | We’ve been defending customers from Internet of Things botnets for years now, and it’s time to turn the tides: we’re bringing the same security behind our Zero Trust platform to IoT. |
| Bringing Zero Trust to mobile network operators | Helping bring the power of Cloudflare’s Zero Trust platform to mobile operators and their subscribers. |

Tuesday

| What | In a sentence… |
|---|---|
| Workers Launchpad | Leading venture capital firms to provide up to $1.25 BILLION to back startups built on Cloudflare Workers. |
| Startup Plan v2.0 | Increasing the scope, eligibility and products we include under our Startup Plan, enabling more developers and startups to build the next big thing on top of Cloudflare. |
| workerd: Continue reading |

Defending against future threats: Cloudflare goes post-quantum

There is an expiration date on the cryptography we use every day. It’s not easy to read, but somewhere between 15 and 40 years from now, a sufficiently powerful quantum computer is expected to be built that will be able to decrypt essentially any encrypted data on the Internet today.

Luckily, there is a solution: post-quantum (PQ) cryptography has been designed to be secure against the threat of quantum computers. Just three months ago, in July 2022, after a six-year worldwide competition, the US National Institute of Standards and Technology (NIST), known for AES and SHA2, announced which post-quantum cryptography they will standardize. NIST plans to publish the final standards in 2024, but we want to help drive early adoption of post-quantum cryptography.

Starting today, as a beta service, all websites and APIs served through Cloudflare support post-quantum hybrid key agreement. This is on by default; no need for an opt-in. This means that if your browser/app supports it, the connection to our network is also secure against any future quantum computer.
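In a hybrid key agreement, a classical key exchange generally runs alongside a post-quantum one, and the session key depends on both results, so an attacker has to break both. The sketch below illustrates only that combining step. It is a minimal illustration under stated assumptions, not Cloudflare's implementation: the two shared secrets are random placeholders rather than real key-exchange outputs, and the function name `combine_hybrid_secrets` and the `info` label are hypothetical. The key derivation follows HKDF (RFC 5869) with HMAC-SHA-256.

```python
import hashlib
import hmac
import os

HASH_LEN = hashlib.sha256().digest_size  # 32 bytes for SHA-256


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract step (RFC 5869) using HMAC-SHA-256."""
    return hmac.new(salt, ikm, hashlib.sha256).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int) -> bytes:
    """HKDF-Expand step (RFC 5869) using HMAC-SHA-256."""
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def combine_hybrid_secrets(classical: bytes, post_quantum: bytes, length: int = 32) -> bytes:
    """Derive one key from both shared secrets (hypothetical helper).

    The secrets are concatenated and passed through a KDF, so the output
    changes if either input changes.
    """
    ikm = classical + post_quantum
    prk = hkdf_extract(b"\x00" * HASH_LEN, ikm)
    return hkdf_expand(prk, b"hybrid key agreement sketch", length)


# Placeholders standing in for the outputs of two real key exchanges.
classical_secret = os.urandom(32)
pq_secret = os.urandom(32)
session_key = combine_hybrid_secrets(classical_secret, pq_secret)
```

Because the derived key depends on both inputs, the connection stays protected as long as at least one of the two underlying exchanges remains unbroken.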

We offer this post-quantum cryptography free of charge: we believe that post-quantum security should be the new baseline for the Internet.

Deploying post-quantum cryptography seems like a Continue reading

Introducing post-quantum Cloudflare Tunnel

Undoubtedly, one of the big themes in IT for the next decade will be the migration to post-quantum cryptography. From tech giants to small businesses: we will all have to make sure our hardware and software is updated so that our data is protected against the arrival of quantum computers. It seems far away, but it’s not a problem for later: any encrypted data captured today (not protected by post-quantum cryptography) can be broken by a sufficiently powerful quantum computer in the future.

Luckily we’re almost there: after a tremendous worldwide effort by the cryptographic community, we know what will be the gold standard of post-quantum cryptography for the next decades. Release date: somewhere in 2024. Hopefully, for most, the transition will be a simple software update then, but it will not be that simple for everyone: not all software is maintained, and it could well be that hardware needs an upgrade as well. Taking a step back, many companies don’t even have a full list of all software running on their network.

For Cloudflare Tunnel customers, this migration will be much simpler: introducing Post-Quantum Cloudflare Tunnel. In this blog post, first we give an overview of how Cloudflare Tunnel Continue reading

Automatic (secure) transmission: taking the pain out of origin connection security

In 2014, Cloudflare set out to encrypt the Internet by introducing Universal SSL. It made getting an SSL/TLS certificate free and easy at a time when doing so was neither free, nor easy. Overnight millions of websites had a secure connection between the user’s browser and Cloudflare.

But getting the connection encrypted from Cloudflare to the customer’s origin server was more complex. Since Cloudflare and all browsers supported SSL/TLS, the connection between the browser and Cloudflare could be instantly secured. But back in 2014 configuring an origin server with an SSL/TLS certificate was complex, expensive, and sometimes not even possible.

And so we relied on users to configure the best security level for their origin server. Later we added a service that detects and recommends the highest level of security for the connection between Cloudflare and the origin server. We also introduced free origin server certificates for customers who didn’t want to get a certificate elsewhere.

Today, we’re going even further. Cloudflare will shortly find the most secure connection possible to our customers’ origin servers and use it, automatically. Doing this correctly, at scale, while not breaking a customer’s service is very complicated. This blog post explains how we are Continue reading

Gateway + CASB: alphabetti spaghetti that spells better SaaS security


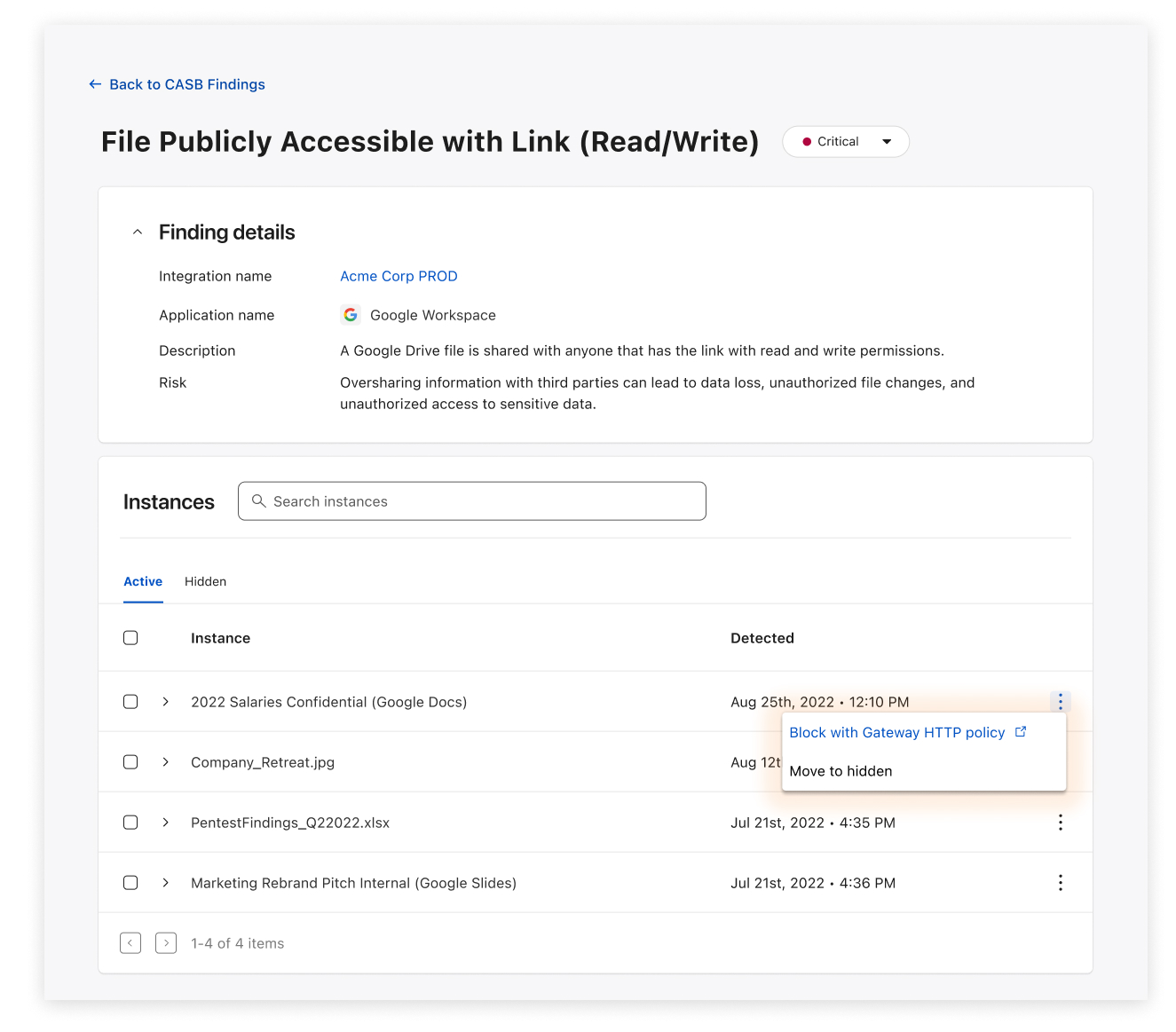

Back in June 2022, we announced an upcoming feature that would allow for Cloudflare Zero Trust users to easily create prefilled HTTP policies in Cloudflare Gateway (Cloudflare’s Secure Web Gateway solution) via issues identified by CASB, a new Cloudflare product that connects, scans, and monitors your SaaS apps - like Google Workspace and Microsoft 365 - for security issues.

With Cloudflare’s 12th Birthday Week nearing its end, we wanted to highlight, in true Cloudflare fashion, this new feature in action.

What is CASB? What is Gateway?

To quickly recap, Cloudflare’s API-driven CASB offers IT and security teams a fast, yet effective way to connect, scan, and monitor their SaaS apps for security issues, like file exposures, misconfigurations, and Shadow IT. In just a few clicks, users can see an exhaustive list of security issues that may be affecting the security of their SaaS apps, including Google Workspace, Microsoft 365, Slack, and GitHub.

Cloudflare Gateway, our Secure Web Gateway (SWG) offering, allows teams to monitor and control the outbound connections originating from endpoint devices. For example, don’t want your employees to access gambling and social media websites on company devices? Just block Continue reading

The status page the Internet needs: Cloudflare Radar Outage Center

Historically, Cloudflare has covered large-scale Internet outages with timely blog posts, such as those published for Iran, Sudan, Facebook, and Syria. While we still explore such outages on the Cloudflare blog, throughout 2022 we have ramped up our monitoring of Internet outages around the world, posting timely information about those outages to @CloudflareRadar on Twitter.

The new Cloudflare Radar Outage Center (CROC), launched today as part of Radar 2.0, is intended to be an archive of this information, organized by location, type, date, etc.

Furthermore, this initial release is also laying the groundwork for the CROC to become a first stop and key resource for civil society organizations, journalists/news media, and impacted parties to get information on, or corroboration of, reported or observed Internet outages.

What information does the CROC contain?

At launch, the CROC includes summary information about observed outage events. This information includes:

- Location: Where was the outage?

- ASN: What autonomous system experienced a disruption in connectivity?

- Type: How broad was the outage? Did connectivity fail nationwide, or at a sub-national level? Did just a single network provider have an outage?

- Scope: If it was a sub-national/regional Continue reading

The home page for Internet insights: Cloudflare Radar 2.0

Cloudflare Radar was launched two years ago to give everyone access to the Internet trends, patterns and insights Cloudflare uses to help improve our service and protect our customers.

Until then, these types of insights were only available internally at Cloudflare. However, true to our mission of helping build a better Internet, we felt everyone should be able to look behind the curtain and see the inner workings of the Internet. It’s hard to improve or understand something when you don’t have clear visibility over how it’s working.

On Cloudflare Radar you can find timely graphs and visualizations on Internet traffic, security and attacks, protocol adoption and usage, and outages that might be affecting the Internet. All of these can be narrowed down by timeframe, country, and Autonomous System (AS). You can also find interactive deep dive reports on important subjects such as DDoS and the Meris Botnet. It’s also possible to search for any domain name to see details such as SSL usage and which countries their visitors are coming from.

Since launch, Cloudflare Radar has been used by NGOs to confirm the Internet disruptions their observers see in the field, by journalists looking for Internet trends related to Continue reading

Lights, Camera, Action! Business and Pro customers get bundled streaming video

Beginning December 1, 2022, if you have a Business or Pro subscription, you will receive a complimentary allocation of Cloudflare Stream. Here’s what this means:

- All Cloudflare customers with a Business or Pro domain will be able to store up to 100 minutes of video content and deliver up to 10,000 minutes of video content each month at no additional cost.

- If you need additional storage or delivery beyond the complimentary allocation, you will be able to upgrade to a paid Stream subscription from the Stream Dashboard.

Cloudflare Stream simplifies storage, encoding and playback of videos. You can use the free allocation of Cloudflare Stream for various use cases, such as background/hero videos, e-commerce product videos, how-to guides and customer testimonials.

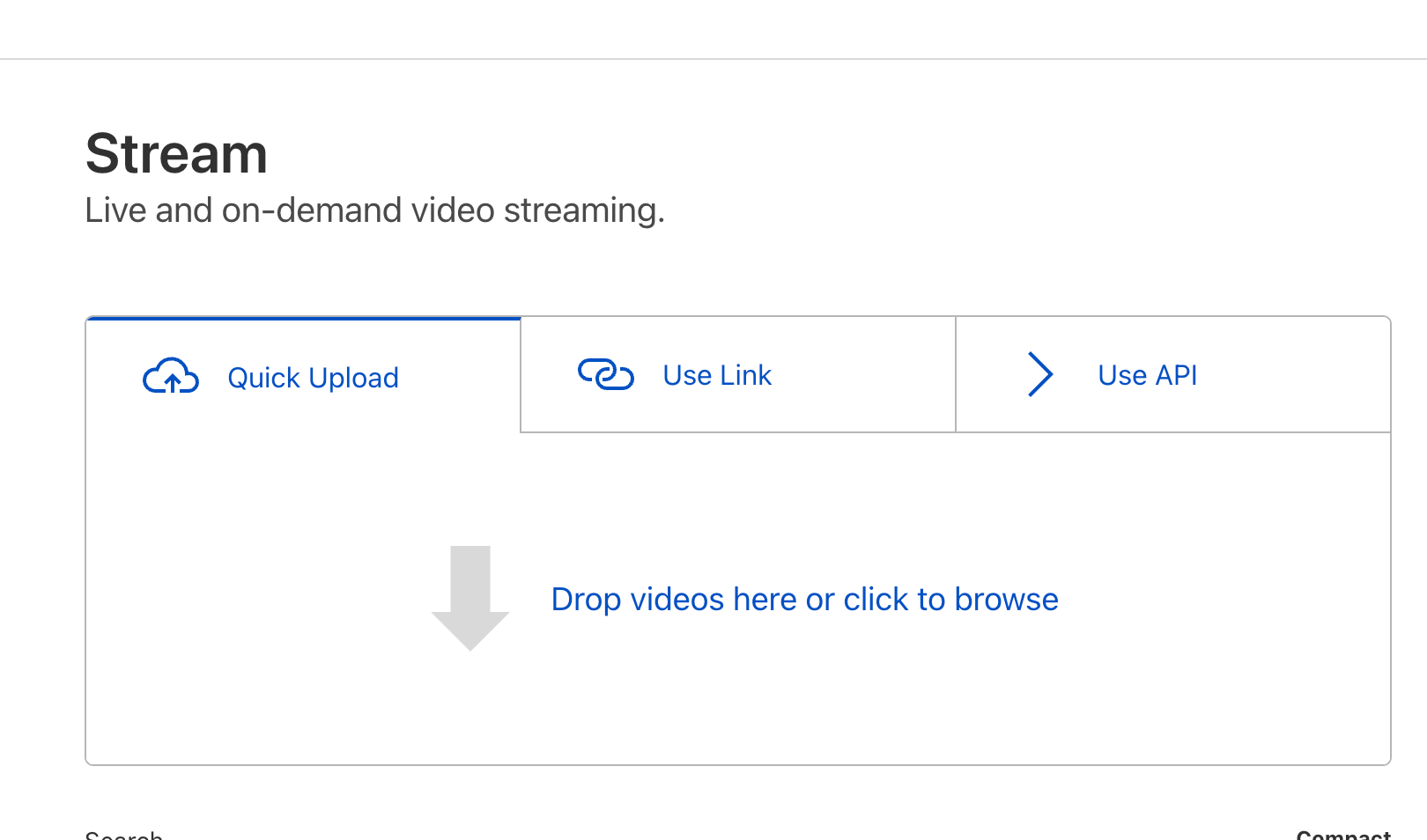

Upload videos with no code

To upload your first video to Stream, simply visit the Stream Dashboard and drag and drop the video file.

Once you upload a video, Stream will store and encode your video. Stream automatically optimizes your video uploads by creating multiple versions of it at different quality levels. This happens behind-the-scenes and requires no extra effort from your side. The Stream Player automatically selects the optimal quality level based on your website visitor’s Internet connection using a technology Continue reading
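The idea of picking a quality level from several encoded versions can be sketched as follows. This is a simplified illustration, not the Stream Player's actual logic: the `Rendition` type, the bitrate ladder values, and the 0.8 safety factor are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    height: int        # vertical resolution in pixels
    bitrate_kbps: int  # approximate bandwidth needed to play smoothly


# Hypothetical ladder of encoded versions of one video.
LADDER = [Rendition(360, 800), Rendition(720, 2500), Rendition(1080, 5000)]


def pick_rendition(ladder, measured_kbps, safety=0.8):
    """Return the highest-bitrate rendition that fits the bandwidth budget.

    Only a fraction of the measured bandwidth is used, leaving headroom
    for fluctuations. Falls back to the lowest rendition if none fits.
    """
    budget = measured_kbps * safety
    affordable = [r for r in ladder if r.bitrate_kbps <= budget]
    if not affordable:
        return min(ladder, key=lambda r: r.bitrate_kbps)
    return max(affordable, key=lambda r: r.bitrate_kbps)
```

A real player would repeat this decision as its bandwidth estimate changes during playback.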

Don’t roll your own high cardinality analytics, use Workers Analytics Engine

Workers Analytics Engine (or for short, Analytics Engine) is a new way for developers to store and analyze time series analytics about anything using Cloudflare Workers, and it’s now in open beta! Analytics Engine is really good at gathering time-series data for really high cardinality and high-volume data sets from Cloudflare Workers. At Cloudflare, we use Analytics Engine to provide insight into how our customers use Cloudflare products.

Log, log, logging!

As an example, Analytics Engine is used to observe the backend that powers Instant Logs. Instant Logs allows Cloudflare customers to stream a live session of the HTTP logs for their domain to the Cloudflare dashboard. The backend for Instant Logs is built on Cloudflare Workers.

Briefly, the Instant Logs backend works by receiving requests from each Cloudflare server that processes a customer's HTTP traffic. These requests contain the HTTP logs for the customer’s HTTP traffic. The Instant Logs backend then forwards these HTTP logs to the customer’s browser via a WebSocket.

In order to ensure that the HTTP logs are being delivered smoothly to a customer's browser, we need to track the request rates across all active Instant Logs sessions. We also need to track the request rates Continue reading

Project A11Y: how we upgraded Cloudflare’s dashboard to adhere to industry accessibility standards

At Cloudflare, we believe the Internet should be accessible to everyone. And today, we’re happy to announce a more inclusive Cloudflare dashboard experience for our users with disabilities. Recent improvements mean our dashboard now adheres to industry accessibility standards, including Web Content Accessibility Guidelines (WCAG) 2.1 AA and Section 508 of the Rehabilitation Act.

Over the past several months, the Cloudflare team and our partners have been hard at work to make the Cloudflare dashboard as accessible as possible for every single one of our current and potential customers. This means incorporating accessibility features that comply with the latest Web Content Accessibility Guidelines (WCAG) and Section 508 of the US’s federal Rehabilitation Act. We are invested in working to meet or exceed these standards; to demonstrate that commitment and share openly about the state of accessibility on the Cloudflare dashboard, we have completed the Voluntary Product Accessibility Template (VPAT), a document used to evaluate our level of conformance today.

Conformance with a technical and legal spec is a bit abstract–but for us, accessibility simply means that as many people as possible can be successful users of the Cloudflare dashboard. This is important because each day, more and more Continue reading

Goodbye, Alexa. Hello, Cloudflare Radar Domain Rankings

The Internet is a living organism. Technology changes, shifts in human behavior, social events, intentional disruptions, and other occurrences change the Internet in unpredictable ways, even to the trained eye.

Cloudflare Radar has long been the place to visit for accessing data and getting unique insights into how people and organizations are using the Internet across the globe, as well as those unpredictable changes to the Internet.

One of the most popular features on Radar has always been the “Most Popular Domains,” with both global and country-level perspectives. Domain usage signals provide a proxy for user behavior over time and are a good representation of what people are doing on the Internet.

Today, we’re going one step further and launching a new dataset called Radar Domain Rankings (Beta). Domain Rankings is based on aggregated 1.1.1.1 resolver data that is anonymized in accordance with our privacy commitments. The dataset aims to identify the most popular domains based on how people use the Internet globally, without tracking individuals’ Internet use.

There are a few reasons why we're doing this now. One is obviously to improve our Radar features with better data and incorporate new learnings. But also, ranking Continue reading

The (hardware) key to making phishing defense seamless with Cloudflare Zero Trust and Yubico


Hardware keys provide the best authentication security and are phish-proof. But customers ask us how to implement them and which security keys they should buy. Today we’re introducing an exclusive program for Cloudflare customers that makes hardware keys more accessible and economical than ever. This program is made possible through a new collaboration with Yubico, the industry’s leading hardware security key vendor, and provides Cloudflare customers with exclusive “Good for the Internet” pricing.

Yubico Security Keys are available today for any Cloudflare customer, and they easily integrate with Cloudflare’s Zero Trust service. That service is open to organizations of any size, from a family protecting a home network to the largest employers on the planet. Any Cloudflare customer can sign in to the Cloudflare dashboard today and order hardware security keys for as little as $10 per key.

In July 2022, Cloudflare prevented a breach by an SMS phishing attack that targeted more than 130 companies, due to the company’s use of Cloudflare Zero Trust paired with hardware security keys. Those keys were YubiKeys and this new collaboration with Yubico, the maker of YubiKeys, removes barriers for Continue reading

How Cloudflare implemented hardware keys with FIDO2 and Zero Trust to prevent phishing

Cloudflare’s security architecture a few years ago was a classic “castle and moat” VPN architecture. Our employees would use our corporate VPN to connect to all the internal applications and servers to do their jobs. We enforced two-factor authentication with time-based one-time passcodes (TOTP), using an authenticator app like Google Authenticator or Authy when logging into the VPN, but only a few internal applications had a second layer of auth. That architecture has a strong-looking exterior, but the security model is weak. We recently detailed the mechanics of a phishing attack we prevented, which walks through how attackers can phish applications that are “secured” with second-factor authentication methods like TOTP. Happily, we had long done away with TOTP and replaced it with hardware security keys and Cloudflare Access. This blog details how we did that.

The solution to the phishing problem is a multi-factor authentication (MFA) protocol called FIDO2/WebAuthn. Today, all Cloudflare employees log in with FIDO2 as their secure multi-factor method and authenticate to our systems using our own Zero Trust products. Our newer architecture is phish-proof and allows us to more easily enforce least-privilege access control.
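One reason WebAuthn resists phishing is that the browser records the origin of the page requesting authentication in the client data that the security key signs, so a login attempted on a look-alike domain fails the server's origin check. The sketch below shows only that one check, under stated assumptions: the function names and example domains are hypothetical, and a real relying party must also verify the authenticator's signature and other fields, typically with a maintained WebAuthn library.

```python
import base64
import json


def b64url_decode(value: str) -> bytes:
    """Decode base64url text that may be missing its '=' padding."""
    padding = "=" * (-len(value) % 4)
    return base64.urlsafe_b64decode(value + padding)


def check_client_data(client_data_json: bytes, expected_challenge: bytes,
                      expected_origin: str) -> bool:
    """Check the type, challenge, and origin fields of WebAuthn client data.

    This covers only part of assertion verification; signature checks are
    deliberately omitted from this sketch.
    """
    data = json.loads(client_data_json)
    return (
        data.get("type") == "webauthn.get"
        and b64url_decode(data.get("challenge", "")) == expected_challenge
        and data.get("origin") == expected_origin
    )


# Hypothetical example values.
challenge = b"server-issued-random-challenge"
encoded = base64.urlsafe_b64encode(challenge).rstrip(b"=").decode()

genuine = json.dumps({"type": "webauthn.get", "challenge": encoded,
                      "origin": "https://dash.example.com"}).encode()
phished = json.dumps({"type": "webauthn.get", "challenge": encoded,
                      "origin": "https://dash.examp1e.com"}).encode()

genuine_ok = check_client_data(genuine, challenge, "https://dash.example.com")
phished_ok = check_client_data(phished, challenge, "https://dash.example.com")
```

A TOTP code, by contrast, carries no information about where it was typed, which is why it can be relayed by a phishing page.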

A little about the terminology of Continue reading

Now all customers can share access to their Cloudflare account with Role Based Access Controls

Cloudflare’s mission is to help build a better Internet. Pair that with our core belief that security is something that should be accessible to everyone, and the outcome is a better and safer Internet for all. Previously, our Free and PAYGO customers didn’t have the flexibility to give someone control of just part of their account; they had to give access to everything.

Starting today, role-based access controls (RBAC) and all of our additional roles will be rolled out to users on every plan! Whether you are a small business or even a single user, you can now give other users access to only the parts of Cloudflare you deem appropriate.

Why should I limit access?

It is good security practice to limit access to what a team member needs to do their job. Restricting access limits the overall threat surface if a given user is compromised, and it limits the areas in which mistakes can be made.
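The least-privilege idea can be sketched as a simple permission lookup. This is a generic illustration, not Cloudflare's role model: the role names and permission strings are hypothetical.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "administrator": {"dns:read", "dns:edit", "billing:read", "billing:edit", "members:edit"},
    "dns_editor": {"dns:read", "dns:edit"},
    "read_only": {"dns:read", "billing:read"},
}


def is_allowed(roles, permission):
    """Return True if any of the member's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


# A compromised read-only member cannot change DNS records or add members.
read_only_can_edit_dns = is_allowed(["read_only"], "dns:edit")
dns_editor_can_edit_dns = is_allowed(["dns_editor"], "dns:edit")
```

The narrower the set of permissions attached to a member, the less an attacker or an honest mistake can change through that member's login.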

If a malicious user was able to gain access to an account, but it only had read access, you’ll find yourself with less of a headache than someone who had administrative access, and could change how your Continue reading

Back in 2017 we gave you Unmetered DDoS Mitigation, here’s a birthday gift: Unmetered Rate Limiting

In 2017, we made unmetered DDoS protection available to all our customers, regardless of their size or whether they were on a Free or paid plan. Today we are doing the same for Rate Limiting, one of the most successful products of the WAF family.

Rate Limiting is a very effective tool to manage targeted volumetric attacks, takeover attempts, bots scraping sensitive data, attempts to overload computationally expensive API endpoints and more. To manage these threats, customers deploy rules that limit the maximum rate of requests from individual visitors on specific paths or portions of their applications.
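A rule of this kind can be sketched as a per-visitor counter over fixed time windows. This is a minimal illustration of the general technique, not how Cloudflare's Rate Limiting is implemented: the class name, parameters, and fixed-window approach are assumptions, and a production limiter would also expire old counters.

```python
from collections import defaultdict


class FixedWindowRateLimiter:
    """Allow at most max_requests per visitor per window on one path prefix."""

    def __init__(self, max_requests, window_seconds, path_prefix):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.path_prefix = path_prefix
        self._counts = defaultdict(int)  # (visitor_ip, window index) -> count

    def allow(self, visitor_ip, path, now):
        """Return True if the request should pass, False if it exceeds the limit."""
        if not path.startswith(self.path_prefix):
            return True  # the rule only covers the configured path prefix
        window = int(now // self.window_seconds)
        key = (visitor_ip, window)
        self._counts[key] += 1
        return self._counts[key] <= self.max_requests


# Hypothetical rule: at most 3 requests per minute per visitor on /login.
limiter = FixedWindowRateLimiter(max_requests=3, window_seconds=60, path_prefix="/login")
decisions = [limiter.allow("203.0.113.7", "/login", now=t) for t in (0, 1, 2, 3)]
```

Here the first three requests in the window pass and the fourth is rejected, while other visitors and other paths are unaffected.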

Until today, customers on a Free, Pro or Business plan were able to purchase Rate Limiting as an add-on with usage-based cost of $5 per million requests. However, we believe that an essential security tool like Rate Limiting should be available to all customers without restrictions.

Since we launched unmetered DDoS, we have mitigated huge attacks, like a 2 Tbps multi-vector attack or the most recent 26 million requests per second attack. We believe that releasing an unmetered version of Rate Limiting will increase the overall security posture of millions of applications protected by Cloudflare.

Today, we are announcing that Free, Pro and Continue reading

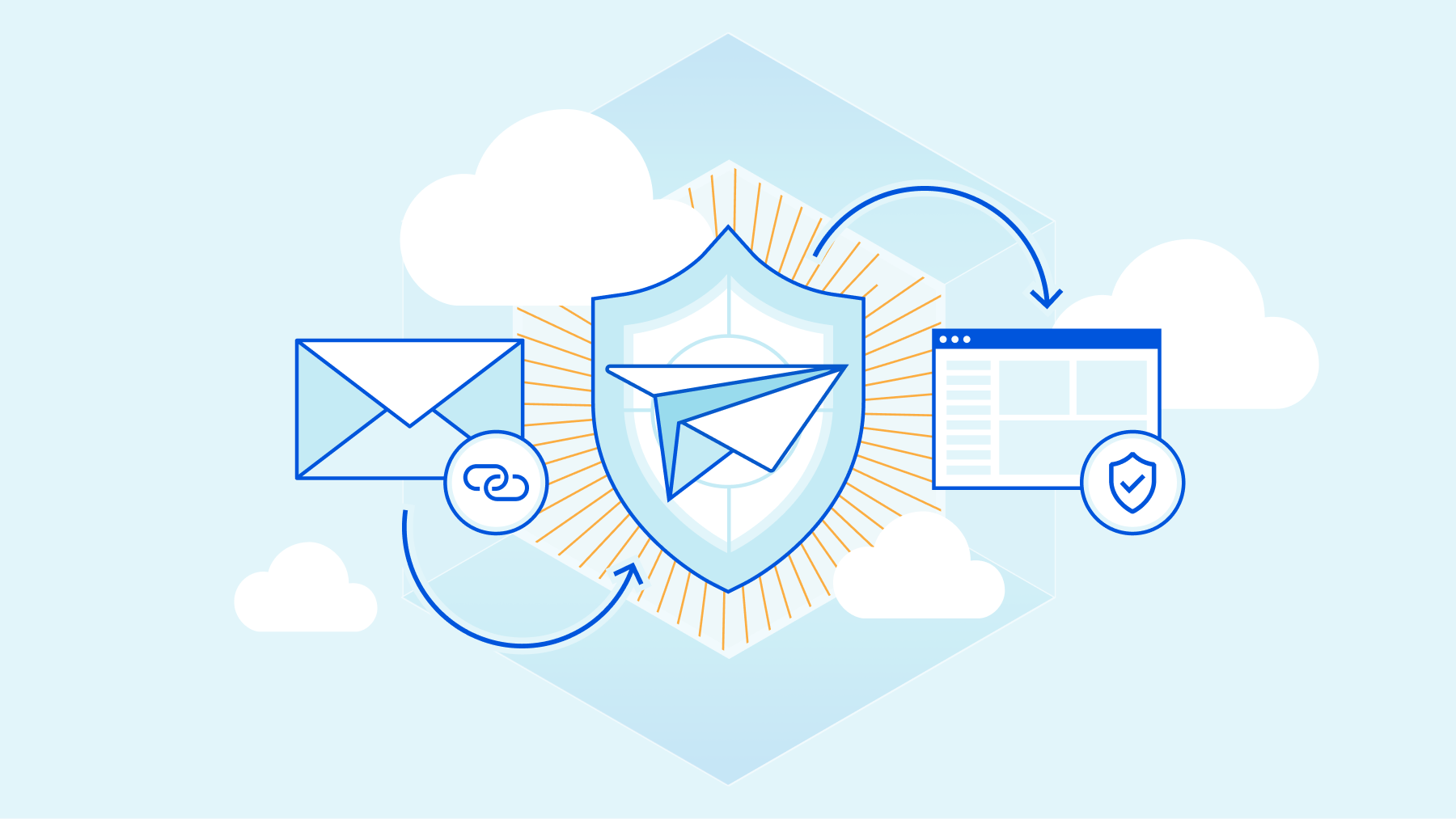

Click Here! (safely): Automagical Browser Isolation for potentially unsafe links in email

We're often told not to click on 'odd' links in email, but what choice do we really have? With the volume of emails and the myriad of SaaS products that companies use, it's inevitable that employees find it almost impossible to distinguish a good link before clicking on it. And that's before attackers go about making links harder to inspect and hiding their URLs behind tempting "Confirm" and "Unsubscribe" buttons.

We need to let end users click on links and have a safety net for when they unwittingly click on something malicious — let’s be honest, it’s bound to happen even if you do it by mistake. That safety net is Cloudflare's Email Link Isolation.

Email Link Isolation

With Email Link Isolation, when a user clicks on a suspicious link (one that email security hasn’t identified as ‘bad’, but can’t confirm is ‘good’ either), they won’t immediately be taken to that website. Instead, the user first sees an interstitial page recommending extra caution with the website they’ll visit, especially if asked for passwords or personal details.

From there, one may choose to not visit the webpage or to proceed and open it in a remote isolated Continue reading

Announcing Turnstile, a user-friendly, privacy-preserving alternative to CAPTCHA

Today, we’re announcing the open beta of Turnstile, an invisible alternative to CAPTCHA. Anyone, anywhere on the Internet, who wants to replace CAPTCHA on their site will be able to call a simple API, without having to be a Cloudflare customer or sending traffic through the Cloudflare global network. Sign up here for free.
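On the server side, using the API typically means posting the token produced by the widget to Turnstile's verification endpoint and checking the result. The sketch below assumes the `siteverify` endpoint URL and the `secret`, `response`, and `remoteip` field names as documented for Turnstile at the time of writing; check the current documentation before relying on them. The helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumed endpoint; confirm against the current Turnstile documentation.
SITEVERIFY_URL = "https://challenges.cloudflare.com/turnstile/v0/siteverify"


def build_siteverify_request(secret_key, token, remote_ip=None):
    """Build the form-encoded POST request that validates a widget token."""
    fields = {"secret": secret_key, "response": token}
    if remote_ip:
        fields["remoteip"] = remote_ip  # optional visitor IP
    body = urllib.parse.urlencode(fields).encode()
    return urllib.request.Request(SITEVERIFY_URL, data=body, method="POST")


def verify_token(secret_key, token, remote_ip=None, timeout=5.0):
    """Send the request and return True only if the API reports success."""
    request = build_siteverify_request(secret_key, token, remote_ip)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        result = json.load(response)
    return bool(result.get("success"))
```

A form handler would call `verify_token` with the site's secret key and the token submitted alongside the form, and reject the submission if it returns `False`.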

There is no point in rehashing the fact that CAPTCHA provides a terrible user experience. It's been discussed in detail before on this blog, and countless times elsewhere. The creator of the CAPTCHA has even publicly lamented that he “unwittingly created a system that was frittering away, in ten-second increments, millions of hours of a most precious resource: human brain cycles.” We hate it, you hate it, everyone hates it. Today we’re giving everyone a better option.

Turnstile is our smart CAPTCHA alternative. It automatically chooses from a rotating suite of non-intrusive browser challenges based on telemetry and client behavior exhibited during a session. We talked in an earlier post about how we’ve used our Managed Challenge system to reduce our use of CAPTCHA by 91%. Now anyone can take advantage of this same technology to stop using CAPTCHA on their own site.

UX Continue reading

Monitor your own network with free network flow analytics from Cloudflare

As a network engineer or manager, answering questions about the traffic flowing across your infrastructure is a key part of your job. Cloudflare built Magic Network Monitoring (previously called Flow Based Monitoring) to give you better visibility into your network and to answer questions like, “What is my network’s peak traffic volume? What are the sources of that traffic? When does my network see that traffic?” Today, Cloudflare is excited to announce early access to a free version of Magic Network Monitoring that will be available to everyone. You can request early access by filling out this form.

Magic Network Monitoring now features a powerful analytics dashboard, self-serve configuration, and a step-by-step onboarding wizard. You’ll have access to a tool that helps you visualize your traffic and filter by packet characteristics including protocols, source IPs, destination IPs, ports, TCP flags, and router IP. Magic Network Monitoring also includes network traffic volume alerts for specific IP addresses or IP prefixes on your network.
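To make the idea of a traffic volume alert on an IP prefix concrete, here is a small sketch that sums bytes per destination prefix and reports the prefixes above a threshold. It is an illustration only, not the product's implementation: the flow record fields, function names, prefixes, and threshold are hypothetical.

```python
import ipaddress
from collections import defaultdict


def bytes_per_prefix(flows, prefixes):
    """Sum the bytes of flows whose destination falls inside each prefix."""
    networks = [ipaddress.ip_network(p) for p in prefixes]
    totals = defaultdict(int)
    for flow in flows:
        dst = ipaddress.ip_address(flow["dst_ip"])
        for net in networks:
            if dst in net:
                totals[str(net)] += flow["bytes"]
    return dict(totals)


def prefixes_over_threshold(flows, prefixes, threshold_bytes):
    """Return the prefixes whose total traffic exceeds the threshold."""
    totals = bytes_per_prefix(flows, prefixes)
    return sorted(p for p, total in totals.items() if total > threshold_bytes)


# Hypothetical flow records for one sampling interval.
flows = [
    {"src_ip": "198.51.100.10", "dst_ip": "203.0.113.5", "protocol": "TCP", "dst_port": 443, "bytes": 700},
    {"src_ip": "198.51.100.11", "dst_ip": "203.0.113.9", "protocol": "UDP", "dst_port": 53, "bytes": 400},
    {"src_ip": "198.51.100.12", "dst_ip": "192.0.2.20", "protocol": "TCP", "dst_port": 80, "bytes": 300},
]
watched = ["203.0.113.0/24", "192.0.2.0/24"]
alerts = prefixes_over_threshold(flows, watched, threshold_bytes=1000)
```

The same records could be filtered by protocol, port, or source IP before summing to answer the kinds of questions listed above.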

Making Network Monitoring easy

Magic Network Monitoring allows customers to collect network analytics without installing a physical device like a network TAP (Test Access Point) or setting up overly complex remote monitoring systems. Our product works Continue reading

Introducing Cloudflare’s free Botnet Threat Feed for service providers

We’re pleased to introduce Cloudflare’s free Botnet Threat Feed for Service Providers. This includes all types of service providers, ranging from hosting providers to ISPs and cloud compute providers.

This feed will give service providers threat intelligence on their own IP addresses that have participated in HTTP DDoS attacks as observed from the Cloudflare network — allowing them to crack down on abusers, take down botnet nodes, reduce their abuse-driven costs, and ultimately reduce the amount and force of DDoS attacks across the Internet. We’re giving away this feed for free as part of our mission to help build a better Internet.

Service providers that operate their own IP space can now sign up to the early access waiting list.

Cloudflare’s unique vantage point on DDoS attacks

Cloudflare provides services to millions of customers ranging from small businesses and individual developers to large enterprises, including 29% of Fortune 1000 companies. Today, about 20% of websites rely directly on Cloudflare’s services. This gives us a unique vantage point on the tremendous number of DDoS attacks that target our customers.

DDoS attacks, by definition, are distributed. They originate from botnets of many sources — in some cases, from hundreds of thousands to millions Continue reading