Kubernetes 101 – External access into the cluster

In our last post, we looked at how Kubernetes handles the bulk of its networking. What we didn’t cover yet was how to access services deployed in the Kubernetes cluster from outside the cluster. Obviously, services that live in pods can be accessed directly, as each pod has its own routable IP address. But what if we want something a little more dynamic? What if we used a replication controller to scale our web front end? We have the Kubernetes service, but what I would call its VIP range (Portal Net) isn’t routable on the network. Let’s walk through the problem and talk about a couple of ways to solve it. I’ll demonstrate the way I chose to solve it, but that doesn’t imply that there aren’t other, better ways as well.

As we’ve seen, Kubernetes has a built-in load balancer which it refers to as a service. A service is a group of pods that all provide the same function. Services are accessible by other pods through an IP address which is allocated out of the cluster’s portal net allocation. Continue reading
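To make the idea of a service a little more concrete, here is a minimal Python sketch of one virtual IP fronting a group of pods. This is only a toy model of the concept, not how Kubernetes actually implements services; the portal net CIDR, the pod IPs, and the names `Service` and `allocate_vip` are all made up for illustration.

```python
import ipaddress
from itertools import cycle

# Hypothetical portal net range, chosen only for this example.
PORTAL_NET = ipaddress.ip_network("10.100.0.0/24")


class Service:
    """A toy service: one virtual IP in front of a group of pod IPs."""

    def __init__(self, name, vip, pod_ips):
        self.name = name
        self.vip = vip
        self._backends = cycle(pod_ips)  # simple round-robin over the pods

    def pick_backend(self):
        """Return the pod IP that would receive the next connection."""
        return next(self._backends)


def allocate_vip(network, in_use):
    """Return the first host address in the portal net that is not taken."""
    for addr in network.hosts():
        if addr not in in_use:
            return addr
    raise RuntimeError("portal net exhausted")


# Hypothetical pod IPs, one per host subnet.
vip = allocate_vip(PORTAL_NET, in_use=set())
web = Service("web-frontend", vip, ["10.10.10.2", "10.10.20.2", "10.10.30.2"])
print(web.vip, "->", [web.pick_backend() for _ in range(4)])
```

The point of the sketch is that clients only ever need to know the service IP. Pods can come and go behind it without the client noticing, but that IP only means something inside the cluster.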

Kubernetes 101 – Networking

One of the reasons that I’m so interested in docker and its associated technologies is because of the new networking paradigm it brings along with it. Kubernetes has a unique (and pretty awesome) way of dealing with these networking challenges but it can be hard to understand at first glance. My goal in this post is to walk you through deploying a couple of Kubernetes constructs and analyze what Kubernetes is doing at the network layer to make it happen. That being said, let’s start with the basics of deploying a pod. We’ll be using the lab we created in the first post and some of the config file examples we created in the second post.

Note: I should point out here again that this lab is built with bare metal hardware. The network model in this type of lab is likely slightly different than what you’d see with a cloud provider. However, the mechanics behind what Kubernetes is doing from a network perspective should be identical.

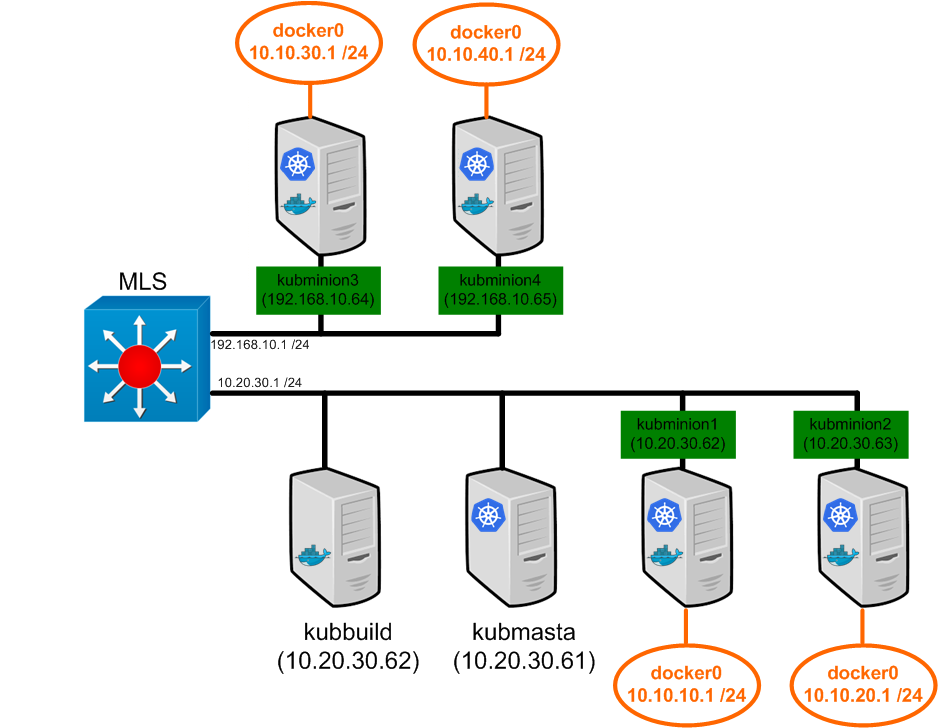

So just to level set, here is what our lab looks like…

We touched on the topic of pod IP addressing before, but let’s provide Continue reading

Kubernetes 101 – The constructs

In our last post, we got our base lab up and running. In this post, I’d like to walk us through the four main constructs that Kubernetes uses. Kubernetes defines these constructs through configuration files which can be either YAML or JSON. Let’s walk through each construct so we can define it, show possible configurations, and lastly an example of how it works on our lab cluster.

Pods

Pods are the basic deployment unit in Kubernetes. A pod consists of one or more containers. Recall that Kubernetes is a container cluster management solution. It concerns itself with workload placement, not individual container placement. Kubernetes defines a pod as a group of ‘closely related containers’. Some people would go as far as saying a pod is a single application. I’m hesitant about that definition since it seems too broad. I think what it really boils down to is grouping containers together that make sense. From a network point of view, a pod has a single IP address. Multiple containers that run in a pod all share that common network name space. This also means that containers Continue reading
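As a rough illustration of what a pod definition can look like, the Python sketch below builds one as a plain dictionary and prints it as JSON. Treat the field layout as an assumption: it follows the `v1` Pod schema, which may differ from the API version in use when this series was written. The pod name, the label, and the second image tag are hypothetical.

```python
import json


def pod_manifest(name, containers, labels=None):
    """Build a pod definition; containers is a list of (name, image, port)."""
    return {
        "apiVersion": "v1",  # assumption: older clusters used different versions
        "kind": "Pod",
        "metadata": {"name": name, "labels": labels or {}},
        "spec": {
            "containers": [
                {"name": cname, "image": image, "ports": [{"containerPort": port}]}
                for cname, image, port in containers
            ]
        },
    }


# Two containers in one pod share a single IP, so they listen on different ports.
manifest = pod_manifest(
    "web-pod",
    [
        ("web80", "jonlangemak/docker:web_container_80", 80),
        ("web8080", "jonlangemak/docker:web_container_8080", 8080),  # assumed tag
    ],
    labels={"app": "web"},
)
print(json.dumps(manifest, indent=2))
```

Because both containers sit in the same network name space, giving them the same port would cause a conflict, which is why the sketch uses 80 and 8080.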

Kubernetes 101 – The build

In this series of posts we’re going to tackle deploying a Kubernetes cluster. Kubernetes is the open source container cluster manager that Google released some time ago. In short, it’s a way to treat a large number of hosts as a single compute instance that you can deploy containers against. While the system itself is pretty straightforward to use, the install and initial configuration can be a little bit daunting if you’ve never done it before. The other reason I’m writing this is because I had a hard time finding all of the pieces to build a bare metal Kubernetes cluster. Most of the other blogs you’ll read use some mix of an overlay (Weave or Flannel) so I wanted to document a build that used bare metal hosts along with non-overlay networking.

In this first post we’ll deal with getting things running. This includes downloading the actual code from github, building it, deploying it to your machines, and configuring the services. In the following posts we’ll actually start deploying pods (we’ll talk about what those are later on), discuss the deployment model, and dig into how Kubernetes handles container networking. That Continue reading

Docker Networking 101 – Mapped Container Mode

In this post I want to cover what I’m considering the final docker provided network mode. We haven’t covered the ‘none’ option but that will come up in future posts when we discuss more advanced deployment options. That being said, let’s get right into it.

Mapped container network mode is also referred to as ‘container in container’ mode. Essentially, all you’re doing is placing a new container’s network interface inside an existing container’s network stack. This leads to some interesting options in regards to how containers can consume network services.

In this blog I’ll be using two docker images…

web_container_80 – Runs CentOS image with Apache configured to listen on port 80

web_container_8080 – Runs CentOS image with Apache configured to listen on port 8080

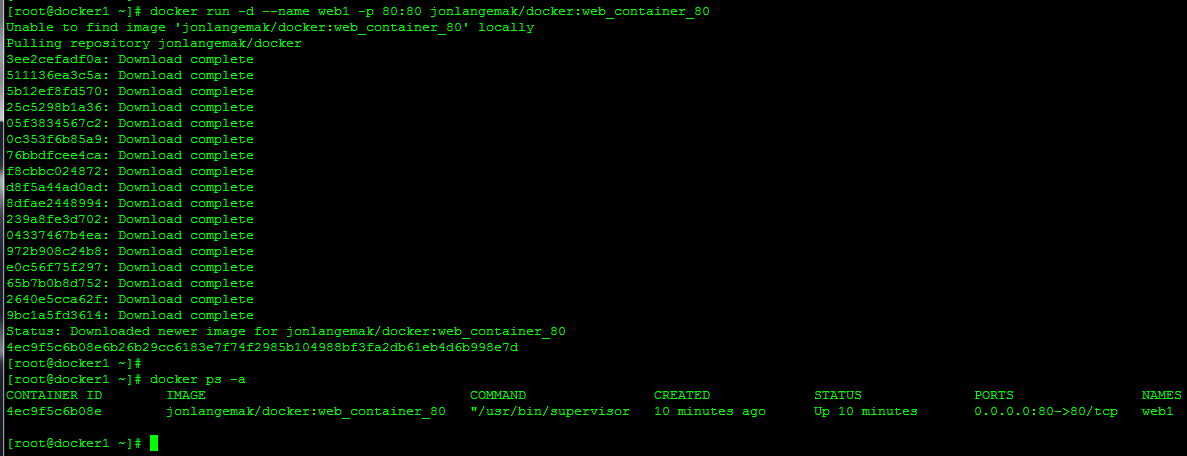

These containers aren’t much different from what we had before, save the fact that I made the index pages a little more noticeable. So let’s download web_container_80 and run it with port 80 on the docker host mapped to port 80 on the container…

docker run -d --name web1 -p 80:80 jonlangemak/docker:web_container_80

Once it’s downloaded let’s take a look and make sure it’s running…

Here we can see that it’s Continue reading

Docker Networking 101 – Host mode

In our last post we covered what docker does with container networking in a default configuration. In this post, I’d like to start covering the remaining non-default network configuration modes. There are really 4 docker ‘provided’ network modes in which you can run containers…

Bridge mode – This is the default, we saw how this worked in the last post with the containers being attached to the docker0 bridge.

Host mode – The docker documentation claims that this mode does ‘not containerize the containers networking!’. That being said, what this really does is just put the container in the host’s network stack. That is, all of the network interfaces defined on the host will be accessible to the container. This one is sort of interesting and has some caveats but we’ll talk about those in greater detail below.

Mapped Container mode – This mode essentially maps a new container into an existing container’s network stack. This means that while other resources (processes, filesystem, etc) will be kept separate, the network resources such as port mappings and IP addresses of the first container will be shared by the second container.

None – This one Continue reading
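The four modes above map onto values of docker’s `--net` option on `docker run` (newer docker releases also spell it `--network`). Here is a small Python sketch that only builds the argument list for each mode without running anything. The helper names `net_flag` and `docker_run_args` and the container name `web2` are hypothetical.

```python
def net_flag(mode, target=None):
    """Return the --net option for one of the four docker-provided modes."""
    if mode in ("bridge", "host", "none"):
        return f"--net={mode}"
    if mode == "container":
        if not target:
            raise ValueError("mapped container mode needs an existing container")
        return f"--net=container:{target}"
    raise ValueError(f"unknown network mode: {mode}")


def docker_run_args(image, name, mode="bridge", target=None):
    """Build (but do not execute) a docker run command line."""
    return ["docker", "run", "-d", "--name", name, net_flag(mode, target), image]


# Map a second container into the network stack of a container named web1.
print(" ".join(docker_run_args(
    "jonlangemak/docker:web_container_8080",  # assumed image tag
    "web2", mode="container", target="web1")))
```

Passing the resulting list to `subprocess.run` would actually start the container, which is left out here so the sketch has no side effects.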

Docker Networking 101 – The defaults

We’ve talked about docker in a few of my more recent posts but we haven’t really tackled how docker does networking. We know that docker can expose container services through port mapping, but that brings some interesting challenges along with it.

As with anything related to networking, our first challenge is to understand the basics. More specifically, we need to understand what our connectivity options are for the devices we want to connect to the network (docker containers). So the goal of this post is going to be to review docker networking defaults. Once we know what our host connectivity options are, we can move quickly into advanced container networking.

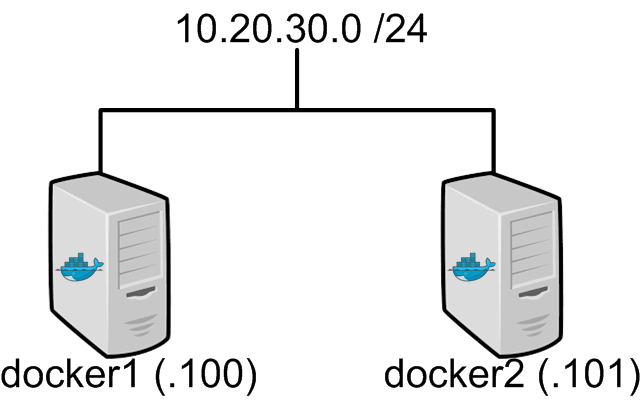

So let’s start with the basics. In this post, I’m going to be working with two docker hosts, docker1 and docker2. They sit on the network like this…

So nothing too complicated here. Two basic hosts with a very basic network configuration. So let’s assume that you’ve installed docker and are running with a default configuration. If you need instructions for the install see this. At this point, all I’ve done is configured a static IP on each host, configured a Continue reading

My 2015 goals

I’ve always sort of set goals for myself, but I never really write any of them down. This year, after talking to a friend about it, I decided to write down some actual goals for 2015. What really struck me about the conversation was a single sentence he said. I believe the exact words he used were “Write them down and you’ll be amazed at how motivated you can be”. Since it certainly sounded like he was speaking from experience, here’s my list. Some are more subjective which will make them harder to ‘check off’ than others. Some are related to my work/career, some are personal, and some are just sort of for fun.

Run a marathon – Some of you know I made a serious attempt at this 2 years ago. It started with others offering tips and training schedules, continued with me disregarding the training plan, and ended with me doing it wrong and messing up my knee. So this year, I’m going to make a serious attempt at following a training schedule and try and get this done. I’ll aim for the Twin Cities marathon which happens Continue reading

CoreOS – Using fleet to deploy an application

At this point we’ve deployed three hosts in our first and second CoreOS posts. Now we can do some of the really cool stuff fleet is capable of doing on CoreOS! Again – I’ll apologize that we’re getting ahead of ourselves here but I really want to give you a demo of what CoreOS can do with fleet before we spend a few posts diving into the details of how it does this. So let’s dive right back in where we left off…

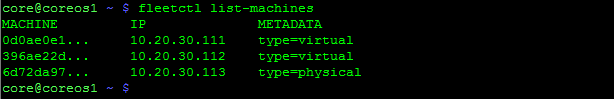

We should have 3 CoreOS hosts that are clustered. Let’s verify that by SSHing into one of the CoreOS hosts…

Looks good, the cluster can see all three of our hosts. Let’s start work on deploying our first service using fleet.

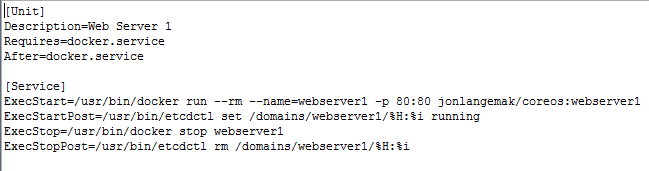

Fleet works off of unit files. This is a systemd construct and one that we’ll cover in greater detail in the upcoming systemd post. For now, let’s look at what a fleet unit file might look like…

Note: These config files are out on my github account – https://github.com/jonlangemak/coreos/
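For readers who can’t see the screenshot, here is a hedged Python sketch that generates a unit file of the general shape fleet consumes. It is not the file from the github repository above. The section layout is standard systemd plus an `[X-Fleet]` section, but the service name, the docker command, and the `Conflicts` option are assumptions (option names in `[X-Fleet]` have varied between fleet releases).

```python
import configparser
import io


def fleet_unit(description, exec_start, exec_stop, conflicts):
    """Render a systemd-style unit with a fleet-specific [X-Fleet] section."""
    unit = configparser.ConfigParser(interpolation=None)
    unit.optionxform = str  # keep systemd's case-sensitive option names
    unit["Unit"] = {
        "Description": description,
        "After": "docker.service",
        "Requires": "docker.service",
    }
    unit["Service"] = {"ExecStart": exec_start, "ExecStop": exec_stop}
    # Assumed option: keep two copies of this service off the same host.
    unit["X-Fleet"] = {"Conflicts": conflicts}
    buf = io.StringIO()
    unit.write(buf, space_around_delimiters=False)
    return buf.getvalue()


# Hypothetical service that runs a web container on whichever host fleet picks.
text = fleet_unit(
    "Example web container",
    "/usr/bin/docker run --rm --name web -p 80:80 jonlangemak/docker:web_container_80",
    "/usr/bin/docker stop web",
    "web@*.service",
)
print(text)
```

The `[Unit]` and `[Service]` sections are plain systemd. Only the `[X-Fleet]` section is read by fleet, which uses it to decide where in the cluster the unit may run.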

Systemd works off of units and targets. Suffice to say for now, the fleet service file describes a service Continue reading

CoreOS – Getting your second (and 3rd) host online

Quick note: For the sake of things formatting correctly on the blog I’m using a lot of screenshots of config files rather than the actual text. All of the text for the cloud-configs can be found on my github account here – https://github.com/jonlangemak/coreos/

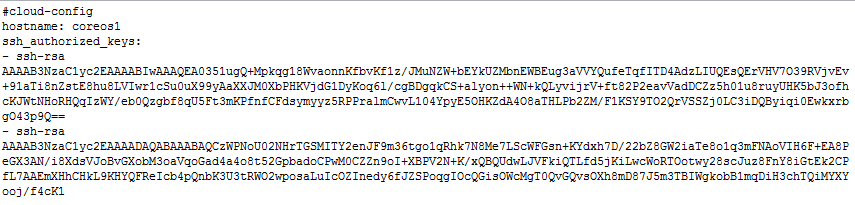

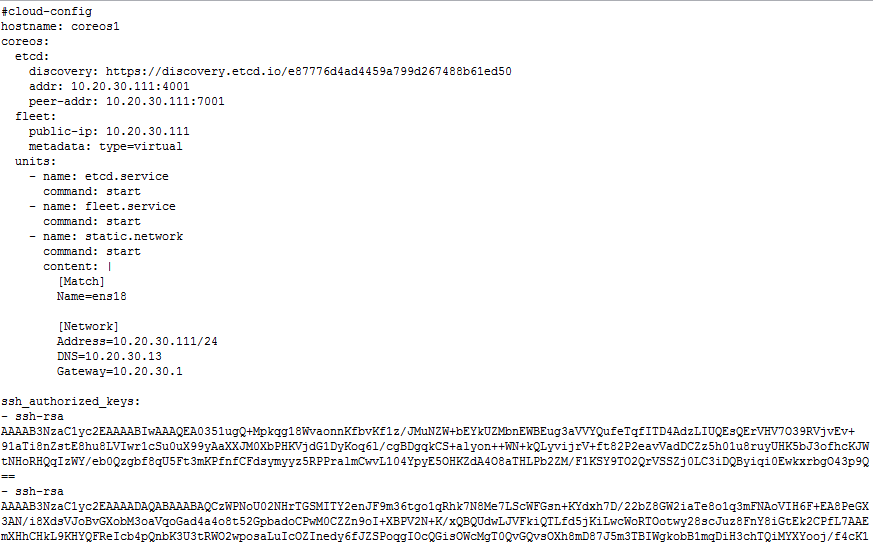

Now that we have our first CoreOS host online we can start the cool stuff. We’re going to pick up where we left off in my last post with our first installed CoreOS host which we called ‘coreOS1’. Recall we had started with a very basic cloud-config that looked something like this…

All this really did was get us up and running with a DHCP address and the base system services running. Since we’re looking to do a bit more in this post, we need to add some sections to the cloud-config. Namely, I’m going to configure a static IP address for the host, configure and start etcd, and configure and start fleet. So here’s what my new cloud config for coreOS1 will look like…

So there’s a lot more in this cloud-config. This config certainly deserves some explaining. However, in this post, I want to just get Continue reading

Installing CoreOS

If you haven’t heard of CoreOS it’s pretty much a minimal Linux distro designed and optimized to run docker. On top of that, it has some pretty cool services pre-installed that make clustering CoreOS pretty slick. Before we go that far, let’s start with a simple system installation and get one CoreOS host online. In future posts, we’ll bring up more hosts and talk about clustering.

The easiest way to install CoreOS is to use the ‘coreos-install’ script which essentially downloads the image and copies it bit for bit onto the disk of your choosing. The only real requirement here is that you can’t install to a disk you’re currently booted off of. To make this simple, I used ArchLinux, a lightweight bootable Linux distro. So let’s download that ISO and get started…

Note: I use a mix of CoreOS VMs and physical servers in my lab. In this walkthrough I’ll be doing the install on a VM to make screenshots easier. The only real difference between the install on either side was how I booted the ArchLinux LiveCD. On the virtual side I just mounted the ISO and booted Continue reading

Generating SSH keys to use for CoreOS host connectivity

While this is likely widely known, I figured this was worth documenting for someone that might not have done this before. If you’ve looked at CoreOS at all you know that in order to connect to a CoreOS server it needs to be configured with your machine’s SSH key. This is done through the cloud-config file which we’ll cover in much greater detail in a later post. So let’s quickly walk through how to generate these keys on the three different operating systems I’m using for testing…

CentOS

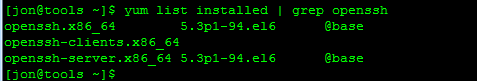

You’ll need to have OpenSSH installed on the CentOS host you’re using in order for this to work. Check to see if it’s installed…

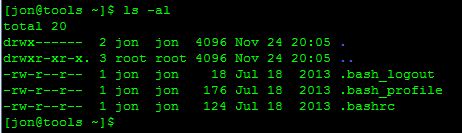

Now let’s check to make sure that we don’t already have a key generated…

There’s no .ssh folder listed in our home directory so let’s move on to generating the key.

Note: If there was a .ssh folder already present, there’s a good chance you already have a key generated. If you want to use this existing key, skip ahead to after we generate the new key.

Since there isn’t a .ssh folder present, let’s create a new key. Continue reading
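The check-then-generate flow described above can be sketched in Python as follows. The helpers `existing_public_keys` and `keygen_command` are hypothetical names, and the key type and size are assumptions rather than the values used in the original post. The sketch only builds the `ssh-keygen` command and does not run it.

```python
from pathlib import Path


def existing_public_keys(home):
    """List public key files already present in <home>/.ssh, if any."""
    ssh_dir = Path(home) / ".ssh"
    if not ssh_dir.is_dir():
        return []  # no .ssh folder means no key has been generated yet
    return sorted(p.name for p in ssh_dir.glob("*.pub"))


def keygen_command(comment):
    """Build an ssh-keygen command line for a new RSA key pair."""
    # Assumed parameters: 4096-bit RSA, default file location.
    return ["ssh-keygen", "-t", "rsa", "-b", "4096", "-C", comment]


keys = existing_public_keys(Path.home())
if keys:
    print("Existing public keys:", keys)
else:
    print("No keys found, would run:", " ".join(keygen_command("user@host")))
```

To actually generate the key, pass the list from `keygen_command` to `subprocess.run`. `ssh-keygen` will then prompt for the file location and a passphrase.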

Automation – Is the cart before the horse?

Over the last year I’ve had the opportunity to hear about lots of new and exciting products in the network and virtualization world. The one clear takeaway from all of these meetings has been that the vendors are putting a lot of their focus into ensuring their product can be automated. While I agree that any new product on the market needs to have a robust interface, I’m also sort of shocked at the way many vendors are approaching this. Before I go further, let me clarify two points. First, when I say ‘interface’ I’m purposefully being generic. An interface can be a user interface, it could be a REST interface, a Python interface, etc. Basically, it’s any means by which I, or something else, can interact with the product. Secondly, I’ll be the first person to tell you that any new product I look at should have a usable REST API. Why do I want REST? Simple, because I know that’s something that most automation tools or orchestrators can consume.

So what’s driving this? Why are we all of a sudden consumed with the need to automate Continue reading

Chef Basics – Hello World for Chef

In my last post, we covered setting up the basic install of the Chef Server, the Chef client, and a test node that we bootstrapped with Chef. Now let’s talk about some of the basics and hopefully by the end of this post we’ll get to see Chef in action! Let’s start off by talking about some of the basic constructs with Chef…

Cookbooks

Cookbooks can be seen as the fundamental configuration item in Chef. Cookbooks are used to configure a specific item. For instance, you might have a cookbook that’s called ‘mysql’ that’s used to install and configure a MySQL server on a host. There might be another cookbook called ‘httpd’ that installs and configures the Apache web server on a host. Cookbooks are created on the Chef client and then uploaded and stored on the Chef server. As we’ll see going forward, we don’t actually spend much time working directly on the Chef server. Rather, we work on the Chef client and then upload our work to the server for consumption by Chef nodes.

Recipes

Recipes are the main building block of cookbooks. Cookbooks can contain the Continue reading

Installing Chef Server, Client, and Node

I want to get Chef installed and running before we dive into all of the lingo required to fully understand what Chef is doing. In this post we’ll install the Chef Server, a Chef client, and a test node we’ll be testing our Chef configs on. That being said, let’s dive right into the configuration!

Installing Chef Server

The Linux servers I’ll be using are based on CentOS (the exact ISO is CentOS-6.4-x86_64-minimal.iso). The Chef server is really the brains of the operation. The other two components we’ll use in the initial lab are the client and the node, both of which interact with the server. So I’m going to assume that I’ve just installed Linux and haven’t done anything besides configuring the hostname, IP address, gateway, and name server (as a rule, I usually disable SELinux as well). We’ll SSH to the server and start from there…

The base installation of CentOS I’m running doesn’t have wget installed so the first step is to get that…

yum install wget -y

The next step is to go to the Chef website and let them tell you how to install the server. Browse Continue reading

Working with VMware NSX – Logical to Physical connectivity

In our last post, we talked about how to deploy what I referred to as logical networking. I classify logical networking as any type of switching or routing that occurs solely on the ESXi hosts. It should be noted that with logical networking, the physical network is still used, but only for IP transport of overlay encapsulated packets.

That being said, in this post I’d like to talk about how to connect one of our tenants to the outside world. In order for the logical tenant network to talk to the outside world, we need to find a means to connect the logical networks out to the physical network. In VMware NSX, this is done with the edge gateway. The edge gateway is similar to the DLR (distributed logical router) we deployed in the last post, however there is one significant difference. The edge gateway is in the data plane, that is, it’s actually in the forwarding path for the network traffic.

Note – I will sometimes refer to the edge services gateway as the edge gateway or simply edge. Despite both the edge services gateway and the DLR Continue reading

Working with VMware NSX – Logical networking

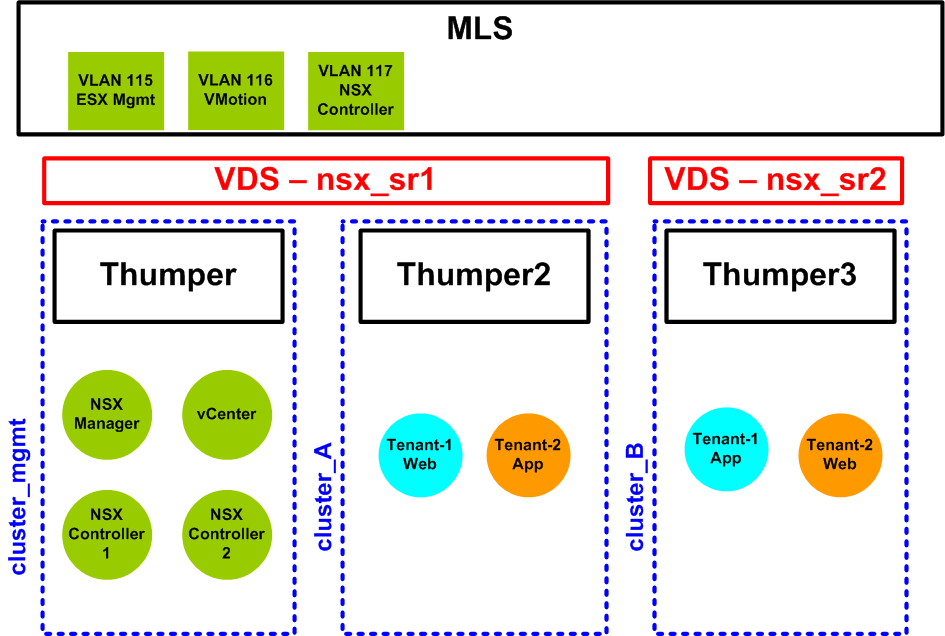

In my last post, we wrapped up the base components required to deploy NSX. In this post, we’re going to configure some logical routing and switching. I’m specifically referring to this as ‘logical’ since we are only going to deal with VM to VM traffic in this post. NSX allows you to logically connect VMs at either layer 2 or layer 3. So let’s look at our lab diagram…

If you recall, we had just finished creating the transport zones at the end of the last post. The next step is to provision logical switches. Since we want to test layer 2 and layer 3 connectivity, we’re going to provision NSX in two separate fashions. The first method will be using the logical distributed router functionality of NSX. In this method, tenant 1 will have two logical switches. One for the app layer and one for the web layer. We will then use the logical distributed router to allow the VMs to route to one another. The 2nd method will be to have both the web and app VMs on the same logical layer 2 segment. We Continue reading

Working with VMware NSX – The setup

I’ve spent some time over the last few weeks playing around with VMware’s NSX product. In this post, I’d like to talk about getting the base NSX configuration done which we’ll build on in later posts. However, when I say ‘base’, I don’t mean from scratch. I’m going to start with a VMware environment that has the NSX manager and NSX controllers deployed already. Since there isn’t a lot of ‘networking’ in getting the manager and controllers deployed, I’m not going to cover that piece. But, if you do want to start from total scratch with NSX, see these great walkthroughs from Chris Wahl and Anthony Burke…

Chris Wahl

http://wahlnetwork.com/2014/04/28/working-nsx-deploying-nsx-manager/

http://wahlnetwork.com/2014/05/06/working-nsx-assigning-user-permissions/

http://wahlnetwork.com/2014/06/02/working-nsx-deploying-nsx-controllers-via-gui-api/

http://wahlnetwork.com/2014/06/12/working-nsx-preparing-cluster-hosts/

Anthony Burke

http://networkinferno.net/installing-vmware-nsx-part-1

http://networkinferno.net/installing-vmware-nsx-part-2

http://networkinferno.net/installing-vmware-nsx-part-3

Both of those guys are certainly worth keeping an eye on for future NSX posts (they have other posts around NSX but I only included the ones above to get you to where I’m going to pick up).

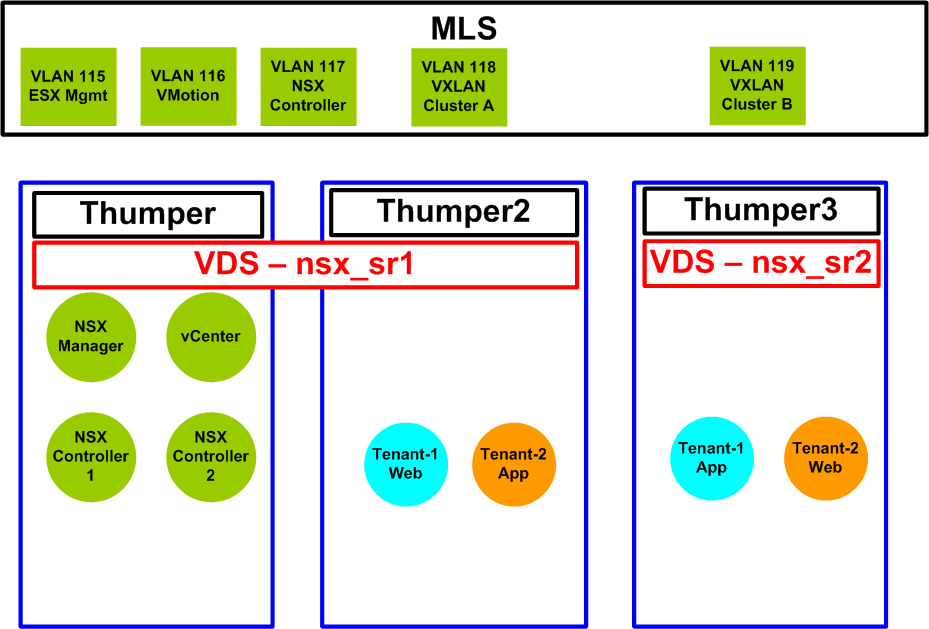

So let’s talk about where I’m going to start from. My topology from where I’ll start looks like this…

Note: For reference I’m going to try and use the green Continue reading

Docker Essentials – The docker file

So we’ve done quite a bit with docker up to this point. If you’ve missed the earlier posts, take a look at them here…

Getting Started with Docker

Docker essentials – Images and Containers

Docker essentials – More work with images

So I’d like to take us to the next step and talk about how to use docker files. As we do that, we’ll also get our first exposure to how docker handles networking. So let’s jump right in!

We saw earlier that, when working with images, the primary method for modifying them was to commit your container changes to an image. This works, but it’s a bit clunky since you’re essentially starting a docker container, making changes, exiting out of it, and then committing the changes. What if we could just run a script that would build the image for us? Enter docker files!

Docker has the ability to build an image based on a set of instructions referred to as a docker file. Using the docker build command, we can rather easily build a custom image and then spin up containers based upon the image. Docker files use a Continue reading
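To give a feel for the idea, the Python sketch below renders a small docker file as text and builds the matching `docker build` command line. The base image, package, port, and image tag are assumptions chosen for illustration and are not taken from the original post. Nothing is executed.

```python
import json


def dockerfile(base, packages, port, command):
    """Render a minimal docker file: base image, packages, port, start command."""
    lines = [
        f"FROM {base}",
        f"RUN yum install -y {' '.join(packages)}",
        f"EXPOSE {port}",
        "CMD " + json.dumps(command),  # exec form is written as a JSON array
    ]
    return "\n".join(lines) + "\n"


def build_args(tag, context_dir="."):
    """Build (but do not execute) the docker build command line."""
    return ["docker", "build", "-t", tag, context_dir]


# Hypothetical Apache image built on top of a CentOS base.
content = dockerfile("centos", ["httpd"], 80, ["/usr/sbin/httpd", "-D", "FOREGROUND"])
print(content)
print(" ".join(build_args("example/web:latest")))
```

Writing `content` to a file named `Dockerfile` in the build directory and running the printed command would produce the image, replacing the start, change, exit, commit cycle with one repeatable script.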

Docker essentials – More work with images

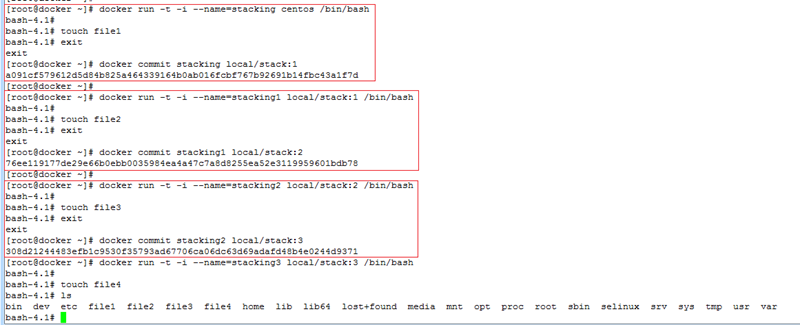

Images – Messing with the stack

So we’ve had some time to digest what containers and images are. Now let’s talk in a little greater detail about images and how they layer. A key piece of docker is how the images stack. For instance, let’s quickly build a container that has 3 user image layers in it. Recall, images are the read-only pieces of the container so having 3 user layers implies that I have done 3 commits and any changes after that will be in the 4th read/write layer that lives in the container itself…

Note: I’m using the term ‘user images’ to distinguish between base images and the ones that I create. We’ll see in a minute that a base image can even have multiple images as part of the base. I’m also going to use the term ‘image stack’ to refer to all of the images that are linked together to make a running image or container.

I’ve highlighted each user image creation to break it out. Essentially this is what happened…

-Ran the base CentOS image creating a container called stacking

-Created a file in the container called Continue reading
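The layering idea can be modelled in a few lines of Python. This is only a conceptual sketch, not how docker stores images: each layer is a dictionary of files, an image is a list of layers with the newest first, and the file names and the `run` and `commit` helpers are hypothetical.

```python
from collections import ChainMap


def run(image):
    """Start a container: a fresh read/write layer on top of the image layers."""
    return ChainMap({}, *image)


def commit(container):
    """Freeze the container's read/write layer into a new top image layer."""
    return [dict(container.maps[0]), *container.maps[1:]]


# Hypothetical base image with a single layer.
base = [{"/etc/os-release": "base"}]

# Three run/change/commit cycles give three user layers on top of the base.
image = base
for n in (1, 2, 3):
    container = run(image)
    container[f"/file{n}"] = f"layer {n}"
    image = commit(container)

# Changes made after the last commit live only in the container's own layer.
stacking = run(image)
stacking["/file4"] = "uncommitted"
print(len(image), "image layers; read/write layer holds", dict(stacking.maps[0]))
```

Lookups fall through the stack from top to bottom, so the container sees files from every layer, while writes only ever land in the topmost read/write layer.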