Notes on Active Queue Management Techniques

“When you can measure what you are speaking about, and express it in numbers, you know something about it” - Lord Kelvin

Notes on Flow Control for High Speed Networks

“In theory, there is no difference between theory and practice. In practice, there is.” - Jan L. A. van de Snepscheut

Packet Classification for High Speed Packet Processing

“We are drowning in information but starved for knowledge.” - John Naisbitt

Hashing Strategies for Packet Processing

“It is not easy to become an educated person.” - Richard Hamming

Transistor Advances – From Planar MOSFETs to CFET Stacks

“No exponential is forever, but we can delay ‘forever’.” - Gordon Moore

Network Traffic Modeling Approaches

The greatest value of a picture is when it forces us to notice what we never expected to see. - John Tukey

Lagrangian Methods in Constrained Optimization

An expert is a person who has made all the mistakes that can be made in a very narrow field. - Niels Bohr

Lagrange

GeoGebra Interactive Graph

Quantile Regression

The Median Isn’t the Message - Stephen Jay Gould

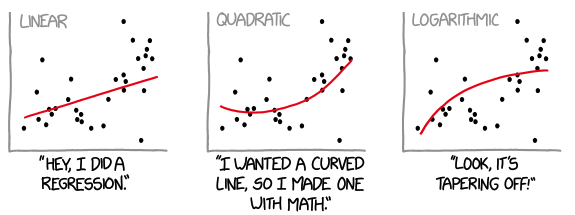

When we think of regression, the first technique that comes to mind is usually linear regression. It is a popular and simple technique for estimating the mean of a variable conditional on the values of independent variables.

Now imagine you run a grocery delivery or ride-hailing service and want to show customers an estimated delivery or wait time. Over short distances the waiting time varies little, but over longer distances many things can go wrong, so the estimated time becomes far more variable. If we have to build a model to predict that, we may not want to rely on linear regression, as it will only tell us the average time.

It’s important to note that one of the key assumptions of linear regression is constant variance (homoscedasticity). In practice, this is often not the case: the variability is not constant (heteroscedasticity), which violates the linear regression assumption (Linear Regression Notes).
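To make the contrast with the mean concrete, here is a minimal sketch of the pinball (quantile) loss, the loss that quantile regression minimizes. It fits only a constant, not a regression line, and the wait times, the grid, and the helper names `pinball_loss` and `best_constant` are illustrative assumptions rather than anything from the post.

```python
import numpy as np

def pinball_loss(y, pred, q):
    """Mean pinball (quantile) loss of prediction `pred` at quantile level q."""
    diff = np.asarray(y, dtype=float) - pred
    # Under-predictions are weighted by q, over-predictions by (1 - q).
    return float(np.mean(np.maximum(q * diff, (q - 1) * diff)))

def best_constant(y, q, grid):
    """Return the grid value with the lowest pinball loss for level q."""
    losses = [pinball_loss(y, c, q) for c in grid]
    return float(grid[int(np.argmin(losses))])

# Hypothetical wait times in minutes, with one very long delay.
wait_times = np.array([4.0, 5.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0, 12.0, 15.0, 40.0])
grid = np.linspace(0.0, 45.0, 451)  # candidate estimates in 0.1-minute steps

mean_estimate = float(wait_times.mean())
median_estimate = best_constant(wait_times, 0.5, grid)
p90_estimate = best_constant(wait_times, 0.9, grid)
print(mean_estimate, median_estimate, p90_estimate)
```

On this made-up sample the single long delay pulls the mean up to 11 minutes, while the loss-minimizing constants land on the median (8 minutes) and on a high quantile (15 minutes), which is the kind of estimate a wait-time display might prefer.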

Motivation

Let’s look at running data for distance vs. the time it takes to finish. We clearly know …

Optimal and Heuristic Approaches to Disjoint Path Routing

Two roads diverged in a wood, and I–I took the one less traveled by, And that has made all the difference. - Robert Frost

To doubt everything or to believe everything are two equally convenient solutions; both dispense with the necessity of reflection. - Henri Poincaré

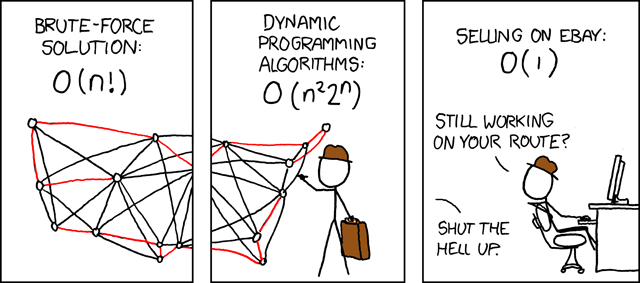

Disjoint path routing problems involve finding multiple paths between a source and destination pair without any shared components. There are different types of disjoint paths, each with specific requirements. For example, link-disjoint paths do not have any common links, while node-disjoint paths do not share any common nodes. SRLG-disjoint paths are another variation, where the paths do not share any common risk groups.

These problems are commonly addressed to ensure network reliability, load balancing, and congestion reduction. The first problem we will examine is the MIN-SUM problem, which aims to determine a set of disjoint routes with the lowest overall cost. To solve it, we will look at integer linear programming (ILP). Afterwards, we will explore the MIN-SUM problem in the context of networks with shared risk link groups (SRLGs) and present corresponding solutions.
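As a rough sketch of what a MIN-SUM ILP can look like for link-disjoint paths, consider the standard flow-based formulation below. The notation is an assumption and the post's own formulation may differ: a directed graph $G = (V, E)$ with link costs $c_{ij}$, a source $s$, a destination $t$, $K$ paths to find, and binary variables $x_{ij}^{k}$ that equal 1 when path $k$ uses link $(i,j)$.

$$
\begin{aligned}
\min \quad & \sum_{k=1}^{K} \sum_{(i,j) \in E} c_{ij}\, x_{ij}^{k} \\
\text{s.t.} \quad & \sum_{j:(i,j) \in E} x_{ij}^{k} - \sum_{j:(j,i) \in E} x_{ji}^{k} =
\begin{cases} 1 & i = s \\ -1 & i = t \\ 0 & \text{otherwise} \end{cases}
&& \forall i \in V,\ k = 1,\dots,K \\
& \sum_{k=1}^{K} x_{ij}^{k} \le 1 && \forall (i,j) \in E \\
& x_{ij}^{k} \in \{0,1\} && \forall (i,j) \in E,\ k = 1,\dots,K
\end{aligned}
$$

The first constraint is flow conservation for each path, and the second is what makes the paths link-disjoint: no link may carry more than one of them. An SRLG variant would replace the second constraint with one that limits each risk group, rather than each link, to a single path.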

Basic Disjoint Problem

Let’s say our problem is to find simple link-disjoint paths between a given source and destination. One of the common ways we hear to do in …

Balancing MMLU With The Shortest Path Cost Constraints

It is almost impossible for someone coming from a purely mathematical background with little exposure to applications to understand how to go about formulating a real-world problem in mathematical terms. - Dantzig.

In our previous post, we explored the Minimum Maximum Link Utilization (MMLU) problem using Linear Programming. The focus was on distributing network traffic to achieve the best possible link utilization. However, this approach did not consider the potential impact on path lengths, allowing flows to be placed on longer paths without constraints. We mentioned this only in passing, so I thought it would be worth exploring the extended problem formulation, which introduces an additional constraint to strike a balance between minimizing link utilization and controlling the deviation of paths from their shortest counterparts.

The core objective remains the same—to minimize the maximum link utilization across the network. However, we now introduce a conflicting constraint that aims to limit the extent to which paths can deviate from their shortest routes. This constraint is crucial for keeping path lengths within an acceptable range, as excessively long paths can degrade the user experience.
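The tension between the two goals can be seen on a toy instance. The sketch below is not the Linear Programming formulation from the post: it brute-forces every combination of candidate paths, and the topology, capacities, demand volumes, hop-count path length, and the `max_stretch` parameter are all illustrative assumptions.

```python
from itertools import product

# Hypothetical link capacities in Gbps; every name and number is illustrative.
capacity = {"A-B": 10.0, "B-D": 10.0, "A-C": 10.0, "C-D": 10.0, "A-D": 10.0}

# Each demand has a volume (Gbps) and candidate paths given as lists of links.
demands = {
    "d1": {"volume": 6.0, "paths": [["A-D"], ["A-B", "B-D"]]},
    "d2": {"volume": 6.0, "paths": [["A-D"], ["A-C", "C-D"]]},
}

def max_link_utilization(choice):
    """Highest load/capacity ratio over all links for a {demand: path} choice."""
    load = {link: 0.0 for link in capacity}
    for name, path in choice.items():
        for link in path:
            load[link] += demands[name]["volume"]
    return max(load[link] / capacity[link] for link in capacity)

def best_routing(max_stretch):
    """Minimize the maximum link utilization over paths within the stretch limit.

    A path is allowed only if its hop count is at most max_stretch times the
    hop count of that demand's shortest candidate path.
    """
    names = list(demands)
    options = []
    for name in names:
        paths = demands[name]["paths"]
        shortest = min(len(p) for p in paths)
        options.append([p for p in paths if len(p) <= max_stretch * shortest])
    best = None
    for combo in product(*options):
        choice = dict(zip(names, combo))
        util = max_link_utilization(choice)
        if best is None or util < best[0]:
            best = (util, choice)
    return best

strict_util, strict_choice = best_routing(max_stretch=1.0)
relaxed_util, relaxed_choice = best_routing(max_stretch=2.0)
print(strict_util, relaxed_util)
```

With `max_stretch=1.0` both demands are pinned to the direct link and the maximum utilization is 1.2, an overloaded link. Allowing paths up to twice the shortest length brings it down to 0.6 at the cost of one demand taking a two-hop detour.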

Mathematically, we are faced with two opposing constraints. On the one hand, we strive to minimize link utilization, …

MMLU in Network Flow Optimization

In a previous post, I discussed how Maximum Flow problems can be used for network optimization. We focused on a scenario where demands were already routed in the network, and our objective was to determine the maximum demand that could be handled between a given source and a destination metro. We solved this problem by calculating the residual bandwidth for the graph, creating fake demand nodes for each metro with high-capacity edges to avoid them being bottlenecks, and applying Dinic’s algorithm between the source and the destination metro. This is also called a Single Commodity Flow Problem.

We then extended the problem to consider two metros sending traffic to the same destination sink and used the Network Simplex algorithm to determine the maximum traffic the network could accommodate. This is also known as a Multi Commodity Flow Problem. Finally, we validated our findings by routing the results through a network model.

In this post, we will discuss another constraint-based problem called Minimum Maximum Link Utilization (MMLU). The primary goal of MMLU is to route traffic demands in a network to minimize the maximum link utilization. In other words, we aim to distribute the traffic evenly across the network links to …

Keeping the Pipe Just Full

In this post, I will be discussing a paper published by the Internet pioneer Leonard Kleinrock, titled “Keep the Pipe Just Full, But No Fuller”. The paper’s conclusion is that it is best to keep the internet “pipe” full, without overloading it. This idea is a take on Einstein’s famous quote, “Make everything as simple as possible, but not simpler.”

Introduction

It’s not always true that using the network to its fullest capacity will lead to better performance. When the network is overloaded, congestion and queueing delays can occur, which can affect the rate at which useful data is delivered and the time it takes for data to be delivered. This means there is a tradeoff between goodput and latency. To avoid network issues, congestion management is important. TCP regulates data flow from source to destination by sending packets while monitoring the delivery rate and response time to manage congestion. The challenge is to regulate traffic flow so that capacity is neither underutilized, which wastes resources, nor overloaded, which causes congestion.
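A simple queueing model makes the tradeoff visible. The sketch below assumes an M/M/1 queue, where the mean time in the system is T = 1 / (mu - lambda), and scores each load level by throughput divided by delay, a ratio often called "power". The excerpt above does not say which model or metric the paper uses, so both choices, along with the service rate, are assumptions made for illustration.

```python
def mm1_delay(arrival_rate, service_rate):
    """Mean time in system for an M/M/1 queue: T = 1 / (mu - lambda)."""
    if not 0 <= arrival_rate < service_rate:
        raise ValueError("need 0 <= arrival_rate < service_rate for a stable queue")
    return 1.0 / (service_rate - arrival_rate)

def power(arrival_rate, service_rate):
    """Throughput divided by mean delay; higher means a better tradeoff."""
    return arrival_rate / mm1_delay(arrival_rate, service_rate)

service_rate = 100.0  # packets per second, an illustrative value

# Sweep the offered load from 1% to 99% of capacity.
loads = [i / 100 for i in range(1, 100)]
best_load = max(loads, key=lambda rho: power(rho * service_rate, service_rate))

for rho in (0.25, 0.5, 0.9, 0.99):
    delay_ms = 1000 * mm1_delay(rho * service_rate, service_rate)
    print(f"load {rho:.2f}: mean delay {delay_ms:.1f} ms")
print("load with the highest power:", best_load)
```

In this model delay grows without bound as the load approaches capacity, and the power ratio peaks at a load of 0.5, well short of a fully loaded link.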

For many years, TCP congestion control algorithms have relied on loss as a measure of congestion. However, loss causes sawtooth behavior, as a flow expands until packets are dropped, then …

Flow Distribution Across ECMP Paths

Equal-cost multi-path (ECMP) routing is crucial for scaling and performance in modern data centers and wide-area networks, which rely on hash-based path selection. It leverages path diversity and keeps a flow’s packets on the same path, which prevents reordering, and it does so without keeping per-flow state.

While simple and widely used, ECMP has some limitations. For example, it does not always distribute traffic evenly across all available paths. However, due to its ease of hardware implementation, ECMP remains the predominant approach. The core enabler for ECMP is hashing, which allows packet-by-packet path selection in a distributed manner across switches. ECMP’s limitations have also started getting more attention with the surge in building GPU clusters, whose fabrics suffer from poor hashing due to a lack of flow entropy.

In this post, we’ll dive into ECMP and use statistical analysis to better understand the limitations.
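As a quick illustration of hash-based path selection, the sketch below hashes a five-tuple and takes the result modulo the number of ECMP members. It is a toy model: SHA-256 stands in for whatever hash function a real switch uses, and the addresses, ports, flow count, and the `pick_member` helper are hypothetical.

```python
import hashlib
from collections import Counter

def pick_member(five_tuple, member_count):
    """Hash a flow's five-tuple and map it to an ECMP member index."""
    key = "|".join(str(field) for field in five_tuple).encode()
    digest = hashlib.sha256(key).digest()
    # Use the first 4 bytes as an unsigned integer, then reduce modulo the
    # group size. The same five-tuple always maps to the same member.
    return int.from_bytes(digest[:4], "big") % member_count

# Hypothetical flows: (src IP, dst IP, protocol, src port, dst port).
# Only the source port varies, so it is the sole source of entropy here.
flows = [("10.0.0.1", "10.0.1.1", 6, 40000 + i, 443) for i in range(64)]

member_count = 4
counts = Counter(pick_member(flow, member_count) for flow in flows)
for member in range(member_count):
    print(f"member {member}: {counts[member]} flows")
```

Because the mapping depends only on the packet fields, every packet of a flow follows the same path and no per-flow state is needed. The per-member counts are generally not equal, and with fewer flows, or flows that differ in fewer fields, the imbalance tends to get worse.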

Introduction

Here is a simplified explanation of how the lookup process works. We perform a prefix lookup that directs us to a specific ECMP group listed in the ECMP group table. Each of these ECMP groups contains the member count for that group. A hash function takes packet fields, i.e. our typical five-tuple (Source …