Set git behavior based on the repository path

I maintain a handful of git accounts at GitHub.com and on private git servers, and have repeatedly committed to a project using the wrong personality.

My early attempts to avoid this mistake involved scripts to set per-project git parameters, but I've found a more streamlined option.

The approach revolves around the file hierarchy in my home directory: rather than dumping everything in a single ~/projects directory, my projects now live in ~/projects/personal, ~/projects/work, etc.

Whenever I clone or start a new project, as long as I put it in the appropriate directory, git will choose the behaviors and identity appropriate for that project.

Here's how it works, with 'personal' and 'work' accounts at GitHub.com:

1. Generate an SSH key for each account

ssh-keygen -t ed25519 -P '' -f ~/.ssh/work.github.com

ssh-keygen -t ed25519 -P '' -f ~/.ssh/personal.github.com

2. Add each public key to its respective GitHub account.

3. Continue reading
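The excerpt stops before the configuration step. One common way to get path-based behavior (not necessarily the exact configuration used in the full post) is git's conditional includes, `includeIf "gitdir:..."` (git 2.13 and later), combined with `core.sshCommand` so each account uses its own key from step 1. This Python sketch only generates that configuration text; the names, email addresses, and `~/.gitconfig-<account>` file names are hypothetical placeholders.

```python
# Hypothetical identities: directory name under ~/projects -> (user.name, user.email).
ACCOUNTS = {
    "personal": ("Chris", "chris@personal.example"),
    "work": ("Chris", "chris@work.example"),
}


def gitconfig_fragments(accounts):
    """Return {filename: contents} for ~/.gitconfig plus one include file per account."""
    files = {}
    main = []
    for account, (name, email) in accounts.items():
        include = f"~/.gitconfig-{account}"
        # A trailing slash makes git match every repository below this directory.
        main.append(f'[includeIf "gitdir:~/projects/{account}/"]\n\tpath = {include}\n')
        files[include] = (
            "[user]\n"
            f"\tname = {name}\n"
            f"\temail = {email}\n"
            "[core]\n"
            # Offer only this account's key (created in step 1) to the SSH server.
            f"\tsshCommand = ssh -i ~/.ssh/{account}.github.com -o IdentitiesOnly=yes\n"
        )
    files["~/.gitconfig"] = "".join(main)
    return files


if __name__ == "__main__":
    for path, text in gitconfig_fragments(ACCOUNTS).items():
        print(f"# {path}\n{text}")
```

If you adapt this, keep the `includeIf` sections at the end of `~/.gitconfig`: later settings override earlier ones, so they take precedence over any default identity defined above them.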

Dell 2161DS-2 serial port pinout

I picked up a Dell (Avocent) 2161DS-2 (same as 4161DS?) KVM recently, and needed to use the serial port to upgrade the software.

Naturally, the serial port pinout is non-standard and requires a proprietary cable that ships with the KVM. Dell part numbers 80DH7 and 3JY78 might be involved. I don't have these cables and have never seen them.

I was able to find the RX, TX and ground pins and interact with the system at 9600 baud, 8N1 (8 data bits, no parity, 1 stop bit).

(Image: Pinout in red text)

(Image: Is the color coding inside these adaptors standardized? If so, this may help.)

The system prints some unsolicited messages ("welcome" or somesuch) a little while after power-up.

Notes from upgrading the firmware from MacOS 12:

# Grab the firmware

URL="https://dl.dell.com/RACK SOLUTIONS/DELL_MULTI-DEVICE_A04_R301142.exe"

wget -P /tmp "$URL"

# Start MacOS tftp service

sudo launchctl load -w /System/Library/LaunchDaemons/tftp.plist

# Extract the firmware (it's a self-extracting exe, but we can open it with unzip)

sudo unzip -d /private/tftpboot "/tmp/$(basename "$URL")" Omega_DELL_1.3.51.0.fl

# Now, using the menu on the KVM serial port, point it toward the MacOS TFTP service

# to retrieve the Omega_DELL_1.3.51.0.fl file

VPC peering with terraform

I wrote up an example of using terraform modules and provider aliases to turn up interconnected cloud resources (vpcs / vpc peering / peering acceptance / routes) across multiple cloud regions.

How do RFC3161 timestamps work?

RFC3161 exists to demonstrate that a particular piece of information existed at a certain time, by relying on a timestamp attestation from a trusted 3rd party. It's the cryptographic analog of relying on the date found on a postmark or a notary public's stamp.

How does it work? Let's timestamp some data and rip things apart as we go.

First, we'll create a document and have a brief look at it. The document will be one million bytes of random data:

$ dd if=/dev/urandom of=data bs=1000000 count=1

1+0 records in

1+0 records out

1000000 bytes transferred in 0.039391 secs (25386637 bytes/sec)

$ ls -l data

-rw-r--r-- 1 chris staff 1000000 Dec 21 14:10 data

$ shasum data

3de9de784b327c5ecec656bfbdcfc726d0f62137 data

$

Next, we'll create a timestamp request based on that data. The -cert option asks the timestamp authority (TSA) to include its identity (certificate chain) in its reply, and -no_nonce omits anti-replay protection from the request. Without that option, the request would include a large random number (a nonce).
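The request is a small DER-encoded `TimeStampReq` structure defined by RFC 3161. As a cross-check on the hexdump that follows, here's a minimal Python sketch that assembles the same structure by hand: version 1, a message imprint (hash algorithm plus the hash of the data), and `certReq` set to TRUE, with no nonce and no policy. It assumes SHA-1, which is what the `2b 0e 03` bytes in the dump suggest (they begin the SHA-1 OID, 1.3.14.3.2.26); newer OpenSSL releases may default to a different digest. The helper names are illustrative.

```python
import hashlib

# DER tag bytes used below
SEQUENCE, INTEGER, BOOLEAN, OCTET_STRING, NULL, OID = 0x30, 0x02, 0x01, 0x04, 0x05, 0x06
SHA1_OID = bytes([0x2B, 0x0E, 0x03, 0x02, 0x1A])  # 1.3.14.3.2.26


def der(tag, content):
    """Encode one DER tag-length-value (short-form length is enough for this request)."""
    if len(content) > 127:
        raise ValueError("long-form lengths are not needed in this sketch")
    return bytes([tag, len(content)]) + content


def timestamp_query(data, cert_req=True):
    """Build an RFC 3161 TimeStampReq with a SHA-1 imprint, no nonce and no policy."""
    algorithm = der(SEQUENCE, der(OID, SHA1_OID) + der(NULL, b""))
    imprint = der(SEQUENCE, algorithm + der(OCTET_STRING, hashlib.sha1(data).digest()))
    body = der(INTEGER, b"\x01") + imprint       # version v1
    if cert_req:
        body += der(BOOLEAN, b"\xff")            # certReq TRUE (omitted when FALSE)
    return der(SEQUENCE, body)
```

Under those assumptions, `timestamp_query(open('data', 'rb').read()).hex()` should begin with the same `3029020101...` bytes shown in the dump, and the 20 bytes at offsets 20 through 39 are the same SHA-1 value that `shasum` printed above.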

$ openssl ts -query -cert -no_nonce < data | hexdump -C

Using configuration from /opt/local/etc/openssl/openssl.cnf

00000000 30 29 02 01 01 30 21 30 09 06 05 2b 0e 03 Continue reading

Physically man-in-the-middling an IoT device with Linux Bridge

(Image: Initial setup)

```yaml
network:
  version: 2
  renderer: networkd
  ethernets:
    eth0:
      dhcp4: no
    eth1:
      dhcp4: no
  bridges:
    br0:
      addresses: [192.168.1.2/24]
      gateway4: 192.168.1.1
      interfaces:
        - eth0
        - eth1
```

SSH to all of the serial ports

This is just a quick-and-dirty script for logging into every serial port on an Opengear box, one in each tab of a MacOS terminal. I used it just recently because I couldn't remember where a device console was connected.

Don't change mouse focus while it's running: It'll wind up dumping keystrokes into the wrong window.

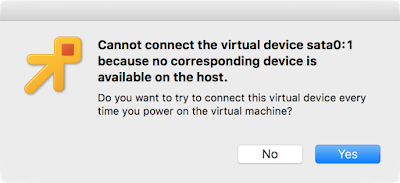

Cannot connect the virtual device … because no corresponding device is available on the host

Recently I've been building some VM templates on my MacBook and launching instances of them in VMware. Each time, it produced the following error: "Cannot connect the virtual device sata0:1 because no corresponding device is available on the host."

Either button caused the guest to boot up. The "No" button ensured that it booted without error on subsequent reboots, while choosing "Yes" allowed me to enjoy the error with each power-on of the guest.

Sata0 is, of course, a (virtual) disk controller, and device 1 is an optical drive. I knew that much, but the exact meaning of the error wasn't clear to me, and googling didn't lead to a great explanation.

I wasn't expecting there to be a "corresponding device ... available on the host" because the host has neither a SATA controller nor an optical drive, and no such hardware should be required for my use case. So what did the error mean?

It turns out that I was producing the template (a .ova file) with the optical drive "connected" (VMware term) to ... something. The issue isn't related to the lack of a device on the host, but that there's no ISO file "inserted" into the virtual drive.

Here's the Continue reading

Syslog relay with Scapy

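I needed to point some syslog data at a new toy being evaluated by security folks.

```python
#!/usr/bin/env python2.7
from scapy.all import Ether, IP, UDP, sendp, sniff

COLLECTOR = '192.168.100.100'  # address of the new Security Thing

def pkt_callback(pkt):
    # Clear fields so scapy recalculates them when the packet is rebuilt
    del pkt[Ether].src
    del pkt[Ether].dst
    del pkt[IP].chksum
    del pkt[UDP].chksum
    pkt[IP].dst = COLLECTOR
    sendp(pkt)

# Exclude traffic already addressed to the collector, so relayed copies
# (which also match port 514) aren't captured and re-sent in a loop
sniff(iface='eth0', filter='udp port 514 and not dst host ' + COLLECTOR,
      prn=pkt_callback, store=0)
```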

This script has scapy collecting frames matching udp port 514 (libpcap filter) from interface eth0. Each matching packet is handed off to the pkt_callback function. It clears fields which need to be recalculated, changes the destination IP (to the address of the new Security Thing) and puts the packets back onto the wire.

The source IP on these forged packets is unchanged, so the Security Thing thinks it's getting the original logs from real servers/routers/switches/PDUs/weather stations/printers/etc... around the Continue reading

SSH HashKnownHosts File Format

The HashKnownHosts option to the OpenSSH client causes it to obfuscate the host field of the ~/.ssh/known_hosts file. Obfuscating this information makes it harder for threat actors (malware, border searches, etc.) to know which hosts you connect to via SSH. Hashing defaults to off, but some platforms turn it on for you:

chris:~$ grep Hash /etc/ssh/ssh_config

HashKnownHosts yes

chris:~$

|1|NWpzcOMkWUFWapbQ2ubC4NTpC9w=|ixkHdS+8OWezxVQvPLOHGi2Oawo= ecdsa-sha2-nistp256 AAAAE2Vj<...>ZHNLpyJsv

There's one record per line, with the fields separated by spaces. The first field is the remote host (SSH server) identifier.

In this case, the leading characters |1| in the host identifier are the magic string (HASH_MAGIC). It tells us that the field is hashed, rather than a plaintext hostname (or address). The remaining characters in the field comprise two parts, separated by |: a 160-bit salt (random string) and a 160-bit SHA1 hash result. Both values are base64 encoded.

The various OpenSSH binaries that use information in this file feed both the remote host's name (or address) and the salt to the hashing function in order to produce the hash result.

So, let's validate a host entry against this record the hard way. The entry above is for an IP address: Continue reading
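Concretely, the hashing function is HMAC-SHA1, with the decoded salt as the key and the hostname (or address) as the message. Here's a minimal Python sketch of both directions using only the standard library; the function names are illustrative, not OpenSSH's. One detail worth knowing: for servers on a non-default port, OpenSSH hashes the bracketed form, e.g. `[host]:2222`, rather than the bare name.

```python
import base64
import hashlib
import hmac
import os

HASH_MAGIC = "|1|"


def hash_host(host, salt=None):
    """Return a HashKnownHosts-style host field for `host`."""
    if salt is None:
        salt = os.urandom(20)  # 160-bit random salt
    digest = hmac.new(salt, host.encode(), hashlib.sha1).digest()  # salt is the HMAC key
    return HASH_MAGIC + base64.b64encode(salt).decode() + "|" + base64.b64encode(digest).decode()


def host_matches(field, host):
    """Check whether a hashed known_hosts host field corresponds to `host`."""
    if not field.startswith(HASH_MAGIC):
        raise ValueError("not a hashed host field")
    salt_b64, hash_b64 = field[len(HASH_MAGIC):].split("|")
    salt = base64.b64decode(salt_b64)
    digest = hmac.new(salt, host.encode(), hashlib.sha1).digest()
    return hmac.compare_digest(digest, base64.b64decode(hash_b64))
```

Checking a real entry means calling `host_matches()` with the record's first field and each candidate name or address until one returns True.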

Pluribus Networks… Wait, where are we again?

I was privileged to visit Pluribus Networks as a delegate at Network Field Day 16 a couple of weeks ago. Somebody else paid for the trip. Details here.

Much has changed at Pluribus, I hardly recognized the place!

I quite like Pluribus (their use of Solaris under their Netvisor switching OS got me right in the feels early on) so I'm happy to report that most of what's new looks like changes for the better.

When we arrived at Pluribus HQ we were greeted by some new faces, a new logo, color scheme... Even new accent lighting in the demo area!

Gone also are the Server Switches with their monstrous control planes (though still listed on the website, they weren't mentioned in the presentation), Solaris, and a partnership with Supermicro.

In their place we found:

- The new logo and colors

- New faces in management and marketing

- Netvisor running on Linux

- Whitebox and OCP-friendly switches

- A partnership with D-Link

- Some Netvisor improvements

Linux

KEMP Presented Some Interesting Features at NFD16

KEMP Technologies presented at Network Field Day 16, where I was privileged to be a delegate. Who paid for what? Answers here.

Three facets of the KEMP presentation stood out to me:

The KEMP Management UI Can Manage Non-KEMP Devices

KEMP's centralized management UI, the KEMP 360 Controller, can manage/monitor other load balancers (ahem, Application Delivery Controllers), including AWS ELB, HAProxy, NGINX and F5 BIG-IP. This is pretty clever: if KEMP gets into an enterprise, perhaps because the enterprise is dipping a toe into the cloud at Azure, KEMP may manage to worm its way deeper than would otherwise have been possible. Nice work, KEMPers.

VS Motion Can Streamline Manual Deployment Workflows

KEMP's VS Motion feature allows easy service migrations between KEMP instances by copying service definitions from one box to another. It's probably appropriate when replicating services between production instances and when promoting configurations between dev/test/prod. The mechanism is described in some detail here.

The interface is pretty straightforward. It looks just like the balance transfer UI at my bank: select the From instance, the To instance, what you want transferred (which virtual service), and then hit the Move button. The interface also sports a Copy button, so in that Continue reading

Using FQDN for DMVPN hubs

I've done some testing with specifying DMVPN hubs (NHRP servers, really) using their DNS names rather than IP addresses. This matters to me because of some goofy environments where spoke routers can't predict what network they'll be on (possibly something other than the internet), and where I can't leverage multiple hubs per tunnel due to a control plane scaling issue.

The DNS-based configuration includes the following:

```
interface Tunnel1
 ip nhrp nhs dynamic nbma dmvpn-pool.fragmentationneeded.net
```

There's no longer a requirement for any ip nhrp map or ip nhrp nhs x.x.x.x configuration when using this new capability.

My testing included some tunnels with very short ISAKMP and IPSec re-key intervals. I found that the routers performed the DNS resolution just once. They didn't go back to DNS again for as long as the hub was reachable.

Spoke routers that failed to establish a secure connection for whatever reason would re-resolve the hub address each time the cached DNS response's TTL expired. But once they succeeded in connecting, I observed no further DNS traffic for as long as the tunnel survived.

The record I published (dmvpn-pool.fragmentationneeded.net above) includes multiple A records. The DNS server randomizes the record Continue reading

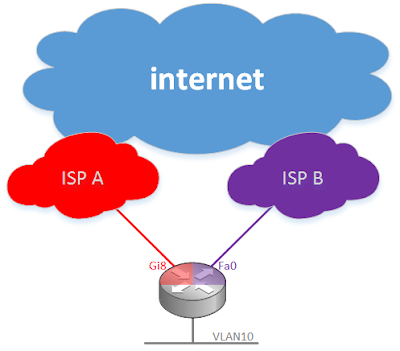

Small Site Multihoming with DHCP and Direct Internet Access

Cisco recently (15.6.3M2) resolved CSCve61996, which makes it possible to fail internet access back and forth between two DHCP-managed interfaces in two different front-door VRFs attached to consumer-grade internet service. Prior to the IOS fix there was a lot of weirdness with route configuration on DHCP interfaces assigned to VRFs.

I'm using a C891F-K9 for this example. The WAN interfaces are Gi0 and Fa8. They're in F-VRFs named ISP_A and ISP_B, respectively:

First, create the F-VRFs and configure the interfaces:

```
ip vrf ISP_A
ip vrf ISP_B

interface GigabitEthernet8
 ip vrf forwarding ISP_A
 ip dhcp client default-router distance 10
 ip address dhcp

interface FastEthernet0
 ip vrf forwarding ISP_B
 ip dhcp client default-router distance 20
 ip address dhcp
```

The distance commands above assign the AD of the DHCP-assigned default route. Without these directives the distance would be 254 in each VRF. They're modified here because we'll be using the distance to select the preferred internet path when both ISPs are available.
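The role of those two distances can be illustrated with a toy Python model. It is not how IOS is implemented: each candidate default route carries the distance configured above plus a hypothetical `healthy` flag standing in for the reachability check described next, and the preferred exit is simply the usable route with the lowest distance.

```python
from dataclasses import dataclass


@dataclass
class DefaultRoute:
    vrf: str
    distance: int   # AD set by "ip dhcp client default-router distance"
    healthy: bool   # stand-in for the result of a reachability check


def preferred_exit(routes):
    """Return the VRF of the usable default route with the lowest distance, if any."""
    usable = [r for r in routes if r.healthy]
    if not usable:
        return None
    return min(usable, key=lambda r: r.distance).vrf


routes = [DefaultRoute("ISP_A", 10, True), DefaultRoute("ISP_B", 20, True)]
print(preferred_exit(routes))  # ISP_A while both providers pass their checks
```

Flip `routes[0].healthy` to False and the model falls back to ISP_B; restore it and traffic prefers ISP_A again, which is the fail-over and fail-back behavior the configuration aims for.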

Next, let's keep track of whether or not the internet is working via each provider. In this case I'm pinging 8.8.8.8 via both paths, but this health check can be whatever makes sense for your situation. So, Continue reading

Serial Pinout for APC

This is just a quick note to remind me how to make serial cables for APC power strips. This cable works between an APC AP8941 and an Opengear terminal server with a Cisco-friendly (-X2 in Opengear nomenclature) pinout. Only pins 3, 4 and 6 are populated on the 8P8C end. It probably doesn't matter whether the ground pin (black) lands on pin 4 or 5 because both should be ground on the Opengear end. The yellow wire is unused.

Cisco: Not Serious About Network Programmability

(Image: "You can't fool me, there ain't no sanity clause!")

The REST API plugin for newer ASA hardware is an example of that. It works fairly well, supports a broad swath of device features, is beautifully documented and has an awesome interactive test/dev dashboard. The dashboard even has the ability to spit out example code (Java, JavaScript, Python) based on your point-and-click interaction with it.

It's really slick.

But I Can't Trust It

Here's the problem: it's an unversioned REST API, and the maintainers don't hesitate to change its behavior between minor releases. Here's what's different between 1.3(2) and 1.3(2)-100:

New Features in ASA REST API 1.3(2)-100

So, any code based on earlier documentation is now broken when it calls /api/certificate/details.

This Shouldn't Happen

Don't take my word for it: Remember that an API is Continue reading

Epoch Rollover: Coming Two Years Early To A Router Near You!

The 2038 Problem

(Image: Broken Time? by Roeland van der Hoorn)

Lots of application functions and system libraries keep track of the time as a count of seconds since the epoch (January 1st, 1970 UTC), stored in a 32-bit signed integer, which has a maximum value of around 2.1 billion. That's good for a bit more than 68 years' worth of seconds.

Things are likely to get weird 2.1 billion seconds after the epoch, on January 19th, 2038.

As the binary counter rolls over from 01111111111111111111111111111111 to 10000000000000000000000000000000, the sign bit gets flipped. The counter will have changed from its farthest reach after the epoch to its farthest reach before the epoch: time will appear to have jumped from early 2038 to late 1901.
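The wraparound is easy to reproduce. This minimal Python sketch emulates a signed 32-bit counter with modular arithmetic (Python's own integers never overflow) and converts the results to UTC dates by adding a `timedelta` to the epoch, which sidesteps platform limits on negative timestamps. The helper names are illustrative.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_int32(seconds):
    """Wrap an integer the way a signed (two's complement) 32-bit counter would."""
    return (seconds + 2**31) % 2**32 - 2**31


def as_datetime(seconds):
    """Interpret a seconds-since-epoch value as a UTC datetime."""
    return EPOCH + timedelta(seconds=seconds)


last = 2**31 - 1
print(as_datetime(last))                # 2038-01-19 03:14:07+00:00
print(as_datetime(to_int32(last + 1)))  # 1901-12-13 20:45:52+00:00
```

One second after the largest positive value, the counter lands on the most negative value, which is the jump from early 2038 to late 1901.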

Things might even get weird within the next year (January 2018!) as systems begin to encounter freshly minted CA certificates with expiration dates after the epoch rollover (it's common for CA certificates to last for 20 years). These certificates may appear to have expired in late 1901, over a century prior to their Continue reading

Docker’s namespaces – See them in CentOS

In the Docker Networking Cookbook (I got my copy directly from Packt Publishing), Jon Langemak explains why the iproute2 utilities can't see Docker's network namespaces: Docker creates its namespace objects in /var/run/docker/netns, but iproute2 expects to find them in /var/run/netns. Creating a symlink at /var/run/netns that points to /var/run/docker/netns is the obvious solution:

$ sudo ls -l /var/run/docker/netns

total 0

-r--r--r--. 1 root root 0 Feb 1 11:16 1-6ledhvw0x2

-r--r--r--. 1 root root 0 Feb 1 11:16 ingress_sbox

$ sudo ip netns list

$ sudo ln -s /var/run/docker/netns /var/run/netns

$ sudo ip netns list

1-6ledhvw0x2 (id: 0)

ingress_sbox (id: 1)

$

But there's a problem. Look where this stuff is mounted:

$ ls -l /var/run

lrwxrwxrwx. 1 root root 6 Jan 26 20:22 /var/run -> ../run

$ df -k /run

Filesystem 1K-blocks Used Available Use% Mounted on

tmpfs 16381984 16692 16365292 1% /run

$

The symlink won't survive a reboot because it lives in a memory-backed filesystem. My first instinct was to have a boot script (say /etc/rc.d/rc.local) create the symlink, but there's a much better way.

Fine, I'm starting to like systemd

Systemd's tmpfiles.d is a really elegant way of handling touch files, symlinks, empty Continue reading

Anuta Networks NCX: Overcoming Skepticism

Anuta Networks demonstrated their NCX network/service orchestration product at Network Field Day 14.

Disclaimer

Anuta Networks page at TechFieldDay.com with videos of their presentations

Anuta's promise with NCX is to provide a vendor and platform agnostic network provisioning tool with a slick user interface and powerful management / provisioning features.

I was skeptical, especially after seeing the impossibly long list of supported platforms.

Impossible!

Network device configurations are complicated! They've got endless features, each of which is tied to the others in unpredictable ways. Sure, seasoned network ops folks have no problem hopping around a text configuration to discover the ways in which ACLs, prefix lists, route maps, class maps, service policies, interfaces, and whatnot relate to one another... But capturing these complicated relationships in a GUI? In a vendor-independent way?

I left the presentation with an entirely different perspective, and a desire to try it out on a network I manage. Seriously, I have a use case for this thing. Here's why I was wrong:

Not a general purpose UI

Okay, so it's a provisioning system, not a general purpose UI. Setup is likely nontrivial because it requires you to consider the types of services Continue reading

ERSPAN on Comware

The Comware documentation doesn't spell it out clearly, but it's possible to get ERSPAN-like functionality by using a GRE tunnel interface as the target for a local port mirror session. This is very handy for quick analysis of stuff that's not L2 adjacent to an analysis station.

First, create a local mirror session:

```
mirroring-group 1 local
```

Next, configure an unused physical interface for use by tunnel interfaces:

```
service-loopback group 1 type tunnel
interface <unused-interface>
 port service-loopback group 1
 quit
```

Now configure a GRE tunnel interface as the destination for the mirror group:

```
interface Tunnel0 mode gre
 source <whatever>
 destination <machine running wireshark>
 mirroring-group 1 monitor-port
 quit
```

Finally, configure the source interface(s):

```
interface <interesting-source-interface-1>
 mirroring-group 1 mirroring-port inbound
interface <interesting-source-interface-2>
 mirroring-group 1 mirroring-port inbound
```

Traffic from the source interfaces arrives at the analyzer with extra Ethernet/IP/GRE headers attached. Inside each GRE payload is the original frame as collected at a mirroring-group source interface. If the original frame plus the extra headers (14+20+4 = 38 bytes) exceeds the MTU, then the switch fragments the encapsulating packet. Nothing gets lost, and Wireshark handles it gracefully.
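The layering is simple enough to undo by hand. Here's a minimal Python sketch that recovers the mirrored frame from one captured packet, assuming an untagged outer Ethernet frame carrying an unfragmented IPv4 packet (fragments would need reassembly first). Rather than hard-coding 38 bytes, it reads the IPv4 header length and the GRE flag bits, in case the outer header carries options or the GRE header carries a checksum, key, or sequence number. The function name is illustrative; Wireshark already does all of this for you.

```python
import struct

ETH_HEADER_LEN = 14
ETHERTYPE_IPV4 = 0x0800
IPPROTO_GRE = 47


def decapsulate(packet: bytes) -> bytes:
    """Return the mirrored frame carried inside an Ethernet/IPv4/GRE packet."""
    (ethertype,) = struct.unpack("!H", packet[12:14])
    if ethertype != ETHERTYPE_IPV4:
        raise ValueError("outer frame is not IPv4")
    ip = packet[ETH_HEADER_LEN:]
    header_len = (ip[0] & 0x0F) * 4          # 20 bytes unless IP options are present
    if ip[9] != IPPROTO_GRE:
        raise ValueError("outer packet is not GRE")
    (total_len,) = struct.unpack("!H", ip[2:4])
    gre = ip[header_len:total_len]           # drops any Ethernet padding after the IP packet
    (flags,) = struct.unpack("!H", gre[0:2])
    gre_len = 4                              # flags/version + protocol type
    if flags & 0x8000:                       # C bit: checksum + reserved field
        gre_len += 4
    if flags & 0x2000:                       # K bit: key field
        gre_len += 4
    if flags & 0x1000:                       # S bit: sequence number field
        gre_len += 4
    return gre[gre_len:]
```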

ICMP Covert Channel for IOS

I wrote a quick-and-dirty covert channel via ICMP for IOS routers. The channel in question isn't super covert: it's all in plaintext and is quite noisy because it only delivers a single byte of message payload per ping. But it gets messages from routers to the listener via pings, and that was the objective. I expect it to be useful when diagnosing IPSec issues behind unknown overload NATs.

It lives here.

Invoke it on a router like this:

It will then send 14 pings (13 for the characters in 'testing 1 2 3' plus an <EOM> terminator) to the target machine.

The listener functions as a packet sniffer, so it requires root access. It prints out a line per incoming message, preceded by the sender's IP address:
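The framing idea (one byte per ping, then a terminator, reassembled per sender) can be sketched in Python. This is not the author's implementation: the terminator value, the output line format, and the function and class names are all hypothetical, and the sketch leaves out where in the ICMP packet each byte travels.

```python
from collections import defaultdict

EOM = 0x04  # hypothetical terminator value; the real tool's marker may differ


def encode(message):
    """Split a message into one byte value per ping, followed by the terminator."""
    return list(message.encode("ascii")) + [EOM]


class Listener:
    """Reassemble messages per sender and emit one line when the terminator arrives."""

    def __init__(self):
        self.buffers = defaultdict(bytearray)

    def receive(self, sender_ip, value):
        if value == EOM:
            text = self.buffers.pop(sender_ip, bytearray()).decode("ascii")
            return f"{sender_ip} {text}"
        self.buffers[sender_ip].append(value)
        return None
```

With this framing, `encode('testing 1 2 3')` yields 14 values (13 characters plus the terminator), matching the ping count above. Keying the buffers on the sender's address keeps messages from different routers from interleaving.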