Video: IPv6 RA Guard and Extension Headers

Last week’s IPv6 security video introduced the rogue IPv6 RA challenges and the usual countermeasure – RA guard. Unfortunately, IPv6 tends to be a wonderfully extensible protocol, creating all sorts of opportunities for nefarious actors and security researchers.

For years, networking vendors were furiously trying to plug the holes created by the academically minded IPv6 designers in love with fragmented extension headers. In the meantime, security researchers had absolutely no problem finding yet another weird combination of IPv6 headers that would bypass any IPv6 RA guard implementation until the IETF gave up and admitted one cannot have “infinitely extensible” and “secure” in the same sentence.

For more details, watch the video by Christopher Werny describing how one could use IPv6 extension headers to circumvent IPv6 RA guard.
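To make the bypass idea concrete, here is a minimal Python sketch, not any vendor's RA guard implementation. It compares a hypothetical filter that checks only the Next Header field of the fixed IPv6 header with one that walks the extension header chain. The function names and the hand-built packets are assumptions made for illustration, and the sketch ignores non-first fragments, which is exactly where real implementations ran into trouble.

```python
import struct

ICMPV6 = 58
FRAGMENT = 44
# Extension headers that share the (next header, header length) layout:
# Hop-by-Hop Options, Routing, Destination Options
GENERIC_EXT = {0, 43, 60}
ROUTER_ADVERTISEMENT = 134


def ipv6_packet(first_next_header: int, payload: bytes) -> bytes:
    """Build a fixed IPv6 header with zeroed addresses (illustration only)."""
    header = struct.pack("!IHBB", 6 << 28, len(payload), first_next_header, 255)
    return header + bytes(16) + bytes(16) + payload


def naive_is_ra(packet: bytes) -> bool:
    """Hypothetical filter: looks only at the fixed header's Next Header field."""
    return len(packet) > 40 and packet[6] == ICMPV6 and packet[40] == ROUTER_ADVERTISEMENT


def chain_walking_is_ra(packet: bytes) -> bool:
    """Follow the extension header chain before looking for the ICMPv6 type."""
    next_header, offset = packet[6], 40
    while offset + 2 <= len(packet):
        if next_header == ICMPV6:
            return packet[offset] == ROUTER_ADVERTISEMENT
        if next_header in GENERIC_EXT:
            ext_len = (packet[offset + 1] + 1) * 8  # length in 8-octet units
        elif next_header == FRAGMENT:
            ext_len = 8  # the fragment header has a fixed size
        else:
            return False  # some other upper-layer protocol
        next_header = packet[offset]
        offset += ext_len
    return False


# A 16-byte Router Advertisement body (checksum left at zero for brevity)
ra = bytes([ROUTER_ADVERTISEMENT, 0]) + bytes(14)
# An 8-byte Destination Options header carrying a single PadN option
dest_opts = bytes([ICMPV6, 0, 1, 4, 0, 0, 0, 0])

plain_ra = ipv6_packet(ICMPV6, ra)
hidden_ra = ipv6_packet(60, dest_opts + ra)

print(naive_is_ra(plain_ra), naive_is_ra(hidden_ra))
print(chain_walking_is_ra(plain_ra), chain_walking_is_ra(hidden_ra))
```

The naive check misses the RA hidden behind a single Destination Options header, while the chain-walking check still finds it. Once the header chain is spread across multiple fragments, even the second approach has nothing to inspect in the first fragment.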

MLAG Deep Dive: Layer-2 Flooding

In the previous blog post of the MLAG Technology Deep Dive series, we explored the intricacies of layer-2 unicast forwarding. Now let’s focus on the layer-2 BUM (broadcast, unknown unicast, multicast) flooding functionality of an MLAG system.

Our network topology will have two switches and five hosts, some connected to a single switch. That’s not a good idea in an MLAG environment, but even if you have a picture-perfect design with everything redundantly connected, you will have to deal with it after a single link failure.

Beware of Vendors Bringing White Papers

A few weeks ago I wrote about tradeoffs vendors have to make when designing data center switching ASICs, followed by another blog post discussing how to select the ASICs for various roles in data center fabrics.

You REALLY SHOULD read the two blog posts before moving on; here’s the buffer-related TL&DR for those of you ignoring my advice ;)

When You Find Yourself on Mount Stupid

The early October 2021 Facebook outage generated a predictable phenomenon – couch epidemiologists became experts in little-known Bridging the Gap Protocol (BGP), including its Introvert and Extrovert variants. Unfortunately, I also witnessed several unexpected trips to Mount Stupid by people who should have known better.

To set the record straight: everyone’s been there, and the more vocal you tend to be on social media (including mailing lists), the more probable it is that you’ll take a wrong turn and end up there. What matters is how gracefully you descend and what you’ve learned on the way back.

netsim-tools: Combining VLANs with VRFs

For the last two weeks, we focused on the access VLAN and VLAN trunk implementation in netsim-tools. Can we combine them with VRFs? Of course.

The trick is very simple: attributes within a VLAN definition become attributes of VLAN interfaces. Add a vrf attribute to a VLAN and you get all VLAN interfaces created for that VLAN in the corresponding VRF. Can’t get any easier, can it?

How about extending our VLAN trunk lab topology with VRFs? We’ll put the red VLAN in the red VRF and the blue VLAN in the blue VRF.

netlab: Combining VLANs with VRFs

For the last two weeks, we focused on the netlab implementation of access VLANs and VLAN trunks. Can we combine them with VRFs? Of course.

The trick is very simple: attributes within a VLAN definition become attributes of VLAN interfaces. Add a vrf attribute to a VLAN and you get all VLAN interfaces created for that VLAN in the corresponding VRF. Can’t get any easier, can it?

How about extending our VLAN trunk lab topology with VRFs? We’ll put the red VLAN in the red VRF and the blue VLAN in the blue VRF.
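The attribute-inheritance idea can be sketched in a few lines of Python. This is not netlab's code or its data model: the dictionary layout, the VLAN IDs, the interface naming, and the `vlan_interfaces` helper are all hypothetical, chosen only to show how a `vrf` attribute set on a VLAN could end up on every VLAN interface created for it.

```python
from copy import deepcopy

# Hypothetical VLAN definitions: anything beyond "id" is treated as an
# interface attribute, including the VRF the VLAN belongs to
vlans = {
    "red": {"id": 1000, "vrf": "red"},
    "blue": {"id": 1001, "vrf": "blue"},
}


def vlan_interfaces(node: str, node_vlans: list[str], vlans: dict) -> list[dict]:
    """Create one VLAN interface per VLAN, inheriting the VLAN's attributes."""
    interfaces = []
    for name in node_vlans:
        intf = deepcopy(vlans[name])      # VLAN attributes become interface attributes
        vlan_id = intf.pop("id")          # the ID is only used to name the interface
        intf["name"] = f"Vlan{vlan_id}"
        intf["node"] = node
        interfaces.append(intf)
    return interfaces


for intf in vlan_interfaces("s1", ["red", "blue"], vlans):
    print(intf["node"], intf["name"], "-> VRF", intf["vrf"])
```

Because the interface is built from a copy of the VLAN definition, adding another attribute to the VLAN would show up on its interfaces without touching the helper.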

Video: Rogue IPv6 RA Challenges

IPv6 security-focused presentations were usually an awesome opportunity to lean back and enjoy another round of whack-a-mole, often starting with an attacker using IPv6 Router Advertisements to divert traffic (see also: getting bored at Brussels airport).

Rogue IPv6 RA challenges and the corresponding countermeasures are thus a mandatory part of any IPv6 security training, and Christopher Werny did a great job describing them in the IPv6 security webinar.

Using Custom Vagrant Boxes with netsim-tools

A friend of mine started using Vagrant with libvirt years ago (it was his enthusiasm that piqued my interest in this particular setup, eventually resulting in netsim-tools). Not surprisingly, he’s built Vagrant boxes for any device he ever encountered, created quite a collection that way, and would like to use them with netsim-tools.

While I didn’t think about this particular use case when programming the netsim-tools virtualization provider interface, I decided very early on that:

- Everything worth changing will be specified in the system defaults.

- You will be able to change system defaults in the topology file or user defaults.

Using Custom Vagrant Boxes with netlab

A friend of mine started using Vagrant with libvirt years ago (it was his enthusiasm that piqued my interest in this particular setup, eventually resulting in netlab). Not surprisingly, he’s built Vagrant boxes for any device he ever encountered, created quite a collection that way, and would like to use them with netlab.

While I didn’t think about this particular use case when programming the netlab virtualization provider interface, I decided very early on that:

- Everything worth changing will be specified in the system defaults.

- You will be able to change system defaults in the topology file or user defaults.

Select the Best Switching ASIC For the Job

Last week I described some of the data center switching ASIC design tradeoffs and the ASIC families Broadcom created to fit somewhere in that multi-dimensional space.

Next step: how could you design your data center fabric to make the most out of them? To keep things simple, we’ll build a typical leaf-and-spine fabric with a WAN edge layer (sometimes called border leaf switches).

MLAG Deep Dive: Dynamic MAC Learning

In the first blog post of the MLAG Technology Deep Dive series, we explored the components of an MLAG system and the fundamental control plane requirements.

This post focuses on a major building block of the layer-2 data plane functionality: MAC learning. We’ll keep using the same network topology with two switches and five hosts, and assume our system tries its best to implement hot-potato switching (sending the frames toward the destination MAC address on the shortest possible path).
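As a baseline for that discussion, here is a minimal Python sketch of dynamic MAC learning on a single standalone switch. It is an illustration under simplifying assumptions: the `LearningSwitch` class and the port names are hypothetical, and there is no MLAG peer link, aging, or VLAN awareness.

```python
class LearningSwitch:
    """Single-switch MAC learning: learn on ingress, forward or flood on egress."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}  # MAC address -> port it was last seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Return the list of ports the frame is sent to."""
        self.mac_table[src_mac] = in_port           # learn (or refresh) the source MAC
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            return sorted(self.ports - {in_port})   # unknown destination: flood
        if out_port == in_port:
            return []                               # destination is behind the ingress port
        return [out_port]                           # known destination: single port


switch = LearningSwitch(["p1", "p2", "p3"])
print(switch.receive("p1", "mac-a", "mac-b"))  # mac-b not learned yet
print(switch.receive("p2", "mac-b", "mac-a"))  # mac-a was learned on p1
print(switch.receive("p1", "mac-a", "mac-b"))  # mac-b was learned on p2
```

An MLAG pair has to get the same result with two MAC tables and a peer link between them, which is where the simple learn-on-ingress rule stops being enough.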

netsim-tools VLAN Trunk Example

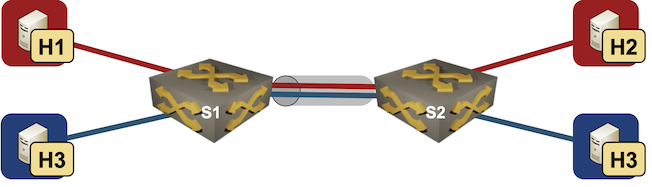

Last week I described how easy it is to use access VLANs in netsim-tools. Next step: VLAN trunks.

We’ll add two Linux hosts to the lab topology used in the previous blog post, resulting in two switches, two Linux hosts in the red VLAN, and two Linux hosts in the blue VLAN.

netlab VLAN Trunk Example

Last week I described how easy it is to use access VLANs in netlab. Next step: VLAN trunks.

We’ll add two Linux hosts to the lab topology used in the previous blog post, resulting in two switches, two Linux hosts in the red VLAN, and two Linux hosts in the blue VLAN.

Lab topology

Video: Network Address Scopes

When defining network addresses in IEN 19, John Shoch said:

Addresses must, therefore, be meaningful throughout the domain, and must be drawn from some uniform address space.

But what is a domain? Welcome to the address scope discussion ;)