Extending relational query processing with ML inference

Extending relational query processing with ML inference, Karanasos et al., CIDR’20

This paper provides a little more detail on the concrete work that Microsoft is doing to embed machine learning inference inside an RDBMS, as part of their vision for Enterprise Grade Machine Learning. The motivation is not that inference will perform better inside the database, but that the database is the best place to take advantage of enterprise features (transactions, security, auditing, HA, and so on). Given the desire to keep enterprise data within the database, and to treat models as data also, the question is: can we do inference in the database with acceptable performance? Raven is the system that Microsoft built to explore this question, and the answer is a resounding yes.

… based on interactions with enterprise customers, we expect that storage and inference of ML models will be subject to the same scrutiny and performance requirements of sensitive/mission-critical operational data. When it comes to data, database management systems (DBMSs) have been the trusted repositories for the enterprise… We thus propose to store and serve ML models from within the DBMS…

The authors don’t just mean farming inference out to an external process from within the RDBMS, …

Cloudy with a high chance of DBMS: a 10-year prediction for enterprise-grade ML

Cloudy with a high chance of DBMS: a 10-year prediction for enterprise-grade ML, Agrawal et al., CIDR’20

"Cloudy with a high chance of DBMS" is a fascinating vision paper from a group of experts at Microsoft, looking at the transition of machine learning from being primarily the domain of large-scale, high-volume consumer applications to being an integral part of everyday enterprise applications.

When it comes to leveraging ML in enterprise applications, especially in regulated environments, the level of scrutiny for data handling, model fairness, user privacy, and debuggability will be substantially higher than in the first wave of ML applications.

Throughout the paper, this emerging class of applications is referred to as EGML apps: Enterprise Grade Machine Learning. And there’s going to be a lot of them!

Enterprises in every industry are developing strategies for digitally transforming their businesses at every level. The core idea is to continuously monitor all aspects of the business, actively interpret the observations using advanced data analysis – including ML – and integrate the learnings into appropriate actions that improve business outcomes. We predict that in the next 10 years, hundreds of thousands of small teams will build millions of ML-infused applications – …

Migrating a privacy-safe information extraction system to a Software 2.0 design

Migrating a privacy-safe information extraction system to a software 2.0 design, Sheng, CIDR’20

This is a comparatively short (7 pages) but very interesting paper detailing the migration of a software system to a ‘Software 2.0’ design. Software 2.0, in case you missed it, is a term coined by Andrej Karpathy to describe software in which key components are implemented by neural networks. Since we’ve recently spent quite a bit of time looking at the situations where interpretable models and simple rules are highly desirable, this case study makes a nice counterpoint: it describes a system that started out with hand-written rules, which then over time grew complex and hard to maintain until meaningful progress had pretty much slowed to a halt. (A set of rules that complex wouldn’t have been great from the perspective of interpretability either). Replacing these rules with a machine learned component dramatically simplified the code base (45 Kloc deleted) and set the system back onto a growth and improvement trajectory.

A really interesting thing happens when you go from developing a Software 1.0 (i.e., traditional software) to a Software 2.0 system. In Software 1.0 we spend …

Programs, life cycles, and laws of software evolution

Programs, life cycles, and laws of software evolution, Lehman, Proc. IEEE, 1980

Today’s paper came highly recommended by Kevlin Henney and Nat Pryce in a Twitter thread last week, thank you both!

The footnotes show that the manuscript for this paper was submitted almost exactly 40 years ago – on the 27th February 1980. The problems it describes though (and that the community had already been wrestling with for a couple of decades) seem as fresh and relevant as ever. Is there some kind of Lindy effect for problems as there is for published works? I.e., should we expect to still be grappling with these issues for at least another 60 years? In this particular instance at least, it seems likely.

As computers play an ever larger role in society and the life of the individual, it becomes more and more critical to be able to create and maintain effective, cost-effective, and timely software. For more than two decades, however, the programming fraternity, and through them the computer-user community, has faced serious problems achieving this.

On programming, projects, and products

What does a programmer do? A programmer’s task, according to Lehman, is to "state an algorithm …

Let’s Encrypt: an automated certificate authority to encrypt the entire web

Let’s encrypt: an automated certificate authority to encrypt the entire web, Aas et al., CCS’19

This paper tells the story of Let’s Encrypt, from its early beginnings in 2012/13 all the way to becoming the world’s largest HTTPS Certificate Authority (CA) today – accounting for more currently valid certificates than all other browser-trusted CAs combined. Beyond the functionality that Let’s Encrypt provides, the story stands out to me for two key ingredients. Firstly, whereas normally we trade off between security and ease-of-use, Let’s Encrypt made the web more secure through ease-of-use. Secondly, Let’s Encrypt managed to find a sustainable funding model for a combination of an open source project and free online service, as compared to the more normal pattern which sadly seems to involve running a small number of beneficent maintainers into the ground.

Since its launch in December 2015, Let’s Encrypt has steadily grown to become the largest CA in the Web PKI by certificates issued and the fourth largest known CA by Firefox Beta TLS full handshakes. As of January 21, 2019, the CA had issued a total of 538M certificates for 223M unique FQDNs… Let’s Encrypt has been responsible for significant growth in HTTPS deployment.

Watching you watch: the tracking ecosystem of over-the-top TV streaming devices

Watching you watch: the tracking ecosystem of over-the-top TV streaming devices, Moghaddam et al., CCS’19

The results from this paper are all too predictable: channels on Over-The-Top (OTT) streaming devices are insecure and riddled with privacy leaks. The authors quantify the scale of the problem, and note that users have even less viable defence mechanisms than they do on web and mobile platforms. When you watch TV, the TV is watching you.

In this paper, we examine the advertising and tracking ecosystems of Over-The-Top ("OTT") streaming devices, which deliver Internet-based video content to traditional TVs/display devices. OTT devices refer to a family of services and devices that either directly connect to a TV (e.g., streaming sticks and boxes) or enable functionality within a TV (e.g. smart TVs) to facilitate the delivery of Internet-based video content.

The study focuses on Roku and Amazon Fire TV, which together account for between 59% and 65% of the global market. The top 1000 channels from each service are analysed using a custom-built crawling engine, and traffic is intercepted where possible using mitmproxy.

How they did it

For each service, a list of the top 1000 channels was compiled, as …

Cloudburst: stateful functions-as-a-service

Cloudburst: stateful functions-as-a-service, Sreekanti et al., arXiv 2020

Today’s paper choice is a fresh-from-the-arXivs take on serverless computing from the RISELab at Berkeley, addressing some of the limitations outlined in last year’s ‘Berkeley view on serverless computing.’ Stateless is fine until you need state, at which point the coarse-grained solutions offered by current platforms limit the kinds of application designs that work well. Last week we looked at a function shipping solution to the problem; Cloudburst uses the more common data shipping to bring data to caches next to function runtimes (though you could also make a case that the scheduling algorithm placing function execution in locations where the data is cached is a flavour of function-shipping too).

Given the simplicity and economic appeal of FaaS, it is interesting to explore designs that preserve the autoscaling and operational benefits of current offerings, while adding performant, cost-efficient and consistent shared state and communication.

The key ingredients of Cloudburst are a highly-scalable key-value store for persistent state (Anna), local caches co-located with function execution environments, and cache-consistency protocols to preserve developer sanity while data is moved in and out of those caches. Oh, and there’s a scheduler …

POTS: protective optimization technologies

POTS: Protective optimization technologies, Kulynych, Overdorf et al., arXiv 2019

With thanks to @TedOnPrivacy for recommending this paper via Twitter.

Last time out we looked at fairness in the context of machine learning systems, coming to the realisation that you can’t define ‘fair’ solely from the perspective of an algorithm and the data it is trained on. Start pulling on that thread, and you end up with papers such as ‘Delayed impact of fair machine learning’ that consider the longer term implications for groups the intention was to protect, when systems are deployed and interact with the real world creating feedback loops in a causal graph. Today’s paper looks even wider, encompassing the total impact of an algorithm, as part of a system, embedded in an environment. Not only for the groups explicitly considered by that algorithm, but also the impact on groups outside of consideration (the ‘utility function’) of the service provider. For example, navigational systems such as Waze can have negative impacts on communities near highways that they route much more traffic through, and Airbnb may have perfectly fair algorithms from the perspective of participants in the Airbnb ecosystem, whilst also having damaging consequences …

The measure and mismeasure of fairness: a critical review of fair machine learning

The measure and mismeasure of fairness: a critical review of fair machine learning, Corbett-Davies & Goel, arXiv 2018

With many thanks to Ben Fried and the ACM Queue editorial board for the paper recommendation.

We’ve visited the topic of fairness in the context of machine learning several times on The Morning Paper (see e.g. [1], [2], [3], [4]). I’m still picking up new insights every time I revisit the topic though, and today’s paper choice is no exception.

Between 1910 and 1913 Russell & Whitehead published Principia Mathematica, with the goal of providing a solid foundation for all of mathematics. In 1931 Gödel’s Incompleteness Theorem shattered the dream, showing that for any consistent axiomatic system expressive enough to describe arithmetic there will always be true statements that cannot be proven within the system. In case you’re wondering where on earth I’m going with this… it’s a very stretched analogy I’ve been playing with in my mind. One premise of many models of fairness in machine learning is that you can measure (‘prove’) fairness of a machine learning model from within the system – i.e. from properties of the …

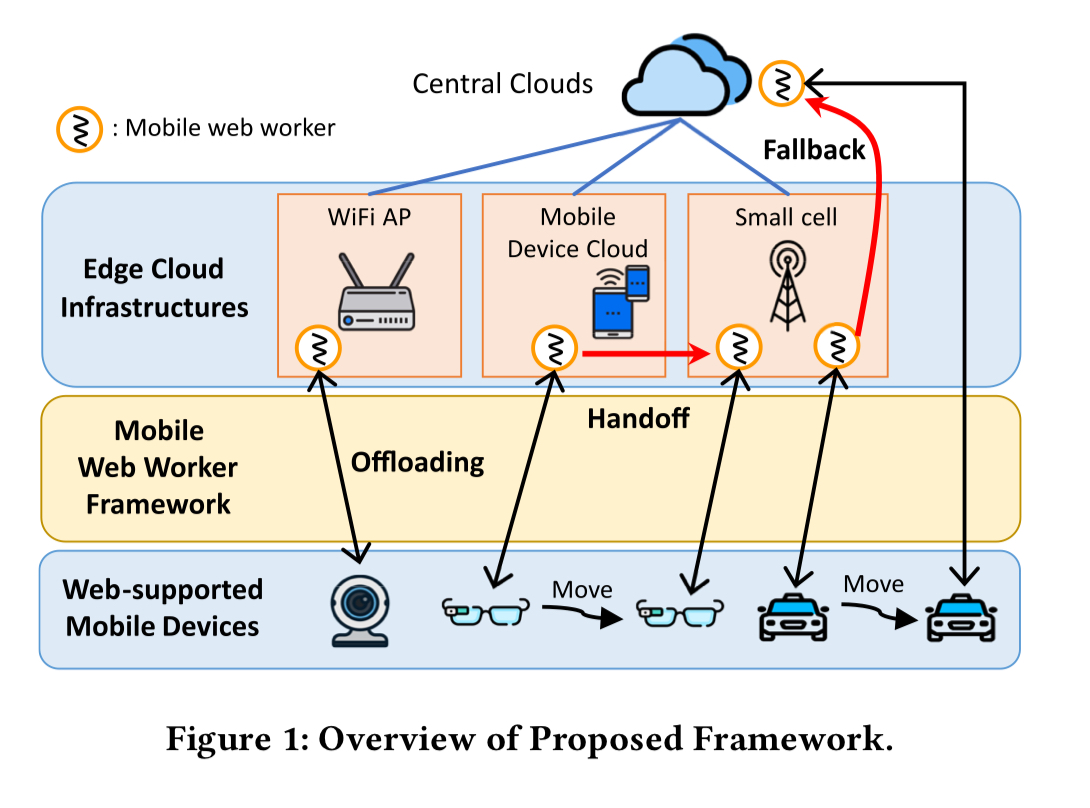

Seamless offloading of web app computations from mobile device to edge clouds via HTML5 Web Worker migration

Seamless offloading of web app computations from mobile device to edge clouds via HTML5 web worker migration, Jeong et al., SoCC’19

This paper caught my eye for its combination of an intriguing idea (opportunistic offload of computation from mobile devices to the edge) and the elegance of the way the web worker interface supports this use case. It’s live migration – but for web workers instead of the more usual VMs or containers.

Why would we want to live migrate web workers?

Emerging mobile applications, such as mobile cloud gaming or augmented reality, require strict latency constraints as well as high computer power… A survey on the latency of games has reported that less than ~50ms of network latency is preferred for time-critical games, which is hard to achieve with a traditional cloud system where computing servers are located in datacenters far from clients…

So you’ve got mobile devices without the computing power needed to deliver a great experience, and cloud computing that has all the needed power but is too far away. Edge servers are the middle ground – more compute power than a mobile device, but with latency of just a few ms. The kind of …

Narrowing the gap between serverless and its state with storage functions

Narrowing the gap between serverless and its state with storage functions, Zhang et al., SoCC’19

"Narrowing the gap" was runner-up in the SoCC’19 best paper awards. While being motivated by serverless use cases, there’s nothing especially serverless about the key-value store, Shredder, this paper reports on. Shredder’s novelty lies in a new implementation of an old idea. On the mainframe we used to call it function shipping. In databases you probably know it as stored procedures. The advantages of function shipping (as opposed to the data shipping we would normally do in a serverless application) are that (a) you can avoid moving potentially large amounts of data over the network in order to process it, and (b) you might be able to collapse multiple remote calls into one if the function traverses structures that otherwise could not be fetched in a single call.
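To make the two advantages concrete, here is a minimal sketch contrasting data shipping with function shipping. It assumes a toy in-memory store; `ToyStore`, `get`, `invoke`, and the counters are hypothetical names for illustration only and are not Shredder’s API.

```python
from typing import Callable, Dict, List

Data = Dict[str, List[int]]


class ToyStore:
    """Hypothetical in-memory stand-in for a remote key-value store."""

    def __init__(self, data: Data) -> None:
        self._data = data
        self.round_trips = 0  # number of simulated remote calls
        self.items_sent = 0   # number of values that crossed the simulated network

    def get(self, key: str) -> List[int]:
        # Data shipping: the whole value travels to the caller.
        self.round_trips += 1
        value = self._data[key]
        self.items_sent += len(value)
        return list(value)

    def invoke(self, fn: Callable[[Data], int]) -> int:
        # Function shipping: fn runs next to the data, only its result travels.
        self.round_trips += 1
        result = fn(self._data)
        self.items_sent += 1
        return result


def total_by_data_shipping(store: ToyStore, keys: List[str]) -> int:
    # One remote call per key, then compute on the client.
    return sum(sum(store.get(k)) for k in keys)


def total_by_function_shipping(store: ToyStore, keys: List[str]) -> int:
    # A single remote call that traverses all keys inside the store.
    return store.invoke(lambda data: sum(sum(data[k]) for k in keys))


contents = {"a": [1, 2, 3], "b": [4, 5]}
data_store = ToyStore(dict(contents))
fn_store = ToyStore(dict(contents))

data_total = total_by_data_shipping(data_store, ["a", "b"])
fn_total = total_by_function_shipping(fn_store, ["a", "b"])

print(data_total, data_store.round_trips, data_store.items_sent)
print(fn_total, fn_store.round_trips, fn_store.items_sent)
```

Both approaches compute the same total, but the function-shipping version makes one simulated call and moves a single value, which mirrors points (a) and (b) above. A real store would also need isolation between tenants, which this sketch ignores.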

Shredder is "a low-latency multi-tenant cloud store that allows small units of computation to be performed directly within storage nodes."

Running end-user compute inside the datastore is not without its challenges of course. From an operator perspective it makes it harder to follow the classic cloud-native design in which a global storage …

Reverb: speculative debugging for web applications

Reverb: speculative debugging for web applications, Netravali & Mickens, SoCC’19

This week we’ll be looking at a selection of papers from the 2019 edition of the ACM Symposium on Cloud Computing (SoCC). First up is Reverb, which won a best paper award for its record and replay debugging framework that accommodates speculative edits (i.e., candidate bug-fixes) during replay. In the context of the papers we’ve been looking at recently, and for a constrained environment, Reverb is helping its users to form an accurate mental model of the system state, and to form and evaluate hypotheses in-situ.

Reverb has three features which enable a fundamentally more powerful debugging experience. First, Reverb tracks precise value provenance, allowing a developer to quickly identify the reads and writes to JavaScript state that affected a particular variable’s value. Second, Reverb enables speculative bug fix analysis… Third, Reverb supports wide-area debugging for applications whose server-side components use event-driven architectures.

The problem

Reverb’s goal is to aid in debugging the client-side of JavaScript web applications. These are "pervasively asynchronous and event-driven" which makes it notoriously difficult to figure out what’s going on. See e.g. "Debugging data flows …

Trade-offs under pressure: heuristics and observations of teams resolving internet service outages (Part 2)

Trade-offs under pressure: heuristics and observations of teams resolving internet service outages, Allspaw, Masters thesis, Lund University 2015

This is part 2 of our look at Allspaw’s 2015 master’s thesis (here’s part 1). Today we’ll be digging into the analysis of an incident that took place at Etsy on December 4th, 2014.

- 1:00pm Eastern Standard Time the Personalisation / Homepage Team for Etsy are in a conference room kicking off a lunch-and-learn session on the personalised feed feature on the Etsy.com homepage

- 1:06pm reports of the personalised homepage having issues start appearing from multiple sources. Instead of the personalised feed, the site has fallen back to serving a generic ‘trending items’ feed. This is a big deal during the important holiday shopping season. Members of the team begin diagnosing the issue using the #sysops and #warroom internal IRC channels.

- 1:18pm a key observation was made that an API call to populate the homepage sidebar saw a huge jump in latency

- 1:28pm an engineer reported that the profile of errors for a specific API method matched the pattern of sidebar errors

- 1:32pm the API errors were narrowed down to requests for data on a specific single shop. The …

Trade-offs under pressure: heuristics and observations of teams resolving internet service outages (Part 1)

Trade-offs under pressure: heuristics and observations of teams resolving internet service outages, Allspaw, Masters thesis, Lund University, 2015

Following on from the STELLA report, today we’re going back to the first major work to study the human and organisational side of incident management in business-critical Internet services: John Allspaw’s 2015 Masters thesis. The document runs to 87 pages, so I’m going to cover the material across two posts. Today we’ll be looking at the background and literature review sections, which place the activity in a rich context and provide many jumping off points for going deeper in areas of interest to you. In the next post we’ll look at the detailed analysis of how a team at Etsy handled a particular incident on December 4th 2014, to see what we can learn from it.

Why is this even a thing?

Perhaps it seems obvious that incident management is hard. But it’s worth recapping some of the reasons why this is the case, and what makes it an area worthy of study.

The operating environment of Internet services contains many of the ingredients necessary for ambiguity and high consequences for mistakes in the diagnosis and response of an adverse …

STELLA: report from the SNAFU-catchers workshop on coping with complexity

STELLA: report from the SNAFU-catchers workshop on coping with complexity, Woods 2017, Coping with Complexity workshop

“Coping with complexity” is about as good a three-word summary of the systems and software challenges facing us over the next decade as I can imagine. Today’s choice is a report from a 2017 workshop convened with that title, and recommended to me by John Allspaw – thank you John!

Workshop context

The workshop brought together about 20 experts from a variety of different companies to share and analyse the details of operational incidents (and their postmortems) that had taken place at their respective organisations. Six themes emerged from those discussions that sit at the intersection of resilience engineering and IT. These are all very much concerned with the interactions between humans and complex software systems, along the lines we examined in Ironies of Automation and Ten challenges for making automation a ‘team player’ in joint human-agent activity.

There’s a great quote on the very front page of the report that is worth the price of admission on its own:

Woods’ Theorem: As the complexity of a system increases, the accuracy of any single agent’s own model of that system decreases rapidly.

Remember …

Synthesizing data structure transformations from input-output examples

Synthesizing data structure transformations from input-output examples, Feser et al., PLDI’15

The Programmatically Interpretable Reinforcement Learning paper that we looked at last time out contained this passing comment coupled with a link to today’s paper choice:

It is known from prior work that such [functional] languages offer natural advantages in program synthesis.

That certainly caught my interest. The context for the quote is synthesis of programs by machines, but when I’m programming, I’m also engaged in the activity of program synthesis! So a work that shows functional languages have an advantage for programmatic synthesis might also contain the basis for an argument for natural advantages to the functional style of programming. I didn’t find that here. We can however say that this paper shows “functional languages are well suited to program synthesis.”

Never mind, because the ideas in the paper are still very connected to a question I’m fascinated by at the moment: “how will we be developing software systems over this coming decade?”. There are some major themes to be grappled with: system complexity, the consequences of increasing automation and societal integration, privacy, ethics, security, trust (especially in supply chains), interpretability vs black box models, …

Programmatically interpretable reinforcement learning

Programmatically interpretable reinforcement learning, Verma et al., ICML 2018

Being able to trust (interpret, verify) a controller learned through reinforcement learning (RL) is one of the key challenges for real-world deployments of RL that we looked at earlier this week. It’s also an essential requirement for agents in human-machine collaborations (i.e., all deployments at some level) as we saw last week. Since reading some of Cynthia Rudin’s work last year I’ve been fascinated with the notion of interpretable models. I believe there is a large set of use cases where an interpretable model should be the default choice. There are so many deployment benefits, even putting aside any ethical or safety concerns.

So how do you make an interpretable model? Today’s paper choice is the third paper we’ve looked at along these lines (following CORELS and RiskSlim), enough for a recognisable pattern to start to emerge. The first step is to define a language – grammar and associated semantics – in which the ultimate model to be deployed will be expressed. For CORELS this consists of simple rule-based expressions, and for RiskSlim it is scoring sheets. For Programmatically Interpretable Reinforcement Learning (PIRL) as we shall …

Challenges of real-world reinforcement learning

Challenges of real-world reinforcement learning, Dulac-Arnold et al., ICML’19

Last week we looked at some of the challenges inherent in automation and in building systems where humans and software agents collaborate. When we start talking about agents, policies, and modelling the environment, my thoughts naturally turn to reinforcement learning (RL). Today’s paper choice sets out some of the current (additional) challenges we face getting reinforcement learning to work well in many real-world systems.

We consider control systems grounded in the physical world, optimization of software systems, and systems that interact with users such as recommender systems and smart phones. … RL methods have been shown to be effective on a large set of simulated environments, but uptake in real-world problems has been much slower.

Why is this? The authors posit that there’s a meaningful gap between the tightly controlled, simulation-friendly research settings where many RL systems do well and the messy realities and constraints of real-world systems. For example, there may be no good simulator available, exploration may be curtailed by strong safety constraints, and feedback cycles for learning may be slow.

This lack of available simulators means learning must be done using …

Ten challenges for making automation a ‘team player’ in joint human-agent activity

Ten challenges for making automation a ‘team player’ in joint human-agent activity, Klein et al., IEEE Computer Nov/Dec 2004

With thanks to Thomas Depierre for the paper suggestion.

Last time out we looked at some of the difficulties inherent in automating control systems. However much we automate, we’re always ultimately dealing with some kind of human/machine collaboration. Today’s choice looks at what it takes for machines to participate productively in collaborations with humans. Written in 2004, the ideas remind me very much of Mark Burgess’ promise theory, which was also initially developed around the same time.

Let’s work together

If a group of people (or people and machines) are going to coordinate with each other to achieve a set of shared ends then there are four basic requirements that must be met to underpin their joint activity:

- They must agree to work together (the authors call this agreement a Basic Compact).

- They must be mutually predictable in their actions.

- They must be mutually directable.

- They must maintain common ground.

A basic compact is…

… an agreement (often tacit) to facilitate coordination, work toward shared goals, and prevent breakdowns in team coordination. This Compact involves a commitment …

Ironies of automation

Ironies of automation, Bainbridge, Automatica, Vol. 19, No. 6, 1983

With thanks to Thomas Depierre for the paper recommendation.

Making predictions is a dangerous game, but as we look forward to the next decade a few things seem certain: increasing automation, increasing system complexity, faster processing, more inter-connectivity, and an even greater human and societal dependence on technology. What could possibly go wrong? Automation is supposed to make our lives easier, but ~~if~~ when it goes wrong it can put us in a very tight spot indeed. Today’s paper choice, ‘Ironies of Automation’ explores these issues. Originally published in this form in 1983, its lessons are just as relevant today as they were then.

The central irony (‘combination of circumstances, the result of which is the direct opposite of what might be expected’) referred to in this paper is that the more we automate, and the more sophisticated we make that automation, the more we become dependent on a highly skilled human operator.

Automated systems need highly skilled operators

Why do we automate?

The designer’s view of the human operator may be that the operator is unreliable and inefficient, so should be eliminated from the system.

An automated system …