Optimized risk scores

Optimized risk scores Ustun & Rudin, KDD’17

On Monday we looked at the case for interpretable models, and in Wednesday’s edition of The Morning Paper we looked at CORELS which produces provably optimal rule lists for categorical assessments. Today we’ll be looking at RiskSLIM, which produces risk score models together with a proof of optimality.

A risk score model is a very simple points-based system designed to be used by (and understood by!) humans. Such models are widely used in e.g. medicine and criminal justice. Traditionally they have been built by panels of experts or by combining multiple heuristics. Here’s an example model for the CHADS2 score assessing stroke risk.
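To make the points-based idea concrete, here is a minimal sketch of CHADS2 as it is commonly stated in the clinical literature: one point each for congestive heart failure, hypertension, age 75 or over, and diabetes, and two points for a prior stroke or TIA. The `Patient` class, its field names, and `chads2_score` are my own illustrative choices, not anything taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    # Hypothetical field names, chosen for this illustration only.
    congestive_heart_failure: bool
    hypertension: bool
    age: int
    diabetes: bool
    prior_stroke_or_tia: bool


def chads2_score(p: Patient) -> int:
    """Sum the CHADS2 points for one patient (range 0 to 6)."""
    score = 0
    score += 1 if p.congestive_heart_failure else 0  # C
    score += 1 if p.hypertension else 0              # H
    score += 1 if p.age >= 75 else 0                 # A
    score += 1 if p.diabetes else 0                  # D
    score += 2 if p.prior_stroke_or_tia else 0       # S2 (worth two points)
    return score


if __name__ == "__main__":
    example = Patient(
        congestive_heart_failure=False,
        hypertension=True,
        age=78,
        diabetes=False,
        prior_stroke_or_tia=True,
    )
    # hypertension (1) + age >= 75 (1) + prior stroke/TIA (2)
    print(chads2_score(example))
```

The whole model is a handful of small integer additions, which is what lets a clinician apply it by hand and see exactly why a patient received a given score.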

Even when you don’t consider interpretability as a goal (which you really should!), “doing the simplest thing which could possibly work” is always a good place to start. The fact that CORELS and RiskSLIM come with an optimality guarantee given the constraints fed to them on model size etc. also means you can make informed decisions about model complexity vs performance trade-offs if a more complex model looks like it may perform better. It’s a refreshing change of mindset to shift from “finding an Continue reading

Learning certifiably optimal rule lists for categorical data

Learning certifiably optimal rule lists for categorical data Angelino et al., JMLR 2018

Today we’re taking a closer look at CORELS, the Certifiably Optimal RulE ListS algorithm that we encountered in Rudin’s arguments for interpretable models earlier this week. We’ve been able to create rule lists (decision trees) for a long time, e.g. using CART, C4.5, or ID3, so why do we need CORELS?

…despite the apparent accuracy of the rule lists generated by these algorithms, there is no way to determine either if the generated rule list is optimal or how close it is to optimal, where optimality is defined with respect to minimization of a regularized loss function. Optimality is important, because there are societal implications for lack of optimality.
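As a rough illustration of what "a regularized loss function" means for a rule list, the sketch below evaluates an ordered list of if-then rules and scores it as misclassification error plus a penalty per rule, which is how I understand the CORELS objective to be defined. The toy data, the feature names, and the value of `lam` are all made up for the example, and this only scores a given rule list; it does not perform the CORELS search.

```python
from typing import Callable, Dict, List, Tuple

Record = Dict[str, int]
# A rule is (condition, predicted label); rules are tried in order.
Rule = Tuple[Callable[[Record], bool], int]


def predict(rules: List[Rule], default: int, record: Record) -> int:
    """Return the label of the first matching rule, else the default."""
    for condition, label in rules:
        if condition(record):
            return label
    return default


def regularized_loss(rules: List[Rule], default: int,
                     data: List[Record], labels: List[int],
                     lam: float) -> float:
    """Misclassification rate plus lam times the number of rules."""
    errors = sum(predict(rules, default, x) != y
                 for x, y in zip(data, labels))
    return errors / len(data) + lam * len(rules)


# Toy, invented data: two features and a binary label.
data = [{"a": 20, "b": 3}, {"a": 22, "b": 0},
        {"a": 45, "b": 4}, {"a": 50, "b": 0}]
labels = [1, 0, 1, 0]

accurate = [(lambda r: r["b"] > 2, 1)]    # classifies all four correctly
inaccurate = [(lambda r: r["a"] < 25, 1)]  # gets two of four wrong
padded = accurate + [(lambda r: r["a"] > 40, 0)]  # same accuracy, extra rule

if __name__ == "__main__":
    for name, rules in [("accurate", accurate),
                        ("inaccurate", inaccurate),
                        ("padded", padded)]:
        print(name, regularized_loss(rules, 0, data, labels, lam=0.01))
```

The penalty term is what makes "optimal" well defined: between two rule lists with the same accuracy, the shorter one scores better, so the search has a reason to prefer the more interpretable model.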

Rudin proposed a public policy that for high-stakes decisions no black-box model should be deployed when there exists a competitive interpretable model. For the class of logic problems addressable by CORELS, CORELS’ guarantees provide a technical foundation for such a policy:

…we would like to find both a transparent model that is optimal within a particular pre-determined class of models and produce a certificate of its optimality, with respect Continue reading

Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead

Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead Rudin et al., arXiv 2019

With thanks to Glyn Normington for pointing out this paper to me.

It’s pretty clear from the title alone what Cynthia Rudin would like us to do! The paper is a mix of technical and philosophical arguments and comes with two main takeaways for me: firstly, a sharpening of my understanding of the difference between explainability and interpretability, and why the former may be problematic; and secondly some great pointers to techniques for creating truly interpretable models.

There has been an increasing trend in healthcare and criminal justice to leverage machine learning (ML) for high-stakes prediction applications that deeply impact human lives… The lack of transparency and accountability of predictive models can have (and has already had) severe consequences…

Defining terms

A model can be a black box for one of two reasons: (a) the function that the model computes is far too complicated for any human to comprehend, or (b) the model may in actual fact be simple, but its details are proprietary and not available for inspection.

In explainable ML we make predictions using a complicated Continue reading

Task-based effectiveness of basic visualizations

Task-based effectiveness of basic visualizations Saket et al., IEEE Transactions on Visualization and Computer Graphics 2019

So far this week we’ve seen how to create all sorts of fantastic interactive visualisations, and taken a look at what data analysts actually do when they do ‘exploratory data analysis.’ To round off the week today’s choice is a recent paper on an age-old topic: what visualisation should I use?

No prizes for guessing “it depends!”

…the effectiveness of a visualization depends on several factors including task at the hand, and data attributes and datasets visualized.

Is this the paper to finally settle the age-old debate surrounding pie-charts??

Saket et al. look at five of the most basic visualisations —bar charts, line charts, pie charts, scatterplots, and tables— and study their effectiveness when presenting modest amounts of data (less than 50 visual marks) across 10 different tasks. The task taxonomy comes from the work of Amar et al., describing a set of ten low-level analysis tasks that describe users’ activities while using visualization tools.

- Finding anomalies

- Finding clusters (counting the number of groups with similar data attribute values)

- Finding correlations (determining whether or not there is a correlation between Continue reading

Futzing and moseying: interviews with professional data analysts on exploration practices

Futzing and moseying: interviews with professional data analysts on exploration practices Alspaugh et al., VAST’18

What do people actually do when they do ‘exploratory data analysis’ (EDA)? This 2018 paper reports on the findings from interviews with 30 professional data analysts to see what they get up to in practice. The only caveat to the results is that the interviews were conducted in 2015, and this is a fast-moving space. The essence of what and why is probably still the same, but the tools involved have evolved.

What is EDA?

Exploration here is defined as “open-ended information analysis,” which doesn’t require a precisely stated goal. It comes after data ingestion, wrangling and profiling (i.e., when you have the data in a good enough state to ask questions of it). The authors place it within the overall analysis process like this:

That looks a lot more waterfall-like than my experience of reality though. I’d expect to see lots of iterations between explore and model, and possibly report as well.

The guidance given to survey participants when asking about EDA is as follows:

EDA is an approach to analyzing data, usually undertaken at the beginning of an analysis, to Continue reading

Vega-Lite: a grammar of interactive graphics

Vega-Lite: a grammar of interactive graphics Satyanarayan et al., IEEE Transactions on Visualization and Computer Graphics, 2016

From time to time I receive a request for more HCI (human-computer interaction) related papers in The Morning Paper. If you’ve been a follower of The Morning Paper for any time at all you can probably tell that I naturally gravitate more towards the feeds-and-speeds end of the spectrum than user experience and interaction design. But what good is a super-fast system that nobody uses! With the help of Aditya Parameswaran, who recently received a VLDB Early Career Research Contribution Award for his work on the development of tools for large-scale data exploration targeting non-programmers, I’ve chosen a selection of data exploration, visualisation and interaction papers for this week. Thank you Aditya! Fingers crossed I’ll be able to bring you more from Aditya Parameswaran in future editions.

Vega and Vega-Lite follow in a long line of work that can trace its roots back to Wilkinson’s ‘The Grammar of Graphics.’ We last looked at the Vega family on The Morning Paper all the way back in 2015 (see ‘Declarative interaction design for data visualization’ and ‘Reactive Continue reading

HackPPL: a universal probabilistic programming language

HackPPL: a universal probabilistic programming language Ai et al., MAPL’19

The Hack programming language, as the authors proudly tell us, is “a dominant web development language across large technology firms with over 100 million lines of production code.” Nail that niche! Does your market get any smaller if we also require those firms to have names starting with ‘F’ ? ;)

In all seriousness though, Hack powers large chunks of Facebook, running on top of the HipHopVM (HHVM). It started out as an optionally-typed dialect of PHP, but in recent times the Hack development team decided to discontinue PHP support ‘in order to open up opportunities for sweeping language advancements’. One of those sweeping advancements is the introduction of support for probabilistic reasoning. This goes under the unfortunate-sounding name of ‘HackPPL’. I’m not sure how it should officially be pronounced, but I can tell you that PPL stands for “probabilistic programming language”, not “people”!

This paper is interesting on a couple of levels. Firstly, the mere fact that probabilistic reasoning is becoming prevalent enough for Facebook to want to integrate it into the language, and secondly of course what that actually looks like.

Probabilistic reasoning has Continue reading

“I was told to buy a software or lose my computer: I ignored it.” A study of ransomware

“I was told to buy a software or lose my computer. I ignored it”: a study of ransomware Simoiu et al., SOUPS 2019

This is a very easy to digest paper shedding light on the prevalence of ransomware and the characteristics of those most likely to be vulnerable to it. The data comes from a survey of 1,180 US adults conducted by YouGov, an online global market research firm. YouGov works hard to ensure respondent participation is representative of (in this case) the general population in the U.S., but the normal caveats apply.

We define ransomware as the class of malware that attempts to defraud users by restricting access to the user’s computer or data, typically by locking the computer or encrypting data. There are thousands of different ransomware strains in existence today, varying in design and sophistication.

The survey takes just under 10 minutes to complete, and goes to some lengths to ensure that self-reporting victims really were victims of ransomware (and not some other computer problem).

For respondents that indicated they had suffered from a ransomware attack, data was collected on month and year, the name of the ransomware variant, the ransom demanded, the payment method, Continue reading

Invisible mask: practical attacks on face recognition with infrared

Invisible mask: practical attacks on face recognition with infrared Zhou et al., arXiv’18

You might have seen selected write-ups from The Morning Paper appearing in ACM Queue. The editorial board there are also kind enough to send me paper recommendations when they come across something that sparks their interest. So this week things are going to get a little bit circular as we’ll be looking at three papers originally highlighted to me by the ACM Queue board!

‘Invisible Mask’ looks at the very topical subject of face authentication systems. We’ve looked at adversarial attacks on machine learning systems before, including those that can be deployed in the wild, such as decorating stop signs. Most adversarial attacks against image recognition systems require you to have pixel-level control over the input image though. Invisible Mask is different: it’s a practical attack in that the techniques described in this paper could be used to subvert face authentication systems deployed in the wild, without there being any obvious visual difference (e.g. specially printed glasses frames) in the face of the attacker to a casual observer. That’s the invisible part: to the face recognition system it’s as if you are wearing a mask, Continue reading

Learning a unified embedding for visual search at Pinterest

Learning a unified embedding for visual search at Pinterest Zhai et al., KDD’19

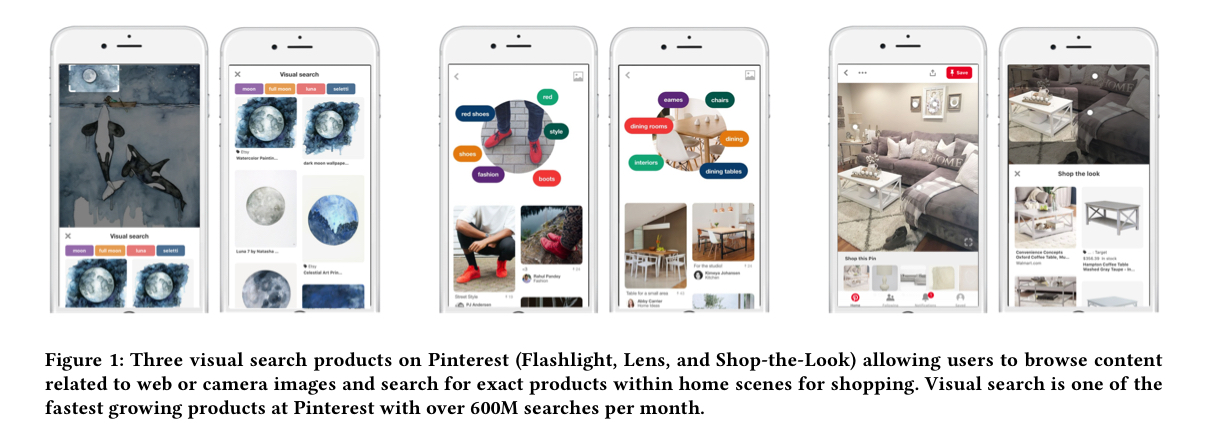

Last time out we looked at some great lessons from Airbnb as they introduced deep learning into their search system. Today’s paper choice highlights an organisation that has been deploying multiple deep learning models in search (visual search) for a while: Pinterest.

With over 600 million visual searches per month and growing, visual search is one of the fastest growing products at Pinterest and of increasing importance.

Visual search is pretty fundamental to the Pinterest experience. The paper focuses on three search-based products: Flashlight, Lens, and Shop-the-Look.

In Flashlight search the search query is a source image either from Pinterest or the web, and the search results are relevant pins. In Lens the search query is a photograph taken by the user with their camera, and the search results are relevant pins. In Shop-the-Look the search query is a source image from Pinterest or the web, and the results are products which match items in the image.

Models are like microservices in one sense: it seems that they have a tendency to proliferate within organisations once they start to take hold! (Aside, I wonder if there’s Continue reading

Applying deep learning to Airbnb search

Applying deep learning to Airbnb search Haldar et al., KDD’19

Last time out we looked at Booking.com’s lessons learned from introducing machine learning to their product stack. Today’s paper takes a look at what happened in Airbnb when they moved from standard machine learning approaches to deep learning. It’s written in a very approachable style and packed with great insights. I hope you enjoy it as much as I did!

Ours is a story of the elements we found useful in applying neural networks to a real life product. Deep learning was steep learning for us. To other teams embarking on similar journeys, we hope an account of our struggles and triumphs will provide some useful pointers.

An ecosystem of models

The core application of machine learning discussed in this paper is the model which orders available listings according to a guest’s likelihood of booking. This is one of a whole ecosystem of models which contribute towards search rankings when a user searches on Airbnb. New models are tested online through an A/B testing framework to compare their performance to previous generations.

The time is right

The very first version of search ranking at Airbnb was a hand-written Continue reading

150 successful machine learning models: 6 lessons learned at Booking.com

150 successful machine learning models: 6 lessons learned at Booking.com Bernardi et al., KDD’19

Here’s a paper that will reward careful study for many organisations. We’ve previously looked at the deep penetration of machine learning models in the product stacks of leading companies, and also some of the pre-requisites for being successful with it. Today’s paper choice is a wonderful summary of lessons learned integrating around 150 successful customer facing applications of machine learning at Booking.com. Oddly enough given the paper title, the six lessons are never explicitly listed or enumerated in the body of the paper, but they can be inferred from the division into sections. My interpretation of them is as follows:

- Projects introducing machine learned models deliver strong business value

- Model performance is not the same as business performance

- Be clear about the problem you’re trying to solve

- Prediction serving latency matters

- Get early feedback on model quality

- Test the business impact of your models using randomised controlled trials (follows from #2)

There are way more than 6 good pieces of advice contained within the paper though!

We found that driving true business impact is amazingly hard, plus it is difficult to isolate Continue reading

Detecting and characterizing lateral phishing at scale

Detecting and characterizing lateral phishing at scale Ho et al., USENIX Security Symposium 2019

This is an investigation into the phenomenon of lateral phishing attacks. A lateral phishing attack is one where a compromised account within an organisation is used to send out further phishing emails (typically to other employees within the same organisation). So ‘alice at example.com’ might receive a phishing email that has genuinely been sent by ‘bob at example.com’, and thus is more likely to trust it.

In recent years, work from both industry and academia has pointed to the emergence and growth of lateral phishing attacks: a new form of phishing that targets a diverse range of organizations and has already incurred billions of dollars in financial harm…. This attack proves particularly insidious because the attacker automatically benefits from the implicit trust in the hijacked account: trust from both human recipients and conventional email protection systems.

A dataset of 113 million emails…

The study is conducted in conjunction with Barracuda Networks, who obtained customer permission to use email data from the Office 365 employee mailboxes of 92 different organisations. 69 of these organisations were selected through random sampling across all organisations, and 23 Continue reading

In-toto: providing farm-to-table guarantees for bits and bytes

in-toto: providing farm-to-table guarantees for bits and bytes Torres-Arias et al., USENIX Security Symposium 2019

Small world with high risks did a great job of highlighting the absurd risks we’re currently carrying in many software supply chains. There are glimmers of hope though. This paper describes in-toto, an end-to-end system for ensuring the integrity of a software supply chain. To be a little more precise, in-toto secures the end-to-end delivery pipeline for one product or package. But it’s only a small step from there to imagine using in-toto to also verify the provenance of every third-party dependency included in the build, and suddenly you’ve got something that starts to look very interesting indeed.

In-toto is much more than just a research project, it’s already deployed and integrated into a number of different projects and ecosystems, quietly protecting artefacts used by millions of people daily. You can find the in-toto website at https://in-toto.io.

In-toto has about a dozen different integrations that protect software supply chains for millions of end-users.

- If you install a Debian package using apt, in-toto is protecting it.

- If you use kubesec to analyze your Kubernetes configurations, in-toto is protecting it.

- If you use the Continue reading

Small world with high risks: a study of security threats in the npm ecosystem

Small world with high risks: a study of security threats in the npm ecosystem Zimmermann et al., USENIX Security Symposium 2019

This is a fascinating study of the npm ecosystem, looking at the graph of maintainers and packages and its evolution over time. It’s packed with some great data, and also helps us quantify something we’ve probably all had an intuition for: the high risks involved in depending on an open and fast-moving ecosystem. One of the key takeaways for me is the concentration of reach in a comparatively small number of packages and maintainers, making these both very high value targets (event-stream, it turns out, wouldn’t even have made the top-1000 in a list of ranked targets!), but also high leverage points for defence. We have to couple this of course with an exceedingly long tail.

The npm ecosystem

As the primary source of third-party JavaScript packages for the client-side, server-side, and other platforms, npm is the centerpiece of a large and important software ecosystem.

Npm is an open ecosystem hosting a collection of over 800,000 packages as of February 2019, and it continues to grow rapidly.

To share a package on npm, a maintainer creates Continue reading

Wireless attacks on aircraft instrument landing systems

Wireless attacks on aircraft instrument landing systems Sathaye et al., USENIX Security Symposium 2019

It’s been a while since we last looked at security attacks against connected real-world entities (e.g., industrial machinery, light-bulbs, and cars). Today’s paper is a good reminder of just how important it is becoming to consider cyber threat models in what are primarily physical systems, especially if you happen to be flying on an aeroplane – which I am right now as I write this!

The first fully operational Instrument Landing System (ILS) for planes was deployed in 1932. But assumptions we’ve been making since then (and until the present day, it appears!) no longer hold:

Security was never considered by design as historically the ability to transmit and receive wireless signals required considerable resources and knowledge. However, the widespread availability of powerful and low-cost software-defined radio platforms has altered the threat landscape. In fact, today the majority of wireless systems employed in modern aviation have been shown to be vulnerable to some form of cyber-physical attacks.

Both sections 1 and 6 in the paper give some eye-opening details of known attacks against aviation systems, but to date no-one Continue reading

50 ways to leak your data: an exploration of apps’ circumvention of the Android permissions system

50 ways to leak your data: an exploration of apps’ circumvention of the Android permissions system Reardon et al., USENIX Security Symposium 2019

The problem is all inside your app, she said to me / The answer is easy if you take it logically / I’d like to help data in its struggle to be free / There must be fifty ways to leak their data.

You just slip it out the back, Jack / Make a new plan, Stan / You don’t need to be coy, Roy / Just get the data free.

Hop it on the bus, Gus / You don’t need to discuss much / Just drop off the key, Lee / And get the data free…

— Lyrics adapted from “50 ways to leave your lover” by Paul Simon (fabulous song btw., you should definitely check it out if you don’t already know it!).

This paper is a study of Android apps in the wild that leak permission protected data (identifiers which can be used for tracking, and location information), where those apps should not have been able to see such data due to a lack of granted permissions. By detecting Continue reading

The secret sharer: evaluating and testing unintended memorization in neural networks

The secret sharer: evaluating and testing unintended memorization in neural networks Carlini et al., USENIX Security Symposium 2019

This is a really important paper for anyone working with language or generative models, and just in general for anyone interested in understanding some of the broader implications and possible unintended consequences of deep learning. There’s also a lovely sense of the human drama accompanying the discoveries that just creeps through around the edges.

Disclosure of secrets is of particular concern in neural network models that classify or predict sequences of natural language text… even if sensitive or private training data text is very rare, one should assume that well-trained models have paid attention to its precise details…. The users of such models may discover— either by accident or on purpose— that entering certain text prefixes causes the models to output surprisingly revealing text completions.

Take a system trained to make predictions on a language (word or character) model – an example you’re probably familiar with is Google Smart Compose. Now feed it a prefix such as “My social security number is ”. Can you guess what happens next?

As a small scale demonstration, the authors trained a model on Continue reading

Even more amazing papers at VLDB 2019 (that I didn’t have space to cover yet)

We’ve been covering papers from VLDB 2019 for the last three weeks, and next week it will be time to mix things up again. There were so many interesting papers at the conference this year though that I haven’t been able to cover nearly as many as I would like. So today’s post is a short summary of things that caught my eye that I haven’t covered so far. A few of these might make it onto The Morning Paper in weeks to come, you never know!

Industry papers

- Tunable consistency in MongoDB. MongoDB is an important database, and this paper explains the tunable (per-operation) consistency models that MongoDB provides and how they are implemented under the covers. I really do want to cover this one at some point, along with Implementation of cluster-wide logical clock and causal consistency in MongoDB from SIGMOD 2019.

- We hear a lot from Google and Microsoft about their cloud platforms, but not quite so much from the other key industry players. So it’s great to see some papers from Alibaba and Tencent here. AliGraph covers Alibaba’s distributed graph engine supporting the development of new GNN applications. Their dataset has about 7B edges… Meanwhile, AnalyticDB Continue reading

Updating graph databases with Cypher

Updating graph databases with Cypher Green et al., VLDB’19

This is the story of a great collaboration between academia, industry, and users of the Cypher graph querying language as created by Neo4j. Beyond Neo4j, Cypher is also supported in SAP HANA Graph, RedisGraph, AgensGraph, and Memgraph. The Cypher for Apache Spark and Cypher over Gremlin projects are also both available in open source. The openCypher project brings together Cypher implementors across different projects and products, and aims to produce a full and open specification of the language. There is also a Graph Query Language (GQL) standards organisation.

Cypher is used in hundreds of production applications across many industry vertical domains, such as financial services, telecommunications, manufacturing and retails, logistics, government, and healthcare.

Personally I would have expected that number to be in the thousands by now, and there are some suggestions that it is. However, Neo4j are still only claiming ‘hundreds of customers’ on their own website.

The read-only core of the Cypher language has already been fully formalised. But when it came time to extend that formalism to include the update mechanisms, the authors ran into difficulties.

Our understanding of updates in the popular graph Continue reading