The Network is the Computer

We recently registered the trademark for The Network is the Computer®, to encompass how Cloudflare is utilizing its network to pave the way for the future of the Internet.

The phrase was first coined in 1984 by John Gage, the 21st employee of Sun Microsystems, where he was credited with building Sun’s vision around “The Network is the Computer.” When Sun was acquired in 2010, the trademark was not renewed, but the vision remained.

Take it from him:

“When we built Sun Microsystems, every computer we made had the network at its core. But we could only imagine, over thirty years ago, today’s billions of networked devices, from the smallest camera or light bulb to the largest supercomputer, sharing their packets across Cloudflare’s distributed global network.

We based our vision of an interconnected world on open and shared standards. Cloudflare extends this dedication to new levels by openly sharing designs for security and resilience in the post-quantum computer world.

Most importantly, Cloudflare is committed to immediate, open, transparent accountability for network performance. I’m a dedicated reader of their technical blog, as the network becomes central to our security infrastructure and the global economy, demanding even more powerful technical innovation. Continue reading

Cloudflare’s Karma, Managing MSPs, & Agile Security

Cloudflare came out strong, pointing the finger at Verizon for shoddy practices putting the Internet at risk. It didn’t take long for karma to come around and for Cloudflare to have their own Internet-impacting outage from a mistake of their own. In this episode we talk about that outage, the risk of centralization on the Internet, managing MSPs when trouble strikes, and whether or not agile processes are forgoing security in favor of faster releases.

Outro Music:

Danger Storm Kevin MacLeod (incompetech.com)

Licensed under Creative Commons: By Attribution 3.0 License

http://creativecommons.org/licenses/by/3.0/

The post Cloudflare’s Karma, Managing MSPs, & Agile Security appeared first on Network Collective.

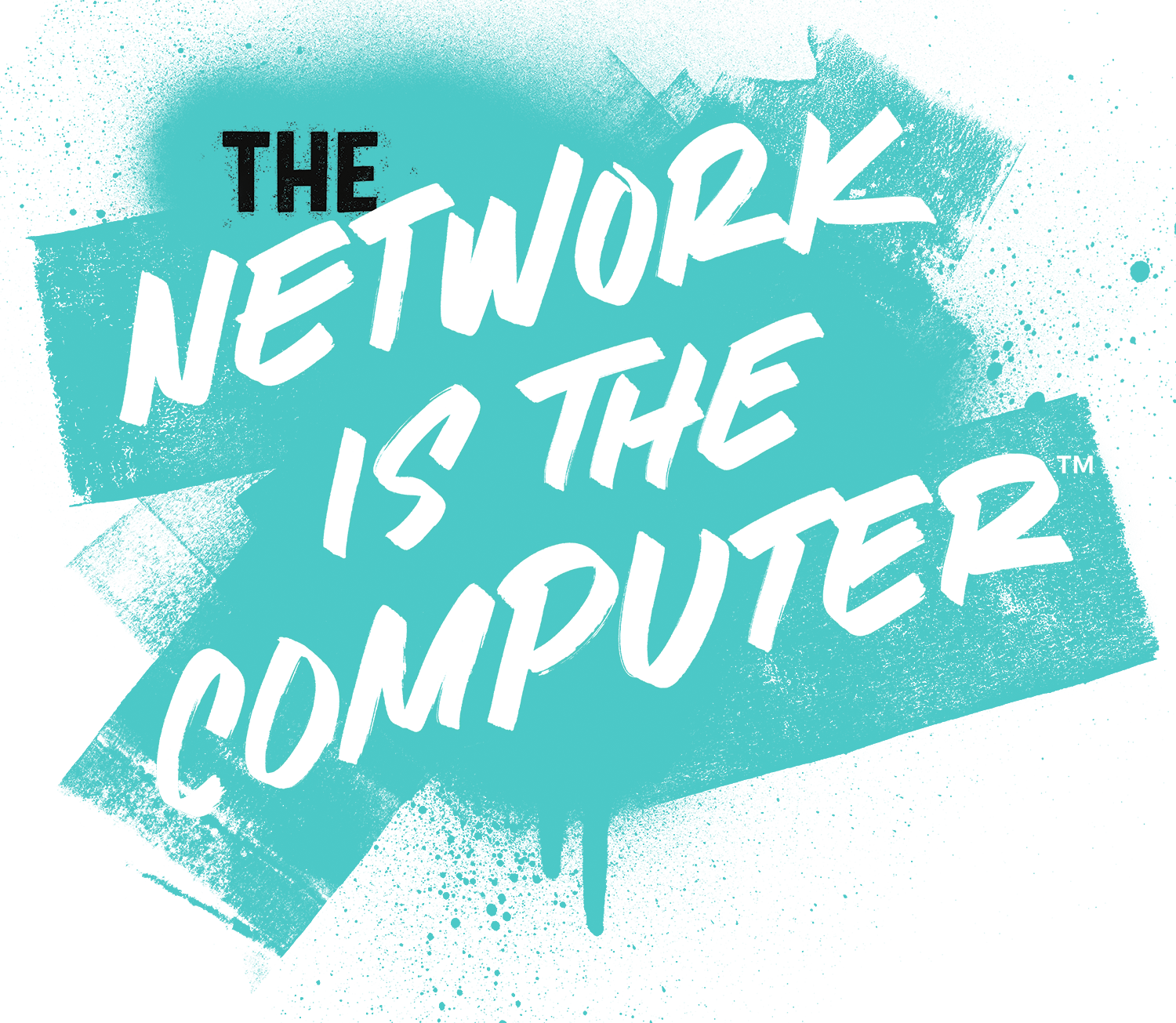

A gentle introduction to Linux Kernel fuzzing

For some time I’ve wanted to play with coverage-guided fuzzing. Fuzzing is a powerful testing technique where an automated program feeds semi-random inputs to a tested program. The intention is to find such inputs that trigger bugs. Fuzzing is especially useful in finding memory corruption bugs in C or C++ programs.

Normally it's recommended to pick a well known, but little explored, library that is heavy on parsing. Historically things like libjpeg, libpng and libyaml were perfect targets. Nowadays it's harder to find a good target - everything seems to have been fuzzed to death already. That's a good thing! I guess the software is getting better! Instead of choosing a userspace target I decided to have a go at the Linux Kernel netlink machinery.

Netlink is an internal Linux facility used by tools like "ss", "ip", "netstat". It's used for low level networking tasks - configuring network interfaces, IP addresses, routing tables and such. It's a good target: it's an obscure part of the kernel, and it's relatively easy to automatically craft valid messages. Most importantly, we can learn a lot about Linux internals in the process. Bugs in netlink aren't going Continue reading

What Happens When The Internet Breaks?

It’s a crazy idea to think that a network built to be completely decentralized and resilient can be so easily knocked offline in a matter of minutes. But that basically happened twice in the past couple of weeks. Cloudflare is a service provider that offers to sit in front of your website and provide all kinds of important services. They can prevent smaller sites from being knocked offline by an influx of traffic. They can provide security and DNS services for you. They’re quickly becoming an indispensable part of the way the Internet functions. And what happens when we all start to rely on one service too much?

Bad BGP Behavior

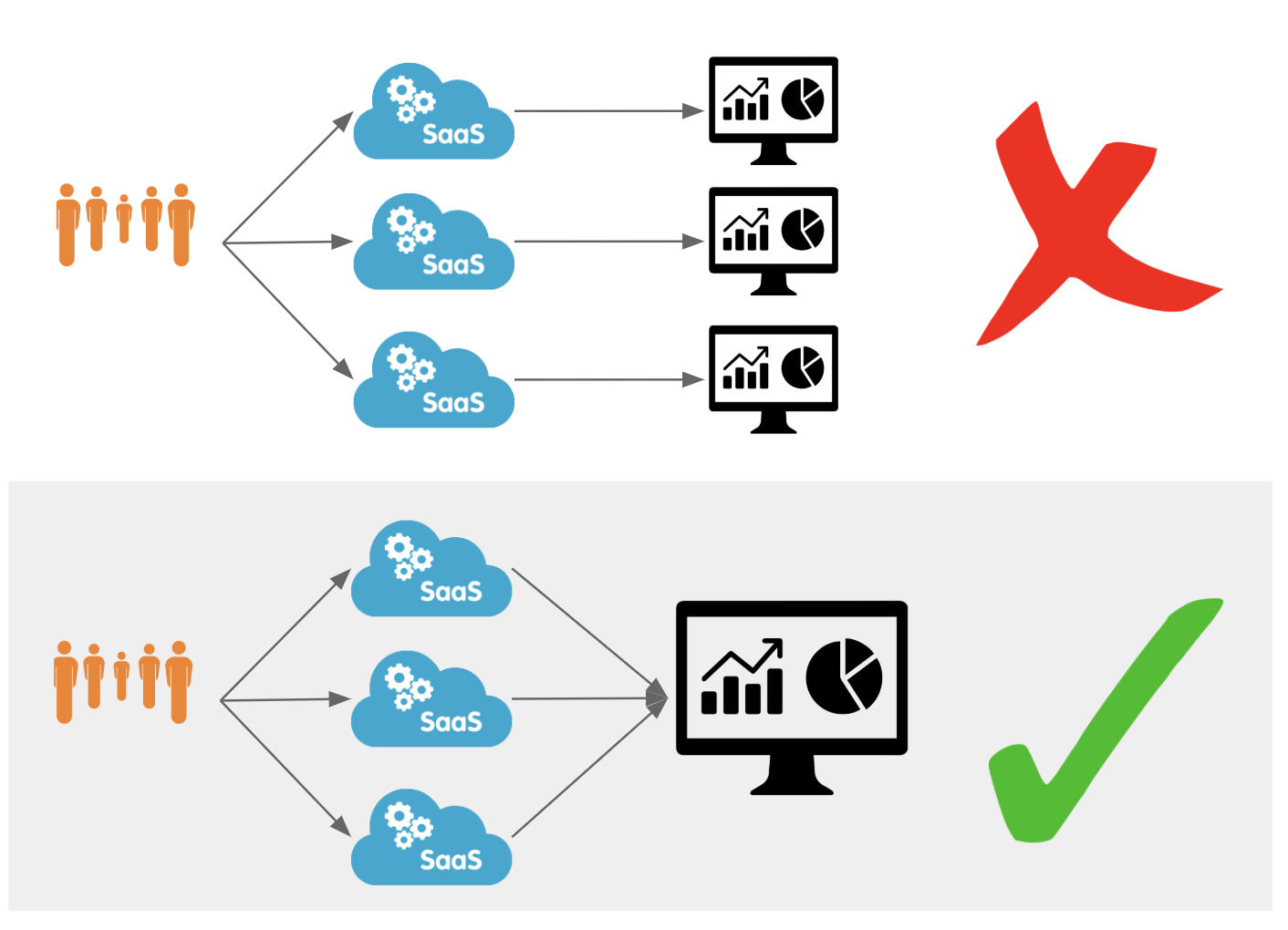

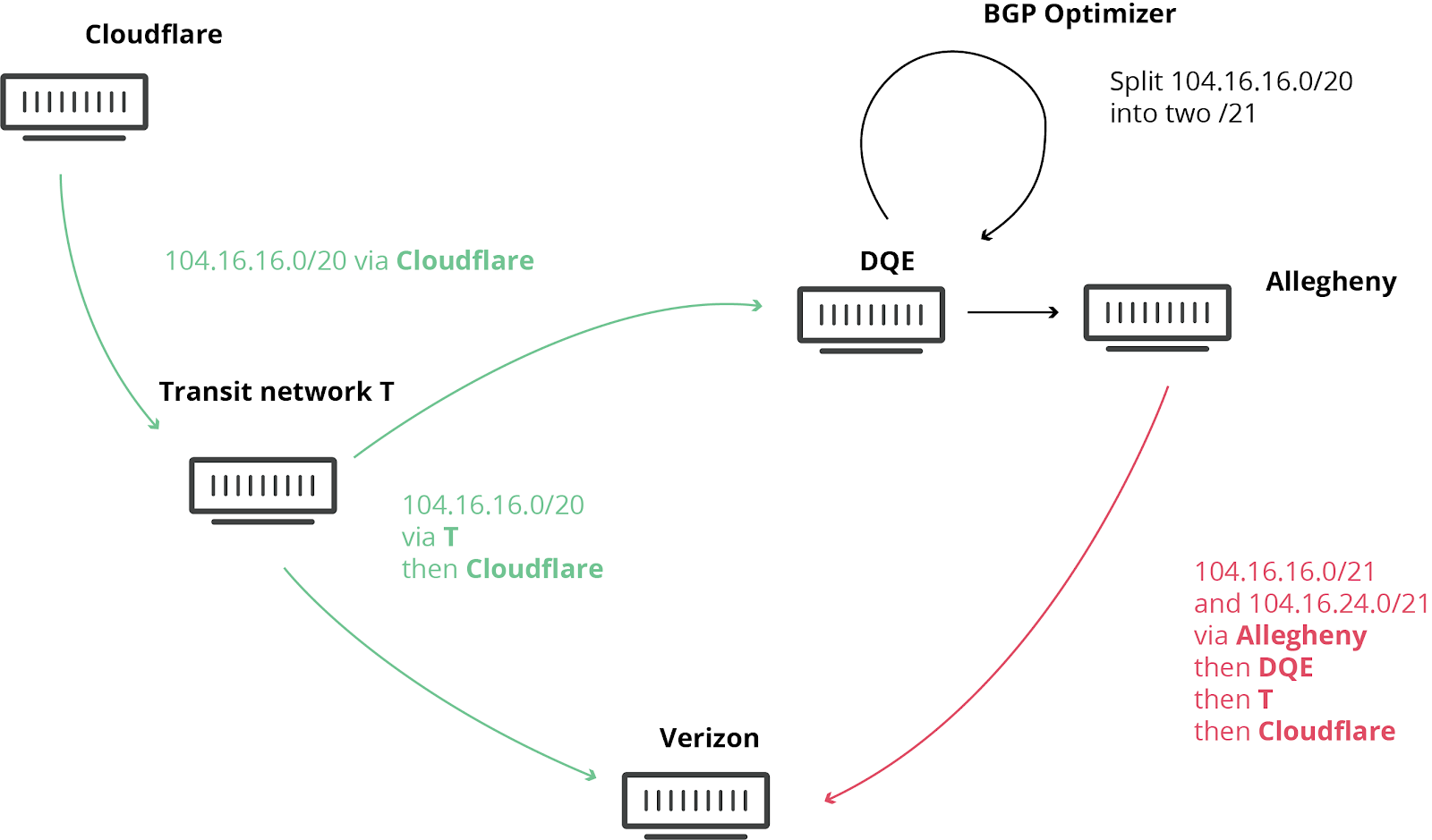

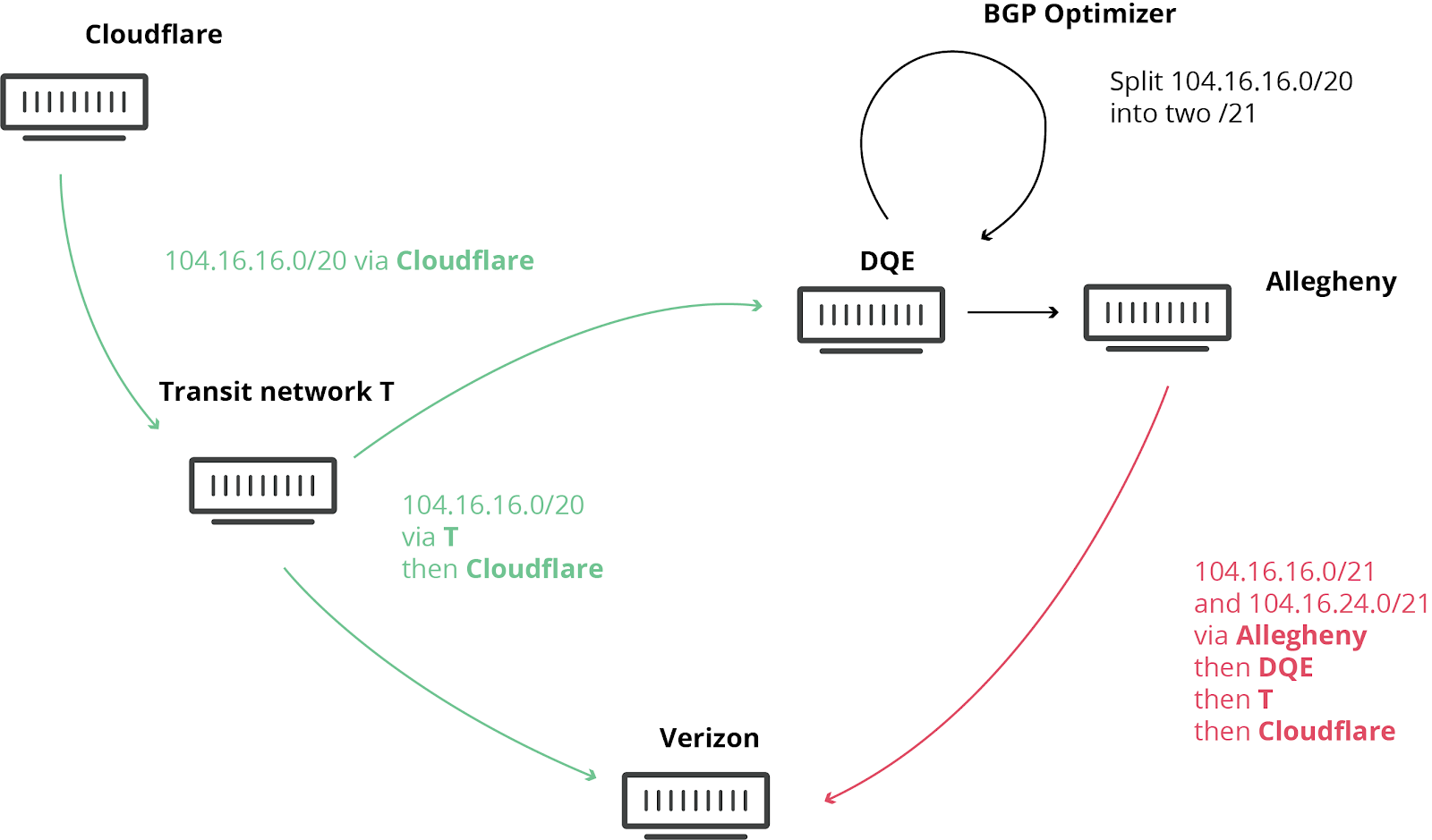

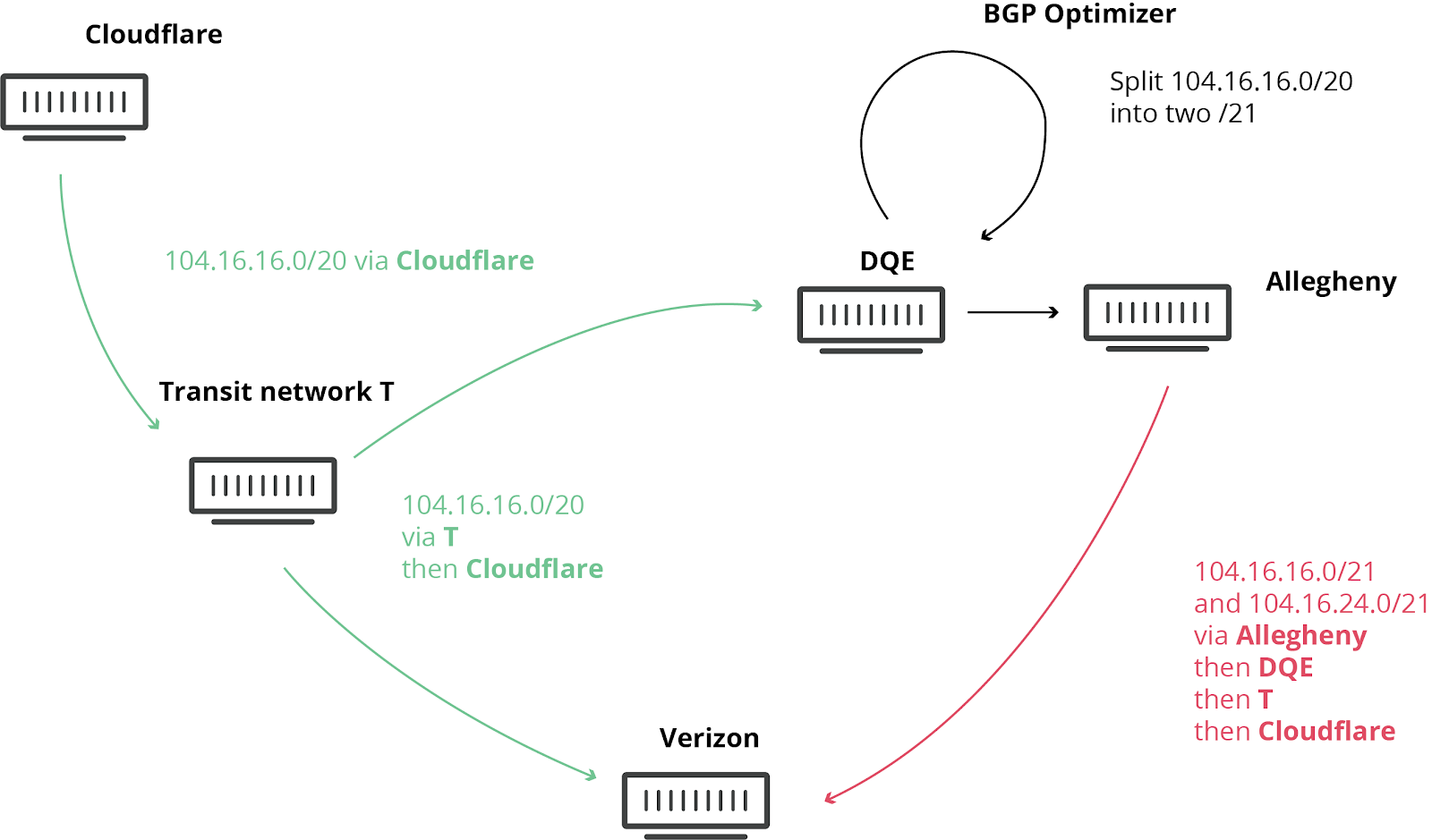

The first outage on June 24, 2019 wasn’t the fault of Cloudflare. A small service provider in Pennsylvania decided to use a BGP Optimizer from Noction to do some route optimization inside their autonomous system (AS). That in and of itself shouldn’t have caused a problem. At least, not until someone leaked those routes to the greater Internet.

It was a comedy of errors. The provider in question announced their more specific routes to an upstream customer, who in turn announced them to Verizon. After that all bets are Continue reading

Cloudflare outage caused by bad software deploy (updated)

This is a short placeholder blog and will be replaced with a full post-mortem and disclosure of what happened today.

For about 30 minutes today, visitors to Cloudflare sites received 502 errors caused by a massive spike in CPU utilization on our network. This CPU spike was caused by a bad software deploy that was rolled back. Once rolled back the service returned to normal operation and all domains using Cloudflare returned to normal traffic levels.

This was not an attack (as some have speculated) and we are incredibly sorry that this incident occurred. Internal teams are meeting as I write performing a full post-mortem to understand how this occurred and how we prevent this from ever occurring again.

Update at 2009 UTC:

Starting at 1342 UTC today we experienced a global outage across our network that resulted in visitors to Cloudflare-proxied domains being shown 502 errors (“Bad Gateway”). The cause of this outage was deployment of a single misconfigured rule within the Cloudflare Web Application Firewall (WAF) during a routine deployment of new Cloudflare WAF Managed rules.

The intent of these new rules was to improve the blocking of inline JavaScript that is used in attacks. These rules were Continue reading

Third Time’s a Charm (A brief history of a gay marriage)

Happy Pride from Proudflare, Cloudflare’s LGBTQIA+ employee resource group. We wanted to share some stories from our members this month which highlight both the struggles behind the LGBTQIA+ rights movement and its successes. This first story is from Lesley.

The moment that crystalised the memory of that day…crystal blue afternoon, bright-coloured autumn leaves, borrowed tables, crockery and cutlery, flowers arranged by a cousin, cake baked by a neighbour, music mixed by a friend... our priest/rabbi a close gay friend with neither yarmulke nor collar. The venue, a backyard kitty-corner at the home my wife grew up in. Love and good wishes in abundance from a community that supports us and our union. And in the middle of all that, my wife… turning to me and smiling, grass stains on the bottom of her long cream wedding dress after abandoning her heels and dancing barefoot in the grass. As usual, a microphone in hand, bringing life and laughter to all with her charismatic quips.

Our first marriage was as legal as marrying two donkeys, with all the attached rights

This was the fall of 2002 and same-sex marriage was legal in 0 of the 50 United States.

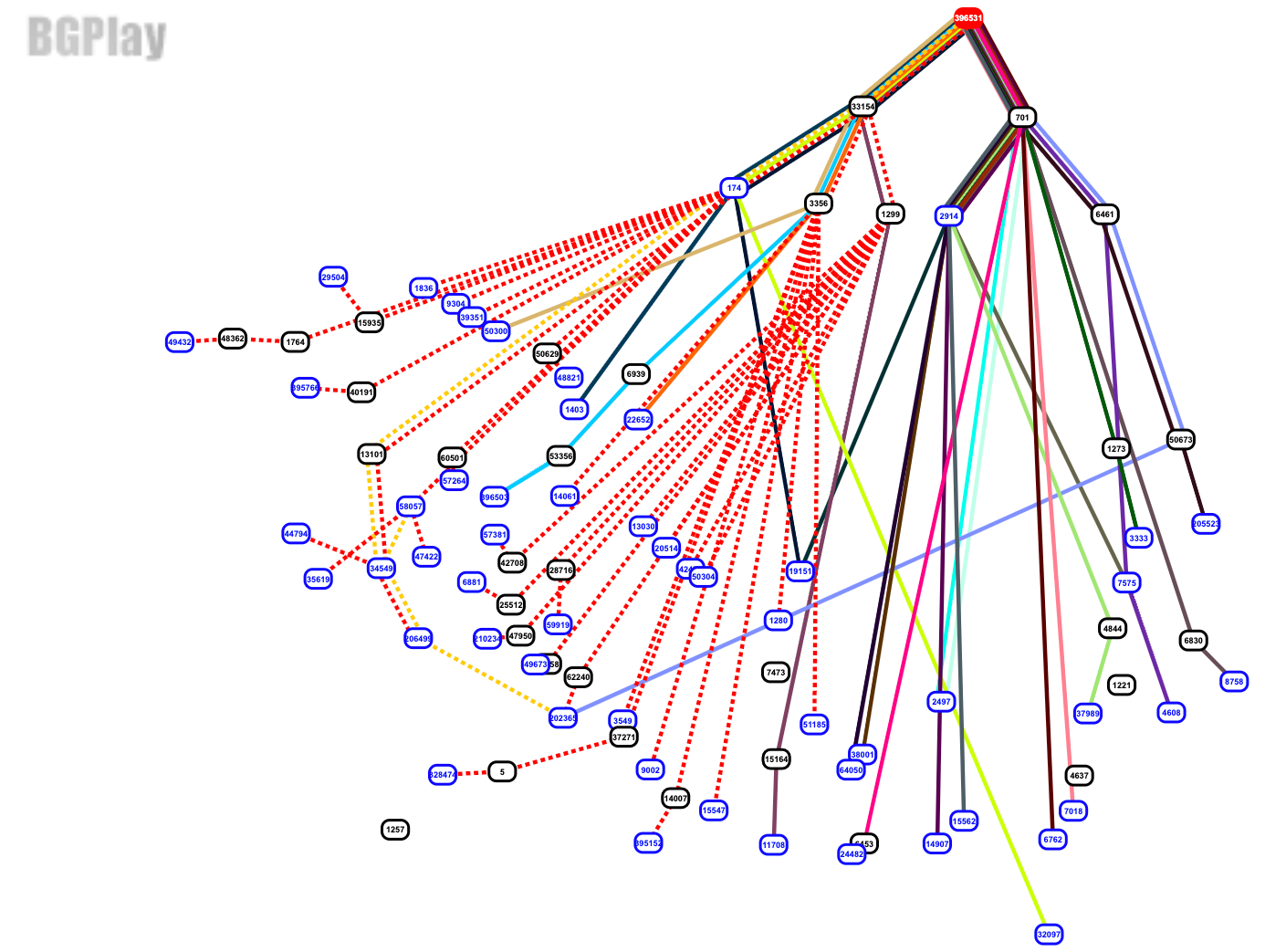

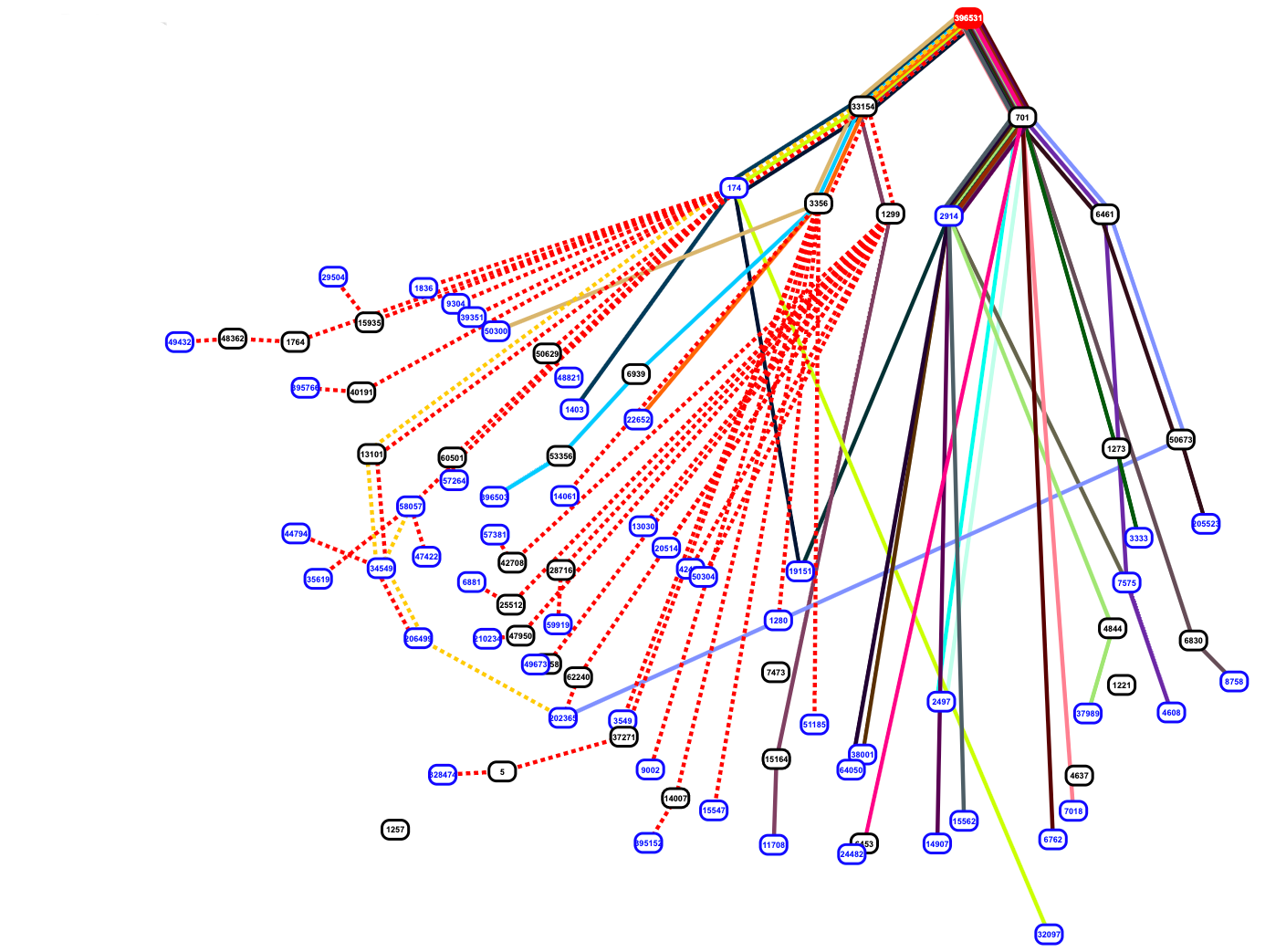

The deep-dive into how Verizon and a BGP Optimizer Knocked Large Parts of the Internet Offline Monday

A recap on what happened Monday

On Monday we wrote about a painful Internet-wide route leak. We wrote that this should never have happened because Verizon should never have forwarded those routes to the rest of the Internet. That blog entry came out around 19:58 UTC, just over seven hours after the route leak finished (which we will see below was around 12:39 UTC). Today we will dive into the archived routing data and analyze it. The code below uses simple shell commands so that any reader can follow along and, more importantly, do their own investigations on the routing tables.

This was a very public BGP route leak event. It was both reported online via many news outlets and the event’s BGP data was reported via social media as it was happening. Andree Toonk tweeted a quick list of 2,400 ASNs that were affected.

Quick dumps through the data, showing about 2400 ASns (networks) affected. Cloudflare being hit the hardest. Top 20 of affected ASns below pic.twitter.com/9J7uvyasw2

— Andree Toonk (@atoonk) June 24, 2019

Using RIPE NCC archived data

The RIPE NCC operates a very useful archive of BGP routing. Continue reading

Deeper Connection with the Local Tech Community in India

On June 6th 2019, Cloudflare hosted the first ever customer event in a beautiful, green district of Bangalore, India. More than 60 people, including executives, developers, engineers, and even university students, attended the half-day forum.

The forum kicked off with a series of presentations on the current DDoS landscape, cyber security trends, serverless computing, and Cloudflare Workers. Trey Quinn, Cloudflare's Global Head of Solution Engineering, gave a brief introduction to the evolution of edge computing.

We also invited business and thought leaders across various industries to share their insights and best practices on cyber security and performance strategy. Some of the keynote and panel sessions included live demos from our customers.

At this event, the guests gained first-hand knowledge of the latest technology. They also learned some insider tactics that will help them protect their business, accelerate performance, and identify quick wins in a complex internet environment.

To conclude the event, we arranged a dinner for the guests to network and enjoy a cool summer night.

Through this event, Cloudflare has strengthened the connection with the local tech community. The success of the event cannot be separated from the Continue reading

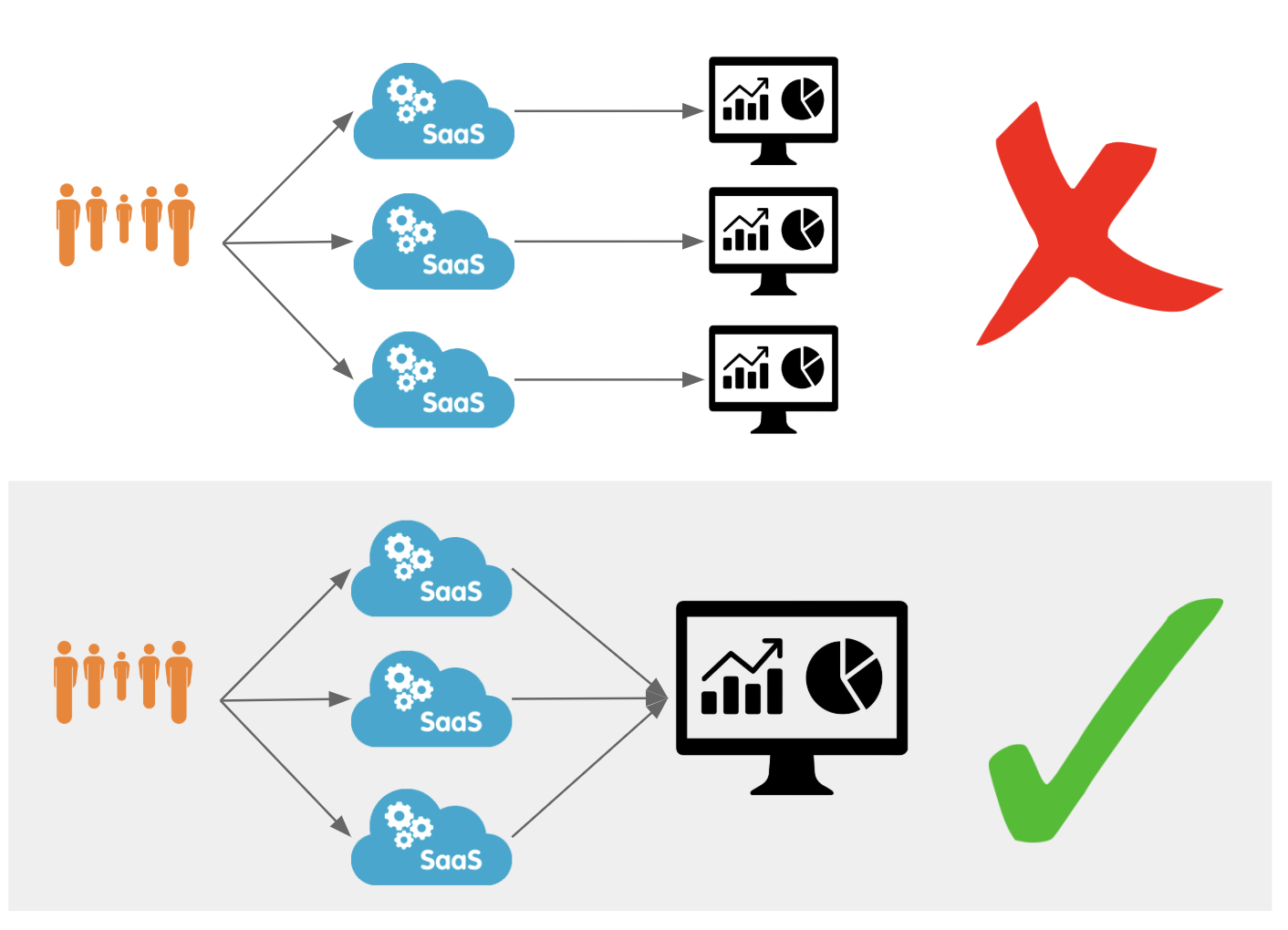

Get Cloudflare insights in your preferred analytics provider

Today, we’re excited to announce our partnerships with Chronicle Security, Datadog, Elastic, Looker, Splunk, and Sumo Logic to make it easy for our customers to analyze Cloudflare logs and metrics using their analytics provider of choice. In a joint effort, we have developed pre-built dashboards that are available as a Cloudflare App in each partner’s platform. These dashboards help customers better understand events and trends from their websites and applications on our network.

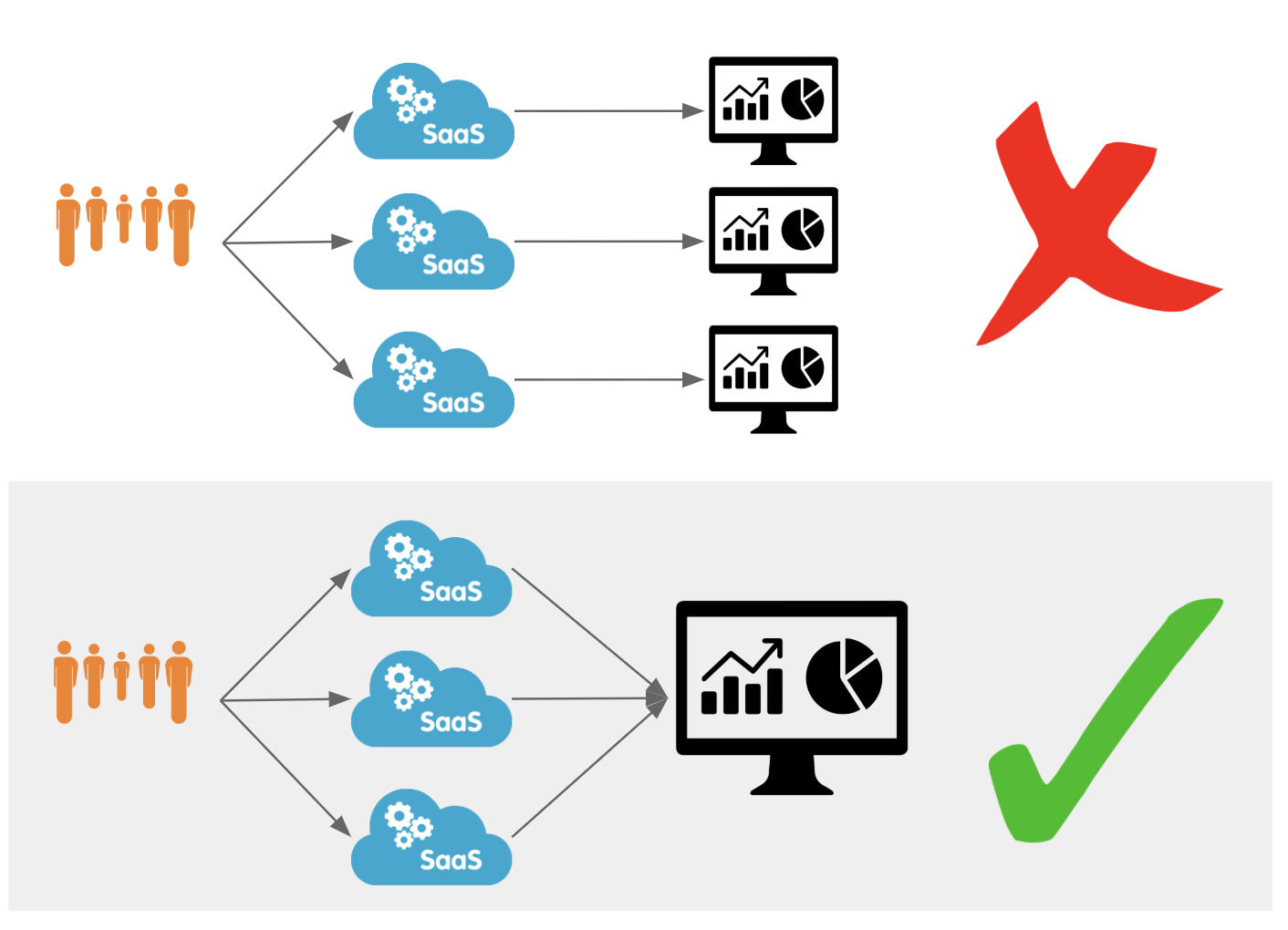

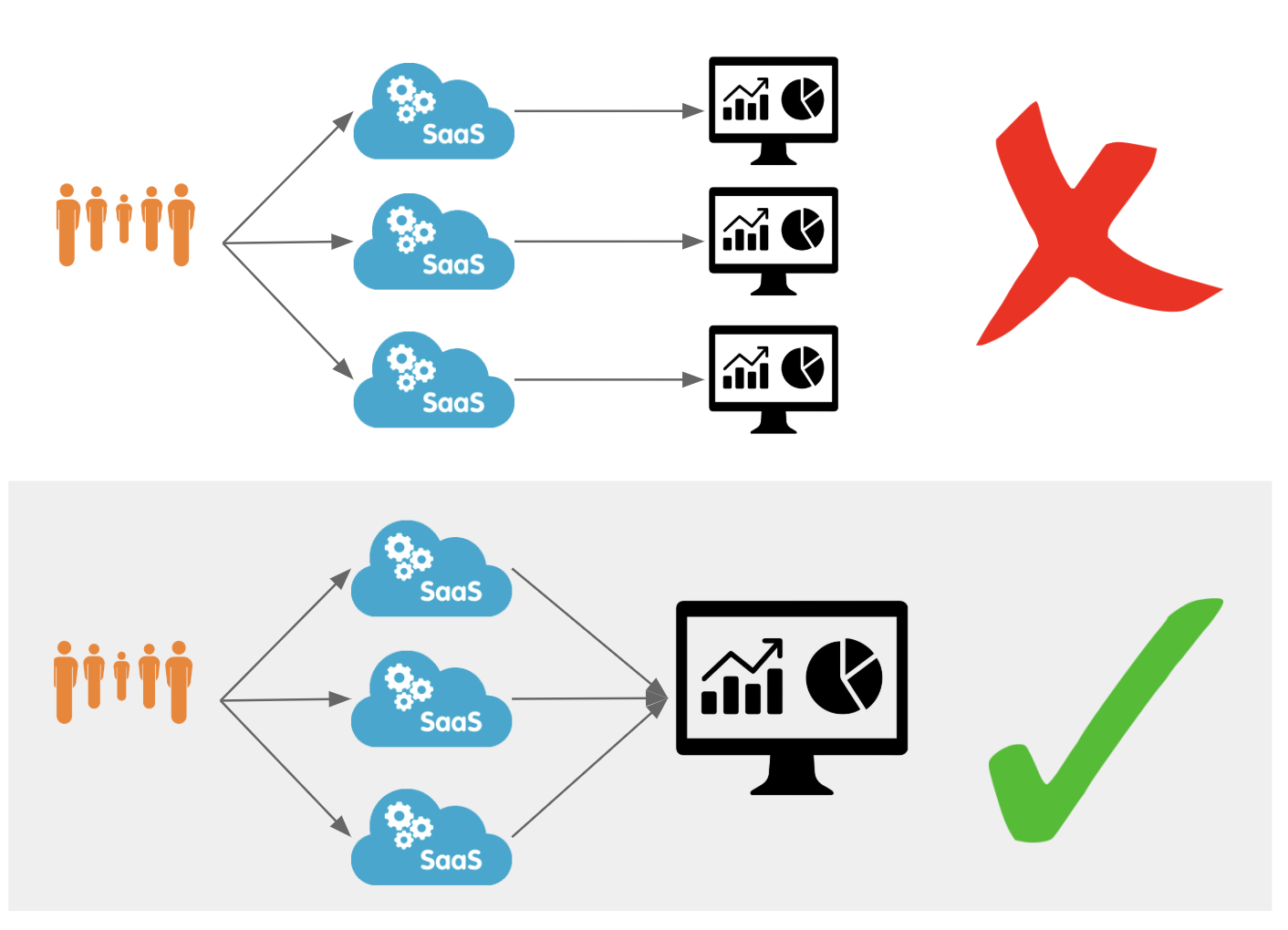

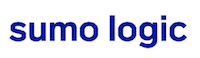

Cloudflare insights in the tools you're already using

Data analytics is a frequent theme in conversations with Cloudflare customers. Our customers want to understand how Cloudflare speeds up their websites and saves them bandwidth, to rank their fastest and slowest pages, and to be alerted if they are under attack. While providing insights is a core tenet of Cloudflare's offering, the data analytics market has matured and many of our customers have started using third-party providers to analyze data, including Cloudflare logs and metrics. By aggregating data from multiple applications, infrastructure, and cloud platforms in one dedicated analytics platform, customers can create a single pane of glass and benefit from better end-to-end visibility over their entire stack.

While these analytics Continue reading

The Serverlist: Serverless makes a splash at JSConf EU and JSConf Asia

Check out our sixth edition of The Serverlist below. Get the latest scoop on the serverless space, get your hands dirty with new developer tutorials, engage in conversations with other serverless developers, and find upcoming meetups and conferences to attend.

Sign up below to have The Serverlist sent directly to your mailbox.

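How Verizon and a BGP Optimizer Knocked Large Parts of the Internet Offline Today

Massive route leak impacts major Internet services, including Cloudflare

What happened?

Today (June 24, 2019) at 10:30 UTC, the Internet took a sizable hit. Many Internet routes carried by Verizon (AS701), a major Internet transit provider, were steered first through a small company in northern Pennsylvania. This was the equivalent of Didi mistakenly navigating an entire freeway down a small alley, leaving many websites on Cloudflare, and the services of many other providers, unreachable for a large share of Internet users. This should never have happened, because Verizon should never have forwarded those routes to the rest of the Internet. To understand why, read on.

We have blogged about unfortunate events like this before, as they are not uncommon. This time, the whole world again saw the serious damage they cause. What made today's incident worse was the involvement of a “BGP Optimizer” product from Noction. This product has a feature that splits received IP prefixes into smaller component parts (called more-specifics). For example, our own IPv4 route 104.20.0.0/20 was turned into 104.20.0.0/21 and 104.20.8.0/21. It is as if the road sign pointing to “Beijing” were replaced by two signs, one for “Beijing East” and one for “Beijing West”. By splitting these major IP blocks into smaller parts, a network can steer traffic internally, but the split was never meant to be announced to the global Internet. That is exactly what caused today's outage.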
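The split described here can be reproduced with Python's standard `ipaddress` module. This is only a sketch of the arithmetic, not of how the Noction product works internally; the helper name `split_prefix` is made up for illustration.

```python
import ipaddress


def split_prefix(prefix, extra_bits=1):
    """Split a prefix into more-specifics that are `extra_bits` longer."""
    network = ipaddress.ip_network(prefix)
    return [str(sub) for sub in network.subnets(prefixlen_diff=extra_bits)]


# The /20 from the post becomes the two /21 halves mentioned in the text.
print(split_prefix("104.20.0.0/20"))
```

Both halves together cover exactly the same addresses as the original /20; only the granularity of the announcement changes.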

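To explain what happened next, here is a quick recap of how the underlying “map” of the Internet works. “Internet” literally means a network of networks, and it is made up of networks called Autonomous Systems (AS), each of which has a unique identifier, its AS number. All of these networks interconnect using the Border Gateway Protocol (BGP). BGP joins these networks together and builds the Internet “map” that lets traffic travel from one place (for example, your ISP) to a popular website on the other side of the globe.

Using BGP, networks exchange route information: how to reach them from wherever you are. These routes can be specific, like finding a particular city on your GPS, or very general, like pointing your GPS at a province. This is where things went wrong today.

An Internet service provider in Pennsylvania (AS33154 - DQE Communications) was using a BGP optimizer in its network, which meant there were many more-specific routes inside it. Specific routes take precedence over more general ones (much as, in Didi, directions to Beijing Railway Station are more specific than directions to Beijing).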
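The preference for specific routes is usually called longest-prefix matching. The sketch below shows the idea with a toy routing table; the function `best_route` and the next-hop labels are illustrative assumptions, and real routers use far more efficient lookup structures.

```python
import ipaddress


def best_route(destination, routes):
    """Return (prefix, next_hop) for the longest prefix containing destination."""
    address = ipaddress.ip_address(destination)
    candidates = []
    for prefix, next_hop in routes.items():
        network = ipaddress.ip_network(prefix)
        if address in network:
            candidates.append((network, next_hop))
    if not candidates:
        return None
    # The longest prefix length wins, regardless of where the route came from.
    network, next_hop = max(candidates, key=lambda item: item[0].prefixlen)
    return str(network), next_hop


# Toy table: the original /20 plus the two leaked /21 more-specifics.
routes = {
    "104.20.0.0/20": "original route",
    "104.20.0.0/21": "leaked more-specific",
    "104.20.8.0/21": "leaked more-specific",
}
print(best_route("104.20.9.1", routes))
```

As soon as a more-specific is present in the table, it attracts the traffic even though the original /20 is still there and still correct.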

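DQE announced these specific routes to its customer (AS396531 - Allegheny). All of this routing information was then sent on to their other transit provider (AS701 - Verizon), which leaked these “better” routes to the entire Internet. The routes were treated as “better” only because they were more granular and more specific.

The leak should have stopped at Verizon. However, contrary to the numerous best practices outlined below, Verizon's lack of filtering turned this leak into a major incident that affected many Internet services, such as Amazon, Linode, and Cloudflare.

This meant that Verizon, Allegheny, and DQE suddenly had to cope with a flood of Internet users trying to reach those services through their networks. Because these networks were not equipped to handle such a drastic increase in traffic, service was disrupted. Even if they had had enough capacity, DQE, Allegheny, and Verizon should not have been allowed to claim that they had the best routes to Cloudflare, Amazon, Linode, and others...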

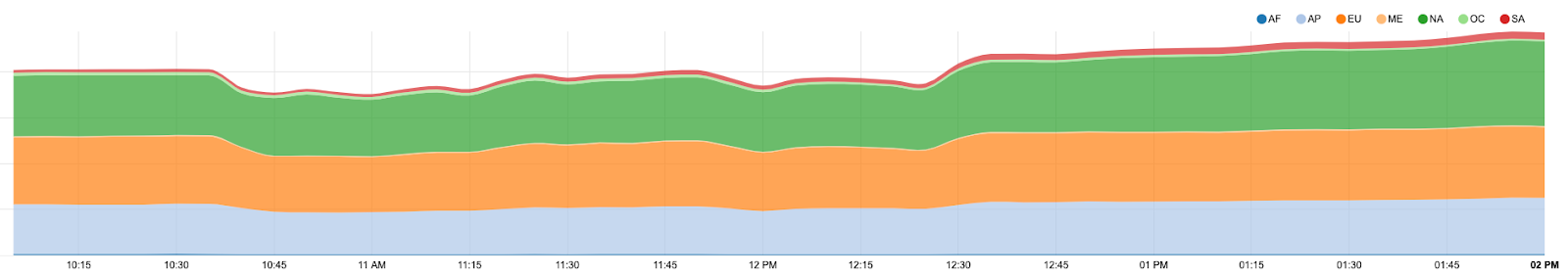

During the incident, we observed a loss of about 15% of our global traffic at its worst.

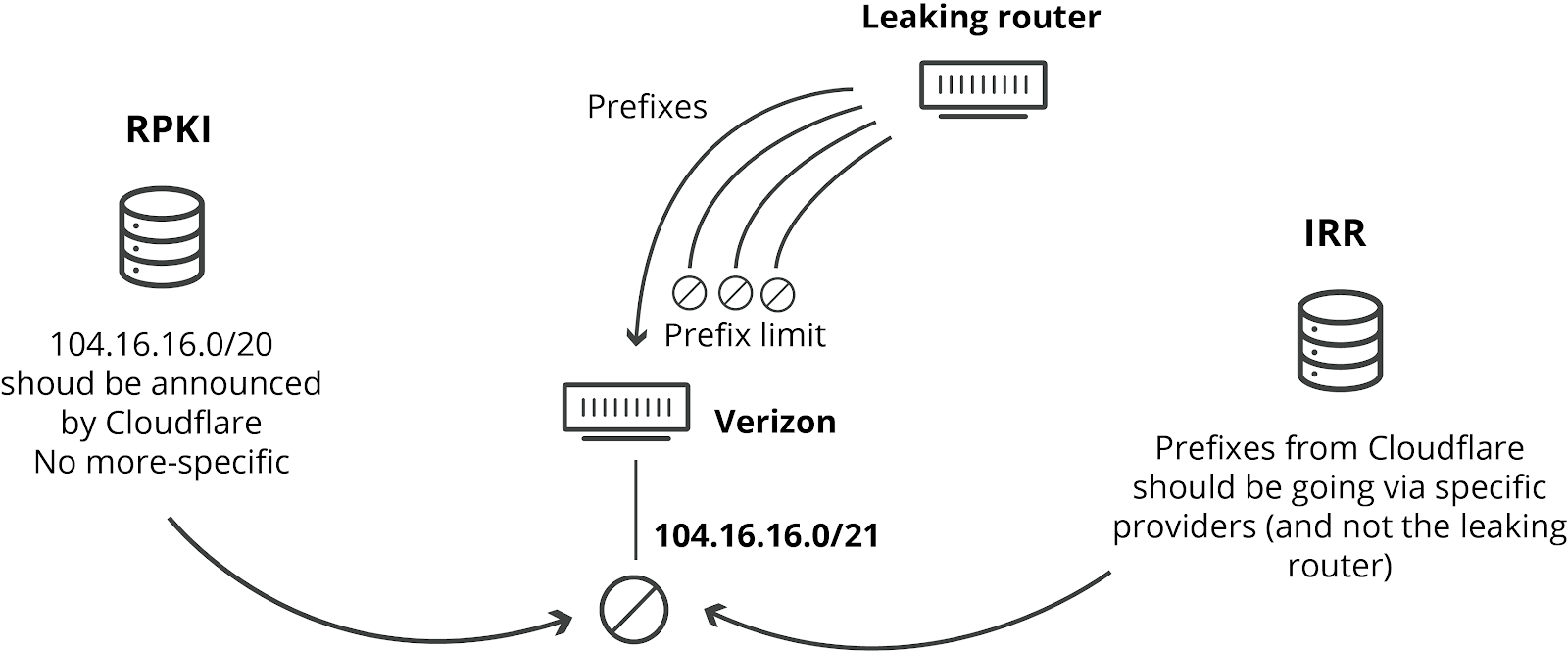

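How could this leak have been prevented?

There are multiple ways this leak could have been avoided:

First, a BGP session can be configured with a hard limit on the number of prefixes it will receive. This means a router can decide to shut down the session if the number of received prefixes exceeds the threshold. Had Verizon had such a prefix limit in place, this incident would not have happened. Setting a prefix limit is a best practice, and it costs a provider like Verizon nothing to configure. There is no good reason why they had no such limit, other than sloppiness or laziness.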
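A prefix limit can be modelled in a few lines. The class below is a simplified sketch and not any vendor's implementation: the name `BgpSession`, the `max_prefixes` parameter, and the choice to drop all learned routes on shutdown are assumptions made for illustration.

```python
class BgpSession:
    """Toy model of a BGP session that enforces a maximum-prefix limit."""

    def __init__(self, peer, max_prefixes):
        self.peer = peer
        self.max_prefixes = max_prefixes
        self.prefixes = set()
        self.established = True

    def receive(self, prefix):
        """Accept a prefix, or tear the session down once the limit is exceeded."""
        if not self.established:
            return False
        self.prefixes.add(prefix)
        if len(self.prefixes) > self.max_prefixes:
            # Over the limit: drop everything learned from this peer.
            self.prefixes.clear()
            self.established = False
            return False
        return True


# A customer expected to send a handful of routes suddenly sends more.
session = BgpSession(peer="AS396531", max_prefixes=2)
for prefix in ["192.0.2.0/24", "198.51.100.0/24", "104.20.0.0/21"]:
    print(prefix, session.receive(prefix))
print("session up:", session.established)
```

The limit does not need to know which routes are wrong. A sudden jump in the number of prefixes from a small customer is enough of a signal to stop accepting routes from it.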

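Second, network operators can prevent leaks like this one by implementing filtering based on the IRR (Internet Routing Registry). The IRR is a set of distributed databases to which network owners can add entries for their own prefixes. Other network operators can then use these IRR records to generate specific prefix lists for the BGP sessions with their peers. Had IRR filtering been used, none of the networks involved would have accepted the faulty more-specifics. What is quite shocking is that Verizon had not implemented any such filtering on its BGP session with Allegheny, even though IRR filtering has been around (and well documented) for 24 years. IRR filtering would not have increased Verizon's costs or limited its service in any way. Again, the only explanation we can think of is sloppiness or laziness.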
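The IRR approach boils down to building an allow-list per BGP session and rejecting everything else. The sketch below assumes a simplified registry of `(prefix, origin ASN)` pairs and an exact-match policy; the registry contents (documentation prefixes, private-use AS64500) and the function names are hypothetical, and production tooling works from real IRR route objects and AS-SETs.

```python
import ipaddress


def build_prefix_list(irr_records, customer_asns):
    """Collect the prefixes registered to the given customer AS numbers."""
    return {
        ipaddress.ip_network(prefix)
        for prefix, origin in irr_records
        if origin in customer_asns
    }


def accept(announcement, prefix_list):
    """Accept an announcement only if it exactly matches a registered prefix."""
    return ipaddress.ip_network(announcement) in prefix_list


# Hypothetical registry contents, for illustration only.
irr_records = [
    ("192.0.2.0/24", 396531),     # registered by the customer
    ("198.51.100.0/24", 64500),   # registered by some unrelated network
]
customer_filter = build_prefix_list(irr_records, customer_asns={396531})

print(accept("192.0.2.0/24", customer_filter))   # the customer's own prefix
print(accept("104.20.0.0/21", customer_filter))  # a leaked more-specific
```

Because the filter is generated from what the customer has registered, a route the customer never owned is rejected no matter how attractive its prefix length looks.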

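Third, the RPKI framework that we implemented and deployed globally last year is designed to prevent this type of leak. It enables filtering on the origin network and on prefix size. The prefixes Cloudflare announces are signed for a maximum length of 20 bits. RPKI then indicates that any more-specific prefix should not be accepted, no matter what the path is. For this mechanism to take effect, a network needs to enable BGP Origin Validation. Many providers, such as AT&T, have already enabled it successfully in their networks.

If Verizon had used RPKI, they would have seen that the advertised routes were not valid, and their routers could have dropped them automatically.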
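Origin validation can be sketched as a comparison of each route against a list of signed authorizations (ROAs). The code below follows the three outcomes commonly used for BGP origin validation (valid, invalid, not-found). The `Roa` tuple and the `validate` function are illustrative names, and the single ROA shown is an assumption built from the /20 example above plus AS13335, Cloudflare's AS number, which the text here does not state.

```python
import ipaddress
from typing import NamedTuple


class Roa(NamedTuple):
    prefix: str
    max_length: int
    asn: int


def validate(route_prefix, origin_asn, roas):
    """Classify a route as 'valid', 'invalid' or 'not-found' against ROAs."""
    route = ipaddress.ip_network(route_prefix)
    covered = False
    for roa in roas:
        roa_net = ipaddress.ip_network(roa.prefix)
        # Only ROAs whose prefix covers the route are relevant.
        if route.version != roa_net.version or not route.subnet_of(roa_net):
            continue
        covered = True
        if roa.asn == origin_asn and route.prefixlen <= roa.max_length:
            return "valid"
    # Covered by a ROA but no match on origin and length: reject.
    return "invalid" if covered else "not-found"


# Assumed ROA: the /20 signed with a maximum length of 20.
roas = [Roa("104.20.0.0/20", 20, 13335)]

print(validate("104.20.0.0/20", 13335, roas))
print(validate("104.20.0.0/21", 13335, roas))
print(validate("198.51.100.0/24", 64500, roas))
```

The /21 fails even with the correct origin AS, because its length exceeds the signed maximum. That is why the path the leak took does not matter to the check.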

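Cloudflare encourages all network operators to deploy RPKI now!

All of the suggestions above are nicely condensed into MANRS (Mutually Agreed Norms for Routing Security).

How it was resolved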

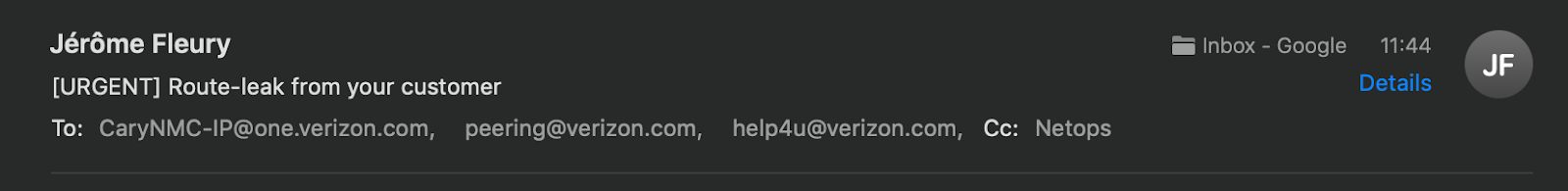

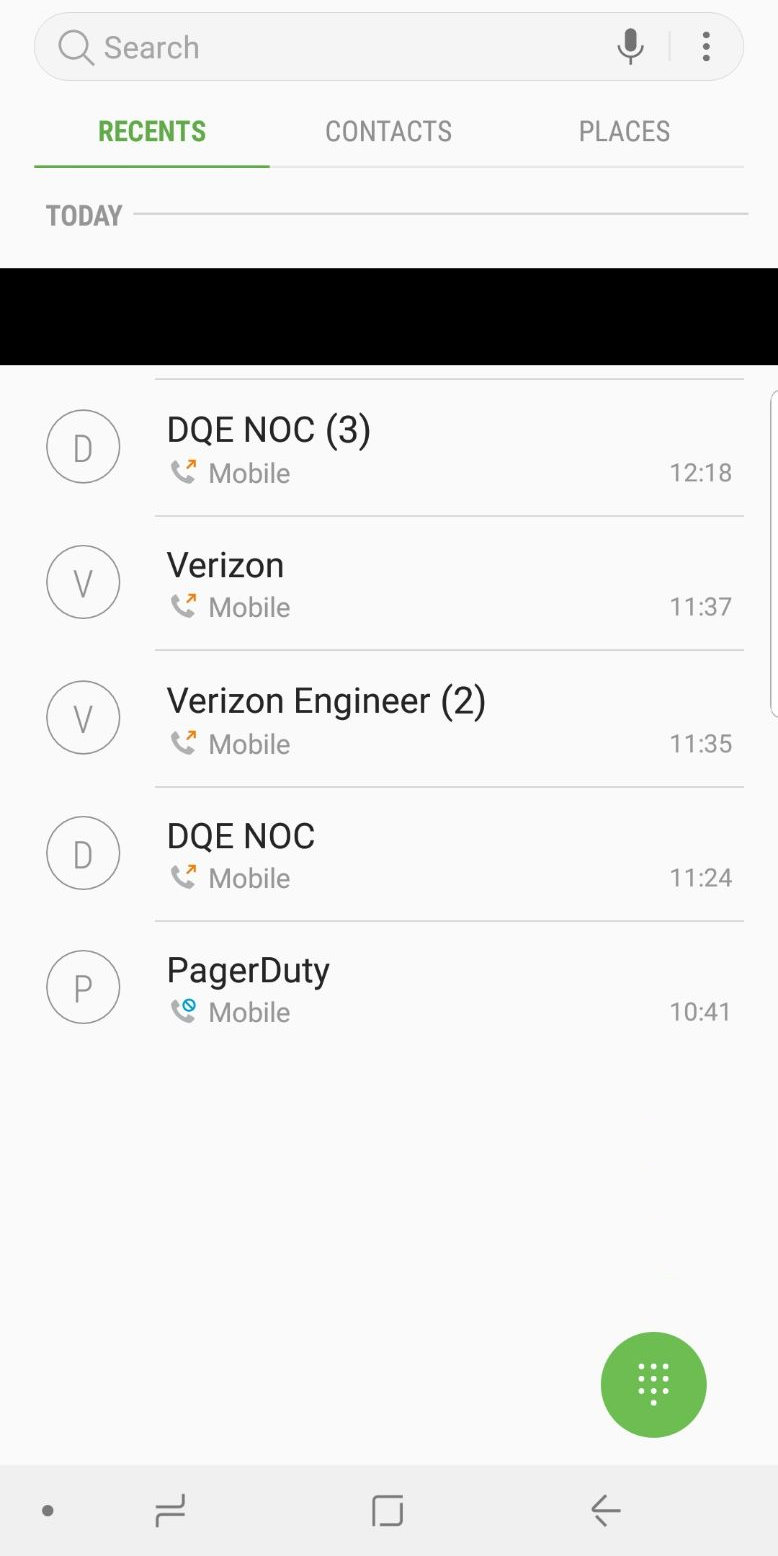

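The network team at Cloudflare reached out to the networks involved, AS33154 (DQE Communications) and AS701 (Verizon). Reaching them was not easy, possibly because the route leak happened in the early morning on the US East Coast.

One of our network engineers quickly made contact with DQE Communications, and after a short delay they put us in touch with someone who could fix the problem. DQE worked with us on the phone to stop advertising these “optimized” routes to Allegheny. We are grateful for their help. Once this was done, the Internet stabilized and things went back to normal.

Unfortunately, although we tried to reach Verizon by both e-mail and phone, at the time of writing (more than 8 hours after the incident) we have not heard back from them, nor do we know whether they are taking action to resolve the issue.

Cloudflare wishes that events like this never took place, but unfortunately the current state of the Internet does very little to prevent them. It is time for the industry to adopt better, more secure routing through systems like RPKI. We hope that major providers will follow the lead of Cloudflare, Amazon, and AT&T and start validating routes. In particular, we are keeping a close eye on Verizon and are still waiting for its reply.

Although the events that caused this outage were outside our control, we are sorry for the disruption. Our team cares deeply about our service, and we had engineers in the US, UK, Australia, and Singapore online to work on the problem within minutes of its being identified.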

How Verizon and a BGP Optimizer Knocked Large Parts of the Internet Offline Today

Massive route leak impacts major parts of the Internet, including Cloudflare

What happened?

Today at 10:30 UTC, the Internet had a small heart attack. A small company in Northern Pennsylvania became a preferred path of many Internet routes through Verizon (AS701), a major Internet transit provider. This was the equivalent of Waze routing an entire freeway down a neighborhood street, which left many websites on Cloudflare, and many other providers, unavailable from large parts of the Internet. This should never have happened because Verizon should never have forwarded those routes to the rest of the Internet. To understand why, read on.

We have blogged about these unfortunate events in the past, as they are not uncommon. This time, the damage was seen worldwide. What exacerbated the problem today was the involvement of a “BGP Optimizer” product from Noction. This product has a feature that splits up received IP prefixes into smaller, contributing parts (called more-specifics). For example, our own IPv4 route 104.20.0.0/20 was turned into 104.20.0.0/21 and 104.20.8.0/21. It’s as if the road sign directing traffic to “Pennsylvania” was replaced by two road signs, one for “Pittsburgh, PA” and Continue reading

How Verizon and a BGP Optimizer Affected Large Parts of the Internet Today

A massive route leak impacted many parts of the Internet, including Cloudflare

What happened?

Today at 10:30 UTC, the Internet had something of a mini heart attack. A small company in northern Pennsylvania became the preferred path for many Internet routes because of Verizon (AS701), a major Internet transit provider. It was a bit as if Waze had directed the traffic of an entire motorway down a small neighborhood street: many websites on Cloudflare, and many other providers, were unavailable from a large part of the network. This incident should never have happened, because Verizon should never have forwarded those routes to the rest of the Internet. To understand why, read on.

We have written a number of posts in the past about these unfortunate events, which are more common than one might think. This time, the effects could be seen around the world. Today, the problem was made worse by the involvement of a “BGP Optimizer” product from Noction. This product has a feature that splits received IP prefixes into smaller contributing parts (called “ Continue reading