Going Proactive on Security: Driving Encryption Adoption Intelligently

It's no secret that Cloudflare operates at a huge scale. Cloudflare provides security and performance to over 9 million websites all around the world, from small businesses and WordPress blogs to Fortune 500 companies. That means one in every 10 web requests goes through our network.

However, hidden behind the scenes, we offer support in using our platform to all our customers - whether they're on our free plan or on our Enterprise offering. This blog post dives into some of the technology that helps make this possible and how we're using it to drive encryption and build a better web.

Why Now?

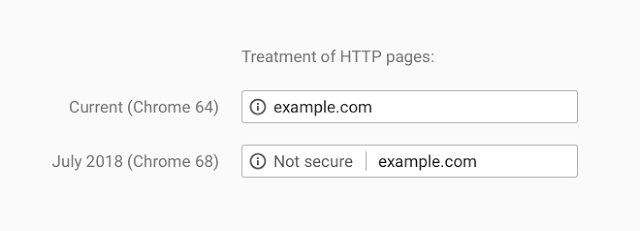

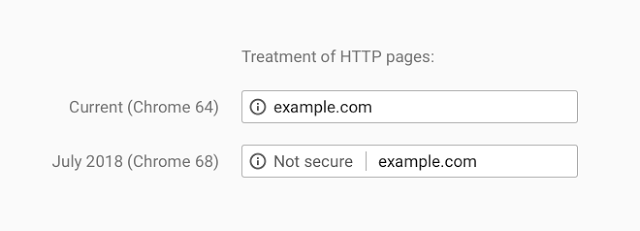

Recently web browser vendors have been working on extending encryption on the internet. Traditionally they would use positive indicators to mark encrypted traffic as secure; when traffic was served securely over HTTPS, a green padlock would indicate in your browser that this was the case. In moving to standardise encryption online, Google Chrome have been leading the charge in marking insecure page loads as "Not Secure". Today, this UI change has been pushed out to all Google Chrome users globally for all websites: any website loaded over HTTP will be marked as insecure.

That's not all though; Continue reading

Cloudflare Access: Now teams of any size can turn off their VPN

Using a VPN is painful. Logging in interrupts your workflow. You have to remember a separate set of credentials, which your administrator has to manage. The VPN slows you down when you're away from the office. Beyond just inconvenience, a VPN can pose a real security risk. A single infected device or malicious user can compromise your network once inside the perimeter.

In response, large enterprises have deployed expensive zero trust solutions. The name sounds counterintuitive - don’t we want to add trust to our network security? Zero trust refers to the default state of these tools. They trust no one; each request has to prove itself. This architecture, most notably demonstrated at Google with BeyondCorp, has allowed teams to start to migrate to a more secure method of access control.

However, users of zero trust tools still suffer from the same latency problems they endured with old-school VPNs. Even worse, the price tag puts these tools out of reach for most teams.

Here at Cloudflare, we shared those same frustrations with VPNs. After evaluating our options, we realized we could build a better zero trust solution by leveraging some of the unique capabilities we have here at Cloudflare:

Our Continue reading

Today, Chrome Takes Another Step Forward in Addressing the Design Flaw That is an Unencrypted Web

The following is a guest post by Troy Hunt, awarded Security expert, blogger, and Pluralsight author. He’s also the creator of the popular Have I been pwned?, the free aggregation service that helps the owners of over 5 billion accounts impacted by data breaches.

I still clearly remember my first foray onto the internet as a university student back in the mid 90's. It was a simpler online time back then, of course; we weren't doing our personal banking or our tax returns or handling our medical records so the whole premise of encrypting the transport layer wasn't exactly a high priority. In time, those services came along and so did the need to have some assurances about the confidentiality of the material we were sending around over other people's networks and computers. SSL as it was at the time was costly, but hey, banks and the like could absorb that given the nature of their businesses. However, at the time, there were all sorts of problems with the premise of serving traffic securely ranging from the cost of certs to the effort involved in obtaining and configuring them through to the performance hit on the Continue reading

I Wanna Go Fast – Load Balancing Dynamic Steering

Earlier this month we released Dynamic Steering for Load Balancing which allows you to have your Cloudflare load balancer direct traffic to the fastest pool for a given Cloudflare region or colo (Enterprise only).

To build this feature, we had to solve two key problems: 1) How to decide which pool of origins was the fastest and 2) How to distribute this decision to a growing group of 151 locations around the world.
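As a rough illustration of the first problem, here is a minimal sketch that picks the pool with the lowest average measured round-trip time for each region. The `PoolSample` shape, the region and pool names, and the use of a plain average are all assumptions made for illustration; this excerpt does not describe how Dynamic Steering actually measures or compares pools.

```typescript
// Hypothetical latency sample: one RTT measurement from a region to a pool.
interface PoolSample {
  region: string;
  pool: string;
  rttMs: number;
}

// For each region, return the name of the pool with the lowest mean RTT.
function fastestPoolByRegion(samples: PoolSample[]): Record<string, string> {
  // region -> pool -> running sum and count of RTT samples
  const stats: Record<string, Record<string, { sum: number; count: number }>> = {};
  for (const s of samples) {
    const pools = stats[s.region] || (stats[s.region] = {});
    const agg = pools[s.pool] || (pools[s.pool] = { sum: 0, count: 0 });
    agg.sum += s.rttMs;
    agg.count += 1;
  }

  const best: Record<string, string> = {};
  for (const region of Object.keys(stats)) {
    let bestMean = Infinity;
    for (const pool of Object.keys(stats[region])) {
      const { sum, count } = stats[region][pool];
      const mean = sum / count;
      if (mean < bestMean) {
        bestMean = mean;
        best[region] = pool;
      }
    }
  }
  return best;
}
```

The second problem, getting each decision out to every location, is a distribution question that a single function like this does not address.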

Distance, Approximate Latency, and a Better Way

As my math teacher taught me, the shortest distance between two points is a straight line. This is also typically true on the internet - the shorter the approximate distance between a user going through a Cloudflare location and a customer origin, the better the experience is for the user. Geography is one way to approximate speed and we included the Geo Steering function when we initially introduced the Cloudflare Load Balancer. It is powerful, but manual; it’s not the best way. A customer on Twitter said it best:

@Cloudflare #FeatureRequest why can’t your load balancers determine which server is closest to the user then direct them to that one?

I don't want to have configure 10+ regions manually. This Continue reading

Securing U.S. Democracy: Athenian Project Update

Last December, Cloudflare announced the Athenian Project to help protect U.S. state and local election websites from cyber attack.

Since then, the need to protect our electoral systems has become increasingly urgent. As described by Director of National Intelligence Dan Coats, the “digital infrastructure that serves this country is literally under attack.” Just last week, we learned new details about how state election systems were targeted for cyberattack during the 2016 election. The U.S. government’s indictment of twelve Russian military intelligence officers describes the scanning of state election-related websites for vulnerabilities and theft of personal information related to approximately 500,000 voters.

This direct attack on the U.S. election systems using common Internet vulnerabilities reinforces the need to ensure democratic institutions are protected from attack in the future. The Athenian Project is Cloudflare’s attempt to do our part to secure our democracy.

Engaging with Elections Officials

Since announcing the Athenian Project, we’ve talked to state, county, and municipal officials around the country about protecting their election and voter registration websites. Today, we’re proud to report that we have Athenian Project participants in 19 states, and are in talks with many more. We have also strategized with civil Continue reading

IPv6 in China

Photo by chuttersnap / Unsplash

At the end of 2017, Xinhua reported that there will be 200 Million IPv6 users inside Mainland China by the end of this year. Halfway into the year, we’re seeing a rapid growth in IPv6 users and traffic originating from Mainland China.

Why does this matter?

IPv6 is often referred to as the next generation of IP addressing. The reality is, IPv6 is what is needed for addressing today. Taking the largest mobile network in China today, China Mobile has over 900 Million mobile subscribers and over 670 Million 4G/LTE subscribers. To be able to provide service to their users, they need to provide an IP address to each subscriber’s device. This means close to a billion IP addresses would be required, which is far more than what is available in IPv4, especially as the available IP address pools have been exhausted.

What is the solution?

To solve the addressability of clients, many networks, especially mobile networks, will use Carrier Grade NAT (CGN). This allows thousands, possibly up to hundreds of thousands, of devices to be shared behind a single internet IP address. The CGN equipment can be very expensive to scale and further, given the Continue reading

Proxying traffic to Report URI with Cloudflare Workers

The following is a guest post by Scott Helme, a Security Researcher, international speaker, and blogger. He's also the founder of the popular securityheaders.com and report-uri.com, free tools to help people deploy better security.

With the continued growth of Report URI we're seeing a larger and larger variety of sites use the service. With that diversity comes additional requirements that need to be met, some of them simple and some of them less so. Here's a quick look at those challenges and how they can be solved easily with a Cloudflare Worker. Continue reading

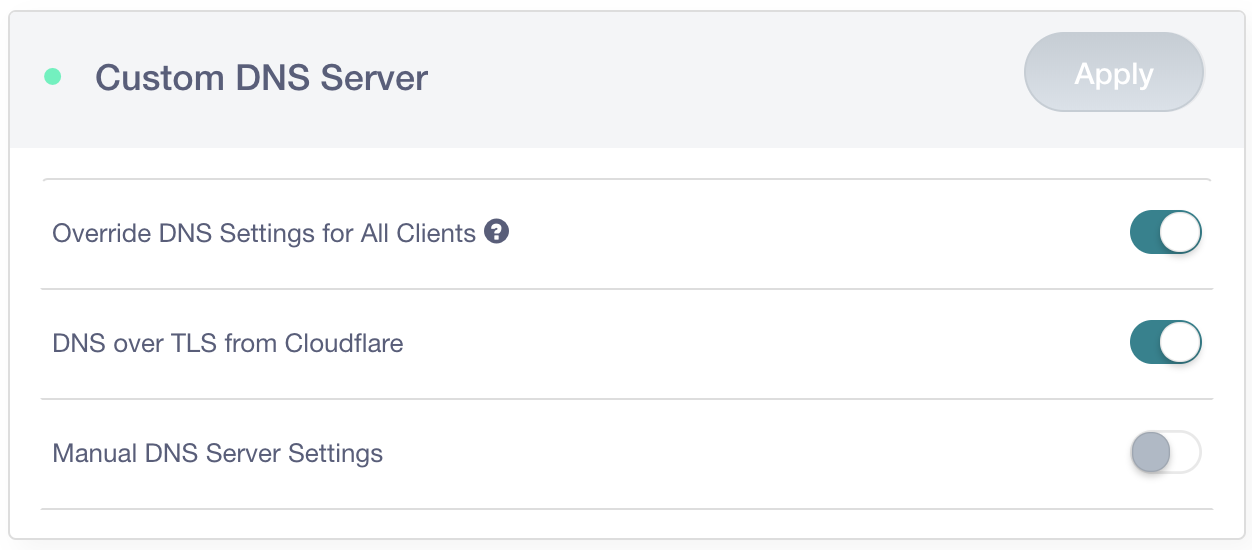

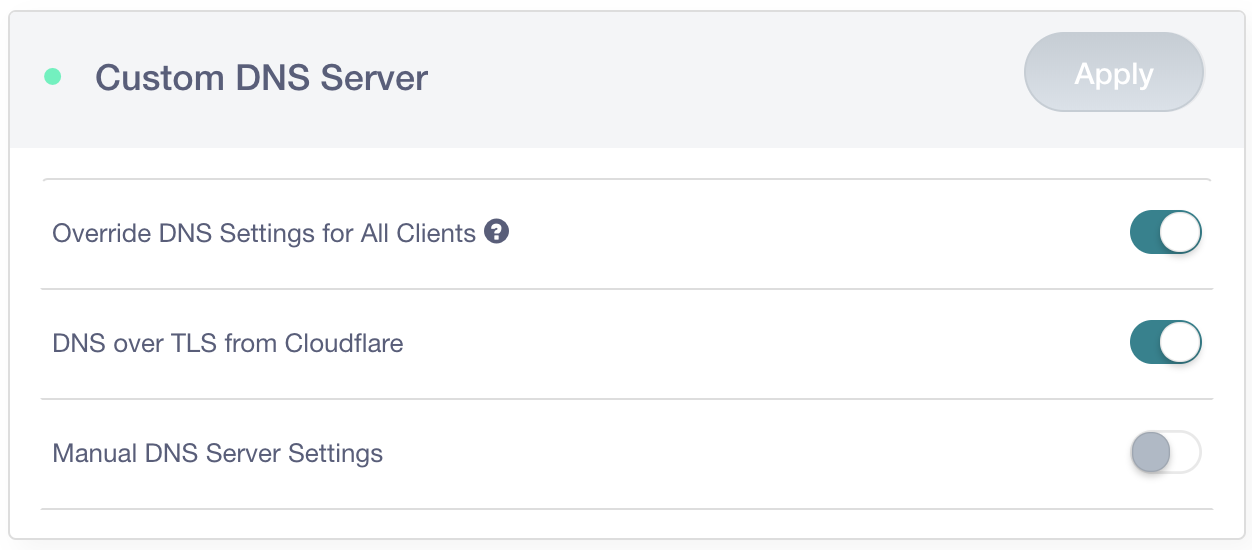

DNS-Over-TLS Built-In & Enforced – 1.1.1.1 and the GL.iNet GL-AR750S

GL.iNet GL-AR750S in black, same form-factor as the prior white GL.iNet GL-AR750. Credit card for comparison.

Back in April, I wrote about how it was possible to modify a router to encrypt DNS queries over TLS using Cloudflare's 1.1.1.1 DNS Resolver. For this, I used the GL.iNet GL-AR750 because it was pre-installed with OpenWRT (LEDE). The folks at GL.iNet read that blog post and decided to bake DNS-Over-TLS support into their new router using the 1.1.1.1 resolver; they sent me one to take a look at before it's available for pre-release. Their new router can also be configured to force DNS traffic to be encrypted before leaving your local network, which is particularly useful for any IoT or mobile device with hard-coded DNS settings that would ordinarily ignore your router's DNS settings and send DNS queries in plain-text.

In my previous blog post I discussed how DNS was often the weakest link in the chain when it came to browsing privacy; whilst HTTP traffic is increasingly encrypted, this is seldom the case for DNS traffic. This makes it relatively trivial for an intermediary to work out what site you're sending Continue reading

Introducing Proudflare, Cloudflare’s LGBTQIA+ Group

With Pride month now in our collective rearview mirror for 2018, I wanted to share what some of us have been up to at Cloudflare. We're so proud that, in the last 8 months, we've formed a LGBTQIA+ Employee Resource Group (ERG) called Proudflare. We've launched chapters and monthly activities in each of our primary locations: San Francisco, London, Singapore, and Austin. This month, we came out in force! We transformed our company's social profiles, wrapped our HQ building in rainbow window decals, highlighted several non-profits we support, and threw a heck of an inaugural Pride Celebration.

We’re a very young group — just 8 months old — but we have big plans. Check out some of our activities and future plans, follow us on social media, and consider starting an ERG at your company too.

The History of Proudflare

On my first day at Cloudflare in October, 2017, I logged into Hipchat and searched LGBTQ. Fortunately for me, there was a "LGBT at Cloudflare" chat room already created, and I started establishing connections right away. I found that there had been a couple of informal group outings, but there was no regular activity, sharing of resources, nor an official Continue reading

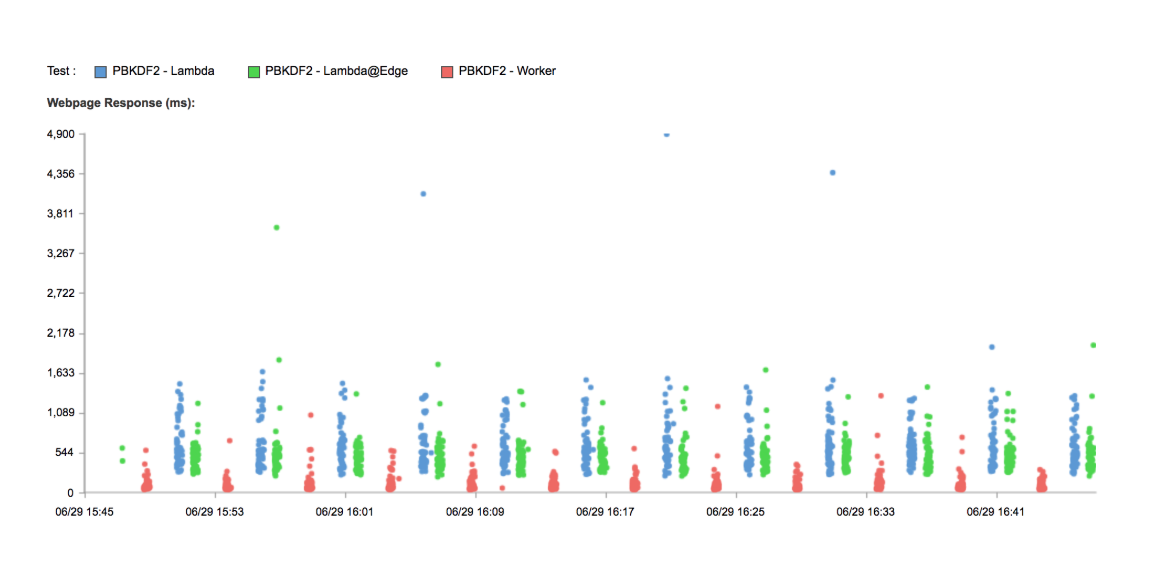

Comparing Serverless Performance for CPU Bound Tasks

This post is a part of an ongoing series comparing the performance of Cloudflare Workers with other Serverless providers. In our past tests we intentionally chose a workload which imposes virtually no CPU load (returning the current time). For these tests, let's look at something which pushes hardware to the limit: cryptography.

tl;dr Cloudflare Workers are seven times faster than a default Lambda function for workloads which push the CPU. Workers are six times faster than Lambda@Edge, tested globally.

Slow Crypto

The PBKDF2 algorithm is designed to be slow to compute. It's used to hash passwords; its slowness makes it harder for a password cracker to do their job. Its extreme CPU usage also makes it a good benchmark for the CPU performance of a service like Lambda or Cloudflare Workers.
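To make the "slow by design" point concrete, here is a minimal sketch using Node's built-in `crypto.pbkdf2Sync`. The digest (SHA-256), the 32-byte key length, the 100,000 iteration count, and the function names are assumptions chosen for illustration, not the parameters used in the benchmark described in this post.

```typescript
import { pbkdf2Sync, randomBytes } from "crypto";

// Derive a 32-byte key from a password with PBKDF2-HMAC-SHA256 and return it as hex.
// The iteration count is the knob that makes the computation deliberately slow.
function derivePasswordHash(password: string, salt: string, iterations: number): string {
  return pbkdf2Sync(password, salt, iterations, 32, "sha256").toString("hex");
}

// Time a single derivation with a fresh random salt.
function timeDerivationMs(iterations: number): number {
  const salt = randomBytes(16).toString("hex");
  const start = Date.now();
  derivePasswordHash("correct horse battery staple", salt, iterations);
  return Date.now() - start;
}

console.log("100000 iterations took " + timeDerivationMs(100000) + " ms");
```

Because the work scales with the iteration count and is almost entirely CPU time, the elapsed time is a reasonable proxy for how much CPU a platform gives each invocation.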

We've written a test based on the Node Crypto (Lambda) and the WebCrypto (Workers) APIs. Our Lambda is deployed with the default 128MB of memory behind an API Gateway in us-east-1; our Worker is, as always, deployed around the world. I also have our function running in a Lambda@Edge deployment to compare that performance as well. Again, we're using Catchpoint to test from hundreds of locations around Continue reading

How To Minikube + Cloudflare

The following is a guest blog post by Nathan Franzen, Software Engineer at StackPointCloud. StackPointCloud is the creator of Stackpoint.io, the leading multi-cloud management platform for cloud native workloads. They are the developers of the Cloudflare Ingress Controller for Kubernetes.

Deploying Applications on Minikube with Argo Tunnels

This article assumes basic knowledge of Kubernetes. If you're not familiar with Kubernetes, visit https://kubernetes.io/docs/tutorials/kubernetes-basics/ to learn the basics.

Minikube is a tool which allows you to run a Kubernetes cluster locally. It’s not only a great way to experiment with Kubernetes, but also a great way to try out deploying services using a reverse tunnel.

At Cloudflare, we've created a product called Argo Tunnel which allows you to host services through a tunnel using Cloudflare as your edge. Tunnels provide a way to expose your services to the internet by creating a connection to Cloudflare's edge and routing your traffic over it. Since your service is creating its own outbound connection to the edge, you don’t have to open ports, configure a firewall, or even have a public IP address for your service. All traffic flows through Cloudflare, blocking attacks and intrusion attempts before they ever make it to Continue reading

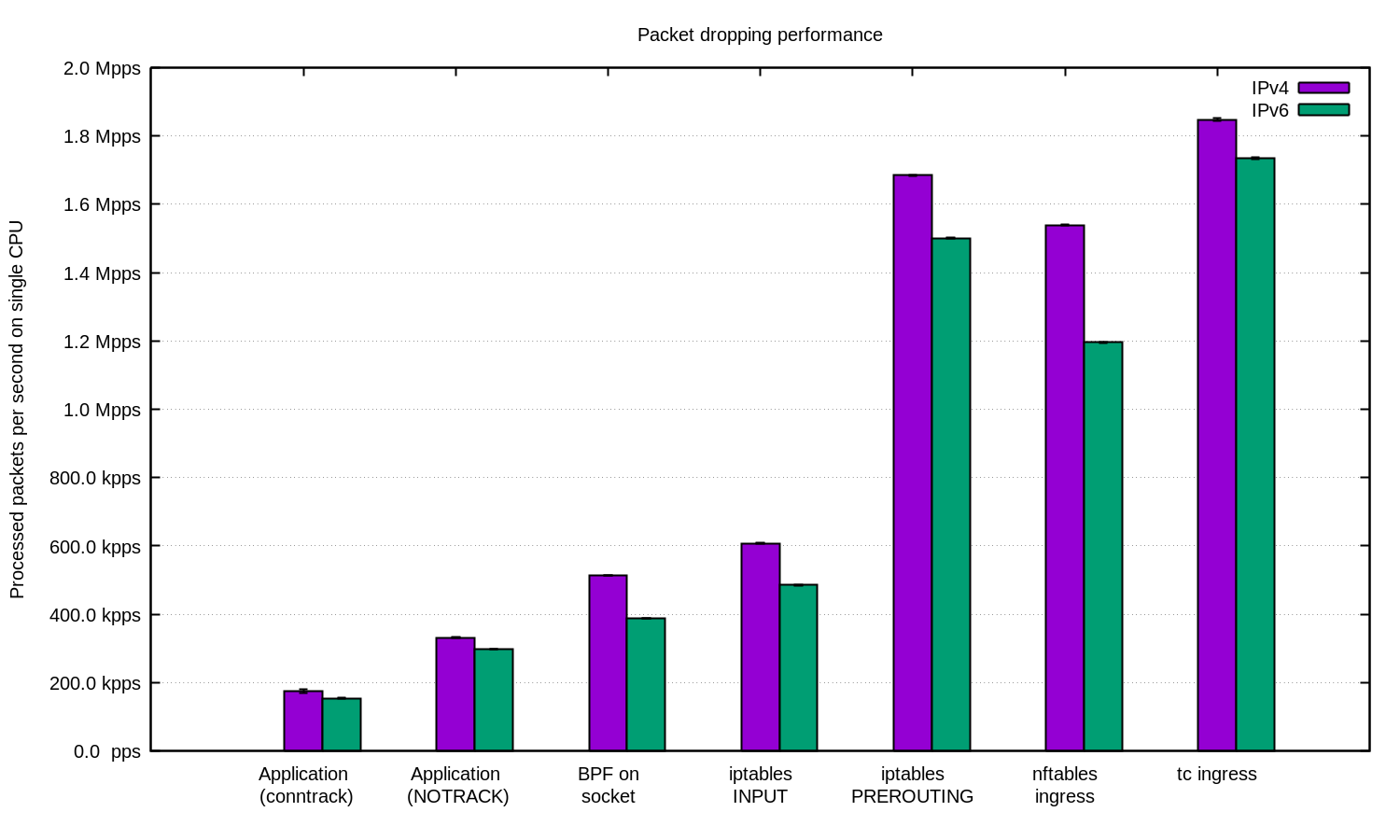

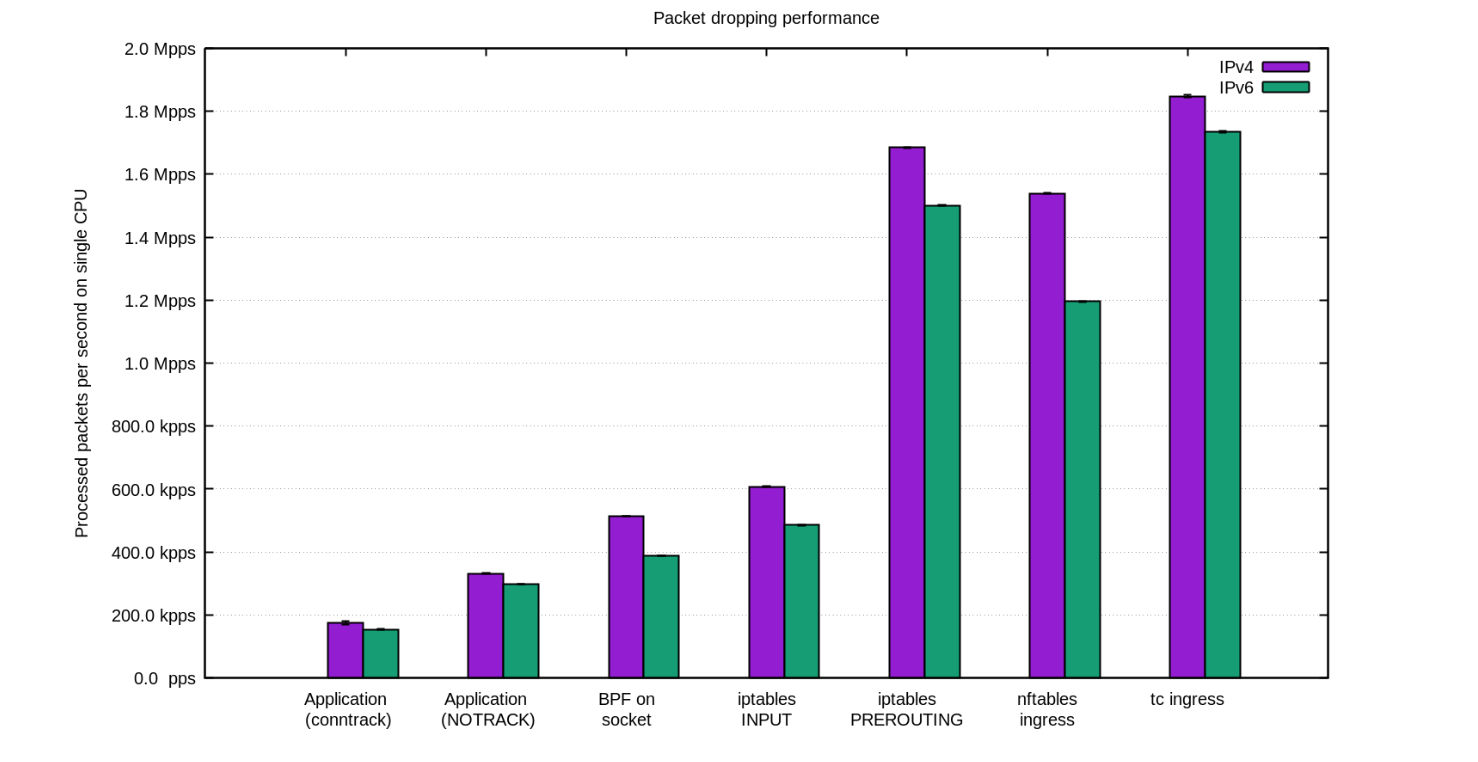

How to drop 10 million packets per second

Internally our DDoS mitigation team is sometimes called "the packet droppers". When other teams build exciting products to do smart things with the traffic that passes through our network, we take joy in discovering novel ways of discarding it.

CC BY-SA 2.0 image by Brian Evans

Being able to quickly discard packets is very important to withstand DDoS attacks.

Dropping packets hitting our servers, as simple as it sounds, can be done on multiple layers. Each technique has its advantages and limitations. In this blog post we'll review all the techniques we tried thus far.

Test bench

To illustrate the relative performance of the methods we'll show some numbers. The benchmarks are synthetic, so take the numbers with a grain of salt. We'll use one of our Intel servers, with a 10Gbps network card. The hardware details aren't too important, since the tests are prepared to show the operating system, not hardware, limitations.
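For context on the 10Gbps card: the theoretical packet rate of an Ethernet link follows from how many bytes each frame occupies on the wire. A minimal sketch of that arithmetic, assuming minimum-size 64-byte Ethernet frames plus the standard 8-byte preamble and 12-byte inter-frame gap (the function and constant names are illustrative):

```typescript
// Bytes each Ethernet frame occupies on the wire beyond the frame itself:
// 8 bytes of preamble/start-of-frame delimiter plus a 12-byte inter-frame gap.
const WIRE_OVERHEAD_BYTES = 8 + 12;

// Maximum packets per second a link can carry for a given frame size.
function lineRatePps(linkBitsPerSecond: number, frameBytes: number): number {
  const bitsPerFrame = (frameBytes + WIRE_OVERHEAD_BYTES) * 8;
  return linkBitsPerSecond / bitsPerFrame;
}

// 10Gbps link carrying minimum-size (64-byte) frames.
const mpps = lineRatePps(10e9, 64) / 1e6;
console.log(mpps.toFixed(2) + " Mpps"); // prints "14.88 Mpps"
```

A 10Gbps link therefore tops out at roughly 14.88 Mpps for the smallest frames, so a test stream of about 14Mpps is close to line rate.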

Our testing setup is prepared as follows:

- We transmit a large number of tiny UDP packets, reaching 14Mpps (million packets per second).

- This traffic is directed towards a single CPU on a target server.

- We measure the number of packets handled by the kernel on that Continue reading

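How to drop 10 million packets per second

This is a Korean translation of a prior post by Marek Majkowski.

Internally, the DDoS mitigation team is often called "the packet droppers". While other teams get excited doing smart things with the traffic that passes through our network, we take joy in finding different ways to discard it.

CC BY-SA 2.0 image by Brian Evans

The ability to drop packets quickly is very important for withstanding DDoS attacks.

It may sound simple, but packets arriving at a server can be dropped at several layers. Each technique has its advantages and limitations. In this blog post we'll go over all the techniques we have tried so far.

Test bench

To illustrate the relative performance of each technique, we'll start with some numbers. The benchmarks are synthetic, so they may differ somewhat from real-world figures. For the tests we'll use an Intel server with a 10Gbps network card. Since the tests are meant to show the limits of the operating system rather than the hardware, we won't go into the hardware details.

The test setup is as follows:

- Transmit a large number of small UDP packets, reaching 14Mpps (Mpps = million packets per second)

- Direct this traffic to a single CPU on the test server

- Measure the number of packets handled by the kernel on that single CPU

The tests aren't trying to maximize the speed of a userspace application or packet throughput; they are meant to identify the kernel's bottlenecks.

The synthetic traffic is prepared to put maximum load on conntrack: it uses random source IP and port fields. In tcpdump it looks as follows Continue reading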

Simple DDNS solution supporting IPv6 and your own domains using Cloudflare and some Python

I think it is safe to say that most IT professionals have some kind of lab environment, and many of us have some kind of lab at home. Sometimes it is necessary to use resources from that lab outside of the house, which means they need to be exposed to the Internet and you […]

Debugging Serverless Apps

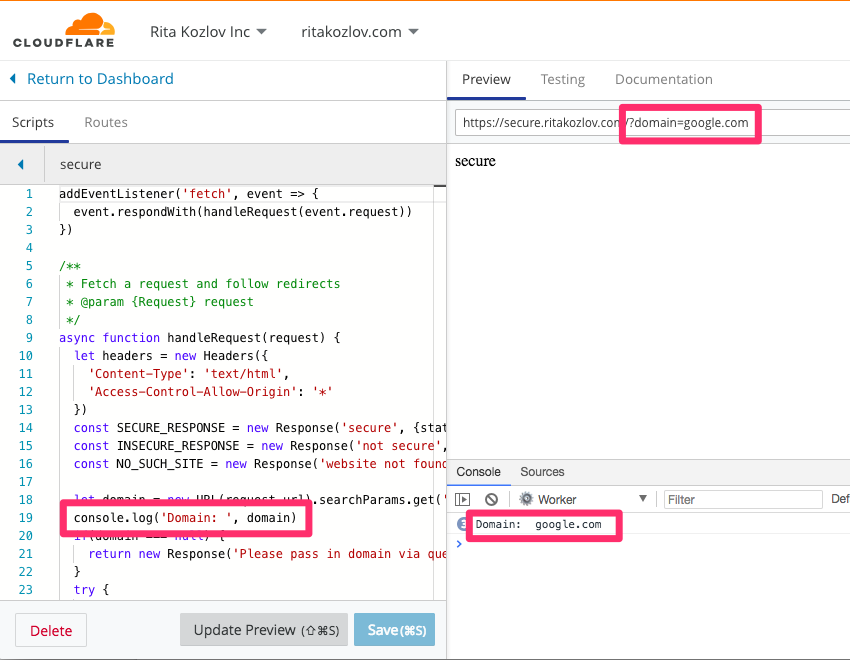

The Workers team have already done an amazing job of creating a functional, familiar edit and debug tooling experience in the Workers IDE. It's Chrome Developer Tools, fully integrated into Workers.

console.log in your Worker goes straight to the console, just as if you were debugging locally! Furthermore, errors and even log lines come complete with call-site info, so you click and navigate straight to the relevant line.

In this blog post I’m going to show a small and powerful technique I use to make debugging serverless apps simple and quick.

There is a comprehensive guide to common debugging approaches and I'm going to focus on returning debug information in a header. This is a great tip and one that I use to capture debug information when I'm using curl or Postman, or integration tests. It was a little finicky to get right the first time, so let me save you some trouble.

If you've followed part 1 or part 2 of my Workers series, you'll know I'm using Typescript, but the approach would equally apply to Javascript. In the rest of this example, I’ll be using the routing framework I created in part 2.
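To give a feel for the idea of returning debug information in a header, here is a minimal sketch. It is not the code from this post or from the routing framework in part 2: the header names `x-debug` and `x-debug-info`, the `DebugLog` class, and `withDebugHeader` are all hypothetical, and headers are modeled as plain objects so the logic can be run outside a Worker.

```typescript
// Hypothetical header names, chosen for illustration only.
const DEBUG_REQUEST_HEADER = "x-debug";
const DEBUG_RESPONSE_HEADER = "x-debug-info";

// Collects log lines during a request so they can be returned to the caller.
class DebugLog {
  private lines: string[] = [];

  log(message: string): void {
    this.lines.push(message);
  }

  // JSON.stringify escapes newlines, which keeps the header value on one line.
  toHeaderValue(): string {
    return JSON.stringify(this.lines);
  }
}

// Returns a copy of the response headers, adding the debug header only
// when the caller explicitly asked for it on the request.
function withDebugHeader(
  requestHeaders: Record<string, string>,
  responseHeaders: Record<string, string>,
  debug: DebugLog
): Record<string, string> {
  const out = { ...responseHeaders };
  if (requestHeaders[DEBUG_REQUEST_HEADER] === "true") {
    out[DEBUG_RESPONSE_HEADER] = debug.toHeaderValue();
  }
  return out;
}
```

In a Worker, the same check would read from `request.headers.get(...)` and the result would be passed as the `headers` option of `new Response(...)`. Gating on a request header keeps debug output away from ordinary visitors while still making it visible from curl or Postman.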

Requesting Debug Info

Too Old To Rocket Load, Too Young To Die

Rocket Loader is in the news again. One of Cloudflare's earliest web performance products has been re-engineered for contemporary browsers and Web standards.

No longer a beta product, Rocket Loader controls the load and execution of your page JavaScript, ensuring useful and meaningful page content is unblocked and displayed sooner.

For a high-level discussion of Rocket Loader aims, please refer to our sister post, We have lift off - Rocket Loader GA is mobile!

Below, we offer a lower-level outline of how Rocket Loader actually achieves its goals.

Prehistory

Early humans looked upon Netscape 2.0, with its new ability to script HTML using LiveScript, and <BLINK>ed to ensure themselves they weren’t dreaming. They decided to use this technology, soon to be re-christened JavaScript (a story told often and elsewhere), for everything they didn’t know they needed: form input validation, image substitution, frameset manipulation, popup windows, and more. The sole requirement was a few interpreted commands enclosed in a <script> tag. The possibilities were endless.

Soon, the introduction of the src attribute allowed them to import a file full of JS into their pages. Little need to fiddle with the markup, when all the requisite JS for the page Continue reading

Serverless Performance: Cloudflare Workers, Lambda and Lambda@Edge

A few months ago we released a new way for people to run serverless Javascript called Cloudflare Workers. We believe Workers is the fastest way to execute serverless functions.

If it is truly the fastest, and it is comparable in price, it should be how every team deploys all of their serverless infrastructure. So I set out to see just how fast Worker execution is and prove it.

tl;dr Workers is much faster than Lambda and Lambda@Edge:

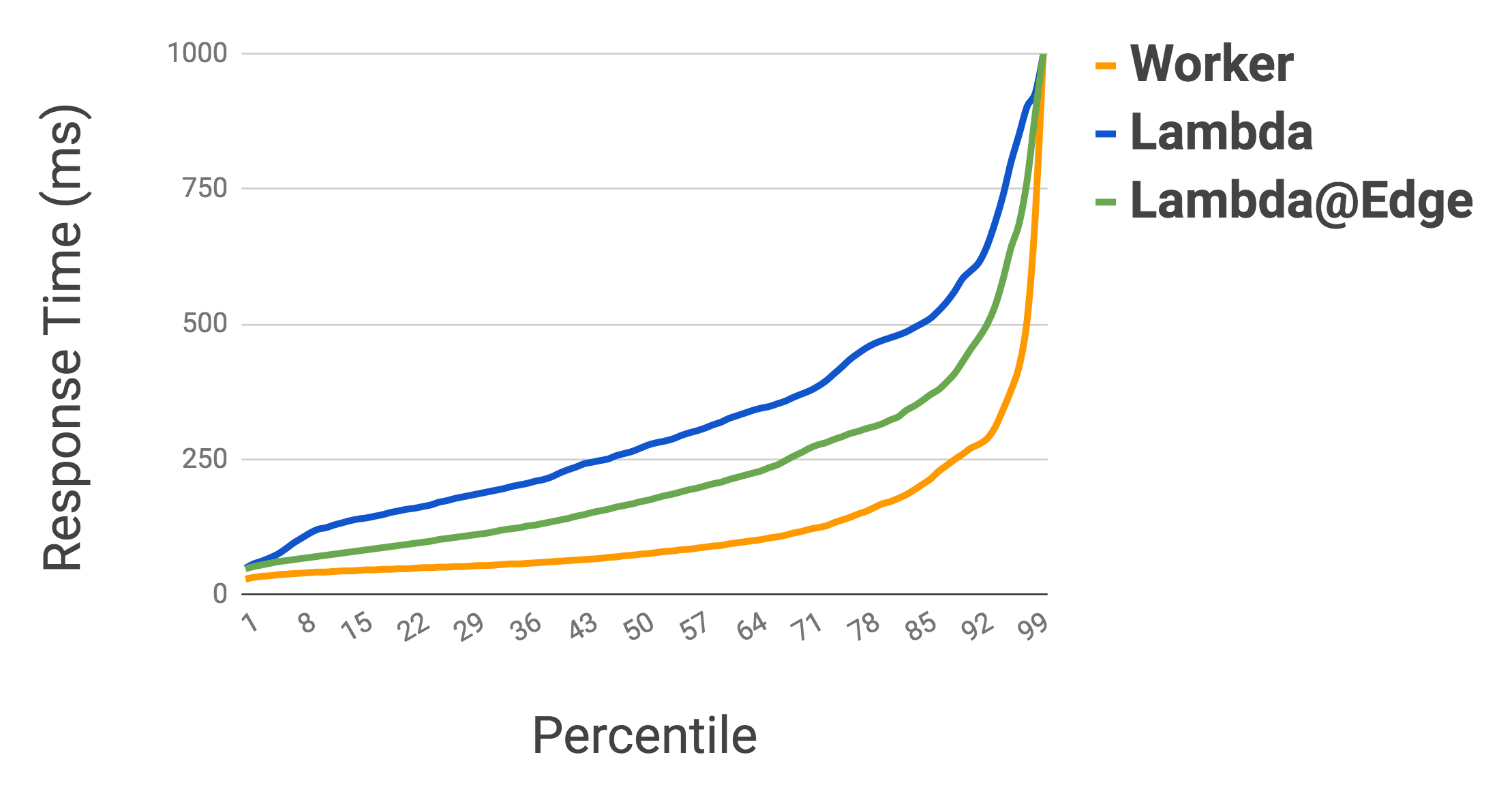

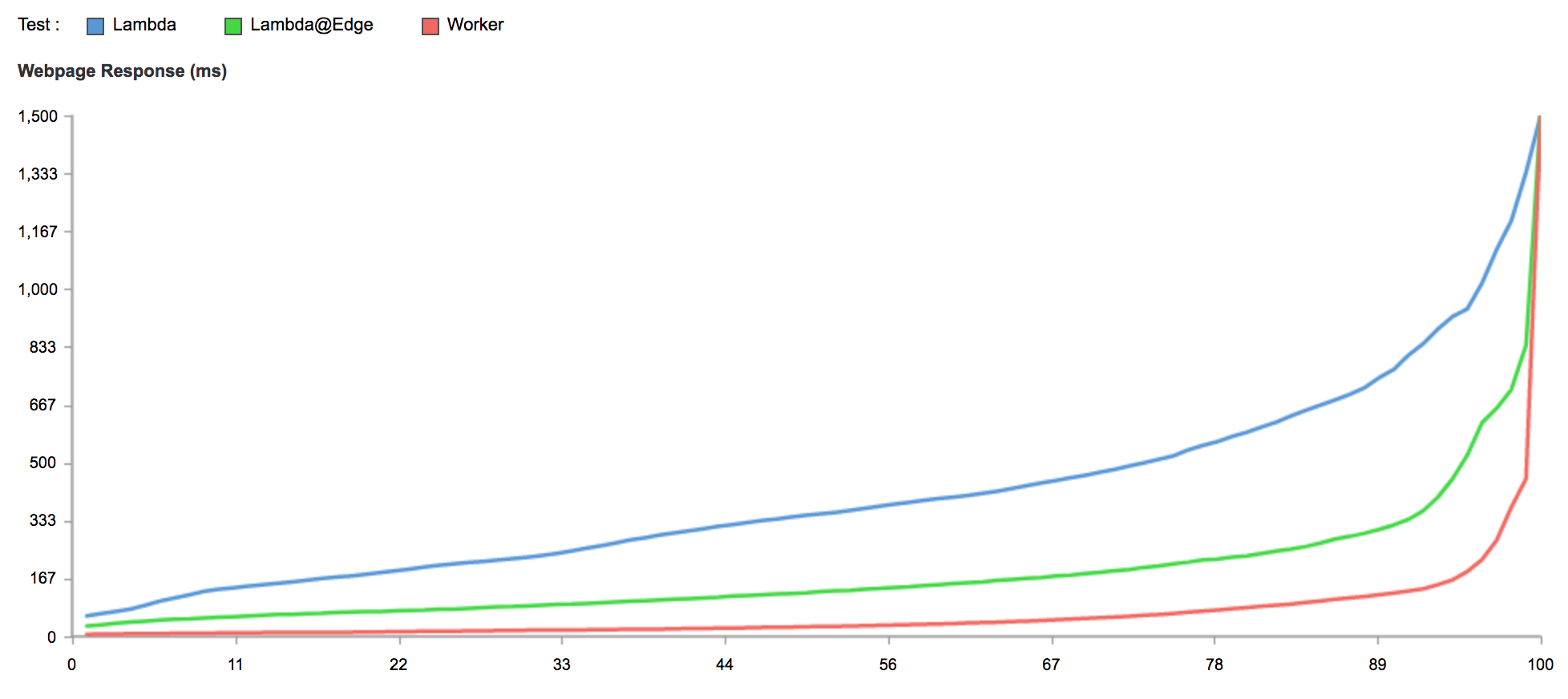

This is a chart showing what percentage of requests to each service were faster than a given number of ms. It is based on thousands of tests from all around the world, evenly sampled over the past 12 hours. At the 95th percentile, Workers is 441% faster than a Lambda function, and 192% faster than Lambda@Edge.

The functions being tested simply return the current time. All three scripts are available on Github. The testing is being done by a service called Catchpoint which has hundreds of testing locations around the world.
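For readers who want to reproduce this kind of comparison on their own measurements, here is a minimal sketch of the two calculations involved. It uses the nearest-rank definition of a percentile, and it assumes that "N% faster" means the extra time the slower service takes relative to the faster one; neither choice is confirmed by this post, and the function names are illustrative.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p percent
// of all samples are less than or equal to it.
function percentile(samplesMs: number[], p: number): number {
  if (samplesMs.length === 0) throw new Error("no samples");
  const sorted = samplesMs.slice().sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1];
}

// Assumed meaning of "N% faster": how much longer the slower service takes,
// expressed as a percentage of the faster service's time.
function percentFaster(fastMs: number, slowMs: number): number {
  return ((slowMs - fastMs) / fastMs) * 100;
}
```

With response times collected per service, `percentFaster(percentile(a, 95), percentile(b, 95))` gives a figure comparable in form to the ones quoted above.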

The Gold Coast

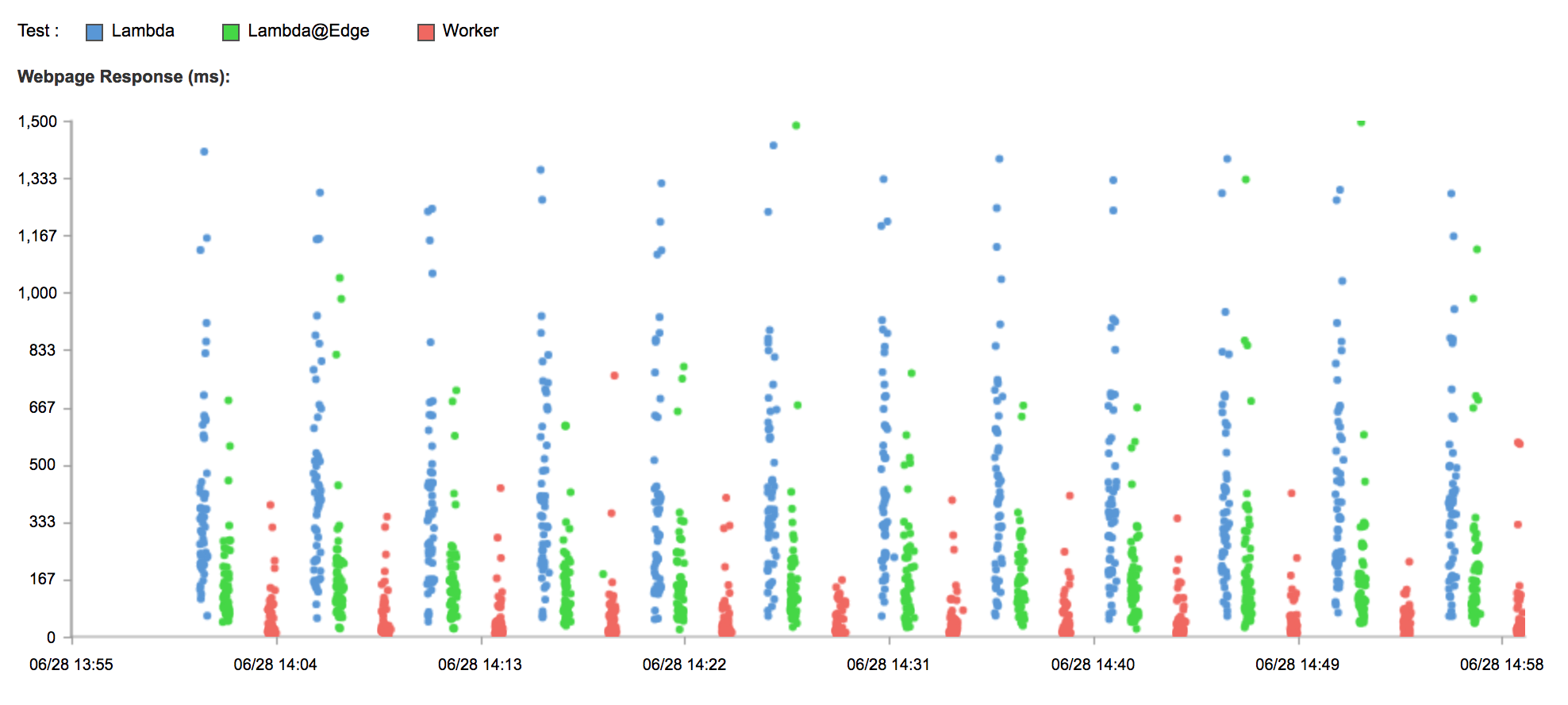

This is every test run in the last hour, with results over 1500ms filtered out:

You can immediately see that Worker results are tightly clustered around the x-axis, Continue reading

Cryptocurrency API Gateway using Typescript+Workers

If you followed part one, I have an environment setup where I can write Typescript with tests and deploy to the Cloudflare Edge with npm run upload. For this post, I want to take one of the Worker Recipes further.

I'm going to build a mini HTTP request routing and handling framework, then use it to build a gateway to multiple cryptocurrency API providers. My point here is that in a single file, with no dependencies, you can quickly build pretty sophisticated logic and deploy fast and easily to the Edge. Furthermore, using modern Typescript with async/await and the rich type structure, you also write clean, async code.

OK, here we go...

My API will look like this:

| Verb | Path | Description |
|---|---|---|
| GET | /api/ping | Check the Worker is up |
| GET | /api/all/spot/:symbol | Aggregate the responses from all our configured gateways |
| GET | /api/race/spot/:symbol | Return the response of the provider who responds fastest |
| GET | /api/direct/:exchange/spot/:symbol | Pass through the request to the gateway. E.g. gdax or bitfinex |
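The paths above use `:name` placeholders for variable segments. As a minimal sketch of how such patterns can be matched against an incoming path, assuming a simple segment-by-segment comparison (`matchRoute` and `RouteParams` are hypothetical names, not part of the framework defined in this post, and `btc-usd` is a made-up symbol):

```typescript
// Path parameters captured from a match, e.g. { exchange: "gdax", symbol: "btc-usd" }.
type RouteParams = Record<string, string>;

// Match a concrete path against a pattern such as "/api/direct/:exchange/spot/:symbol".
// Returns the captured parameters, or null when the path does not match.
function matchRoute(pattern: string, path: string): RouteParams | null {
  const patternParts = pattern.split("/").filter(Boolean);
  const pathParts = path.split("/").filter(Boolean);
  if (patternParts.length !== pathParts.length) return null;

  const params: RouteParams = {};
  for (let i = 0; i < patternParts.length; i++) {
    const expected = patternParts[i];
    const actual = pathParts[i];
    if (expected.charAt(0) === ":") {
      // Placeholder segment: capture whatever the path has in this position.
      params[expected.slice(1)] = actual;
    } else if (expected !== actual) {
      // Literal segment: must match exactly.
      return null;
    }
  }
  return params;
}

console.log(matchRoute("/api/direct/:exchange/spot/:symbol", "/api/direct/gdax/spot/btc-usd"));
```

A router can then try each registered pattern in order and dispatch to the handler of the first one that returns a non-null result.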

The Framework

OK, this is Typescript, I get interfaces and I'm going to use them. Here's my ultra-mini-http-routing framework definition:

export interface IRouter {
  route(req: RequestContextBase): IRouteHandler;
}

/**
 * A route
 */
export interface IRoute Continue reading

Delivering a Serverless API in 10 minutes using Workers

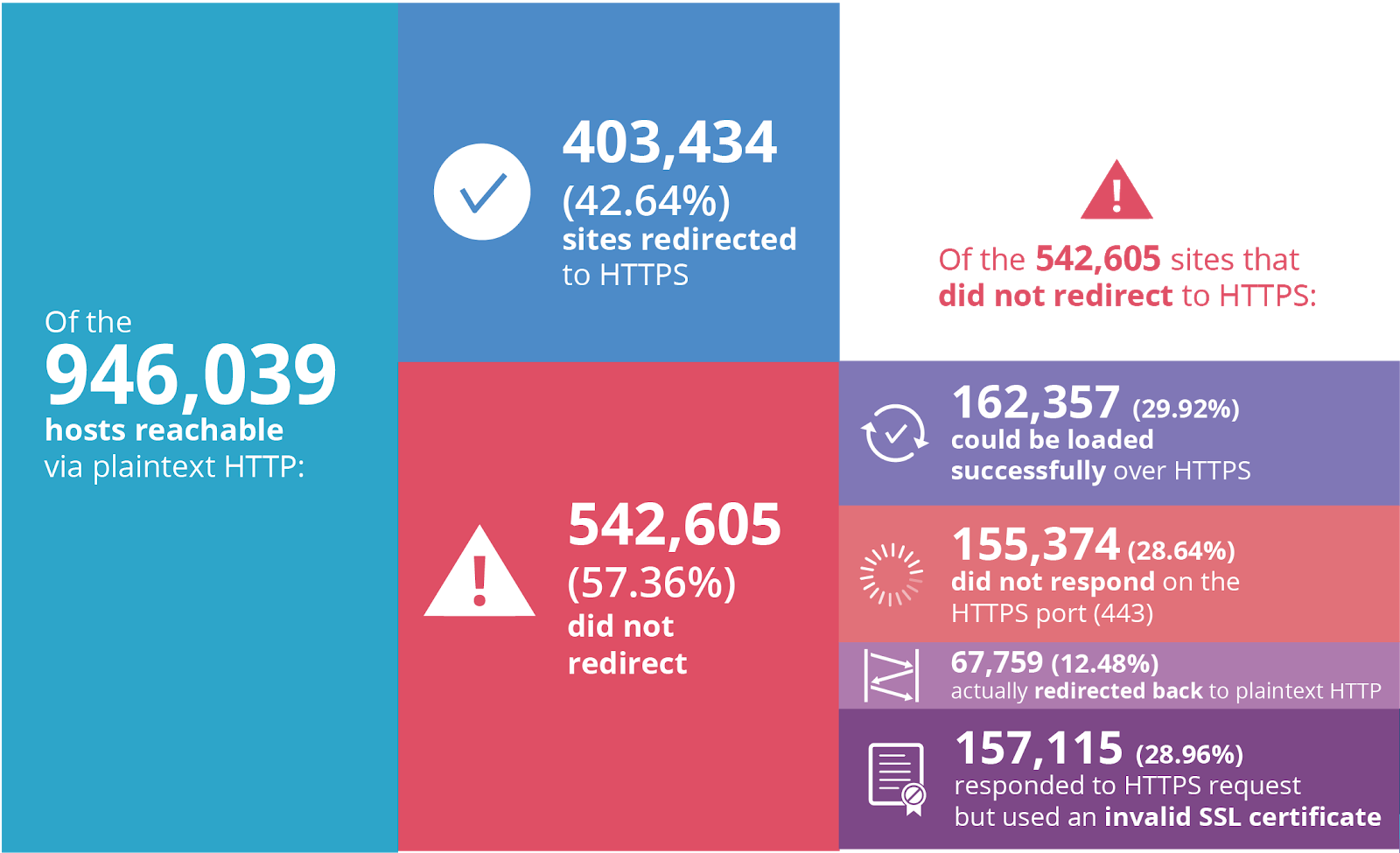

In preparation for Chrome’s Not Secure flag, which will update the indicator to show Not Secure when a site is not accessed over https, we wanted people to be able to test whether their site would pass. If you read our previous blog post about the existing misconceptions around using https, and preparing your site, you may have noticed a small fiddle, allowing you to test which sites will be deemed “Secure”. In preparation for the blog post itself, one of our PMs approached me asking for help making this fiddle come to life. It was a simple ask: we need an endpoint which runs logic to see if a given domain will automatically redirect to https.

The logic and requirements turned out to be very simple:

- Make a serverless API endpoint
- Input: domain (e.g. example.com)
- Output: “secure” / “not secure”
- Logic:
  - if http://example.com redirects to https://example.com, return “secure”
  - else, return “not secure”

One additional requirement here was that we needed to follow redirects all the way; sites often redirect to http://www.example.com first, and only then redirect to https. That is an additional line of code I was prepared to handle.
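The logic, including following redirects all the way, can be sketched as below. This is not the endpoint's actual code: `classifyDomain`, `RedirectLookup`, and the hop limit of 10 are assumptions, redirect targets are assumed to be absolute URLs, and the network is modeled as an injected lookup function so the logic can be run without making requests.

```typescript
// Resolves one hop: given a URL, return the URL it redirects to, or undefined
// when the response is not a redirect. In a Worker this could be backed by
// fetch(url, { redirect: "manual" }) and the Location response header.
type RedirectLookup = (url: string) => string | undefined;

// Follow redirects starting from http://<domain> until they stop (or maxHops
// is reached) and report whether the chain ends on an https URL.
function classifyDomain(
  domain: string,
  lookup: RedirectLookup,
  maxHops: number = 10
): "secure" | "not secure" {
  let url = "http://" + domain;
  for (let hop = 0; hop < maxHops; hop++) {
    const next = lookup(url);
    if (next === undefined) break;
    url = next;
  }
  return url.indexOf("https://") === 0 ? "secure" : "not secure";
}
```

The hop limit guards against redirect loops; a site that bounces between HTTP URLs forever is simply reported as “not secure”.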

I’ve done some Continue reading

T-25 days until Chrome starts flagging HTTP sites as “Not Secure”

Less than one month from today, on July 23, Google will start prominently labeling any site loaded in Chrome without HTTPS as "Not Secure".

When we wrote about Google’s plans back in February, the percent of sites loaded over HTTPS clocked in at 69.7%. Just one year prior to that only 52.5% of sites were loaded using SSL/TLS—the encryption protocol behind HTTPS—so tremendous progress has been made.

Unfortunately, quite a few Continue reading