Project Jengo Celebrates One Year Anniversary by Releasing Prior Art

Today marks the one year anniversary of Project Jengo, a crowdsourced search for prior art that Cloudflare created and funded in response to the actions of Blackbird Technologies, a notorious patent troll. Blackbird has filed more than one hundred lawsuits asserting dormant patents without engaging in any innovative or commercial activities of its own. In homage to the typical anniversary cliché, we are taking this opportunity to reflect on the last year and confirm that we’re still going strong.

Project Jengo arose from a sense of immense frustration over the way that patent trolls purchase over-broad patents and use aggressive litigation tactics to elicit painful settlements from companies. These trolls know that the system is slanted in their favor, and we wanted to change that. Patent lawsuits take years to reach trial and cost an inordinate sum to defend. Knowing this, trolls just sit back and wait for companies to settle. Instead of perpetuating this cycle, Cloudflare decided to bring the community together and fight back.

After Blackbird filed a lawsuit against Cloudflare alleging infringement of a vague and overly-broad patent (‘335 Patent), we launched Project Jengo, which offered a reward to people who submitted prior art that could Continue reading

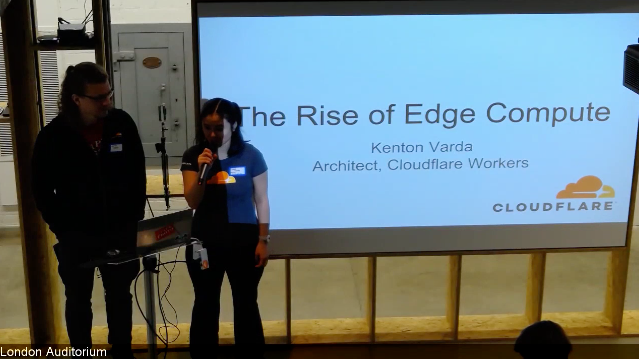

The Rise of Edge Compute: The Video

At the end of March, Kenton Varda, tech lead and architect for Cloudflare Workers, traveled to London and led a talk about the Rise of Edge Compute where he laid out our vision for the future of the Internet as a platform.

Several of those who were unable to attend on-site asked for us to produce a recording. Well, we've completed the audio edits, so here it is!

Visit the Workers category on Cloudflare's community forum to learn more about Workers and share questions, answers, and ideas with other developers.

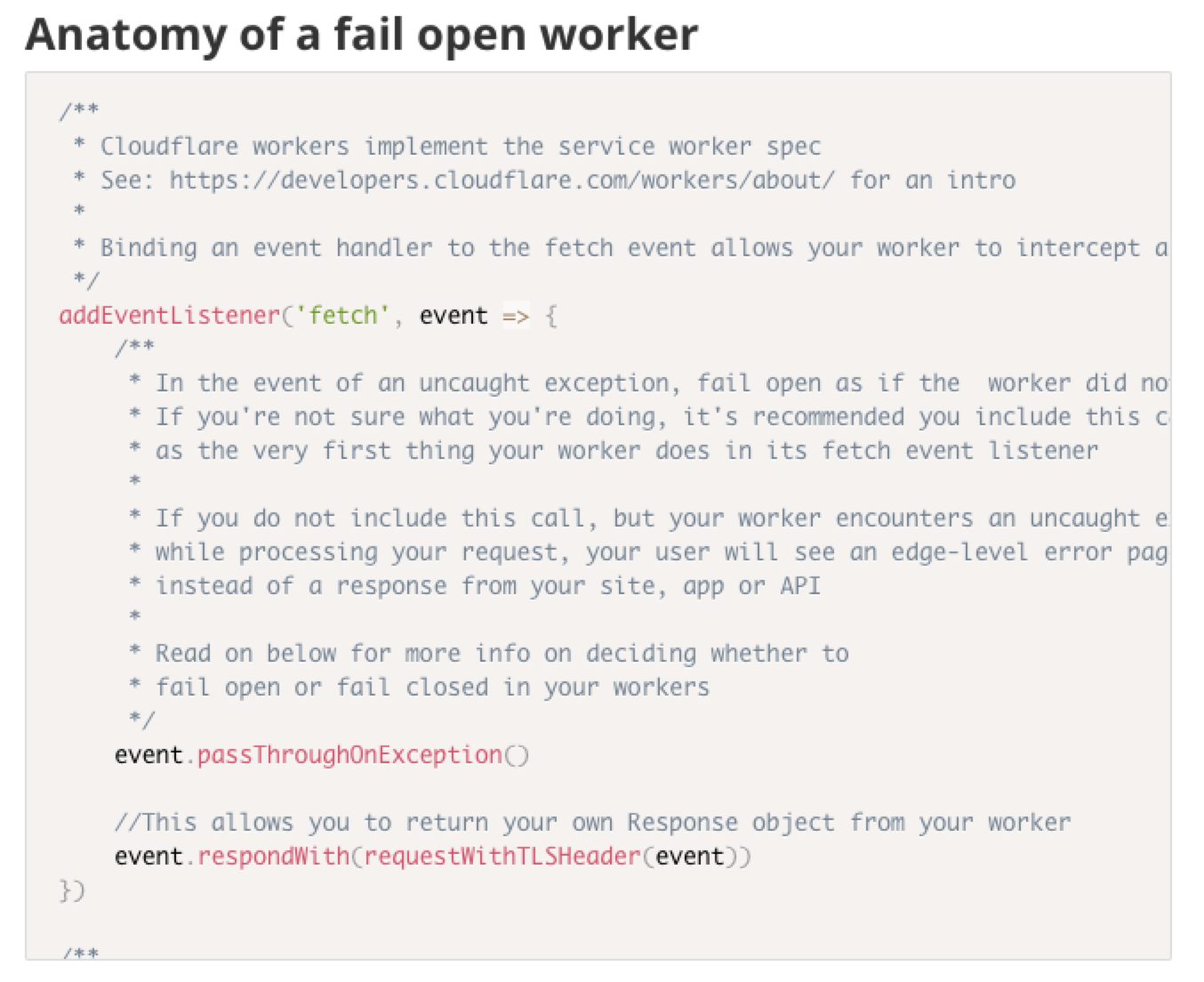

Dogfooding Cloudflare Workers

On the WWW team, we’re responsible for Cloudflare’s REST APIs, account management services and the dashboard experience. We take security and PCI compliance seriously, which means we move quickly to stay up to date with regulations and relevant laws.

A recent compliance project required us to detect certain end user request data at the edge and react to it both in API responses and visually in the dashboard. We realized that this was an excellent opportunity to dogfood Cloudflare Workers.

Deploying workers to www.cloudflare.com and api.cloudflare.com

In this blog post, we’ll break down the problem we solved using a single worker that we shipped to multiple hosts, share the annotated source code of our worker, and share some best practices and tips and tricks we discovered along the way.

Since being deployed, our worker has served over 400 million requests for both calls to api.cloudflare.com and the www.cloudflare.com dashboard.

The task

First, we needed to detect when a client was connecting to our services using an outdated TLS protocol. Next, we wanted to pass this information deeper into our application stack so that we could act upon it and Continue reading

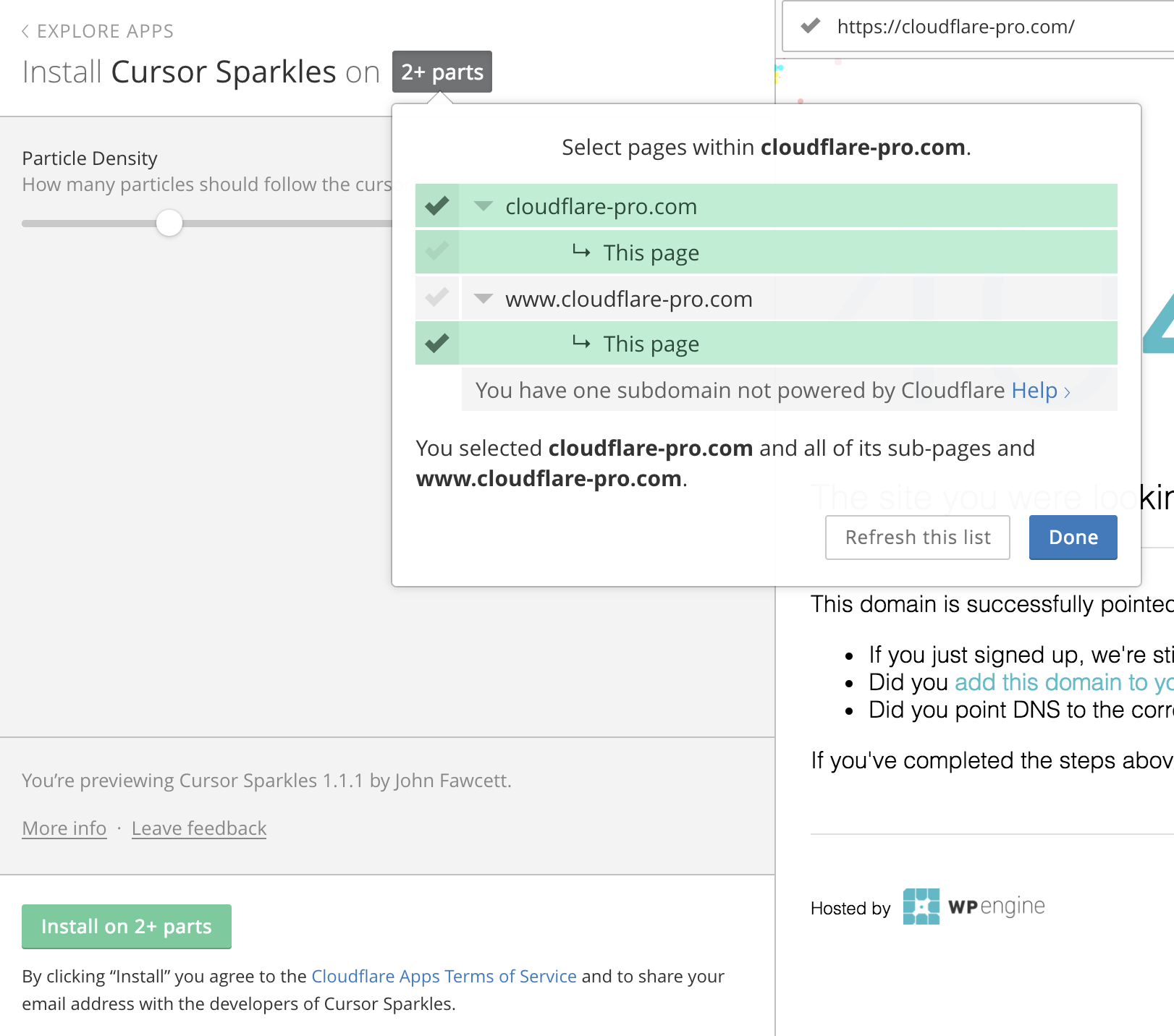

Custom Page Selection for Cloudflare Apps

In July 2016, Cloudflare integrated with Eager - an apps platform. During this integration, several decisions were made to ensure an optimal experience installing apps. We wanted to make sure site owners on Cloudflare could customize and install an app with the minimal number of clicks possible. Customizability often adds complexity and clicks for the user. We’ve been tinkering to find the right balance of user control and simplicity since.

When installing an app, a site owner must select where (which URLs on their site) they want each app installed. Our original plan for selecting the URLs an app would be installed on took a few twists and turns. Our end decision was to utilize our Always Online crawler to pre-populate a tree of the user’s site. Always Online is a feature that crawls Cloudflare sites and serves pages from our cache if the site goes down.

The benefits to this original setup are:

1. Only valid pages appear

An app only allows installations on HTML pages. For example, since injecting JavaScript into a JPEG image isn’t possible, we would prevent the installer from trying it by not showing that path. Preventing the user from that type of Continue reading

Sharing more Details, not more Data: Our new Privacy Policy and Data Protection Plans

After an exhilarating first month as Cloudflare’s first Data Protection Officer (DPO), I’m excited to announce that today we are launching a new Privacy Policy. Our new policy explains the kind of information we collect, from whom we collect it, and how we use it in a more transparent way. We also provide clearer instructions for how you, our users, can exercise your data subject rights. Importantly, nothing in our privacy policy changes the level of privacy protection for your information.

Our new policy is a key milestone in our GDPR readiness journey, and it goes into effect on May 25 — the same day as the GDPR. (You can learn more about the European Union’s General Data Protection Regulation here.) But our GDPR journey doesn’t end on May 25.

Over the coming months, we’ll be following GDPR-related developments, providing you periodic updates about what we learn, and adapting our approach as needed. And I’ll continue to focus on GDPR compliance efforts, including coordinating our responses to data subject requests for information about how their data is being handled, evaluating the privacy impact of new products and services on our users’ personal data, and working with customers who want Continue reading

Expanding Multi-User Access on dash.cloudflare.com

One of the most common feature requests we get is to allow customers to share account access. This has been supported at our Enterprise level of service, but is now being extended to all customers. Starting today, users can go to the new home of Cloudflare’s Dashboard at dash.cloudflare.com. Upon login, users will see the redesigned account experience. Now users can manage all of their account level settings and features in a more streamlined UI.

CC BY 2.0 image by Mike Lawrence

All customers now have the ability to invite others to manage their account as Administrators. They can do this from the ‘Members’ tab in the new Account area on the Cloudflare dashboard. Invited Administrators have full control over the account except for managing members and changing billing information.

For customers who belong to multiple accounts (previously known as organizations), the first thing they will see is an account selector. This allows easy searching and selection between accounts. Additionally, there is a zone selector for searching through zones across all accounts. Enterprise customers still have access to the same roles as before, with the addition of the Administrator and Billing roles.

The New Dashboard @ dash. Continue reading

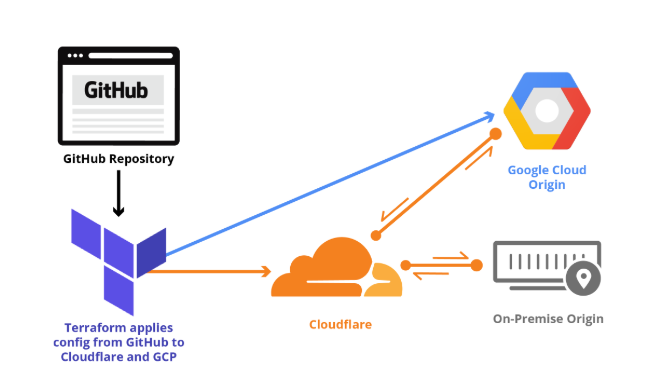

Getting started with Terraform and Cloudflare (Part 2 of 2)

In Part 1 of Getting Started with Terraform, we explained how Terraform lets developers store Cloudflare configuration in their own source code repository, institute change management processes that include code review, track their configuration versions and history over time, and easily roll back changes as needed.

We covered installing Terraform, provider initialization, storing configuration in git, applying zone settings, and managing rate limits. This post continues the Cloudflare Terraform provider walkthrough with examples of load balancing, page rules, reviewing and rolling back configuration, and importing state.

Reviewing the current configuration

Before we build on Part 1, let's quickly review what we configured in that post. Because our configuration is in git, we can easily view the current configuration and change history that got us to this point.

$ git log

commit e1c38cf6f4230a48114ce7b747b77d6435d4646c

Author: Me

Date: Mon Apr 9 12:34:44 2018 -0700

Step 4 - Update /login rate limit rule from 'simulate' to 'ban'.

commit 0f7e499c70bf5994b5d89120e0449b8545ffdd24

Author: Me

Date: Mon Apr 9 12:22:43 2018 -0700

Step 4 - Add rate limiting rule to protect /login.

commit d540600b942cbd89d03db52211698d331f7bd6d7

Author: Me

Date: Sun Apr 8 22:21:27 2018 -0700

Step 3 - Enable TLS 1.3, Continue reading

Getting started with Terraform and Cloudflare (Part 1 of 2)

As a Product Manager at Cloudflare, I spend quite a bit of my time talking to customers. One of the most common topics I'm asked about is configuration management. Developers want to know how they can write code to manage their Cloudflare config, without interacting with our APIs or UI directly.

Following best practices in software development, they want to store configuration in their own source code repository (be it GitHub or otherwise), institute a change management process that includes code review, and be able to track their configuration versions and history over time. Additionally, they want the ability to quickly and easily roll back changes when required.

When I first spoke with our engineering teams about these requirements, they gave me the best answer a Product Manager could hope to hear: there's already an open source tool out there that does all of that (and more), with a strong community and plugin system to boot—it's called Terraform.

This blog post is about getting started using Terraform with Cloudflare and the new version 1.0 of our Terraform provider. A "provider" is simply a plugin that knows how to talk to a specific set of APIs—in this case, Cloudflare, but Continue reading

Copenhagen & London developers, join us for five events this May

Photo by Nick Karvounis / Unsplash

Are you based in Copenhagen or London? Drop by one or all of these five events.

Ross Guarino and Terin Stock, both Systems Engineers at Cloudflare, are traveling to Europe to lead Go and Kubernetes talks in Copenhagen. They'll then join Junade Ali and lead talks on their use of Go, Kubernetes, and Cloudflare’s Mobile SDK at Cloudflare's London office.

My Developer Relations teammates and I are visiting these cities over the next two weeks to produce these events with Ross, Terin, and Junade. We’d love to meet you and invite you along.

Our trip will begin with two meetups and a conference talk in Copenhagen.

Event #1 (Copenhagen): 6 Cloud Native Talks, 1 Evening: Special KubeCon + CloudNativeCon EU Meetup

Tuesday, 1 May: 17:00-21:00

Location: Trifork Copenhagen - Borgergade 24B, 1300 København K

How to extend your Kubernetes cluster

A brief introduction to controllers, webhooks and CRDs. Ross and Terin will talk about how Cloudflare’s internal platform builds on Kubernetes.

Speakers: Ross Guarino and Terin Stock

View Event Details & Register Here »

Event #2 (Copenhagen): Gopher Meetup At Falcon.io: Building Go With Bazel & Internationalization in Go

BGP leaks and cryptocurrencies

Over the last few hours, a dozen news stories have broken about how an attacker attempted (and perhaps managed) to steal cryptocurrencies using a BGP leak.

CC BY 2.0 image by elhombredenegro

What is BGP?

The Internet is composed of routes. For our DNS resolver 1.1.1.1 , we tell the world that all the IPs in the range 1.1.1.0 to 1.1.1.255 can be accessed at any Cloudflare PoP.
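As a small illustration of the range mentioned above, the following sketch uses Python's standard `ipaddress` module to show that the addresses 1.1.1.0 through 1.1.1.255 collapse into a single announced prefix. The variable names are only illustrative, and nothing here reflects how Cloudflare's routers are actually configured.

```python
import ipaddress

# The range from the text, 1.1.1.0 to 1.1.1.255, expressed as CIDR prefixes.
first = ipaddress.ip_address("1.1.1.0")
last = ipaddress.ip_address("1.1.1.255")
prefixes = list(ipaddress.summarize_address_range(first, last))
prefix = prefixes[0]

print(prefix)                  # the single covering prefix
print(prefix.num_addresses)    # how many addresses it contains
print(ipaddress.ip_address("1.1.1.1") in prefix)  # is the resolver inside it?
```

A route announcement carries a prefix like this one rather than a list of individual addresses.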

For the people who do not have a direct link to our routers, they receive the route via transit providers, who will deliver packets to those addresses as they are connected to Cloudflare and the rest of the Internet.

This is the normal way the Internet operates.

There are authorities (Regional Internet Registries, or RIRs) in charge of distributing IP addresses in order to avoid people using the same address space. IANA allocates address space to the five RIRs: RIPE NCC, ARIN, LACNIC, APNIC and AFRINIC.

What is a BGP leak?

The broad definition of a BGP leak would be IP space that is announced by somebody not allowed by the owner of the Continue reading

Now You Can Setup Centrify, OneLogin, Ping and Other Identity Providers with Cloudflare Access

We use Cloudflare Access to secure our own internal tools instead of a VPN. As someone who does a lot of work on the train, I can attest this is awesome (though I might be biased). You can see it in action below. Instead of having to connect to a VPN to reach our internal Jira, we just log in with our Google account and we are good to go:

Before today, you could set up Access if you used G Suite, Okta or Azure AD to manage your employee accounts. Today we would like to announce support for two more Identity Providers with Cloudflare Access: Centrify and OneLogin.

We launched Cloudflare Access earlier this year and have been overwhelmed by the response from our customers and community. Customers tell us they love the simplicity of setting up Access to secure applications and integrate with their existing identity provider solution. Access helps customers implement a holistic solution for both corporate and remote employees without having to use a VPN.

If you are using Centrify or OneLogin as your identity provider, you can now easily integrate them with Cloudflare Access and have your team members log in with their accounts to securely reach your internal Continue reading

A Carbon Neutral North America

Photo by Karsten Würth (@inf1783) / Unsplash

Cloudflare's mission is to help build a better Internet. While working toward our goals, we want to make sure our processes are conducted in a sustainable manner.

In an effort to do so, we’ve reduced Cloudflare’s environmental impact by contracting to purchase regional renewable energy certificates, or “RECs,” to match 100% of the electricity used in our North American data centers as well as our U.S. offices. Cloudflare now has servers in 154 unique cities around the world, with 38 located in North America. Cloudflare has opted to support geographically diverse projects in proximity to our office and data center electricity usage. This renewable energy initiative reduces our electricity-based carbon footprint by 5,561 tons of CO2. That impact is comparable to growing 144,132 tree seedlings for 10 years, or taking 1,191 cars off the road for one year.

How does buying a REC help reduce Cloudflare's carbon footprint, you may ask? When 1MWh of electricity is produced from a renewable generator, such as a wind turbine, there are two products: the energy, which is delivered to the grid and mixes with other forms of energy, Continue reading

Keeping Drupal sites safe with Cloudflare’s WAF

Cloudflare’s team of security analysts monitors for emerging threats and vulnerabilities and, where possible, puts protection in place before they compromise our customers. This post examines how we protected people against a new major vulnerability in the Drupal CMS, nicknamed Drupalgeddon 2.

Two weeks after adding protection with WAF rule ID D0003 which mitigates the critical remote code execution Drupal exploit (SA-CORE-2018-002/CVE-2018-7600), we have seen significant spikes of attack attempts. Since the 13th of April the Drupal security team has been aware of automated attack attempts and it significantly increased the security risk score of the vulnerability. It makes sense to go back and analyse what happened in the last seven days in Cloudflare’s WAF environment.

What is Drupalgeddon 2

The vulnerability potentially allows attackers to exploit multiple attack vectors on a Drupal site, which could make a site completely compromised.

Drupal introduced renderable arrays, which are a key-value structure, with keys starting with a ‘#’ symbol, that allows you to alter data during form rendering. These arrays, however, did not have enough input validation. This means that an attacker could inject a custom renderable array on one of these keys in the form structure.
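To make the missing validation concrete, here is a minimal Python sketch of the general idea of sanitizing untrusted input by dropping any key that starts with ‘#’. It is an illustration only: the function name and sample parameters are hypothetical, and it is neither Drupal's actual patch nor the logic of Cloudflare's WAF rule.

```python
def strip_render_keys(value):
    """Recursively drop dict keys that start with '#' from untrusted input."""
    if isinstance(value, dict):
        return {
            key: strip_render_keys(inner)
            for key, inner in value.items()
            if not (isinstance(key, str) and key.startswith("#"))
        }
    if isinstance(value, list):
        return [strip_render_keys(item) for item in value]
    return value


# Hypothetical request parameters: the '#'-prefixed keys are attacker-supplied.
untrusted = {
    "mail": {"#post_render": ["exec"], "#markup": "id", "value": "a@example.com"}
}
cleaned = strip_render_keys(untrusted)
print(cleaned)
```

With the ‘#’ keys removed, user-supplied data can no longer be interpreted as rendering instructions.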

Continue reading

1 year and 3 months working at Cloudflare: How is it going so far?

This post is inspired by a very good blog post from one of my colleagues in the US, which I really appreciated as I was a newcomer to the company. It was great to see what it is like working for Cloudflare after one year and to learn from the lessons she had learnt.

I'll try to do the same in three parts: how my on-boarding went, my first customer experiences, and finally what my day-to-day life at Cloudflare is like. These writings only reflect my personal feelings and thoughts. The experience is different for each and every newcomer to Cloudflare.

Chapter 1 - On-boarding, being impressed and filling the (big) knowledge gaps.

Before I joined Cloudflare, I was working as a Security Consultant in Paris, France. I had never had the opportunity to move abroad and speak English (me.englishLevel = 0), I had never had any reason to live outside of France, and at the same time I was looking for another job. Perfect then!

When I saw the job posting, I immediately applied as I knew the company well, the mindset and the products Cloudflare provided. It took me 6 months to get the offer probably because Continue reading

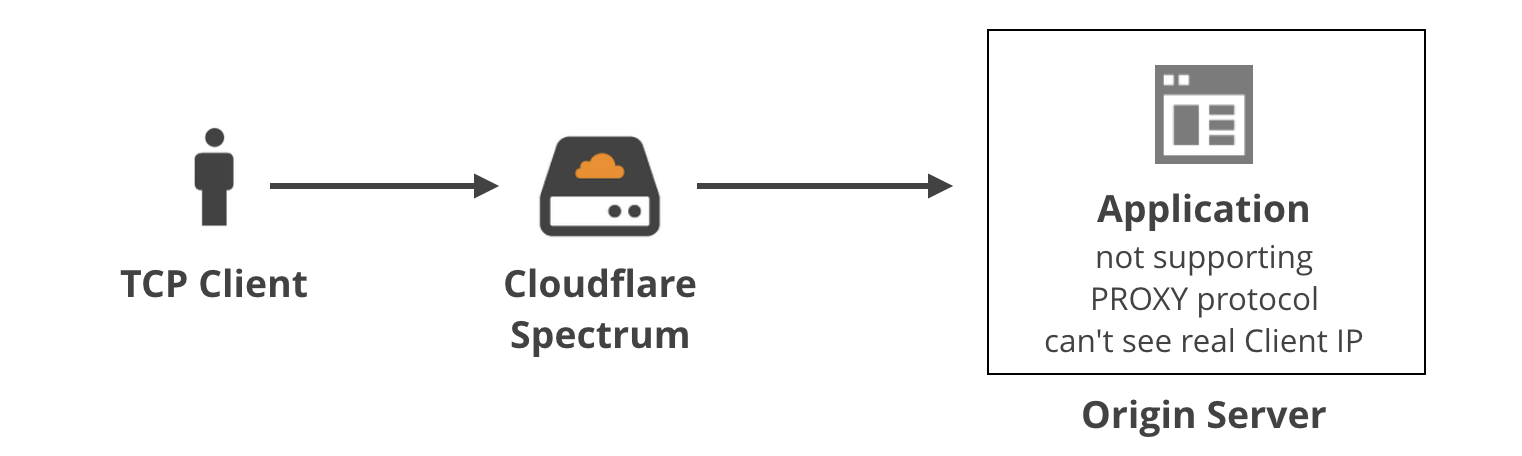

mmproxy – Creative Linux routing to preserve client IP addresses in L7 proxies

In a previous blog post we discussed how we use the TPROXY iptables module to power Cloudflare Spectrum. With TPROXY we solved a major technical issue on the server side, and we thought we might find another use for it on the client side of our product.

This is Addressograph. Source Wikipedia

When building an application level proxy, the first consideration is always about retaining real client source IP addresses. Some protocols make it easy, e.g. HTTP has a defined X-Forwarded-For header[1], but there isn't a similar thing for generic TCP tunnels.
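For the HTTP case, recovering the client address can be as simple as reading the header. The sketch below is a minimal, assumption-laden example: the helper name `client_ip_from_xff` is made up for illustration, and it trusts the left-most entry, which is only safe when every proxy in front of the application is known to set the header correctly.

```python
import ipaddress


def client_ip_from_xff(header_value):
    """Return the left-most valid IP in an X-Forwarded-For value, or None."""
    for part in header_value.split(","):
        candidate = part.strip()
        try:
            # ip_address() accepts both IPv4 and IPv6 literals.
            return str(ipaddress.ip_address(candidate))
        except ValueError:
            continue  # skip tokens such as "unknown" or empty strings
    return None


print(client_ip_from_xff("203.0.113.7, 198.51.100.2"))
```

A generic TCP tunnel has no equivalent field to read, which is the gap the approaches below try to fill.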

Others have faced this problem before us, and have devised three general solutions:

(1) Ignore the client IP

For certain applications it may be okay to ignore the real client IP address. For example, sometimes the client needs to identify itself with a username and password anyway, so the source IP doesn't really matter. In general, it's not a good practice because...

(2) Nonstandard TCP header

A second method was developed by Akamai: the client IP is saved inside a custom option in the TCP header in the SYN packet. Early implementations of this method weren't conforming to any standards, e.g. using option field 28 Continue reading

NEON is the new black: fast JPEG optimization on ARM server

As engineers at Cloudflare quickly adapt our software stack to run on ARM, a few parts of our software stack have not been performing as well on ARM processors as they currently do on our Xeon® Silver 4116 CPUs. For the most part this is a matter of Intel-specific optimizations, some of which utilize SIMD or other special instructions.

One such example is the venerable jpegtran, one of the workhorses behind our Polish image optimization service.

A while ago I optimized our version of jpegtran for Intel processors. So when I ran a comparison on my test image, I was expecting that the Xeon would outperform ARM:

vlad@xeon:~$ time ./jpegtran -outfile /dev/null -progressive -optimise -copy none test.jpg

real 0m2.305s

user 0m2.059s

sys 0m0.252s

vlad@arm:~$ time ./jpegtran -outfile /dev/null -progressive -optimise -copy none test.jpg

real 0m8.654s

user 0m8.433s

sys 0m0.225s

Ideally we want the ARM to perform at or above 50% of the Xeon's performance per core. That would ensure no performance regression and a net performance gain, since the ARM CPUs have double the core count of our current two-socket setup.
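To put the timings above next to that 50% target, here is a small Python calculation using the wall-clock ("real") times from the two runs. The variable names are only illustrative, and treating a single run on one test image as representative is an assumption.

```python
# Wall-clock times (seconds) from the two jpegtran runs shown above.
xeon_real_s = 2.305
arm_real_s = 8.654

# Relative per-core performance: a shorter run time means higher performance.
relative = xeon_real_s / arm_real_s
target = 0.50
meets_target = relative >= target

print(f"ARM per-core performance: {relative:.1%} of Xeon")
print(f"meets the 50% target: {meets_target}")
```

On these numbers the ARM core lands at roughly a quarter of the Xeon's speed, well short of the target.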

In this case, however, I Continue reading

Cloudflare launches 1.1.1.1 DNS service with privacy, TLS and more

There was an important development this month with the launch of Cloudflare’s new 1.1.1.1 DNS resolver service. This is a significant development for several reasons, but in particular it supports the new DNS-over-TLS and DNS-over-HTTPS protocols that allow for confidential DNS querying and response.

Why 1.1.1.1?

Before we get to that though, Cloudflare joins Google’s Public DNS that uses 8.8.8.8 and Quad9 DNS that uses 9.9.9.9, by implementing 1.1.1.1 as a memorable IP address for accessing its new DNS service. IP addresses are generally not as memorable as domain names, but you need access to a DNS server before you can resolve domain names to IP addresses, so configuring numbers is a necessity. And whilst a memorable IP address might be cool, it’s also proved important recently when DNS resolvers have been blocked or taken down, requiring devices to be pointed elsewhere.

The 1.1.1.1 address is part of the 1.1.1.0 – 1.1.1.255 public IP address range actually allocated to APNIC, one of the five Regional Internet Registries, but it has been randomly used as an address for Continue reading

Introducing Spectrum: Extending Cloudflare To 65,533 More Ports

Today we are introducing Spectrum, which brings Cloudflare’s security and acceleration to the whole spectrum of TCP ports and protocols for our Enterprise customers. It’s DDoS protection for any box, container or VM that connects to the internet; whether it runs email, file transfer or a custom protocol, it can now get the full benefits of Cloudflare. If you want to skip ahead and see it in action, you can scroll to the video demo at the bottom.

DDoS Protection

The core functionality of Spectrum is its ability to block large DDoS attacks. Spectrum benefits from Cloudflare’s existing DDoS mitigation (which this week blocked a 900 Gbps flood). Spectrum’s DDoS protection has already been battle tested. Just as soon as we opened up Spectrum for beta, Spectrum received its first SYN flood.

One of Spectrum's earliest deployments was in front of Hypixel’s infrastructure. Hypixel runs the largest Minecraft server, and because gamers can be - uh, passionate - they were one of the earliest targets of the terabit-per-second Mirai botnet. “Hypixel was one of the first subjects of the Mirai botnet DDoS attacks and frequently receives large attacks. Before Spectrum, we had to rely on unstable services & techniques Continue reading

Abusing Linux’s firewall: the hack that allowed us to build Spectrum

Today we are introducing Spectrum: a new Cloudflare feature that brings DDoS protection, load balancing, and content acceleration to any TCP-based protocol.

CC BY-SA 2.0 image by Staffan Vilcans

Soon after we started building Spectrum, we hit a major technical obstacle: Spectrum requires us to accept connections on any valid TCP port, from 1 to 65535. On our Linux edge servers it's impossible to "accept inbound connections on any port number". This is not a Linux-specific limitation: it's a characteristic of the BSD sockets API, the basis for network applications on most operating systems. Under the hood there are two overlapping problems that we needed to solve in order to deliver Spectrum:

- how to accept TCP connections on all port numbers from 1 to 65535

- how to configure a single Linux server to accept connections on a very large number of IP addresses (we have many thousands of IP addresses in our anycast ranges)
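The sockets limitation described above can be seen in a few lines of Python. This is only a sketch of the standard API's behavior, not of Spectrum's solution: `bind()` takes one (address, port) pair, and while the address has a wildcard form, passing port 0 merely asks the kernel to pick one free port rather than listening on all of them.

```python
import socket

# A listening socket is bound to exactly one (address, port) pair.
with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
    listener.bind(("127.0.0.1", 0))  # port 0: the kernel picks one free port
    listener.listen()
    bound_ip, bound_port = listener.getsockname()
    print(f"listening on {bound_ip}:{bound_port}")
```

Covering every port with this API alone would mean one listening socket per port, for every IP address served.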

Assigning millions of IPs to a server

Cloudflare’s edge servers have an almost identical configuration. In our early days, we used to assign specific /32 (and /128) IP addresses to the loopback network interface[1]. This worked well when we had dozens of IP Continue reading

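Abusing Linux’s firewall: the hack that allowed us to build Spectrum

This is a Korean translation of a prior post by Marek Majkowski, rendered here in English.

We recently announced Spectrum: a new Cloudflare feature that brings DDoS protection, load balancing, and content acceleration to any TCP-based protocol.

CC BY-SA 2.0 image by Staffan Vilcans

Soon after we started building Spectrum, we hit a major technical obstacle: Spectrum must accept connections on any valid TCP port, from 1 to 65535. On our Linux edge servers it is impossible to "accept inbound connections on any port number". This is not a Linux-specific limitation: it is a characteristic of the BSD sockets API, the basis for network applications on most operating systems. Under the hood there were two overlapping problems that we needed to solve in order to deliver Spectrum:

- how to accept TCP connections on all port numbers from 1 to 65535

- how to configure a single Linux server to accept connections on a very large number of IP addresses (we have a great many IP addresses in our anycast ranges)

Assigning millions of IPs to a server

Cloudflare’s edge servers have an almost identical configuration. In our early days, we assigned specific /32 (and /128) IP addresses to the loopback network interface[1]. This worked well when we had only dozens of IP addresses, but it failed to scale as we grew.

Then came the "AnyIP" trick. AnyIP lets us assign an entire IP prefix (subnet), rather than a single address, to the loopback interface. In fact, AnyIP is already in common use: on your computer, the loopback interface Continue reading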