Why TLS 1.3 isn’t in browsers yet

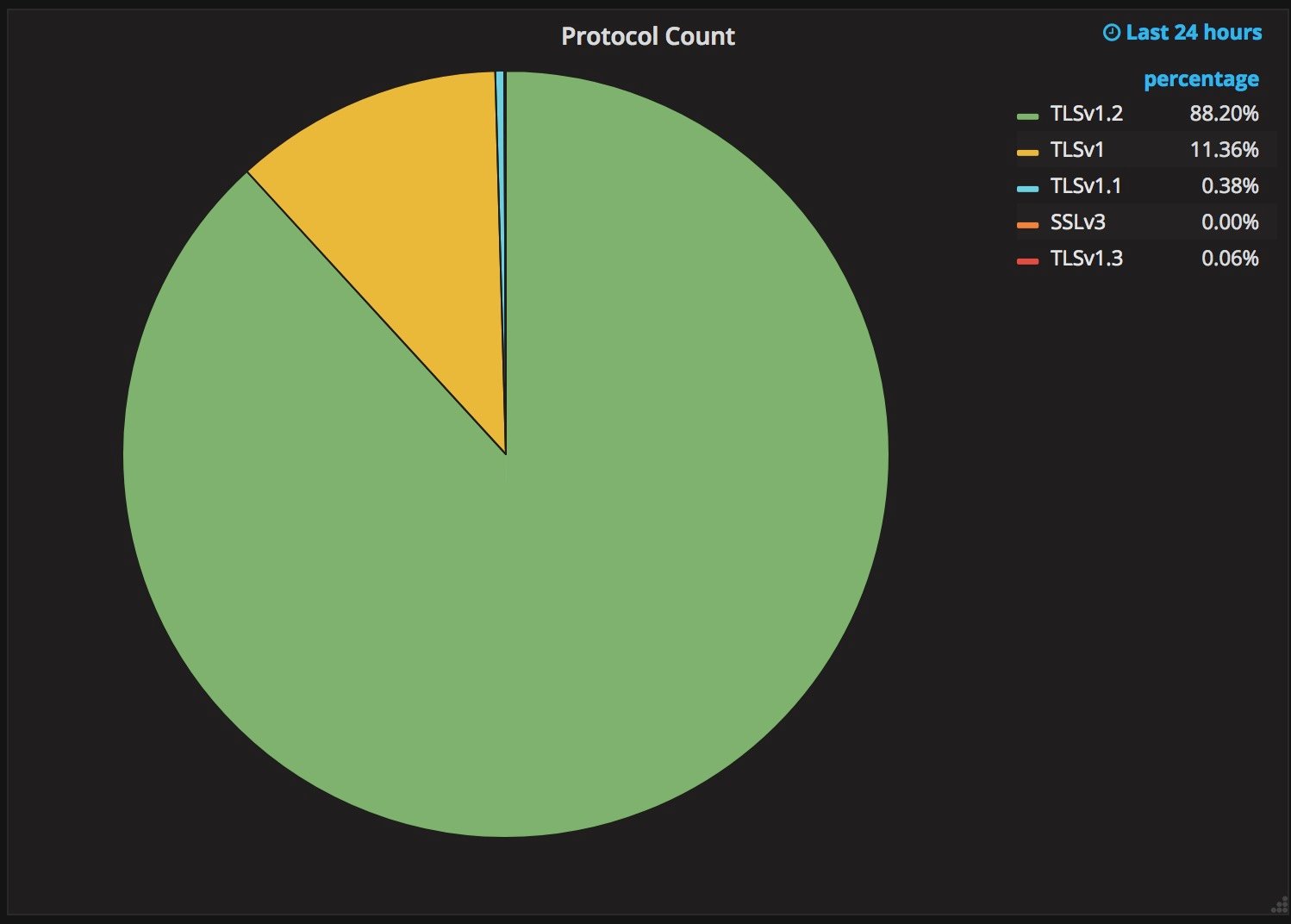

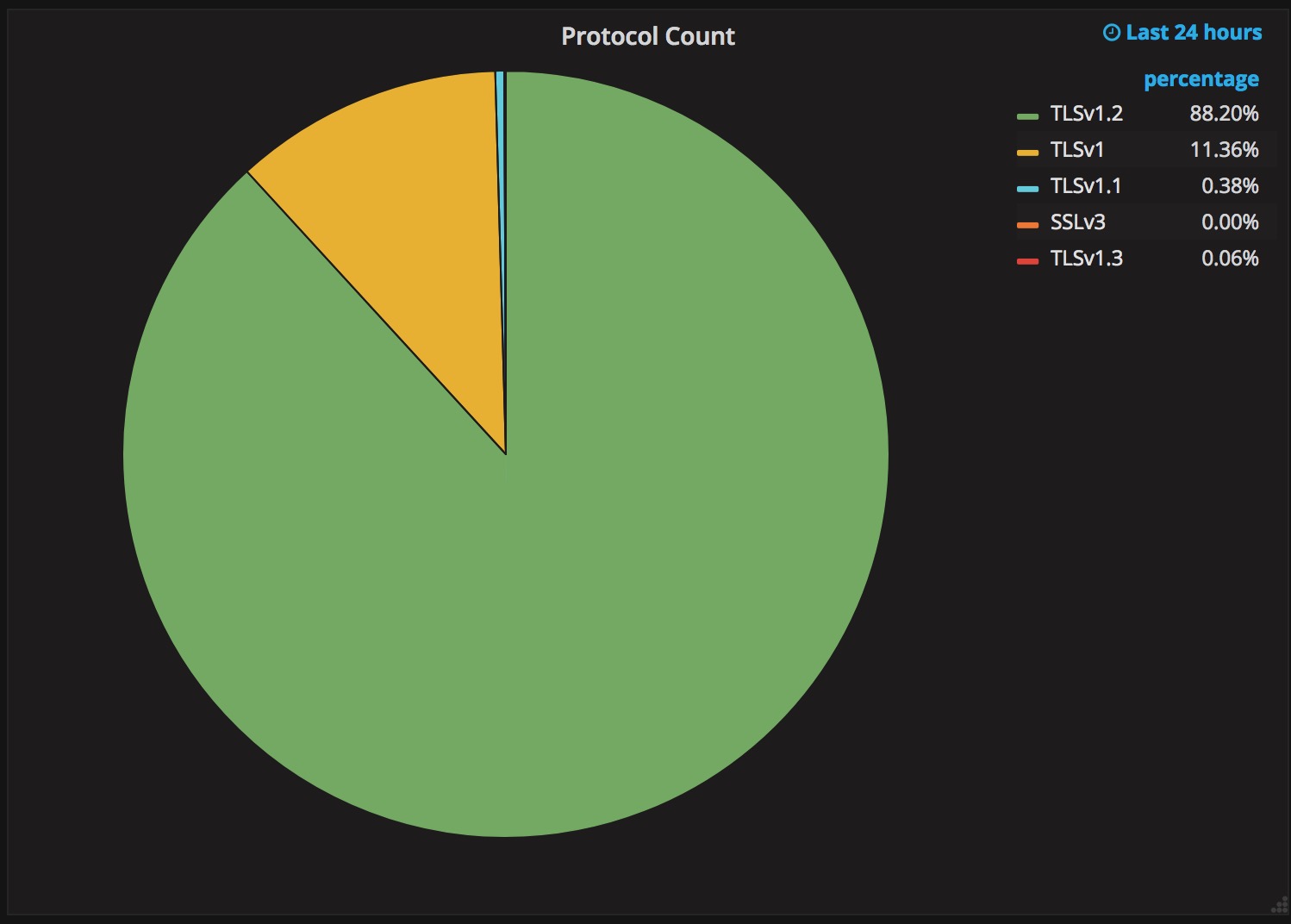

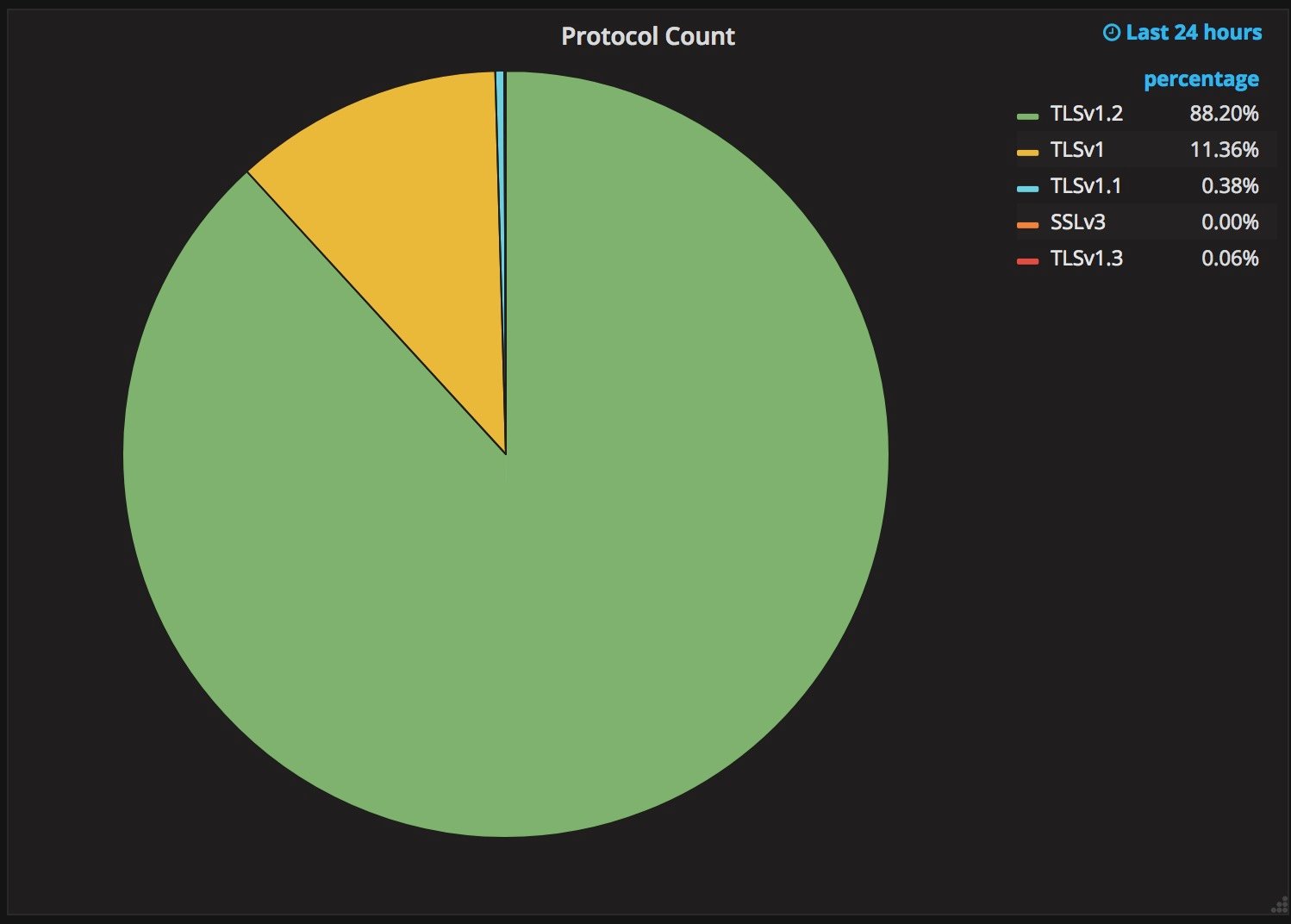

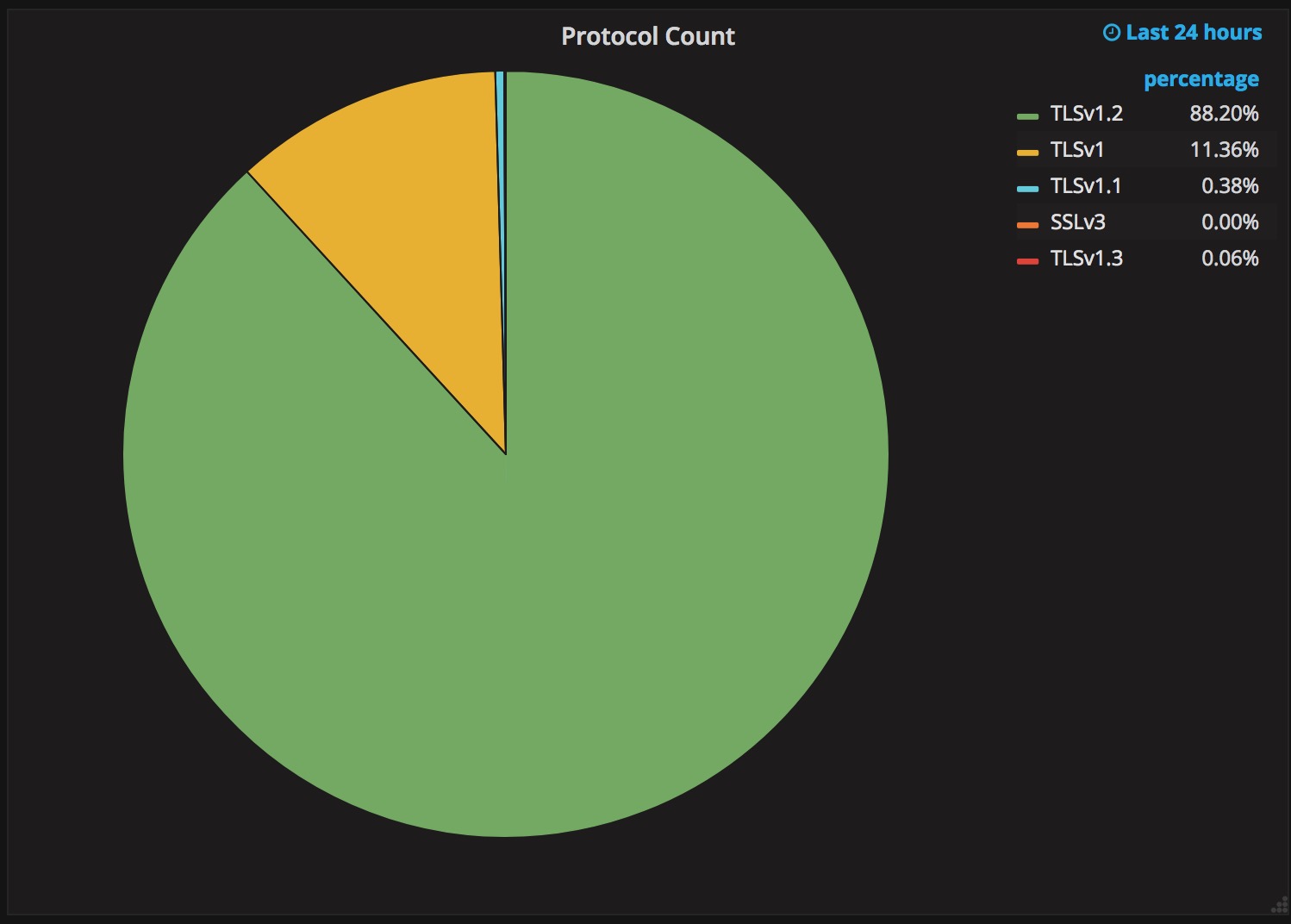

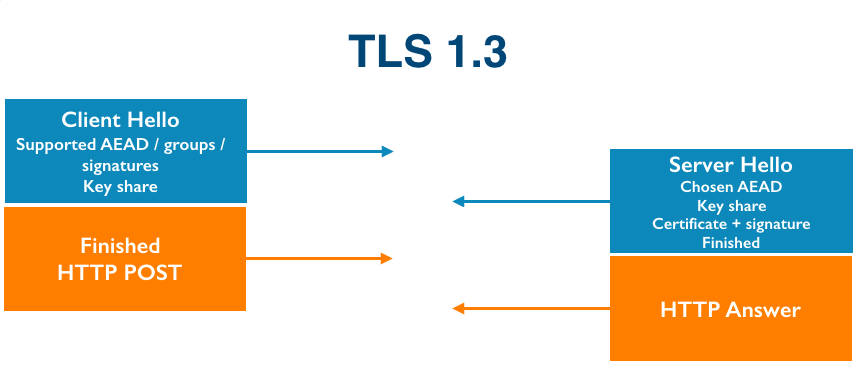

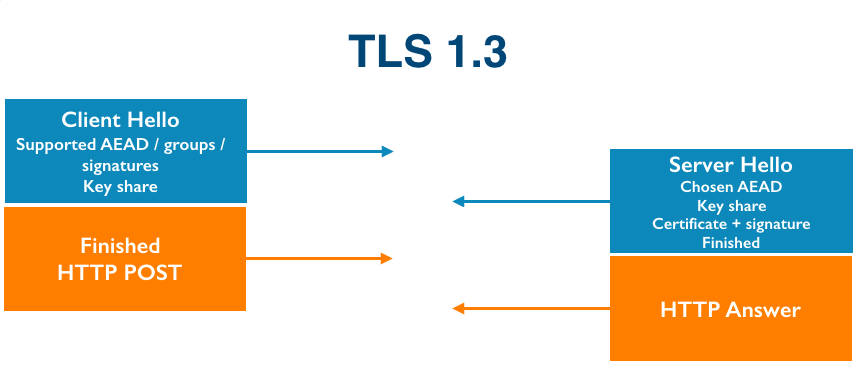

Upgrading a security protocol in an ecosystem as complex as the Internet is difficult. You need to update clients and servers and make sure everything in between continues to work correctly. The Internet is in the middle of such an upgrade right now. Transport Layer Security (TLS), the protocol that keeps web browsing confidential (and which many people persist in calling SSL), is getting its first major overhaul with the introduction of TLS 1.3. Last year, Cloudflare was the first major provider to support TLS 1.3 by default on the server side. We expected that the client side would follow suit and that TLS 1.3 would be enabled in all major browsers soon thereafter. It has been over a year since Cloudflare’s TLS 1.3 launch, and still none of the major browsers has enabled TLS 1.3 by default.

The reductive answer to why TLS 1.3 hasn’t been deployed yet is middleboxes: network appliances designed to monitor and sometimes intercept HTTPS traffic inside corporate environments and mobile networks. Some of these middleboxes implemented TLS 1.2 incorrectly and now that’s blocking browsers from releasing TLS 1.3. However, simply blaming network appliance vendors would be disingenuous. The deeper truth of the Continue reading

Concise (Post-Christmas) Cryptography Challenges

It's the day after Christmas; or, depending on your geography, Boxing Day. With the festivities over, you may still find yourself stuck at home and somewhat bored.

Either way, here are three relatively short cryptography challenges you can use to keep yourself momentarily occupied. Other than the hints (and some internet searching), you shouldn't require particularly deep cryptography knowledge to start diving into these challenges. For hints and spoilers, scroll down below the challenges!

Challenges

Password Hashing

The first one is simple enough to explain; here are 5 hashes (from user passwords), crack them:

$2y$10$TYau45etgP4173/zx1usm.uO34TXAld/8e0/jKC5b0jHCqs/MZGBi

$2y$10$qQVWugep3jGmh4ZHuHqw8exczy4t8BZ/Jy6H4vnbRiXw.BGwQUrHu

$2y$10$DuZ0T/Qieif009SdR5HD5OOiFl/WJaDyCDB/ztWIM.1koiDJrN5eu

$2y$10$0ClJ1I7LQxMNva/NwRa5L.4ly3EHB8eFR5CckXpgRRKAQHXvEL5oS

$2y$10$LIWMJJgX.Ti9DYrYiaotHuqi34eZ2axl8/i1Cd68GYsYAG02Icwve
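Before attempting to crack anything, it helps to read what the hash strings themselves reveal. The following is a minimal sketch, assuming the strings follow the standard bcrypt modular-crypt layout (variant, two-digit cost, 22-character salt, 31-character digest); the function name `parse_bcrypt` and the dictionary keys are illustrative, not part of any library.

```python
import re

# Assumed bcrypt layout: $<variant>$<cost>$<22-char salt><31-char digest>
BCRYPT_RE = re.compile(
    r"^\$(2[abxy]?)\$(\d{2})\$([./A-Za-z0-9]{22})([./A-Za-z0-9]{31})$"
)


def parse_bcrypt(hash_string):
    """Split a bcrypt hash string into its fields (hypothetical helper)."""
    match = BCRYPT_RE.match(hash_string)
    if match is None:
        raise ValueError("not a bcrypt hash string")
    variant, cost, salt, digest = match.groups()
    return {
        "variant": variant,
        "cost": int(cost),
        # The cost is a base-2 logarithm: each guess runs 2**cost key-expansion rounds.
        "rounds": 2 ** int(cost),
        "salt": salt,
        "digest": digest,
    }


fields = parse_bcrypt("$2y$10$TYau45etgP4173/zx1usm.uO34TXAld/8e0/jKC5b0jHCqs/MZGBi")
print(fields["variant"], fields["cost"], fields["rounds"], fields["salt"])
```

Because every hash carries its own salt and a deliberately slow cost factor, each candidate password has to be tried against each hash separately, which is why a focused wordlist is more practical than brute force.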

HTTP Strict Transport Security

A website works by redirecting its www. subdomain to a regional subdomain (e.g. uk.), and the site uses HSTS to prevent SSLStrip attacks. The cURL requests below show the headers returned along the redirect chain. How would you practically go about stripping HTTPS in this example?

$ curl -i http://www.example.com

HTTP/1.1 302 Moved Temporarily

Server: nginx

Date: Tue, 26 Dec 2017 12:26:51 GMT

Content-Type: text/html

Transfer-Encoding: chunked

Connection: keep-alive

location: https://uk.example.com/

$ curl -i http://uk.example.com

HTTP/1.1 200 OK

Server: nginx

Content-Type: text/html; Continue reading

The History of Stock Quotes

In honor of all the fervor around Bitcoin, we thought it would be fun to revisit the role finance has had in the history of technology even before the Internet came around. This was adapted from a post which originally appeared on the Eager blog.

The issue was not that France lacked a rapid communication system; the system just hadn’t expanded far enough yet. France had an elaborate semaphore system. Arranged all around the French countryside were buildings with mechanical flags which could be rotated to transmit specific characters to the next station in line. When the following station showed the same flag positions as this one, you knew the letter was acknowledged, and you could show the next character. This system allowed roughly one character to be transmitted per minute, with the start of a message moving down the line at almost 900 miles per hour. It wouldn’t expand to Toulouse until 1834 however, Continue reading

Simple Cyber Security Tips (for your Parents)

Today, December 25th, Cloudflare offices around the world are taking a break. From San Francisco to London and Singapore, engineers have retreated home for the holidays (although the engineers who are on call are closely monitoring their mobile phones).

Software engineering pro-tip:

— Chris Albon (@chrisalbon) December 20, 2017

Do not, I repeat, do not deploy this week. That is how you end up debugging a critical issue from your parent's wifi in your old bedroom while your spouse hates you for abandoning them with your racist uncle.

Whilst our Support and SRE teams operated on a schedule to ensure fingers were on keyboards, on Saturday I headed out of London, bound for the Warwickshire countryside. Away from the barracks of the London tech scene, it didn't take long for the following conversation to happen:

- Family member: "So what do you do nowadays?"

- Me: "I work in Cyber Security."

- Family member: "There seems to be a new cyber attack every day on the news! What can I possibly do to keep myself safe?"

If you work in the tech industry, you may find a family member asking you for advice on cybersecurity. This blog post will hopefully save you Continue reading

TLS 1.3 is going to save us all, and other reasons why IoT is still insecure

As I’m writing this, four DDoS attacks are ongoing and being automatically mitigated by Gatebot. Cloudflare’s job is to get attacked. Our network gets attacked constantly.

Around the fall of 2016, we started seeing DDoS attacks that looked a little different than usual. One attack we saw around that time had traffic coming from 52,467 unique IP addresses. The clients weren’t servers or desktop computers; when we tried to connect to the clients over port 80, we got the login pages to CCTV cameras.

Obviously it’s important to lock down IoT devices so that they can’t be co-opted into evil botnet armies, but when we talk to some IoT developers, we hear a few concerning security patterns. We’ll dive into two problematic areas and their solutions: software updates and TLS.

The Trouble With Updates

With PCs, the end user is ultimately responsible for securing their devices. People understand that they need to update their computers and phones. Just 4 months after Apple released iOS 10, it was installed on 76% of active devices.

People just don’t know that they are supposed to update IoT things like they are supposed to update their computers because they’ve never had to update things Continue reading

Technical reading from the Cloudflare blog for the holidays

During 2017, Cloudflare published 172 blog posts (including this one). If you need a distraction from the holiday festivities at this time of year, here are some highlights from the year.

The WireX Botnet: How Industry Collaboration Disrupted a DDoS Attack

We worked closely with companies across the industry to track and take down the Android WireX Botnet. This blog post goes into detail about how that botnet operated, how it was distributed and how it was taken down.

Randomness 101: LavaRand in Production

The wall of Lava Lamps in the San Francisco office is used to feed entropy into random number generators across our network. This blog post explains how.

ARM Takes Wing: Qualcomm vs. Intel CPU comparison

The data centers in our network around the world all contain Intel-based servers, but we're interested in ARM-based servers because of the potential cost and power savings. This blog post took a look at the relative performance of Intel processors and Qualcomm's latest server offering.

How to Monkey Patch the Linux Kernel

One engineer wanted to combine the Dvorak and QWERTY keyboard layouts and did so by patching the Linux kernel using SystemTap. This blog explains Continue reading

2018 and the Internet: our predictions

At the end of 2016, I wrote a blog post with seven predictions for 2017. Let’s start by reviewing how I did.

Public Domain image by Michael Sharpe

I’ll score myself with two points for being correct, one point for mostly right and zero for wrong. That’ll give me a maximum possible score of fourteen. Here goes...

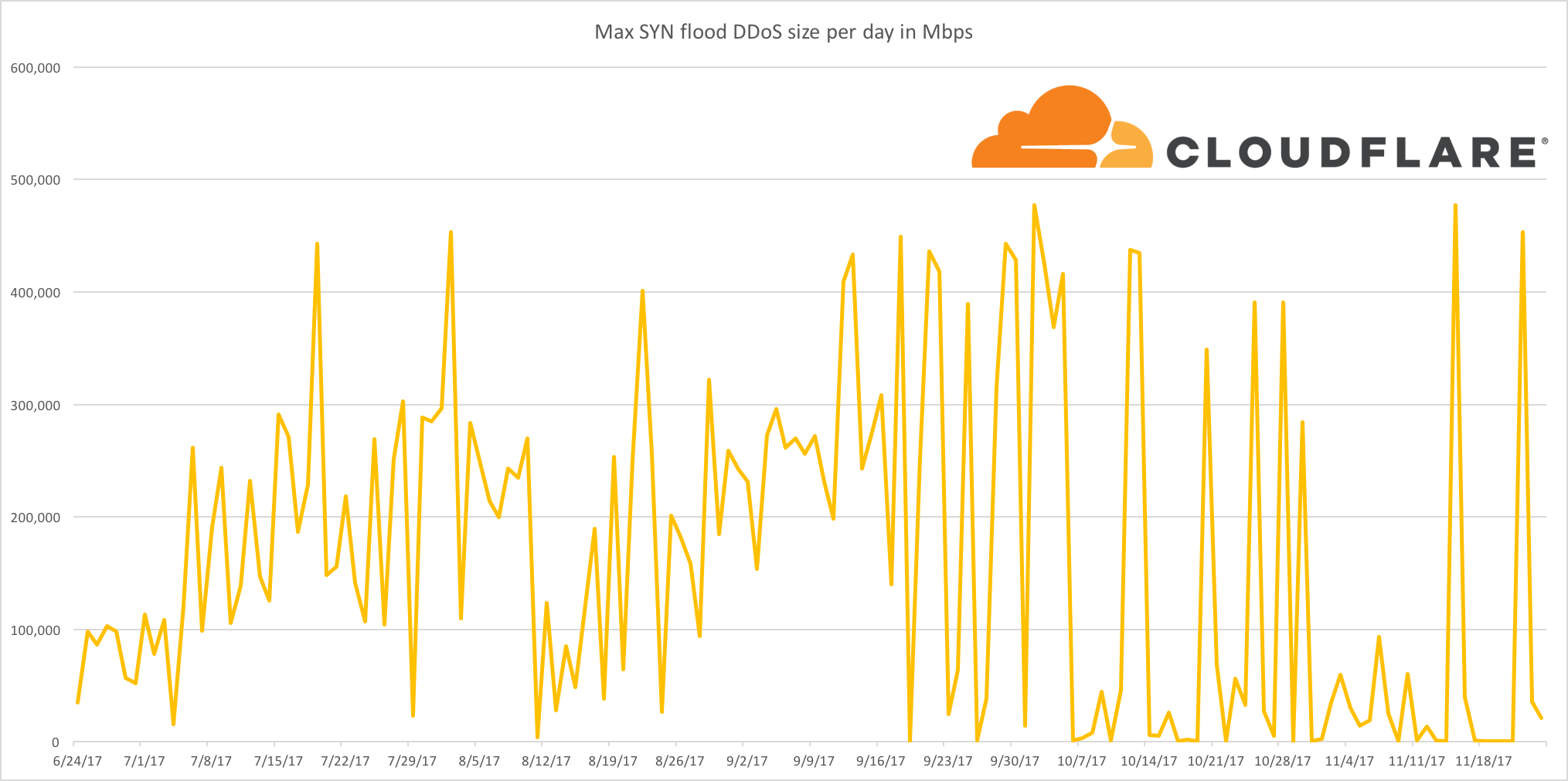

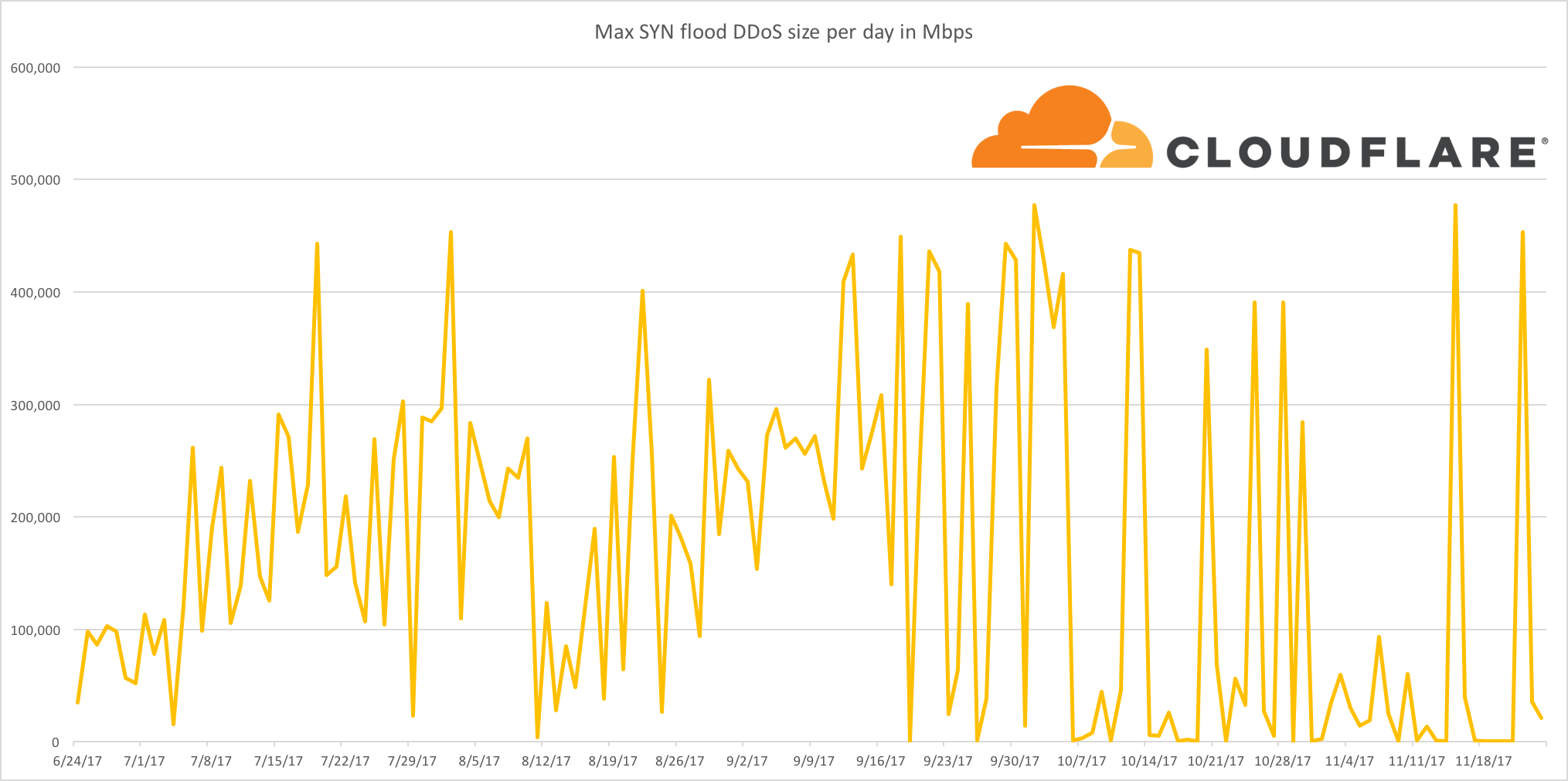

2017-1: 1Tbps DDoS attacks will become the baseline for ‘massive attacks’

This turned out to be true but mostly because massive attacks went away as Layer 3 and Layer 4 DDoS mitigation services got good at filtering out high bandwidth and high packet rates. Over the year we saw many DDoS attacks in the 100s of Gbps (up to 0.5Tbps) and then in September announced Unmetered Mitigation. Almost immediately we saw attackers stop bothering to attack Cloudflare-protected sites with large DDoS.

So, I’ll be generous and give myself one point.

2017-2: The Internet will get faster yet again as protocols like QUIC become more prevalent

Well, yes and no. QUIC has become more prevalent as Google has widely deployed it in the Chrome browser and it accounts for about 7% of Internet traffic. At the same time the protocol is working its Continue reading

Highlights from Cloudflare’s Weekend at YHack

Along with four other Cloudflare colleagues, I traveled to New Haven, CT last weekend to support 1,000+ college students from Yale and beyond at the YHack hackathon.

Throughout the weekend-long event, student attendees were supported by mentors, workshops, entertaining performances, tons of food and caffeine breaks, and a lot of air-mattresses and sleeping bags. Their purpose was to create projects to solve real world problems and to learn and have fun in the process.

How Cloudflare contributed

Cloudflare sponsored YHack. Our team of five wanted to support, educate, and positively impact the experience and learning of these college students. Here are some ways we engaged with students.

1. Mentoring

Our team of five mentors from three different teams and two different Cloudflare locations (San Francisco and Austin) was available at the Cloudflare table or via Slack for almost every hour of the event. There were a few hours in the early morning when all of us were asleep, I'm sure, but we were available to help otherwise.

2. Providing challenges

Cloudflare submitted two challenges to the student attendees, encouraging them to protect and improve the performance of their projects and/or create an opportunity for exposure to 6 million+ potential users of Continue reading

The Athenian Project: Helping Protect Elections

From cyberattacks on election infrastructure, to attempted hacking of voting machines, to attacks on campaign websites, the last few years have brought us unprecedented attempts to use online vulnerabilities to affect elections both in the United States and abroad. In the United States, the Department of Homeland Security reported that individuals tried to hack voter registration files or public election sites in 21 states prior to the 2016 elections. In Europe, hackers targeted not only the campaign of Emmanuel Macron in France, but government election infrastructure in the Czech Republic and Montenegro.

Cyberattacks are only one of the many online challenges facing election officials. Unpredictable website traffic patterns are another. Voter registration websites see a flood of legitimate traffic as registration deadlines approach. Election websites must integrate reported results and stay online notwithstanding notoriously hard-to-model election day loads.

We at Cloudflare have seen many election-related cyber challenges firsthand. In the 2016 U.S. presidential campaign, Cloudflare protected most of the major presidential campaign websites from cyberattack, including the Trump/Pence campaign website, the website for the campaign of Senator Bernie Sanders, and websites for 14 of the 15 leading candidates from the two major parties. We have also protected election Continue reading

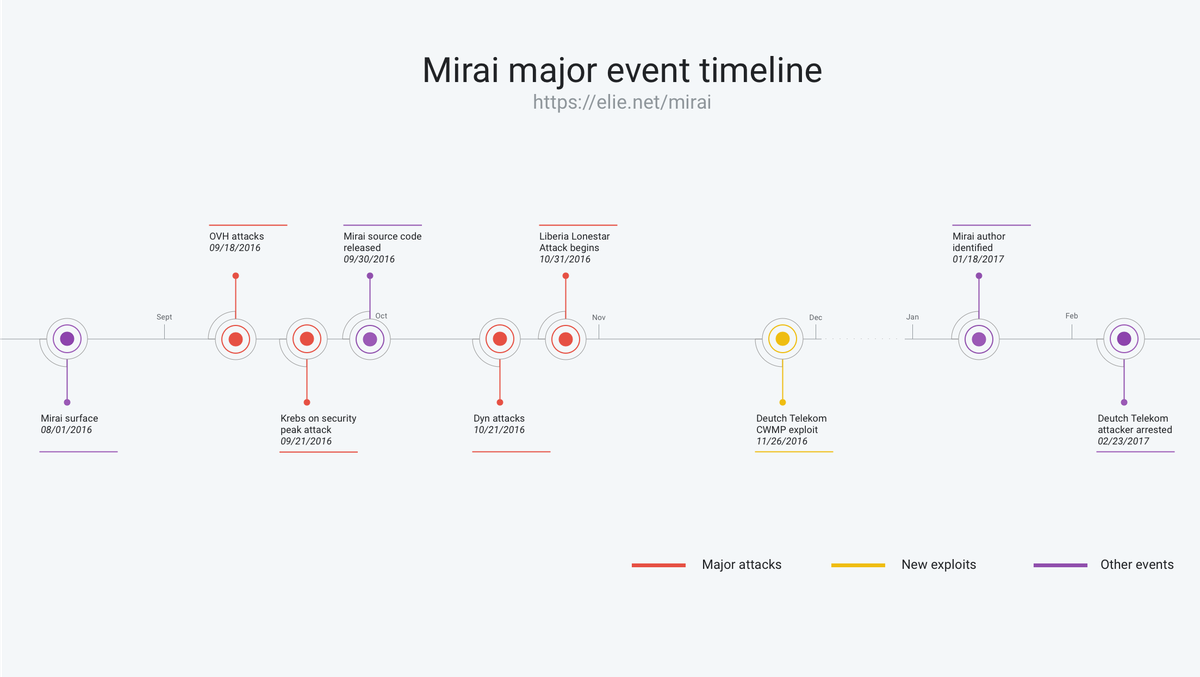

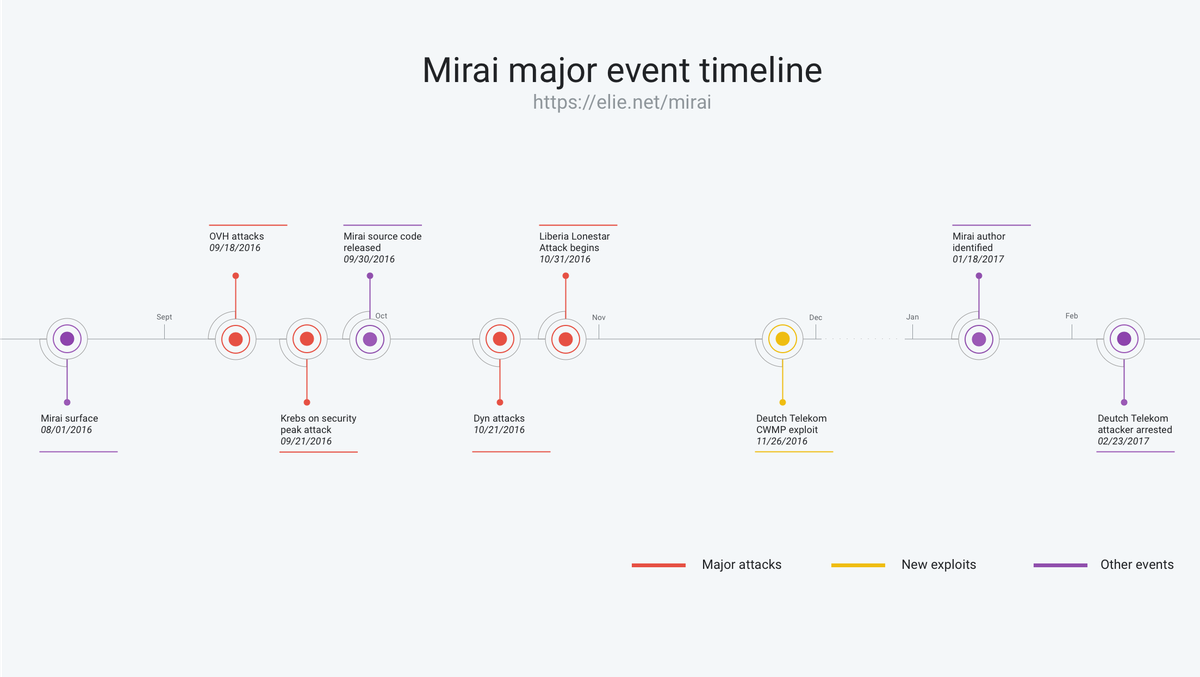

Inside the infamous Mirai IoT Botnet: A Retrospective Analysis

This is a guest post by Elie Bursztein who writes about security and anti-abuse research. It was first published on his blog and has been lightly edited.

This post provides a retrospective analysis of Mirai, the infamous Internet-of-Things botnet that took down major websites via massive distributed denial-of-service attacks using hundreds of thousands of compromised Internet-of-Things devices. This research was conducted by a team of researchers from Cloudflare (Jaime Cochran, Nick Sullivan), Georgia Tech, Google, Akamai, the University of Illinois, the University of Michigan, and Merit Network and resulted in a paper published at USENIX Security 2017.

At its peak in September 2016, Mirai temporarily crippled several high-profile services such as OVH, Dyn, and Krebs on Security via massive distributed denial-of-service (DDoS) attacks. OVH reported that these attacks exceeded 1 Tbps, the largest on public record.

What’s remarkable about these record-breaking attacks is they were carried out via small, innocuous Internet-of-Things (IoT) devices like home routers, air-quality monitors, and personal surveillance cameras. At its peak, Mirai infected over 600,000 vulnerable IoT devices, according to our measurements.

This blog post follows the timeline above

- Mirai Genesis: Discusses Mirai’s early days and provides a brief technical overview of how Mirai works and Continue reading
