The Super Secret Cloudflare Master Plan, or why we acquired Neumob

We announced today that Cloudflare has acquired Neumob. Neumob’s team built exceptional technology to speed up mobile apps, reduce errors on challenging mobile networks, and increase conversions. Cloudflare will integrate the Neumob technology with our global network to give Neumob truly global reach.

It’s tempting to think of the Neumob acquisition as a point product added to the Cloudflare portfolio. But it actually represents a key part of a long-term “Super Secret Cloudflare Master Plan”.

CC BY 2.0 image by Neil Rickards

Over the last few years Cloudflare has been building a large network of data centers across the world to help fulfill our mission of helping to build a better Internet. These data centers all run an identical software stack that implements Cloudflare’s cache, DNS, DDoS, WAF, load balancing, rate limiting, etc.

We’re now at 118 data centers in 58 countries and are continuing to expand with a goal of being as close to end users as possible worldwide.

The data centers are tied together by secure connections which are optimized using our Argo smart routing capability. Our Quicksilver technology enables us to update and modify the settings and software running across this vast network in seconds.

Thwarting the Tactics of the Equifax Attackers

We are now 3 months on from one of the biggest, most significant data breaches in history, but has it redefined people's awareness of security?

The answer to that is absolutely yes; awareness is at an all-time high. Awareness, however, does not always result in positive action. A common fallacy is: "surely, if I keep my software up to date with all the patches, that's more than enough to keep me safe?" It's true that keeping software up to date defends against known vulnerabilities, but it's a very reactive stance. The more important part is protecting against the unknown.

Something every engineer will agree on is that security is hard, and maintaining systems is even harder. Patching or upgrading systems can lead to unforeseen outages or unexpected behaviour due to other fixes which may be applied. This, in most cases, can cause huge delays in the deployment of patches or upgrades, due to requiring either regression testing or deployment in a staging environment. Whilst processes are followed, and tests are done, systems are sat vulnerable, ready to be exploited if they are exposed to the internet.

Looking at the wider landscape, an increase in security research Continue reading

Go, don’t collect my garbage

Not long ago I needed to benchmark the performance of Golang on a many-core machine. I took several of the benchmarks that are bundled with the Go source code, copied them, and modified them to run on all available threads. In this case the machine had 24 cores and 48 threads.

CC BY-SA 2.0 image by sponki25

I started with ECDSA P256 Sign, probably because I have a warm feeling for that function, since I optimized it for amd64.

First, I ran the benchmark on a single goroutine: ECDSA-P256 Sign,30618.50, op/s

That looks good; next I ran it on 48 goroutines: ECDSA-P256 Sign,78940.67, op/s.

OK, that is not what I expected. Roughly a 2.6X speedup, from 24 physical cores? I must be doing something wrong. Maybe Go only uses two cores? I ran top, it showed 2,266% utilization. That is not the 4,800% I expected, but it is also way above 400%.

How about taking a step back, and running the benchmark on two goroutines? ECDSA-P256 Sign,55966.40, op/s. Almost double, so pretty good. How about four goroutines? ECDSA-P256 Sign,108731.00, op/s. That is actually faster than 48 goroutines, what is going on?
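To put those figures side by side, the short Python sketch below computes the speedup over a single goroutine and the efficiency per goroutine. The throughput numbers are the ones quoted above; the function and variable names are made up for illustration and are not part of the original benchmark.

```python
# Throughput quoted in the post, in operations per second,
# keyed by the number of goroutines used for the run.
REPORTED_OPS = {1: 30618.50, 2: 55966.40, 4: 108731.00, 48: 78940.67}


def scaling_table(ops_by_goroutines):
    """Return (goroutines, speedup, efficiency) rows relative to the 1-goroutine run."""
    baseline = ops_by_goroutines[1]
    rows = []
    for goroutines in sorted(ops_by_goroutines):
        speedup = ops_by_goroutines[goroutines] / baseline
        efficiency = speedup / goroutines  # 1.0 would be perfect linear scaling
        rows.append((goroutines, speedup, efficiency))
    return rows


if __name__ == "__main__":
    for goroutines, speedup, efficiency in scaling_table(REPORTED_OPS):
        print(f"{goroutines:>2} goroutines: {speedup:.2f}x speedup, "
              f"{efficiency:.0%} efficiency")
```

Laid out this way, the two- and four-goroutine runs come out at roughly 1.8x and 3.6x, while the 48-goroutine run manages only about 2.6x.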

I ran the benchmark Continue reading

Cloudflare Wants to Buy Your Meetup Group Pizza

If you’re a web dev / devops / etc. meetup group that also works toward building a faster, safer Internet, I want to support your awesome group by buying you pizza. If your group’s focus falls within one of the subject categories below and you’re willing to give us a 30 second shout out and tweet a photo of your group and @Cloudflare, your meetup’s pizza expense will be reimbursed.

Developer Relations at Cloudflare & why we’re doing this

I’m Andrew Fitch and I work on the Developer Relations team at Cloudflare. One of the things I like most about working in DevRel is empowering community members who are already doing great things out in the world. Whether they’re starting conferences, hosting local meetups, or writing educational content, I think it’s important to support them in their efforts and reward them for doing what they do. Community organizers are the glue that holds developers together socially. Let’s support them and make their lives easier by taking care of the pizza part of the equation.

What’s in it for Cloudflare?

- We want web developers to target the apps platform

- We want more people to Continue reading

On the dangers of Intel’s frequency scaling

While I was writing the post comparing the new Qualcomm server chip, Centriq, to our current stock of Intel Skylake-based Xeons, I noticed a disturbing phenomenon.

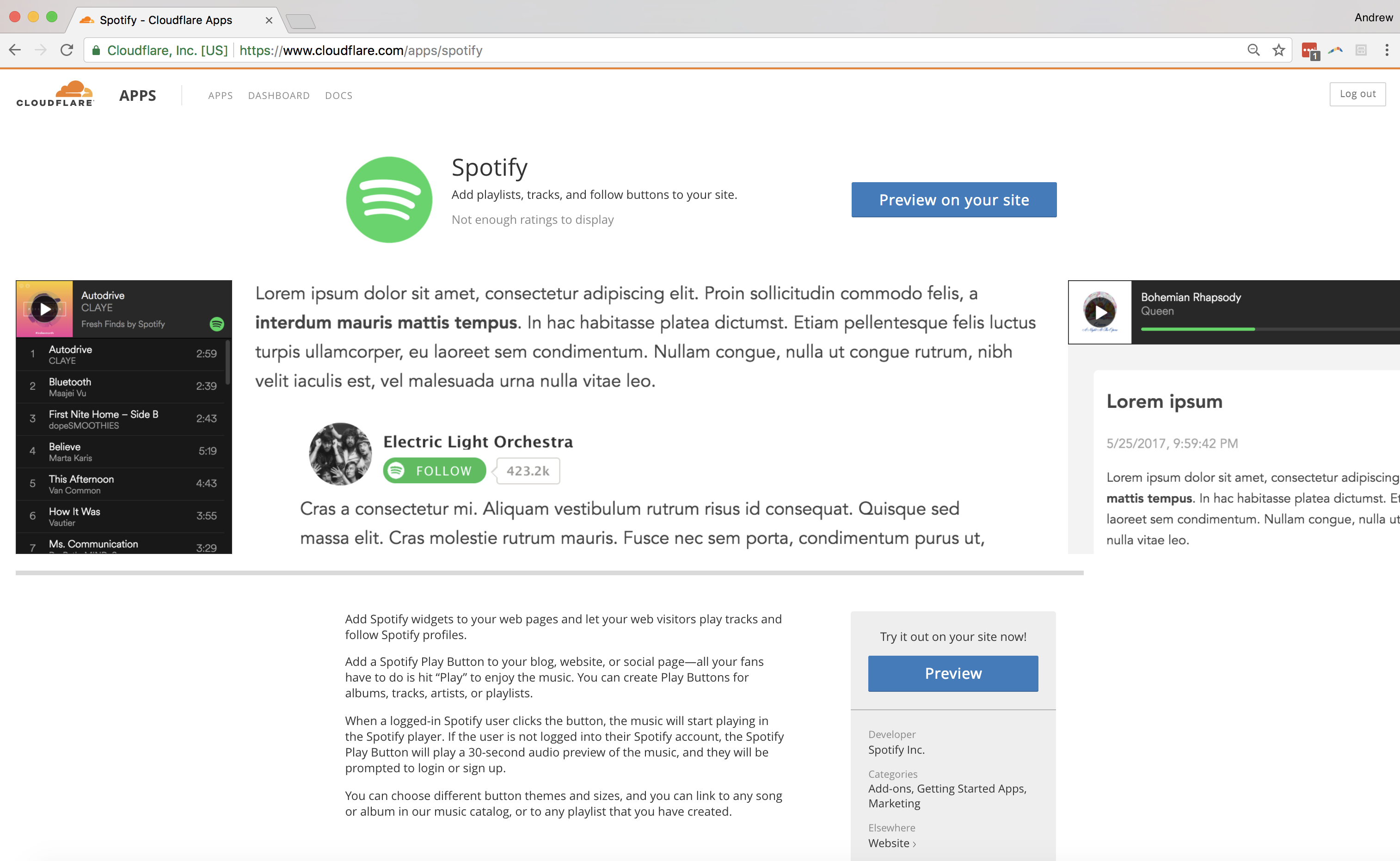

When benchmarking OpenSSL 1.1.1dev, I discovered that the performance of the cipher ChaCha20-Poly1305 does not scale very well. On a single thread, it ran at approximately 2.89GB/s, whereas on 24 cores and 48 threads it reached just over 35GB/s.

CC BY-SA 2.0 image by blumblaum

Now this is a very high number, but I would like to see something closer to 69GB/s. 35GB/s is just 1.46GB/s/core, or roughly 50% of the single core performance. AES-GCM scales much better, to 80% of single core performance, which is understandable, because the CPU can sustain higher frequency turbo on a single core, but not all cores.
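The arithmetic behind those numbers is easy to reproduce. The sketch below is only a worked calculation using the figures quoted above (2.89GB/s on one thread, about 35GB/s on 24 cores); the function name and dictionary keys are made up for illustration.

```python
def scaling_summary(single_thread_gbps, all_cores_gbps, cores):
    """Compare measured multi-core throughput with ideal linear scaling."""
    ideal_gbps = single_thread_gbps * cores      # what perfect scaling would give
    per_core_gbps = all_cores_gbps / cores       # what each core actually delivers
    return {
        "ideal_gbps": ideal_gbps,
        "per_core_gbps": per_core_gbps,
        "fraction_of_single_core": per_core_gbps / single_thread_gbps,
    }


if __name__ == "__main__":
    # Figures from the post; "just over 35" is rounded down to 35.0 here.
    for name, value in scaling_summary(2.89, 35.0, 24).items():
        print(f"{name}: {value:.2f}")
```

With these inputs the ideal figure is a little over 69GB/s, and each core delivers about 1.46GB/s, or roughly half of the single-thread rate.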

Why is the scaling of ChaCha20-Poly1305 so poor? Meet AVX-512. AVX-512 is a new Intel instruction set that adds many new 512-bit wide SIMD instructions and promotes most of the existing ones to 512-bit. The problem with such wide instructions is that they consume power. A lot of power. Imagine a single instruction that does the work of 64 regular Continue reading

Privacy Pass – “The Math”

This is a guest post by Alex Davidson, a PhD student in Cryptography at Royal Holloway, University of London, who is part of the team that developed Privacy Pass. Alex worked at Cloudflare for the summer on deploying Privacy Pass on the Cloudflare network.

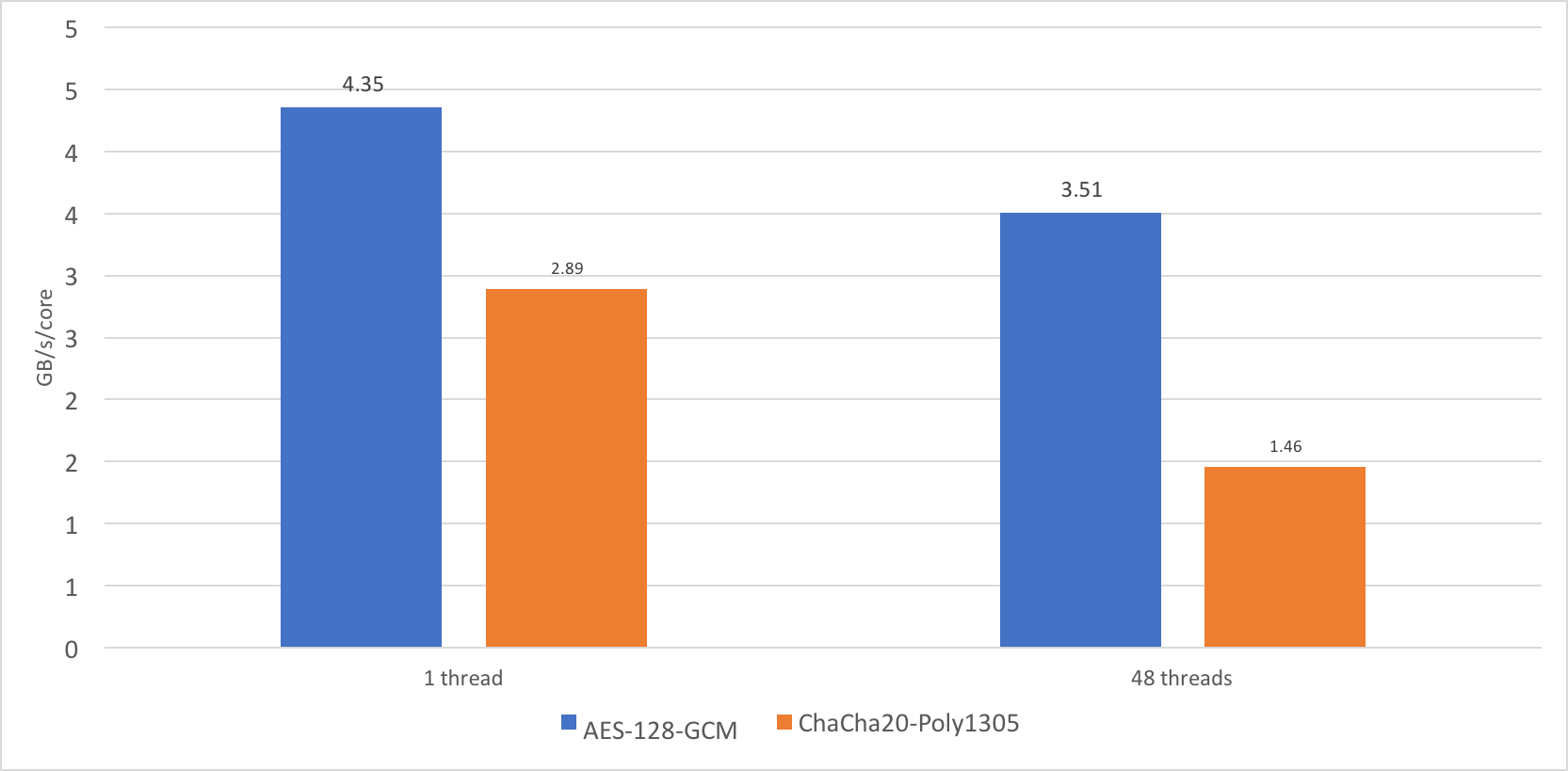

During a recent internship at Cloudflare, I had the chance to help integrate support for improving the accessibility of websites that are protected by the Cloudflare edge network. Specifically, I helped develop an open-source browser extension named ‘Privacy Pass’ and added support for the Privacy Pass protocol within Cloudflare infrastructure. Currently, Privacy Pass works with the Cloudflare edge to help honest users reduce the number of Cloudflare CAPTCHA pages that they see when browsing the web. However, the operation of Privacy Pass is not limited to the Cloudflare use-case and we envisage that it will find uses across a wider and more diverse range of applications as support grows.

In summary, this browser extension allows a user to generate cryptographically ‘blinded’ tokens that can then be signed by supporting servers following some receipt of authenticity (e.g. a CAPTCHA solution). The browser extension can then use these tokens to ‘prove’ honesty in future communications with the Continue reading

Cloudflare supports Privacy Pass

Enabling anonymous access to the web with privacy-preserving cryptography

Cloudflare supports Privacy Pass, a recently-announced privacy-preserving protocol developed in collaboration with researchers from Royal Holloway and the University of Waterloo. Privacy Pass leverages an idea from cryptography — zero-knowledge proofs — to let users prove their identity across multiple sites anonymously without enabling tracking. Users can now use the Privacy Pass browser extension to reduce the number of challenge pages presented by Cloudflare. We are happy to support this protocol and believe that it will help improve the browsing experience for some of the Internet’s least privileged users.

The Privacy Pass extension is available for both Chrome and Firefox. When people use anonymity services or shared IPs, it makes it more difficult for website protection services like Cloudflare to identify their requests as coming from legitimate users and not bots. Privacy Pass helps reduce the friction for these users—which include some of the most vulnerable users online—by providing them a way to prove that they are a human across multiple sites on the Cloudflare network. This is done without revealing their identity, and without exposing Cloudflare customers to additional threats from malicious bots. As the first service to support Privacy Continue reading

ARM Takes Wing: Qualcomm vs. Intel CPU comparison

One of the nicer perks I have here at Cloudflare is access to the latest hardware, long before it even reaches the market.

Until recently I mostly played with Intel hardware. For example, Intel supplied us with an engineering sample of their Skylake-based Purley platform back in August 2016, to give us time to evaluate it and optimize our software. As a former Intel Architect, who did a lot of work on Skylake (as well as Sandy Bridge, Ivy Bridge and Ice Lake), I really enjoy that.

Our previous generation of servers was based on the Intel Broadwell micro-architecture. Our configuration includes dual-socket Xeon E5-2630 v4 CPUs, with 10 cores each, running at 2.2GHz, with a 3.1GHz turbo boost and hyper-threading enabled, for a total of 40 threads per server.

Since Intel was, and still is, the undisputed leader of the server CPU market with greater than 98% market share, our upgrade process until now was pretty straightforward: every year Intel releases a new generation of CPUs, and every year we buy them. In the process we usually get two extra cores per socket, and all the extra architectural features such an upgrade brings: hardware AES and CLMUL in Westmere, Continue reading

LavaRand in Production: The Nitty-Gritty Technical Details

Introduction

Courtesy of @mahtin

As some of you may know, there's a wall of lava lamps in the lobby of our San Francisco office that we use for cryptography. In this post, we’re going to explore how that works in technical detail. This post assumes a technical background. For a higher-level discussion that requires no technical background, see Randomness 101: LavaRand in Production.

Background

As we’ve discussed in the past, cryptography relies on the ability to generate random numbers that are both unpredictable and kept secret from any adversary. In this post, we’re going to go into fairly deep technical detail, so there is some background that we’ll need to ensure that everybody is on the same page.

True Randomness vs Pseudorandomness

In cryptography, the term random means unpredictable. That is, a process for generating random bits is secure if an attacker is unable to predict the next bit with greater than 50% accuracy (in other words, no better than random chance).
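To make the "predict the next bit" test concrete, here is a small Python sketch. It is not from the post: it pits one very simple guessing strategy against two bit sources. The first is the lowest bit of a linear congruential generator (an illustrative, deliberately weak choice with textbook-style constants), the second is Python's `secrets` module. All function names are made up for illustration.

```python
import secrets


def lcg_low_bits(seed, count, a=1103515245, c=12345, m=2**31):
    """Lowest bit of each output of a linear congruential generator (not secure)."""
    bits, state = [], seed
    for _ in range(count):
        state = (a * state + c) % m
        bits.append(state & 1)
    return bits


def csprng_bits(count):
    """Bits drawn from the operating system's cryptographic random source."""
    return [secrets.randbits(1) for _ in range(count)]


def flip_predictor_accuracy(bits):
    """Fraction of bits guessed correctly by 'the next bit is the opposite of the last'."""
    hits = sum(1 for prev, nxt in zip(bits, bits[1:]) if nxt == 1 - prev)
    return hits / (len(bits) - 1)


if __name__ == "__main__":
    print("LCG low bit:", flip_predictor_accuracy(lcg_low_bits(42, 10_000)))
    print("secrets    :", flip_predictor_accuracy(csprng_bits(10_000)))
```

Because the multiplier and increment are both odd and the modulus is a power of two, the generator's lowest bit simply alternates, so the guesser is right every time. Against the `secrets` bits it should hover around 50%. Surviving one simple guesser does not prove a source is secure; it only shows what failing the test looks like.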

We can obtain randomness that is unpredictable using one of two approaches. The first produces true randomness, while the second produces pseudorandomness.

True randomness is any information learned through the Continue reading

Randomness 101: LavaRand in Production

Introduction

Courtesy of @mahtin

As some of you may know, there's a wall of lava lamps in the lobby of our San Francisco office that we use for cryptography. In this post, we’re going to explore how that works. This post assumes no technical background. For a more in-depth look at the technical details, see LavaRand in Production: The Nitty-Gritty Technical Details.

Background

Randomness in Cryptography

As we’ve discussed in the past, cryptography relies on the ability to generate random numbers that are both unpredictable and kept secret from any adversary.

But “random” is a pretty tricky term; it’s used in many different fields to mean slightly different things. And like all of those fields, its use in cryptography is very precise. In some fields, a process is random simply if it has the right statistical properties. For example, the digits of pi are said to be random because all sequences of numbers appear with equal frequency (“15” appears as frequently as “38”, “426” appears as frequently as “297”, etc). But for cryptography, this isn’t enough - random numbers must be unpredictable.

To understand what unpredictable means, it helps to consider that all Continue reading

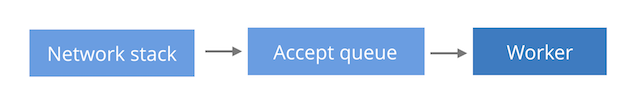

Perfect locality and three epic SystemTap scripts

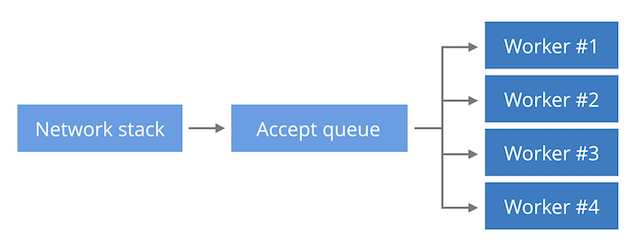

In a recent blog post we discussed epoll behavior causing uneven load among NGINX worker processes. We suggested a workaround: the REUSEPORT socket option. It changes the queuing from the "combined queue model" aka Waitrose (formally: M/M/s), to a dedicated accept queue per worker aka "the Tesco superstore model" (formally: M/M/1). With this setup the load is spread more evenly, but in certain conditions the latency distribution might suffer.

After reading that piece, a colleague of mine, John, said: "Hey Marek, don't forget that REUSEPORT has an additional advantage: it can improve packet locality! Packets can avoid being passed around CPUs!"

John had a point. Let's dig into this step by step.

In this blog post we'll explain the REUSEPORT socket option, how it can help with packet locality and its performance implications. We'll show three advanced SystemTap scripts which we used to help us understand and measure the packet locality.

A shared queue

The standard BSD socket API model is rather simple. In order to receive new TCP connections a program calls bind() and then listen() on a fresh socket. This will create a single accept queue. Programs can share the file descriptor - pointing Continue reading

5 Strategies to Promote Your App

Brady Gentile from Cloudflare's product team wrote an App Developer Playbook, embedded within the developer documentation page. He decided to write it after he and his team conducted several app developer interviews, finding that many developers wanted to learn how to better promote their apps.

They wanted to help app authors in areas outside a developer's core expertise. Social media posting, community outreach, email deployment, SEO, blog posting and syndication, etc. can be daunting.

I wanted to take a moment to highlight some of the tips from the App Developer Playbook because I think Brady did a great job of providing clear ways to approach promotional strategies.

5 Promotional Strategies

1. Share with online communities

Your app’s potential audience likely reads community-aggregated news sites such as Hacker News, Product Hunt, or Reddit. Sharing your app across these websites is a great way for users to find your app.

For apps that are interesting to developers, designers, scientists, entrepreneurs, etc., be sure to share your work with the Hacker News community. Be sure to follow the official guidelines when posting and when engaging with the community. It may be tempting to ask your friends to upvote Continue reading

Using Google Cloud Platform to Analyze Cloudflare Logs

We’re excited to announce that we now offer deep insights into your domain’s web traffic, working with Google Cloud Platform (GCP). While Cloudflare Enterprise customers have always had access to their logs, they previously had to rely on their own tools to process them, adding extra complexity and cost.

Cloudflare logs provide real time insight into traffic, malicious activity, attack incidents, and infrastructure health checks. The output is used to help customers adjust their settings, manage costs and resources, and plan for expansion.

Working with Google, we created an end-to-end solution that allows customers to retrieve Cloudflare access logs and then store and process the data in a simple way. GCP components such as Google Storage, Cloud Function, BigQuery and Data Studio come together to make this possible.

One of the biggest challenges of data analysis is storing and processing large volumes of data within a short time period while avoiding high costs. GCP Storage and BigQuery easily address these challenges.

Cloudflare customers can decide if they wish to obtain and process data from Cloudflare access logs on demand or on a regular basis. The full solution is described in this Knowledge Base article. Initial setup takes no more than 30 minutes Continue reading

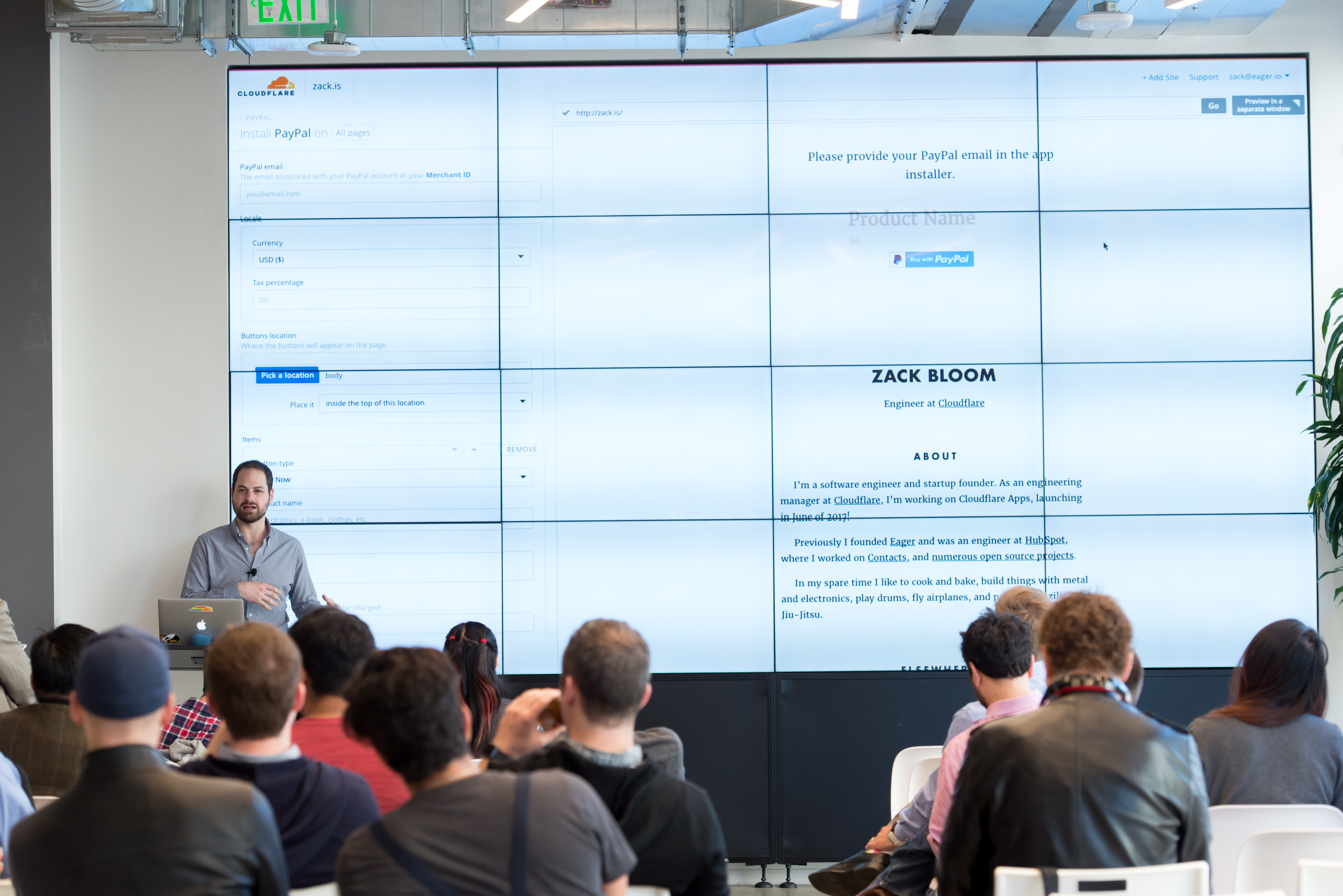

Spotify’s Cloudflare App is open source: fork it for your next project

Earlier this year, Cloudflare Apps launched so that app developers can leverage our global network of 6 million+ websites, applications, and APIs. I’d like to take a moment to highlight Spotify, which was a launch partner for Cloudflare Apps, especially since they have elected to open source the code to their Cloudflare App.

About Spotify

Spotify is the leading digital service for streaming music, serving more than 140 million listeners.

What does the Spotify app do?

Recently, Spotify launched a Cloudflare App to instantly and easily embed the Spotify player onto your website without having to copy / paste anything.

Who should install the Spotify app?

A musician who runs a site for their band - they can now play samples of new tracks on their tour calendar page and psych up their fans.

A game creator who wants to share their game's soundtrack with their fans.

An activewear company which wants to deliver popular running playlists to its customers.

Web properties that install the Spotify app have the ability to increase user engagement.

Add Spotify widgets to your web pages and let your users play tracks and follow Spotify profiles. Add a Spotify Play Button Continue reading

How to Monkey-Patch the Linux Kernel

I have a weird setup. I type in Dvorak. But, when I hold ctrl or alt, my keyboard reverts to Qwerty.

You see, the classic text-editing hotkeys, ctrl+Z, ctrl+X, ctrl+C, and ctrl+V are all located optimally for a Qwerty layout: next to the control key, easy to reach with your left hand while mousing with your right. In Dvorak, unfortunately, these hotkeys are scattered around mostly on the right half of the keyboard, making them much less convenient. Using Dvorak for typing but Qwerty for hotkeys turns out to be a nice compromise.

But, the only way I could find to make this work on Linux / X was to write a program that uses X "grabs" to intercept key events and rewrite them. That was mostly fine, until recently, when my machine, unannounced, updated to Wayland. Remarkably, I didn't even notice at first! But at some point, I realized my hotkeys weren't working right. You see, Wayland, unlike X, actually has some sensible security rules, and as a result, random programs can't just man-in-the-middle all keyboard events anymore. Which broke my setup.

Yes, that's right, I'm that guy:

Source: xkcd 1172

So what was I to do? I began Continue reading

Why does one NGINX worker take all the load?

Scaling up TCP servers is usually straightforward. Most deployments start by using a single process setup. When the need arises more worker processes are added. This is a scalability model for many applications, including HTTP servers like Apache, NGINX or Lighttpd.

CC BY-SA 2.0 image by Paul Townsend

Increasing the number of worker processes is a great way to overcome a single CPU core bottleneck, but it opens up a whole new set of problems.

There are generally three ways of designing a TCP server with regard to performance:

(a) Single listen socket, single worker process.

(b) Single listen socket, multiple worker processes.

(c) Multiple worker processes, each with separate listen socket.

(a) Single listen socket, single worker process

This is the simplest model, where processing is limited to a single CPU. A single worker process is doing both accept() calls to receive the new connections and processing of the requests themselves. This model is the preferred Lighttpd setup.
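As a rough illustration of model (a), here is a minimal Python sketch. It is not how Lighttpd or NGINX are implemented, and the function name is made up. It runs the clients and the single worker in one thread on the loopback interface, which also shows that connections wait in the listen socket's accept queue until the worker calls accept().

```python
import socket


def run_single_worker(num_clients=3):
    """Model (a): one listen socket, one worker that both accepts and processes."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.settimeout(5)             # fail instead of hanging if something goes wrong
    listener.bind(("127.0.0.1", 0))    # port 0 asks the OS for any free port
    listener.listen(16)                # creates the accept queue (keep num_clients <= 16)
    port = listener.getsockname()[1]

    # The clients connect and send before the worker calls accept(): the kernel
    # completes the handshakes and parks the connections in the accept queue.
    clients = []
    for i in range(num_clients):
        client = socket.create_connection(("127.0.0.1", port), timeout=5)
        client.sendall(f"request {i}\n".encode())
        clients.append(client)

    # The single worker: accept() a connection, process it, move on to the next.
    for _ in range(num_clients):
        conn, _addr = listener.accept()
        with conn, conn.makefile("rb") as reader:
            conn.sendall(reader.readline().upper())

    replies = []
    for client in clients:
        with client, client.makefile("rb") as reader:
            replies.append(reader.readline().decode().strip())
    listener.close()
    return replies


if __name__ == "__main__":
    print(run_single_worker())
```

Every request here is handled strictly one after another, which is exactly why this model cannot use more than one CPU.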

(b) Single listen socket, multiple worker processes

The new connections sit in a single kernel data structure (the listen socket). Multiple worker processes are doing both the accept() calls and processing of the requests. This model enables some spreading of the inbound Continue reading

Performing & Preventing SSL Stripping: A Plain-English Primer

Over the past few days we learnt about a new attack that exposed a serious weakness in the encryption protocol used to secure all modern Wi-Fi networks. The KRACK Attack effectively allows interception of traffic on wireless networks secured by the WPA2 protocol. Whilst it is possible to backward patch implementations to mitigate this vulnerability, security updates are rarely installed universally.

Prior to this vulnerability, there was no shortage of wireless networks that were vulnerable to interception attacks. Some wireless networks continue to use a dated security protocol (called WEP) that is demonstrably "totally insecure" 1; other wireless networks, such as those in coffee shops and airports, remain completely open and do not authenticate users. Once an attacker gains access to a network, they can act as a Man-in-the-Middle to intercept connections over the network (using tactics known as ARP Cache Poisoning and DNS Hijacking). And yes, these interception tactics can easily be deployed against wired networks where someone gains access to an ethernet port.

With all this known, it is beyond doubt that it is simply not secure to blindly trust the medium that connects your users to the internet. HTTPS was created to allow HTTP traffic to Continue reading

Helping to make LuaJIT faster

This is a guest post by Laurence Tratt, who is a programmer and Reader in Software Development in the Department of Informatics at King's College London where he leads the Software Development Team. He is also an EPSRC Fellow.

Programming language Virtual Machines (VMs) are familiar beasts: we use them to run apps on our phone, code inside our browsers, and programs on our servers. Traditional VMs are useful and widely used: nearly every working programmer is familiar with one or more of the “standard” Lua, Python, or Ruby VMs. However, such VMs are simplistic, containing only an interpreter (a simple implementation of a language). These often can’t run our programs as fast as we need; and, even when they can, they often waste huge amounts of server CPU time. We sometimes forget that servers consume a large, and growing, chunk of the world’s electricity output: slow language implementations are, quite literally, changing the world, and not in a good way.

More advanced VMs come with Just-In-Time (JIT) compilers (well known examples include LuaJIT, HotSpot (aka “the JVM”), PyPy, and V8). Such VMs observe a program’s run-time behaviour and use that to compile frequently executed parts of the program Continue reading

A Celebration of Learning at Grace Hopper

Over the course of my career, I’ve been to many conferences, interacted with thousands of candidates, and attended countless keynotes, roundtables, and sessions. I can say without a doubt that the Grace Hopper Celebration stood out from the rest. And I think my team would agree.

During the three-day event, we screened more than 50 candidates, conducted 24 onsite interviews, and had more than 600 people visit our booth. Not bad for a booth near the back competing with an AirBnB booth that had a literal house on top of it.

Before the conference, we were expecting about 200 visitors to our booth, so the turnout clearly exceeded our expectations. More importantly, we couldn’t have predicted the breadth of talent we would interact with at the conference. That’s not to say that I was surprised; Grace Hopper attracts women from all over the world, including students, seasoned professionals, hackers, engineers, and business leaders. This year was the biggest yet, with more than 12,000 attendees from across all tech sectors, backgrounds, and interests. So I certainly wasn’t surprised to meet all of these women, but I was definitely inspired.

My team Continue reading

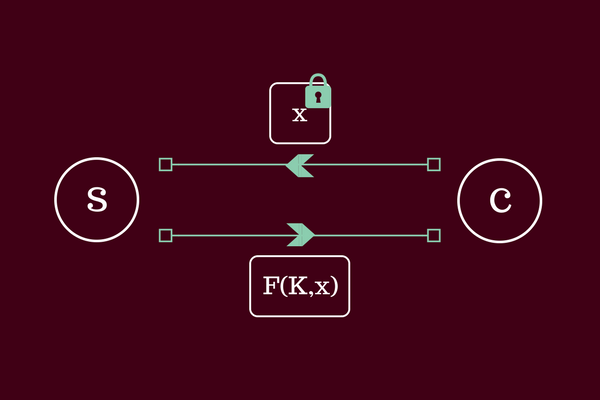

Cloudflare London Meetup Recap

Cloudflare helps make over 6 million websites faster and more secure. In doing so, Cloudflare has a vast and diverse community of users throughout the world. Whether discussing Cloudflare on social media, browsing our community forums or following Pull Requests on our open-source projects, there is no shortage of lively discussions amongst Cloudflare users. Occasionally, however, it is important to move these discussions out from cyberspace and take time to connect in person.

A little while ago, we did exactly this and ran a meetup in the Cloudflare London office. Ivan Rustic from Hardenize was our guest speaker; he demonstrated how Hardenize developed a Cloudflare App to help build a culture of security. I presented two other talks, which included a primer on how the Cloudflare network is architected and wrapped up with a discussion on how you can build and monetise your very own Cloudflare App.

Since we presented this meet-up, I've received a few requests to share the videos of all the talks. You can find all three of the talks from our last London office meet-up in this blog post.