Introducing WARP for Desktop and Cloudflare for Teams

Cloudflare launched ten years ago to keep web-facing properties safe from attack and fast for visitors. Cloudflare customers owned Internet properties that they placed on our network. Visitors to those sites and applications enjoyed a faster experience, but that speed was not consistent for accessing Internet properties outside the Cloudflare network.

Over the last few years, we began building products that could help deliver a faster and safer Internet to everyone, not just visitors to sites on our network. We started with the first step to visiting any website, a DNS query, and released the world’s fastest public DNS resolver, 1.1.1.1. Any Internet user could improve the speed to connect to any website simply by changing their resolver.

While making the Internet faster for users, we also focused on making it more private. We built 1.1.1.1 to accelerate the last mile of connections, from user to our edge or other destinations on the Internet. Unlike other providers, we did not build it to sell ads.

Last year we went one step further to make the entire connection from a device both faster and safer when we launched Cloudflare WARP. With the push of a Continue reading

Cloudflare Gateway now protects teams, wherever they are

In January 2020, we launched Cloudflare for Teams—a new way to protect organizations and their employees globally, without sacrificing performance. Cloudflare for Teams centers around two core products - Cloudflare Access and Cloudflare Gateway.

In March 2020, Cloudflare launched the first feature of Cloudflare Gateway, a secure DNS filtering solution powered by the world’s fastest DNS resolver. Gateway’s DNS filtering feature kept users safe by blocking DNS queries to potentially harmful destinations associated with threats like malware, phishing, or ransomware. Organizations could change the router settings in their office and, in about five minutes, keep the entire team safe.
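The filtering decision described above can be pictured as a lookup against a list of known-bad domains. What follows is a minimal sketch under stated assumptions, not Gateway's implementation: the `BLOCKED` table, its category labels, and the `filter_query` function are hypothetical names invented for illustration.

```python
# Hypothetical blocklist mapping a domain to the threat category it belongs to.
BLOCKED = {
    "malware.example": "malware",
    "phish.example": "phishing",
}


def filter_query(name, blocked=BLOCKED):
    """Return ("block", category) if the name or any parent domain is listed,
    otherwise ("allow", None)."""
    labels = name.rstrip(".").lower().split(".")
    # Check the full name first, then each parent domain in turn.
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in blocked:
            return ("block", blocked[candidate])
    return ("allow", None)
```

Matching on parent domains means a query for a subdomain of a listed destination is blocked as well, which is one plausible design choice among several.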

Shortly after that launch, entire companies began leaving their offices. Users connected from home offices that started out makeshift and have become permanent in the last several months. Protecting users and data has now shifted from a single office-level setting to user and device management in hundreds or thousands of locations.

Security threats on the Internet have also evolved. Phishing campaigns and malware attacks have increased in the last six months. Detecting those types of attacks requires looking deeper than just the DNS query.

Starting today, we’re excited to announce two features in Cloudflare Gateway that solve those new challenges. First, Continue reading

Argo Tunnels that live forever

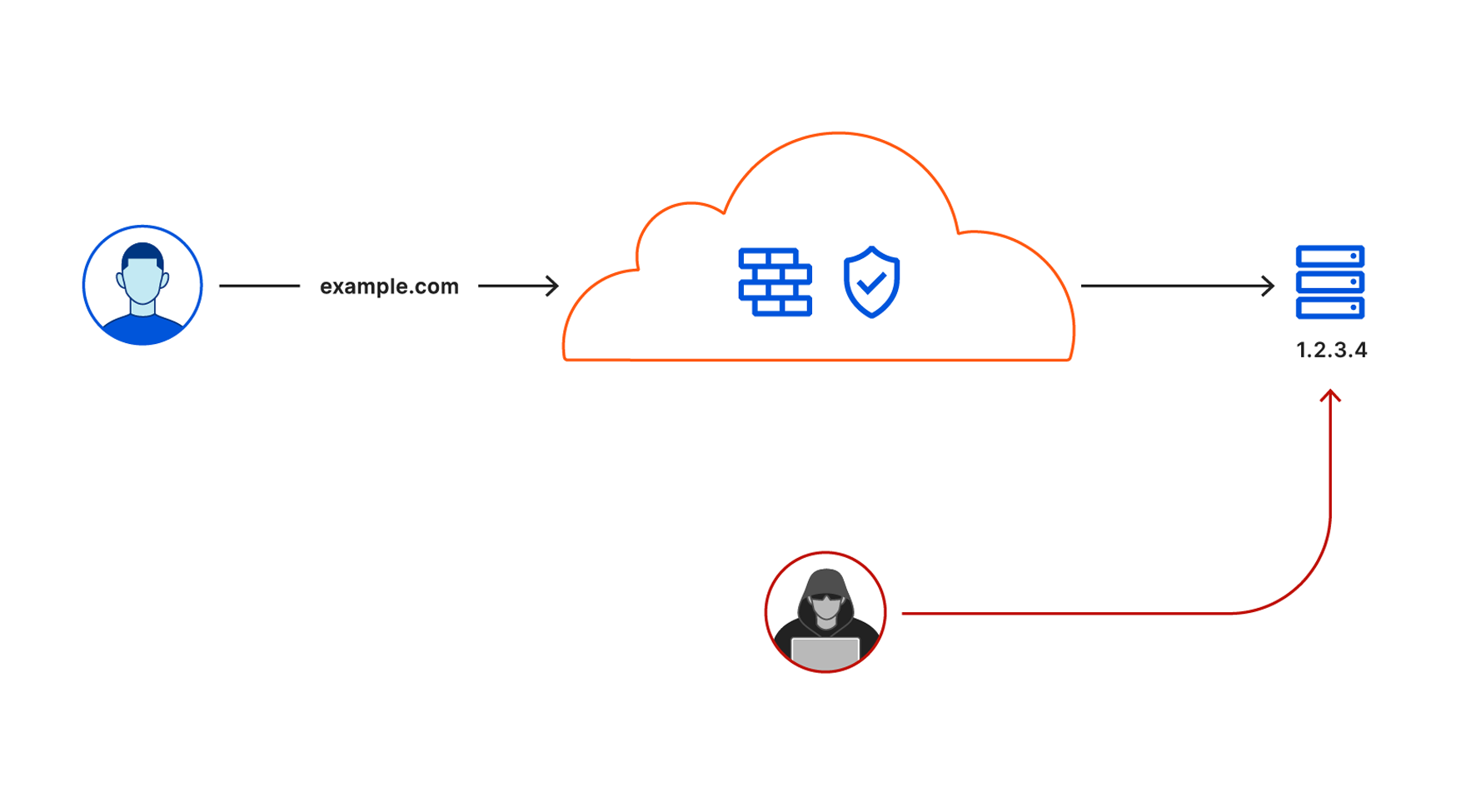

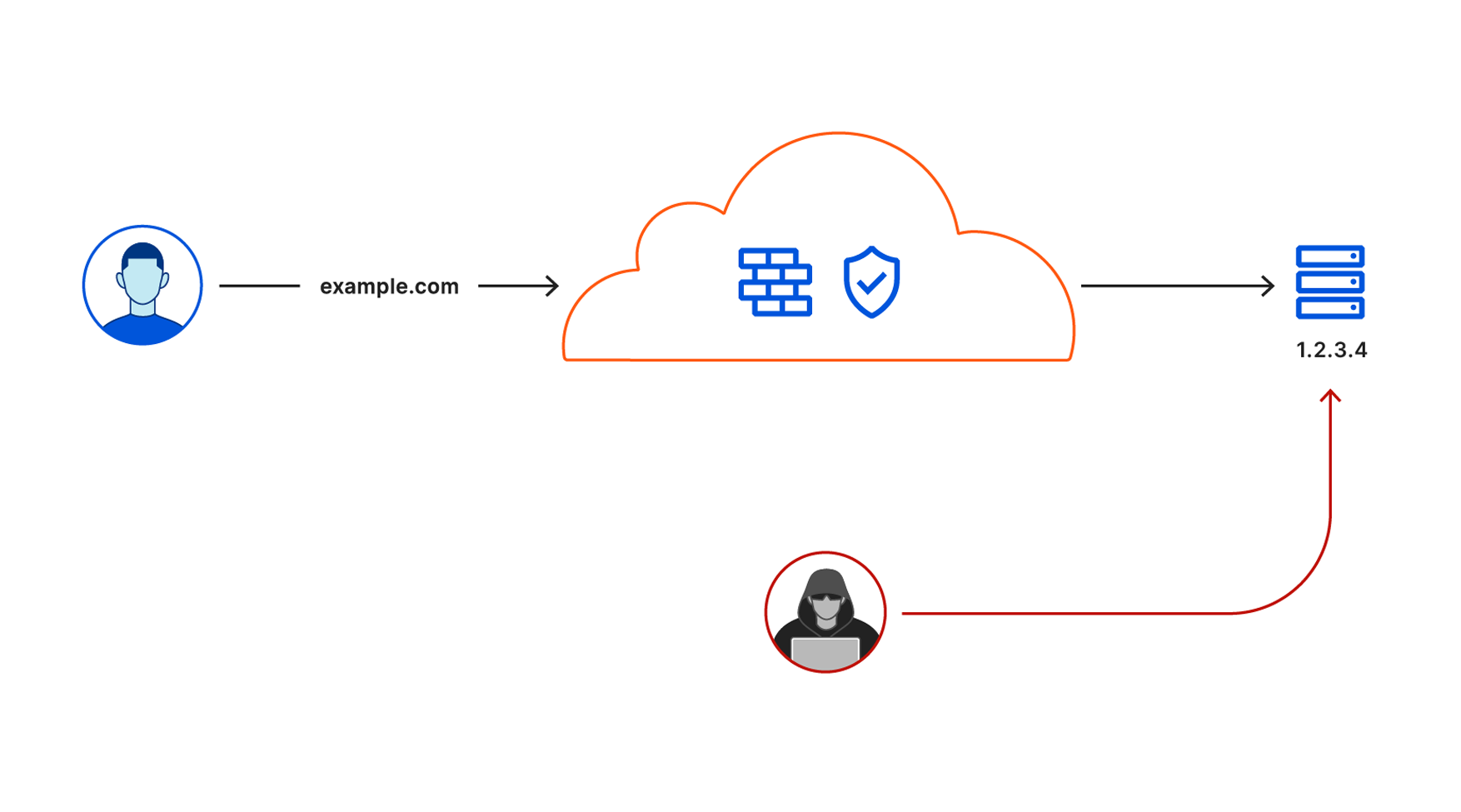

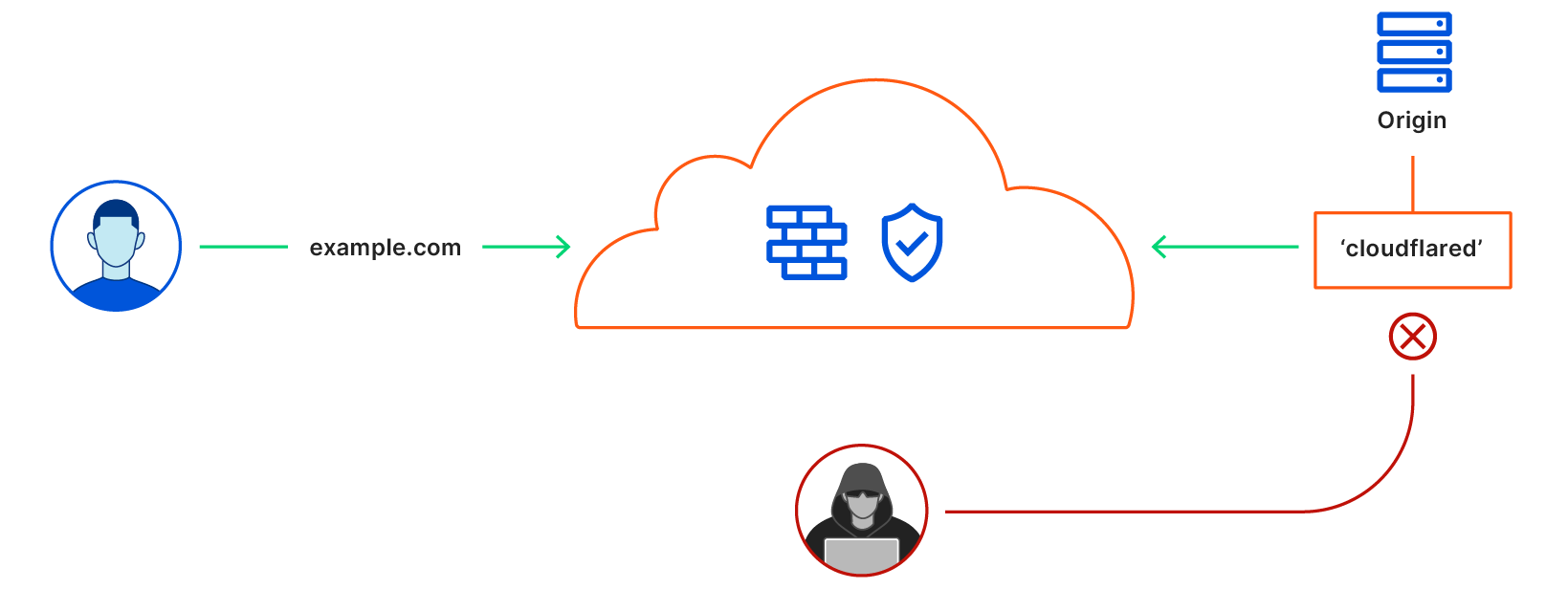

Cloudflare secures your origin servers by proxying requests to your DNS records through our anycast network and to the external IP of your origin. However, external IP addresses can provide attackers with a path around Cloudflare security if they discover those destinations.

We launched Argo Tunnel as a secure way to connect your origin to Cloudflare without a publicly routable IP address. With Tunnel, you don’t send traffic to an external IP. Instead, a lightweight daemon runs in your infrastructure and creates outbound-only connections to Cloudflare’s edge. With Argo Tunnel, you can quickly deploy infrastructure in a Zero Trust model by ensuring all requests to your resources pass through Cloudflare’s security filters.

Originally, your Argo Tunnel connection corresponded to a DNS record in your account. Requests to that hostname hit Cloudflare’s network first and our edge sends those requests over the Argo Tunnel to your origin. Since these connections are outbound-only, you no longer need to poke holes in your infrastructure’s firewall. Your origins can serve traffic through Cloudflare without being vulnerable to attacks that bypass Cloudflare.

However, fitting an outbound-only connection into a reverse proxy creates some ergonomic and stability hurdles. The original Argo Tunnel architecture attempted to both Continue reading

Zero Trust For Everyone

We launched Cloudflare for Teams to make Zero Trust security accessible for all organizations, regardless of size, scale, or resources. Starting today, we are excited to take another step on this journey by announcing our new Teams plans, and more specifically, our Cloudflare for Teams Free plan, which protects up to 50 users at no cost. To get started, sign up today.

If you’re interested in how and why we’re doing this, keep scrolling.

Our Approach to Zero Trust

Cloudflare Access is one-half of Cloudflare for Teams - a Zero Trust solution that secures inbound connections to your protected applications. Cloudflare Access works like a bouncer, checking identity at the door to all of your applications.

The other half of Cloudflare for Teams is Cloudflare Gateway which, as our clever name implies, is a Secure Web Gateway protecting all of your users’ outbound connections to the Internet. To continue with this analogy, Cloudflare Gateway is your organization’s bodyguard, securing your users as they navigate the Internet.

Together, these two solutions provide a powerful, single dashboard to protect your users, networks, and applications from malicious actors.

A Mission-Driven Solution

At Cloudflare, our mission is to help build a better Internet. That Continue reading

Cloudflare Access: now for SaaS apps, too

We built Cloudflare Access™ as a tool to solve a problem we had inside of Cloudflare. We rely on a set of applications to manage and monitor our network. Some of these are popular products that we self-host, like the Atlassian suite, and others are tools we built ourselves. We deployed those applications on a private network. To reach them, you had to either connect through a secure WiFi network in a Cloudflare office, or use a VPN.

That VPN added friction to how we work. We had to dedicate part of Cloudflare’s onboarding just to teaching users how to connect. If someone received a PagerDuty alert, they had to rush to their laptop and sit and wait while the VPN connected. Team members struggled to work while mobile. New offices had to backhaul their traffic. In 2017 and early 2018, our IT team triaged hundreds of help desk tickets with titles like these:

While our IT team wrestled with usability issues, our Security team decided that poking holes in our private network was too much of a risk to maintain. Once on the VPN, users almost always had too much access. We had limited visibility into what happened on Continue reading

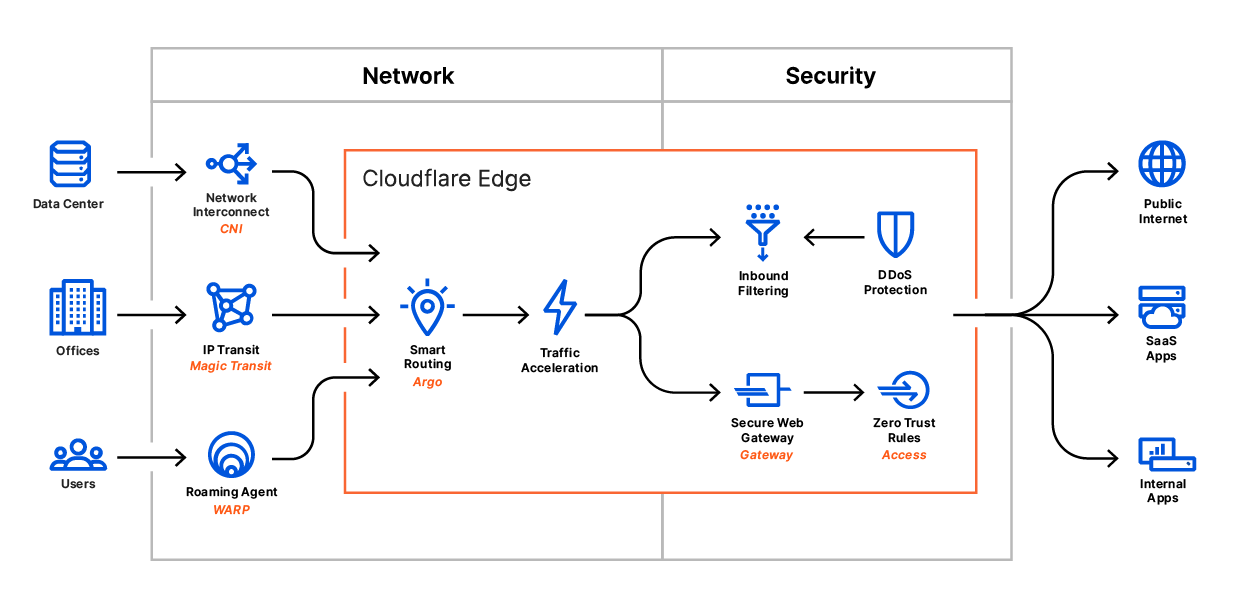

Introducing Cloudflare One

Today we’re announcing Cloudflare One™. It is the culmination of engineering and technical development guided by conversations with thousands of customers about the future of the corporate network. It provides secure, fast, reliable, cost-effective network services, integrated with leading identity management and endpoint security providers.

Over the course of this week, we'll be rolling out the components that enable Cloudflare One, including our WARP Gateway Clients for desktop and mobile, our Access for SaaS solution, our browser isolation product, and our next generation network firewall and intrusion detection system.

The old model of the corporate network has been made obsolete by mobile, SaaS, and the public cloud. The events of 2020 have only accelerated the need for a new model. Zero Trust networking is the future and we are proud to be enabling that future. Having worked on the components of what is Cloudflare One for the last two years, we’re excited to unveil today how they’ve come together into a robust SASE solution and share how customers are already using it to deliver the more secure and productive future of the corporate network.

What Is Cloudflare One? Secure, Optimized Global Networking

Cloudflare One is a comprehensive, cloud-based network-as-a-service solution Continue reading

What is Cloudflare One?

Running a secure enterprise network is really difficult. Employees spread all over the world work from home. Applications are run from data centers, hosted in public cloud, and delivered as services. Persistent and motivated attackers exploit any vulnerability.

Enterprises used to build networks that resembled a castle-and-moat. The walls and moat kept attackers out and data in. Team members entered over a drawbridge and tended to stay inside the walls. Trust folks on the inside of the castle to do the right thing, and deploy whatever you need in the relative tranquility of your secure network perimeter.

The Internet, SaaS, and “the cloud” threw a wrench in that plan. Today, more of the workloads in a modern enterprise run outside the castle than inside. So why are enterprises still spending money building more complicated and more ineffective moats?

Today, we’re excited to share Cloudflare One™, our vision to tackle the intractable job of corporate security and networking.

Cloudflare One combines networking products that enable employees to do their best work, no matter where they are, with consistent security controls deployed globally.

Starting today, you can begin replacing traffic backhauls to security appliances with Cloudflare WARP and Gateway to filter Continue reading

Exploring WebAssembly AI Services on Cloudflare Workers

This is a guest post by Videet Parekh, Abelardo Lopez-Lagunas, and Sek Chai at Latent AI.

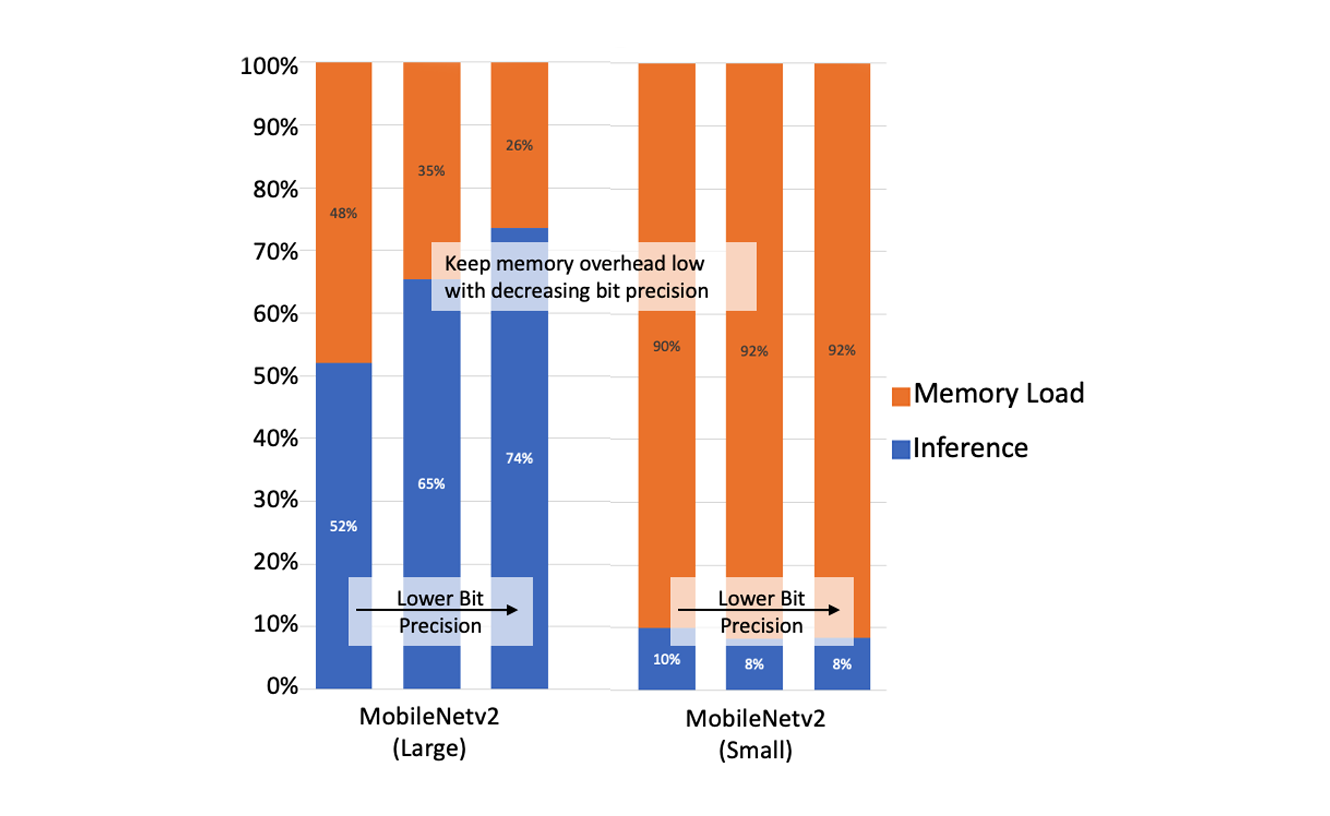

Edge networks present a significant opportunity for Artificial Intelligence (AI) performance and applicability. AI technologies already make it possible to run compelling applications like object and voice recognition, navigation, and recommendations.

AI at the edge presents a host of benefits. One is scalability—it is simply impractical to send all data to a centralized cloud. In fact, one study has predicted a global scope of 90 zettabytes generated by billions of IoT devices by 2025. Another is privacy—many users are reluctant to move their personal data to the cloud, whereas data processed at the edge are more ephemeral.

When AI services are distributed away from centralized data centers and closer to the service edge, it becomes possible to enhance the overall application speed without moving data unnecessarily. However, there are still challenges in making AI from the deep-cloud run efficiently on edge hardware. Here, we use the term deep-cloud to refer to highly centralized, massively-sized data centers. Deploying edge AI services can be hard because AI is both computationally and memory-bandwidth intensive. We need to tune the AI models so the computational latency and bandwidth Continue reading

Let’s build a Cloudflare Worker with WebAssembly and Haskell

This is a guest post by Cristhian Motoche of Stack Builders.

At Stack Builders, we believe that Haskell’s system of expressive static types offers many benefits to the software industry and the world-wide community that depends on our services. In order to fully realize these benefits, it is necessary to have proper training and access to an ecosystem that allows for reliable deployment of services. In exploring the tools that help us run our systems based on Haskell, our developer Cristhian Motoche has created a tutorial that shows how to compile Haskell to WebAssembly using Asterius for deployment on Cloudflare.

What is a Cloudflare Worker?

Cloudflare Workers is a serverless platform that allows us to run our code on the edge of the Cloudflare infrastructure. It's built on Google V8, so it’s possible to write functionalities in JavaScript or any other language that targets WebAssembly.

WebAssembly is a portable binary instruction format that can be executed fast in a memory-safe sandboxed environment. For this reason, it’s especially useful for tasks that need to perform resource-demanding and self-contained operations.

Why use Haskell to target WebAssembly?

Haskell is a pure functional language that can target WebAssembly. As such, it helps developers Continue reading

Know When You’ve Been DDoS’d

Today we’re announcing the availability of DDoS attack alerts. The alerts are available for free to all Cloudflare customers on paid plans.

Unmetered DDoS protection

Last week we celebrated Cloudflare’s 10th birthday in what we call Birthday Week. Every year, on each day of Birthday Week, we announce a new product with the goal of helping make the Internet a better place -- one that is safer and faster. To do that, over the years we’ve democratized many products that were previously only available to large enterprises by making them available for free (or at very low cost) to all. For example, on Cloudflare’s 7th birthday in 2017, we announced free unmetered DDoS protection as part of every Cloudflare product and every plan, including the free plan.

DDoS attacks aim to take down websites or online services and make them unavailable to the public. We wanted to make sure that every organization and every website is available and accessible, regardless of whether they can afford enterprise-grade DDoS protection. This has been a core part of our mission. We’ve been heavily investing in our DDoS protection capabilities over the last 10 years, and we will continue to do so in Continue reading

Birthday week: Cloudflare turns 10

2020 marks a major milestone for Cloudflare: it’s our 10th birthday.

We’ve always used birthdays as an opportunity to give back to the Internet. But this year — a year in which the Internet has been so central to giving us all some degree of connectedness and normalcy — it feels like giving back to the Internet has been more important than ever.

And while we couldn’t celebrate in person, we were humbled by some of the incredible minds that joined us online to talk about how the Internet has changed over the last ten years — and what we might see over the next ten.

With that, let’s recap the key announcements from Birthday Week 2020.

Day 1, Monday: Workers

During Birthday Week in 2017, Cloudflare announced Workers — a serverless platform that represented a completely new way to build applications: by writing your code directly onto our network edge. On Monday of this year’s Birthday Week, we announced Durable Objects and Cron Triggers — both of which continue to expand the use cases that Workers can address.

Many folks associate the serverless paradigm with functions as a service — which, at its core, is stateless. Workers KV started Continue reading

Introducing support for the AVIF image format

We've added support for the new AVIF image format in Image Resizing. It compresses images significantly better than older-generation formats such as WebP and JPEG. It's supported in Chrome desktop today, and support is coming to other Chromium-based browsers, as well as Firefox.

What’s the benefit?

More than half of an average website's bandwidth is spent on images. Improved image compression can save bandwidth and improve overall performance of the web. The compression in AVIF is so good that images can shrink to half the size of their JPEG and WebP equivalents.
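To put the two figures above together, here is a back-of-the-envelope sketch. It assumes the best case from the text (images shrinking to half their size) applies to every image on a site, which real sites will not always achieve; `bandwidth_saved` is a hypothetical helper, not part of any Cloudflare API.

```python
def bandwidth_saved(image_share, size_ratio):
    """Fraction of total bandwidth saved when images shrink to
    `size_ratio` of their original size.

    image_share: fraction of total bandwidth spent on images (0..1)
    size_ratio:  new image size divided by old image size (0..1)
    """
    if not (0 <= image_share <= 1 and 0 <= size_ratio <= 1):
        raise ValueError("both arguments must be between 0 and 1")
    return image_share * (1 - size_ratio)


# Images at half of all bandwidth, each shrinking to half its size:
# a quarter of the site's total bandwidth is saved.
print(bandwidth_saved(0.5, 0.5))
```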

What is AVIF?

AVIF is a combination of the HEIF ISO standard, and a royalty-free AV1 codec by Mozilla, Xiph, Google, Cisco, and many others.

Currently JPEG is the most popular image format on the Web. It's doing remarkably well for its age, and it will likely remain popular for years to come thanks to its excellent compatibility. There have been many previous attempts at replacing JPEG, such as JPEG 2000, JPEG XR and WebP. However, these formats offered only modest compression improvements, and didn't always beat JPEG on image quality. Compression and image quality in AVIF is better than in all of them, and by a wide margin.

Continue reading

Introducing Automatic Platform Optimization, starting with WordPress

Today, we are announcing a new service to serve more than just the static content of your website with the Automatic Platform Optimization (APO) service. With this launch, we are supporting WordPress, the most popular website hosting solution serving 38% of all websites. Our testing, as detailed below, showed a 72% reduction in Time to First Byte (TTFB), 23% reduction to First Contentful Paint, and 13% reduction in Speed Index for desktop users at the 90th percentile, by serving nearly all of your website’s content from Cloudflare’s network. This means visitors to your website see not only the first content sooner but all content more quickly.

With Automatic Platform Optimization for WordPress, your customers won’t suffer any slowness caused by common issues like shared hosting congestion, slow database lookups, or misbehaving plugins. This service is now available for anyone using WordPress. It costs $5/month for customers on our Free plan and is included, at no additional cost, in our Professional, Business, and Enterprise plans. No usage fees, no surprises, just speed.

How to get started

The easiest way to get started with APO is from your WordPress admin console.

1. First, install the Cloudflare WordPress plugin on your WordPress Continue reading

Building Automatic Platform Optimization for WordPress using Cloudflare Workers

This post explains how we implemented Automatic Platform Optimization for WordPress. In doing so, we have defined a new place to run WordPress plugins: at the edge, written with Cloudflare Workers. We provide the feature as a Cloudflare service, but what’s exciting is that anyone could build this using the Workers platform.

The service is an evolution of the ideas explained in an earlier zero-config edge caching of HTML blog post. The post will explain how Automatic Platform Optimization combines the best qualities of the regular Cloudflare cache with Workers KV to improve cache cold starts globally.
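The idea of pairing a per-location cache with a globally shared store can be sketched as a two-tier lookup. This is an illustrative model only, not the service's actual code: `TieredCache`, the plain dictionaries standing in for the regular cache and for Workers KV, and the `fetch_origin` callback are all assumptions made for the example.

```python
class TieredCache:
    """One edge location: a local cache backed by a store shared by all locations."""

    def __init__(self, global_store, fetch_origin):
        self.local = {}                   # stands in for the per-location cache
        self.global_store = global_store  # stands in for a global key-value store
        self.fetch_origin = fetch_origin  # called only when both tiers miss

    def get(self, url):
        """Return (body, tier) where tier names the layer that served the page."""
        if url in self.local:
            return self.local[url], "local"
        if url in self.global_store:
            body = self.global_store[url]
            self.local[url] = body        # warm the local tier for next time
            return body, "global"
        body = self.fetch_origin(url)
        self.global_store[url] = body     # make the page available to every location
        self.local[url] = body
        return body, "origin"
```

In this model a location that has never seen a page (a cold start) can still avoid the origin, as long as some other location has already populated the shared store.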

The optimization will work both with and without the Cloudflare for WordPress plugin integration. Not only have we provided a zero config edge HTML caching solution but by using the Workers platform we were also able to improve the performance of Google font loading for all pages.

We are launching the feature first for WordPress specifically but the concept can be applied to any website and/or content management system (CMS).

A new place to run WordPress plugins?

There are many individual WordPress plugins for performance that use similar optimizations to existing Cloudflare services. Automatic Platform Optimization is bringing them all together into Continue reading

DNS Flag Day 2020

October 1 was this year’s DNS Flag Day. Read on to find out all about DNS Flag Day and how it affects Cloudflare’s DNS services (hint: it doesn’t, we already did the work to be compliant).

What is DNS Flag Day?

DNS Flag Day is an initiative by several DNS vendors and operators to increase the compliance of implementations with DNS standards. The goal is to make DNS more secure, reliable and robust. Rather than a push for new features, DNS Flag Day is meant to ensure that workarounds for non-compliance can be reduced and a common set of functionalities can be established and relied upon.

Last year’s flag day was February 1, and it set forth that servers and clients must be able to properly handle the Extensions to DNS (EDNS0) protocol (the first RFC about EDNS0, RFC 2671, dates from 1999). This way, by assuming clients have a working implementation of EDNS0, servers can resort to always sending messages as EDNS0. This is needed to support DNSSEC, the DNS security extensions. We were, of course, more than thrilled to support the effort, as we’re keen to push DNSSEC adoption forward.
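For readers who have not seen EDNS0 on the wire: a message signals EDNS0 support by carrying an OPT pseudo-record in its additional section, and the "DNSSEC OK" (DO) bit in that record is how a client asks for DNSSEC data. The sketch below encodes such a record by hand. The function name and the default payload size are illustrative choices, not something taken from the post.

```python
import struct

OPT_TYPE = 41     # record type number assigned to the OPT pseudo-record
DO_FLAG = 0x8000  # "DNSSEC OK" bit in the OPT flags field


def opt_record(udp_payload_size=1232, dnssec_ok=True):
    """Encode a minimal OPT pseudo-record with no options attached."""
    flags = DO_FLAG if dnssec_ok else 0
    return struct.pack(
        "!BHHBBHH",
        0,                 # owner name: the root, encoded as a single zero byte
        OPT_TYPE,          # TYPE
        udp_payload_size,  # CLASS field is reused for the sender's UDP payload size
        0,                 # extended RCODE (upper bits)
        0,                 # EDNS version 0
        flags,             # flags, including the DO bit
        0,                 # RDLENGTH: no options follow
    )


print(opt_record().hex())
```

A resolver would append these bytes after the question section and set the additional-record count in the DNS header to 1.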

DNS Flag Day 2020

The goal for Continue reading

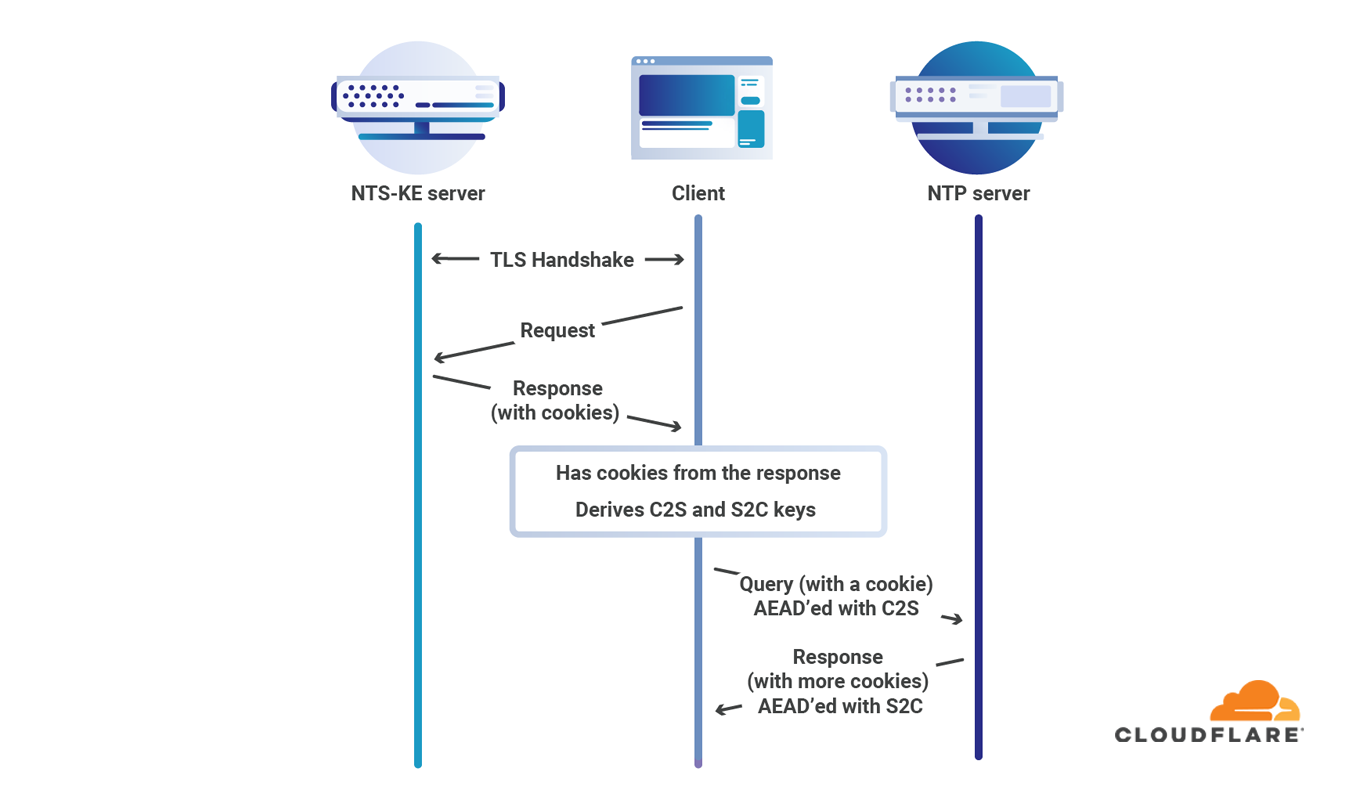

NTS is now an RFC

Earlier today the document describing Network Time Security for NTP officially became RFC 8915. This means that Network Time Security (NTS) is officially part of the collection of protocols that makes the Internet work. We’ve changed our time service to use the officially assigned port of 4460 for NTS key exchange, so you can use our service with ease. This is big progress towards securing a ubiquitous Internet protocol.
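As a rough illustration of what travels over that key exchange port, the sketch below encodes a minimal NTS key exchange client request following the record layout in RFC 8915: a critical bit, a 15-bit record type, a 16-bit body length, then the body. It only builds the bytes. A real client would send them inside a TLS session negotiated with the ALPN identifier `ntske/1`, and the helper names here are made up for the example.

```python
import struct

NTS_KE_PORT = 4460       # port mentioned above for NTS key exchange
NTS_KE_ALPN = "ntske/1"  # ALPN protocol ID defined by RFC 8915

# Record types and values from RFC 8915
END_OF_MESSAGE = 0
NEXT_PROTOCOL = 1
AEAD_ALGORITHM = 4
NTPV4 = 0                    # protocol ID for NTPv4
AEAD_AES_SIV_CMAC_256 = 15   # AEAD algorithm ID


def record(record_type, body=b"", critical=False):
    """Encode one NTS-KE record: critical bit + type, body length, body."""
    header = (0x8000 if critical else 0) | record_type
    return struct.pack("!HH", header, len(body)) + body


def client_request():
    """Build the smallest useful request: protocol, AEAD algorithm, end marker."""
    return b"".join([
        record(NEXT_PROTOCOL, struct.pack("!H", NTPV4), critical=True),
        record(AEAD_ALGORITHM, struct.pack("!H", AEAD_AES_SIV_CMAC_256)),
        record(END_OF_MESSAGE, critical=True),
    ])


print(client_request().hex())
```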

Over the past months we’ve seen many users of our time service, but very few using Network Time Security. This leaves computers vulnerable to attacks that imitate the server they use to obtain NTP. Part of the problem was the lack of available NTP daemons that supported NTS. That problem is now solved: chrony and ntpsec both support NTS.

Time underlies the security of many of the protocols such as TLS that we rely on to secure our online lives. Without accurate time, there is no way to determine whether or not credentials have expired. The absence of an easily deployed secure time protocol has been a problem for Internet security.

Without NTS or symmetric key authentication there is no guarantee that your computer is actually talking NTP with the computer Continue reading

Introducing API Shield

APIs are the lifeblood of modern Internet-connected applications. Every millisecond they carry requests from mobile applications—place this food delivery order, “like” this picture—and directions to IoT devices—unlock the car door, start the wash cycle, my human just finished a 5k run—among countless other calls.

They’re also the target of widespread attacks designed to perform unauthorized actions or exfiltrate data, as data from Gartner increasingly shows: “by 2021, 90% of web-enabled applications will have more surface area for attack in the form of exposed APIs rather than the UI, up from 40% in 2019,” and “Gartner predicted that, by 2022, API abuses will move from an infrequent to the most-frequent attack vector, resulting in data breaches for enterprise web applications”[1][2]. Of the 18 million requests per second that traverse Cloudflare’s network, 50% are directed towards APIs—with the majority of these requests blocked as malicious.

To combat these threats, Cloudflare is making it simple to secure APIs through the use of strong client certificate-based identity and strict schema-based validation. As of today, these capabilities are available free for all plans within our new “API Shield” offering. And as of today, the security benefits also extend to gRPC-based APIs, which use binary Continue reading

Announcing support for gRPC

Today we're excited to announce beta support for proxying gRPC, a next-generation protocol that allows you to build APIs at scale. With gRPC on Cloudflare, you get access to the security, reliability and performance features that you're used to having at your fingertips for traditional APIs. Sign up for the beta today in the Network tab of the Cloudflare dashboard.

gRPC has proven itself to be a popular new protocol for building APIs at scale: it’s more efficient and built to offer superior bi-directional streaming capabilities. However, because gRPC uses newer technology, like HTTP/2, under the covers, existing security and performance tools did not support gRPC traffic out of the box. This meant that customers adopting gRPC to power their APIs had to pick between modernity on one hand, and things like security, performance, and reliability on the other. Because supporting modern protocols and making sure people can operate them safely and performantly is in our DNA, we set out to fix this.

When you put your gRPC APIs on Cloudflare, you immediately gain all the benefits that come with Cloudflare. Apprehensive of exposing your APIs to bad actors? Add security features such as WAF and Bot Management. Need Continue reading

Introducing Cloudflare Radar

Unlike the tides, Internet use ebbs and flows with the motion of the sun, not the moon. Across the world, usage quietens during the night and picks up as morning comes. Internet use also follows patterns that humans create, dipping when people stop to applaud healthcare workers fighting COVID-19, pause to watch their country’s president address them, or slow down for religious reasons.

And while humans leave a mark on the Internet, so do automated systems. These systems might be doing useful work (like building search engine databases) or harm (like scraping content, or attacking an Internet property).

All the while Internet use (and attacks) is growing. Zoom into any day and you’ll see the familiar daily wave of Internet use reflecting day and night, zoom out and you’ll likely spot weekends when Internet use often slows down a little, zoom out further and you might spot the occasional change in use caused by a holiday, zoom out further and you’ll see that Internet use grows inexorably.

And attacks don’t only grow, they change. New techniques are invented while old ones remain evergreen. DDoS activity continues day and night roaming from one victim to another. Automated scanning tools look Continue reading

Speeding up HTTPS and HTTP/3 negotiation with… DNS

In late June, thanks to data exposed through our new Radar service, Cloudflare's resolver team noticed a spike in DNS requests for the 65479 Resource Record. We began investigating and found these to be part of Apple’s iOS 14 beta release, where they were testing out a new SVCB/HTTPS record type.

Once we saw that Apple was requesting this record type, and while the iOS 14 beta was still on-going, we rolled out support across the Cloudflare customer base.

This blog post explains what this new record type does and its significance, but there’s also a deeper story: Cloudflare customers get automatic support for new protocols like this.

That means that today if you’ve enabled HTTP/3 on an Apple device running iOS 14, when it needs to talk to a Cloudflare customer (say you browse to a Cloudflare-protected website, or use an app whose API is on Cloudflare) it can find the best way of making that connection automatically.

And if you’re a Cloudflare customer you have to do… absolutely nothing… to give Apple users the best connection to your Internet property.

Negotiating HTTP security and performance

Whenever a user types a URL in the browser box without specifying a Continue reading