Commit to Diversity, Equity and Inclusion, Every Day

The world is waking up

Protesting in the name of Black Lives Matter.

Reading the book “White Fragility”.

Watching the documentary “13th”.

The world is waking up to the fight against racism and I couldn’t be happier!

But let’s be clear: learning about anti-racism and being anti-racist are not the same things. Learning is a good first step and a necessary one. But if you don’t apply the knowledge you acquire, then you are not helping to move the needle.

Since the murder of George Floyd at the hands/knees of the Minneapolis police, people all over the world have been focused on Black Lives Matter and anti-racism. At Cloudflare, we’ve seen an increase in cyberattacks, we’ve heard from the leadership of Afroflare, our Employee Resource Group for employees of African descent, and on June 18 we held our first ever Day On, Cloudflare’s employee day of learning about bias, the history and psychological effects of racism, and how racism can get baked into algorithms.

By way of this blog post, I want to share my thoughts about where I think we go from here and how I believe we can truly embody Diversity, Equity and Inclusion (DEI) Continue reading

Making magic: Reimagining Developer Experience for the World of Serverless

This week we’ve talked about how Workers provides a step function improvement in the TTFB (time to first byte) of applications, by running lightweight isolates in over 200 cities around the world, free of cold starts. Today I’m going to talk about another metric, one that’s arguably even more important: TTFD, or time to first dopamine, and announce a huge improvement to the Workers development experience — wrangler dev, our edge-based development environment with all the perks of a local environment.

There’s nothing quite like the rush of getting your first few lines of code to work — no matter how many times you’ve done it before, there's something so magical about the computer understanding exactly what you wanted it to do and doing it!

This is the kind of magic I expected of “serverless”, and while it’s true that most serverless offerings today get you to that feeling faster than setting up a virtual server ever would, I still can’t help but be disappointed with how lackluster developing with most serverless platforms is today.

Some of my disappointment can be attributed to the leaky nature of the abstraction: the journey to getting you to the point of writing Continue reading

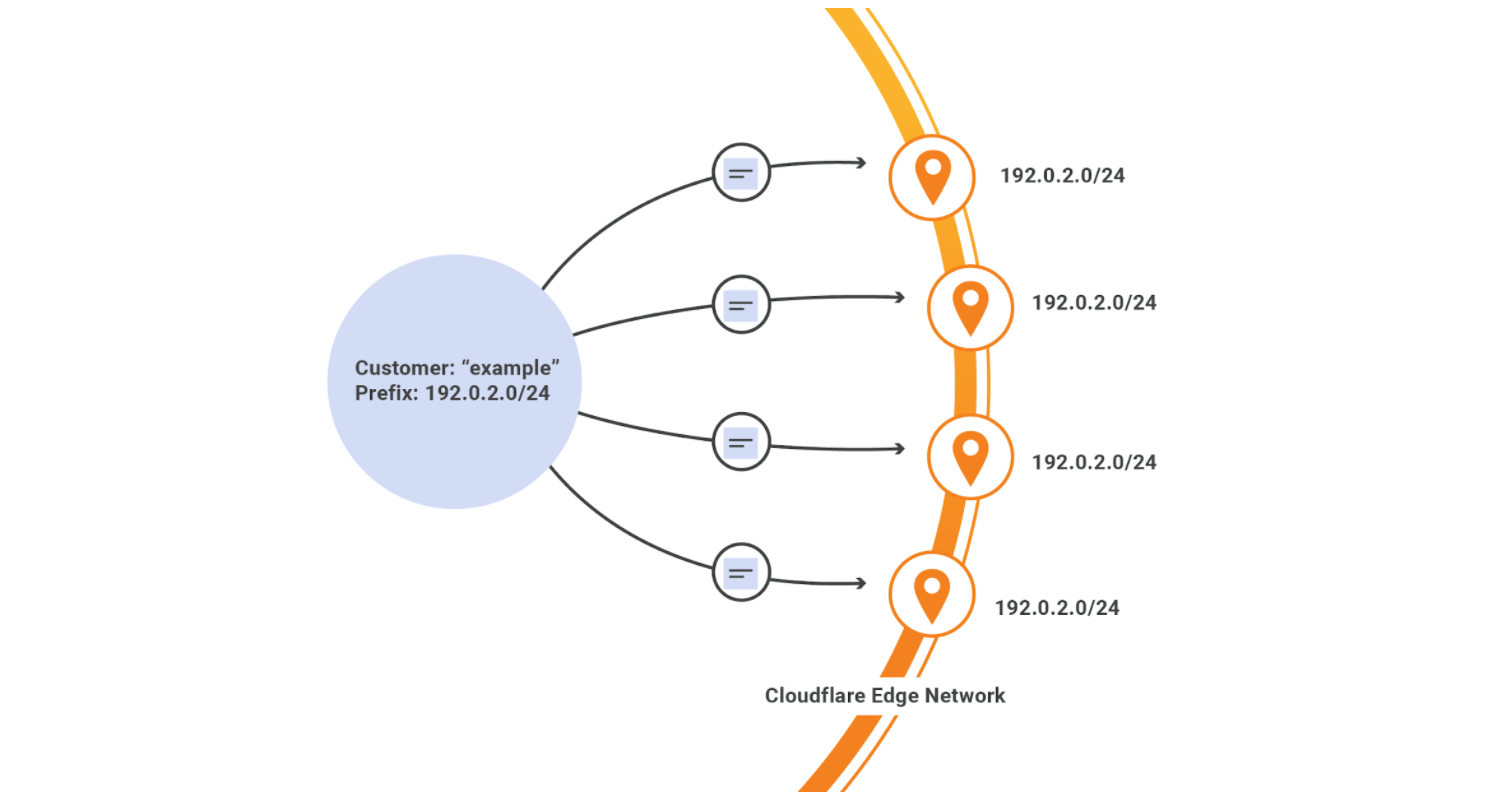

Bringing Your Own IPs to Cloudflare (BYOIP)

Today we’re thrilled to announce general availability of Bring Your Own IP (BYOIP) across our Layer 7 products as well as Spectrum and Magic Transit services. When BYOIP is configured, the Cloudflare edge will announce a customer’s own IP prefixes and the prefixes can be used with our Layer 7 services, Spectrum, or Magic Transit. If you’re not familiar with the term, an IP prefix is a range of IP addresses. Routers create a table of reachable prefixes, known as a routing table, to ensure that packets are delivered correctly across the Internet.
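To make the idea of a prefix and a routing table concrete, here is a minimal Python sketch of a longest-prefix-match lookup. It is an illustration only, not how Cloudflare's edge or any real router is implemented; the prefixes come from documentation address ranges, and the `ROUTES` table and `lookup` helper are hypothetical names.

```python
import ipaddress

# Hypothetical routing table: each prefix (a range of IP addresses) maps to a label.
# The addresses are reserved documentation ranges, not real customer prefixes.
ROUTES = {
    ipaddress.ip_network("203.0.113.0/24"): "customer-prefix",
    ipaddress.ip_network("203.0.113.128/25"): "more-specific",
    ipaddress.ip_network("0.0.0.0/0"): "default",
}


def lookup(address: str) -> str:
    """Return the label of the most specific prefix that contains the address."""
    ip = ipaddress.ip_address(address)
    matches = [net for net in ROUTES if ip in net]
    # The longest prefix length is the most specific match.
    best = max(matches, key=lambda net: net.prefixlen)
    return ROUTES[best]
```

A /24 prefix such as `203.0.113.0/24` covers 256 addresses, so a single table entry is enough to route all of them.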

As part of this announcement, we are listing BYOIP on the relevant product pages, publishing developer documentation, and adding UI support for controlling your prefixes. Previous support was API only.

Customers choose BYOIP with Cloudflare for a number of reasons. It may be the case that your IP prefix is already allow-listed in many important places, and updating firewall rules to also allow Cloudflare address space may represent a large administrative hurdle. Additionally, you may have hundreds of thousands, or even millions, of end users pointed directly to your IPs via DNS, and it would be hugely time consuming to get them all to update their records Continue reading

Eliminating cold starts with Cloudflare Workers

A “cold start” is the time it takes to load and execute a new copy of a serverless function for the first time. It’s a problem that’s both complicated to solve and costly to fix. Other serverless platforms make you choose between suffering from random increases in execution time, or paying your way out with synthetic requests to keep your function warm. Cold starts are a horrible experience, especially when serverless containers can take full seconds to warm up.

Unlike containers, Cloudflare Workers utilize isolate technology and measure cold starts in single-digit milliseconds. Well, at least they did. Today, we’re removing the need to worry about cold starts entirely, by introducing support for Workers that have no cold starts at all – that’s right, zero. Forget about cold starts, warm starts, or... any starts: with Cloudflare Workers you get always-hot, raw performance in more than 200 cities worldwide.

Why is there a cold start problem?

It’s impractical to keep everyone’s functions warm in memory all the time. Instead, serverless providers only warm up a function after the first request is received. Then, after a period of inactivity, the function becomes cold again and the cycle continues.
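The warm/cold cycle described above can be sketched as a tiny simulation. This is a simplified model rather than any provider's actual scheduler; the `FunctionInstance` class and its `idle_timeout` parameter are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FunctionInstance:
    # Hypothetical number of idle seconds after which the function is evicted.
    idle_timeout: float
    # Time of the most recent request, or None if the function was never loaded.
    last_request: Optional[float] = None

    def handle(self, now: float) -> str:
        """Report whether a request arriving at time `now` hits a cold or warm function."""
        cold = (
            self.last_request is None
            or (now - self.last_request) > self.idle_timeout
        )
        self.last_request = now
        return "cold" if cold else "warm"
```

In this model the first request is always cold, requests arriving within the timeout are warm, and a long enough gap makes the next request cold again.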

For Workers, this has Continue reading

Workers Security

Hello, I'm an engineer on the Workers team, and today I want to talk to you about security.

Cloudflare is a security company, and the heart of Workers is, in my view, a security project. Running code written by third parties is always a scary proposition, and the primary concern of the Workers team is to make that safe.

For a project like this, it is not enough to pass a security review and say "ok, we're secure" and move on. It's not even enough to consider security at every stage of design and implementation. For Workers, security in and of itself is an ongoing project, and that work is never done. There are always things we can do to reduce the risk and impact of future vulnerabilities.

Today, I want to give you an overview of our security architecture, and then address two specific issues that we are frequently asked about: V8 bugs, and Spectre.

Architectural Overview

Let's start with a quick overview of the Workers Runtime architecture.

There are two fundamental parts of designing a code sandbox: secure isolation and API design.

Isolation

First, we need to create an execution environment where code can't access anything it's not Continue reading

Cloudflare Workers Announces Broad Language Support

We initially launched Cloudflare Workers with support for JavaScript and languages that compile to WebAssembly, such as Rust, C, and C++. Since then, Cloudflare and the community have improved the usability of TypeScript on Workers. But we haven't talked much about the many other popular languages that compile to JavaScript. Today, we’re excited to announce support for Python, Scala, Kotlin, Reason and Dart.

You can build applications on Cloudflare Workers using your favorite language starting today.

Getting Started

Getting started is as simple as installing Wrangler, then running generate for the template for your chosen language: Python, Scala, Kotlin, Dart, or Reason. For Python, this looks like:

wrangler generate my-python-project https://github.com/cloudflare/python-worker-hello-world

Follow the installation instructions in the README inside the generated project directory, then run wrangler publish. You can see the output of your Worker at your workers.dev subdomain, e.g. https://my-python-project.cody.workers.dev/. You can sign up for a free Workers account if you don't have one yet.

That’s it. It is really easy to write in your favorite languages. But, this wouldn’t be a very compelling blog post if we left it at that. Now, I’ll shift the focus to Continue reading

The Migration of Legacy Applications to Workers

As Cloudflare Workers, and other Serverless platforms, continue to drive down costs while making it easier for developers to stand up globally scaled applications, the migration of legacy applications is becoming increasingly common. In this post, I want to show how easy it is to migrate such an application onto Workers. To demonstrate, I’m going to use a common migration scenario: moving a legacy application — on an old compute platform behind a VPN or in a private cloud — to a serverless compute platform behind zero-trust security.

Wait but why?

Before we dive further into the technical work, however, let me just address up front: why would someone want to do this? What benefits would they get from such a migration? In my experience, there are two sets of reasons: (1) factors that are “pushing” off legacy platforms, or the constraints and problems of the legacy approach; and (2) factors that are “pulling” onto serverless platforms like Workers, which speaks to the many benefits of this new approach. In terms of the push factors, we often see three core ones:

- Legacy compute resources are not flexible and must be constantly maintained, often leading to capacity constraints or excess cost;

- Continue reading

Introducing Workers Unbound

We launched Cloudflare Workers® in 2017 with the goal of building the development platform that we wished we had. We want to enable developers to build great software while Cloudflare manages the overhead of configuring and maintaining the infrastructure. Workers is with you from the first line of code, to the first application, all the way to a globally scaled product. By making our Edge network programmable and providing servers in 200+ locations around the world, we offer you the power to execute on even the biggest ideas.

Behind the scenes at Cloudflare, we’ve been steadily working towards making development on the Edge even more powerful and flexible. Today, we are excited to announce the next phase of this with the launch of our new platform, Workers Unbound, without restrictive CPU limits in a private beta (sign up for details here).

What is Workers Unbound? How is it different from Cloudflare Workers?

Workers Unbound is like our classic Cloudflare Workers (now referred to as Workers Bundled), but for applications that need longer execution times. We are extending our CPU limits to allow customers to bring all of their workloads onto Workers, no matter how intensive. It eliminates the choice Continue reading

The Edge Computing Opportunity: It’s Not What You Think

Cloudflare Workers® is one of the largest, most widely used edge computing platforms. We announced Cloudflare Workers nearly three years ago and it's been generally available for the last two years. Over that time, we've seen hundreds of thousands of developers write tens of millions of lines of code that now run across Cloudflare's network.

Just last quarter, 20,000 developers deployed for the first time a new application using Cloudflare Workers. More than 10% of all requests flowing through our network today use Cloudflare Workers. And, among our largest customers, approximately 20% are adopting Cloudflare Workers as part of their deployments. It's been incredible to watch the platform grow.

Over the course of the coming week, which we’re calling Serverless Week, we're going to be announcing a series of enhancements to the Cloudflare Workers platform to allow you to build much more complicated applications, lower your serverless computing bills, make your applications even faster, and prove that the Workers platform is secure to its core.

Matthew’s Hierarchy of Developers' Needs

Before the week begins, I wanted to step back and talk a bit about what we've learned about edge computing over the course of the last three years. When we Continue reading

Reflecting on my first year at Cloudflare as a Field Marketer in APAC

Hey there! I am Els (short form for Elspeth) and I am the Field Marketing and Events Manager for APAC. I am responsible for building brand awareness and supporting our lovely sales team in acquiring new logos across APAC.

I was inspired to write about my first year at Cloudflare because John, our CTO, encouraged more women to write for the Cloudflare blog after reviewing our blogging statistics and finding that more men than women blog for Cloudflare. I jumped at the chance because I thought this would be a great way to share some side stories, as people might not know how it feels to work at Cloudflare.

Why Cloudflare?

Before I continue, I must mention that I really wanted to join Cloudflare after reading our co-founder Michelle’s reply on Quora to the question "What is it like to work in Cloudflare?" Michelle’s answer was as follows:

“my answer is 'adult-like.' While we haven’t adopted this as our official company-wide mantra, I like the simplicity of that answer. People work hard, but go home at the end of the day. People care about their work and want to do a great job. When someone does a good job, Continue reading

Diversity Welcome – A Latinx journey into Cloudflare

I came to the United States chasing the love of my life, today my wife, in 2015.

As a native Spanish speaker with Portuguese as my second language, born in the Argentine city of Córdoba more than 6,000 miles from San Francisco, there is no doubt that the definition of "Latino" fits me very well, and I wear it with pride.

Cloudflare was not my first job in this country but it has been the organization in which I have learned many of the things that have allowed me to understand the corporate culture of a society totally alien to the one which I come from.

I was hired in January 2018 as the first Business Development Representative for the Latin America (LATAM) region based in San Francisco. This was long before the company went public in September 2019. The organization was looking for a specialist in Latin American markets with not only good experience and knowledge beyond languages (Spanish/Portuguese), but understanding of the economy, politics, culture, history, go-to-market strategies, etc.—I was lucky enough to be chosen as "that person". Cloudflare invested in me to a great extent and I was amazed at the freedom I had to propose ideas and bring them Continue reading

Internationalizing the Cloudflare Dashboard

Cloudflare’s dashboard now supports four new languages (and multiple locales): Spanish (with country-specific locales: Chile, Ecuador, Mexico, Peru, and Spain), Brazilian Portuguese, Korean, and Traditional Chinese. Our customers are global and diverse, so in helping build a better Internet for everyone, it is imperative that we bring our products and services to customers in their native language.

Since last year Cloudflare has been hard at work internationalizing our dashboard. At the end of 2019, we launched our first language other than US English: German. At the end of March 2020, we released three additional languages: French, Japanese, and Simplified Chinese. If you want to start using the dashboard in any of these languages, you can change your language preference in the top right of the Cloudflare dashboard. The preference selected will be saved and used across all sessions.

In this blog post, I want to help those unfamiliar with internationalization and localization to better understand how it works. I also would like to tell the story of how we made internationalizing and localizing our application a standard and repeatable process along with sharing a few tips that may help you as you do the same.

Beginning the journey

The first Continue reading

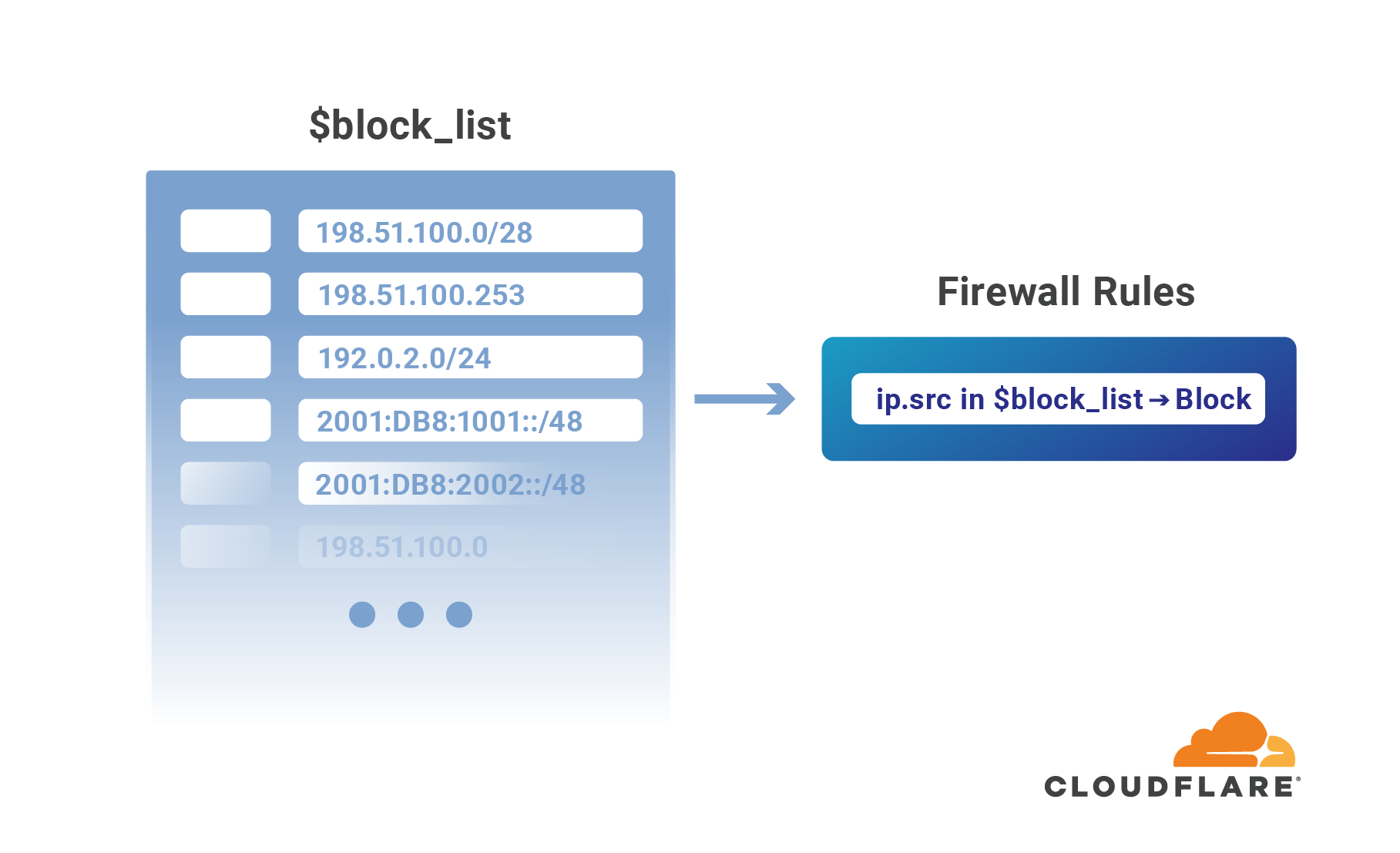

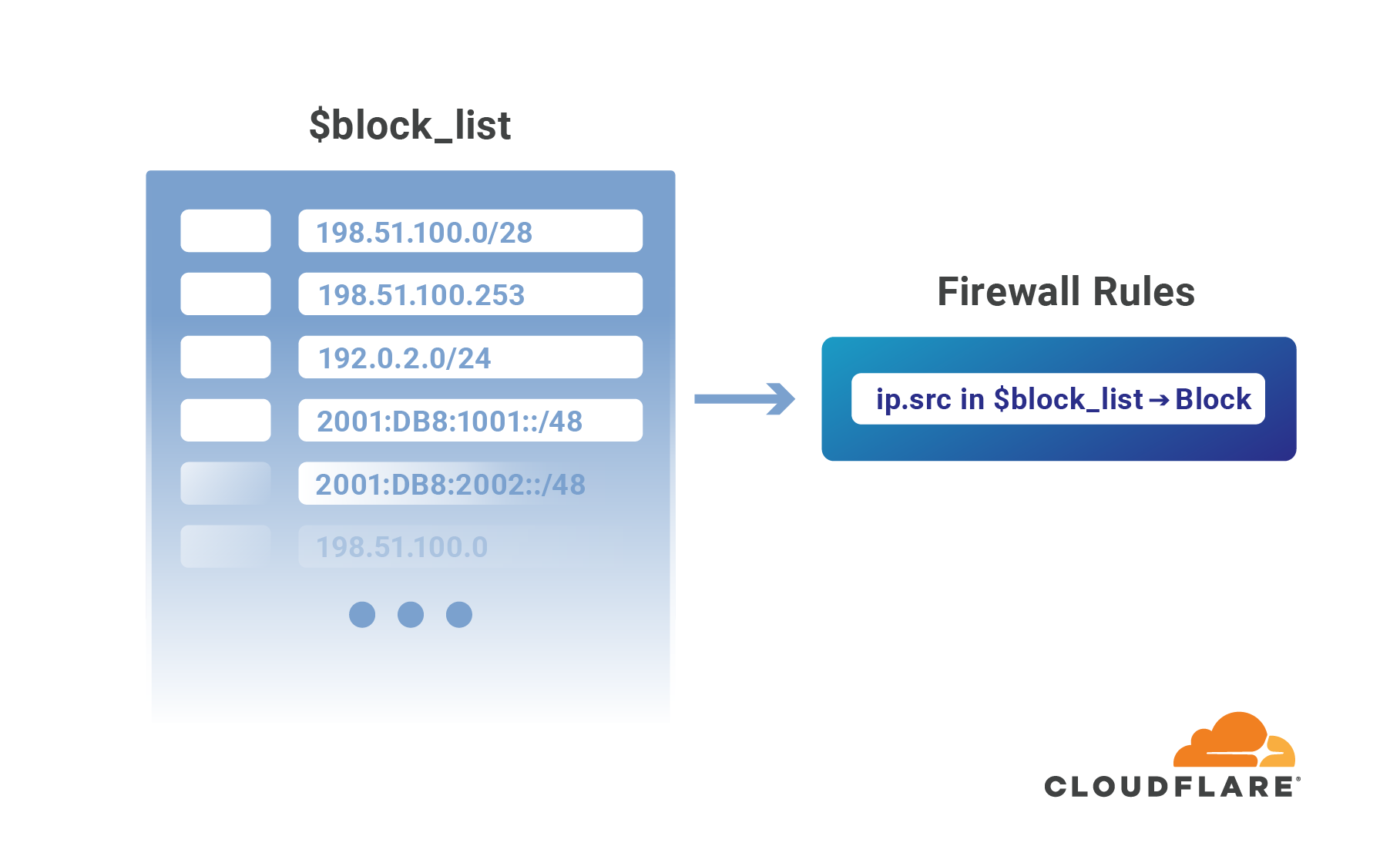

Introducing IP Lists

Authentication on the web has been steadily moving to the application layer using services such as Cloudflare Access to establish and enforce software-controlled, zero trust perimeters. However, there are still several important use cases for restricting access at the network-level by source IP address, autonomous system number (ASN), or country. For example, some businesses are prohibited from doing business with customers in certain countries, while others maintain a blocklist of problematic IPs that have previously attacked them.

Enforcing these network restrictions at centralized chokepoints using appliances—hardware or virtualized—adds unacceptable latency and complexity, but doing so performantly for individual IPs at the Cloudflare edge is easy. Today we’re making it just as easy to manage tens of thousands of IPs across all of your zones by grouping them in data structures known as IP Lists. Lists can be stored with metadata at the Cloudflare edge, replicated within seconds to our data centers in 200+ cities, and used as part of our powerful, expressive Firewall Rules engine to take action on incoming requests.
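As a rough illustration of the idea, the sketch below groups IPs and ranges into a named list with a comment per entry and checks an incoming source IP against it. It is not the Firewall Rules engine or the IP Lists API; the `PROBLEM_IPS` structure, its fields, and the `action_for` helper are hypothetical, and the addresses are documentation ranges.

```python
import ipaddress

# Hypothetical IP list: a name plus entries, each with an address or range and a comment.
PROBLEM_IPS = {
    "name": "problem_ips",
    "items": [
        ("192.0.2.10/32", "example single address"),
        ("198.51.100.0/24", "example range"),
    ],
}


def action_for(source_ip: str, ip_list: dict) -> str:
    """Return "block" if the source IP falls inside any entry of the list, else "allow"."""
    ip = ipaddress.ip_address(source_ip)
    for entry, _comment in ip_list["items"]:
        if ip in ipaddress.ip_network(entry):
            return "block"
    return "allow"
```

Keeping the entries in one named structure means a rule can refer to the list by name, and updating the list changes the behavior of every rule that uses it.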

Previously, these sorts of network-based security controls have been configured using IP Access or Zone Lockdown rules. Both tools have a number of Continue reading

Why I’m Helping Cloudflare Grow in Japan

If you'd like to read this post in Japanese click here.

I’m excited to say that I’ve recently joined the Cloudflare team as Head of Japan. Cloudflare has had a presence in Japan for a while now, not only with its network spanning the country, but also with many Japanese customers and partners which I’m now looking forward to growing with. In this new role, I’m focused on expanding our capabilities in the Japanese market, building upon our current efforts, and helping more companies in the region address and put an end to the technical pain points they are facing. This is an exciting time for me and an important time for the company. Today, I’m particularly eager to share that we are opening Cloudflare’s first Japan office, in Tokyo! I can’t wait to grow the Cloudflare business and team here.

Why Cloudflare?

The web was built 25 years ago. This invention changed the way people connected—to anyone and anywhere—and the way we work, play, live, learn, and so on. We have seen this become more and more complex. With complexities come difficulties, such as ensuring security, performance, and reliability while online. Cloudflare is helping to solve these challenges that businesses Continue reading

Cloudflare outage on July 17, 2020

Today a configuration error in our backbone network caused an outage for Internet properties and Cloudflare services that lasted 27 minutes. We saw traffic drop by about 50% across our network. Because of the architecture of our backbone this outage didn’t affect the entire Cloudflare network and was localized to certain geographies.

The outage occurred because, while working on an unrelated issue with a segment of the backbone from Newark to Chicago, our network engineering team updated the configuration on a router in Atlanta to alleviate congestion. This configuration contained an error that caused all traffic across our backbone to be sent to Atlanta. This quickly overwhelmed the Atlanta router and caused Cloudflare network locations connected to the backbone to fail.

The affected locations were San Jose, Dallas, Seattle, Los Angeles, Chicago, Washington, DC, Richmond, Newark, Atlanta, London, Amsterdam, Frankfurt, Paris, Stockholm, Moscow, St. Petersburg, São Paulo, Curitiba, and Porto Alegre. Other locations continued to operate normally.

For the avoidance of doubt: this was not caused by an attack or breach of any kind.

We are sorry for this outage and have already made a global change to the backbone configuration that will prevent it from being able to occur Continue reading

Serverless Rendering with Cloudflare Workers

Cloudflare’s Workers platform is a powerful tool; a single compute platform for tasks as simple as manipulating requests or complex as bringing application logic to the network edge. Today I want to show you how to do server-side rendering at the network edge using Workers Sites, Wrangler, HTMLRewriter, and tools from the broader Workers platform.

Each page returned to the user will be static HTML, with dynamic content being rendered on our serverless stack upon user request. Cloudflare’s ability to run this across the global network allows pages to be rendered in a distributed fashion, close to the user, with minuscule cold start times for the application logic. Because this is all built into Cloudflare’s edge, we can implement caching logic to significantly reduce load times, support link previews, and maximize SEO rankings, all while allowing the site to feel like a dynamic application.

A Brief History of Web Pages

In the early days of the web, pages were almost entirely static - think raw HTML. As Internet connections, browsers, and hardware matured, so did the content on the web. The world went from static sites to more dynamic content, powered by technologies like CGI, PHP, Flash, CSS, JavaScript, and Continue reading

Cloudflare’s first year in Lisbon

A year ago I wrote about the opening of Cloudflare’s office in Lisbon; it’s hard to believe that a year has flown by. At the time I wrote:

Lisbon’s combination of a large and growing existing tech ecosystem, attractive immigration policy, political stability, high standard of living, as well as logistical factors like time zone (the same as the UK) and direct flights to San Francisco made it the clear winner.

We landed in Lisbon with a small team of transplants from other Cloudflare offices. Twelve of us moved from the UK, US and Singapore to bootstrap here. Today we are 35 people with another 10 having accepted offers; we’ve almost quadrupled in a year and we intend to keep growing to around 80 by the end of 2020.

If you read back to my description of why we chose Lisbon, only one item hasn’t turned out quite as we expected. Sure enough, TAP Portugal does have direct flights to San Francisco, but the pandemic put an end to all business flying worldwide for Cloudflare. We all look forward to getting back to being able to visit our colleagues in other locations.

The pandemic also put us in the Continue reading

Cloudflare Network expands to more than 100 Countries

2020 has been a historic year that will forever be associated with the COVID-19 pandemic. Over the past six months, we have seen societies, businesses, and entire industries unsettled. The situation at Cloudflare has been no different. And while this pandemic has affected each and every one of us, we here at Cloudflare have not forgotten what our mission is: to help build a better Internet.

We have expanded our global network to 206 cities across more than 100 countries. This is in addition to completing 40+ datacenter expansion projects and adding over 1Tbps in dedicated “backbone” (transport) capacity connecting our major data centers so far this year.

Pandemic times mean new processes

There was zero chance that 2020 would mean business as usual within the Infrastructure department. We were thrown a curve-ball as the pandemic began affecting our supply chains and operations. By April, the vast majority of the world’s passenger flights were grounded. The majority of bulk air freight ships within the lower deck (“belly”) of these flights, which saw an imbalance between supply and demand with the sudden 74% decrease in passenger belly cargo capacity relative to the same period last year.

We were fortunate to have Continue reading

flowtrackd: DDoS Protection with Unidirectional TCP Flow Tracking

Magic Transit is Cloudflare’s L3 DDoS Scrubbing service for protecting network infrastructure. As part of our ongoing investment in Magic Transit and our DDoS protection capabilities, we’re excited to talk about a new piece of software helping to protect Magic Transit customers: flowtrackd. flowtrackd is a software-defined DDoS protection system that significantly improves our ability to automatically detect and mitigate even the most complex TCP-based DDoS attacks. If you are a Magic Transit customer, this feature will be enabled by default at no additional cost on July 29, 2020.

TCP-Based DDoS Attacks

In the first quarter of 2020, one out of every two L3/4 DDoS attacks Cloudflare mitigated was an ACK Flood, and over 66% of all L3/4 attacks were TCP based. Most types of DDoS attacks can be mitigated by finding unique characteristics that are present in all attack packets and using that to distinguish ‘good’ packets from the ‘bad’ ones. This is called "stateless" mitigation, because any packet that has these unique characteristics can simply be dropped without remembering any information (or "state") about the other packets that came before it. However, when attack packets have no unique characteristics, then "stateful" mitigation is required, because whether a Continue reading

No Humans Involved: Mitigating a 754 Million PPS DDoS Attack Automatically

On June 21, Cloudflare automatically mitigated a highly volumetric DDoS attack that peaked at 754 million packets per second. The attack was part of an organized four day campaign starting on June 18 and ending on June 21: attack traffic was sent from over 316,000 IP addresses towards a single Cloudflare IP address that was mostly used for websites on our Free plan. No downtime or service degradation was reported during the attack, and no charges accrued to customers due to our unmetered mitigation guarantee.

The attack was detected and handled automatically by Gatebot, our global DDoS detection and mitigation system, without any manual intervention by our teams. Notably, because our automated systems were able to mitigate the attack without issue, no alerts or pages were sent to our on-call teams and no humans were involved at all.

During those four days, the attack utilized a combination of three attack vectors over the TCP protocol: SYN floods, ACK floods and SYN-ACK floods. The attack campaign sustained for multiple hours at rates exceeding 400-600 million packets per second Continue reading