CCDE – CCDE Qualification Exam Passed

A couple of days ago I passed the Cisco Certified Design Expert (CCDE) Qualification Exam which means that I am now eligible to take the CCDE practical. I’m aiming to give that a try in May. This post will give some insight into what a candidate needs to pass the CCDE Qualification exam and how to study for it.

The CCDE is a very broad exam. The ideal candidate must have a very strong background in Routing & Switching (RS) and Service Provider (SP) technologies. These are the meat of the exam. A decent knowledge of Data Center (DC) and security technologies is also desirable, as is a basic understanding of wireless and storage technologies.

It’s difficult to study for the CCDE and the CCDE Qualification Exam if you don’t have enough experience in the real world. While a person can study for the CCIE without a lot of experience, doing the same for the CCDE is difficult because design and network architecture require both implementation experience and design experience. The ideal candidate should already be CCIE RS and SP certified or have the equivalent knowledge of someone who is. Does that mean that it’s Continue reading

Network Simulation – Cisco Releases VIRL 1.0

Just in time for Thanksgiving, Cisco has released version 1.0 of the popular network simulation tool VIRL. This is a major new release, moving from OpenStack Icehouse to OpenStack Kilo. This means that your previous release of VIRL will NOT be upgradeable; only a fresh install is available. Cisco has started mailing out a link to the new release and I received my download link yesterday. It is also possible to download the image from the Salt server to the VM itself and then SCP it out from the VM. This is described in the release notes here.

The following platform reference VMs are included in this release:

- IOSv – 15.5(3)M image

- IOSvL2 – 15.2.4055 DSGS image

- IOSXRv – 5.3.2 image

- CSR1000v – 3.16 XE-based image

- NX-OSv 7.2.0.D1.1(121)

- ASAv 9.5.1

- Ubuntu 14.4.2 Cloud-init

There are also Linux container images included. These are the following:

- Ubuntu 14.4.2 LXC

- iPerf LXC

- Routem LXC

- Ostinato LXC

This means that it will be a lot easier to do traffic generation, bandwidth testing and simulating a WAN by inserting delay, packet loss and jitter. It’s great to see Continue reading

General – Behavior Of QoS Queues On Cisco IOS

I have been running some QoS tests lately and wanted to share some of my results. Some of this behavior is described in various documentation guides but it’s not really clearly described in one place. I’ll describe what I have found so far in this post.

QoS is only active during congestion. This is well known, but it’s not as well known how congestion is detected. The TX ring is used to hold packets before they get transmitted out on an interface. This is a hardware FIFO queue and when the queue gets filled, the interface is congested. When buying a subrate circuit from an SP, something must be added to achieve the backpressure so that the TX ring is considered full. This is done by applying a parent shaper and a child policy with the actual queue configuration.

The LLQ is used for high priority traffic. When the interface is not congested, the LLQ can use all available bandwidth unless an explicit policer is configured under the LLQ.

A normal queue can use more bandwidth than it is guaranteed when there is no congestion.

When a normal queue wants to use more bandwidth than it is guaranteed, it can if Continue reading

CCDE – Firewall And IPS Design Considerations

Introduction

This post will discuss different design options for deploying firewalls and Intrusion Prevention Systems (IPS) and how firewalls can be used in the data center.

Firewall Designs

Firewalls have traditionally been used to protect inside resources from being accessed from the outside. The firewall is then deployed at the edge of the network. The security zones are then referred to as “outside” and “inside” or “untrusted” and “trusted”.

Anything coming from the outside is blocked by default unless the connection was initiated from the inside. Anything from the inside going out is allowed by default. The default behavior can of course be modified with access-lists.
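The default inside/outside behavior can be illustrated with a toy model. This is a minimal sketch, not how any particular firewall is implemented: the `EdgeFirewall` class and its method names are hypothetical, and it tracks only host pairs, whereas a real stateful firewall also tracks ports, protocol state and timeouts.

```python
class EdgeFirewall:
    """Toy model of the default inside/outside policy (hypothetical names)."""

    def __init__(self):
        # Connections initiated from the inside, stored as
        # (inside_host, outside_host) pairs.
        self.connections = set()

    def outbound(self, inside_host: str, outside_host: str) -> bool:
        """Inside-to-outside traffic is allowed by default and remembered."""
        self.connections.add((inside_host, outside_host))
        return True

    def inbound(self, outside_host: str, inside_host: str) -> bool:
        """Outside-to-inside traffic is allowed only as return traffic."""
        return (inside_host, outside_host) in self.connections


fw = EdgeFirewall()
# Unsolicited traffic from the outside is blocked.
print(fw.inbound("203.0.113.5", "10.0.0.10"))
# After the inside host initiates the connection, replies are allowed.
fw.outbound("10.0.0.10", "203.0.113.5")
print(fw.inbound("203.0.113.5", "10.0.0.10"))
```

An access-list in this model would simply be an extra check before the connection lookup.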

It is also common to use a Demilitarized Zone (DMZ) when publishing external services such as e-mail, web and DNS. The goal of the DMZ is to separate the servers hosting these external services from the inside LAN to lower the risk of having a breach on the inside. From the outside, only the ports that the service is using will be allowed into the DMZ, such as ports 80, 443, 53 and so on. From the DMZ only a very limited set of traffic will be allowed Continue reading

Network Simulation – Cisco VIRL Increases Node Count

Great news everyone. Some of you might have seen that I created a petition to increase the node limit in VIRL. I know there have been discussions within Cisco about the node limit and surely our petition wasn’t the single thing that convinced the VIRL team but I know that they have seen it and I’m proud that we were able to make a difference!

On November 1st the node limit will be increased to 20 nodes for free! That’s right, you get 5 extra nodes for free. There will also be a license upgrade available that gets you to 30 nodes. I’m not sure of the pricing yet for the 30 node limit so I will get back when I get more information on that.

When the community comes together, great things happen! This post on Cisco VIRL will get updated as I get more information. Cisco VIRL will be a much more useful tool now to simulate the CCIE lab and large customer topologies. I tip my hat to the Cisco VIRL team for listening to the community.

The post Network Simulation – Cisco VIRL Increases Node Count appeared first on Daniels Networking Blog.

CCDE – Load Balancer Designs

Introduction

This post will describe different load balancer designs, the pros and cons of the designs and how they affect the forwarding of packets.

Load Sharing Vs Load Balancing

The terms load sharing and load balancing often get intermixed. An algorithm such as Cisco Express Forwarding (CEF) does load sharing of packets, meaning that packets get sent on a link based on parameters such as source and destination MAC address, source and destination IP address or, in some cases, the layer 4 ports in the IP packet. The CEF algorithm does not take into consideration the utilization of the link or how many flows have been assigned to each link. Load balancing, on the other hand, tries to utilize the links more evenly by tracking the bandwidth of the flows and assigning flows to the different links based on this information. The goal is to distribute the traffic across the links as evenly as possible. However, load balancing is mostly used to distribute traffic to different servers to share the load among them.
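The difference can be sketched in a few lines of Python. This is only an illustration under stated assumptions: CRC32 stands in for the real CEF hash, which is not described here, and the function names `pick_link_by_hash` and `pick_least_loaded` are hypothetical.

```python
import zlib


def pick_link_by_hash(src_ip: str, dst_ip: str, num_links: int) -> int:
    """Load sharing: choose a link from a hash of the flow's addresses.

    The link utilization is never consulted, so two heavy flows can
    land on the same link. CRC32 is a stand-in, not the CEF algorithm.
    """
    key = f"{src_ip}->{dst_ip}".encode()
    return zlib.crc32(key) % num_links


def pick_least_loaded(link_load_bps: list) -> int:
    """Load balancing: choose the link that currently carries the least."""
    return link_load_bps.index(min(link_load_bps))


# The same flow always hashes to the same link, whatever the load is.
first = pick_link_by_hash("10.0.0.1", "192.0.2.10", 2)
second = pick_link_by_hash("10.0.0.1", "192.0.2.10", 2)
print(first == second)

# A load-aware choice looks at the measured load per link instead.
print(pick_least_loaded([500_000, 100_000, 300_000]))
```

The hash-based choice is cheap and keeps a flow's packets in order on one link, which is why it is common in forwarding hardware even though it does not balance perfectly.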

Why Load Balancing?

What warrants the use of a load balancer? Think of a web site such as facebook.com. Imagine the number of users Continue reading

CCNA – Operation Of IP Data Networks 1.5

We move on to the next topic which is

1.5 Predict the data flow between two hosts across a network

This is a very important topic for the CCNA. It may feel a bit overwhelming at first to grasp all the steps of the data flow but as a CCNA you need to learn how this process works. We will start out with an example where two hosts are on the same LAN and then we will look at an example which involves routing as well.

The first topology has two hosts H1 and H2 with IP addresses 10.0.0.10 and 10.0.0.20 respectively.

Host 1 and Host 2 are both connected to Switch 1 and have not communicated previously. H1 has the MAC address 0000.0000.0001 and H2 has the MAC address 0000.0000.0002. H1 wants to send data to H2. Which steps are involved?

1. H1 knows the destination IP of H2 (10.0.0.20) and performs a bitwise AND with its subnet mask to determine that they are on the same subnet.

2. H1 checks its ARP cache, which is empty for 10.0.0.20.

3. H1 generates ARP message Continue reading
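The AND operation from step 1 can be sketched in Python. The mask 255.255.255.0 is an assumption, since the prefix length of the LAN is not stated here, and `same_subnet` is a hypothetical helper name.

```python
import ipaddress


def same_subnet(src: str, dst: str, mask: str) -> bool:
    """Return True if src and dst are on the same subnet for this mask."""
    src_int = int(ipaddress.IPv4Address(src))
    dst_int = int(ipaddress.IPv4Address(dst))
    mask_int = int(ipaddress.IPv4Address(mask))
    # The host ANDs its own address and the destination address with
    # its mask. Equal results mean the destination is on the local LAN.
    return (src_int & mask_int) == (dst_int & mask_int)


# Assumed mask (not given in the text): 255.255.255.0
print(same_subnet("10.0.0.10", "10.0.0.20", "255.255.255.0"))  # True
print(same_subnet("10.0.0.10", "10.0.1.20", "255.255.255.0"))  # False
```

When the result is False, the host sends the frame to its default gateway instead of ARPing for the destination directly.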

CCNA – Operation Of IP Data Networks 1.4

It’s time for the next topic for the CCNA.

1.4 Describe the purpose and basic operation of the protocols in the OSI and TCP/IP models

There are tons of books written on the OSI and TCP/IP model so I won’t describe these models in depth here. What I will do is explain what you need to know at each level and explain how the real world works. We have two models, the OSI model from ISO and the TCP/IP model from the DOD.

In real life everyone references the OSI model. I’ve never heard anyone reference the DOD model. That doesn’t mean it doesn’t have its merits, but everyone always uses the OSI model as a reference.

The OSI model has seven layers but people sometimes joke that layer 8 is financial and layer 9 is political.

Starting out with the physical layer, what you need to know is auto negotiation. Auto negotiation is good. Hard coding speed and duplex will no doubt lead to links that are hard coded on one side and auto on the other, and the auto side will end up in half duplex. Gone are the days when auto negotiation wasn’t compatible and led to misconfigured Continue reading

CCDE – Optical Design Considerations

Introduction

As a network architect you should not have to know all the details of the physical and data link layer. What you need to know though is how different transports can support the topology that you are looking to build. If you buy a circuit from an ISP, what protocols can you run over it? Is running MPLS over the circuit supported? What’s the maximum MTU? Is it possible to run STP over the link? This may be important when connecting data centers together through a Data Center Interconnect (DCI).

To be able to connect two data centers together, you will need to either connect via fibre or over a wavelength or buy circuits from an ISP. Renting a fibre will likely be more expensive but also more flexible if you have the need to run protocols such as MPLS over the link. For a pure DCI, just running IP may be enough so there could be cost savings if buying a circuit from an ISP instead.

For a big enough player it may also be feasible to build it all yourself. This post will look at the difference between Coarse Wavelength Division Multiplexing (CWDM) and Dense Wavelength Division Continue reading

CCNA – Operation of IP Data Networks 1.3

We move on to the next topic:

1.3 Identify common applications and their impact on the network

When you work in networking, it’s important to have an understanding of how applications work and what their characteristics are. Is the application sensitive to packet loss? Is it sensitive to jitter? What ports does it use? Let’s have a look at some of the common applications that you need to be aware of for the CCNA certification.

HTTP

HTTP is the most important protocol on the Internet. The major part of all traffic from the Internet is HTTP. With sites like Facebook, YouTube and Netflix, this will not decrease in the future; rather, web traffic will dominate even more. HTTP normally runs on TCP port 80 but it’s possible to run it on custom ports as well. Because HTTP runs over TCP, it is not very sensitive to packet loss and it does not have strict requirements for delay or jitter. However, people still don’t have a lot of patience for a web page loading and if there is a lot of packet loss, it may affect streaming services such as Netflix or services where downloading/uploading of files is done. From a Continue reading

CCNA – Operation of IP Data Networks 1.2

The next topic for CCNA is:

1.2 Select the components required to meet a given network specification

I wish the blueprint were a bit clearer on what is meant by this topic, but it’s reasonable to think that it’s about picking routers and switches depending on the networking requirements.

Picking a router or switch will depend on what kind of circuit is bought from the ISP, whether the service is managed, the number of users on the network, the number of subnets needed and whether there are requirements for NAT and/or firewalling, among many other decision points. Since this is the CCNA RS, we will pretend that devices such as the Cisco ASA, which can be used in small offices to do both firewalling and routing, do not exist.

I’ll give different examples and we’ll look at which devices make sense and why to pick one device over another.

- MPLS VPN circuit

- 10 users

- One subnet (data)

- No need to NAT

- No need for firewall

The MPLS VPN circuit is a managed service, meaning that the ISP will have a Customer Premises Equipment (CPE) at the customer. In other words, the ISP will put a router at the Continue reading

CCNA – Operation of IP Data Networks 1.1

We kick off the CCNA series from the beginning. Operation of IP data networks is weighted as 5% in the CCNA RS blueprint. The first topic is:

1.1 Recognize the purpose and functions of various network devices such as routers, switches, bridges and hubs

Router

A router is a device that routes between different networks, meaning that it looks at the IP header and more specifically the destination IP of a packet to do forwarding. It uses a routing table which is populated by static routes and routes from dynamic protocols such as RIP, EIGRP, OSPF, ISIS and BGP. These routes are inserted into the Routing Information Base (RIB). The routes from different sources compete against each other and the best route gets inserted into the RIB. To define how trustworthy a route is, there is a metric called Administrative Distance (AD). These are some of the common AD values:

- 0 – Connected route

- 1 – Static route

- 20 – External BGP

- 90 – EIGRP

- 110 – OSPF

- 115 – ISIS

- 120 – RIP

- 200 – Internal BGP

- 255 – Don't install

If a value of 255 is used, the route will not be installed in the RIB as the route is deemed not trustworthy at all.
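The competition between route sources can be sketched as follows. This is a simplified model that only compares AD for the same prefix; it ignores the metric within a protocol and longest prefix match. The dictionary keys and the `best_route` function are hypothetical names chosen for the example.

```python
# Common AD values from the list above (keys are illustrative names).
ADMIN_DISTANCE = {
    "connected": 0, "static": 1, "ebgp": 20, "eigrp": 90,
    "ospf": 110, "isis": 115, "rip": 120, "ibgp": 200,
}
UNUSABLE_AD = 255  # A route with this AD is never installed.


def best_route(candidates):
    """Pick the route with the lowest AD among sources for one prefix.

    candidates: list of (source, next_hop) tuples.
    Returns (source, next_hop, ad), or None if nothing is installable.
    Unknown sources are treated as AD 255 in this sketch.
    """
    scored = [
        (ADMIN_DISTANCE.get(source, UNUSABLE_AD), source, next_hop)
        for source, next_hop in candidates
    ]
    usable = [entry for entry in scored if entry[0] < UNUSABLE_AD]
    if not usable:
        return None
    ad, source, next_hop = min(usable)
    return source, next_hop, ad


# The same prefix learned from three sources: the static route wins.
routes = [("ospf", "10.0.0.2"), ("rip", "10.0.0.3"), ("static", "10.0.0.1")]
print(best_route(routes))  # ('static', '10.0.0.1', 1)
```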

The goal Continue reading

CCNA RS Workbook

Hi everyone,

People that know me know that I have always been keen on giving back to the community and helping people in their studies. On that note, I have decided to start creating content for a CCNA RS workbook which will be published online. The goal is to take the blueprint and cover one item from the blueprint in each post.

I hope this will be helpful for people in their CCNA studies.

IPv6 Support in VRF on Catalyst 3k

I was looking for information on running IPv6 in a VRF on the Catalyst 3k platform and there wasn’t much information available. I tried running IPv6 in a VRF on a Catalyst 3560 with the correct SDM profile but got this error message:

Switch(config-vrf)#address-family ipv6
IPv6 VRF not supported for this platform or this template

I checked with Cisco and you need to have a Catalyst 3560-X/3750-X with release 15.2(1)E for IPv6 to be supported in a VRF. This means the feature is not supported on the non-X models.

The feature is also available on the 3650/3850 platform with IOS-XE 3.6.0E.

I hope this information helps someone looking for IPv6 support in VRF.

Nasty Multicast VSS bug on Catalyst 4500-X

I ran into an “exciting” bug yesterday. It was seen in a 4500-X VSS pair running 3.7.0 code. When there has been a switchover meaning that the secondary switch became active, there’s a risk that information is not properly synced between the switches. What we were seeing was that this VSS pair was “eating” the packets, essentially black holing them. Any multicast that came into the VSS pair would not be properly forwarded even though the Outgoing Interface List (OIL) had been properly built. Everything else looked normal, PIM neighbors were active, OILs were good (except no S,G), routing was there, RPF check was passing and so on.

It turns out that there is a bug called CSCus13479 which requires CCO login to view. The bug is active when Portchannels are used and PIM is run over them. To see if an interface is misbehaving, use the following command:

hrn3-4500x-vss-01#sh platfo hardware rxvlan-map-table vl 200 <<< Ingress port
Executing the command on VSS member switch role = VSS Active, id = 1

Vlan 200:
l2LookupId: 200
srcMissIgnored: 0
ipv4UnicastEn: 1
ipv4MulticastEn: 1 <<<<<
ipv6UnicastEn: 0
ipv6MulticastEn: 0
mplsUnicastEn: 0
mplsMulticastEn: 0
privateVlanMode: Normal
ipv4UcastRpfMode: None
ipv6UcastRpfMode: None
routingTableId: 1
rpSet: 0
flcIpLookupKeyType: IpForUcastAndMcast
flcOtherL3LookupKeyTypeIndex: 0
vlanFlcKeyCtrlTableIndex: 0
vlanFlcCtrl: 0

Executing the command on VSS member switch role = VSS Standby, id = 2

Vlan 200:
l2LookupId: 200
srcMissIgnored: 0
ipv4UnicastEn: 1
ipv4MulticastEn: 0 <<<<<
ipv6UnicastEn: 0
ipv6MulticastEn: 0
mplsUnicastEn: 0
mplsMulticastEn: 0
privateVlanMode: Normal
ipv4UcastRpfMode: None
ipv6UcastRpfMode: None
routingTableId: 1
rpSet: 0
flcIpLookupKeyType: IpForUcastAndMcast
flcOtherL3LookupKeyTypeIndex: 0
vlanFlcKeyCtrlTableIndex: 0
vlanFlcCtrl: 0

From the output you can see that "ipv4MulticastEn" is set to 1 on one switch and 0 on the other. The state has not been properly synced or has somehow been misprogrammed, which leads to this issue with black holing multicast. It was not an easy one to catch so I hope this post might help someone.
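If you need to check many VLANs, the comparison can be scripted. The following is a minimal sketch that assumes the command output has been captured as text with one field per line; `multicast_flags` and `is_out_of_sync` are hypothetical helper names, and the sample is abbreviated from the output above.

```python
import re


def multicast_flags(output: str) -> dict:
    """Map each VSS member (role and id) to its ipv4MulticastEn value."""
    flags = {}
    member = None
    for line in output.splitlines():
        header = re.search(r"role = VSS (\w+), id = (\d+)", line)
        if header:
            member = f"{header.group(1)} (id {header.group(2)})"
            continue
        field = re.search(r"ipv4MulticastEn:\s*(\d)", line)
        if field and member is not None:
            flags[member] = int(field.group(1))
    return flags


def is_out_of_sync(output: str) -> bool:
    """True when the VSS members disagree on ipv4MulticastEn."""
    return len(set(multicast_flags(output).values())) > 1


# Abbreviated sample of the captured output.
SAMPLE = """\
Executing the command on VSS member switch role = VSS Active, id = 1
Vlan 200:
ipv4UnicastEn: 1
ipv4MulticastEn: 1
Executing the command on VSS member switch role = VSS Standby, id = 2
Vlan 200:
ipv4UnicastEn: 1
ipv4MulticastEn: 0
"""

print(multicast_flags(SAMPLE))
print(is_out_of_sync(SAMPLE))
```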

This also shows that there are always drawbacks to clustering, so weigh the risk of running systems in clusters and having bugs affect both devices against running them stand-alone. There's always a tradeoff between complexity, topologies and how a network can be designed depending on your choice.

Catalyst 3750 IPv6 ACL Limitations

I recently ran into some limitations of IPv6 ACLs on the Catalyst 3750 platform. I had developed an ACL to protect from receiving traffic from unwanted address ranges such as ::, ::1, ::FFFF:0:0/96. The first address is the unspecified address, the second one is the loopback address and the last one is IPv4 mapped traffic. The ACL also contained an entry to deny traffic with routing-type 0.
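To make the three address ranges concrete, here is a small sketch using Python's standard `ipaddress` module to classify a source address the way the ACL entries intend. It does not model the routing-type 0 entry, and `unwanted_reason` is a hypothetical helper name.

```python
import ipaddress
from typing import Optional

# The address ranges the ACL is meant to block.
UNWANTED = {
    "unspecified": ipaddress.IPv6Network("::/128"),
    "loopback": ipaddress.IPv6Network("::1/128"),
    "ipv4-mapped": ipaddress.IPv6Network("::ffff:0:0/96"),
}


def unwanted_reason(addr: str) -> Optional[str]:
    """Return why a source address is unwanted, or None if it is fine."""
    ip = ipaddress.IPv6Address(addr)
    for reason, network in UNWANTED.items():
        if ip in network:
            return reason
    return None


for source in ("::", "::1", "::ffff:192.0.2.1", "2001:db8::1"):
    print(source, unwanted_reason(source))
```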

Note that no error is output when adding the entries in the ACL, only when applying the ACL to an interface.

% This ACL contains following unsupported entries.

% Remove those entries and try again.

deny ipv6 any any routing-type 0 sequence 20

deny ipv6 host ::1 any sequence 290

deny ipv6 host :: any sequence 310

deny ipv6 ::FFFF:0.0.0.0/96 any sequence 330

% This ACL can not be attached to the interface.

SW1(config-if)#

%PARSE_RC-4-PRC_NON_COMPLIANCE: `ipv6 traffic-filter v6-ACL-IN in'

From the configuration guide, the following limitations apply to the Catalyst 3750 platform.

What this means is that we can’t match on flowlabel, routing-header and undetermined transport upper layer protocol. We also need to match on networks ranging from /0 to /64 and host addresses that belong to global unicast Continue reading

Blog Migrated!

Hi!

I have decided to migrate my blog from wordpress.com to a private environment. The main reason is that I felt that I had outgrown the normal wordpress.com site. I wanted to be able to install plugins and get more accustomed to running my own environment. These days it can’t hurt to pick up some Linux skills.

The other reason is that I haven’t made a dime on the blog, in fact since I’ve had to pay hosting costs I’ve been losing money on the blog every year. By placing some ads I hope I can make enough for the hosting and anything extra would help me in getting things I need to generate more content.

The blog should now be reachable over both v4 and v6 and have SSL enabled.

Please bear with me if you find any things that are broken. I have migrated the content but I’m sure things will pop up. If they do, please notify me.

/Daniel

Busting Myths – IPv6 Link Local Next Hop into BGP

In some publications it is mentioned that a link local next-hop can’t be used when redistributing routes into BGP because routers receiving the route will not know what to do with the next-hop. That is one of the reasons why HSRPv2 got support for global IPv6 addresses. One such scenario is described in this link.

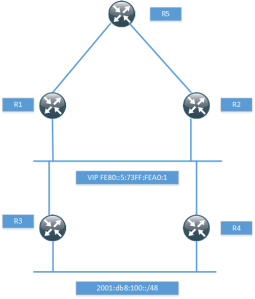

The topology used for this post is the following.

I have set up just enough of the topology to prove that it works with the next-hop, so I won’t be running any pings and so on. The routers R1 and R2 have a static route for the network behind R3 and R4.

ipv6 route 2001:DB8:100::/48 GigabitEthernet0/1 FE80::5:73FF:FEA0:1

When routing towards a link local address, the exit interface must be specified. R1 then runs BGP towards R5. Notice that I’m not using next-hop-self.

router bgp 100
 bgp router-id 1.1.1.1
 bgp log-neighbor-changes
 neighbor 2001:DB8:1::5 remote-as 100
 !
 address-family ipv6
  redistribute static
  neighbor 2001:DB8:1::5 activate
 exit-address-family

If we look in the BGP RIB, we can see that the route is installed with a link local next-hop.

R1#sh bgp ipv6 uni
BGP table version is 2, local router ID is 1.1.1.1
Status codes: s suppressed, Continue reading

More PIM-BiDir Considerations

Introduction

From my last post on PIM BiDir I got some great comments from my friend Peter Palúch. I still had some concepts that weren’t totally clear to me and I don’t like to leave unfinished business. There is also a lack of resources properly explaining the behavior of PIM BiDir. For that reason I would like to clarify some concepts and write some more about what the potential gains of PIM BiDir are. First we must be clear on the terminology used in PIM BiDir.

Terminology

Rendezvous Point Address (RPA) – The RPA is an address that is used as the root of the distribution tree for a range of multicast groups. This address must be routable in the PIM domain but does not have to reside on a physical interface or device.

Rendezvous Point Link (RPL) – It is the physical link to which the RPA belongs. The RPL is the only link where DF election does not take place. The RFC also says “In BIDIR-PIM, all multicast traffic to groups mapping to a specific RPA is forwarded on the RPL of that RPA.” In some scenarios where the RPA is virtual, there may not be an RPL though.

Many to Many Multicast – PIM BiDir

Introduction

This post will describe PIM BiDir, why it is needed and the design considerations for using it. The post is focused on technology overview and design and will not contain any actual configurations.

Multicast Applications

Multicast is a technology that is mainly used for one-to-many and many-to-many applications. The following are examples of applications that use or can benefit from using multicast.

One-to-many

One-to-many applications have a single sender and multiple receivers. These are examples of applications in the one-to-many model.

Scheduled audio/video: IP-TV, radio, lectures

Push media: News headlines, weather updates, sports scores

File distributing and caching: Web site content or any file-based updates sent to distributed end-user or replicating/caching sites

Announcements: Network time, multicast session schedules

Monitoring: Stock prices, security system or other real-time monitoring applications

Many-to-many

Many-to-many applications have many senders and many receivers. One-to-many applications are unidirectional and many-to-many applications are bidirectional.

Multimedia conferencing: Audio/video and whiteboard is the classic conference application

Synchronized resources: Shared distributed databases of any type

Distance learning: One-to-many lecture but with “upstream” capability where receivers can question the lecturer

Multi-player games: Many multi-player games are distributed simulations and also have chat group capabilities.

Overview of PIM

PIM has Continue reading