IRB Models: Edge Routing

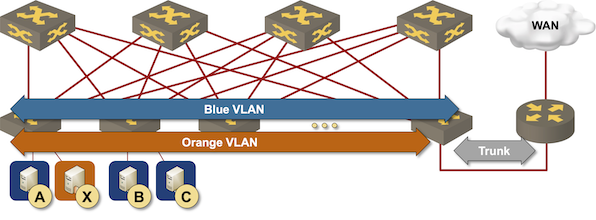

The simplest way to implement layer-3 forwarding in a network fabric is to offload it to an external device, be it a WAN edge router, a firewall, a load balancer, or any other network appliance.

Routing at the (outer) edge of the fabric

Response: Complexities of Network Automation

David Gee couldn’t resist making a few choice comments after I asked for his opinion of an early draft of the Network Automation Expert Beginners blog post, and allowed me to share them with you. Enjoy 😉

Network automation offers promises of reliability and efficiency, but it came without a warning label and health warnings. We seem to be perpetually stuck in a window display with sexily dressed mannequins.

Design Clinic: Small-Site IPv6 Multihoming

I decided to stop caring about IPv6 when the protocol became old enough to buy its own beer (now even in the US), but its second-system effects keep coming back to haunt us. Here’s a question I got for the February 2023 ipSpace.net Design Clinic:

How can we do IPv6 networking in a small/medium enterprise if we’re using multiple ISPs and don’t have our own Provider Independent IPv6 allocation? I’ve brainstormed this with people far more knowledgeable than me on IPv6, and listened to IPv6 Buzz episodes discussing it, but I still can’t figure it out.

netlab Release 1.5.0: Larger Lab Topologies

netlab release 1.5.0 includes features that will help you start very large lab topologies (someone managed to run over 90 Mikrotik routers on a 24-core server):

- You can start libvirt virtual machines in batches to reduce the CPU overload that causes startup failures on large topologies.

- You can combine virtual machines and containers in the same lab, further reducing the memory footprint for devices available as true containers (Linux hosts, Cumulus/FRR routers, Arista cEOS).

- You can use a custom management network IP subnet if you’re running out of management IP addresses.

To get more details and learn about additional features included in release 1.5.0, read the release notes. To upgrade, execute `pip3 install --upgrade networklab`.

New to netlab? Start with the Getting Started document and the installation guide.

Worth Reading: A Debugging Manifesto

Julia Evans published another fantastic must-read article: a debugging manifesto. Enjoy ;)

MUST READ: Nothing Works

Did you ever wonder why it’s impossible to find a good service company, why most software sucks, or why networking vendors can get away with selling crap? If you did, and found no good answer (apart from Sturgeon’s Law), it’s time to read Why is it so hard to buy things that work well? by Dan Luu.

Totally off-topic: his web site uses almost no CSS and looks in my browser like a relic of the 1980s. Suggestions on how to fix that (in Chrome) are most welcome.

Built.fm Podcast with David Gee

I had a lovely chat with David Gee on his built.fm podcast sometime in December. David switched jobs in the meantime, and so it took him a bit longer than expected to publish it. Chatting with David is always fun; hope you’ll enjoy our chat as much as I did.

Hiding Malicious Packets Behind LLC SNAP Header

A random tweet pointed me to Vulnerability Note VU#855201, which documents four vulnerabilities in which a weird combination of LLC and VLAN headers can bypass layer-2 security on most network devices.

The security researcher who found the vulnerability also provided an excellent in-depth description focused on the way operating systems like Linux and Windows handle LLC-encapsulated IP packets. Here’s the CliffsNotes version focused more on the hardware switches. Even though I tried to keep it simple, you might want to read the History of Ethernet Encapsulation before moving on.
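
To make the header juggling a bit more tangible, here is a minimal Python sketch that builds the same IPv4 payload as an Ethernet II frame, as an 802.3 frame with an LLC/SNAP header, and as an LLC/SNAP frame with an 802.1Q tag hidden behind the SNAP header. The function names, the MAC addresses, and the exact header order in the last variant are my assumptions for illustration; they are not copied from the vulnerability note. The field values (DSAP/SSAP `0xAA`, control `0x03`, OUI `00-00-00`, TPID `0x8100`) are the standard ones.

```python
import struct

ETHERTYPE_IPV4 = 0x0800
TPID_8021Q = 0x8100                     # EtherType announcing an 802.1Q VLAN tag
LLC_SNAP = bytes([0xAA, 0xAA, 0x03])    # DSAP, SSAP, control (unnumbered information)
SNAP_OUI = bytes(3)                     # OUI 00-00-00: protocol ID is an EtherType


def ethernet_ii(dst, src, payload, ethertype=ETHERTYPE_IPV4):
    """Ethernet II: bytes 12-13 carry the EtherType."""
    return dst + src + struct.pack("!H", ethertype) + payload


def llc_snap(dst, src, payload, ethertype=ETHERTYPE_IPV4):
    """802.3 + LLC/SNAP: bytes 12-13 carry the length, the EtherType moves to bytes 20-21."""
    body = LLC_SNAP + SNAP_OUI + struct.pack("!H", ethertype) + payload
    return dst + src + struct.pack("!H", len(body)) + body


def llc_snap_with_vlan(dst, src, payload, vid=0):
    """Hypothetical layout: SNAP protocol ID is 0x8100, followed by the TCI and the real EtherType."""
    tci = vid & 0x0FFF                  # priority and DEI bits left at zero
    inner = struct.pack("!HH", tci, ETHERTYPE_IPV4) + payload
    return llc_snap(dst, src, inner, ethertype=TPID_8021Q)


if __name__ == "__main__":
    dst = bytes.fromhex("ffffffffffff")
    src = bytes.fromhex("020000000001")     # locally administered address, made up
    ip_packet = b"\x45" + bytes(19)         # placeholder 20-byte IPv4 header
    frames = {
        "ethernet_ii": ethernet_ii(dst, src, ip_packet),
        "llc_snap": llc_snap(dst, src, ip_packet),
        "llc_snap_with_vlan": llc_snap_with_vlan(dst, src, ip_packet),
    }
    for name, frame in frames.items():
        print(f"{name:20} bytes 12-13 = 0x{frame[12:14].hex()}  total = {len(frame)}")
```

A filter that looks only at bytes 12-13 to decide whether a frame carries IPv4 finds a length field instead of `0x0800` in the last two variants, which is the general idea behind hiding packets in an encapsulation the inspecting device does not parse.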

Response: Network Automation Expert Beginners

I usually post links to my blog posts to LinkedIn, and often get extraordinary comments. Unfortunately, those comments usually get lost in the mists of social media fog after a few weeks, so I’m trying to save them by reposting them as blog posts (always with the original author’s permission). Here’s a comment David Sun left on my Network Automation Expert Beginners blog post:

The most successful automation I’ve seen comes from orgs who start with proper software requirements specifications and more importantly, the proper organizational/leadership backing to document and support said infrastructure automation tooling.

Will DPUs Change the Network?

It’s easy to get excited about what seems to be a new technology and conclude that it will forever change the way we do things. For example, I’ve seen claims that SmartNICs (also known as Data Processing Units – DPU) will forever change the network.

TL&DR: Of course they won’t.

Before we start discussing the details, it’s worth remembering what a DPU is: it’s another server with its own CPU, memory, and network interface card (NIC) that happens to have PCI hardware that emulates the host interface cards. It might also have dedicated FPGAs or ASICs.

netlab: Building a Layer-2 Fabric

A friend of mine decided to use netlab to build a simple traditional data center fabric, and asked me a question along these lines:

How do I make all the ports be L2 by default i.e. not have IP address assigned to them?

Trying to answer his question way too late in the evening (I know, I shouldn’t be doing that), I focused on the “no IP addresses” part. To get there, you have to use the l2only pool or disable IPv4 prefixes in the built-in address pools, for example: