Migrating from NGINX Ingress to Calico Ingress Gateway: A Step-by-Step Guide

From Ingress NGINX to Calico Ingress Gateway

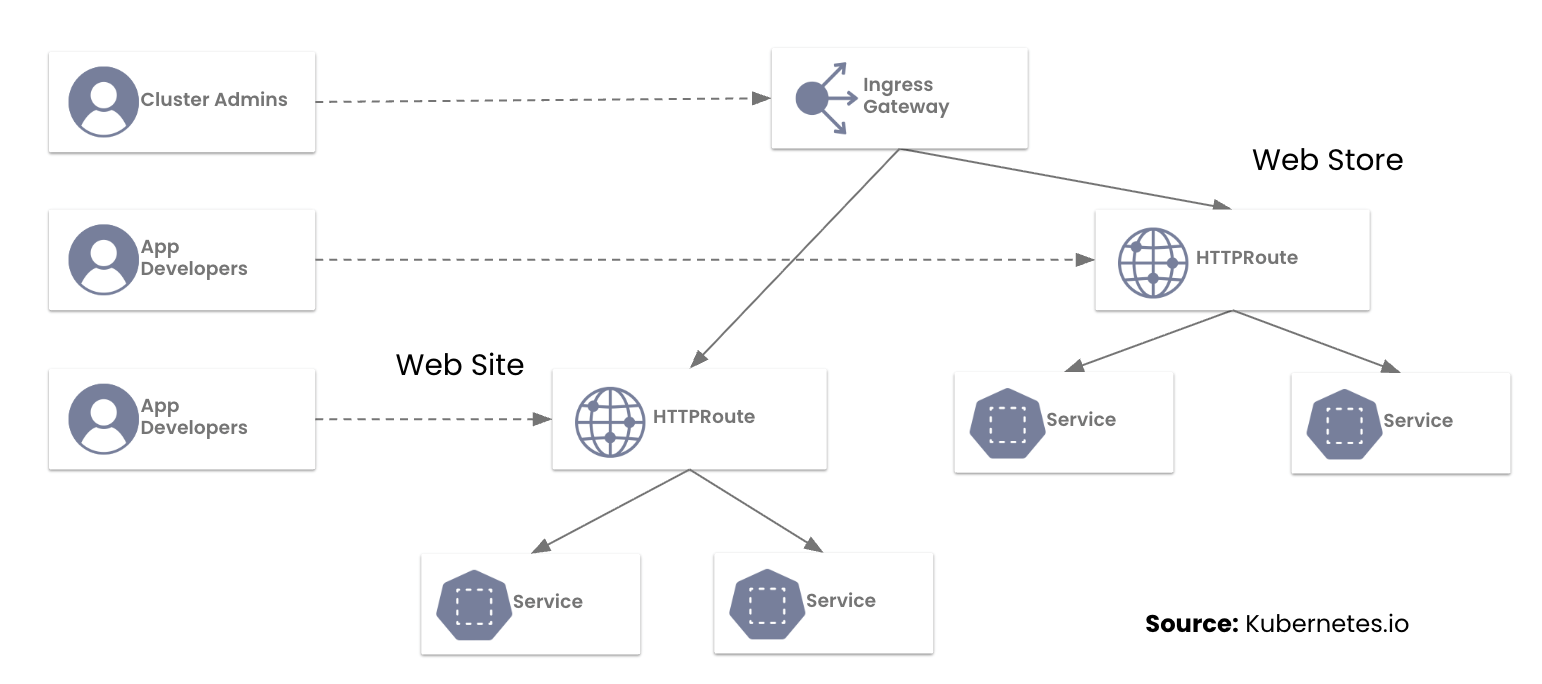

In our previous post, we addressed the most common questions platform teams are asking as they prepare for the retirement of the NGINX Ingress Controller. With the March 2026 deadline fast approaching, this guide provides a hands-on, step-by-step walkthrough for migrating to the Kubernetes Gateway API using Calico Ingress Gateway. You will learn how to translate NGINX annotations into HTTPRoute rules, run both models side by side, and safely cut over live traffic.
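As a rough illustration of what translating an annotation can involve, the sketch below builds an HTTPRoute manifest for one common case: a prefix-stripping rewrite of the kind often expressed with the `nginx.ingress.kubernetes.io/rewrite-target` annotation. The function name, the `shared-gateway` parent, the hostname, and the `shop` service are all hypothetical, and the code only builds a dictionary; it does not contact a cluster.

```python
import json


def http_route_with_prefix_rewrite(name, gateway, host, prefix, service, port):
    """Build an HTTPRoute manifest that strips `prefix` before forwarding.

    All argument values are illustrative; nothing here contacts a cluster.
    """
    return {
        "apiVersion": "gateway.networking.k8s.io/v1",
        "kind": "HTTPRoute",
        "metadata": {"name": name},
        "spec": {
            # The Gateway this route attaches to.
            "parentRefs": [{"name": gateway}],
            "hostnames": [host],
            "rules": [
                {
                    "matches": [{"path": {"type": "PathPrefix", "value": prefix}}],
                    # URLRewrite replaces the matched prefix with "/".
                    "filters": [
                        {
                            "type": "URLRewrite",
                            "urlRewrite": {
                                "path": {
                                    "type": "ReplacePrefixMatch",
                                    "replacePrefixMatch": "/",
                                }
                            },
                        }
                    ],
                    "backendRefs": [{"name": service, "port": port}],
                }
            ],
        },
    }


# Hypothetical names, used only to show the resulting shape.
route = http_route_with_prefix_rewrite(
    "shop-route", "shared-gateway", "shop.example.com", "/shop", "shop", 8080
)
print(json.dumps(route, indent=2))
```

The point of the sketch is that the rewrite becomes a typed field in the route rather than a controller-specific annotation string. Real migrations usually involve more annotations than this one, each of which needs its own mapping.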

A Brief History

The announced retirement of the NGINX Ingress Controller has created a forced migration path for the many teams that relied on it as the industry standard. While the Ingress API is not yet officially deprecated, the Kubernetes SIG Network has designated the Gateway API as its official successor. Legacy Ingress will no longer receive enhancements and exists primarily for backward compatibility.

Why the Industry is Standardizing on Gateway API

While the Ingress API served the community for years, it reached a functional ceiling. Calico Ingress Gateway implements the Gateway API to provide:

- Role-Oriented Design: Clear separation between the infrastructure (managed by SREs) and routing logic (managed by Developers).

- Native Expressiveness: Features like URL rewrites and header manipulation Continue reading

Calico Ingress Gateway: Key FAQs Before Migrating from NGINX Ingress Controller

What Platform Teams Need to Know Before Moving to Gateway API

We recently sat down with representatives from 42 companies to discuss a pivotal moment in Kubernetes networking: the NGINX Ingress retirement.

With the March 2026 retirement of the NGINX Ingress Controller fast approaching, platform teams are now facing a hard deadline to modernize their ingress strategy. This urgency was reflected in our recent workshop, “Switching from NGINX Ingress Controller to Calico Ingress Gateway”, which saw an overwhelming turnout, with engineers representing a cross-section of the industry, from financial services to high-growth tech startups.

During the session, the Tigera team highlighted a hard truth for platform teams: the original Ingress API was designed for a simpler era. Today, teams are struggling to manage production traffic through “annotation sprawl”—a web of brittle, implementation-specific hacks that make multi-tenancy and consistent security an operational nightmare.

The move to the Kubernetes Gateway API isn’t just a mandatory update; it’s a graduation to a role-oriented, expressive networking model. We’ve previously explored this shift in our blogs on Understanding the NGINX Retirement and Why the Ingress NGINX Controller is Dead.

Introducing the New Tigera & Calico Brand

Same community. A clearer, more unified look.

Today, we are excited to share a refresh of the Tigera and Calico visual identity!

This update better reflects who we are, who we serve, and where we are headed next.

If you have been part of the Calico community for a while, you know that change at Tigera is always driven by substance, not style alone. Since the early days of Project Calico, our focus has always been clear: Build powerful, scalable networking and security for Kubernetes, and do it in the open with the community.

Built for the Future, With the Community

Tigera was founded by the original Project Calico engineering team and remains deeply committed to maintaining Calico Open Source as the leading standard for container networking and network security.

“Tigera’s story began in 2016 with Project Calico, an open-source container networking and security project. Calico Open Source has since become the most widely adopted solution for containers and Kubernetes. We remain committed to maintaining Calico Open Source as the leading standard, while also delivering advanced capabilities through our commercial editions.”

—Ratan Tipirneni, President & CEO, Tigera

A Visual Evolution

This refresh is an evolution, not a reinvention. You Continue reading

Why Kubernetes Flat Networks Fail at Scale—and Why Your Cluster Needs a Security Hierarchy

Kubernetes networking offers incredible power, but scaling that power often transforms a clean architecture into a tangled web of complexity. Managing traffic flow between hundreds of microservices across dozens of namespaces presents a challenge that touches every layer of the organization, from engineers debugging connections to the architects designing for compliance.

The solution to these diverging challenges lies in bringing structure and validation to standard Kubernetes networking. Here is a look at how Calico Tiers and Staged Network Policies help you get rid of this networking chaos.

The Limits of Flat Networking

The default Kubernetes NetworkPolicy resource operates in a flat hierarchy. In a small cluster, this is manageable. However, in an enterprise environment with multiple tenants, teams, and compliance requirements, “flat” quickly becomes unmanageable, and dangerous.

To make this easier, imagine a large office building where every single employee has a key that opens every door. To secure the CEO’s office in a flat network, you have to put “Do Not Enter” signs on every door that could lead to it. That is flat networking, secure by exclusion rather than inclusion.
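To make the flat model concrete, here is a toy sketch of how standard NetworkPolicy decisions combine. Policies are additive, with no ordering and no explicit deny, so a connection to a selected pod is allowed if any one policy allows it. The `Policy` class and the label names are hypothetical simplifications (ingress only, labels matched as plain key/value pairs), not the real API objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Toy ingress-only policy: which pods it selects and which peers it allows."""
    name: str
    pod_selector: dict  # labels the target pod must carry
    allow_from: dict    # labels the source pod must carry


def matches(selector, labels):
    """True when every key/value pair in selector appears in labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def ingress_allowed(policies, src_labels, dst_labels):
    """Flat, additive evaluation: no order, no deny, any allow wins."""
    selecting = [p for p in policies if matches(p.pod_selector, dst_labels)]
    if not selecting:
        return True  # pods selected by no policy accept all traffic
    return any(matches(p.allow_from, src_labels) for p in selecting)


policies = [
    Policy("db-from-api", {"app": "db"}, {"app": "api"}),
    # A later, broader policy quietly widens access to the same pods.
    Policy("db-from-team", {"app": "db"}, {"team": "payments"}),
]

db = {"app": "db"}
print(ingress_allowed(policies, {"app": "api"}, db))
print(ingress_allowed(policies, {"app": "batch", "team": "payments"}, db))
print(ingress_allowed(policies, {"app": "web"}, db))
```

Nothing in this model lets a platform team state a rule that a later policy cannot widen, which is the gap a hierarchy is meant to fill.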

Without a security hierarchy, every new policy risks becoming a potential mistake that overrides others, and debugging connectivity Continue reading

Ingress Security for AI Workloads in Kubernetes: Protecting AI Endpoints with WAF

AI Workloads Have a New Front Door

For years, AI and machine learning workloads lived in the lab. They ran as internal experiments, batch jobs in isolated clusters, or offline data pipelines. Security focused on internal access controls and protecting the data perimeter.

That model no longer holds.

Today, AI models are increasingly part of production traffic, which is driving new challenges around securing AI workloads in Kubernetes. Whether serving a large language model for a customer-facing chatbot or a computer vision model for real-time analysis, these models are exposed through APIs, typically REST or gRPC, running as microservices in Kubernetes.

From a platform engineering perspective, these AI inference endpoints are now Tier 1 services. They sit alongside login APIs and payment gateways in terms of criticality, but they introduce a different and more expensive risk profile than traditional web applications. For AI inference endpoints, ingress security increasingly means Layer 7 inspection and WAF (Web Application Firewall) level controls at the cluster edge. By analyzing the full request payload, a WAF can detect and block abusive or malicious traffic before it ever reaches expensive GPU resources or sensitive data. This sets the stage for protecting AI workloads from both operational Continue reading

NGINX is Retiring: Your Step-by-Step Guide to Replacing Ingress NGINX

Your Curated Webinar & Blog Collection

The Ingress NGINX Controller is approaching retirement, and teams need a clear path forward to manage Kubernetes ingress traffic securely and reliably. To make this transition easier, we’ve created a single, curated hub with all the relevant blogs and webinars. This hub serves as your one-stop resource for understanding the migration to Kubernetes Gateway API with Calico Ingress Gateway.

This curated hub is designed to guide your team from understanding Ingress NGINX retirement, through evaluating options, learning the benefits of Calico Ingress Gateway, and ultimately seeing it in action with webinars and a demo.

Use This Collection to Help You Migrate Safely

- One-stop resource: No need to hunt across the site for guidance.

- Recommended reading order: Helps teams build knowledge progressively.

- Actionable takeaways: Blogs explain why and how to migrate; webinars show it in practice.

- Demo access: Direct link to schedule personalized support for your environment.

Recommended Reading

Step 1: Understand the Retirement of Ingress NGINX and the changing landscape

- Read “Ingress NGINX Controller Is Dead: Should You Move to Gateway API?” to understand why NGINX is being retired and what it means for your clusters.

Step 2: Compare Approaches, including Ingress vs. Continue reading

Kubernetes Networking at Scale: From Tool Sprawl to a Unified Solution

As Kubernetes platforms scale, one part of the system consistently resists standardization and predictability: networking. While compute and storage have largely matured into predictable, operationally stable subsystems, networking remains a primary source of complexity and operational risk.

This complexity is not the result of missing features or immature technology. Instead, it stems from how Kubernetes networking capabilities have evolved as a collection of independently delivered components rather than as a cohesive system. As organizations continue to scale Kubernetes across hybrid and multi-environment deployments, this fragmentation increasingly limits agility, reliability, and security.

This post explores how Kubernetes networking arrived at this point, why hybrid environments amplify its operational challenges, and why the industry is moving toward more integrated solutions that bring connectivity, security, and observability into a single operational experience.

The Components of Kubernetes Networking

Kubernetes networking was designed to be flexible and extensible. Rather than prescribing a single implementation, Kubernetes defined a set of primitives and left key responsibilities such as pod connectivity, IP allocation, and policy enforcement to the ecosystem. Over time, these responsibilities were addressed by a growing set of specialized components, each focused on a narrow slice of Continue reading

From IPVS to NFTables: A Migration Guide for Kubernetes v1.35

Kubernetes Networking Is Changing

Kubernetes v1.35 marks an important turning point for cluster networking. The IPVS backend for kube-proxy has been officially deprecated, and future Kubernetes releases will remove it entirely. If your clusters still rely on IPVS, the clock is now very much ticking.

Staying on IPVS is not just a matter of running older technology. As upstream support winds down, IPVS receives less testing, fewer fixes, and less attention overall. Over time, this increases the risk of subtle breakage, makes troubleshooting harder, and limits compatibility with newer Kubernetes networking features. Eventually, upgrading Kubernetes will force a migration anyway, often at the worst possible time.

Migrating sooner gives you control over the timing, space to test properly, and a chance to avoid turning a routine upgrade into an emergency networking change.
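A sensible first step is finding out which clusters are affected. The sketch below inspects a kube-proxy configuration that has already been parsed into a dictionary and reports whether its `mode` is `ipvs`. The sample dictionary is made up for illustration, and how you obtain the real configuration depends on your distribution.

```python
def proxy_mode_report(kube_proxy_config):
    """Return (mode, needs_migration) for a parsed KubeProxyConfiguration."""
    # An empty or missing mode means kube-proxy uses its platform default,
    # assumed here to be iptables on Linux.
    mode = kube_proxy_config.get("mode") or "iptables"
    return mode, mode == "ipvs"


# Hypothetical example input; a real one would come from your cluster.
sample = {
    "apiVersion": "kubeproxy.config.k8s.io/v1alpha1",
    "kind": "KubeProxyConfiguration",
    "mode": "ipvs",
}

mode, needs_migration = proxy_mode_report(sample)
print(f"kube-proxy mode: {mode}, migrate: {needs_migration}")
```

A check like this only identifies candidates. The migration itself still needs to be tested against your own workloads and data plane.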

Calico and the Path Forward

Project Calico’s unique design with a pluggable data plane architecture is what makes this transition possible without redesigning cluster networking from scratch. Calico supports a wide range of technologies, including eBPF, iptables, IPVS, Windows HNS, VPP, and nftables, allowing clusters to choose the most appropriate backend for their environment. This flexibility enables clusters to evolve alongside Kubernetes rather than being Continue reading

Key Insights from the 2025 GigaOm Radar for Container Networking

Why Calico was named as a Leader in the GigaOm Radar Report for Container Networking

In 2025, as modern applications became ever more distributed and the use of Kubernetes continued to proliferate, the role of container networking was critical. Today’s enterprises demand networking solutions that can scale, secure, and connect services reliably, whether those services run across multiple clouds, hybrid environments, or on-premises clusters.

We’re proud to share insights from the recently released 2025 GigaOm Radar Report for Container Networking, a comprehensive evaluation of vendor solutions across innovation, platform maturity, and real-world operational capabilities, and to highlight the unique strengths of Calico Cloud and Calico Enterprise as recognized in the report.

What the GigaOm Radar Report on Container Networking Covers

GigaOm’s Radar Report evaluates container networking solutions across a spectrum of criteria that matter most to IT infrastructure teams and cloud architects. These include:

- Network Policy Definition: Declarative, granular network policies consistently enforced across clusters

- Routing (Layer 3/Layer 4): Scalable routing between pods, services, and non-container workloads using Layer 3 (IP) and Layer 4 (TCP) protocols

- Layer 7 Networking: Application-aware traffic handling with visibility using Layer 7 protocols

- Load Balancing: Controls how traffic is distributed across instances, using latency Continue reading

Sidecarless mTLS in Kubernetes: How Istio Ambient Mesh and ztunnel Enable Zero Trust

Encrypting internal traffic and enforcing mutual TLS (mTLS), a form of TLS in which both the client and server authenticate each other using X.509 certificates, has transitioned from a “nice-to-have” to a hard requirement, especially in Kubernetes environments where everything can talk to everything else by default. Whether your objective is regulatory compliance or simply aligning with the principles of Zero Trust, the goal is the same: to ensure every connection is encrypted, authenticated, and authorized.
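To show what "both sides authenticate" means at the TLS level, here is a minimal sketch using Python's standard `ssl` module. A mesh performs this handshake on the application's behalf, so this is not how a mesh is configured. The function names and the certificate file parameters are hypothetical, and no files are loaded unless paths are passed in.

```python
import ssl


def server_context(cert_file=None, key_file=None, ca_file=None):
    """TLS context for a server that requires a client certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # This is the "mutual" part: reject clients without a valid certificate.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file:
        ctx.load_cert_chain(cert_file, key_file)  # the server's own identity
    if ca_file:
        ctx.load_verify_locations(ca_file)        # CA that signs client certs
    return ctx


def client_context(cert_file=None, key_file=None, ca_file=None):
    """TLS context for a client that verifies the server and presents a cert."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    if cert_file:
        ctx.load_cert_chain(cert_file, key_file)  # the client's own identity
    return ctx


server = server_context()
client = client_context()
print(server.verify_mode == ssl.CERT_REQUIRED, client.verify_mode == ssl.CERT_REQUIRED)
```

In ordinary TLS only the client side verifies its peer. Setting `CERT_REQUIRED` on the server side is what turns the exchange into mutual authentication.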

Delivering Cluster-Wide mTLS Without Sidecars

The term ‘service mesh’ is bandied about as the ideal solution for implementing zero-trust security, but it comes at a price often too high for organizations to accept. In addition to a steep learning curve, deploying a service mesh with a sidecar proxy in every pod scales poorly, driving up CPU and memory consumption and creating ongoing maintenance challenges for cluster operators.

Istio Ambient Mode addresses these pain points by decoupling the mesh from the application and splitting the service mesh into two distinct layers: mTLS and L7 traffic management, neither of which needs to run as a sidecar on a pod. By separating these domains, Istio allows platform engineers to implement mTLS cluster-wide without the complexity of Continue reading

The Rise of AI Agents and the Reinvention of Kubernetes: Ratan Tipirneni’s 2026 Outlook

Prediction: The next evolution of Kubernetes is not about scale alone, but about intelligence, autonomy, and governance.

As part of the article ‘AI and Enterprise Technology Predictions from Industry Experts for 2026’, published by Solutions Review, Ratan Tipirneni, CEO of Tigera, shares his perspective on how AI and cloud-native technologies are shaping the future of Kubernetes.

His predictions describe how production clusters are evolving as AI becomes a core part of enterprise platforms, introducing new requirements for security, networking, and operational control.

Looking toward 2026, Tipirneni expects Kubernetes to move beyond its traditional role of running microservices and stateless applications. Clusters will increasingly support AI-driven components that operate with greater autonomy and interact directly with other services and systems. This shift places new demands on platform teams around workload identity, access control, traffic management, and policy enforcement. It also drives changes in how APIs are governed and how network infrastructure is designed inside the cluster.

Read on to explore Tipirneni’s predictions and what they mean for teams preparing Kubernetes platforms for an AI-driven future.

AI Agents Become First-Class Workloads

By 2026, Tipirneni predicts that Kubernetes environments will increasingly host agent-based workloads rather than only traditional cloud native applications. Continue reading

Do You Need a Service Mesh? Understanding the Role of CNI vs. Service Mesh

The world of Kubernetes networking can sometimes be confusing. What’s a CNI? A service mesh? Do I need one? Both? And how do they interact in my cluster? The questions can go on and on.

Even for seasoned platform engineers, making sense of where these two components overlap and where the boundaries of responsibility end can be challenging. Seemingly bewildering obstacles can stand in the way of getting the most out of their complementary features.

One way to cut through the confusion is to start by defining what each of them is, then look at their respective capabilities, and finally clarify where they intersect and how they can work together.

This post will clarify:

- What a CNI is responsible for

- What a service mesh adds on top

- When you need one, the other, or both

What a CNI Actually Does

Container Network Interface (CNI) is a standard way to connect and manage networking for containers in Kubernetes. It is a specification, maintained as a CNCF project and adopted by Kubernetes, for configuring container network interfaces and maintaining connectivity between pods in a dynamic environment where network peers are constantly being created and destroyed.

Those standards are implemented by CNI plugins. A CNI plugin is Continue reading

How Istio Ambient Mode Delivers Real World Solutions

For years, platform teams have known what a service mesh can provide: strong workload identity, authorization, mutual TLS authentication and encryption, fine-grained traffic control, and deep observability across distributed systems. In theory, Istio checked all the boxes. In practice though, many teams hit a wall.

Across industries like financial services, media, retail, and SaaS, organizations told a similar story. They wanted mTLS between services to meet regulatory or security requirements. They needed safer deployment capabilities like canary rollouts and traffic splitting. They wanted visibility that went beyond IP addresses.

However, traditional sidecar-based meshes came with real costs:

- High operational complexity

- Thousands of sidecars to manage

- Fragile upgrade paths

- Hard-to-debug failure modes

In several cases, teams started down the Istio service mesh path, only to pause or roll back entirely because the ongoing operational complexity was too high. The value of a service mesh was clear, but the service mesh architecture based on sidecars was not sustainable for many production environments.

The Reality Platform Teams Have Been Living With

In many cases, organizations evaluated service meshes with clear goals in mind. They wanted mTLS between services, better control over traffic during deployments, and observability that could keep up. Continue reading

Ingress NGINX Controller Is Dead — Should You Move to Gateway API?

Now What? Understanding the Impact of the Ingress NGINX Deprecation

Ingress NGINX Controller, the trusty staple of countless platform engineering toolkits, is about to be put out to pasture. This news was announced by the Kubernetes community recently, and very quickly circulated throughout the cloud-native space. It’s big news for any platform team that currently uses the NGINX Controller because, as of March 26, 2026, there will be no more bug fixes, no more critical vulnerability patches, and no more enhancements, even as Kubernetes continues to release new versions.

If you’re feeling ambushed, you’re not alone. For many teams, this isn’t just an inconvenient roadmap update; it’s unexpected news that now puts long-term traffic management decisions front and center. You know you need to migrate yesterday, but the best path forward can be a confusing labyrinth of platforms and unfamiliar tools. Questions you might ask yourself:

- Do you find a quick drop-in Ingress replacement?

- Does moving to Gateway API make sense and can you commit enough resources to do a full migration?

- If you decide on Gateway API then what is the best option for a smooth transition?

With Ingress NGINX on the way out, platform teams are standing at a Continue reading

An In-Depth Look at Istio Ambient Mode with Calico

The Next Step Toward a Unified Kubernetes Platform: Istio Ambient Mode

Organizations are struggling with rising operational complexity, fragmented tools, and inconsistent security enforcement as Kubernetes becomes the foundation for modern application platforms. As a result of this complexity and fragmentation, platform teams are increasingly burdened by the need to stitch together separate solutions for networking, network security, and observability. This fragmentation also creates higher operating costs, security gaps, inefficient troubleshooting, and an elevated risk of outages in mission-critical environments. The challenge is even greater for companies running multiple Kubernetes distributions, as relying on each platform’s unique and often incompatible networking stack can lead to significant vendor lock-in and operational overhead.

The Tigera Unified Strategy: Addressing Fragmentation

Tigera’s unified platform strategy is designed to address these challenges by providing a single solution that brings together all the essential Kubernetes networking and security capabilities enterprises need, including Istio Ambient Mode, delivered consistently across every Kubernetes distribution.

Istio Ambient Mode brings sidecarless service-mesh functionality that includes authentication, authorization, encryption, L4/L7 traffic controls, and deep application-level (L7) observability directly into the unified Calico platform. By including Istio Ambient Mode with Calico and making it easy to install and manage with the Tigera Continue reading

AI Meets Kubernetes Security: Tigera CEO Reveals What Comes Next for Platform Teams

Kubernetes adoption is growing rapidly, but so are complexity and security risks.

Platform teams are tasked with keeping clusters secure and observable while navigating a skills gap. At KubeCon + CloudNativeCon North America, The New Stack spoke with Ratan Tipirneni, President and CEO of Tigera, about the future of Kubernetes security, AI-driven operations, and emerging trends in enterprise networking. The highlights from that discussion are summarized below.

Portions of this article are adapted from a recorded interview between The New Stack’s Heather Joslin and Tigera CEO Ratan Tipirneni. You can watch the full conversation on The New Stack’s YouTube channel.

How Can Teams Better Manage the Kubernetes Blast Radius and Skills Gap?

Tipirneni emphasizes the importance of controlling risk in Kubernetes clusters. “You want to be able to microsegment your workloads so that if you do come under an attack, you can actually limit the blast radius,” he says.

Egress traffic is another area of concern. According to Tipirneni, identifying what leaves the cluster is critical for security and compliance. Platform engineers are often navigating complex configurations without decades of Continue reading

KubeCon NA 2025: Three Core Kubernetes Trends and a Calico Feature You Should Use Now

The Tigera team recently returned from KubeCon + CloudNativeCon North America and CalicoCon 2025 in Atlanta, Georgia. It was great, as always, to attend these events, feel the energy of our community, and hold in-depth discussions at the booth and in our dedicated sessions that revealed specific, critical shifts shaping the future of cloud-native platforms.

We pulled together observations from our Tigera engineers and product experts in attendance to identify three key trends that are directly influencing how organizations manage Kubernetes today.

Trend 1: Kubernetes is Central to AI Workload Orchestration

A frequent and significant topic of conversation was the role of Kubernetes in supporting Artificial Intelligence and Machine Learning (AI/ML) infrastructure.

The consensus is clear: Kubernetes is becoming the standard orchestration layer for these specialized workloads, which requires networking and security policies tailored to high-demand environments. Across conversations, the Tigera team saw a consistent focus on this role, underscoring the need for robust, high-performance CNI solutions designed for the future of specialized computing.

Trend 2: Growth in Edge Deployments Increases Complexity

Conversations pointed to a growing and tangible expansion of Kubernetes beyond central data centers and Continue reading

How to Turbocharge Your Kubernetes Networking With eBPF

When your Kubernetes cluster handles thousands of workloads, every millisecond counts. And that pressure is no longer the exception; it is the norm. According to a recent CNCF survey, 93% of organizations are using, piloting, or evaluating Kubernetes, revealing just how pervasive it has become.

Kubernetes has grown from a promising orchestration tool into the backbone of modern infrastructure. As adoption climbs, so does pressure to keep performance high, networking efficient, and security airtight.

However, widespread adoption brings a difficult reality. As organizations scale thousands of interconnected workloads, traditional networking and security layers begin to strain. Keeping clusters fast, observable, and protected becomes increasingly challenging.

Innovation at the lowest level of the operating system—the kernel—can provide faster networking, deeper system visibility, and stronger security. But developing programs at this level is complex and risky. Teams running large Kubernetes environments need a way to extend the Linux kernel safely and efficiently, without compromising system stability.

Why eBPF Matters for Kubernetes Networking

Enter eBPF (extended Berkeley Packet Filter), a powerful technology that allows small, verified programs to run safely inside the kernel. It gives Kubernetes platforms a way to move critical logic closer to where packets actually flow, providing sharper visibility Continue reading

5 Reasons to Switch to the Calico Ingress Gateway (and How to Migrate Smoothly)

The End of Ingress NGINX Controller is Coming: What Comes Next?

The Ingress NGINX Controller is approaching retirement, which has pushed many teams to evaluate their long-term ingress strategy. The familiar Ingress resource has served well, but it comes with clear limits: annotations that differ by vendor, limited extensibility, and few options for separating operator and developer responsibilities.

The Gateway API addresses these challenges with a more expressive, standardized, and portable model for service networking. For organizations migrating off Ingress NGINX, the Calico Ingress Gateway, a production-hardened, 100% upstream distribution of Envoy Gateway, provides the most seamless and secure path forward.

If you’re evaluating your options, here are the five biggest reasons teams are switching now, followed by a step-by-step migration guide to help you make the move with confidence.

Reason 1: The Future Is Gateway API and Ingress Is Being Left Behind

Ingress NGINX is entering retirement. Maintaining it will become increasingly difficult as ecosystem support slows. The Gateway API is the replacement for Ingress and provides:

- A portable and standardized configuration model

- Consistent behaviour across vendors

- Cleaner separation of roles

- More expressive routing

- Support for multiple protocols

Calico implements the Gateway API directly and gives you an Continue reading

Introducing Calico AI and Istio Ambient Mode

The Complexity of Modern Kubernetes Networking

Kubernetes has transformed how teams build and scale applications, but it has also introduced new layers of complexity. Platform and DevOps teams must now integrate and manage multiple technologies: CNI, ingress and egress gateways, service mesh, and more across increasingly large and dynamic environments. As more applications are deployed into Kubernetes clusters, the operational burden on these teams continues to grow, especially when maintaining performance, reliability, security, and observability across diverse workloads.

To address this complexity and tool sprawl, Tigera is incorporating Istio’s Ambient Service Mesh directly into the Calico Unified Network Security Platform. Service mesh has become the preferred solution for application-level networking, particularly in environments with a large number of services or highly regulated workloads. Among available service meshes, Istio stands out as the most popular and widely adopted, supported by a thriving open-source community. By leveraging the lightweight, sidecarless design of Istio Ambient Mode, Calico delivers the benefits of a service mesh (secure service-to-service communication, mTLS authentication, fine-grained authorization, traffic management, and observability) without the burden of sidecars.

Complementing this addition is Calico AI. Calico AI brings intelligence and automation to Kubernetes networking. It addresses the massive operational burden on teams Continue reading
