DockerCon 2017: The Top Rated Sessions

Following yesterday’s general session videos from DockerCon Day 1 and Day 2, we’re happy to share the video recordings of the sessions rated highest by DockerCon attendees. All the slides will soon be published on our SlideShare account, and all the breakout session video recordings will be available on our DockerCon 2017 YouTube playlist.

Cilium: Network and Application Security with BPF and XDP by Thomas Graf

Docker?!? But I am a Sysadmin by Mike Coleman

Creating Effective Images by Abby Fuller

Taking Docker from Local to Production at Intuit by JanJaap Lahpor and Harish Jayakumar

Container Performance Analysis by Brendan Gregg

Secure Substrate: Least Privilege Container Deployment by Diogo Mónica and Riyaz Faizullabhoy

Escape from VMs with Image2Docker by Elton Stoneman and Jeff Nickoloff

What Have Namespaces Done for You Lately? by Liz Rice


The post DockerCon 2017: The Top Rated Sessions appeared first on Docker Blog.

“Fast and Furious 8: Fate of the Furious”

So "Fast and Furious 8" opened this weekend to world-wide box office totals of $500,000,000. I thought I'd write up some notes on the "hacking" in it. The tl;dr version is this: yes, while the hacking is a bit far-fetched, it's actually more realistic than the car chase scenes, such as winning a race with the engine on fire while in reverse.

[SPOILERS]

Car hacking

The most innovative cyber-thing in the movie is the car hacking. In one scene, the hacker takes control of the cars in a parking structure and makes them rain onto the street. In another scene, the hacker takes control away from drivers, with some jumping out of their moving cars in fear.

How real is this?

Well, today, few cars have a mechanical link between the computer and the steering wheel. No amount of hacking will fix the fact that this component is missing.

With that said, most new cars have features that make hacking possible. I'm not sure, but I'd guess more than half of new cars have internet connections (via the mobile phone network), cameras (for backing up, but also looking forward for lane departure warnings), braking (for emergencies), and acceleration.

Continue reading

Twistlock Closes $17M Funding Round for Container Security

IBM security veteran Brendan Hannigan joined the Twistlock board.


AES-CBC is going the way of the dodo

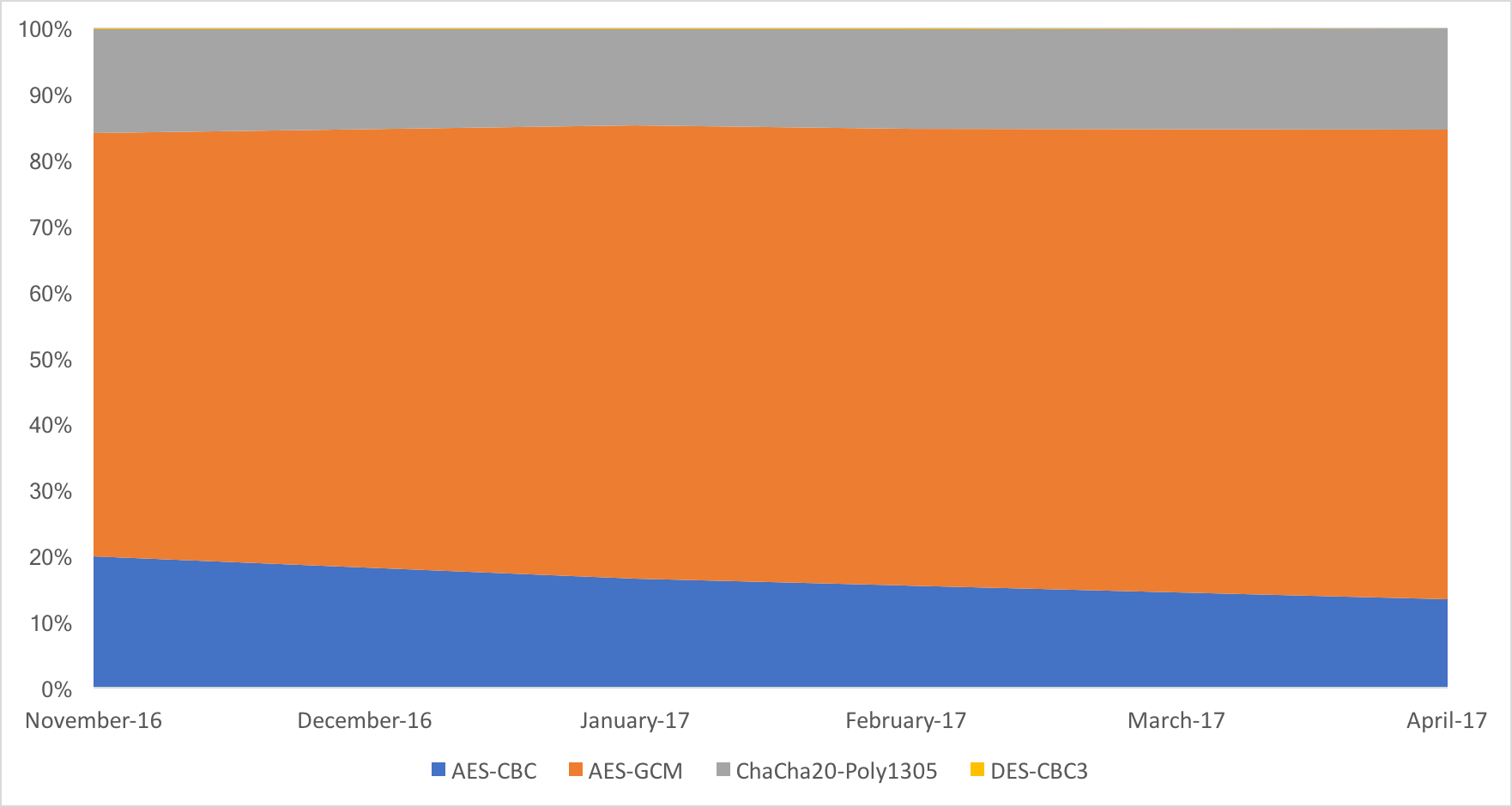

A little over a year ago, Nick Sullivan talked about the beginning of the end for AES-CBC cipher suites, following a plethora of attacks on this cipher mode.

Today we can safely confirm that this prediction is coming true, as for the first time ever the share of AES-CBC cipher suites on Cloudflare’s edge network dropped below that of ChaCha20-Poly1305 suites, and is fast approaching the 10% mark.

Over the course of the last six months, AES-CBC shed about 33% of its “market” share, dropping from 20% to just 13.4%.

All of that share went to AES-GCM, which currently encrypts 71.2% of all connections. ChaCha20-Poly1305 is stable, with 15.3% of all connections opting for that cipher. Surprisingly, 3DES is still around, with 0.1% of the connections.
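As a quick sanity check on the figures above, the short sketch below recomputes the relative decline of AES-CBC and confirms that the quoted shares add up to all connections. It uses only the rounded percentages from the text, so the result lands at almost exactly 33%; the function and variable names are illustrative and not part of any Cloudflare tooling.

```python
# Shares of connections quoted in the text, in percent of all connections.
shares = {
    "AES-GCM": 71.2,
    "ChaCha20-Poly1305": 15.3,
    "AES-CBC": 13.4,
    "3DES": 0.1,
}


def relative_drop(before: float, after: float) -> float:
    """Return the fraction of the original share that was lost."""
    return (before - after) / before


# The quoted shares should account for every connection.
total = sum(shares.values())

# AES-CBC went from 20% of connections to 13.4% over six months.
cbc_drop = relative_drop(20.0, shares["AES-CBC"])

print(f"total share: {total:.1f}%")
print(f"AES-CBC relative drop: {cbc_drop:.1%}")
```

The distinction matters when reading the numbers: AES-CBC lost 6.6 percentage points of all connections, which is about a third of the share it held six months earlier.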

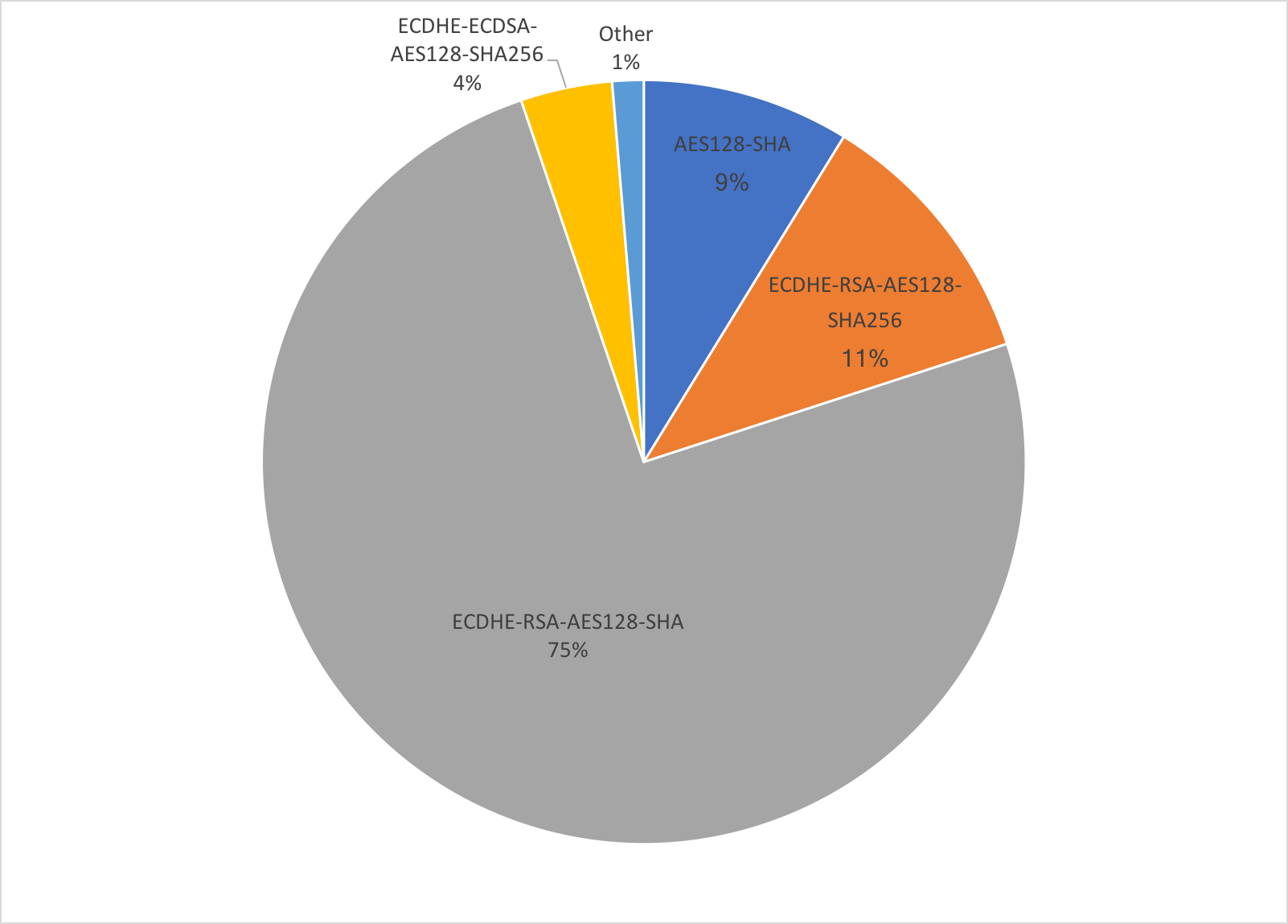

The internal AES-CBC cipher suite breakdown is as follows:

The majority of AES-CBC connections use ECDHE-RSA or RSA key exchange, and not ECDHE-ECDSA, which implies that we mostly deal with older clients.

RSA is also dying

In other good news, the use of ECDSA surpassed that of RSA at the beginning of the year. Currently more than 60% of all connections use Continue reading

HyTrust Helps Feds Meet Cloud Security Mandate

It's a 'Swiss Army Knife' for federal cloud security compliance.


ICSA Labs Certifies NSX Micro-segmentation Capabilities

VMware NSX has achieved ICSA Labs Corporate Firewall Certification.

With the release of NSX for vSphere® 6.3, VMware has introduced several key security features, such as Application Rule Manager and Endpoint Monitoring, which provide deep visibility into the application and enable a rapid zero-trust deployment. It has also achieved Corporate Firewall Certification in independent testing performed by ICSA Labs, a leading third-party testing and certification body and an independent division of Verizon.

VMware NSX for vSphere 6.3 has been tested against an industry-accepted standard to which a consortium of firewall vendors, end users and ICSA Labs contributed, and met all the requirements in the Baseline and Corporate modules of the ICSA Modular Firewall Certification Criteria version 4.2.

NSX is the only true micro-segmentation platform to achieve ICSA Firewall certification — with the NSX Distributed Firewall providing kernel-based, distributed stateful firewalling, and the Edge Services Gateway providing services such as North-South firewalling, NAT, DHCP, VPN, load balancing and high availability. VMware NSX provides security controls aligned to the application and enables a Zero-Trust model, independent of network topology.

The ICSA Firewall Certification criteria focus on several key firewall aspects, including stateful services, logging and persistence. ICSA also validates Continue reading

Observable Networks Detects Changes in AWS Lambda Functions

Serverless functions are becoming mainstream.


Can Container Security Surpass That of Virtual Machines?

Containers certainly face security threats that virtual machines do not.


Announcing LinuxKit: A Toolkit for building Secure, Lean and Portable Linux Subsystems

Last year, one of the most common requests we heard from our users was to bring a Docker-native experience to their platforms. These platforms were many and varied: from cloud platforms such as AWS, Azure, Google Cloud, to server platforms such as Windows Server, desktop platforms that their developers used such as OSX and Windows 10, to mainframes and IoT platforms – the list went on.

We started working on support for these platforms, and we initially shipped Docker for Mac and Docker for Windows, followed by Docker for AWS and Docker for Azure. Most recently, we announced the beta of Docker for GCP. The customizations we applied to make Docker native for each platform have furthered the adoption of the Docker editions.

One of the issues we encountered was that for many of these platforms, the users wanted Linux container support but the platform itself did not ship with Linux included. Mac OS and Windows are two obvious examples, but cloud platforms do not ship with a standard Linux either. So it made sense for us to bundle Linux into the Docker platform to run in these places.

What we needed to bundle was a secure, lean and portable Linux Continue reading

Introducing Moby Project: a new open-source project to advance the software containerization movement

Since Docker democratized software containers four years ago, a whole ecosystem grew around containerization and in this compressed time period it has gone through two distinct phases of growth. In each of these two phases, the model for producing container systems evolved to adapt to the size and needs of the user community as well as the project and the growing contributor ecosystem.

The Moby Project is a new open-source project to advance the software containerization movement and help the ecosystem take containers mainstream. It provides a library of components, a framework for assembling them into custom container-based systems and a place for all container enthusiasts to experiment and exchange ideas.

Let’s review how we got where we are today. In 2013-2014, pioneers started to use containers and collaborate in a monolithic open source codebase, Docker and a few other projects, to help tools mature.

Then in 2015-2016, containers were massively adopted in production for cloud-native applications. In this phase, the user community grew to support tens of thousands of deployments that were backed by hundreds of ecosystem projects and thousands of contributors. It was during this phase that Docker evolved its production model to an open, component-based approach. In Continue reading

Mirai, Bitcoin, and numeracy

Newsweek (the magazine famous for outing the real Satoshi Nakamoto) has a story about how a variant of the Mirai botnet is mining bitcoin. They fail to run the numbers.

- total Mirai hash-rate = 2.5 million bots times 0.185 megahash/sec = 0.4625 terahashes/second

- Continue reading
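The first step of that calculation is easy to reproduce. The sketch below multiplies the two inputs quoted above (the bot count and the per-bot hash rate) and converts the result to terahashes per second; the product of those two figures comes to roughly 0.46. The constant and function names are illustrative only.

```python
BOTS = 2_500_000          # number of bots, as quoted above
HASHES_PER_BOT = 0.185e6  # 0.185 megahash/sec per bot, as quoted above


def total_hash_rate(bots: int, hashes_per_bot: float) -> float:
    """Return the combined hash rate of the botnet in hashes per second."""
    return bots * hashes_per_bot


rate = total_hash_rate(BOTS, HASHES_PER_BOT)

# Convert hashes/second to terahashes/second (1 terahash = 1e12 hashes).
print(f"{rate / 1e12:.4f} terahashes/second")
```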

Using Microsegmentation to Prevent Security Breaches

Network virtualization is making microsegmentation possible and allowing networks to isolate security breaches.


Deluxe Makes Case for Network Virtualization

Microsegmentation of virtual networks spanning both private and public clouds is critical to Deluxe’s return on investment.


Changing Internet Standards to Build A Secure Internet

We’ve been working with registrars and registries in the IETF on making DNSSEC easier for domain owners, and over the next two weeks we’ll be starting out by enabling DNSSEC automatically for .dk domains.

DNSSEC: A Primer

Before we get into the details of how we've improved the DNSSEC experience, we should explain why DNSSEC is important and the function it plays in keeping the web safe.

DNSSEC’s role is to verify the integrity of DNS answers. When DNS was written in the early 1980s, there were only a few researchers and academics on the internet. They all knew and trusted each other, and couldn’t imagine a world in which someone malicious would try to operate online. As a result, DNS relies on trust to operate. When a client asks for the address of a hostname like www.cloudflare.com, without DNSSEC it will trust basically any server that returns the response, even if it wasn’t the same server it originally asked. With DNSSEC, every DNS answer is signed so clients can verify that answers haven’t been manipulated in transit.

The Trouble With DNSSEC

If DNSSEC is so important, why do so few domains support it? First, for a domain to Continue reading

Fortinet Brings Security to SD-WAN and SaaS Applications

Fortinet's SD-WAN security was built in-house.


Notes on the FCC and Privacy in the US

I’ve recently been reading a lot about the repeal of the rules putting the FCC in charge of privacy for access providers in the US, much of it rising to the level of hysteria and “the end is near” pronouncements. As you have probably been reading these stories as well, I thought it worthwhile to take a moment and point out two pieces that seem to be the most balanced and thought through out there.

Essentially—yes, privacy is still a concern, and no, the sky is not falling. The first is by Nick Feamster, who I’ve worked with in the past, and has always seemed to have a reasonable take on things. The second is by Shelly Palmer, who I don’t always agree with, but in this case I think his analysis is correct.

Last week, the House and Senate both passed a joint resolution that prevents the new privacy rules from the Federal Communications Commission (FCC) from taking effect; the rules were released by the FCC last November, and would have bound Internet Service Providers (ISPs) in the United States to a set of practices concerning the collection and sharing of data about consumers. The rules were widely heralded Continue reading


Research: McAfee Labs Threats Report April 2017

Network professionals are the front line in cyber-defence by defining and operating the perimeter. While it is only a first layer of static defense, it's well worth understanding the wider threat landscape that you are defending against. Many companies publish regular reports and this one is from McAfee.

McAfee Labs Threats Report – April 2017 – Direct Link

Landing page is https://secure.mcafee.com/us/security-awareness/articles/mcafee-labs-threats-report-mar-2017.aspx

Note: Intel has spun McAfee out to a private VC firm in the last few weeks so it's possible that we will see a resurgence of the McAfee brand. I’m doubtful that McAfee can emerge but let's wait and see.

Some points I observed when reading this report:

- McAfee wants to tell you about its cloud-based threat intelligence (which all security vendors have now, table stakes)

- The pitch is pretty much identical to any other cloud threat intelligence.

- The big six security companies (Check Point, Cisco, Fortinet, Intel Security, Palo Alto Networks, Symantec) have formed the Cyber Threat Alliance (…to prevent the startups from competing with them?)

- Big section on Mirai botnet and how it works.

- Good summary of the different network packet attack modes in Mirai. Nicely laid out with Continue reading

Mac Flooding Attack, Port Security and Deployment Considerations

This article is the fourth in the Layer 2 security series. We will be discussing a very common Layer 2 attack, MAC flooding, and its mitigation, “Port Security MAC limiting”. If you didn’t read the previous three articles (DHCP snooping, Dynamic ARP Inspection, and IP Source Guard), I recommend that you take a quick […]
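To make the idea of MAC limiting concrete, here is a minimal toy model in Python. It is not how any switch vendor implements port security; the `SecurePort` class, its `max_macs` limit, and the spoofed addresses are all hypothetical names chosen for illustration. The sketch only shows the core idea: a port stops learning new source MAC addresses once it reaches its limit, so a flood of spoofed addresses cannot fill the switch's MAC table.

```python
from dataclasses import dataclass, field


@dataclass
class SecurePort:
    """Toy model of one switch port with a cap on learned source MACs."""

    max_macs: int = 2
    learned: set = field(default_factory=set)
    violations: int = 0

    def receive(self, src_mac: str) -> bool:
        """Return True if the frame is accepted, False if it is dropped."""
        if src_mac in self.learned:
            return True  # already-known address, always allowed
        if len(self.learned) < self.max_macs:
            self.learned.add(src_mac)  # still room: learn the new address
            return True
        self.violations += 1  # limit reached: count a violation and drop
        return False


port = SecurePort(max_macs=2)

# A flooding tool sends frames from many spoofed source addresses.
flood = [f"02:00:00:00:00:{i:02x}" for i in range(10)]
accepted = [mac for mac in flood if port.receive(mac)]

print(len(accepted), port.violations)
```

In this model the port simply drops offending frames and counts them; real switches typically let the administrator choose how a violation is handled.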

The post Mac Flooding Attack, Port Security and Deployment Considerations appeared first on Cisco Network Design and Architecture | CCDE Bootcamp | orhanergun.net.

University finds Proactive Security and PCI Compliance through NSX

The University of Pittsburgh is one of the oldest institutions in the country, dating to 1787 when it was founded as the Pittsburgh Academy. The University has produced the pioneers of the MRI and the television, winners of Nobel and Pulitzer prizes, Super Bowl and NBA champions, and best-selling authors.

As with many businesses today, the University continues to digitize its organization to keep up with the demands of over 35,000 students, 5,000 faculty, and 7,000 staff across four campuses. While the first thing that comes to mind may be core facilities such as classrooms, this also includes keeping up with the evolving technology on the business side of things, such as point-of-sale (POS) systems. When a student buys a coffee before studying or a branded sweatshirt for mom using their student ID, those transactions must be facilitated and secured by the University.

What does it mean to secure financial transactions? For one, just as with a retail store operation, the University must achieve PCI compliance to facilitate financial transactions for its customers. What does this mean? Among other tasks, PCI demands that the data used by these systems is completely isolated from other IT operations. However, locking everything down Continue reading