The Role of Security in Edge Computing

A look at what's needed to mitigate IoT security threats.

Translator's note: This post is a translation of Marek Majkowski's https://blog.cloudflare.com/syn-packet-handling-in-the-wild/.

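Here at Cloudflare, we have a lot of experience operating servers on the real-world Internet. But we never stop working on our mastery of this black art, either. On this blog we have covered several dark corners of the Internet protocols, such as understanding FIN-WAIT-2 and receive buffer tuning.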

CC BY 2.0 image by Isaí Moreno

There is one subject that people don't pay enough attention to: SYN floods. We use Linux, and we have found that SYN packet handling in Linux is very complex. In this post we'll take a closer look at it.

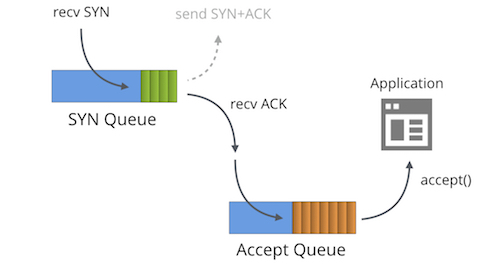

First, a socket in the "LISTENING" TCP state has two separate queues:

These queues commonly go by several other names, including "reqsk_queue", "ACK backlog", "listen backlog", and "TCP backlog", but to avoid confusion we'll stick with the names above.

The SYN queue stores inbound SYN packets[1] (specifically, struct inet_request_sock). It is responsible for sending out SYN+ACK packets and retrying them on timeout. On Linux the number of retries is configured as follows:

$ sysctl net.ipv4.tcp_synack_retries

net.ipv4.tcp_synack_retries = 5
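To get a feel for what that retry count means in wall-clock time, here is a rough sketch. It assumes an initial retransmission timeout (RTO) of 1 second that doubles after every retry, and it ignores the kernel's upper bound on the RTO, so it is only meaningful for small retry counts. The function name `synack_schedule` is ours, not a kernel or library API.

```python
def synack_schedule(retries=5, initial_rto=1.0):
    """Return (retransmit_times, final_timeout), in seconds after the first SYN+ACK.

    Assumption: the RTO starts at `initial_rto` and doubles after each
    retransmission; the kernel's maximum RTO cap is not modelled.
    """
    times = []
    elapsed = 0.0
    rto = initial_rto
    for _ in range(retries):
        elapsed += rto          # wait one RTO, then retransmit the SYN+ACK
        times.append(elapsed)
        rto *= 2                # exponential backoff
    # After the last retransmission the entry waits one more RTO before giving up.
    final_timeout = elapsed + rto
    return times, final_timeout


if __name__ == "__main__":
    print(synack_schedule())  # ([1.0, 3.0, 7.0, 15.0, 31.0], 63.0)
```

With the default of 5 retries, the last SYN+ACK goes out 31 seconds after the first one, and the entry is dropped from the SYN queue after roughly 63 seconds.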

The documentation describes it as follows:

tcp_synack_retries - INTEGER

Number of times SYNACKs for a passive TCP connection attempt will be retransmitted.

Should not be higher than 255. The default value is 5, which, with an initial RTO of 1 second, puts the last retransmission at 31 seconds …

The Linux operating system is used as the foundation for numerous network operating systems, including Arista EOS and Cumulus Linux. It provides most of the networking constructs we have grown familiar with, including interfaces, VLANs, routing tables, VRFs and contexts, but they behave slightly differently from what we’re used to.

In Software Gone Wild Episode 86 Roopa Prabhu and David Ahern explained the fundamentals of packet forwarding on Linux, and the differences between Linux and more traditional network operating systems.

How Does Internet Work - We know what is networking

I just received an e-mail from Cisco with the notice that I was elected Cisco Champion for 2018. As Cisco says: “Cisco Champions are a group of highly influential technical experts who generously enjoy sharing their knowledge, expertise, and thoughts on the social web and with Cisco. The Cisco Champion program encompasses a diverse set of areas such as Data Center, Internet of Things, Enterprise Networks, Collaboration, and Security. Cisco Champions are located all over the world.” I must say that the last 7 years of writing this blog were the primary reason why one would pick me for this flattering …

Welcome to Technology Short Take 93! Today I have another collection of data center technology links, articles, thoughts, and rants. Here’s hoping you find something useful!

Nothing this time around. Feel free to hit me up on Twitter if you have links you think I should include next time!

NVM-Express is the latest hot thing in storage, with server and storage array vendors big and small making a mad dash to bring the protocol into their products and get an advantage in what promises to be a fast-growing market.

With the rapid rise in the amount of data being generated and processed, and the growth of such technologies as artificial intelligence and machine learning in managing and processing the data, demand for faster speeds and lower latency in flash and other non-volatile memory will continue to increase in the coming years, and established companies like Dell EMC, NetApp …

A New Architecture For NVM-Express was written by Jeffrey Burt at The Next Platform.

NetYCE gives Ethan Banks an overview of their platform and shares some of the latest capabilities they’ve baked in.

The post BiB 023: netYCE Is Orchestrated Control Of Your Network appeared first on Packet Pushers.

In part 1 of our series about getting started with Linux, we learned how to download Linux, whether you should use the CLI or the GUI, how to get an SSH client, how to log in to Linux and how to get help. In this post, you’ll learn how to tell what type of Linux you are using and how to navigate the Linux file system.

Because there are so many different types of Linux, you want to be sure you know which distribution and version you are using (for the sake of searching for the right documentation on the Internet, if nothing else). Keep in mind a couple of different commands for identifying your Linux version.

The uname command shows the basic type of operating system you are using, like this:

david@debian:~$ uname -a

Linux debian 3.16.0-4-686-pae #1 SMP Debian 3.16.43-2 (2017-04-30) i686 GNU/Linux
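If you would rather collect the same details from a script, Python's standard `platform` module exposes roughly the same fields that uname prints. This is just a small sketch; the helper name `describe_system` is made up for illustration, and the exact strings you get depend on your machine.

```python
import platform


def describe_system():
    """Return a one-line summary similar in spirit to `uname -a`."""
    u = platform.uname()
    # system = kernel name, node = hostname, release/version = kernel build,
    # machine = hardware architecture
    return f"{u.system} {u.node} {u.release} {u.version} {u.machine}"


if __name__ == "__main__":
    print(describe_system())
```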

And the hostnamectl command shows you the hostname of the Linux server as well as other system information, like the machine ID, virtualization hypervisor (if used), operating system and Linux kernel version. Here’s an example:

david@debian:~$ hostnamectl

Static hostname: debian

Icon name: computer-vm

Russ White on the tragedy of BGP

Today we are excited to launch the public beta for Docker Enterprise Edition (Docker EE), our container management platform. First announced at DockerCon Europe, this release features Kubernetes integration as an optional orchestration solution, running side-by-side with Docker Swarm. With this solution, organizations will be able to deploy applications with either Swarm or fully-conformant Kubernetes while maintaining the consistent developer-to-IT workflow users have come to expect from Docker, especially when combined with the recent edge release of Docker for Mac with Kubernetes support. In addition to Kubernetes, this release includes enhancements to Swarm and to Docker Trusted Registry (DTR) which can be tested during the beta period.

Due to the high interest in this beta, license keys will be rolled out in batches over the next few weeks. Individuals who signed up for beta at www.docker.com/kubernetes will receive instructions on how to access this release and where to submit feedback. We also encourage our partners to use this time to test and validate their Docker and Kubernetes solutions against this release. Registrations will remain open throughout this beta testing period.

At DockerCon Europe, we demonstrated the management integration of Kubernetes within Docker EE. You can …

Stateful container storage models support more robust applications.

Apple is promising $10 billion for new U.S. data centers.

Nick Russo and I stopped by the Network Collective last week to talk about BGP traffic engineering—and in the process I confused BGP deterministic MED and always compare MED. I’ve embedded the video below.

Verizon’s Vestberg describes fixed 5G as just a “slice” of the company’s bigger 5G plan.

Tech Data will distribute the all-flash integrated appliances that run the software.
