Dell Technologies: SDN Driving ‘Next-Era’ Partnerships with Robots

Humans will be the ‘digital conductors.’


Every 4 years since its start back in 1989, a hacker/security conference takes place in the Netherlands. This summer, the eighth version of this conference, called Still Hacking Anyway 2017 (sha2017.org), will run between the 4th and 8th of August. The conference is not-for-profit and run by volunteers, and this year we’re expecting about 4000 visitors.

For an event like SHA, all the visitors need to connect to a network to access the Internet. A large part of the network is built on Cumulus Linux. In this article, we’ll dive into what the event is and how the network, with equipment sponsored by Cumulus, is being built.

What makes SHA 2017 especially exciting is that it is an outdoor event. All the talks are held in large tents, and they can be watched online through live streams. At the event site, visitors will organize “villages” (a group of tents) where they will work on several projects ranging from security research to developing electronics and building 3D printers.

Attendees will camp on a 40-acre field, but they won’t be off the grid, as wired and wireless networks will keep them connected. The network is designed Continue reading

The Internet Society African Regional Bureau has worked with Network Operator Groups (NOGs) in Africa, providing financial and technical support to organize trainings and events at the local level. We recently shared many of their stories. There are also a number of NOGs that seek to attract women engineers to share knowledge and experience as well as to encourage young women to take up technology-related fields – which are largely perceived in the African region as “men only.” Here are their stories.

New Relic expects to see integrations with cloud services from Azure, Google, IBM, and Pivotal.


This app-based approach could make private LTE networks more affordable.


Pulse Secure completes acquisition of Brocade assets; Riverbed releases Xirrus access point.


Legal expenses, including Cisco patent dispute, cost Arista $12 million.


Hey, it's HighScalability time:

Hands down the best ever 25,000-year-old selfie from Pech Merle cave in southern France. (The Ice Age)

Company sees growth opportunities in 5G and cloud.


Why Create a PCI Assessment Playbook

Having gone through the Payment Card Industry Data Security Standard (PCI DSS) yearly assessment process several times, I can confirm it is a fairly intensive assessment that will require a large effort from a lot of people!

During each assessment, the assessors will request evidence, review documentation, ask for sample system configurations, be onsite to interview and observe personnel, and present observations or findings that must be remediated. These assessment activities and last-minute remediation efforts can be very disruptive to all involved, and usually result in “fire drill” activities that pull personnel away from their daily tasks to react to the assessment requests.

Because the PCI assessment is very similar from year to year, some well-thought-out planning makes it possible to streamline the assessment process. Just as in football, a well-thought-out strategy in the form of a playbook helps everyone know their part and what needs to be done when. With this process in place, in the form of a PCI Assessment Playbook that everyone can follow, it is possible to greatly reduce the stress historically associated with the assessment and attaining Continue reading

The post Tier 1 carrier performance report: July, 2017 appeared first on Noction.

The post Worth Reading: Google rewires the Internet appeared first on rule 11 reader.

Excitement is building around SDS, but unfortunately, so is the confusion.


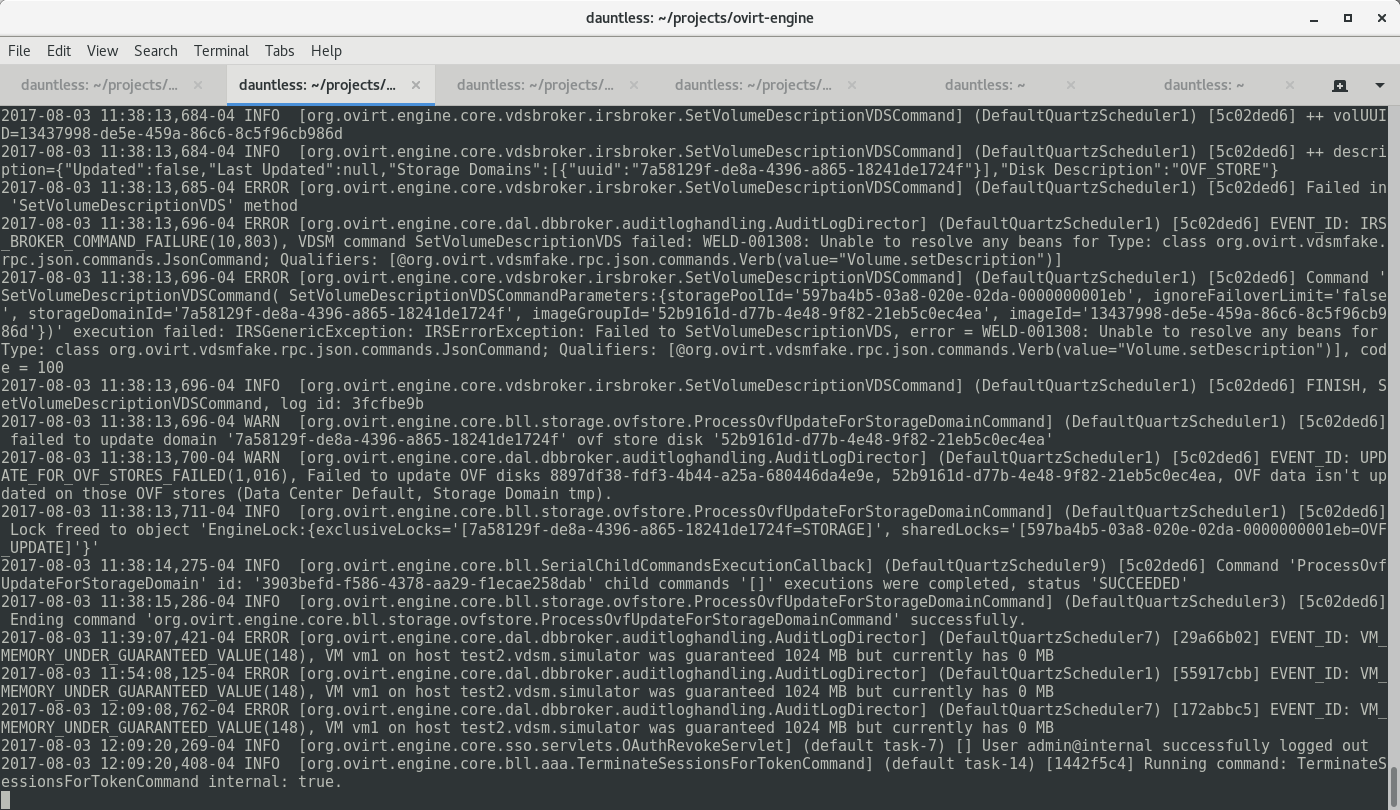

As a developer, one drawback of using Google Web Toolkit (GWT) for the oVirt Administration Portal (aka webadmin) is that the GWT compile process takes an exceptionally long time. If you make a change in some code and rebuild the ovirt-engine project using make install-dev ..., you'll be waiting several minutes to test your change. In practice, such a long delay in the usual code-compile-refresh-test cycle would be unbearable.

Luckily, we can use GWT Super Dev Mode ("SDM") to start up a quick refresh-capable instance of the application. With SDM running, you can make a change in GWT and test the refreshed change within seconds.

If you want to step through code and use the Chrome debugger, oVirt and SDM don't work well together for debugging due to the oVirt Administration Portal's code and source map size. Therefore, below we demonstrate how to disable source maps.

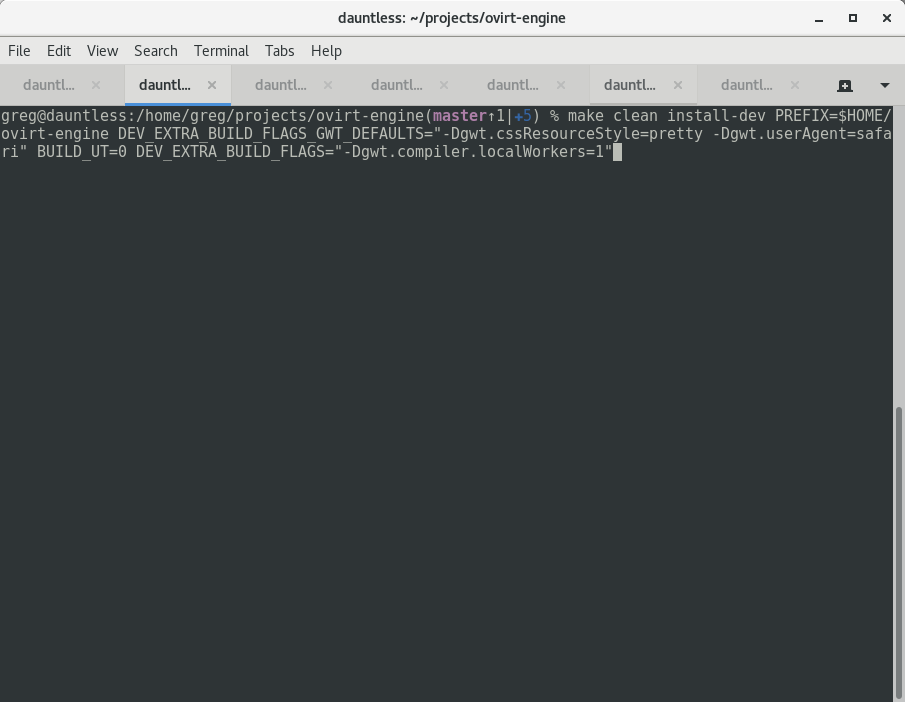

Open a terminal, build the engine normally, and start it.

``` make clean install-dev PREFIX=$HOME/ovirt-engine DEV_EXTRA_BUILD_FLAGS_GWT_DEFAULTS="-Dgwt.cssResourceStyle=pretty -Dgwt.userAgent=safari" BUILD_UT=0 DEV_EXTRA_BUILD_FLAGS="-Dgwt.compiler.localWorkers=1"

…

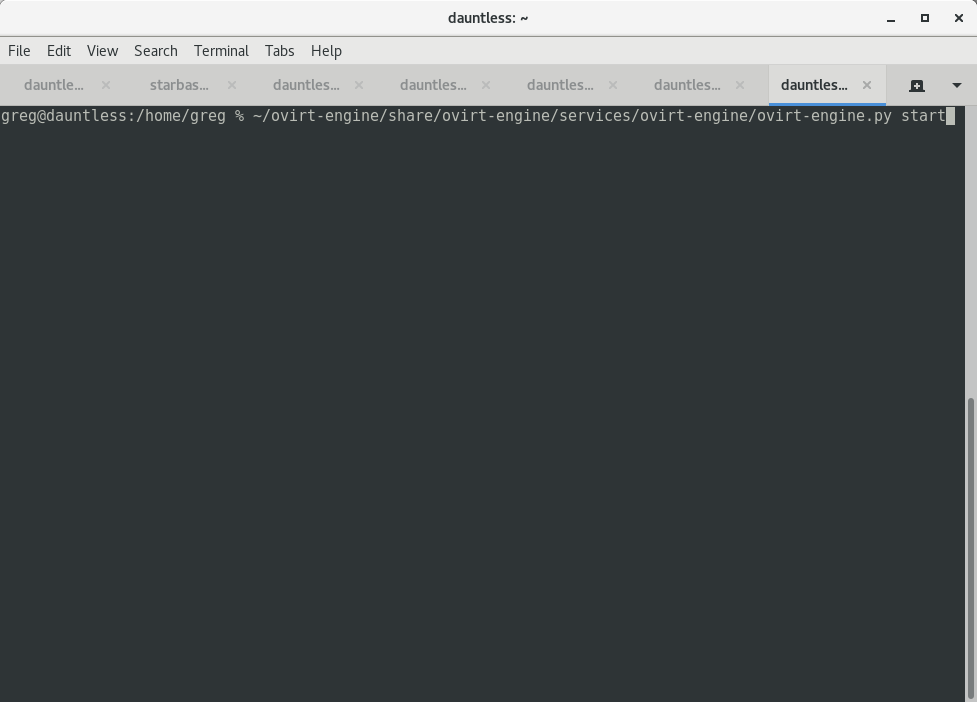

$HOME/ovirt-engine/share/ovirt-engine/services/ovirt-engine/ovirt-engine.py start

```

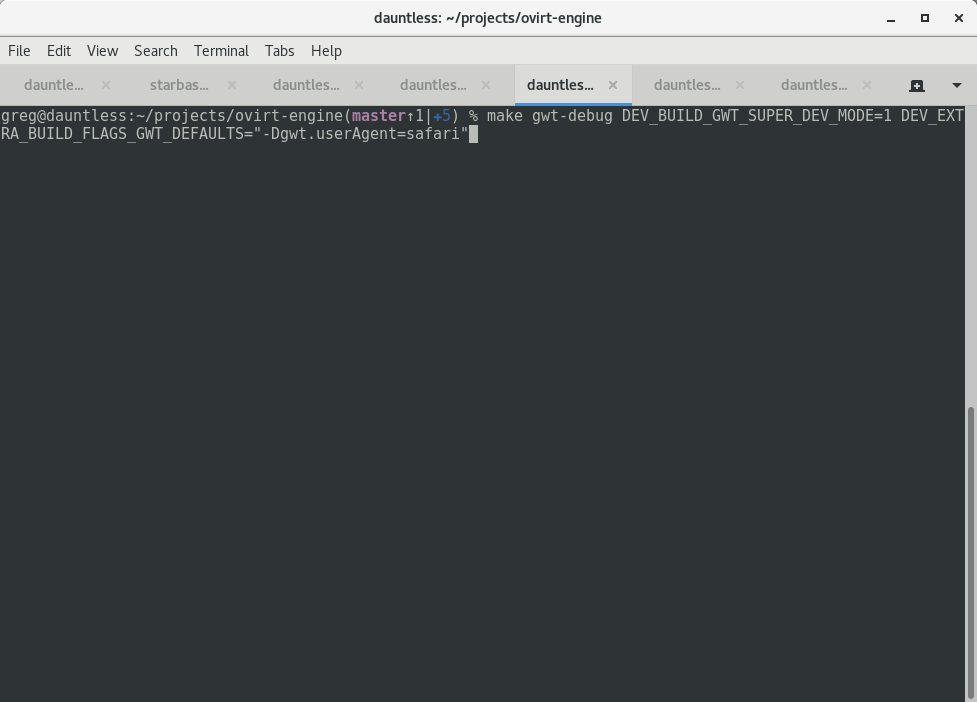

In a second terminal, run:

Chrome:

```
make gwt-debug DEV_BUILD_GWT_SUPER_DEV_MODE=1 DEV_EXTRA_BUILD_FLAGS_GWT_DEFAULTS="-Dgwt.userAgent=safari"
```

or

Firefox:

```
make gwt-debug DEV_BUILD_GWT_SUPER_DEV_MODE=1 DEV_EXTRA_BUILD_FLAGS_GWT_DEFAULTS="-Dgwt.userAgent=gecko1_8"
```
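The two commands differ only in the `gwt.userAgent` value, so a small wrapper can pick it from the browser name. This is only a sketch: the function names `gwt_user_agent` and `sdm_command` are hypothetical helpers, not part of the ovirt-engine build, and the script only prints the command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper (not part of ovirt-engine): map a browser name to the
# gwt.userAgent value used in the two commands above.
gwt_user_agent() {
    case "$1" in
        chrome)  echo "safari" ;;
        firefox) echo "gecko1_8" ;;
        *)       echo "unsupported browser: $1" >&2; return 1 ;;
    esac
}

# Hypothetical helper: print the Super Dev Mode command for a browser.
sdm_command() {
    agent=$(gwt_user_agent "$1") || return 1
    printf 'make gwt-debug DEV_BUILD_GWT_SUPER_DEV_MODE=1 DEV_EXTRA_BUILD_FLAGS_GWT_DEFAULTS="-Dgwt.userAgent=%s"\n' "$agent"
}

# Print the command for Chrome; run the printed line in the second terminal.
sdm_command chrome
```

Printing the command instead of executing it keeps the sketch safe to run outside an ovirt-engine checkout.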

Wait about two minutes Continue reading

We’re watching them (watching you).


Plenty of reasons to not use it, basically.

The post Opinion on Cisco VIRL Aug 2017 appeared first on EtherealMind.

After finishing up Mobility Field Day last week, I got a chance to reflect on a lot of the information that was shared with the delegates. Much of the work in wireless now is focused on analytics. Companies like Cape Networks and Nyansa are trying to provide a holistic look at every part of the network infrastructure to help professionals figure out why there might be issues occurring for users. And over and over again, the resounding cry that I heard was “It’s Not The Wi-Fi.”

Most of wireless is focused on the design of the physical layer. If you talk to any professional and ask them to show you their toolkit, they will likely pull out a whole array of mobile testing devices, USB network adapters, and diagramming software that would make AutoCAD jealous. All of these tools focus on the most important part of the equation for wireless professionals – the air. When the physical radio spectrum isn’t working, users will complain about it. Wireless pros leap into action with their tools to figure out where the fault is. Either that, or they are very focused on providing the right design from Continue reading

Settlement-Free Peering Requirements. I explained peering and the types of peering in one of the previous posts. When we talk about peering, we generally mean settlement-free peering. But what are the common requirements for companies to peer with each other? Settlement-free peering is also called Internet peering. So, companies set up […]

The post Settlement-Free Peering Requirements appeared first on Cisco Network Design and Architecture | CCDE Bootcamp | orhanergun.net.