Using DCNM to Automate Cisco FabricPath Operations

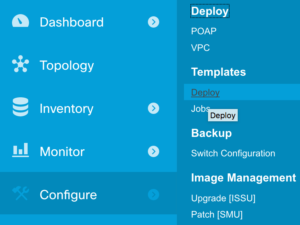

In my final post on Cisco’s Data Center Network Manager (DCNM), I’m taking a look at the deployment capabilities and templating features that allow configuration to be pushed automatically to multiple devices. In the simplest case, this might be used to set a new local username and password on every device in the fabric, but in theory it can be used for much more.

DCNM Scripting

Let’s get one thing straight right out of the gate: this ain’t no Jinja2 templating system. While DCNM’s templates support variables and some basic loop and conditional structures, the syntax is fairly limited, and the only real-time interaction with the device while a template executes is a variable containing the output of the last command issued. There are also very few system variables to tell you what’s going on. For example, I couldn’t find a variable containing the name of the current device; I had to issue a hostname command and evaluate the response to confirm which device I was connected to. That said, with a little creativity and a lot of patience, it’s possible to develop scripts which do useful things to the fabric.
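To make the hostname workaround concrete, here is a rough sketch of the logic in Python. To be clear, this is not DCNM template syntax. It only illustrates the idea of issuing a hostname command and then inspecting the "last command output" string to decide whether you're on the device you expected. The function names, the sample hostnames, and the assumption that the output is simply the hostname on its own line (possibly with a domain suffix) are all mine, for illustration.

```python
def hostname_from_output(last_output: str) -> str:
    """Return the first non-blank line of the command output, stripped.

    Assumption: the hostname command prints the name on a line of its own,
    possibly surrounded by blank lines.
    """
    for line in last_output.splitlines():
        line = line.strip()
        if line:
            return line
    return ""


def is_expected_device(last_output: str, expected: str) -> bool:
    """Check whether the output of the last command names the expected device.

    The comparison is case-insensitive and ignores any domain suffix, so
    "leaf-101.example.com" matches "LEAF-101" (both names are hypothetical).
    """
    reported = hostname_from_output(last_output).split(".")[0].lower()
    wanted = expected.strip().split(".")[0].lower()
    # An empty response never counts as a match.
    return bool(reported) and reported == wanted


if __name__ == "__main__":
    # Simulated "last command output" values, as a template might see them.
    print(is_expected_device("\nleaf-101.example.com\n", "LEAF-101"))
    print(is_expected_device("spine-1\n", "leaf-101"))
```

In a real template, the equivalent check has to be squeezed into whatever conditional support the template language offers, which is where the patience comes in.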