Wi-Fi: The jungle of the Unlicensed

This is a follow-up on the recently published Packet Pushers Show 221 – Marriott, Wifi, + the FCC with Glenn Fleishman & Lee Badman. Let me begin by stating my role in the ecospace: I am currently overseeing the expansion of broadband into Indianfield Co-operative Campground (indianfieldcampground.com) in the town of Salem (A less populous […]

vLAG Caveats in Brocade VCS Fabric

Brocade VCS fabric has one of the most flexible multichassis link aggregation group (LAG) implementations: you can terminate member links of an individual LAG on any four switches in the VCS fabric. Using that flexibility is not always a good idea.

2015-01-23: Added a few caveats on load distribution

Docker Networking 101 – The defaults

We’ve talked about docker in a few of my more recent posts but we haven’t really tackled how docker does networking. We know that docker can expose container services through port mapping, but that brings some interesting challenges along with it.

As with anything related to networking, our first challenge is to understand the basics and, more specifically, what connectivity options we have for the devices we want to connect to the network (Docker containers). So the goal of this post is to review the Docker networking defaults. Once we know what our host connectivity options are, we can move quickly into advanced container networking.
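One of the port-mapping challenges mentioned above can be illustrated with a small model. This is a hypothetical sketch (the class and method names are not Docker APIs), and it parses only the simple `HOST:CONTAINER[/PROTO]` form of a publish spec. It shows why only one container on a given host can own a published host port and protocol, which mirrors Docker refusing to publish an already-allocated host port to a second container.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PortMapping:
    host_port: int
    container: str
    container_port: int
    proto: str = "tcp"


class HostPortTable:
    """Hypothetical model of the published ports on a single Docker host."""

    def __init__(self) -> None:
        # Keyed by (host port, protocol): each pair can be owned by one container.
        self._mappings: dict[tuple[int, str], PortMapping] = {}

    def publish(self, spec: str, container: str) -> PortMapping:
        """Publish a "HOST:CONTAINER[/PROTO]" spec (only this simple form is parsed)."""
        ports, _, proto = spec.partition("/")
        host_s, container_s = ports.split(":")
        mapping = PortMapping(int(host_s), container, int(container_s), proto or "tcp")
        key = (mapping.host_port, mapping.proto)
        if key in self._mappings:
            owner = self._mappings[key].container
            raise ValueError(f"host port {key[0]}/{key[1]} already mapped to {owner}")
        self._mappings[key] = mapping
        return mapping

    def lookup(self, host_port: int, proto: str = "tcp") -> PortMapping | None:
        """Return the mapping that receives traffic arriving on a host port, if any."""
        return self._mappings.get((host_port, proto))


if __name__ == "__main__":
    table = HostPortTable()
    table.publish("8080:80", "web1")
    try:
        # A second web container cannot reuse host port 8080/tcp.
        table.publish("8080:80", "web2")
    except ValueError as err:
        print(err)  # host port 8080/tcp already mapped to web1
```

In practice this is why a second web container usually ends up published on a different host port (for example 8081), with clients needing to know which port maps to which container.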

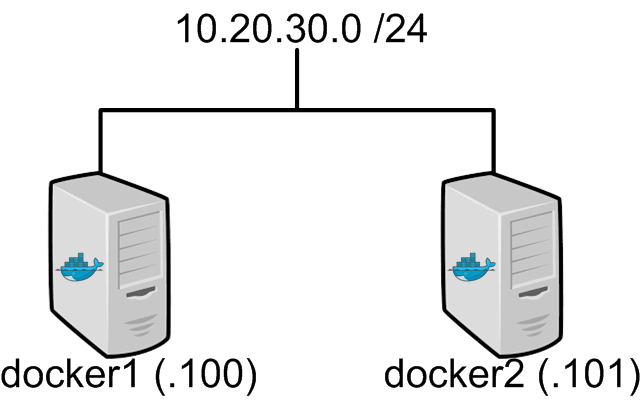

So let’s start with the basics. In this post, I’m going to be working with two docker hosts, docker1 and docker2. They sit on the network like this…

Nothing too complicated here: two basic hosts with a very basic network configuration. Let’s assume that you’ve installed Docker and are running with a default configuration. If you need installation instructions, see this. At this point, all I’ve done is configure a static IP on each host, configure a […]

Free Chapter of “The Book of GNS3”

Hey Everyone! I wanted to post a quick note about the release of a free chapter from The Book of GNS3. The Book of GNS3 is written by […]

Validation Testing Matters

A couple of weeks ago, a CLN member posted a question titled “Does ASA drop active session”.

The specific question was as follows:

I have a time based ACL configured on a Cisco ASA. I need to know if the active sessions are dropped by the ASA when the time limit is over.

For example, users are allowed to connect between 12 and 1 PM. If there are any active connections just before 1 PM then will they be dropped at 1 PM?

Many network and security administrators would blindly assume that a time-based ACL would block or allow traffic based on the time-range attached to the individual ACE. Having quite a lot of experience with the ASA, I was skeptical and assumed that any ongoing connections would continue to allow the flow of traffic. I decided to do a little testing and here is what I found.

- When viewing the ACL, I saw the time-based ACE (line in the ACL) switch from active to inactive about 60-70 seconds later than I expected

- When testing with sessionized ICMP (fixup protocol icmp, which enables ICMP inspection), ICMP echoes were blocked as soon as the ACE switched to inactive

- Testing with TCP, a connection through […]
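The ACE-level behavior being tested can be modeled in a few lines. This is a simplified, hypothetical Python model, not ASA code or configuration: it only decides whether a *new* connection matches an active ACE at a given moment. It deliberately says nothing about how already-established connections are treated (which is exactly what the test above examines), and it ignores the 60-70 second lag observed on the real device.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class TimeRange:
    """A daily periodic window such as 12:00 to 13:00 (hypothetical model)."""
    start: time
    end: time

    def is_active(self, now: datetime) -> bool:
        # Assumptions: start is inclusive, end is exclusive, and the
        # window does not cross midnight.
        return self.start <= now.time() < self.end


@dataclass(frozen=True)
class Ace:
    action: str                       # "permit" or "deny"
    dst_port: int
    time_range: TimeRange | None = None

    def is_active(self, now: datetime) -> bool:
        # An ACE without an attached time range is always active.
        return self.time_range is None or self.time_range.is_active(now)


def evaluate(acl: list[Ace], dst_port: int, now: datetime) -> str:
    """First-match evaluation of a new connection against the active ACEs only."""
    for ace in acl:
        if ace.is_active(now) and ace.dst_port == dst_port:
            return ace.action
    return "deny"  # implicit deny at the end of the ACL


if __name__ == "__main__":
    lunch = TimeRange(time(12, 0), time(13, 0))
    acl = [Ace("permit", 443, lunch)]
    print(evaluate(acl, 443, datetime(2015, 1, 1, 12, 59)))  # permit
    print(evaluate(acl, 443, datetime(2015, 1, 1, 13, 0)))   # deny
```

The open question from the forum post is whether a connection permitted at 12:59 keeps flowing after 13:00; a stateful firewall can answer that differently from this per-connection lookup, which is why the author tested it on a real ASA.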

The Importance of Seeing Your Network Topology

by Alex Hoff, originally published on the Auvik blog. As our product development adventure has unfolded over the past two years, we’ve […]

Interact with Ansible

Find Ansible on all of your favorite social networks and on the web.

LinkedIn (Group)

LinkedIn (Page)

Configuring a load balancer with VMware NSX

In the previous post, NAT was configured to allow access from external networks. Now the edge router will also act as a load balancer: connections to the edge router on destination port 2222 will be balanced across both internal VMs on port 22. Go to “Networking & Security -> NSX Edges”, […]

Configuring NAT and firewall on an NSX Edge Router

In a previous post, the edge router was connected to the external network. In this post, NAT and firewall rules will be configured to allow SSH access to VM1 from external networks. Go to “Networking & Security -> NSX Edges”, double-click the edge router and follow “Manage -> NAT”. Add a DNAT rule so […]

But Does it Work?

When I was in the USAF, a long, long time ago, there was a guy in my shop, Armand, who, no matter what we did around the shop, would ask, “But does it work?” For instance, when I was redoing the tool cabinet, with nice painted slots for each tool, he walked by: “Nice. But does it work?” I remember showing him where each tool fit, and how it would all be organized so that when the pager went off at 2 AM because the localizer was down (yet again), it would be easy to find the one tool you needed to fix the problem. He just shook his head and walked away. Later, I was working on the status board in the Group Readiness Center (the big white metal board that showed the current status of every piece of comm equipment on the base), and Armand walked by and said, “Looks nice, but does it work?” Again, I showed him how the new arrangement was better than the old one. And again Armand just shook his head and walked away. It took me a long time to “get it,” to […]

Improving ECMP Load Balancing with Flowlets

Every time I write about unequal traffic distribution across a link aggregation group (LAG, aka Etherchannel or Port Channel) or ECMP fabric, someone asks a simple question “is there no way to reshuffle the traffic to make it more balanced?”

TL;DR summary: there are ways to do it, and some vendors have already implemented them.
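The flowlet idea can be sketched as follows. The policy here is an assumption for illustration, not any particular vendor's implementation: a flowlet ends when a flow has been idle for longer than a configurable gap, and each new flowlet is placed on the path that has carried the fewest bytes so far. Real implementations differ in how they measure path load and pick the gap. Packets inside one flowlet always stay on one path, and if the gap exceeds the latency difference between paths, moving the next flowlet cannot reorder packets.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FlowletEntry:
    path: int
    last_seen: float  # timestamp of the flow's most recent packet, in seconds


class FlowletBalancer:
    """Hypothetical flowlet-based path selector for an ECMP group or LAG."""

    def __init__(self, num_paths: int, gap: float) -> None:
        self.gap = gap                        # idle time that starts a new flowlet
        self.bytes_sent = [0] * num_paths     # crude per-path load counter
        self.flows: dict[tuple, FlowletEntry] = {}

    def route(self, flow: tuple, now: float, size: int) -> int:
        entry = self.flows.get(flow)
        if entry is None or now - entry.last_seen > self.gap:
            # New flow, or the flow was idle long enough: this packet starts a
            # new flowlet, which may be moved to the least-loaded path.
            path = min(range(len(self.bytes_sent)), key=self.bytes_sent.__getitem__)
            entry = FlowletEntry(path, now)
            self.flows[flow] = entry
        else:
            # Same flowlet: keep the current path so packets stay in order.
            entry.last_seen = now
        self.bytes_sent[entry.path] += size
        return entry.path


if __name__ == "__main__":
    lb = FlowletBalancer(num_paths=2, gap=0.0005)  # 0.5 ms gap, hypothetical
    a = ("10.0.0.1", "10.0.0.2", 6, 40000, 80)      # 5-tuple of flow A
    b = ("10.0.0.3", "10.0.0.4", 6, 40001, 80)      # 5-tuple of flow B
    print(lb.route(a, 0.0000, 1500))  # 0: new flowlet, both paths empty
    print(lb.route(b, 0.0001, 1500))  # 1: path 1 is now the least loaded
    print(lb.route(a, 0.0002, 1500))  # 0: same flowlet, stays on path 0
    print(lb.route(a, 0.0100, 1500))  # 1: idle gap exceeded, flowlet moves
```

Compared with a plain 5-tuple hash, which pins flow A to one path for its whole lifetime, the flowlet table lets a long-lived flow be reshuffled at natural pauses in its traffic.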

PQ Show 42 – HP Networking – Location Aware Wireless

This sponsored podcast is another in our series of recordings made at HP Discover 2014 in Barcelona, Spain. Once again, our special thanks to Chris Young for bringing us technical guests and not just fluffy marketing folks. Technical Marketing Engineer at HP Networking Yarnin Israel and Senior Research Scientist at HP Labs Souvik Sen join Packet […]

The post PQ Show 42 – HP Networking – Location Aware Wireless appeared first on Packet Pushers Podcast and was written by Ethan Banks.

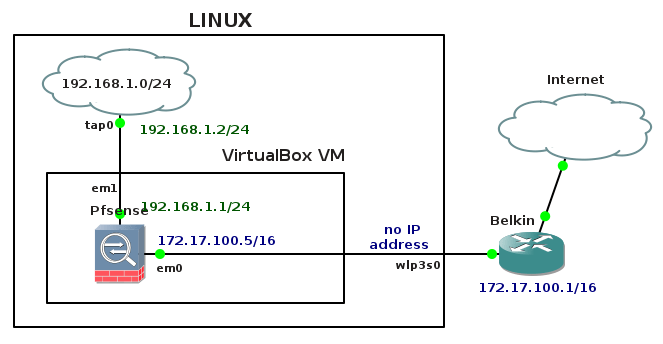

pfSense VirtualBox Appliance as Personal Firewall on Linux

The tutorial explains how to set up a pfSense VirtualBox appliance in order to use it as a personal firewall on Linux. It shows the Linux network configuration that supports this scenario and provides an installation script that automatically builds a VirtualBox virtual machine ready for pfSense installation. It also describes the pfSense installation and shows the minimal web configuration needed to connect to the Internet.

The pfSense Live CD ISO image can be downloaded from here.

1. Linux Network Configuration

We are going to install pfSense from the live CD ISO image on a VirtualBox virtual machine. To do so, we must reconfigure an existing network interface, create a new one and configure new static default routes. The network topology, consisting of a Fedora Linux host with VirtualBox installed, is shown below.

Picture 1 - Network Topology

A wireless network card is installed in the Linux host and presented as interface wlp3s0. This is the interface that connects the pfSense virtual machine to the outside world. It will be bridged with the first network adapter (em0) of the pfSense virtual machine. Bridging the host adapter wlp3s0 with the guest adapter em0 (the WAN interface of pfSense) will be done with the vboxmanage utility, as shown later in the tutorial.
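The bridging step described above can be sketched with the `modifyvm` subcommand of VBoxManage and its `--nic<N> bridged` / `--bridgeadapter<N>` options. This is a minimal illustration, not the tutorial's installation script: the VM name "pfSense" and the helper function are hypothetical, and it assumes the guest's first VirtualBox adapter (NIC 1) is the one pfSense sees as em0. The function only builds the command; running it is left to the reader.

```python
import shlex


def bridge_wan_command(vm_name: str, host_iface: str, nic: int = 1) -> list[str]:
    """Build (but do not run) a VBoxManage command that bridges guest NIC
    number `nic` to the host interface `host_iface` (hypothetical helper)."""
    return [
        "VBoxManage", "modifyvm", vm_name,
        f"--nic{nic}", "bridged",             # attach the adapter in bridged mode
        f"--bridgeadapter{nic}", host_iface,  # host interface to bridge with
    ]


if __name__ == "__main__":
    cmd = bridge_wan_command("pfSense", "wlp3s0")
    print(shlex.join(cmd))
    # To apply it, run the command while the VM is powered off, e.g.:
    # subprocess.run(cmd, check=True)
```

Building the argument list separately from executing it makes it easy to print and review the exact command before VirtualBox changes the VM configuration.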

As the pfSense appliance is […]