Boston, London, & NY developers: We can’t wait to meet you

Photo by Patrick Tomasso / Unsplash

Are you based in Boston, London, or New York? There's a lot going on this month from the London Internet Summit to Developer Week New York and additional meetups in Boston and New York. Drop by our events and connect with the Cloudflare community.

Event #1 (Boston): UX, Integrations, & Developer Experience: A Panel feat. Drift & Cloudflare

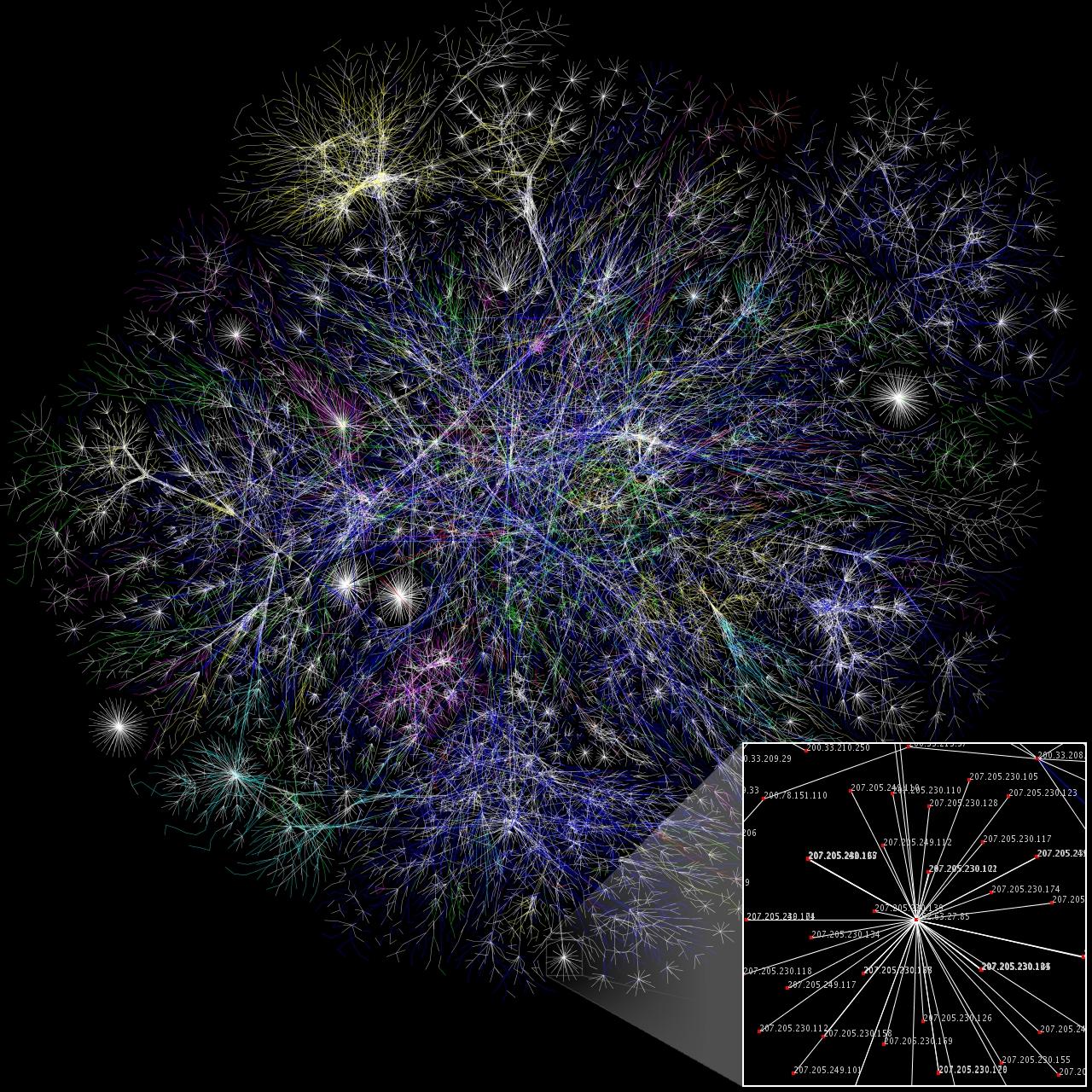

Photo by The Opte Project / Originally from the English Wikipedia; description page is/was here.

Tuesday, June 12: 6:00 pm - 8:00 pm

Location: Drift - 222 Berkeley St, 6th Floor, Boston, MA 02116

Join us at Drift HQ for a panel discussion on user experience, developer experience, and integration, featuring Elias Torres from Drift and Connor Peshek and Ollie Hsieh from Cloudflare.

The panelists will speak about their experiences developing user-facing applications, best practices they learned in the process, the integration of the Drift app and the Cloudflare Apps platform, and future platform features.

View Event Details & Register Here »

Event #2 (London): Cloudflare Internet Summit

Photo by Luca Micheli / Unsplash

Thursday, June 14: 9:00 am - 6:00 pm

Location: The Tobacco Dock - Wapping Ln, Continue reading

Introducing DNS Resolver for Tor

In case you haven’t heard yet, Cloudflare launched a privacy-first DNS resolver service on April 1st. It was no joke! The service, our first aimed at consumers, supports emerging DNS standards such as DNS over HTTPS:443 and TLS:853 in addition to traditional protocols over UDP:53 and TCP:53, all in one easy-to-remember address: 1.1.1.1.

As mentioned in the original blog post, our policy is to never, ever write client IP addresses to disk and to wipe all logs within 24 hours. Still, exceptionally privacy-conscious folks might not want to reveal their IP address to the resolver at all, and we respect that. This is why we are launching a Tor hidden service for our resolver at dns4torpnlfs2ifuz2s2yf3fc7rdmsbhm6rw75euj35pac6ap25zgqad.onion, also accessible via tor.cloudflare-dns.com.

NOTE: the hidden resolver is still an experimental service and should not be used in production or for other critical uses until it has been tested more thoroughly.
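For readers curious about the traditional UDP:53 path mentioned above, here is a minimal sketch of how a single-question DNS query is laid out on the wire (RFC 1035 format). The function name `build_dns_query` and the fixed query ID are illustrative assumptions, not part of any Cloudflare tooling.

```python
import struct


def build_dns_query(name: str, qtype: int = 1, query_id: int = 0x1234) -> bytes:
    """Encode a single-question DNS query in RFC 1035 wire format."""
    # Header: ID, flags (only the "recursion desired" bit set), QDCOUNT=1,
    # then ANCOUNT, NSCOUNT and ARCOUNT all zero.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed by its length; a zero byte ends the name.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE (1 = A record) followed by QCLASS (1 = IN).
    return header + qname + struct.pack("!HH", qtype, 1)


packet = build_dns_query("www.cloudflare.com")
```

Sending `packet` in a UDP datagram to port 53 of 1.1.1.1 would be the classic way to ask the resolver for an A record; DNS over TLS and DNS over HTTPS carry the same message inside an encrypted channel.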

Crash Course on Tor

What is Tor?

Imagine an alternative Internet where, in order to connect to www.cloudflare.com, instead of delegating the task of finding a path to our servers to your internet provider, you had to go through the following Continue reading

Cloudflare Workers Recipe Exchange

Photo of Indian Spices, by Joe mon bkk. Wikimedia Commons, CC BY-SA 4.0.

Share your Cloudflare Workers recipes with the Cloudflare Community. Developers in Cloudflare’s community each bring a unique perspective that would yield use cases our core team could never have imagined. That is why we invite you to share Workers recipes that are useful in your own work, life, or hobby.

We’ve created a new tag “Recipe Exchange” in the Workers section of the Cloudflare Community Forum. We invite you to share your work, borrow / get inspired by the work of others, and upvote useful recipes written by others in the community.

Recipe Exchange in Cloudflare Community

We will be highlighting select interesting and/or popular recipes (with author permission) in the coming months right here in this blog.

What is Cloudflare Workers, anyway?

Cloudflare Workers lets you run JavaScript in Cloudflare’s hundreds of data centers around the world. Using a Worker, you can modify your site’s HTTP requests and responses, make parallel requests, or generate responses from the edge. Cloudflare Workers has been in open beta since February 1st. Read more about the launch in this blog post.

What can you do with Continue reading

We have lift off – Rocket Loader GA is mobile!

Today we’re excited to announce the official GA of Rocket Loader, our JavaScript optimisation feature that will prioritise getting your content in front of your visitors faster than ever before with improved mobile device support. In tests on www.cloudflare.com we saw a reduction of 45% (almost 1 second) in First Contentful Paint times on our pages for visitors.

We initially launched Rocket Loader as a beta in June 2011, to asynchronously load a website’s JavaScript and dramatically improve page load time. Since then, hundreds of thousands of our customers have benefited from a one-click option to boost the speed of their content.

With this release, we’ve vastly improved and streamlined Rocket Loader so that it works in conjunction with mobile & desktop browsers to prioritise what matters most when loading a webpage: your content.

Visitors don’t wait for page “load”

To put it very simplistically - load time is a measure of when the browser has finished loading the document (HTML) and all assets referenced by that document.

When you clicked to visit this blog post, did you wait for the spinning wheel on your browser tab to stop before you started reading this content? You Continue reading

Today we mitigated 1.1.1.1

On May 31, 2018 we had a 17 minute outage on our 1.1.1.1 resolver service; this was our doing and not the result of an attack.

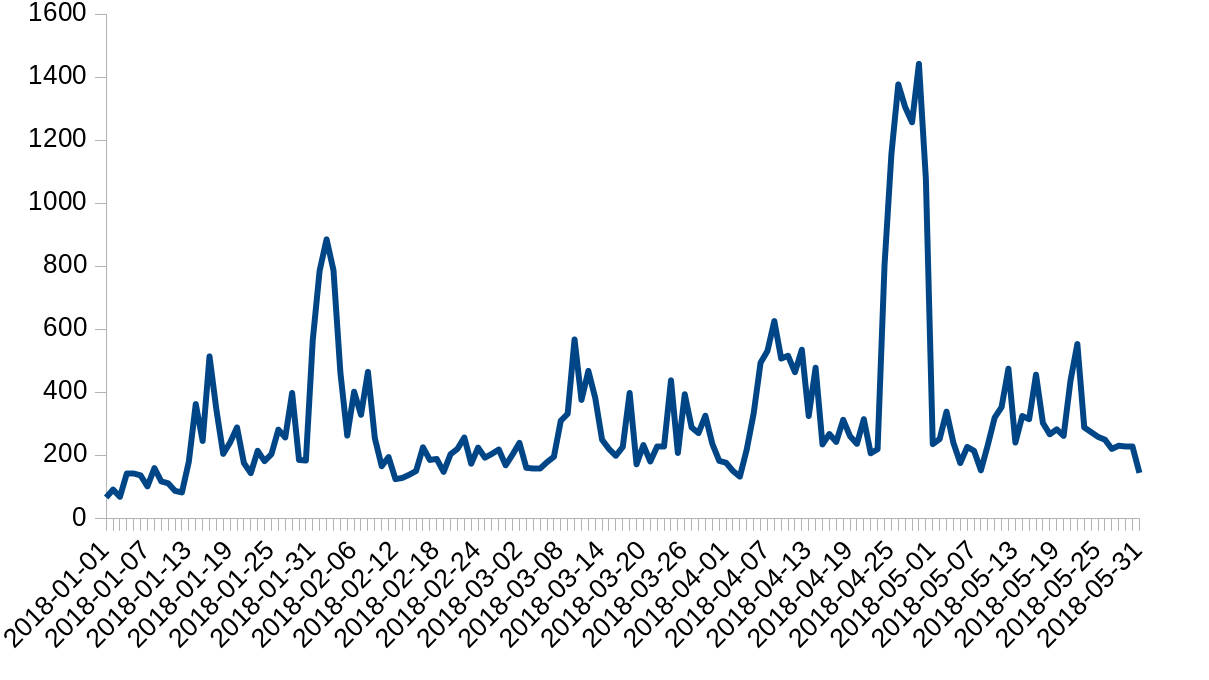

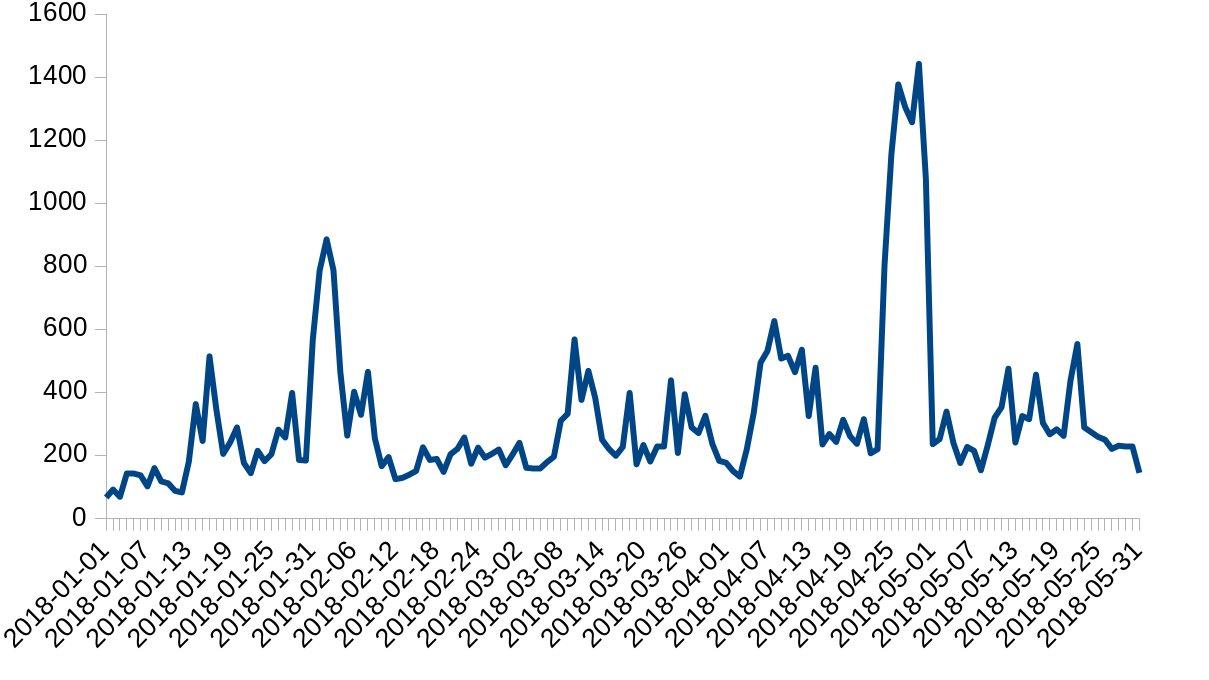

Cloudflare is protected from attacks by the Gatebot DDoS mitigation pipeline. Gatebot performs hundreds of mitigations a day, shielding our infrastructure and our customers from L3/L4 and L7 attacks. Here is a chart of a count of daily Gatebot actions this year:

In the past, we have blogged about our systems:

Today, things didn't go as planned.

Gatebot

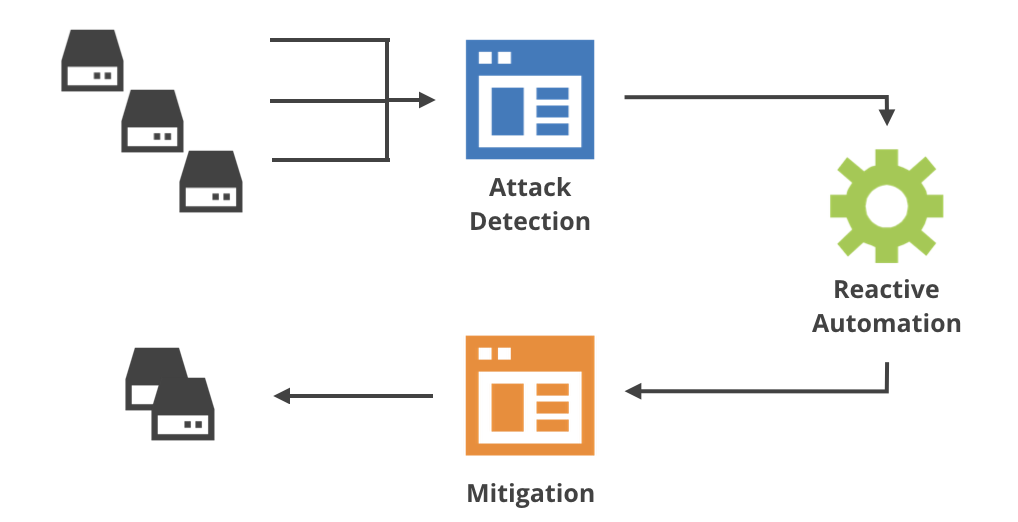

Cloudflare’s network is large, handles many different types of traffic and mitigates different types of known and not-yet-seen attacks. The Gatebot pipeline manages this complexity in three separate stages:

- attack detection - collects live traffic measurements across the globe and detects attacks

- reactive automation - chooses appropriate mitigations

- mitigations - executes mitigation logic on the edge

The benign-sounding "reactive automation" part is actually the most complicated stage in the pipeline. We expected that from the start, which is why we implemented this stage using a custom Functional Reactive Programming (FRP) framework. If you want to know more about it, see the talk and the presentation.

APNIC Labs/CloudFlare DNS 1.1.1.1 Outage: Hijack or Mistake?

At 08:09:45 UTC on 29-05-2018, BGPMon (a well-known BGP monitoring system that detects prefix hijacks, route leaks, and instability) detected a possible BGP hijack of the 1.1.1.0/24 prefix. Cloudflare Inc has been announcing this prefix from AS13335 since 1st April 2018, after signing an initial 5-year research agreement with APNIC Research and Development (Labs) to offer DNS services.

Shanghai Anchang Network Security Technology Co., Ltd. (AS58879) started announcing 1.1.1.0/24, which is normally announced by Cloudflare (AS13335), at 08:09:45 UTC. The possible hijack lasted less than 2 minutes: the last announcement of 1.1.1.0/24 was made at 08:10:27 UTC. The BGPlay screenshot of 1.1.1.0/24 is given below:

Anchang Network (AS58879) peers with China Telecom (AS4809), PCCW Global (AS3491), Cogent Communications (AS174), NTT America, Inc. (AS2914), LG DACOM Corporation (AS3786), KINX (AS9286) and Hurricane Electric LLC (AS6939). Unfortunately, Hurricane Electric (AS6939) allowed the announcement of 1.1.1.0/24 originating from Anchang Network (AS58879). Apparently, all other peers blocked this announcement. NTT (AS2914) and Cogent (AS174) are also MANRS Participants and actively filter prefixes.
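The origin check that a monitor or a filtering peer performs can be sketched in a few lines. This is a simplified illustration, not how BGPMon or any router implements it; the table `EXPECTED_ORIGIN` and both function names are assumptions made up for this example, while the AS numbers and timestamps come from the text above.

```python
from datetime import datetime

# Expected origin AS for the prefix, per the text (Cloudflare, AS13335).
EXPECTED_ORIGIN = {"1.1.1.0/24": 13335}


def is_unexpected_origin(prefix: str, origin_as: int) -> bool:
    """Return True when a known prefix is announced by an unexpected AS."""
    expected = EXPECTED_ORIGIN.get(prefix)
    return expected is not None and origin_as != expected


def seconds_between(start: str, end: str) -> int:
    """Return the number of seconds between two HH:MM:SS times on the same day."""
    fmt = "%H:%M:%S"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return int(delta.total_seconds())


# The announcement from AS58879 does not match the expected origin.
flagged = is_unexpected_origin("1.1.1.0/24", 58879)
# Time between the first and last announcement reported above.
duration = seconds_between("08:09:45", "08:10:27")
```

With the timestamps reported above, `duration` works out to 42 seconds, consistent with the "less than 2 minutes" figure.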

Dan Goodin (Security Editor at Ars Technica, who extensively covers malware, computer espionage, botnets, and hardware hacking) reached Continue reading

Introducing: The Cloudflare All-Stars Fantasy League

Baseball season is well underway, and to celebrate, we're excited to introduce the Cloudflare All-Stars Fantasy League: a group of fictitious sports teams that revolve around some of Cloudflare’s most championed products and services. Their mission? To help build a better Internet.

Cloudflare HQ is located just a block away from the San Francisco Giants Stadium. Each time there's a home game, crowds of people walk past Cloudflare's large 2nd Street windows and peer into the office space. The looks in their eyes scream: "Cloudflare! Teach me about your products while giving me something visually stimulating to look at!"

They asked. We listened.

The design team saw a creative opportunity, seized it, and hit it out of the park. Inspired by the highly stylized sports badges and emblems of some real-life sports teams, we applied this visual style to our own team badges. We had a lot of fun coming up with the team names, as well as figuring out which visuals to use for each.

For the next few months, the Cloudflare All-Stars teams will be showcased within the large Cloudflare HQ windows facing 2nd Street and en route to Giants Stadium. Feel free to Continue reading

Rate Limiting: Delivering more rules, and greater control

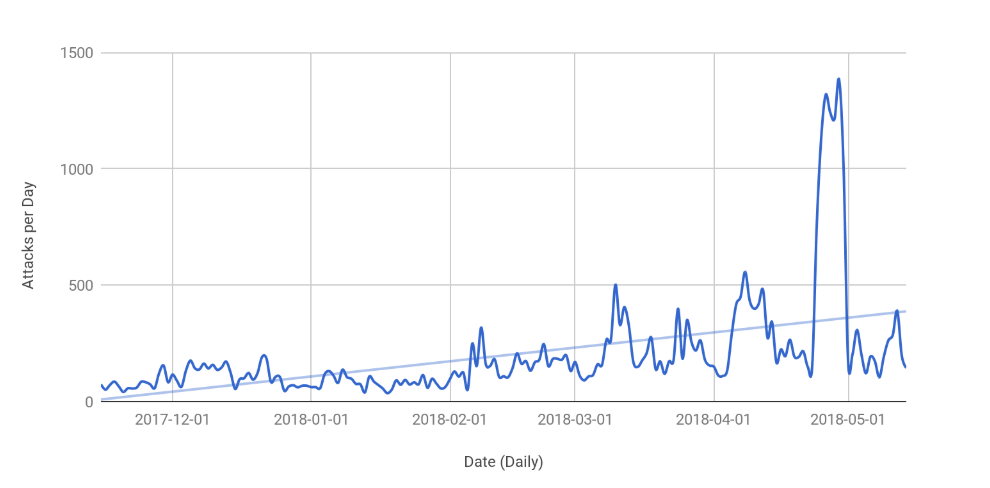

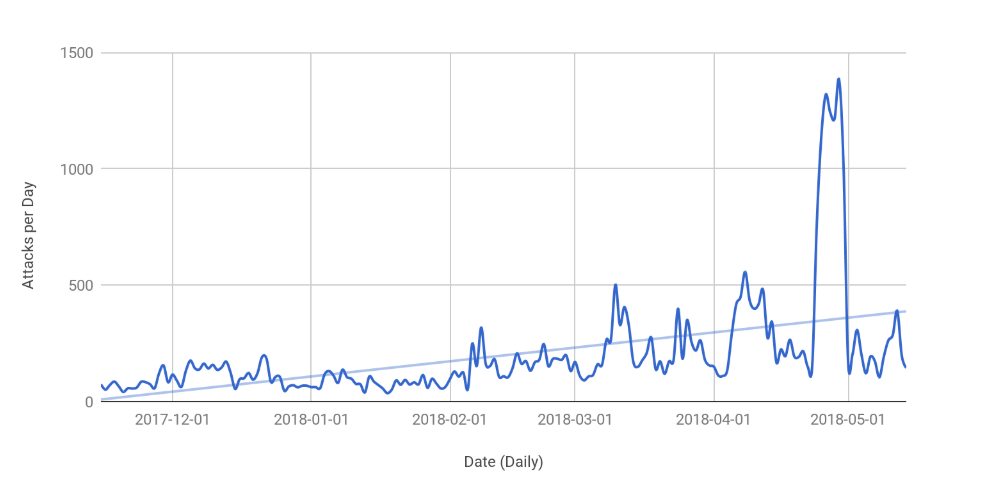

With more and more platforms taking the necessary precautions against DDoS attacks, such as integrating DDoS mitigation services and increasing bandwidth at weak points, Layer 3 and 4 attacks are just not as effective anymore. At Cloudflare, we have fully automated Layer 3/4 protections with our internal platform, Gatebot. In the last 6 months we have seen a large upward trend in Layer 7 DDoS attacks. The key difference is that these attacks no longer rely on huge payloads (volumetric attacks), but on a high rate of requests per second to exhaust server resources (CPU, disk, and memory). On a regular basis we see attacks that exceed 1 million requests per second. The graph below shows the number of Layer 7 attacks Cloudflare has monitored, which is trending up. On average we see around 160 attacks a day, with some days spiking to over 1,000 attacks.

A year ago, Cloudflare released Rate Limiting and it is proving to be a hugely effective tool for customers to protect their web applications and APIs from all sorts of attacks, from “low and slow” DDoS attacks, through to bot-based attacks, such as credential stuffing and content scraping. We’re pleased about the Continue reading
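To make the requests-per-second framing concrete, here is a minimal sketch of a fixed-window rate limiter. It is a generic textbook technique, not Cloudflare's Rate Limiting implementation; the class name, its parameters, and the example client address are all assumptions chosen for illustration.

```python
from collections import defaultdict


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per client in each fixed time window."""

    def __init__(self, limit: int, window_seconds: int):
        self.limit = limit
        self.window_seconds = window_seconds
        # Maps (client, window index) to the number of requests seen.
        # Old windows are never evicted here; a real system would expire them.
        self._counts = defaultdict(int)

    def allow(self, client: str, now: float) -> bool:
        """Record a request at time `now` and report whether it is within the limit."""
        window = int(now // self.window_seconds)
        key = (client, window)
        self._counts[key] += 1
        return self._counts[key] <= self.limit


limiter = FixedWindowRateLimiter(limit=3, window_seconds=1)
# Four requests inside the first second, then one in the next second.
results = [limiter.allow("203.0.113.7", t) for t in (0.0, 0.1, 0.2, 0.3, 1.0)]
```

Here the fourth request inside the first second is rejected, and the counter starts afresh once the next window begins. Counting per client rather than globally is what lets this approach single out a high-rate source without affecting other visitors.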

Why I’m Joining Cloudflare

I love working as a Chief Security Officer because every day centers around building something that makes people safer. Back in 2002, as I considered leaving my role as a cybercrime federal prosecutor to work in tech on e-commerce trust and safety, a mentor told me, “You have two rewarding but very different paths: you can prosecute one bad actor at a time, or you can try to build solutions that take away many bad actors' ability to do harm at all.” And while each is rewarding in its own way, my best days are those where I get to see harm prevented—at Internet scale.

In 2016, while traveling the United States to conduct hearings on the condition of Internet security as a member of President Obama's cyber commission, my co-commissioners noticed I had fallen into a pattern of asking the same question of every panelist: “Who is responsible for building a safer online environment where small businesses can set up shop without fear?” We heard many answers that all led to the same “not a through street” conclusion: Most law enforcement agencies extend their jurisdiction online, but there are no digital equivalents to the Department of Continue reading

You get TLS 1.3! You get TLS 1.3! Everyone gets TLS 1.3!

It's no secret that Cloudflare has been a big proponent of TLS 1.3, the newest edition of the TLS protocol, which improves both speed and security; we have made it available to our customers since 2016. However, for the longest time TLS 1.3 was a work in progress, which meant that the feature was disabled by default in our customers’ dashboards, at least until all the kinks in the protocol could be resolved.

With the specification finally nearing its official publication, and after several years of work (as well as 28 draft versions), we are happy to announce that the TLS 1.3 feature on Cloudflare is out of beta and will be enabled by default for all new zones.

For our Free and Pro customers not much changes: they already had TLS 1.3 enabled by default from the start. We have also decided to disable the 0-RTT feature by default for these plans (it was previously enabled by default as well), due to its inherent security properties. It will still be possible to explicitly enable it from the dashboard or the API (more on 0-RTT soon-ish in another blog post).

Our Business and Continue reading

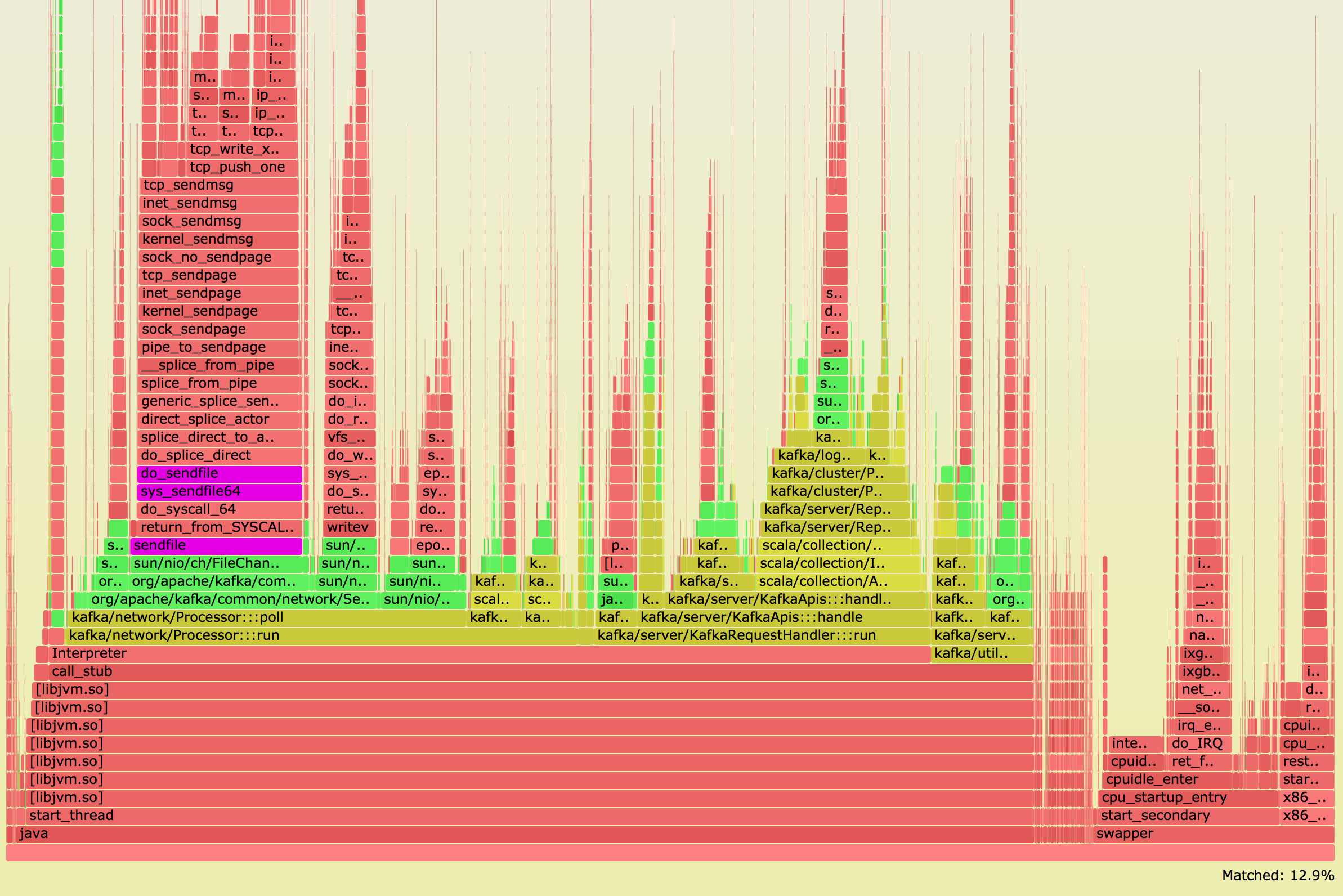

Tracing System CPU on Debian Stretch

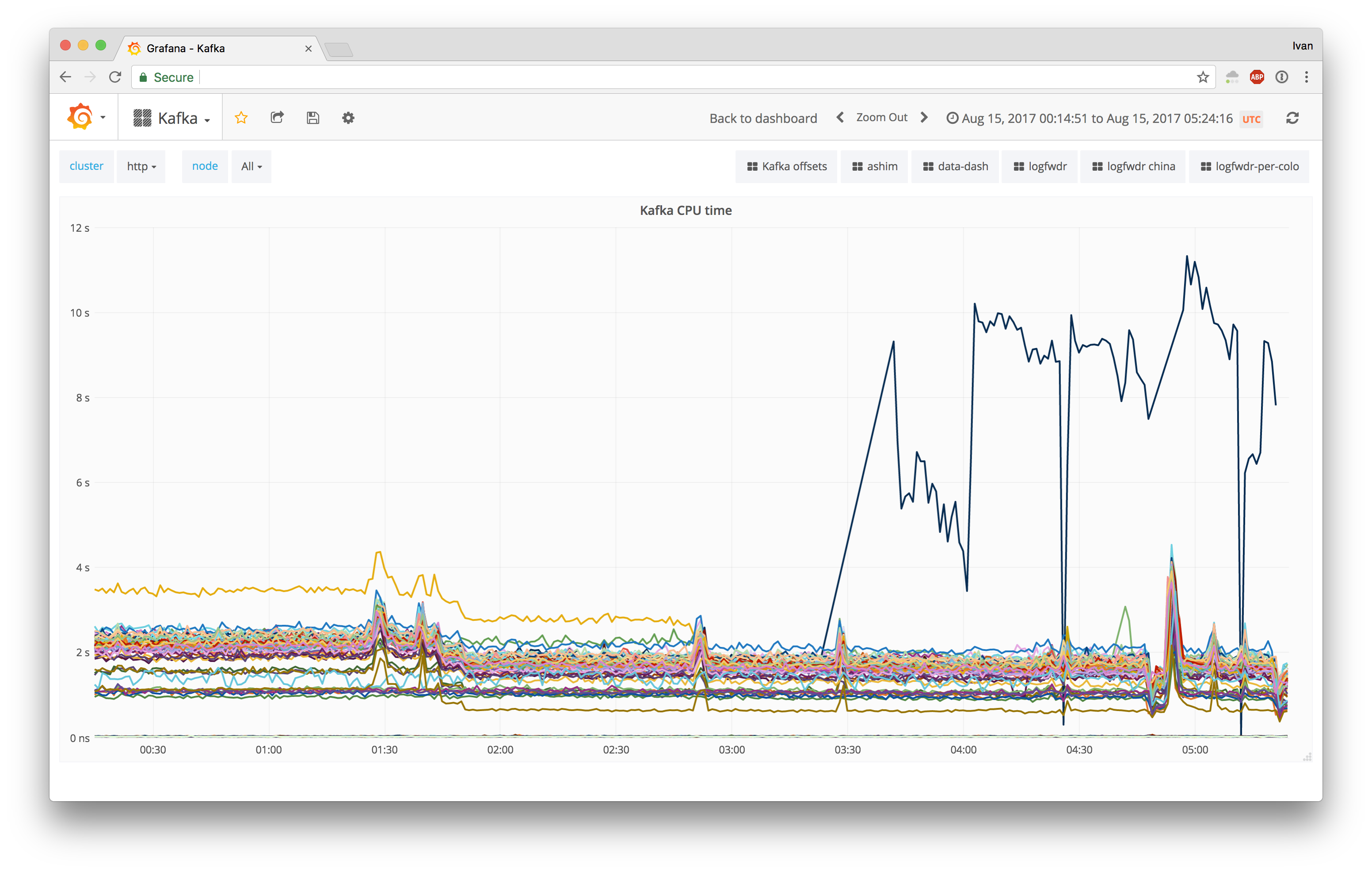

This is a heavily truncated version of an internal blog post from August 2017. For more recent updates on Kafka, check out another blog post on compression, where we optimized throughput 4.5x for both disks and network.

Photo by Alex Povolyashko / Unsplash

Upgrading our systems to Debian Stretch

For quite some time we've been rolling out Debian Stretch, to the point where we have reached ~10% adoption in our core datacenters. As part of upgrading the underlying OS, we also evaluate the higher level software stack, e.g. taking a look at our ClickHouse and Kafka clusters.

During our upgrade of Kafka, we successfully migrated two smaller clusters, logs and dns, but ran into issues when attempting to upgrade one of our larger clusters, http.

Thankfully, we were able to roll back the http cluster upgrade relatively easily, due to heavy versioning of both the OS and the higher level software stack. If there's one takeaway from this blog post, it's to take advantage of consistent versioning.

High level differences

We upgraded one Kafka http node, and it did not go as planned:

Having 5x CPU usage was definitely an unexpected outcome. For control datapoints, we Continue reading

Project Jengo Celebrates One Year Anniversary by Releasing Prior Art

Today marks the one year anniversary of Project Jengo, a crowdsourced search for prior art that Cloudflare created and funded in response to the actions of Blackbird Technologies, a notorious patent troll. Blackbird has filed more than one hundred lawsuits asserting dormant patents without engaging in any innovative or commercial activities of its own. In homage to the typical anniversary cliché, we are taking this opportunity to reflect on the last year and confirm that we’re still going strong.

Project Jengo arose from a sense of immense frustration over the way that patent trolls purchase over-broad patents and use aggressive litigation tactics to elicit painful settlements from companies. These trolls know that the system is slanted in their favor, and we wanted to change that. Patent lawsuits take years to reach trial and cost an inordinate sum to defend. Knowing this, trolls just sit back and wait for companies to settle. Instead of perpetuating this cycle, Cloudflare decided to bring the community together and fight back.

After Blackbird filed a lawsuit against Cloudflare alleging infringement of a vague and overly-broad patent (‘335 Patent), we launched Project Jengo, which offered a reward to people who submitted prior art that could Continue reading

The Rise of Edge Compute: The Video

At the end of March, Kenton Varda, tech lead and architect for Cloudflare Workers, traveled to London and led a talk about the Rise of Edge Compute where he laid out our vision for the future of the Internet as a platform.

Several of those who were unable to attend on-site asked for us to produce a recording. Well, we've completed the audio edits, so here it is!

Visit the Workers category on Cloudflare's community forum to learn more about Workers and share questions, answers, and ideas with other developers.

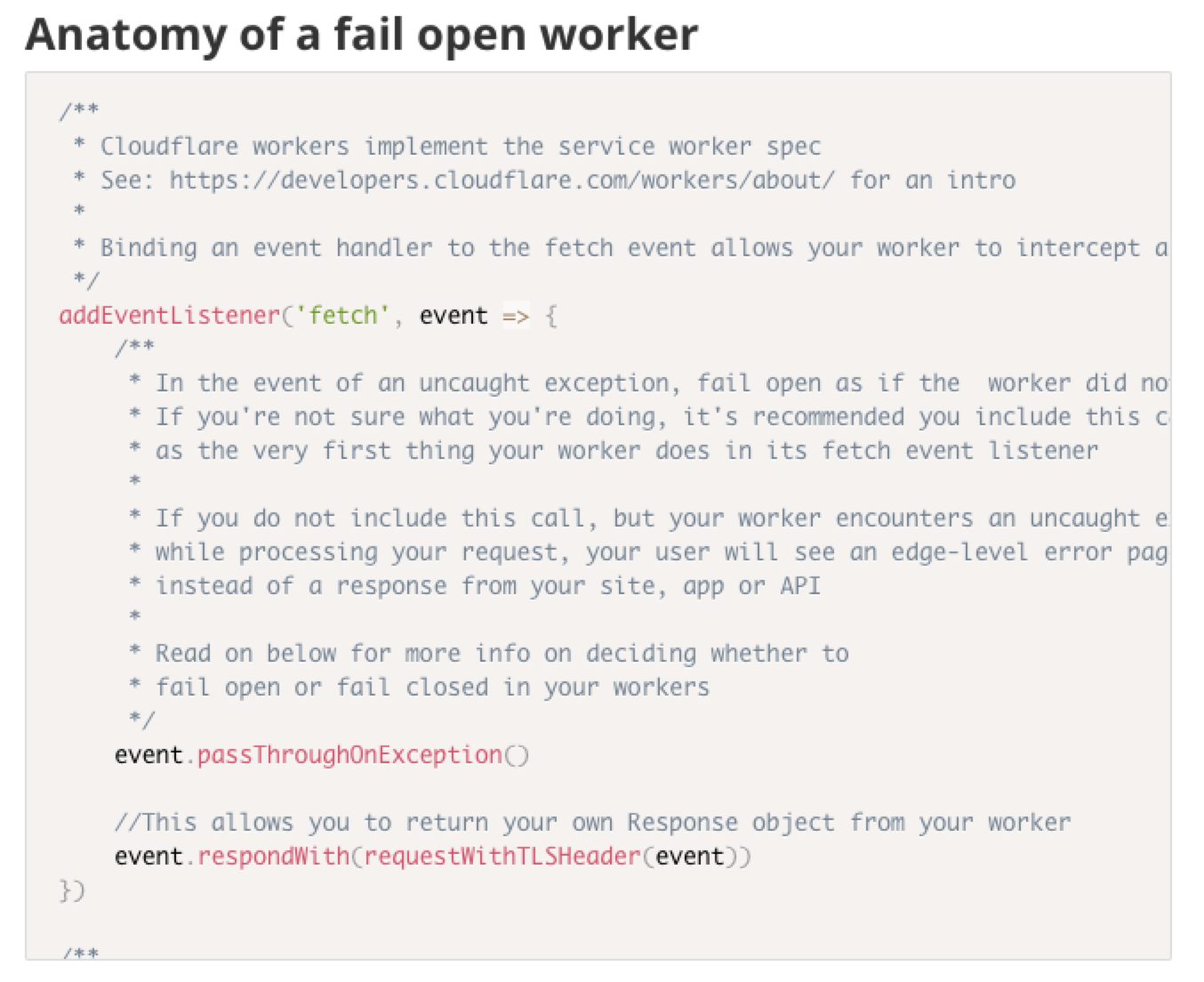

Dogfooding Cloudflare Workers

On the WWW team, we’re responsible for Cloudflare’s REST APIs, account management services and the dashboard experience. We take security and PCI compliance seriously, which means we move quickly to stay up to date with regulations and relevant laws.

A recent compliance project required detecting certain end user request data at the edge and reacting to it both in API responses and visually in the dashboard. We realized that this was an excellent opportunity to dogfood Cloudflare Workers.

Deploying workers to www.cloudflare.com and api.cloudflare.com

In this blog post, we’ll break down the problem we solved using a single worker that we shipped to multiple hosts, share the annotated source code of our worker, and share some best practices and tips and tricks we discovered along the way.

Since being deployed, our worker has served over 400 million requests for both calls to api.cloudflare.com and the www.cloudflare.com dashboard.

The task

First, we needed to detect when a client was connecting to our services using an outdated TLS protocol. Next, we wanted to pass this information deeper into our application stack so that we could act upon it and Continue reading
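The first two steps of the task can be sketched as plain logic. This is only an illustration written in Python, not the worker's source (a real Worker is JavaScript). The set of versions treated as outdated, the header name `X-Outdated-TLS`, and the idea of using a request header to carry the flag are all assumptions made for this example.

```python
# Assumption: which protocol versions count as "outdated" is a policy choice;
# TLS 1.0 and 1.1 are used here purely as an example.
OUTDATED_TLS_VERSIONS = {"TLSv1", "TLSv1.1"}


def annotate_request_headers(headers: dict, tls_version: str) -> dict:
    """Return a copy of `headers` with a flag saying whether the TLS version is outdated.

    The header name is hypothetical; it stands in for whatever mechanism
    passes the information deeper into the application stack.
    """
    annotated = dict(headers)
    is_outdated = tls_version in OUTDATED_TLS_VERSIONS
    annotated["X-Outdated-TLS"] = "true" if is_outdated else "false"
    return annotated


old_client = annotate_request_headers({"Host": "api.cloudflare.com"}, "TLSv1")
new_client = annotate_request_headers({"Host": "api.cloudflare.com"}, "TLSv1.2")
```

Returning a copy rather than mutating the input keeps the original request untouched, so the same detection step could be reused for both hosts.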

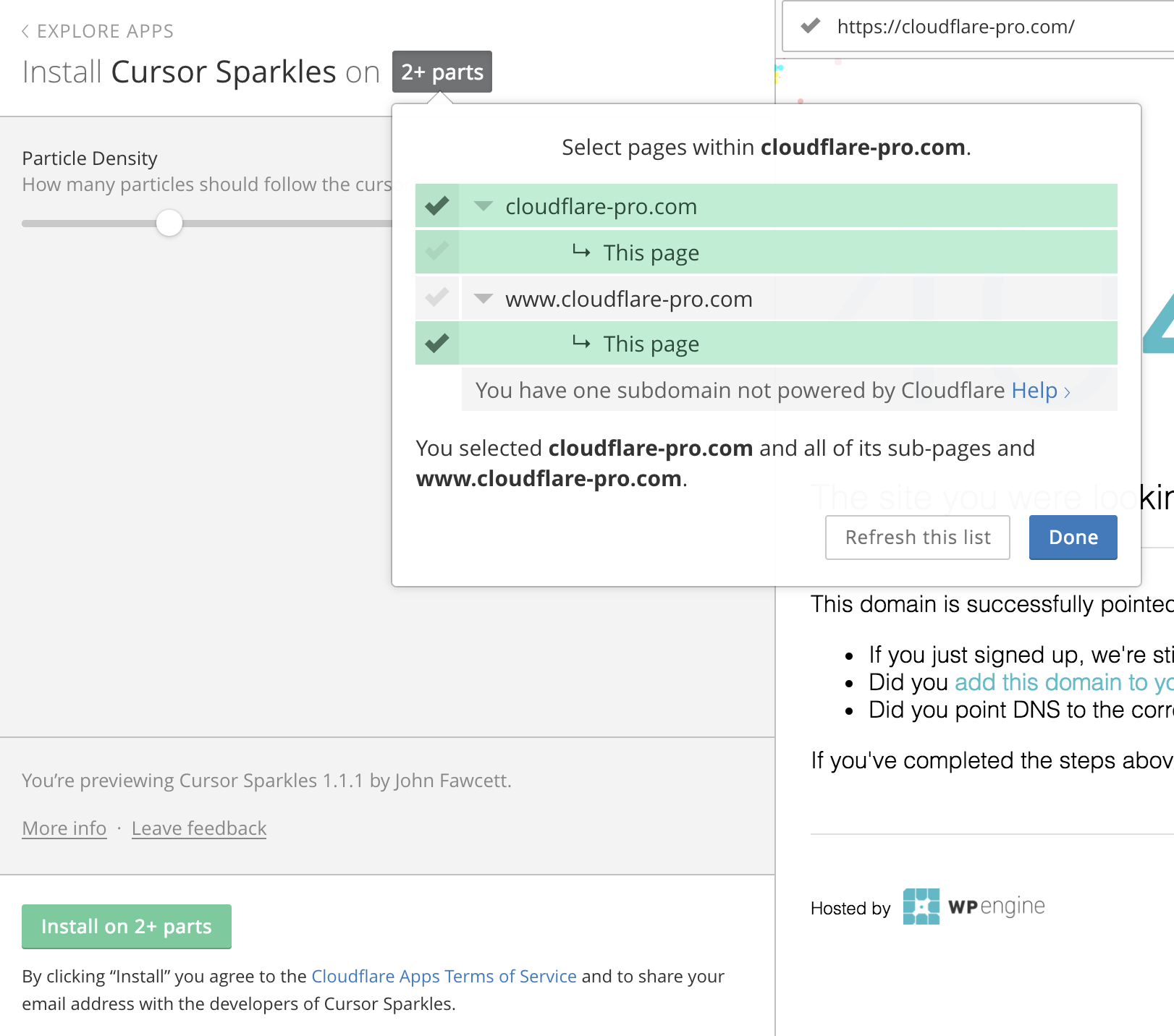

Custom Page Selection for Cloudflare Apps

In July 2016, Cloudflare integrated with Eager - an apps platform. During this integration, several decisions were made to ensure an optimal experience installing apps. We wanted to make sure site owners on Cloudflare could customize and install an app with the fewest clicks possible. Customizability often adds complexity and clicks for the user. We’ve been tinkering to find the right balance of user control and simplicity since.

When installing an app, a site owner must select where (that is, on which URLs of their site) they want each app installed. Our original plan for selecting those URLs took a few twists and turns. In the end, we decided to use our Always Online crawler to pre-populate a tree of the user’s site. Always Online is a feature that crawls Cloudflare sites and serves pages from our cache if the site goes down.

The benefits to this original setup are:

1. Only valid pages appear

An app only allows installations on HTML pages. For example, since injecting JavaScript into a JPEG image isn’t possible, we would prevent the installer from trying it by not showing that path. Preventing the user from that type of Continue reading
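As a rough illustration of the idea, a crawled URL list can be folded into a nested tree while skipping paths that are clearly not HTML. This is a sketch under stated assumptions, not the Always Online crawler or the Apps installer: the function name, the extension list, and the example URLs are invented, and a real system would more likely check the response content type than the file extension.

```python
from urllib.parse import urlparse

# Assumption: file extensions used here as a stand-in for "not an HTML page".
NON_HTML_EXTENSIONS = (".jpg", ".jpeg", ".png", ".gif", ".css", ".js")


def build_site_tree(urls):
    """Build a nested dict of path segments from crawled URLs, HTML pages only."""
    tree = {}
    for url in urls:
        path = urlparse(url).path
        if path.lower().endswith(NON_HTML_EXTENSIONS):
            continue  # Skip assets an app could never be installed on.
        node = tree
        for segment in filter(None, path.split("/")):
            node = node.setdefault(segment, {})
    return tree


tree = build_site_tree([
    "https://example.com/",
    "https://example.com/blog/post-1",
    "https://example.com/blog/post-2",
    "https://example.com/images/logo.jpeg",
])
```

The resulting tree contains a `blog` node with two children and no `images` branch, which mirrors the "only valid pages appear" benefit described above.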

Sharing more Details, not more Data: Our new Privacy Policy and Data Protection Plans

After an exhilarating first month as Cloudflare’s first Data Protection Officer (DPO), I’m excited to announce that today we are launching a new Privacy Policy. Our new policy explains the kind of information we collect, from whom we collect it, and how we use it in a more transparent way. We also provide clearer instructions for how you, our users, can exercise your data subject rights. Importantly, nothing in our privacy policy changes the level of privacy protection for your information.

Our new policy is a key milestone in our GDPR readiness journey, and it goes into effect on May 25 — the same day as the GDPR. (You can learn more about the European Union’s General Data Protection Regulation here.) But our GDPR journey doesn’t end on May 25.

Over the coming months, we’ll be following GDPR-related developments, providing you periodic updates about what we learn, and adapting our approach as needed. And I’ll continue to focus on GDPR compliance efforts, including coordinating our responses to data subject requests for information about how their data is being handled, evaluating the privacy impact of new products and services on our users’ personal data, and working with customers who want Continue reading

Expanding Multi-User Access on dash.cloudflare.com

One of the most common feature requests we get is to allow customers to share account access. This has been supported at our Enterprise level of service, but is now being extended to all customers. Starting today, users can go to the new home of Cloudflare’s Dashboard at dash.cloudflare.com. Upon login, users will see the redesigned account experience. Now users can manage all of their account level settings and features in a more streamlined UI.

CC BY 2.0 image by Mike Lawrence

All customers now have the ability to invite others to manage their account as Administrators. They can do this from the ‘Members’ tab in the new Account area on the Cloudflare dashboard. Invited Administrators have full control over the account except for managing members and changing billing information.

For customers who belong to multiple accounts (previously known as organizations), the first thing they will see is an account selector. This allows easy searching and selection between accounts. Additionally, there is a zone selector for searching through zones across all accounts. Enterprise customers still have access to the same roles as before with the addition of the Administrator and Billing Roles.

The New Dashboard @ dash. Continue reading

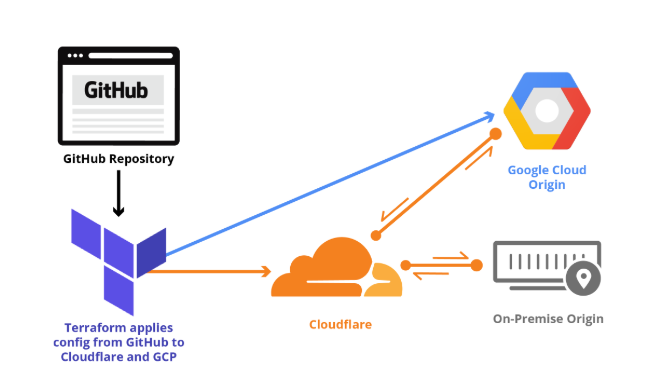

Getting started with Terraform and Cloudflare (Part 2 of 2)

In Part 1 of Getting Started with Terraform, we explained how Terraform lets developers store Cloudflare configuration in their own source code repository, institute change management processes that include code review, track their configuration versions and history over time, and easily roll back changes as needed.

We covered installing Terraform, provider initialization, storing configuration in git, applying zone settings, and managing rate limits. This post continues the Cloudflare Terraform provider walkthrough with examples of load balancing, page rules, reviewing and rolling back configuration, and importing state.

Reviewing the current configuration

Before we build on Part 1, let's quickly review what we configured in that post. Because our configuration is in git, we can easily view the current configuration and change history that got us to this point.

    $ git log
    commit e1c38cf6f4230a48114ce7b747b77d6435d4646c
    Author: Me
    Date:   Mon Apr 9 12:34:44 2018 -0700

        Step 4 - Update /login rate limit rule from 'simulate' to 'ban'.

    commit 0f7e499c70bf5994b5d89120e0449b8545ffdd24
    Author: Me
    Date:   Mon Apr 9 12:22:43 2018 -0700

        Step 4 - Add rate limiting rule to protect /login.

    commit d540600b942cbd89d03db52211698d331f7bd6d7
    Author: Me
    Date:   Sun Apr 8 22:21:27 2018 -0700

        Step 3 - Enable TLS 1.3,

Continue reading

Getting started with Terraform and Cloudflare (Part 1 of 2)

As a Product Manager at Cloudflare, I spend quite a bit of my time talking to customers. One of the most common topics I'm asked about is configuration management. Developers want to know how they can write code to manage their Cloudflare config, without interacting with our APIs or UI directly.

Following best practices in software development, they want to store configuration in their own source code repository (be it GitHub or otherwise), institute a change management process that includes code review, and be able to track their configuration versions and history over time. Additionally, they want the ability to quickly and easily roll back changes when required.

When I first spoke with our engineering teams about these requirements, they gave me the best answer a Product Manager could hope to hear: there's already an open source tool out there that does all of that (and more), with a strong community and plugin system to boot—it's called Terraform.

This blog post is about getting started using Terraform with Cloudflare and the new version 1.0 of our Terraform provider. A "provider" is simply a plugin that knows how to talk to a specific set of APIs—in this case, Cloudflare, but Continue reading

Copenhagen & London developers, join us for five events this May

Photo by Nick Karvounis / Unsplash

Are you based in Copenhagen or London? Drop by one or all of these five events.

Ross Guarino and Terin Stock, both Systems Engineers at Cloudflare, are traveling to Europe to lead Go and Kubernetes talks in Copenhagen. They'll then join Junade Ali and lead talks on their use of Go, Kubernetes, and Cloudflare’s Mobile SDK at Cloudflare's London office.

My Developer Relations teammates and I are visiting these cities over the next two weeks to produce these events with Ross, Terin, and Junade. We’d love to meet you and invite you along.

Our trip will begin with two meetups and a conference talk in Copenhagen.

Event #1 (Copenhagen): 6 Cloud Native Talks, 1 Evening: Special KubeCon + CloudNativeCon EU Meetup

Tuesday, 1 May: 17:00-21:00

Location: Trifork Copenhagen - Borgergade 24B, 1300 København K

How to extend your Kubernetes cluster

A brief introduction to controllers, webhooks and CRDs. Ross and Terin will talk about how Cloudflare’s internal platform builds on Kubernetes.

Speakers: Ross Guarino and Terin Stock

View Event Details & Register Here »