Cloudflare’s public IPFS gateways and supporting Interplanetary Shipyard

IPFS, the distributed file system and content addressing protocol, has been around since 2015, and Cloudflare has been a user and operator since 2018, when we began operating a public IPFS gateway. Today, we are announcing our plan to transition this gateway traffic to the IPFS Foundation’s gateway, maintained by the Interplanetary Shipyard (“Shipyard”) team, and discussing what it means for users and the future of IPFS gateways.

As announced in April 2024, many of the IPFS core developers and maintainers now work within a newly created, independent entity called Interplanetary Shipyard after transitioning from Protocol Labs, where IPFS was invented and incubated. With the team operating as a collective, ongoing maintenance and support of important protocols like IPFS are now even more community-owned and managed. We fully support this “exit to community” and are excited to support Shipyard as they build more great infrastructure for the open web.

On May 14th, 2024, we will begin to transition traffic that comes to Cloudflare’s public IPFS gateway to the IPFS Foundation’s gateway at ipfs.io or dweb.link. Cloudflare’s public IPFS gateway is just one of many – part of a distributed ecosystem that also includes Pinata, eth.limo, and Continue reading
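For links that hard-code the old gateway, the transition amounts to swapping the hostname while keeping the path. The following is a minimal sketch of that rewrite, not an official migration tool. The old hostname `cloudflare-ipfs.com` is an assumption (the excerpt above does not name it), `rewriteGatewayUrl` is a hypothetical helper, and the content identifier in the usage note is a placeholder.

```typescript
// Assumed hostname of the gateway being retired; it is not named in the excerpt above.
const OLD_GATEWAY_HOST = "cloudflare-ipfs.com";

// Hypothetical helper: point a path-style gateway URL at a different gateway host.
// URLs on any other host are returned unchanged.
function rewriteGatewayUrl(input: string, newHost: string = "ipfs.io"): string {
  const url = new URL(input);
  if (url.hostname === OLD_GATEWAY_HOST) {
    // Only the host changes; the /ipfs/<cid>/... path and query string are kept.
    url.hostname = newHost;
  }
  return url.toString();
}
```

Calling `rewriteGatewayUrl("https://cloudflare-ipfs.com/ipfs/EXAMPLE_CID/readme.txt")` returns the same path on `ipfs.io`. Passing `"dweb.link"` as the second argument targets the other gateway mentioned above.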

Reclaiming CPU for free with Go’s Profile Guided Optimization

Go 1.20 introduced support for Profile Guided Optimization (PGO) to the Go compiler. This allows guiding the compiler to introduce optimizations based on the real-world behaviour of your system. In the Observability Team at Cloudflare, we maintain a few Go-based services that use thousands of cores worldwide, so even the 2-7% savings advertised would drastically reduce our CPU footprint, effectively for free. This would reduce the CPU usage for our internal services, freeing up those resources to serve customer requests, providing measurable improvements to our customer experience. In this post, I will cover the process we created for experimenting with PGO – collecting representative profiles across our production infrastructure and then deploying new PGO binaries and measuring the CPU savings.

How does PGO work?

PGO itself is not a Go-specific tool, although it is relatively new. PGO allows you to take CPU profiles from a program running in production and use them to optimise the generated assembly for that program. This includes a bunch of different optimisations such as inlining heavily used functions more aggressively, reworking branch prediction to favour the more common branches, and rearranging the generated code to lump hot paths together to save on CPU Continue reading
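To make the idea of a "hot" function concrete, here is a toy sketch. It is written in TypeScript rather than Go, and it is not how the Go compiler reads a profile. It only ranks functions by their share of CPU samples and keeps those above a threshold, which is the kind of signal that would justify more aggressive inlining. The `Sample` shape, the `hotFunctions` helper, the function names, and the thresholds are all invented for illustration.

```typescript
// Toy model of a CPU profile: one entry per sample, naming the function
// that was on top of the stack when the sample was taken.
type Sample = { fn: string };

// Return the functions whose share of all samples is at least `threshold`,
// hottest first. A threshold of 0.1 means "10% of samples or more".
function hotFunctions(
  samples: Sample[],
  threshold: number = 0.1
): { fn: string; share: number }[] {
  const counts = new Map<string, number>();
  for (const s of samples) {
    counts.set(s.fn, (counts.get(s.fn) ?? 0) + 1);
  }
  return Array.from(counts.entries())
    .map(([fn, n]) => ({ fn, share: n / samples.length }))
    .filter((entry) => entry.share >= threshold)
    .sort((a, b) => b.share - a.share);
}

// Hypothetical profile: 6 samples in parseLine, 3 in encode, 1 in logDebug.
const profile: Sample[] = [
  ...Array(6).fill({ fn: "parseLine" }),
  ...Array(3).fill({ fn: "encode" }),
  ...Array(1).fill({ fn: "logDebug" }),
];

// With a 20% threshold, only parseLine and encode count as hot.
const hot = hotFunctions(profile, 0.2);
```

A real profile carries full call stacks and weights rather than a single function name per sample, so this only captures the ranking step.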

East African Internet connectivity again impacted by submarine cable cuts

On Sunday, May 12, issues with the EASSy and Seacom submarine cables again disrupted connectivity to East Africa, impacting a number of countries previously affected by a set of cable cuts that occurred nearly three months earlier.

On February 24, three submarine cables that run through the Red Sea were damaged: the Seacom/Tata cable, the Asia Africa Europe-1 (AAE-1), and the Europe India Gateway (EIG). It is believed that the cables were cut by the anchor of the Rubymar, a cargo ship that was damaged by a ballistic missile on February 18. These cable cuts reportedly impacted countries in East Africa, including Tanzania, Kenya, Uganda, and Mozambique. As of this writing (May 13), these cables remain unrepaired.

Already suffering from reduced capacity due to the February cable cuts, these countries were impacted by a second set of cable cuts that occurred on Sunday, May 12. According to a social media post from Ben Roberts, Group CTIO at Liquid Intelligent Technologies in Kenya, faults on the EASSy and Seacom cables again disrupted connectivity to East Africa, as he noted “All sub sea capacity between East Africa and South Africa is down.” A BBC Continue reading

Treasury and PNNL threat data now available for Financial sector customers to secure applications

Following the White House’s National Cybersecurity Strategy, which underscores the importance of fostering public-private partnerships to enhance the security of critical sectors, Cloudflare is happy to announce a strategic partnership with the United States Department of the Treasury and the Department of Energy’s Pacific Northwest National Laboratory (PNNL) to create Custom Indicator Feeds that enable customers to integrate approved threat intelligence feeds directly into Cloudflare's platform.

Our partnership with the Department of the Treasury and PNNL offers approved financial services institutions privileged access to threat data that was previously exclusive to the government. The feed, exposed as a Custom Indicator Feed, collects advanced insights from the Department of the Treasury and the federal government's exclusive sources. Starting today, financial institutions can create DNS filtering policies through Cloudflare’s Gateway product that leverage threat data directly from these government bodies. These policies are crucial for protecting organizations from malicious links and phishing attempts specifically targeting the financial sector.

This initiative not only supports the federal effort to strengthen cybersecurity within critical infrastructure including the financial sector, for which the Treasury is the designated lead agency, but also contributes directly to the ongoing improvement of our shared security capabilities.

Why we partnered Continue reading

Introducing Cloudflare for Unified Risk Posture

Managing risk posture (how your business assesses, prioritizes, and mitigates risks) has never been easy. But as attack surfaces continue to expand rapidly, doing that job has become increasingly complex and inefficient. (One global survey found that SOC team members spend, on average, one-third of their workday on incidents that pose no threat.)

But what if you could mitigate risk with less effort and less noise?

This post explores how Cloudflare can help customers do that, thanks to a new suite that converges capabilities across our Secure Access Service Edge (SASE) and web application and API (WAAP) security portfolios. We’ll explain:

- Why this approach helps protect more of your attack surface, while also reducing SecOps effort

- Three key use cases — including enforcing Zero Trust with our expanded CrowdStrike partnership

- Other new projects we’re exploring based on these capabilities

Cloudflare for Unified Risk Posture

Today, we’re announcing Cloudflare for Unified Risk Posture, a new suite of cybersecurity risk management capabilities that can help enterprises with automated and dynamic risk posture enforcement across their expanding attack surface. Now, one unified platform enables organizations to:

- Evaluate risk across people and applications: Cloudflare evaluates risk posed by people via Continue reading

Using Fortran on Cloudflare Workers

In April 2020, we blogged about how to get COBOL running on Cloudflare Workers by compiling to WebAssembly. The ecosystem around WebAssembly has grown significantly since then, and it has become a solid foundation for all types of projects, be they client-side or server-side.

As WebAssembly support has grown, more and more languages are able to compile to WebAssembly for execution on servers and in browsers. As Cloudflare Workers uses the V8 engine and supports WebAssembly natively, we’re able to support languages that compile to WebAssembly on the platform.

Recently, work on LLVM has enabled Fortran to compile to WebAssembly. So, today, we’re writing about running Fortran code on Cloudflare Workers.
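Whatever the source language, the compiled output is a WebAssembly module that JavaScript or TypeScript code instantiates and calls. The sketch below shows that general pattern with the standard `WebAssembly` API, using a tiny hand-written module that exports an `add` function. This module is a stand-in chosen for illustration; it is not Fortran output, and it does not show how a Worker bundles or loads its module.

```typescript
// A minimal hand-assembled WebAssembly module exporting add(i32, i32) -> i32.
// It stands in for the much larger module a Fortran toolchain would emit.
const wasmBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic "\0asm" and version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function section: one function of type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export "add" as function 0
  0x0a, 0x09, 0x01, 0x07, 0x00, // code section: one body, no locals
  0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // local.get 0, local.get 1, i32.add, end
]);

// Compile and instantiate synchronously; this is fine for a module this small.
const wasmModule = new WebAssembly.Module(wasmBytes);
const wasmInstance = new WebAssembly.Instance(wasmModule, {});

// Exports are untyped at the boundary, so the signature is asserted here.
const add = wasmInstance.exports.add as (a: number, b: number) => number;

const sum = add(2, 3);
```

A module compiled from a real language runtime usually also needs imports (memory, I/O shims) passed as the second argument to `WebAssembly.Instance`; the empty object works here only because this module imports nothing.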

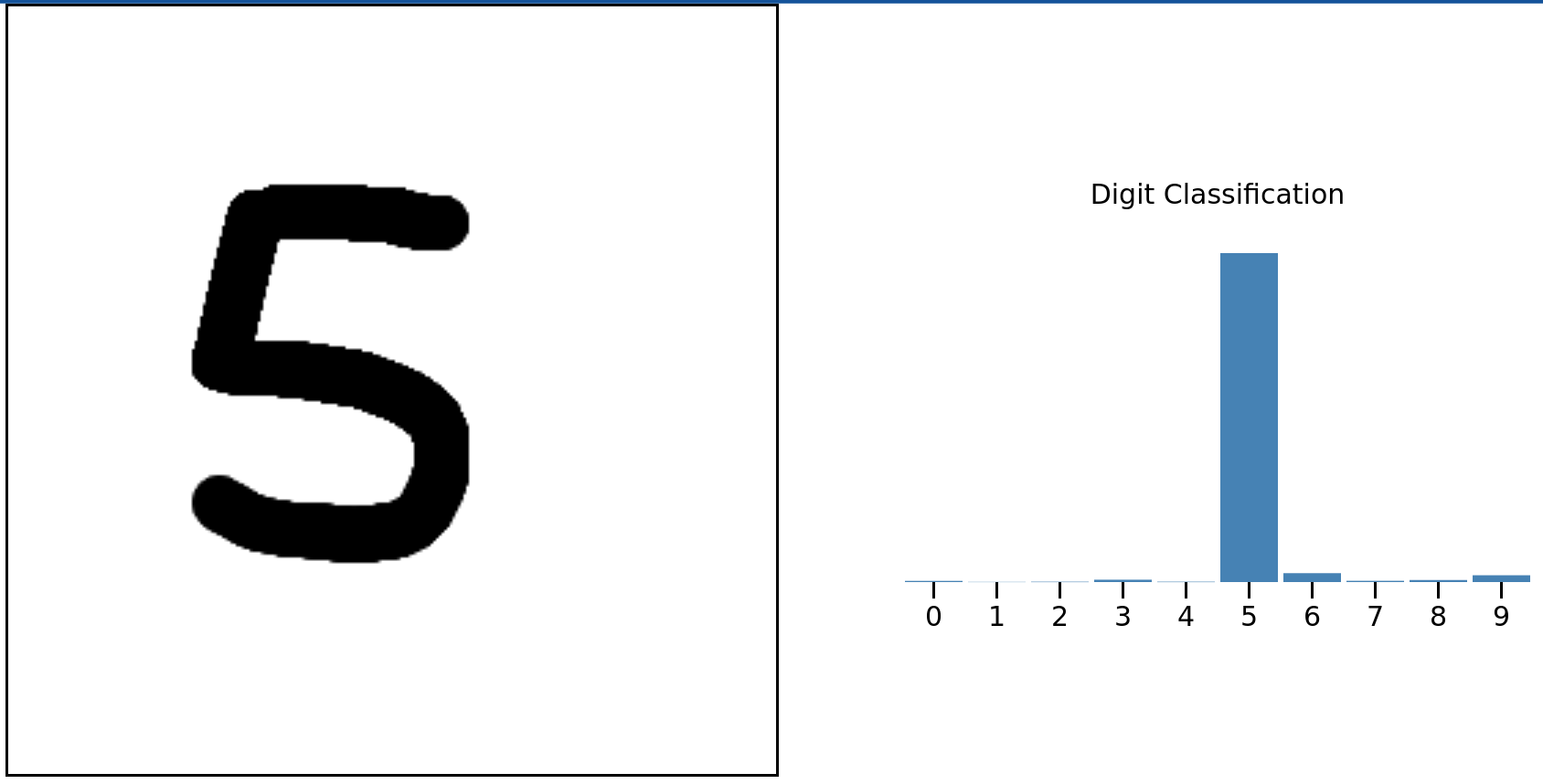

Before we dive into how to do this, here’s a little demonstration of number recognition in Fortran. Draw a number from 0 to 9 and Fortran code running somewhere on Cloudflare’s network will predict the number you drew.

Try yourself on handwritten-digit-classifier.fortran.demos.cloudflare.com.

This is taken from the wonderful Fortran on WebAssembly post but instead of running client-side, the Fortran code is running on Cloudflare Workers. Read on to find out how you can use Fortran on Cloudflare Workers and how that demonstration works.

Wait, Fortran? Continue reading

Q1 2024 Internet disruption summary

Cloudflare’s network spans more than 310 cities in over 120 countries, where we interconnect with over 13,000 network providers in order to provide a broad range of services to millions of customers. The breadth of both our network and our customer base provides us with a unique perspective on Internet resilience, enabling us to observe the impact of Internet disruptions. Thanks to recently released Cloudflare Radar functionality, this quarter we have started to explore the impact from a routing perspective, as well as a traffic perspective, at both a network and location level.

The first quarter of 2024 kicked off with quite a few Internet disruptions. Damage to both terrestrial and submarine cables caused problems in a number of locations, while military action related to ongoing geopolitical conflicts impacted connectivity in other areas. Governments in several African countries, as well as Pakistan, ordered Internet shutdowns, focusing heavily on mobile connectivity. Malicious actors known as Anonymous Sudan claimed responsibility for cyberattacks that disrupted Internet connectivity in Israel and Bahrain. Maintenance and power outages forced users offline, resulting in observed drops in traffic. And in Continue reading

Lessons from building an automated SDK pipeline

In case you missed the announcement from Developer Week 2024, Cloudflare is now offering software development kits (SDKs) for TypeScript, Go, and Python. As a reminder, you can get started by installing the packages.

    // TypeScript
    npm install cloudflare

    // Go
    go get -u github.com/cloudflare/cloudflare-go/v2

    // Python
    pip install --pre cloudflare

Instead of using a tool like curl or Postman to create a new zone in your account, you can use one of the SDKs in a language that you’re already comfortable with or that integrates directly into your existing codebase.

    import Cloudflare from 'cloudflare';

    const cloudflare = new Cloudflare({
      apiToken: process.env['CLOUDFLARE_API_TOKEN']
    });

    const newZone = await cloudflare.zones.create({
      account: { id: '023e105f4ecef8ad9ca31a8372d0c353' },
      name: 'example.com',
      type: 'full',
    });

Since their inception, our SDKs have been manually maintained by one or more dedicated individuals. For every product addition or improvement, we needed to orchestrate a series of manually created pull requests to get those changes into customer hands. This, unfortunately, created an imbalance in the frequency and quality of changes that made it into the SDKs. Even though the product teams would drive some of these changes, not all languages were covered and the SDKs Continue reading

Meta Llama 3 available on Cloudflare Workers AI

We are thrilled to give developers around the world the ability to build AI applications with Meta Llama 3 using Workers AI. We are proud to be a launch partner with Meta for their newest 8B Llama 3 model, and excited to continue our partnership to bring the best of open-source models to our inference platform.

Workers AI

Workers AI’s initial launch in beta included support for Llama 2, as it was one of the most requested open source models from the developer community. Since that initial launch, we’ve seen developers build all kinds of innovative applications including knowledge sharing chatbots, creative content generation, and automation for various workflows.

At Cloudflare, we know developers want simplicity and flexibility, with the ability to build with multiple AI models while optimizing for accuracy, performance, and cost, among other factors. Our goal is to make it as easy as possible for developers to use their models of choice without having to worry about the complexities of hosting or deploying models.

As soon as we learned about the development of Llama 3 from our partners at Meta, we knew developers would want to start building with it as quickly as possible. Continue reading

Cloudflare named in 2024 Gartner® Magic Quadrant™ for Security Service Edge

Gartner has once again named Cloudflare to the Gartner® Magic Quadrant™ for Security Service Edge (SSE) report. We are excited to share that Cloudflare is one of only ten vendors recognized in this report. For the second year in a row, we are recognized for our ability to execute and the completeness of our vision. You can read more about our position in the report here.

Last year, we became the only new vendor named in the 2023 Gartner® Magic Quadrant™ for SSE. We did so in the shortest amount of time, as measured from the date our first product launched. We also made a commitment to our customers at that time that we would only build faster. We are happy to report back on the impact that has had on customers and the Gartner recognition of their feedback.

Cloudflare can bring capabilities to market quicker, and with greater cost efficiency, than competitors thanks to the investments we have made in our global network over the last 14 years. We believe we were able to become the only new vendor in 2023 by combining existing advantages like our robust, multi-use global proxy, our lightning-fast DNS resolver, our Continue reading

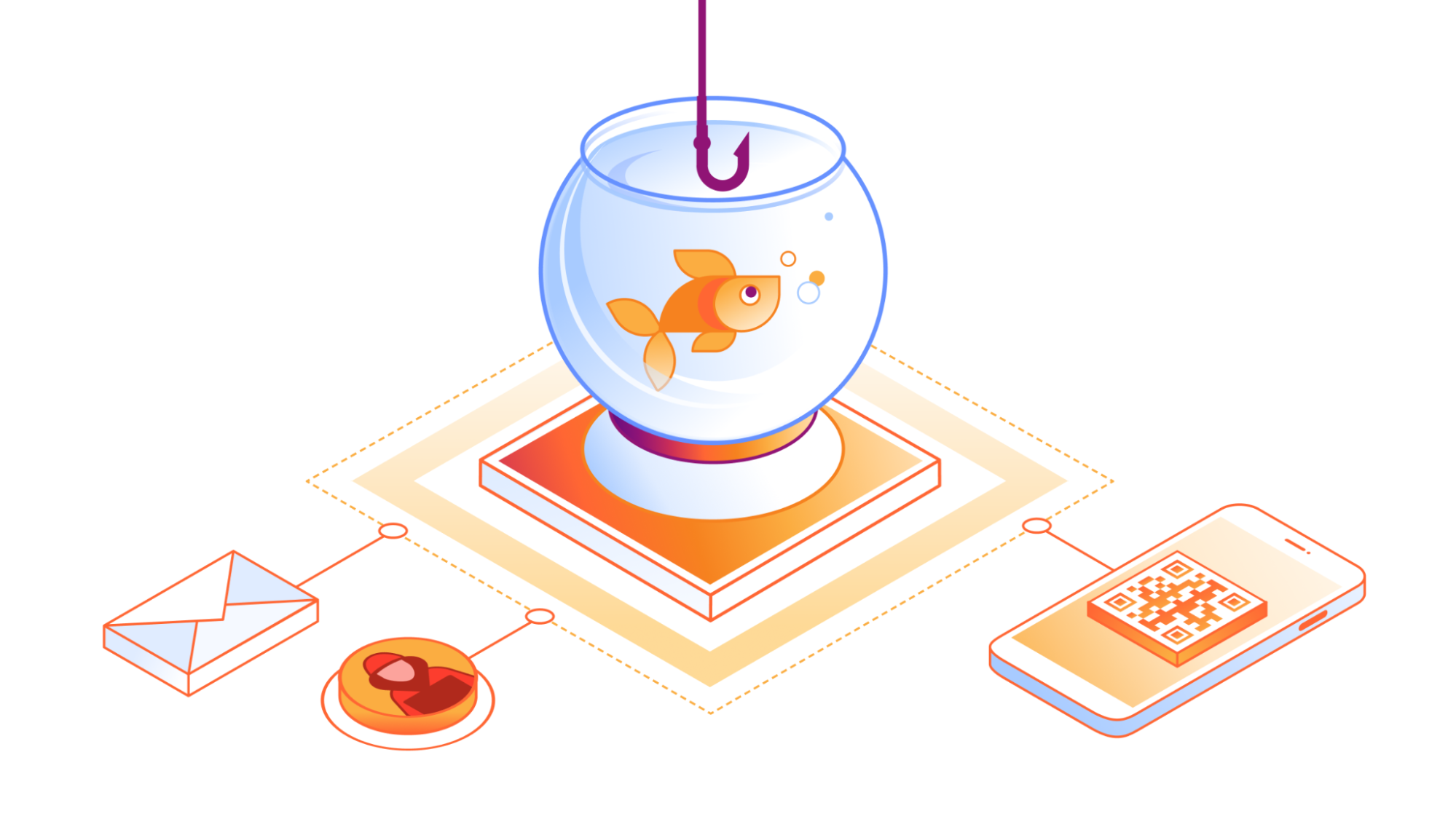

How Cloudflare Cloud Email Security protects against the evolving threat of QR phishing

In the ever-evolving landscape of cyber threats, a subtle yet potent form of phishing has emerged — quishing, short for QR phishing. It has been 30 years since the invention of QR codes, yet quishing still poses a significant risk, especially after the era of COVID, when QR codes became the norm to check statuses, register for events, and even order food.

Since 2020, Cloudflare’s cloud email security solution (previously known as Area 1) has been at the forefront of fighting against quishing attacks, taking a proactive stance in dissecting them to better protect our customers. Let’s delve into the mechanisms behind QR phishing, explore why QR codes are a preferred tool for attackers, and review how Cloudflare contributes to the fight against this evolving threat.

How quishing works

The impacts of phishing and quishing are quite similar, as both can result in users having their credentials compromised, devices compromised, or even financial loss. They also leverage malicious attachments or websites to give bad actors access to something they normally wouldn’t be able to reach. Where they differ is that quishing is typically highly targeted and uses a QR code to further obfuscate itself from detection.

Since Continue reading

DDoS threat report for 2024 Q1

Welcome to the 17th edition of Cloudflare’s DDoS threat report. This edition covers the DDoS threat landscape along with key findings as observed from the Cloudflare network during the first quarter of 2024.

What is a DDoS attack?

But first, a quick recap. A DDoS attack, short for Distributed Denial of Service attack, is a type of cyber attack that aims to take down or disrupt Internet services such as websites or mobile apps and make them unavailable for users. DDoS attacks are usually done by flooding the victim's server with more traffic than it can handle.

To learn more about DDoS attacks and other types of attacks, visit our Learning Center.

Accessing previous reports

Quick reminder that you can access previous editions of DDoS threat reports on the Cloudflare blog. They are also available on our interactive hub, Cloudflare Radar. On Radar, you can find global Internet traffic, attacks, and technology trends and insights, with drill-down and filtering capabilities, so you can zoom in on specific countries, industries, and networks. There’s also a free API allowing academics, data sleuths, and other web enthusiasts to investigate Internet trends across the globe.

To learn how we prepare this report, refer Continue reading

Why I joined Cloudflare as Chief Partner Officer

In today's rapidly evolving digital landscape, the decision to join a company is not just about making a career move. Instead, it's about finding a mission, a community, and a platform to make a meaningful impact. Cloudflare’s remarkable technology and incredibly driven teams are two reasons why I’m excited to join the team.

Joining Cloudflare as the Chief Partner Officer is my commitment to driving innovation and impact across the Internet through our channel partnerships. In each conversation throughout the interview process, I found myself getting more and more excited about the opportunity. Several former trusted colleagues who have recently joined Cloudflare repeatedly told me how amazing the people and company culture are. A positive culture driven by people who are passionate about their work is key. We work too hard not to have fun while doing it.

When it comes to partnerships, I see the immense value that partners can provide. My philosophy revolves around fostering collaborative, value-driven partnerships. It is about building ecosystems where we jointly navigate challenges, innovate together, and collectively thrive in a rapidly evolving global marketplace where the success of our channel partners directly influences our collective achievements. It also involves investing in their growth Continue reading

An Internet traffic analysis during Iran’s April 13, 2024, attack on Israel

(UPDATED on April 15, 2024, with information regarding the Palestinian territories.)

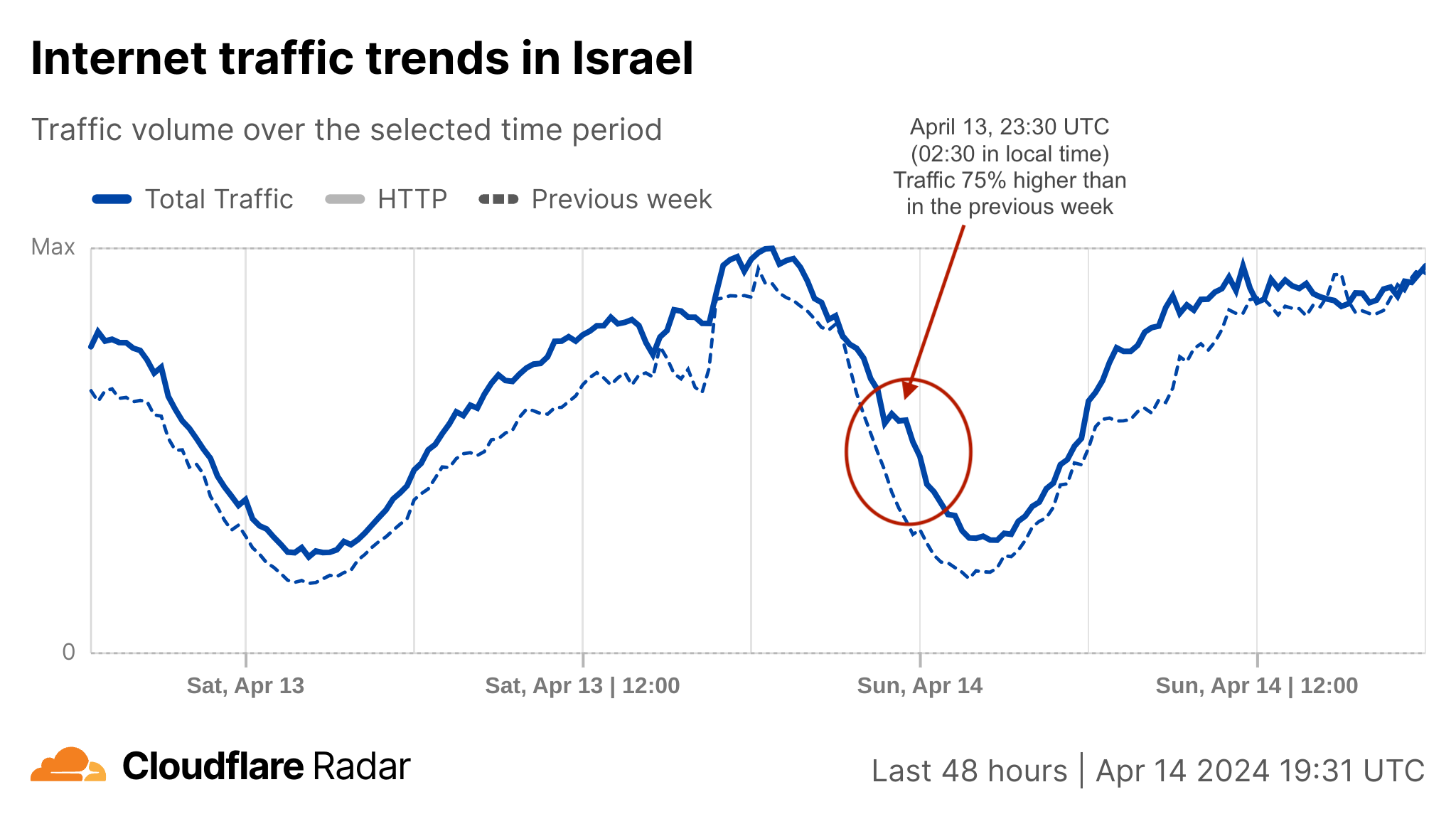

As news came on Saturday, April 13, 2024, that Iran was launching a coordinated retaliatory attack on Israel, we took a closer look at the potential impact on Internet traffic and attacks. So far, we have seen some traffic shifts in both Israel and Iran, but we haven’t seen a coordinated large cyberattack on Israeli domains protected by Cloudflare.

First, let’s discuss general Internet traffic patterns. Following reports of attacks with drones, cruise missiles, and ballistic missiles, confirmed by Israeli and US authorities, Internet traffic in Israel surged after 02:00 local time on Saturday, April 13 (23:00 UTC on April 12), peaking at 75% higher than in the previous week around 02:30 (23:30 UTC) as people sought news updates. This traffic spike was predominantly driven by mobile device usage, accounting for 62% of all traffic from Israel at that time. Traffic remained higher than usual during Sunday.

Around that time, at 02:00 local time (23:00 UTC), the IDF (Israel Defense Forces) posted on X that sirens were sounding across Israel because of an imminent attack from Iran.

🚨Sirens sounding across Israel🚨 pic.twitter.com/BuDasagr10

— Israel Defense Forces Continue reading

Improving authoritative DNS with the official release of Foundation DNS

We are very excited to announce the official release of Foundation DNS, with new advanced nameservers, even more resilience, and advanced analytics to meet the complex requirements of our enterprise customers. Foundation DNS is one of Cloudflare's largest leaps forward in our authoritative DNS offering since its launch in 2010, and we know our customers are interested in an enterprise-ready authoritative DNS service with the highest level of performance, reliability, security, flexibility, and advanced analytics.

Starting today, every new enterprise contract that includes authoritative DNS will have access to the Foundation DNS feature set and existing enterprise customers will have Foundation DNS features made available to them over the course of this year. If you are an existing enterprise customer already using our authoritative DNS services, and you’re interested in getting your hands on Foundation DNS earlier, just reach out to your account team, and they can enable it for you. Let’s get started…

Why is DNS so important?

From an end user perspective, DNS makes the Internet usable. DNS is the phone book of the Internet, which translates hostnames like www.cloudflare.com into IP addresses that our browsers, applications, and devices use to connect to services. Without Continue reading

How we ensure Cloudflare customers aren’t affected by Let’s Encrypt’s certificate chain change

Let’s Encrypt, a publicly trusted certificate authority (CA) that Cloudflare uses to issue TLS certificates, has been relying on two distinct certificate chains. One is cross-signed with IdenTrust, a globally trusted CA that has been around since 2000, and the other is Let’s Encrypt’s own root CA, ISRG Root X1. Since Let’s Encrypt launched, ISRG Root X1 has been steadily gaining its own device compatibility.

On September 30, 2024, Let’s Encrypt’s certificate chain cross-signed with IdenTrust will expire. After the cross-sign expires, servers will no longer be able to serve certificates signed by the cross-signed chain. Instead, all Let’s Encrypt certificates will use the ISRG Root X1 CA.

Most devices and browser versions released after 2016 will not experience any issues as a result of the change since the ISRG Root X1 will already be installed in those clients’ trust stores. That's because these modern browsers and operating systems were built to be agile and flexible, with upgradeable trust stores that can be updated to include new certificate authorities.

The change in the certificate chain will impact legacy devices and systems, such as devices running Android version 7.1.1 (released in 2016) or older, as those exclusively Continue reading
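The cutoff described above can be expressed as a simple version comparison. This is a minimal sketch that treats "Android 7.1.1 or older" as the only criterion. The helper names `compareVersions` and `isLegacyAndroid` are hypothetical, and a real compatibility check would also need to account for browsers or apps that ship their own trust store.

```typescript
// Compare dotted version strings numerically, treating missing parts as 0.
// Returns a negative number if a < b, zero if equal, positive if a > b.
function compareVersions(a: string, b: string): number {
  const pa = a.split(".").map((part) => parseInt(part, 10) || 0);
  const pb = b.split(".").map((part) => parseInt(part, 10) || 0);
  const length = Math.max(pa.length, pb.length);
  for (let i = 0; i < length; i++) {
    const diff = (pa[i] ?? 0) - (pb[i] ?? 0);
    if (diff !== 0) return diff;
  }
  return 0;
}

// Cutoff taken from the text: Android 7.1.1 or older is affected.
const LAST_AFFECTED_ANDROID = "7.1.1";

// Hypothetical helper: true when the given Android version falls in the
// legacy range described above.
function isLegacyAndroid(version: string): boolean {
  return compareVersions(version, LAST_AFFECTED_ANDROID) <= 0;
}
```

For example, `isLegacyAndroid("7.1.1")` and `isLegacyAndroid("6.0")` are both true, while `isLegacyAndroid("7.1.2")` and `isLegacyAndroid("10")` are false.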

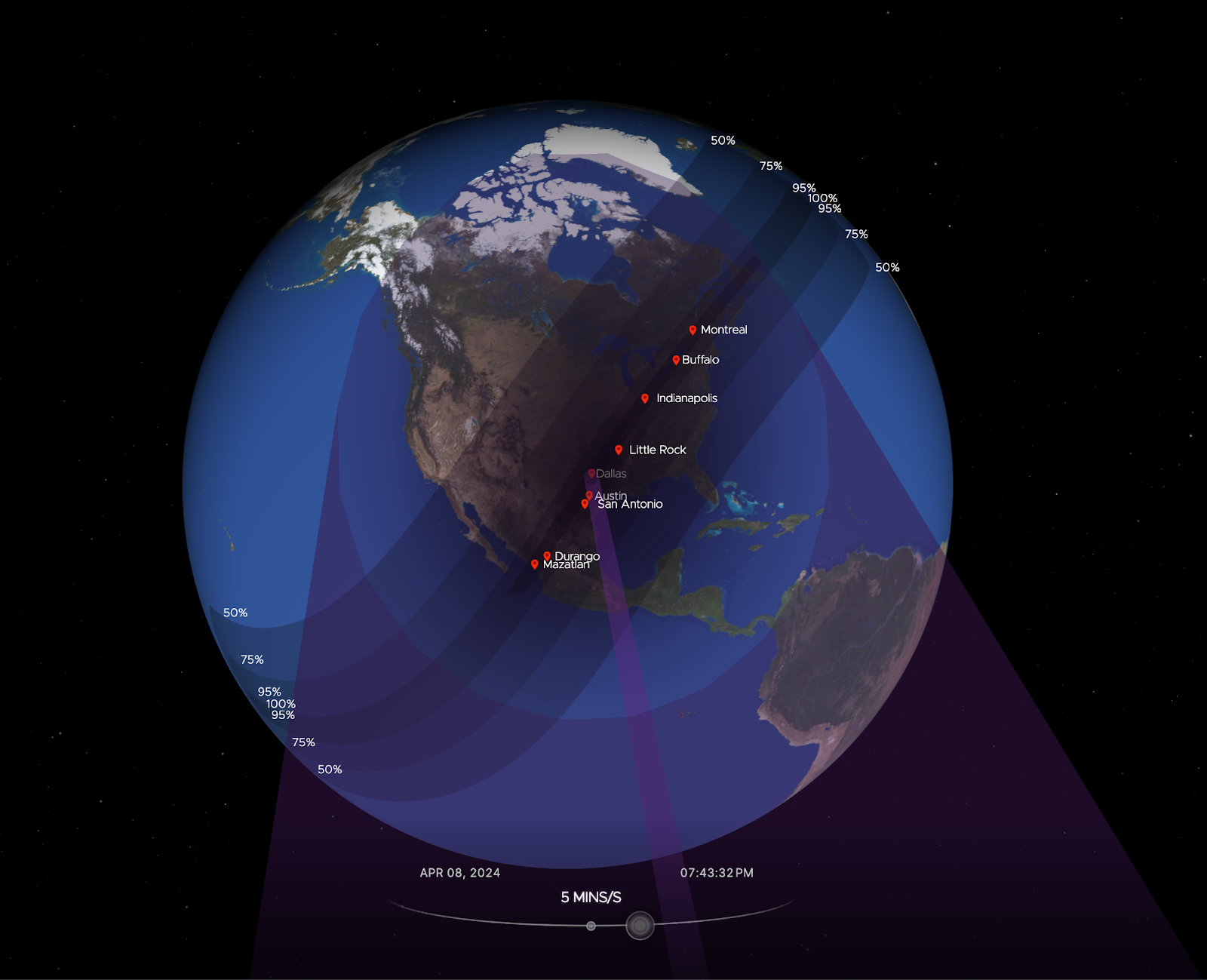

Total eclipse of the Internet: traffic impacts in Mexico, the US, and Canada

There are events that unite people, like a total solar eclipse, reminding us, humans living on planet Earth, of our shared dependence on the Sun. Excitement was obvious in Mexico, several US states, and Canada during the total solar eclipse that occurred on April 8, 2024. Millions gathered outdoors to witness the event, dubbed the Great North American Eclipse, as the Moon passed between Earth and the Sun, casting darkness over fortunate states. While people were putting their eclipse glasses on and taking them off, depending on whether they were looking at the sky during totality or before or after it, what happened to Internet traffic?

Cloudflare’s data shows a clear impact on Internet traffic from Mexico to Canada, following the path of totality. The eclipse occurred between 15:42 UTC and 20:52 UTC, moving from south to north, as seen in this NASA image of the path and percentage of darkness of the eclipse.

Looking at the United States in aggregate terms, bytes delivered traffic dropped by 8%, and request traffic by 12% as compared to the previous week at 19:00 UTC Continue reading

Major data center power failure (again): Cloudflare Code Orange tested

Here's a post we never thought we'd need to write: less than five months after one of our major data centers lost power, it happened again to the exact same data center. That sucks and, if you're thinking "why do they keep using this facility??," I don't blame you. We're thinking the same thing. But, here's the thing, while a lot may not have changed at the data center, a lot changed over those five months at Cloudflare. So, while five months ago a major data center going offline was really painful, this time it was much less so.

This is a little bit about how a high availability data center lost power for the second time in five months. But, more so, it's the story of how our team worked to ensure that even if one of our critical data centers lost power it wouldn't impact our customers.

On November 2, 2023, one of our critical facilities in the Portland, Oregon region lost power for an extended period of time. It happened because of a cascading series of faults that appears to have been caused by maintenance by the Continue reading

Developer Week 2024 wrap-up

Developer Week 2024 has officially come to a close. Each day last week, we shipped new products and functionality geared towards giving developers the components they need to build full-stack applications on Cloudflare.

Even though Developer Week is now over, we are continuing to innovate with the over two million developers who build on our platform. Building a platform is only as exciting as seeing what developers build on it. Before we dive into a recap of the announcements, to send off the week, we wanted to share how a couple of companies are using Cloudflare to power their applications:

We have been using Workers for image delivery using R2 and have been able to maintain stable operations for a year after implementation. The speed of deployment and the flexibility of detailed configurations have greatly reduced the time and effort required for traditional server management. In particular, we have seen a noticeable cost savings and are deeply appreciative of the support we have received from Cloudflare Workers.

- FAN Communications

Milkshake helps creators, influencers, and business owners create engaging web pages Continue reading

Cloudflare acquires Baselime to expand serverless application observability capabilities

Today, we’re thrilled to announce that Cloudflare has acquired Baselime.

The cloud is changing. Just a few years ago, serverless functions were revolutionary. Today, entire applications are built on serverless architectures, from compute to databases, storage, queues, etc. — with Cloudflare leading the way in making it easier than ever for developers to build, without having to think about their architecture. And while the adoption of serverless has made it simple for developers to run fast, it has also made one of the most difficult problems in software even harder: how the heck do you unravel the behavior of distributed systems?

When I started Baselime 2 years ago, our goal was simple: enable every developer to build, ship, and learn from their serverless applications such that they can resolve issues before they become problems.

Since then, we built an observability platform that enables developers to understand the behaviour of their cloud applications. It’s designed for high cardinality and dimensionality data, from logs to distributed tracing with OpenTelemetry. With this data, we automatically surface insights from your applications, and enable you to quickly detect, troubleshoot, and resolve issues in production.

In parallel, Cloudflare has been busy the past few years building Continue reading