(Micro)benchmarking Linux kernel functions

Usually, the performance of a Linux subsystem is measured through an external (local or remote) process stressing it. Depending on the input point used, a large portion of code may be involved. To benchmark a single function, one solution is to write a kernel module.

Minimal kernel module

Let’s suppose we want to benchmark the IPv4 route lookup function, fib_lookup(). The following kernel function executes 1,000 lookups for 8.8.8.8 and returns the average value. It uses the get_cycles() function to compute the execution “time.”

    /* Execute a benchmark on fib_lookup() and put
       result into the provided buffer `buf`. */
    static int do_bench(char *buf)
    {
        unsigned long long t1, t2;
        unsigned long long total = 0;
        unsigned long i;
        unsigned count = 1000;
        int err = 0;
        struct fib_result res;
        struct flowi4 fl4;

        memset(&fl4, 0, sizeof(fl4));
        fl4.daddr = in_aton("8.8.8.8");

        for (i = 0; i < count; i++) {
            t1 = get_cycles();
            err |=

Continue reading
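The measurement pattern described above (take a timestamp, call the function, take a second timestamp, accumulate the difference, divide by the iteration count) can be tried out in userspace before writing a kernel module. The following is only a rough sketch under stated assumptions: `clock_gettime()` stands in for the kernel's `get_cycles()`, and `dummy_lookup()`, `now_ns()` and `bench_avg()` are hypothetical names invented for this illustration, not part of the original post or of the kernel.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdint.h>
#include <time.h>

/* Hypothetical stand-in for the function under test.
   It is NOT fib_lookup(); it only gives the loop something to call. */
static int dummy_lookup(uint32_t addr)
{
    return (addr & 0xff) == 8 ? 0 : -1;
}

/* Monotonic timestamp in nanoseconds.
   Assumption: used here as a userspace substitute for get_cycles(). */
static uint64_t now_ns(void)
{
    struct timespec ts;

    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (uint64_t)ts.tv_sec * 1000000000ull + (uint64_t)ts.tv_nsec;
}

/* Call fn(arg) `count` times, store the average duration of one call
   (in nanoseconds) in *avg_ns, and return the OR of all return codes. */
static int bench_avg(int (*fn)(uint32_t), uint32_t arg,
                     unsigned count, uint64_t *avg_ns)
{
    uint64_t total = 0;
    int err = 0;
    unsigned i;

    assert(count > 0);
    for (i = 0; i < count; i++) {
        uint64_t t1 = now_ns();
        err |= fn(arg);
        uint64_t t2 = now_ns();
        total += t2 - t1;
    }
    *avg_ns = total / count;
    return err;
}
```

Calling `bench_avg(dummy_lookup, 0x08080808u, 1000, &avg)` mirrors the 1,000 lookups for 8.8.8.8. The timer overhead is included in every sample, so the figure is only meaningful relative to other runs of the same harness.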

Bloomberg Using Kubernetes to Test Kubernetes Deployments

PowerfulSeal test platform likened to Netflix's Chaos Monkey.


Our commitment to open networking

On Monday we released our latest version of Cumulus Linux, 3.5. It includes symmetric VxLAN routing, Voice VLAN and 10 new hardware platforms. This includes General Availability (GA) of our two supported chassis, the four slot Backpack and eight slot OMP800. We announced Early Access (EA) support for both chassis in our previous release, Cumulus Linux 3.4.

At Cumulus, moving fast to fix problems and get features in the hands of our customers is core to our culture. In today’s webscale networks, it’s hard for even the largest of organizations to operate on classic 18+ month buying cycles. Some folks want the ability to use new technology as soon as possible.

The EA process gives customers the ability to use working software or hardware and provide direct feedback on the final product. That feedback improves all aspects of the product, from purchasing and delivery to default configurations and operations.

When we announced EA for our chassis systems, we had many Fortune 500 customers express interest. For some, the EA process allowed them to start the purchasing process knowing that it would take months until a final purchase order was ready. For others, they were able to put working, stable Continue reading

Programming Unbound

I’m doing some research on Facebook’s Open/R routing platform for a future blog post. I’m starting to understand the nuances a bit compared to OSPF or IS-IS, but during my reading I got stopped cold by one particular passage:

Many traditional routing protocols were designed in the past, with a strong focus on optimizing for hardware-limited embedded systems such as CPUs and RAM. In addition, protocols were designed as purpose-built solutions to solve the particular problem of routing for connectivity, rather than as a flexible software platform to build new applications in the network.

Uh oh. I’ve seen language like this before related to other software projects. And quite frankly, it worries me to death. Because it means that people aren’t learning their lessons.

New and Improved

Any time I see an article about how a project was rewritten from the ground up to “take advantage of new changes in protocols and resources”, it usually signals to me that some grad student decided to rewrite the whole thing in Java because they didn’t understand C. It sounds a bit cynical, but it’s not often wrong.

Want proof? Check out Linus Torvalds and his opinion about rewriting the Linux kernel in Continue reading

Reflections on the Internet’s Past

Earlier this year, as part of the Internet Society’s 25th anniversary celebration, we asked you to share your memories of the early Internet. As we look forward to the new year, it’s fun to read through the stories and look back at where we started.

One of the earliest memories was from Stanford University.

I got my first Arpanet email account in 1978.

[By 1985] All the graduate students and professors had accounts, and there was a campus Ethernet, Macs were being integrated into the network via AppleTalk (print and file sharing services)… Also, beyond email we had ftp servers that served shareware and USENET to help with sysadmin problems. Much of the networking software and hardware was developed on campus, including the AppleTalk gateways (Kinetics) and routers (early Cisco prototypes).

There was also this dose of funny reality from nearly ten thousand kilometers away, in Moscow:

I had remote data connection more than 26 years ago, in 1991. We had so called dial up modem connection via telephone PSTN pre-analogue PBX- the step-by-step switch.

It was toooooo extremely long.

Another member shared this memory from INET ’93 San Francisco:

…among the papers and presentations one which drew the largest crowd was Continue reading

Episode 18 – Whitebox Networking

In Episode 18 of Network Collective, Pete Lumbis of Cumulus Networks and Kevin Myers of IP Architechs join us to talk about the pros and cons of running whitebox or commodity hardware in your network. There’s no denying that the price point on commodity hardware is attractive but we discuss all you should consider when you’re looking to transition away from a major network hardware vendor.

Show Notes

What is whitebox networking?

- Has a variety of definitions depending on the person answering

- Associated with ONIE and OSes like Cumulus Linux, BigSwitch, IPI

- Commodity hardware, not from a mainstream vendor, with an OS that may or may not come from the same vendor

- To some, whitebox has more to do with hardware

- Commodity hardware has to do with using chipset, board, or even layout that someone else created

- Component of disaggregation to consider

- Britebox is the larger vendors offering disaggregation options

- Whitebox equipment isn’t about homemade devices soldered together in our garages

Why whitebox networking?

- The original impetus for whitebox was often around cost, and sometimes it still is

- Current reasons are to have more options like customized operating systems

- Whitebox utilizes the equipment to move to an x86 model that compute Continue reading

Top 5 blogs of 2017: LinuxKit, A Toolkit for building Secure, Lean and Portable Linux Subsystems

In case you’ve missed it, this week we’re highlighting the top five most popular Docker blogs in 2017. Coming in third place is the announcement of LinuxKit, a toolkit for building secure, lean and portable Linux Subsystems.

LinuxKit includes the tooling to allow building custom Linux subsystems that only include exactly the components the runtime platform requires. All system services are containers that can be replaced, and everything that is not required can be removed. All components can be substituted with ones that match specific needs. It is a kit, very much in the Docker philosophy of batteries included but swappable. LinuxKit is an open source project available at https://github.com/linuxkit/linuxkit.

To achieve our goals of a secure, lean and portable OS, we built it from containers, for containers. Security is a top-level objective and aligns with NIST stating, in their draft Application Container Security Guide: “Use container-specific OSes instead of general-purpose ones to reduce attack surfaces. When using a container-specific OS, attack surfaces are typically much smaller than they would be with a general-purpose OS, so there are fewer opportunities to attack and compromise a container-specific OS.”

The leanness directly helps with security by removing parts not Continue reading

From SD-WAN to the Network Edge: Here Are the Top Sponsored Posts of 2017

Here is a list of our Top 5 sponsored posts of 2017


From Trials to Use Cases – The Top 5G Developments in 2017

Virtualization becomes a bigger part of 5G discussion.


5 Biggest HCI Movers and Shakers in 2017

Five companies stand out for their strong hyperconverged moves.


IoT Security Using BLE Encryption

An analysis of the pros and cons of Bluetooth Low Energy link-layer security for IoT protection.

Microsoft: ReFS is ridiculous

I’ve blogged before about how the new integrity-checking filesystem in Windows, ReFS, doesn’t actually have integrity checking turned on by default. It’s pretty silly for a modern filesystem meant to compete with ZFS and BtrFS to have the main 21st century feature turned off by default. But it’s not quite ridiculous. Not yet.

Now it turns out that scrubbing is only supported on Windows Server: Microsoft honestly shipped an integrity-checking filesystem in Windows 10 with no way to repair or scrub it.

I used to say that Windows 10 is the best Windows ever, and that Microsoft kinda won my trust back. But what the hell?

I contacted Microsoft support over chat, who first suggested I do a system restore (sigh). But after I insisted that they confirm whether it’s supposed to work, they confirmed that no, that only ships with Windows Server.

It’s not even clear from their pricing if I need the $882 Standard Edition or the $6,155 Datacenter Edition. Either one is way too much for such a standard feature.

What the hell, Microsoft? All I want is a checksumming file system. Either provide it, or don’t. Don’t give me a checksumming filesystem that can’t be Continue reading

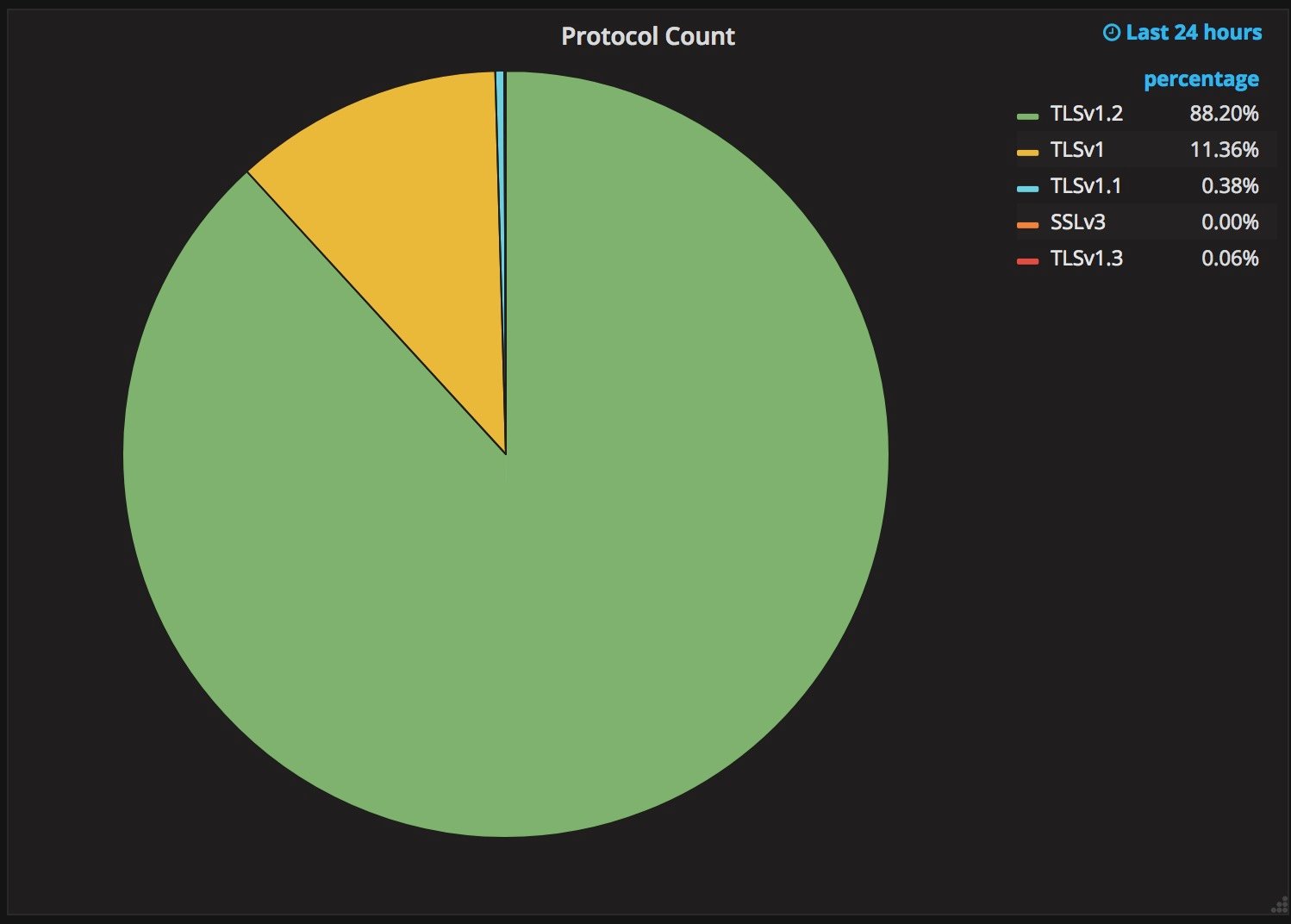

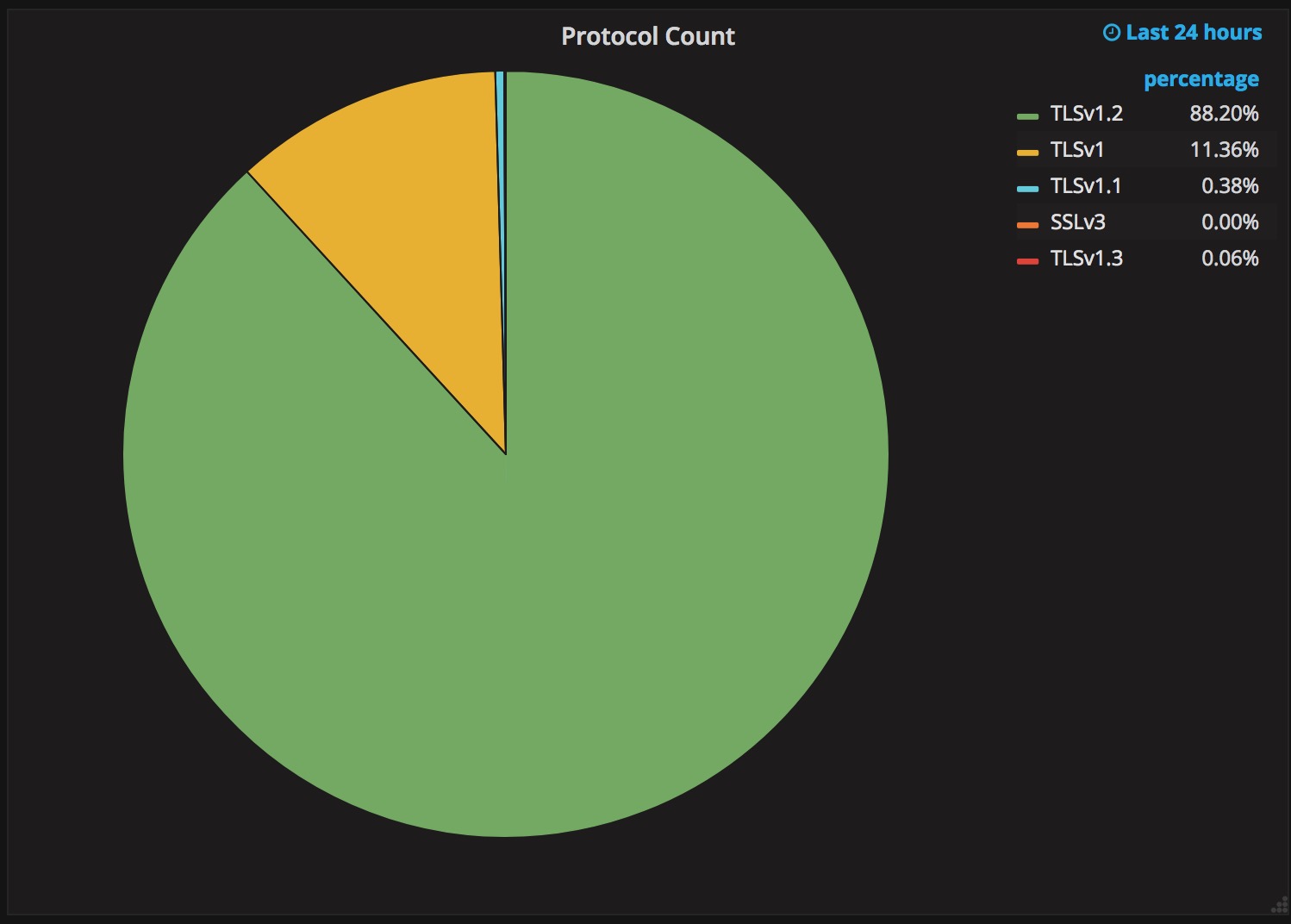

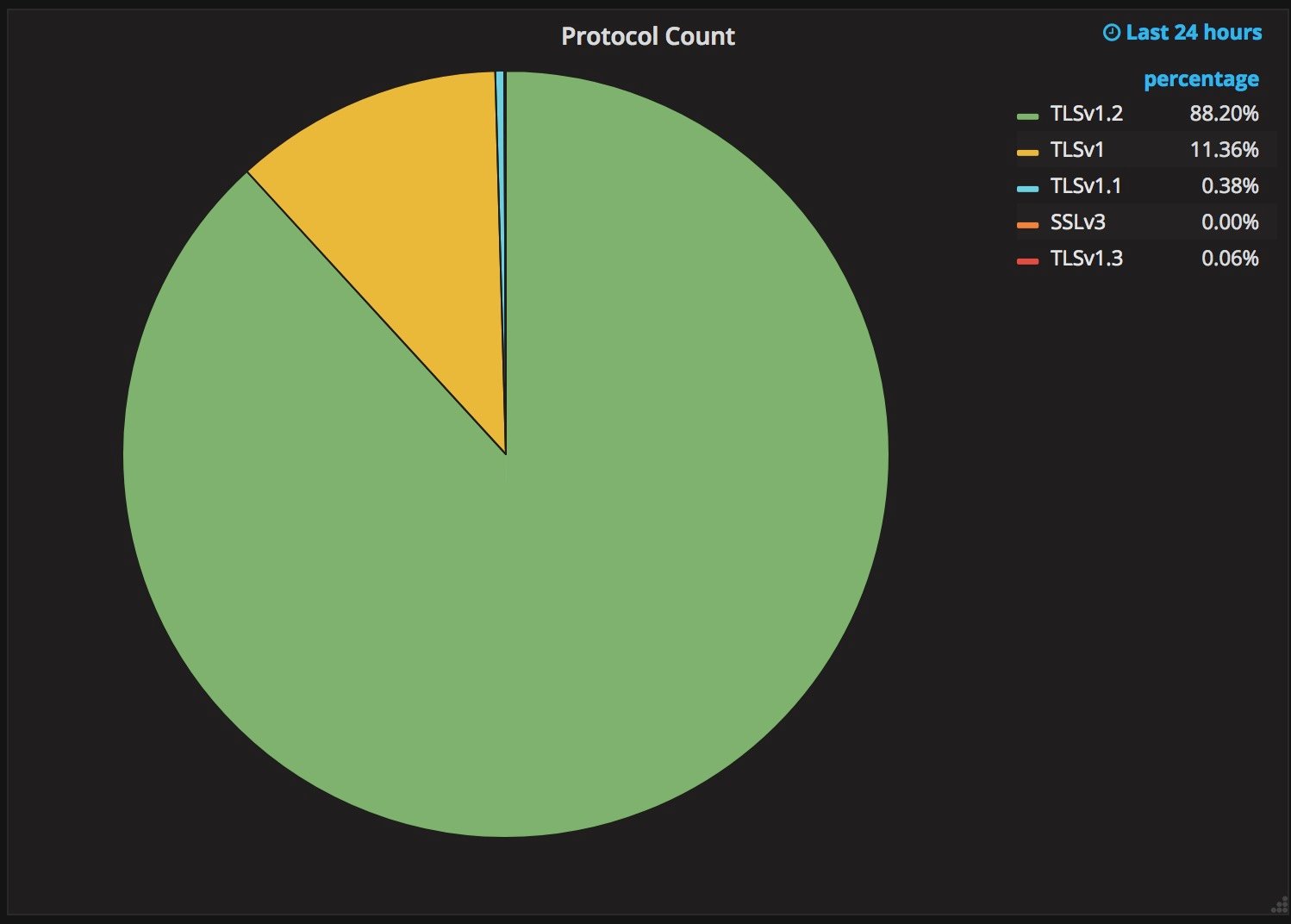

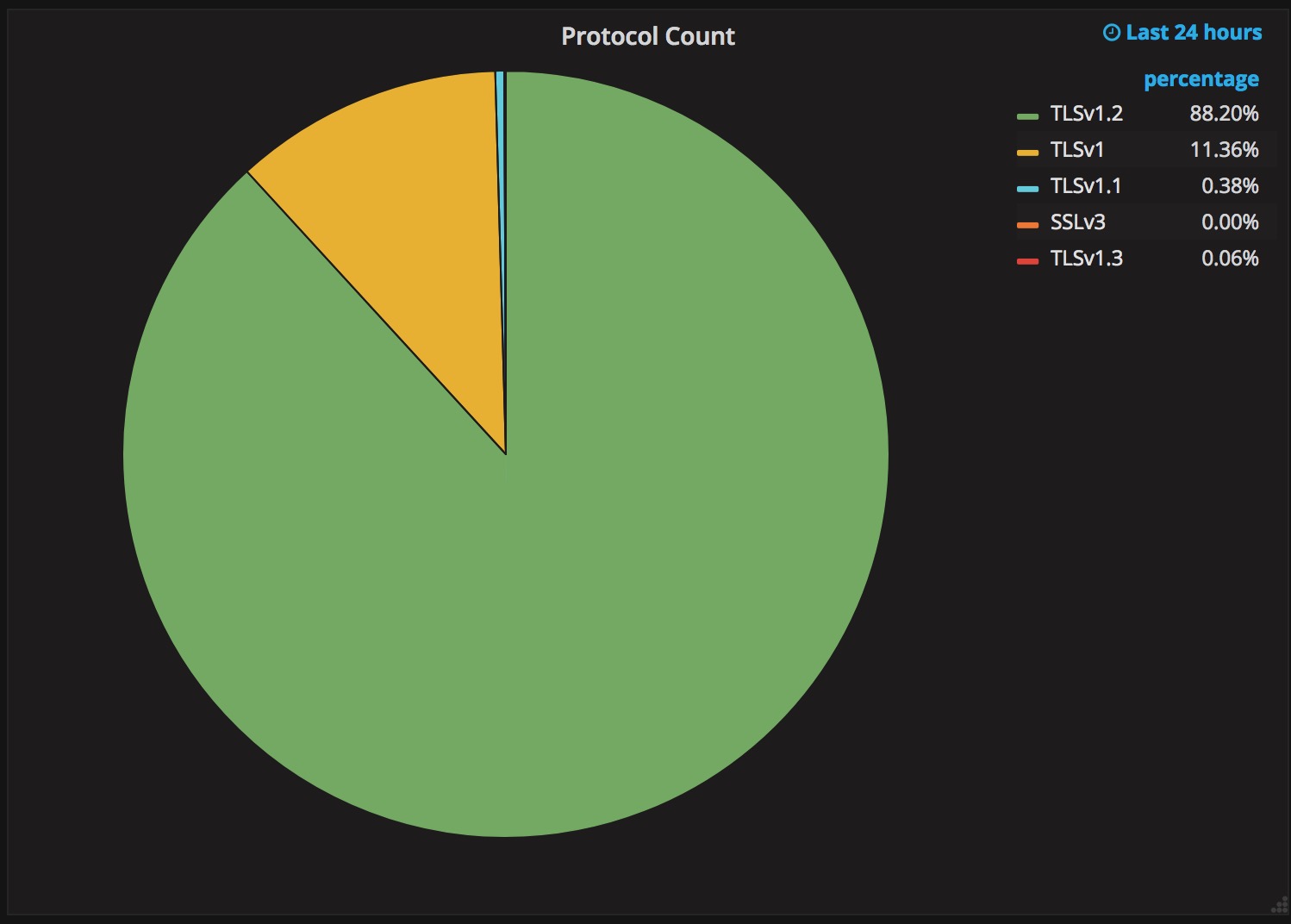

Why TLS 1.3 isn’t in browsers yet

Upgrading a security protocol in an ecosystem as complex as the Internet is difficult. You need to update clients and servers and make sure everything in between continues to work correctly. The Internet is in the middle of such an upgrade right now. Transport Layer Security (TLS), the protocol that keeps web browsing confidential (and that many people persist in calling SSL), is getting its first major overhaul with the introduction of TLS 1.3. Last year, Cloudflare was the first major provider to support TLS 1.3 by default on the server side. We expected the client side would follow suit and be enabled in all major browsers soon thereafter. It has been over a year since Cloudflare’s TLS 1.3 launch and still, none of the major browsers have enabled TLS 1.3 by default.

The reductive answer to why TLS 1.3 hasn’t been deployed yet is middleboxes: network appliances designed to monitor and sometimes intercept HTTPS traffic inside corporate environments and mobile networks. Some of these middleboxes implemented TLS 1.2 incorrectly and now that’s blocking browsers from releasing TLS 1.3. However, simply blaming network appliance vendors would be disingenuous. The deeper truth of the Continue reading


Concise (Post-Christmas) Cryptography Challenges

It's the day after Christmas; or, depending on your geography, Boxing Day. With the festivities over, you may still find yourself stuck at home and somewhat bored.

Either way, here are three relatively short cryptography challenges you can use to keep yourself momentarily occupied. Other than the hints (and some internet searching), you shouldn't require particularly deep cryptography knowledge to start diving into these challenges. For hints and spoilers, scroll down below the challenges!

Challenges

Password Hashing

The first one is simple enough to explain; here are 5 hashes (from user passwords), crack them:

$2y$10$TYau45etgP4173/zx1usm.uO34TXAld/8e0/jKC5b0jHCqs/MZGBi

$2y$10$qQVWugep3jGmh4ZHuHqw8exczy4t8BZ/Jy6H4vnbRiXw.BGwQUrHu

$2y$10$DuZ0T/Qieif009SdR5HD5OOiFl/WJaDyCDB/ztWIM.1koiDJrN5eu

$2y$10$0ClJ1I7LQxMNva/NwRa5L.4ly3EHB8eFR5CckXpgRRKAQHXvEL5oS

$2y$10$LIWMJJgX.Ti9DYrYiaotHuqi34eZ2axl8/i1Cd68GYsYAG02Icwve
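Before attempting to crack the hashes, it can help to read off what each one encodes. The sketch below assumes the standard 60-character bcrypt layout (`$` + two-character version + `$` + two-digit cost + `$` + 22-character salt + 31-character digest); the struct and function names are hypothetical, chosen only for this illustration.

```c
#include <assert.h>
#include <string.h>

/* Fields of a bcrypt hash string (names are illustrative). */
struct bcrypt_fields {
    char version[3];  /* e.g. "2y" */
    int  cost;        /* log2 of the number of key-expansion rounds */
    char salt[23];    /* 22 characters, bcrypt's own base64 alphabet */
    char digest[32];  /* 31 characters, bcrypt's own base64 alphabet */
};

/* Split a 60-character bcrypt string into its fields.
   Returns 0 on success, -1 if the layout does not match. */
static int parse_bcrypt(const char *hash, struct bcrypt_fields *out)
{
    /* The length check comes first so later indexing stays in bounds. */
    if (strlen(hash) != 60 ||
        hash[0] != '$' || hash[3] != '$' || hash[6] != '$')
        return -1;
    if (hash[4] < '0' || hash[4] > '9' || hash[5] < '0' || hash[5] > '9')
        return -1;

    memcpy(out->version, hash + 1, 2);
    out->version[2] = '\0';
    out->cost = (hash[4] - '0') * 10 + (hash[5] - '0');
    memcpy(out->salt, hash + 7, 22);
    out->salt[22] = '\0';
    memcpy(out->digest, hash + 29, 31);
    out->digest[31] = '\0';
    return 0;
}
```

For the hashes listed above, the cost field is 10, which corresponds to 2^10 = 1,024 key-expansion rounds per guess. Each hash also carries its own salt, so candidate passwords have to be tested against each hash separately.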

HTTP Strict Transport Security

A website redirects its www. subdomain to a regional subdomain (e.g. uk.) and uses HSTS to prevent SSLStrip attacks. The cURL requests below show the headers returned along the redirect chain. How would you practically go about stripping HTTPS in this example?

    $ curl -i http://www.example.com
    HTTP/1.1 302 Moved Temporarily
    Server: nginx
    Date: Tue, 26 Dec 2017 12:26:51 GMT
    Content-Type: text/html
    Transfer-Encoding: chunked
    Connection: keep-alive
    location: https://uk.example.com/

    $ curl -i http://uk.example.com
    HTTP/1.1 200 OK
    Server: nginx
    Content-Type: text/html;

Continue reading