Apples for Apples: Measuring the Cost of Public vs. Private Clouds

Before making a choice of where to place workloads, enterprises need a consistent way to compare public and private cloud costs.

Path Hunting in BGP

BGP is a path vector protocol, which makes it similar to distance vector protocols such as RIP. Unlike link state protocols such as OSPF and IS-IS, protocols like these are not aware of the full topology. They can only act on information received from peers. Information is not flooded the way it is in link state IGPs, where a change in connectivity is immediately flooded and triggers an SPF run. With distance and path vector protocols, good news travels fast and bad news travels slow. What does this mean? What does it have to do with path hunting?

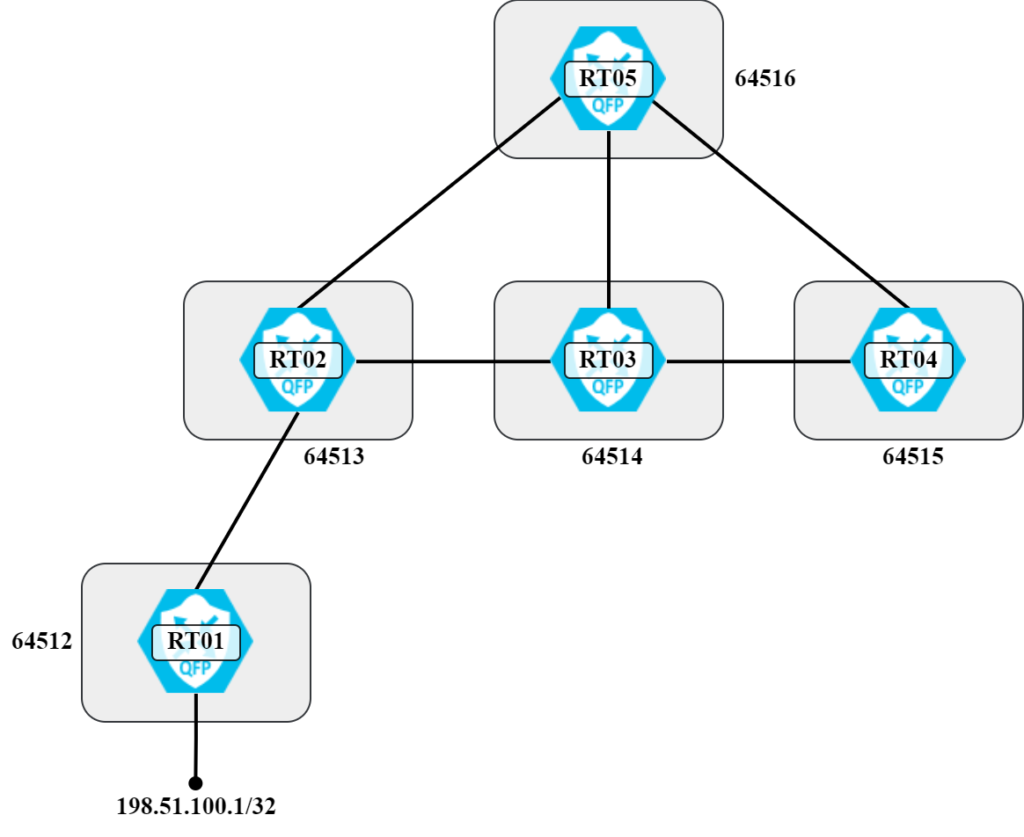

To explain what path hunting is, let’s work with the topology below:

With how the routers and autonomous systems are connected, under stable conditions RT05 will have prefix 198.51.100.1/32 via the following AS paths:

- 64513 64512

- 64514 64513 64512

- 64515 64514 64513 64512
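The ordering of these three paths can be sketched in a few lines of Python. This is only an illustration, not how a router implements it: it assumes AS path length is the sole tie-breaker (real BGP best-path selection evaluates attributes such as local preference first), and the names `best_path` and `hunt` are hypothetical helpers introduced here. The `hunt` generator shows which path would take over each time the current best one is withdrawn.

```python
# AS paths RT05 holds for 198.51.100.1/32, as listed above (deliberately unsorted).
paths = [
    (64515, 64514, 64513, 64512),
    (64513, 64512),
    (64514, 64513, 64512),
]


def best_path(candidates):
    """Return the candidate with the shortest AS path, or None if there are none.

    Assumption: AS path length is the only criterion being compared.
    """
    return min(candidates, key=len, default=None)


def hunt(candidates):
    """Yield each successive best path as the previous best is withdrawn."""
    remaining = list(candidates)
    while remaining:
        best = best_path(remaining)
        yield best
        remaining.remove(best)


hunt_order = list(hunt(paths))
for path in hunt_order:
    print(" ".join(str(asn) for asn in path))
```

Each withdrawal of the current best path promotes the next-shortest one, so the router walks through progressively longer paths before the prefix finally disappears.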

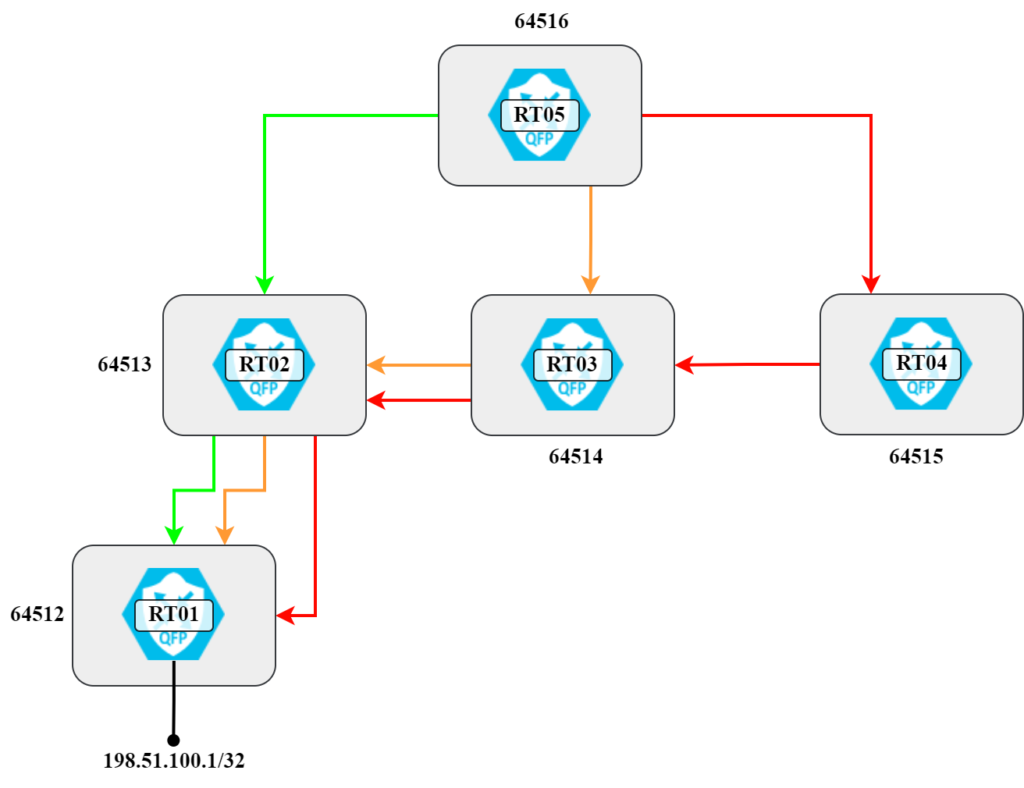

This is also shown visually in the following diagram:

There’s a total of three different paths, sorted from shortest to longest. Let’s verify this:

RT05#sh bgp ipv4 uni

BGP table version is 11, local router ID is 192.168.128.174

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Continue reading

MPLS-Auto-BW-Junos

In this write-up, I cover how to design and apply the MPLS Auto-Bandwidth feature set on Junos platforms.

https://github.com/kashif-nawaz/MPLS-Auto-BW-Junos

Worth Reading: Some Thoughts on Digital Twins

In the last few months, I encountered several articles explaining the challenges of simulating your network in a virtual lab, including:

- Some thoughts on digital twins by Jeff McLaughlin

- Network Simulation is hard – Part 1, Part 2 – by Pete Crocker

- Twenty-five open-source network emulators and simulators you can use in 2023 by Brian Linkletter

Enjoy!

Worth Reading: Introduction of EVPN at DE-CIX

Numerous Internet Exchange Points (IXPs) started using VXLAN years ago to replace traditional layer-2 fabrics with routed networks. Many of them tried to avoid the complexities of EVPN and used VXLAN with statically-configured (and hopefully automated) ingress replication.

A few went a step further and decided to deploy EVPN, primarily to use Proxy ARP functionality on EVPN switches and reduce the ARP/ND traffic. Thomas King from DE-CIX described their experience on the APNIC blog – well worth reading if you’re interested in layer-2 fabrics.

Heavy Networking 704: Roundtable Redux: Blaming The Network; Containerlab Love; 400G Envy

Today's Heavy Networking is another roundtable episode. We've assembled a group of network engineers to talk about what's on their minds. Topics today include why other IT departments and end users are quick to blame the network first, and what can be done about it; using Nokia's open-source Containerlab for testing and development work; and why you shouldn't feel left behind when you hear talk about 400G and 800G networks.

The post Heavy Networking 704: Roundtable Redux: Blaming The Network; Containerlab Love; 400G Envy appeared first on Packet Pushers.

Calico monthly roundup: September 2023

Welcome to the Calico monthly roundup: September edition! From open source news to live events, we have exciting updates to share—let’s get into it!

Discover how you can automate security, ensure consistency, and tightly align security with development practices in a microservices environment.

The State of Calico Open Source: Usage & Adoption Report 2023 – Get insights into Calico’s adoption across container and Kubernetes environments, in terms of platforms, data planes, and policies.

Open source news

- Share your Calico Open Source journey and win a $25.00 gift card – Your journey with Calico Open Source matters to us! Share your experience and insight on how you solve problems and build your network security using Calico. Book a meeting to tell us about your journey and where you seek information. As a thank you, we’ll send you a $25.00 gift card!

- Calico Live – Join the Calico community every Wednesday at 2:00 pm ET for a live discussion about learning how to leverage Calico and Kubernetes for networking and security. We will explore Kubernetes security and policy design, network flow logs and more. Join Continue reading

Global NaaS Event Roundup: Taking NaaS and SASE Forward

MEF announced the industry’s first NaaS blueprint, a SASE certification program, and an enterprise leadership council.

Virtual networking 101: Bridging the gap to understanding TAP

It's a never-ending effort to improve the performance of our infrastructure. As part of that quest, we wanted to squeeze as much network oomph as possible from our virtual machines. Internally, for some projects we use Firecracker, which is a KVM-based virtual machine manager (VMM) that runs light-weight “Micro-VM”s. Each Firecracker instance uses a tap device to communicate with a host system. Not knowing much about tap, I had to up my game. It wasn't easy, though: the documentation is messy and spread across the Internet.

Here are the notes that I wish someone had passed me when I started out on this journey!

A tap device is a virtual network interface that looks like an ethernet network card. Instead of having real wires plugged into it, it exposes a nice handy file descriptor to an application willing to send/receive packets. Historically, tap devices were mostly used to implement VPN clients. The machine would route traffic towards a tap interface, and a VPN client application would pick up the packets and process them accordingly. For example, this is what our Cloudflare WARP Linux client does. Here's how it looks on my laptop:

$ ip link list

...

18: CloudflareWARP: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> Continue reading

Video: What Is Software-Defined Data Center

A few years ago, I was asked to deliver a What Is SDDC presentation that later became a webinar. I forgot about that webinar until I received feedback from one of the viewers a week ago:

If you like to learn from the teachers with the “straight to the point” approach and complement the theory with many “real-life” scenarios, then ipSpace.net is the right place for you.

I hadn’t realized people still find that webinar useful, so let’s make it viewable without registration, starting with What Problem Are We Trying to Solve and What Is SDDC.

Transforming Container Network Security with Calico Container Firewall
