SDN Analytics and Orchestration from the 17th Annual SDN/MPLS Conference

by Steve Harriman, VP of Marketing - November 11, 2014

Last week at the SDN/MPLS [1] conference in Washington, D.C., large service providers, research organizations and academia, and equipment manufacturers from around the world gathered to hear about the latest SDN/NFV developments. Cengiz Alaettinoglu, Packet Design’s CTO, contributed his insights and experience by presenting at the conference on “SDN Analytics: Bridging Overlay and Underlay Networks.” His premise is that underlay routing issues will impact overlay network performance, thus creating the need for SDN analytics to correlate the two and provide management visibility.

Figure 1. SDN Analytics can correlate the impact of underlay network issues on overlay performance.

- Topology (IGP, BGP, RSVP-TE, L2/3 VPNs, OpenFlow tables)

- Traffic (real-time and historical traffic matrices, and projected demands)

- Performance (jitter, packet delay/loss, MOS scores, […]
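Here is a minimal sketch of the correlation premise. It assumes the analytics system already knows which underlay links each overlay tunnel traverses, for example as derived from the IGP/BGP topology listed above. The class, function, tunnel names and link IDs are hypothetical, and this is not Packet Design's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OverlayTunnel:
    """A hypothetical overlay tunnel and the underlay links its traffic rides on."""
    name: str
    underlay_path: tuple[str, ...]  # ordered underlay link IDs


def impacted_tunnels(tunnels: list[OverlayTunnel], degraded_links: set[str]) -> dict[str, list[str]]:
    """Map each overlay tunnel that crosses a degraded underlay link to those links."""
    impact: dict[str, list[str]] = {}
    for tunnel in tunnels:
        hits = [link for link in tunnel.underlay_path if link in degraded_links]
        if hits:
            impact[tunnel.name] = hits
    return impact


tunnels = [
    OverlayTunnel("tenant-a-dc1-dc2", ("dc1-p1", "p1-p2", "p2-dc2")),
    OverlayTunnel("tenant-b-dc1-dc3", ("dc1-p1", "p1-p3", "p3-dc3")),
]
print(impacted_tunnels(tunnels, {"p1-p2"}))  # {'tenant-a-dc1-dc2': ['p1-p2']}
```

A real system would feed the degraded-link set from the performance data, such as loss or delay on a link.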

The Best Presentations on SDN Analytics and Wide Area Orchestration at SDN/MPLS 2014

by Cengiz Alaettinoglu, CTO - November 11, 2014

I attended the SDN/MPLS conference in Washington, D.C. last week, where I presented on the importance of analytics for WAN SDN application bandwidth scheduling and the need for even richer analytics when looking at the data center, network edge and WAN SDN holistically. In my presentation I highlighted the importance of accurate traffic demand matrices and the need to consider failures when selecting paths, so that the network can survive them without creating congestion. I was not the only one talking about WAN orchestration and analytics.
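The point about considering failures can be illustrated with a small survivability check. This is a minimal sketch under stated assumptions: each demand has a precomputed primary and backup path, only single-link failures are considered, and the topology, rates and function names are invented. It is not the path-selection method from the presentation.

```python
from collections import defaultdict

# Hypothetical inputs: link capacities (Gbps) and demands with precomputed paths.
capacity = {"A-B": 10.0, "B-C": 10.0, "A-C": 10.0}
demands = [
    # (name, rate_gbps, primary_path, backup_path)
    ("d1", 6.0, ["A-B", "B-C"], ["A-C"]),
    ("d2", 6.0, ["A-C"], ["A-B", "B-C"]),
]


def link_loads(failed_link=None):
    """Sum demand rates per link, moving demands off the failed link onto their backup path."""
    load = defaultdict(float)
    for _name, rate, primary, backup in demands:
        path = primary if failed_link not in primary else backup
        if failed_link in path:
            continue  # both paths cross the failed link: unroutable in this sketch
        for link in path:
            load[link] += rate
    return load


def congested_failures():
    """Return {failed_link: [overloaded links]} for every single-link failure."""
    report = {}
    for failed in capacity:
        load = link_loads(failed)
        over = sorted(link for link, value in load.items() if value > capacity[link])
        if over:
            report[failed] = over
    return report


print(congested_failures())
# {'A-B': ['A-C'], 'B-C': ['A-C'], 'A-C': ['A-B', 'B-C']}
```

With no failure, every link carries 6 Gbps and fits. Any single failure pushes some link to 12 Gbps, which is the congestion a failure-aware path choice is meant to avoid.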

One of the most interesting presentations in my opinion was by Douglas Freimuth of IBM. Douglas presented his work titled “Orchestrated Bandwidth-on-Demand for Cloud Services.” It is a collaboration between IBM, Ciena, and AT&T. They carried out the work in a laboratory test bed.

In the test bed, there were three data centers (Los Angeles, New York and Chicago) running OpenStack. When VM workload in the Los Angeles data center exceeded a threshold, some of the VMs were moved to the New York data center to reduce the load. […]

How to IT: Chasing the Certs

Let me kick this blog off with a little bit of background information about me. I am an IT worker born and raised in Houston, TX. I […]

CloudFlare and SHA-1 Certificates

At CloudFlare, we’re dedicated to ensuring sites are not only secure, but also available to the widest audience. In the coming months, both Google’s Chrome browser and Mozilla’s Firefox browser are changing their policy with respect to certain web site certificates. We are aware of these changes, and we have modified our SSL offerings to ensure customer sites continue to be secure and available to all visitors.

Chrome (and Firefox) and SHA-1

In upcoming versions, Google will change the way its Chrome browser treats certain web site certificates based on their digital signature. These changes affect over 80% of websites.

As described in our blog post on CFSSL, web site certificates are organized using a chain of trust. Digital signatures are the glue that connects the certificates in the chain. Each certificate is digitally signed by its issuer using a digital signature algorithm defined by the type of key and a cryptographic hash function (such as MD5, SHA-1, SHA-256).
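The hash function is the part of the signature algorithm that these browser changes are about. The Python sketch below uses only the standard `hashlib` module to show how digest length grows from MD5 to SHA-1 to SHA-256. The input bytes are a placeholder, not a real certificate.

```python
import hashlib

# A real certificate signature covers a digest of the DER-encoded TBSCertificate;
# these bytes are only a stand-in so the digests can be compared.
tbs_bytes = b"example to-be-signed certificate bytes"

DIGEST_BITS = {
    name: hashlib.new(name, tbs_bytes).digest_size * 8
    for name in ("md5", "sha1", "sha256")
}

for name, bits in DIGEST_BITS.items():
    print(f"{name:>6}: {bits}-bit digest")
```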

Starting in Chrome 39 (to be released this month, November 2014), certificates signed with a SHA-1 signature algorithm will be considered less trusted than those signed with a more modern SHA-2 algorithm. This change […]
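Because the change depends on the signature algorithm of each certificate in the chain, a quick audit can flag the SHA-1-signed links. This is a minimal sketch with three assumptions. The signature algorithm names have already been extracted (the OpenSSL-style names shown). The list holds only the leaf and intermediates, not the root. The helper name is hypothetical. It does not reproduce Chrome's exact rules.

```python
import re

# Matches "sha1" / "SHA-1" but not the SHA-2 family ("sha256", "sha384", "sha512").
_SHA1 = re.compile(r"sha-?1(?!\d)", re.IGNORECASE)


def sha1_signed(chain):
    """Return subjects whose signature algorithm uses SHA-1.

    `chain` is a list of (subject, signature_algorithm) pairs for the leaf and
    intermediates only (an assumption of this sketch; the root is excluded).
    """
    return [subject for subject, sig_alg in chain if _SHA1.search(sig_alg)]


chain = [
    ("www.example.com", "sha256WithRSAEncryption"),
    ("Example Intermediate CA", "sha1WithRSAEncryption"),
]
print(sha1_signed(chain))  # ['Example Intermediate CA']
```

Note that a SHA-256 leaf can still be affected if an intermediate above it is SHA-1-signed, which is why the whole chain is checked.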

What The Juniper Learning Portal Offers For Free

I’ve been working with Juniper SRX firewalls, MX routers, and EX switches for over a year now. I don’t spend a ton of time at the CLI. Mostly, I have some project I need to accomplish, so I do my homework, mock up in a lab what I’m able to, and wing the rest. […]

Andrisoft Wanguard: Cost-Effective Network Visibility

Andrisoft Wansight and Wanguard are tools for network traffic monitoring, visibility, anomaly detection and response. I’ve used them, and I think they do a good job for a reasonable price.

Wanguard Overview

There are two flavours to what Andrisoft does: Wansight for network traffic monitoring, and Wanguard for monitoring and response. They both use the same underlying components; the main difference is that Wanguard can actively respond to anomalies (DDoS, etc.).

Andrisoft monitors traffic in several ways: it can do flow monitoring using NetFlow/sFlow/IPFIX, or it can work in inline mode and do full packet inspection. Once everything is set up, all configuration and reporting is done from a console. This can be on the same server you’re using for flow collection, or you can use a distributed setup.
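Andrisoft's own detection logic isn't described here, so the following is only a generic illustration of flow-based detection. It is a minimal sketch that assumes flow records have already been decoded into (destination, packets) pairs for one fixed interval, and it flags destinations whose packet rate crosses a fixed threshold. The interval, threshold, addresses and names are hypothetical. This is not Wanguard's algorithm or configuration.

```python
from collections import Counter

# Hypothetical flow records decoded from NetFlow/sFlow/IPFIX for one interval.
flows = [
    ("192.0.2.10", 120_000),
    ("192.0.2.10", 95_000),
    ("198.51.100.7", 800),
]

WINDOW_SECONDS = 10      # assumed aggregation interval
PPS_THRESHOLD = 15_000   # assumed per-destination packets/second alarm level


def anomalous_destinations(records, window=WINDOW_SECONDS, threshold=PPS_THRESHOLD):
    """Aggregate packets per destination and return those whose rate exceeds the threshold."""
    packets = Counter()
    for dst, pkts in records:
        packets[dst] += pkts
    return {dst: total / window for dst, total in packets.items() if total / window > threshold}


print(anomalous_destinations(flows))  # {'192.0.2.10': 21500.0}
```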

The software is released as packages that can run on pretty much any mainstream Linux distro. It can run on a VM or on physical hardware. If you’re processing a lot of data, you will need plenty of RAM and good disk. VMs are fine for this, provided you have the right underlying resources. Don’t listen to those who still cling to their physical boxes. They lost.

Anomaly Detection

You […]

Lessons Learned from Deploying Multicast

Lately I have been working a lot with multicast, which is fun and challenging! Even if you have a good understanding of multicast, unless you work with it a lot, some concepts may fall out of memory, and others you only run into in real life rather than in the lab. Here is a summary of some things I’ve noticed so far.

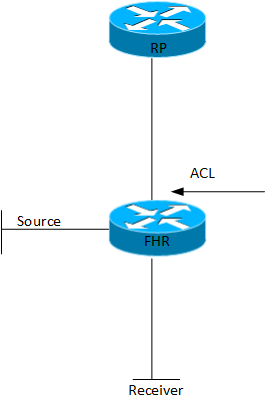

PIM Register

PIM Register messages are control plane messages sent from the First Hop Router (FHR) towards the Rendezvous Point (RP). They are unicast messages that encapsulate the multicast traffic from the source. This raises some considerations. First, because these packets are sent from the FHR control plane to the RP control plane, they are not subject to any access list configured outbound on the FHR. I had a situation where I wanted to route the multicast locally but not send it outbound.

Even if the ACL had worked, care would have to be taken not to break the control plane between the FHR and the RP, or all multicast traffic for the group would be in jeopardy.
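To make the encapsulation concrete, here is a minimal Python sketch of the PIM Register message body, following the packet layout in RFC 7761 (PIM-SM). The function names are hypothetical. The sketch omits the outer unicast IP header (IP protocol 103, FHR to RP), and it is not how any particular router builds the packet.

```python
import struct


def internet_checksum(data: bytes) -> int:
    """RFC 1071 one's-complement checksum over 16-bit words."""
    if len(data) % 2:
        data += b"\x00"
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def pim_register(inner_packet: bytes, border: bool = False, null_register: bool = False) -> bytes:
    """Build a PIMv2 Register message carrying the original multicast packet.

    Layout: 4-byte PIM header (version 2, type 1 = Register), a 32-bit word
    holding the B and N flag bits, then the encapsulated multicast packet.
    For Register messages the checksum covers only these first 8 bytes.
    """
    flags = (int(border) << 31) | (int(null_register) << 30)
    head = struct.pack("!BBHI", (2 << 4) | 1, 0, 0, flags)  # checksum zeroed first
    checksum = internet_checksum(head)
    return head[:2] + struct.pack("!H", checksum) + head[4:] + inner_packet
```

From the RP's point of view, this is a unicast packet addressed to itself that its control plane must decapsulate.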

PIM Register messages are control plane messages, which means that the RP has to process them […]

SDN, Network Virtualization and Hypervisors

Packet Pushers sponsor Pluribus Networks sent along Robert Drost to bring us this blog post. He’s a pretty interesting guy. Robert Drost was a Sr. Distinguished Engineer and Director of Advanced Hardware at Sun Microsystems. Robert has extensive hardware experience, including over 90 patents and a 17-year career in high-performance computing systems. Among other recognitions, […]

The post SDN, Network Virtualization and Hypervisors appeared first on Packet Pushers Podcast and was written by Sponsored Blog Posts.

Network Management Should Be Way Easier

by Kevin Dooley, originally published on the Auvik blog

Network management is so often ridiculously complicated. Naturally I have mixed […]

End-Of-Sale date announced for various Cisco IPS’s

Since Cisco started announcing the Sourcefire FirePower (Hardware & Software) modules earlier this year, I have been wondering what was going to happen to their existing IPS line. It looks like the End-of-Sale announcement was recently made, with an EoS date in April of next year. The EoX announcement affects both the IPS Modules […]

SDN Jobs Vs. Traditional Jobs, 3QCY14

Is the number of #SDN jobs catching up to traditional networking skills, for instance, jobs that require OSPF skills? Today’s post wraps up this short series about the SDN job market in the 3rd quarter of 2014 with a comparison of the number of SDN jobs versus other search terms. Other posts in the series for this quarter:

I Wanted to Know, and Thought You Might as Well

Let’s say the SDN numbers show us 25 new jobs/week in the US. Is that a lot? Not many? I have no idea. So I pondered how we could get some perspective, with just a little effort (translated: only a little time and money). The solution seemed obvious: track some traditional networking terms with the same kinds of searches that we were already tracking with “SDN”.

So, we’ve been tracking a few other terms for a while now:

- CCIE

- CCNP

- OSPF

Note that we didn’t track each type of CCIE or CCNP separately, but simply that single term. So our data counts any and every job with CCIE in the title or description, with a separate counter for CCNP.
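The counting rule above gives one hit per posting per term, whether the term appears in the title or the description. It can be sketched as follows. The whole-word, case-sensitive matching is an assumption, the sample postings are invented, and this is not the search tool actually used for these numbers.

```python
import re

TERMS = ("SDN", "CCIE", "CCNP", "OSPF")


def count_postings(postings, terms=TERMS):
    """Count postings whose title or description mentions each term, once per posting.

    Matching is whole-word and case-sensitive (an assumption of this sketch), so
    "CCIE Routing & Switching" counts toward CCIE but "CCIEs" does not.
    """
    counts = dict.fromkeys(terms, 0)
    for title, description in postings:
        text = f"{title}\n{description}"
        for term in terms:
            if re.search(rf"\b{re.escape(term)}\b", text):
                counts[term] += 1
    return counts


postings = [
    ("Network Engineer (CCNP required)", "Run OSPF and BGP across our WAN."),
    ("SDN Architect", "CCIE preferred; OpenFlow and OSPF experience a plus."),
]
print(count_postings(postings))  # {'SDN': 1, 'CCIE': 1, 'CCNP': 1, 'OSPF': 2}
```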

However, […]

Source-Driven Configuration for NetOps

I mentioned in a previous post that version control is an important component of efficiently managing network infrastructure. I’m going to take it a step further than what most are doing with RANCID, which is traditionally used at the end of a workflow (gathering running config diffs), and show you what it’s like to start with version-controlled configuration artifacts, specifically using Ansible’s “template” module.

I’m not going to discuss how you get the resulting configurations actually running on your network devices – that is best saved for another post. This is more focused on using version control and review workflows to initiate what will eventually turn into a networking-centric CI pipeline.

Config Review and Versioning with Gerrit

Let’s say you are the Senior Network Engineer for your entire company, which boasts a huge network. You don’t have time to touch every device, so you have a team of junior-level network engineers who help you out with move/add/change kinds of tasks. You’ve already moved your configurations into Jinja2 templates, and have created an Ansible role that takes care of moving configuration variables into a rendered […]
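The core idea can be sketched in a few lines: render the intended configuration from version-controlled templates, then review it as a diff before anything touches a device. This is a minimal sketch under stated assumptions. Python's `string.Template` stands in for Jinja2 and Ansible's `template` module so the example runs with the standard library. The interface variables are hypothetical. The diff shows what a reviewer might see in a Gerrit change, not Gerrit's own output.

```python
import difflib
from string import Template

# Stand-in for a version-controlled Jinja2 template (string.Template used only
# so this sketch needs nothing outside the standard library).
INTERFACE_TEMPLATE = Template(
    "interface $name\n"
    " description $description\n"
    " ip address $address $mask\n"
)


def render(variables: dict) -> str:
    """Render the intended configuration from template variables."""
    return INTERFACE_TEMPLATE.substitute(variables)


def review_diff(old_vars: dict, new_vars: dict) -> str:
    """Unified diff of the rendered configs, as a reviewer would see it."""
    old, new = render(old_vars), render(new_vars)
    return "".join(difflib.unified_diff(
        old.splitlines(keepends=True), new.splitlines(keepends=True),
        fromfile="rendered/before", tofile="rendered/after",
    ))


before = {"name": "Gi0/1", "description": "uplink", "address": "10.0.0.1", "mask": "255.255.255.252"}
after = dict(before, description="uplink-to-core")
print(review_diff(before, after))
```

The junior engineer changes only the variables; the reviewer approves the rendered difference, which keeps the template itself the single source of truth.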

Teambuilding. Whisky Tango Foxtrot? Check.

If you’ve ever done a network audit or a stock inventory check, you’ll know that it is possibly one of the most boring activities you could undertake, unless the stock you’re checking is particularly salacious, I suppose. Certainly it’s …

Leading Disruption

My entire career has been spent finding disruption and cultivating the technologies needed to convert that disruption into real business value for customers. It is with that objective in mind that I am thrilled to join the Plexxi team as Chief Executive Officer, alongside my good friend and colleague Dave Husak, who will lead our product development efforts.

We are in a unique moment in time, with massive technological and business model changes underway in parallel. Everything we know about compute, storage, networking, and applications is in transformation. Changes like this have not occurred in over twenty years. And change of this magnitude breeds opportunity.

My decision to join Plexxi was actually many months in the making. In my previous job leading EMC’s Unified Storage Division, I drove over $30B in revenue during my tenure, with over 2000 people in the global organization for which I was responsible. In that role, I had a fairly unique vantage point of the IT industry as a whole. I certainly spent time viewing the landscape from my position within a major infrastructure manufacturer. But I also got to engage with channel and technology partners across the entire IT spectrum to see how they […]

The Degree or the Certification: Learn to See

This week I was reading through various RSS feeds and ran across a couple that fell within the scope of last week’s topic. So, rather than moving on to more practical concerns as I had planned, I thought I should respond to some common lines of thinking.

First of all, the IT space is in constant change, and the speed of change is just increasing. That change manifests itself in new technologies coming about, and new processes associated with the technologies. Secondly is work experience: What you’ve done in the past is not necessarily useful for the future. Like in the financial realm, where it’s recognized that past performance is no guarantee of future performance, it’s also true in the work environment. When you look at past experience, it’s already dated, from a technology perspective. -IT Business Edge

Now, I’m not one to argue with the idea that the IT world is always changing. Certainly new technologies come, and old technologies go. As the saying goes, legacy just means what you’re currently installing. And certainly there will always be a need to learn the new language, the new command line, the new hardware choices, the […]

Automated Network Diagrams with Schprokits & AutoNetkit

In this article, I’m going to explore not only working with Schprokits, but also working with AutoNetkit. AutoNetkit, part of the PhD thesis work of Simon Knight, is an application and framework for modeling network devices, both from a configuration and visualization/diagramming standpoint. Some of […]

The Routing Resilience Manifesto

If you run BGP in your network, you need to think about BGP security. It might not seem important if you’re not a provider, but consider two points. First, if you’re connected to the Internet, making certain your little corner of the Internet is secure is important. Second, no matter where you […]

Handling the Bottom of MPLS Stack

The MPLS bottom-of-stack bit confused one of my readers. In particular, he had trouble with the part where the egress MPLS Label Switch Router (LSR) goes from labeled (MPLS) to unlabeled (IPv4, IPv6) packets and has to figure out what’s in the packet.

[…]
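Here is a sketch of the mechanics. It reads label stack entries (20-bit label, 3-bit traffic class, S bit, 8-bit TTL) until it finds the entry with the bottom-of-stack bit set. It then looks up the payload type in a table of the egress LSR's own label bindings. The MPLS header itself has no payload-type field, so RFC 3032 leaves the egress router to infer the protocol from the label it popped. The binding table, label values and function names are hypothetical.

```python
import struct

# Hypothetical bindings on the egress LSR: the label it advertised tells it what
# sits below the stack. 0 and 2 are the IPv4/IPv6 Explicit NULL labels (RFC 3032).
LOCAL_BINDINGS = {0: "IPv4", 2: "IPv6", 16001: "IPv4", 16002: "IPv6"}


def parse_label_stack(packet: bytes):
    """Return (label stack entries, payload) by reading entries until S == 1.

    Raises struct.error if the packet ends before a bottom-of-stack entry.
    """
    entries, offset = [], 0
    while True:
        (word,) = struct.unpack_from("!I", packet, offset)
        offset += 4
        entry = {"label": word >> 12, "tc": (word >> 9) & 0x7,
                 "s": (word >> 8) & 0x1, "ttl": word & 0xFF}
        entries.append(entry)
        if entry["s"]:
            return entries, packet[offset:]


def encode_entry(label, tc=0, s=0, ttl=64):
    """Pack one 32-bit label stack entry (helper for building test packets)."""
    return struct.pack("!I", (label << 12) | (tc << 9) | (s << 8) | ttl)


pkt = encode_entry(24000) + encode_entry(16001, s=1) + b"\x45" + b"\x00" * 19
stack, payload = parse_label_stack(pkt)
print([e["label"] for e in stack], LOCAL_BINDINGS[stack[-1]["label"]])  # [24000, 16001] IPv4
```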