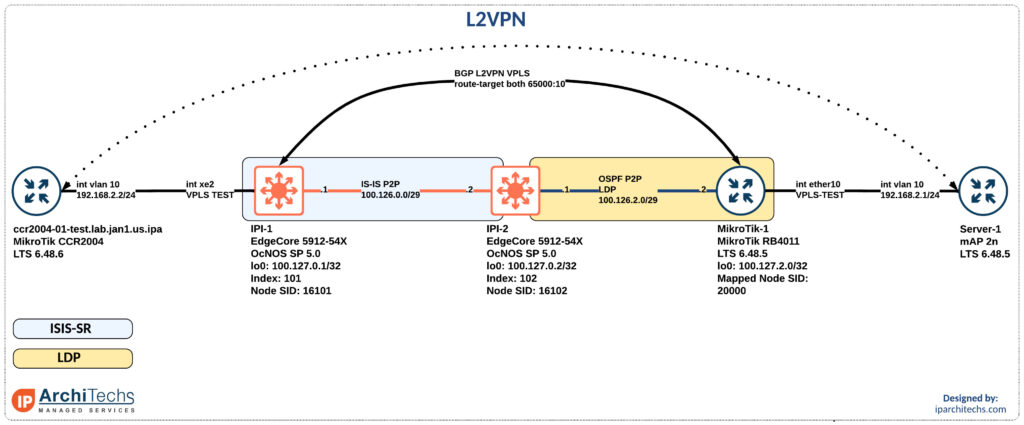

Interop IP Infusion and MikroTik: part 2 – VPLS

VPLS is a pretty common technology in ISPs to either sell layer 2 services or backhaul traffic to a centralized aggregation point to conserve IPv4 space; check out more on that here.

How can I take advantage of segment routing but still deliver the same services? We already looked at how to set up the label-switched paths using IP Infusion as a segment routing mapping server in this interop post. Now let’s see how we can deliver a VPLS service over this with MikroTik as a provider edge router.

Delivering a service with a L2VPN

After setting up the IGP and label distribution between the PEs, we will start building the L2VPN.

Why a BGP signaled VPLS session instead of LDP signaled VPLS?

In the segment-routing domain there is no LDP running. However, some vendors support static pseudowires or other methods to bring up a targeted LDP session for VPLS. I did some basic testing here but couldn’t easily identify the right combination of knobs to make this work. Don’t worry; I’ll come back to it.

BGP-signaled VPLS is a standards-based technology that both vendors support.

First thing we need to do after having loopback reachability is to build Continue reading

Using netsim-tools with containerlab: Welcome to the World of Tomorrow

Julio Perez wrote a wonderful blog post describing how he combined netsim-tools and containerlab to build Arista cEOS labs.

Hint: when you’re done with that blog post, keep reading and add his blog to your RSS feed – he wrote some great stuff in the past.

Using netlab with containerlab: Welcome to the World of Tomorrow

Julio Perez wrote a wonderful blog post describing how he combined netlab and containerlab [1] to build Arista cEOS labs.

Hint: when you’re done with that blog post, keep reading and add his blog to your RSS feed – he wrote some great stuff in the past.

[1] netlab was known as netsim-tools at the time he wrote the blog post.

Rust Notes: Enums

An enum is a type which can be ONE of a FEW different variants.

// Create an instance of the enum variants with the double colon.
let blah = StuffAndThings::Blah;
let bleh = StuffAndThings::Bleh(42);
let stuff = StuffAndThings::Stuff(String::from("Stuff"), true);
let things =...continue reading
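As a rough sketch of how the variants above might be declared and consumed: the excerpt shows only the constructor calls, so the enum definition and its payload types below are inferred from them, and `describe` is a hypothetical helper added for illustration.

```rust
// Assumed definition: the payload types are inferred from the
// constructor calls in the excerpt, not taken from the original post.
#[derive(Debug, PartialEq)]
enum StuffAndThings {
    Blah,
    Bleh(i32),
    Stuff(String, bool),
}

// Hypothetical helper: `match` has to cover every variant.
fn describe(value: &StuffAndThings) -> String {
    match value {
        StuffAndThings::Blah => String::from("blah"),
        StuffAndThings::Bleh(n) => format!("bleh({})", n),
        StuffAndThings::Stuff(text, flag) => format!("stuff({}, {})", text, flag),
    }
}

fn main() {
    let blah = StuffAndThings::Blah;
    let bleh = StuffAndThings::Bleh(42);
    let stuff = StuffAndThings::Stuff(String::from("Stuff"), true);

    for value in [&blah, &bleh, &stuff] {
        println!("{}", describe(value));
    }
}
```

Because `match` is exhaustive, adding a new variant later makes the compiler point at every place that needs to handle it.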

Rust Notes: Hash Maps

A HashMap is a generic collection of key/value pairs. All of the keys must have the same type, and all of the values must have the same type.

HashMap Considerations

A hash map's data is stored on the heap. A hash map's size is not known at compile time; it can grow or shrink at run...continue reading
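A minimal sketch of those points, using only the standard library. The `word_counts` helper and the sample text are made up for illustration: every key is a `String`, every value is a `usize`, and the map grows and shrinks at run time.

```rust
use std::collections::HashMap;

// Hypothetical helper: counts how often each word appears in `text`.
fn word_counts(text: &str) -> HashMap<String, usize> {
    let mut counts = HashMap::new();
    for word in text.split_whitespace() {
        // `entry` inserts 0 the first time a key is seen, then we increment.
        *counts.entry(word.to_string()).or_insert(0) += 1;
    }
    counts
}

fn main() {
    let mut counts = word_counts("stuff and things and stuff");
    println!("{:?}", counts.get("stuff"));

    // The map shrinks at run time when entries are removed.
    counts.remove("and");
    println!("{} keys left", counts.len());
}
```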

Rust Notes: Vectors

A vector is a collection of items of the same type.

Vector Considerations

When an empty vector is created with Vec::new it is unallocated until elements are pushed into it. A vector with elements has allocated memory and lives on the heap. A vector is represented as a pointer to the...continue reading
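The allocation behaviour can be observed with `capacity()`. This is a small sketch rather than anything from the original post, and `squares` is a hypothetical helper.

```rust
// Hypothetical helper: builds a vector of the first `n` square numbers.
fn squares(n: u32) -> Vec<u32> {
    let mut values = Vec::new(); // No heap allocation happens here.
    for i in 1..=n {
        values.push(i * i); // The first push triggers an allocation.
    }
    values
}

fn main() {
    let empty: Vec<u32> = Vec::new();
    println!("empty capacity: {}", empty.capacity());

    let values = squares(4);
    println!("{:?} (len {}, capacity {})", values, values.len(), values.capacity());
}
```

The capacity after pushing is at least the length; the exact growth strategy is an implementation detail of the standard library.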

Worth Reading: Performance Testing of Commercial BGP Stacks

For whatever reason, most IT vendors attach a “you cannot use this for performance testing and/or publish any results” caveat to their licensing agreements, so it’s really hard to get any independent test results that are not vendor-sponsored and thus suitably biased.

Justin Pietsch managed to get permission to publish test results of the Junos container implementation (cRPD) – no surprise there, Junos outperformed all open-source implementations Justin tested in the past.

What about other commercial BGP stacks? Justin did the best he could: he published Testing Commercial BGP Stacks instructions, so you can do the measurements on your own.

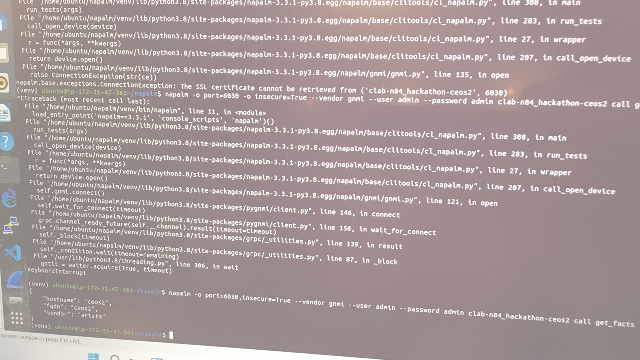

#NANOG84 Hackathon: No plan survives first contact with go-getter students

Austin, 12–13 February 2022

So there I was, ready to do battle in my blue corner of the ring. Together with Anton Karneliuk from Karneliuk.com — author of pyGNMI among other things — we got started on a multi-vendor NAPALM driver using gNMI as a backend.

Working around the clock in our different time zones, in full-blown follow-the-moon style, we managed to get a basic NAPALM get_facts working for both Arista cEOS and Nokia SR Linux at some point late Saturday night.

Working together to build the Internet of tomorrow®

On Sunday a group of students from University of Colorado Boulder joined the party, and it quickly became apparent that our hacking plans would have to change. Because important as multi-vendor compatibility issues are, inter-generation transfer of knowledge is even more critical. We already barely understand the networks we have built today — how could we ever expect the next generation to keep things running if we don’t help them understand what we did?

Long story short: I teamed up with Dinesh Kumar Palanivelu and he ended up submitting his first Pull Request: A small step for a man, but a huge win for the NANOG community!

Thanks again Continue reading

Rust Notes: Traits

A trait in Rust is similar to an interface in other languages. Traits define the required behaviour a type MUST implement to satisfy the trait (interface).

// Structs that implement the `Greeter` trait.
struct Person
struct Dog
// Implement the `Greeter` trait for the...continue reading
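One way this could look in full is sketched below. The excerpt names `Greeter`, `Person` and `Dog` but is cut off before showing any bodies, so the `greet` method, the `name` field and the `introduce` helper are all assumptions made for illustration.

```rust
// Assumed trait body: the excerpt does not show the required methods.
trait Greeter {
    fn greet(&self) -> String;
}

// Assumed fields: the excerpt does not show the struct bodies.
struct Person {
    name: String,
}

struct Dog;

impl Greeter for Person {
    fn greet(&self) -> String {
        format!("Hello, I am {}", self.name)
    }
}

impl Greeter for Dog {
    fn greet(&self) -> String {
        String::from("Woof")
    }
}

// Hypothetical helper: accepts any type that implements `Greeter`.
fn introduce(greeter: &dyn Greeter) -> String {
    greeter.greet()
}

fn main() {
    let person = Person { name: String::from("Ada") };
    println!("{}", introduce(&person));
    println!("{}", introduce(&Dog));
}
```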

Rust Notes: Structs

A struct in Rust is used to encapsulate data. Structs can have fields, associated functions and methods.

// Instantiate an immutable struct.
let sat = StuffAndThings

Associated Function

Associated functions are tied to the struct type rather than to an instance of it and are usually used as...continue reading
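To make the distinction concrete, here is a small sketch with one associated function (no `self`, called on the type) and one method (takes `&self`, called on an instance). The excerpt does not show the fields of `StuffAndThings`, so the fields, `new` and `summary` below are hypothetical.

```rust
// Assumed fields: the excerpt does not show the struct body.
struct StuffAndThings {
    stuff: String,
    things: u32,
}

impl StuffAndThings {
    // Associated function: no `self` parameter, called as `StuffAndThings::new(..)`.
    fn new(stuff: &str, things: u32) -> Self {
        StuffAndThings {
            stuff: stuff.to_string(),
            things,
        }
    }

    // Method: borrows the instance, called as `sat.summary()`.
    fn summary(&self) -> String {
        format!("{} x{}", self.stuff, self.things)
    }
}

fn main() {
    // Immutable binding: the fields cannot be changed after this point.
    let sat = StuffAndThings::new("widgets", 3);
    println!("{}", sat.summary());
}
```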

Heavy Networking 618: Building Virtual Networks With Console Connect (Sponsored)

On today’s Heavy Networking episode we talk with sponsor Console Connect, which provides software-defined interconnections for enterprises and service providers. Guests Paul Gampe and Jay Turner dig into the Console Connect catalog, including Internet On-Demand, CloudRouter, and some of the interesting partner integrations that provide unique connectivity options.

The post Heavy Networking 618: Building Virtual Networks With Console Connect (Sponsored) appeared first on Packet Pushers.

CDP Discovery Using Microsoft’s PKTMON

In this video, I review how to use Microsoft pktmon commands to figure out what port I am connected to without using Wireshark.

Technical Debt or Underperforming Investment?

In this week’s issue of the Packet Pushers Human Infrastructure newsletter, there was an excellent blog post from Kam Lasater about how talking about technical debt makes us sound silly. I recommend you read the whole thing because he brings up some very valid points about how the way the other departments of the organization perceive our issues can vary. It also breaks down debt in a very simple format that takes it away from a negative connotation and shows how debt can be a leverage instrument.

To that end, I want to make a modest proposal to help the organization understand the challenges that IT faces with older systems and integration challenges. Except we need some new branding. So, I propose we start referring to technical debt as “underperforming technical investments”.

I’d Buy That For A Dollar

Technical debt is just a clever way to refer to the series of layered challenges we face from decisions that were made to accomplish tasks. It’s a burden we carry negatively throughout the execution of our job because it adds extra time to the process. We express it as debt because it’s a price that must be paid every time we need Continue reading