Upgraded Turnstile Analytics enable deeper insights, faster investigations, and improved security

Attackers are using increasingly sophisticated methods, not just to brute-force their way into your sites but also to simulate real user behavior for targeted harmful activity like account takeovers, credential stuffing, fake account creation, content scraping, and fraudulent transactions. They are no longer trying to simply take your website down or gain access to it, but rather to cause actual business harm. Attackers add further complexity by rotating IP addresses, routing through proxies, and using VPNs. In this evolving security landscape, meaningful analytics matter. Many traditional CAPTCHA solutions provide simplistic pass or fail trends on challenges without insights into traffic patterns or behavior. Cloudflare Turnstile aims to equip you with more than just basic trends, so you can make informed decisions and stay ahead of the attackers.

We are excited to introduce a major upgrade to Turnstile Analytics. With these upgraded analytics, you can identify harder-to-detect bots faster, and fine-tune your bot security posture with less manual log analysis than before. Turnstile, our privacy-first CAPTCHA alternative, has been helping you protect your applications from automated abuse while ensuring a seamless experience for legitimate users. Now, using enhanced analytics, you can gain deeper insights into Continue reading

Extending Cloudflare Radar’s security insights with new DDoS, leaked credentials, and bots datasets

Security and attacks continue to be a very active environment, and the visibility that Cloudflare Radar provides on this dynamic landscape has evolved and expanded over time. To that end, during 2023’s Security Week, we launched our URL Scanner, which enables users to safely scan any URL to determine if it is safe to view or interact with. During 2024’s Security Week, we launched an Email Security page, which provides a unique perspective on the threats posed by malicious emails, spam volume, the adoption of email authentication methods like SPF, DMARC, and DKIM, and the use of IPv4/IPv6 and TLS by email servers. For Security Week 2025, we are adding several new DDoS-focused graphs, new insights into leaked credential trends, and a new Bots page to Cloudflare Radar. We are also taking this opportunity to refactor Radar’s Security & Attacks page, breaking it out into Application Layer and Network Layer sections.

Below, we review all of these changes and additions to Radar.

Since Cloudflare Radar launched in 2020, it has included both network layer (Layers 3 & 4) and application layer (Layer 7) attack traffic insights on a single Security & Attacks page. Over Continue reading

Worth Reading: Standards for ANSI Escape Codes

I encountered escape sequences (named after the first character in the sequence) while programming stuff that would look nice on the venerable VT100 terminals (not to mention writing one or two VT100 emulators myself).

In the meantime, those sequences got standardized and (par for the course) extended with “proprietary” stuff everyone uses now. Julia Evans did a great job documenting the state of the art. Thanks a million!
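As a small illustration of what such a sequence looks like, here is a minimal Python sketch. The `sgr` helper is a hypothetical name used only for this example. It wraps text in a Select Graphic Rendition sequence, which starts with the ESC character (0x1B) followed by `[`, carries semicolon-separated numeric parameters, and ends with `m`.

```python
ESC = "\x1b"  # the escape character that gives the sequences their name


def sgr(text: str, *codes: int) -> str:
    """Wrap text in an SGR sequence and reset all attributes afterwards."""
    params = ";".join(str(code) for code in codes)
    return f"{ESC}[{params}m{text}{ESC}[0m"


if __name__ == "__main__":
    # 1 = bold, 31 = red foreground on terminals that honor these codes
    print(sgr("link down", 1, 31))
```

How a given terminal renders the result depends on which parts of the standard and which extensions it supports.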

Tech Bytes: Securing 5G Networks With Palo Alto Networks Prisma SASE (Sponsored)

Today on the Tech Bytes podcast we dive into 5G security with sponsor Palo Alto Networks. 5G services are being adopted worldwide for use cases such as IoT, connected vehicles, and AR and VR. At the same time, the number of threats against 5G is growing. We’ll talk about Palo Alto Networks Prisma SASE 5G,... Read more »

NB518: Clock Starts For New Intel CEO; Arista Load Balancing Targets AI Infrastructure

Take a Network Break! We start with warnings about an Apple WebKit zero day and ransomware exploits against known Fortinet vulnerabilities, and discuss attribution issues with the X DDoS attack. Intel names Lip-Bu Tan as Chief Resurrection Officer, but how long does he have before investors get antsy? HPE plans to lay off thousands of... Read more »

Tensor Parallelism

The previous section described how Pipeline Parallelism distributes entire layers across multiple GPUs. However, Large Language Models (LLMs) based on transformer architectures contain billions of parameters, making this approach insufficient.

For example, GPT-3 has approximately 605 million parameters in a single self-attention layer and about 1.2 billion parameters in a feedforward layer, and these figures apply to just one transformer block. Since GPT-3 has 96 transformer blocks, the total parameter count reaches approximately 173 billion. When adding embedding and normalization parameters, the total increases to roughly 175 billion parameters.
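The arithmetic behind these figures can be sketched in a few lines of Python. The hidden size of 12,288 and the feedforward inner size of four times the hidden size are assumptions taken from the published GPT-3 configuration, not from the text above, and the sketch counts weight matrices only (no biases, embeddings, or normalization parameters).

```python
# Assumed GPT-3 (175B) hyperparameters; only N_BLOCKS is stated in the text.
D_MODEL = 12288         # hidden size (assumption)
D_FF = 4 * D_MODEL      # feedforward inner size (assumption)
N_BLOCKS = 96           # transformer blocks


def attention_params(d_model: int) -> int:
    """Weights of the Q, K, V and output projections (biases ignored)."""
    return 4 * d_model * d_model


def feedforward_params(d_model: int, d_ff: int) -> int:
    """Weights of the two linear layers (biases ignored)."""
    return 2 * d_model * d_ff


attn = attention_params(D_MODEL)
ffn = feedforward_params(D_MODEL, D_FF)
total = N_BLOCKS * (attn + ffn)

print(f"self-attention per block: {attn / 1e6:.0f} million")
print(f"feedforward per block:    {ffn / 1e9:.2f} billion")
print(f"all {N_BLOCKS} blocks:            {total / 1e9:.1f} billion")
```

Under these assumptions the weight-only counts come to roughly 604 million, 1.21 billion, and 173.9 billion, which is close to the rounded figures quoted above.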

The number of parameters in a single layer alone often exceeds the memory capacity of a single GPU, making Pipeline Parallelism insufficient. Additionally, performing large matrix multiplications on a single GPU would be extremely slow and inefficient. Tensor Parallelism addresses this challenge by splitting computations within individual layers across multiple GPUs rather than assigning whole layers to separate GPUs, as done in Pipeline Parallelism.
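To make the idea concrete, here is a minimal pure-Python sketch of one common way to split a layer's matrix multiplication: the weight matrix is cut into column shards, each simulated GPU multiplies the full input by its own shard, and the partial outputs are concatenated. The function names are hypothetical, the "GPUs" are just loop iterations, and a column-wise split is an assumption for illustration; the text does not say which split it goes on to use.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product; stands in for the work done on one GPU."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def split_columns(w: Matrix, parts: int) -> List[Matrix]:
    """Split the weight matrix into `parts` equal column shards."""
    cols = len(w[0])
    assert cols % parts == 0, "columns must divide evenly across shards"
    step = cols // parts
    return [[row[i:i + step] for row in w] for i in range(0, cols, step)]


def tensor_parallel_matmul(x: Matrix, w: Matrix, parts: int) -> Matrix:
    """Each simulated GPU multiplies x by its shard; results are concatenated."""
    partials = [matmul(x, shard) for shard in split_columns(w, parts)]
    return [sum((p[r] for p in partials), []) for r in range(len(x))]


x = [[1.0, 2.0], [3.0, 4.0]]
w = [[1.0, 0.0, 2.0, 1.0], [0.0, 1.0, 1.0, 3.0]]

# The sharded result matches the single-device product.
assert tensor_parallel_matmul(x, w, parts=2) == matmul(x, w)
```

Each shard holds only a fraction of the layer's weights, which is what lets a layer that is too large for one device fit across several.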

Chapter 7 introduces the Transformer architecture, but as a refresher, Figure 8-15 illustrates a stack of decoder modules in a transformer architecture. Each decoder module consists of a Self-Attention layer and a Feedforward layer. The figure also shows how an input word, represented by x1, is first Continue reading

Conventional cryptography is under threat. Upgrade to post-quantum cryptography with Cloudflare Zero Trust

Quantum computers are actively being developed that will eventually have the ability to break the cryptography we rely on for securing modern communications. Recent breakthroughs in quantum computing have underscored the vulnerability of conventional cryptography to these attacks. Since 2017, Cloudflare has been at the forefront of developing, standardizing, and implementing post-quantum cryptography to withstand attacks by quantum computers.

Our mission is simple: we want every Cloudflare customer to have a clear path to quantum safety. Cloudflare recognizes the urgency, so we’re committed to managing the complex process of upgrading cryptographic algorithms, so that you don’t have to worry about it. We're not just talking about doing it. Over 35% of the non-bot HTTPS traffic that touches Cloudflare today is post-quantum secure.

The National Institute of Standards and Technology (NIST) also recognizes the urgency of this transition. On November 15, 2024, NIST made a landmark announcement by setting a timeline to phase out RSA and Elliptic Curve Cryptography (ECC), the conventional cryptographic algorithms that underpin nearly every part of the Internet today. According to NIST’s announcement, these algorithms will be deprecated by 2030 and completely disallowed by 2035.

At Cloudflare, we aren’t waiting until 2035 or even Continue reading

Advancing account security as part of Cloudflare’s commitment to CISA’s Secure by Design pledge

In May 2024, Cloudflare signed the Cybersecurity and Infrastructure Security Agency (CISA) Secure By Design pledge. Since then, Cloudflare has been working to enhance the security of our products, ensuring that users are better protected from evolving threats.

Today we are excited to talk about the improvements we have made towards goal number one in the pledge, which calls for increased multi-factor authentication (MFA) adoption. MFA takes many forms across the industry, from app-based and hardware key authentication, to email or SMS. Since signing the CISA pledge we have continued to iterate on our MFA options for users, and most recently added support for social logins with Apple and Google, building on the strong foundation that both of these partners offer their users with required MFA for most accounts. Since we introduced social logins last year, about 25% of our users have been using them weekly, and they make up a considerable portion of our MFA-secured users. There’s much more to do in this space, and we are continuing to invest in more options to help secure your accounts.

According to the 2024 Verizon Data Breach Investigations Continue reading

Email Security now available for free for political parties and campaigns through Cloudflare for Campaigns

At Cloudflare, we believe that every political candidate — regardless of their affiliation — should be able to run their campaign without the constant worry of cyber attacks. Unfortunately, malicious actors, such as nation-states, financially motivated attackers, and hackers, are often looking to disrupt campaign operations and messaging. These threats have the potential to interfere with the democratic process, weaken public confidence, and cause operational challenges for campaigns of all scales.

In 2020, in partnership with the non-profit, non-partisan Defending Digital Campaigns (DDC), we launched Cloudflare for Campaigns to offer a free package of cybersecurity tools to political campaigns, especially smaller ones with limited resources. Since then, we have helped over 250 political campaigns and parties across the US, regardless of affiliation.

This is why we are excited to announce that we have extended our Cloudflare for Campaigns product suite to include Email Security, to secure email systems that are essential to safeguarding the integrity and success of a political campaign. By preventing phishing, spoofing, and other email threats, it helps protect candidates, staff, and supporters from cyberattacks that could compromise sensitive data.

Phishing attacks on political campaigns have been a Continue reading

Enhanced security and simplified controls with automated botnet protection, cipher suite selection, and URL Scanner updates

At Cloudflare, we are constantly innovating and launching new features and capabilities across our product portfolio. Today, we're releasing a number of new features aimed at improving the security tools available to our customers.

Automated security level: Cloudflare’s Security Level setting has been improved and no longer requires manual configuration. By integrating botnet data along with other request rate signals, all customers are protected from confirmed known malicious botnet traffic without any action required.

Cipher suite selection: You now have greater control over encryption settings via the Cloudflare dashboard, including specific cipher suite selection based on your client or compliance requirements.

Improved URL scanner: New features include bulk scanning, similarity search, location picker and more.

These updates are designed to give you more power and flexibility when managing online security, from proactive threat detection to granular control over encryption settings.

Cloudflare’s Security Level feature was designed to protect customer websites from malicious activity.

Available to all Cloudflare customers, including the free tier, it has always had very simple logic: if a connecting client IP address has shown malicious behavior across our network, issue a managed challenge. The system tracks malicious behavior Continue reading

Password reuse is rampant: nearly half of observed user logins are compromised

Accessing private content online, whether it's checking email or streaming your favorite show, almost always starts with a “login” step. Beneath this everyday task lies a widespread human mistake we still have not resolved: password reuse. Many users recycle passwords across multiple services, creating a ripple effect of risk when their credentials are leaked.

Based on Cloudflare's observed traffic between September and November 2024, 41% of successful logins across websites protected by Cloudflare involve compromised passwords. In this post, we’ll explore the widespread impact of password reuse, focusing on how it affects popular Content Management Systems (CMS), the behavior of bots versus humans in login attempts, and how attackers exploit stolen credentials to take over accounts at scale.

As part of our Application Security offering, we offer a free feature that checks if a password has been leaked in a known data breach of another service or application on the Internet. When we perform these checks, Cloudflare does not access or store plaintext end user passwords. We have built a privacy-preserving credential checking service that helps protect our users from compromised credentials. Passwords are hashed – i.e., converted into a random string of characters Continue reading

Chaos in Cloudflare’s Lisbon office: securing the Internet with wave motion

Over the years, Cloudflare has gained fame for many things, including our technical blog, but also as a tech company securing the Internet using lava lamps, a story that began as a research/science project almost 10 years ago. In March 2025, we added another layer to its legacy: a "wall of entropy" made of 50 wave machines in constant motion at our Lisbon office, the company's European HQ.

These wave machines are a new source of entropy, joining lava lamps in San Francisco, suspended rainbows in Austin, and double chaotic pendulums in London. The entropy they generate contributes to securing the Internet through LavaRand.

The new waves wall at Cloudflare’s Lisbon office sits beside the Radar Display of global Internet insights, with the 25th of April Bridge overlooking the Tagus River in the background.

It’s exciting to see waves in Portugal now playing a role in keeping the Internet secure, especially given Portugal’s deep maritime history.

The installation honors Portugal’s passion for the sea and exploration of the unknown, famously beginning over 600 years ago, in 1415, with pioneering vessels like caravels and naus/carracks, precursors to galleons and other ships. Portuguese sea exploration was driven by navigation schools Continue reading

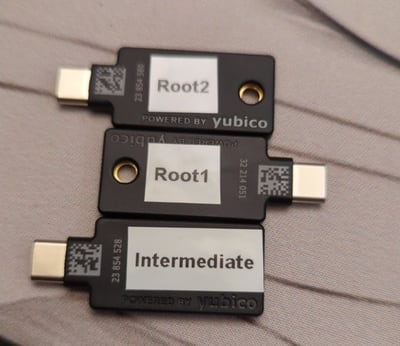

Offline PKI using 3 YubiKeys and an ARM single board computer

An offline PKI enhances security by physically isolating the certificate authority from network threats. A YubiKey is a low-cost solution to store a root certificate. You also need an air-gapped environment to operate the root CA.

This post describes an offline PKI system using the following components:

- 2 YubiKeys for the root CA (with a 20-year validity),

- 1 YubiKey for the intermediate CA (with a 5-year validity), and

- 1 Libre Computer Sweet Potato as an air-gapped SBC.

It is possible to add more YubiKeys as a backup of the root CA if needed. This is not needed for the intermediate CA as you can generate a new one if the current one gets destroyed.

The software part

offline-pki is a small Python application to manage an offline PKI. It relies on yubikey-manager to manage YubiKeys and cryptography for cryptographic operations not executed on the YubiKeys. The application has some opinionated design choices. Notably, the cryptography is hard-coded to use NIST P-384 elliptic curve.

The first step is to reset all your YubiKeys:

    $ offline-pki yubikey reset
    This will reset the connected YubiKey. Are you sure? [y/N]: y
    New PIN code:
    Repeat for confirmation:

Continue reading

Arista EOS Spooky Action at a Distance

This blog post describes yet another bizarre behavior discovered during the netlab integration testing.

It started innocently enough: I was working on the VRRP integration test and wanted to use Arista EOS as the second (probe) device in the VRRP cluster because it produces nice JSON-formatted results that are easy to use in validation tests.

Everything looked great until I ran the test on all platforms on which netlab configures VRRP, and all of them passed apart from Arista EOS (that was before we figured out how Sturgeon’s Law applies to VRRPv3) – a “That’s funny” moment that was directly responsible for me wasting a few hours chasing white rabbits down this trail.

How Cloudflare is using automation to tackle phishing head on

Phishing attacks have grown both in volume and in sophistication over recent years. Today’s threat isn’t just about sending out generic emails: bad actors are using advanced phishing techniques like two-factor monster in the middle (MitM) attacks, QR codes that bypass detection rules, and artificial intelligence (AI) to craft personalized and targeted phishing messages at scale. Industry organizations such as the Anti-Phishing Working Group (APWG) have shown that phishing incidents continue to climb year over year.

To combat both the increase in phishing attacks and the growing complexity, we have built advanced automation tooling to both detect and take action.

In the first half of 2024, Cloudflare resolved 37% of phishing reports using automated means, and the median time to take action on hosted phishing reports was 3.4 days. In the second half of 2024, after deployment of our new tooling, we were able to expand our automated systems to resolve 78% of phishing reports with a median time to take action on hosted phishing reports of under an hour.

In this post we dig into some of the details of how we implemented these improvements.

Welcome to Security Week 2025

The layer of security around today’s Internet is essential to safeguarding everything, from the way we shop online, engage with our communities, and access critical healthcare resources to the way we sustain the worldwide digital economy, and beyond. Our dependence on the Internet has led to cyber attacks that are bigger and more widespread than ever, worsening the so-called defender’s dilemma: attackers only need to succeed once, while defenders must succeed every time.

In the past year alone, we discovered and mitigated the largest DDoS attack ever recorded in the history of the Internet – three different times – underscoring the rapid and persistent efforts of threat actors. We helped safeguard the largest year of elections across the globe, with more than half the world’s population eligible to vote, all while witnessing geopolitical tensions and war reflected in the digital world.

2025 already promises to follow suit, with cyberattacks estimated to cost the global economy $10.5 trillion this year. As AI and emerging technologies advance rapidly, and as threat actors become more agile and creative, the security landscape continues to evolve drastically. Organizations now face a higher volume of attacks and an influx of more complex threats that carry real-world consequences, Continue reading