Durable Objects, now in Open Beta

Back in September, we announced Durable Objects - a new paradigm for stateful serverless.

Since then, we’ve seen incredible demand and countless unlocked opportunities on our platform. We’ve watched large enterprises build applications from complex API features to real-time games in a matter of days from inception to launch. We’ve heard from developers that Durable Objects lets them spend time they used to waste configuring and deploying databases on building features for their apps. More than anything, we’ve heard that you want to start building with Durable Objects now.

As of today, Durable Objects beta access is available to anyone with a Cloudflare Workers® subscription - you can enable them now in the dashboard by navigating to “Workers” and then “Durable Objects”. You can also upgrade to the latest version of Wrangler to deploy Durable Objects!

Durable Objects are still in beta and are being made available to you for testing purposes. Storage is capped per-account at 10 GB of data, and there is no associated SLA for Object availability or durability.

What are Durable Objects?

Durable Objects provide two things: coordination across multiple Workers and strongly consistent edge storage.

Normally Cloudflare’s network executes a Continue reading

Intermittent Terraform Authentication Failure Using AWS Provider in a Vagrant VM

TL;DR: Client clock skew can cause AWS authentication failures when running terraform apply

When I wanted to compare AWS and Azure orchestration speeds I encountered a crazy Terraform error message when running terraform apply:

module.network.aws_vpc.My_VPC: Creating...

Error: Error creating VPC: AuthFailure:

AWS was not able to validate the provided access credentials

status code: 401, request id: ...
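Because AWS requests are signed with a timestamp, a client clock that has drifted too far can produce exactly this kind of AuthFailure. The following is a minimal sketch, not the author's method, of how the skew could be measured by comparing the local clock with the `Date` header of any HTTPS response. The helper names, the probe URL parameter, and the 300-second tolerance are assumptions made for illustration; check the AWS documentation for the limit that actually applies.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from urllib.request import Request, urlopen

# Assumed tolerance in seconds; the real limit is defined by AWS, not here.
MAX_SKEW_SECONDS = 300


def fetch_date_header(url: str) -> str:
    """Return the Date header of a HEAD response from `url` (hypothetical probe)."""
    request = Request(url, method="HEAD")
    with urlopen(request, timeout=5) as response:
        return response.headers["Date"]


def clock_skew_seconds(server_date_header: str, local_now: datetime) -> float:
    """Return local time minus server time, in seconds."""
    server_time = parsedate_to_datetime(server_date_header)
    return (local_now - server_time).total_seconds()


def skew_is_acceptable(skew_seconds: float, limit: float = MAX_SKEW_SECONDS) -> bool:
    """True when the absolute skew is within the assumed tolerance."""
    return abs(skew_seconds) <= limit
```

Inside the VM, one would call `clock_skew_seconds(fetch_date_header(url), datetime.now(timezone.utc))` against a reachable HTTPS endpoint; a large value suggests resynchronizing the guest clock before rerunning terraform apply.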

Obviously I did all the usual stuff before googling for a solution:

DNS at IETF 110

The amount of DNS activity in the IETF seems to grow with every meeting. I thought that the best way to illustrate the considerable body of DNS work being undertaken at the IETF these days would be to take a snapshot of the DNS activity that was reported to the DNS-related Working Group meetings at IETF 110.

Business Survival in Uncertain Times Requires Digital Resilience

The digital resilience of your organization is central to its ability to plan, orchestrate, measure, manage, and prioritize activities.

Free Networking Lab Images From Arista, Cisco, nVidia (Cumulus)

Here’s my current list of no cost, minimal headache, easily obtainable networking images that work in a virtual lab environment such as EVE-NG or GNS3. My goal is to clearly document what these images are and how to obtain them, as this data is less obvious than I’d like.

I missed some. Probably a bunch. Let me know on the Packet Pushers Slack channel or Twitter DM, and I’ll do additional posts or update this list over time. Make sure your recommendations are for images which are freely available from the vendor for lab use with no licensing requirements or other strings attached. Use those same channels if you just want to tell me I’m wrong about whatever you come across in this post that’s…you know…wrong. I’m all about fixing the wrong stuff.

The list is vendor-neutral, sorted alphabetically. I have no personal allegiance to any of these operating systems. I’ve worked with both EOS and NX-OS in production environments. JUNOS, too, although I don’t have a Juniper virtual device on this list currently. I haven’t worked with Cumulus in production, although it’s been a passive interest for a while now.

Remember–configuration is the boring part. Select a NOS Continue reading

Cloudflare’s WAF is recognized as customers’ choice for 2021

The team at Cloudflare building our Web Application Firewall (WAF) has continued to innovate over the past year. Today, we received public recognition of our work.

The ease of use, scale, and innovative controls provided by the Cloudflare WAF have translated into positive customer reviews, earning us the Gartner Peer Insights Customers' Choice Distinction for WAF for 2021. You can download a complimentary copy of the report here.

Gartner Peer Insights Customers’ Choice distinctions recognize vendors and products that are highly rated by their customers. The data collected represents a top-level synthesis of vendor software products most valued by IT Enterprise professionals.

The positive feedback we have received is consistent and leads back to Cloudflare’s product principles. Customers find that Cloudflare’s WAF is:

- “An excellent hosted WAF, and a company that acts more like a partner than a vendor” — Principal Site Reliability Architect in the Services Industry [Full Review];

- “A straightforward yet highly effective WAF solution” — VP in the Finance Industry [Full Review];

- “Easy and Powerful with Outstanding Support” — VP Technology in the Retail Industry [Full Review];

- “Secure, Intuitive and a Delight for web security and accelerations” — Sr Director-Technical Product Continue reading

SONiC’s Next Home: The SmartNIC Data Processing Unit (DPU)

This guest post is by Ihab Tarazi, Sr. VP and Networking CTO at Dell Technologies. We thank Dell Technologies for being a sponsor. It’s an exciting time to be a part of today’s networking evolution where all the pieces are finally falling into place to help us truly realize a software-defined network. SONiC is an […]

The post SONiC’s Next Home: The SmartNIC Data Processing Unit (DPU) appeared first on Packet Pushers.

Heavy Networking 570: Dell Brings The SONiC NOS To SmartNICs And DPUs (Sponsored)

On today's sponsored Heavy Networking podcast we examine the use of SmartNICs and DPUs to offload networking and security processes. We also discuss the use of the SONiC network OS to run on SmartNICs and DPUs, with P4 as a programming layer. Dell Technologies is our sponsor, and our guest from Dell is Ihab Tarazi, Sr. VP and Networking CTO.

The post Heavy Networking 570: Dell Brings The SONiC NOS To SmartNICs And DPUs (Sponsored) appeared first on Packet Pushers.

Enough Is Enough: What Happens When Law Enforcement Bends Laws to Access Data

Tutanota co-founder Matthias Pfau explains how a recent court order is a wake-up call to end the encryption debate once and for all.

In a world increasingly reliant on the Internet in our day-to-day lives, there’s no turning back on encryption. Encryption is a critical security tool for citizens, businesses, and governments to communicate confidentially […]

The post Enough Is Enough: What Happens When Law Enforcement Bends Laws to Access Data appeared first on Internet Society.

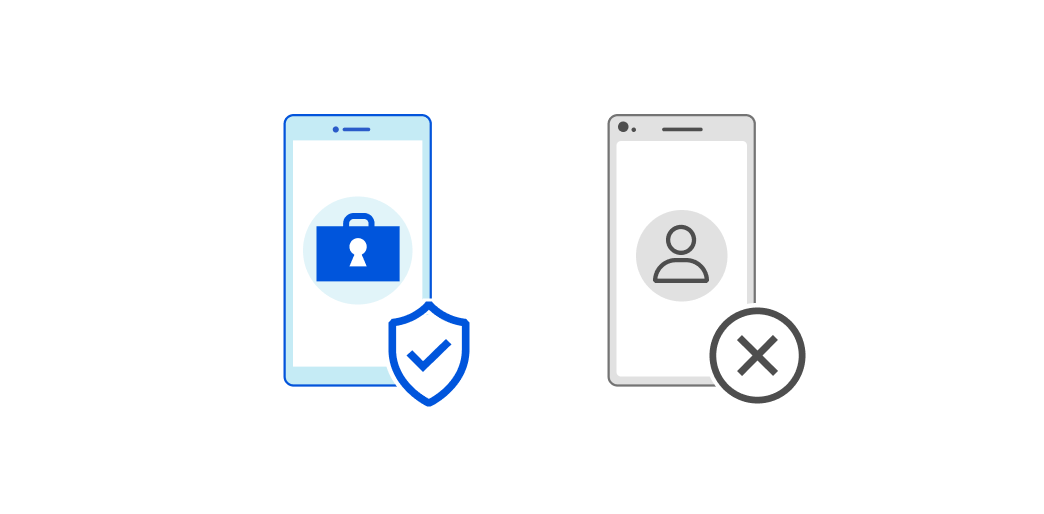

Build Zero Trust rules with managed devices

Starting today, your team can use Cloudflare Access to build rules that only allow users to connect to applications from a device that your enterprise manages. You can combine this requirement with any other rule in Cloudflare’s Zero Trust platform, including identity, multifactor method, and geography.

As more organizations adopt a Zero Trust security model with Cloudflare Access, we hear from customers who want to prevent connections from devices they do not own or manage. For some businesses, a fully remote workforce increases the risk of data loss when any user can log in to sensitive applications from an unmanaged tablet. Other enterprises need to meet new compliance requirements that restrict work to corporate devices.

We’re excited to help teams of any size apply this security model, even if your organization does not have a device management platform or mobile device manager (MDM) today. Keep reading to learn how Cloudflare Access solves this problem and how you can get started.

The challenge of unmanaged devices

An enterprise that owns corporate devices has some level of control over them. Administrators can assign, revoke, inspect and manage devices in their inventory. Whether teams rely on management platforms or a simple spreadsheet, businesses can Continue reading

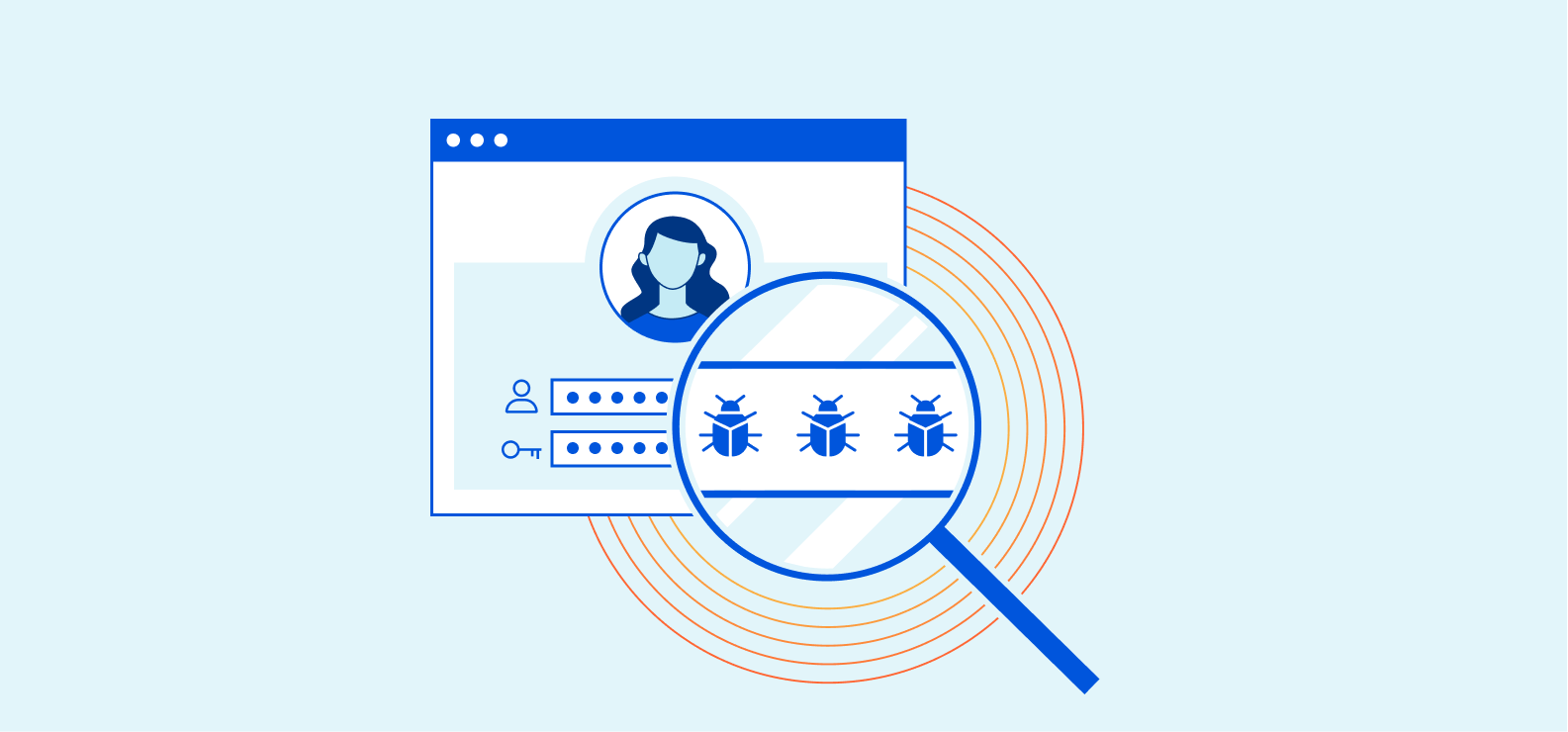

Inside Cloudflare: Preventing Account Takeovers

Over the last week, Cloudflare has published blog posts on products created to secure our customers from credential stuffing bots, detect users with compromised credentials, and block users from proxy services. But what do we do inside Cloudflare to prevent account takeovers on our own applications? The Security Team uses Cloudflare products to proactively prevent account compromises. In addition, we build detections and automations as a second layer to alert us if an employee account is compromised. This ensures we can catch suspicious behavior, investigate it, and quickly remediate.

Our goal is to prevent automated and targeted attackers regardless of the account takeover technique: brute force attack, credential stuffing, botnets, social engineering, or phishing.

Classic Account Takeover Lifecycle

First, let's walk through a common lifecycle for a compromised account.

In a typical scenario, a set of passwords and email addresses has been breached. These credentials are reused through credential stuffing in an attempt to gain access to any account (on any platform) where the user may have reused that combination. Once the attacker has initial access, which means the combination worked, they can gain information on that system and pivot to other systems through methods. This is classified Continue reading

End User Security: Account Takeover Protections with Cloudflare

End user account security is always a top priority, but it is a hard problem to solve, and authenticating users is hard in its own right. With datasets of breached credentials becoming commonplace, and more advanced bots crawling the web attempting credential stuffing attacks, protecting and monitoring authentication endpoints becomes a challenge for security-focused teams. On top of this, many authentication endpoints still rely only on a correct username and password, so an undetected credential stuffing attack can lead directly to account takeover by malicious actors.

Many features of the Cloudflare platform can help with implementing account takeover protections. In this post we will go over several examples as well as announce a number of new features. These include:

- Open Proxy managed list (NEW): ensure authentication attempts to your app are not coming from proxy services;

- Super Bot Fight Mode (NEW): keep automated traffic away from your authentication endpoints;

- Exposed Credential Checks (NEW): get a warning whenever a user is logging in with compromised credentials. This can be used to initiate a two factor authentication flow or password reset;

- Cloudflare Access: add an additional authentication layer by easily integrating with third party OAuth services, soon with optional enforcement of managed devices (NEW);

- Rate Limiting Continue reading

Nokia Service Routing Certification and my experience

Introduction

Benefits of every certification:

- We always need motivation to learn something. Every certification consists of several levels that form a step-by-step approach. Each level is a goal, which helps keep motivation high, and every level achieved makes us feel more confident.

- Every certification program has proper learning tools: self-study guides, books, online and offline courses, and so on. This saves time, so we can just start studying.

- Certification is not the main goal. The preparation is the main goal, along with what it produces, such as notes. Notes were useful before the exams, and they will be useful in the future as well.

Dealing with Cloud Challenges

Here’s a message I got from one of my subscribers (probably based on one of my recent public cloud rants):

I often think the cloud stuff has been sent to try us in IT – the struggle could be tough enough when we were dealing with waterfall development and monolithic projects. When products took years to develop, and years to understand.

And now we’re being asked to be agile and learn new stuff all the time about moving targets that barely have documentation at all, never mind accurate doco! We had obviously got into our comfort zone and needed shaking out of it!

Always interested to hear your experiences with the cloud networking though – it’s what I subscribed to ipspace.net for TBH as I think it’s the most complete reference source for that purpose and a vital part of enterprise networking these days!