The Best and Most Trusted Paito SGP as a Consistent Reference for SGP Data

Introduction: Why the Best and Most Trusted Paito SGP Matters

The best and most trusted paito SGP is the main reference for readers who need a tidy summary of daily data. Many people choose a paito because its layout is simple yet informative. A paito also makes record-keeping easier, since there is no need to open the data one entry at a time.

With so many sources available, the quality of a paito determines how far it can be trusted. Choosing the right paito SGP therefore helps readers obtain accurate and consistent SGP data. With this approach, readers can track SGP results in a structured way.

What Paito SGP Is and Its Main Function

In general, a paito SGP is a visual table that arranges SGP results by day and date. Its main function is to simplify Continue reading

KERIS TAMING SARI: A LEGENDARY WEAPON RICH IN HISTORICAL VALUE

Origins of the Keris Taming Sari

The legend of the Keris Taming Sari has always drawn wide attention. The weapon is famed as a magical keris with an extraordinary story behind it. According to folklore, the keris first appeared in the Majapahit Kingdom. It later came into the possession of Hang Tuah, a famous hero of the Malacca Sultanate.

Master blacksmiths are believed to have forged the keris using highly advanced techniques, which is why Taming Sari is considered unique. Every detail also shows artistry of a high order. Although many legends surround it, historians still regard the object as a symbol of past glory.

Beyond its Majapahit history, the keris also has a strong connection to Malacca. Hang Tuah carried it in many battles, so his figure has become inseparable from the Keris Taming Sari. The story has endured from generation to generation.

Furthermore, people believe the keris holds mystical power. Many tales say it could win a fight on its own, without its master. This has led many to regard it as the most influential heirloom in the Nusantara.

Distinctive Features of the Keris Taming Sari

When discussing this legendary keris, Continue reading

A Bird Found Only in Indonesia: Cucakrawa

The Beauty of an Endemic Bird with a Melodious Song

The Cucakrawa, also known as Cucak Rawa, is one of Indonesia's most distinctive birds. Its melodious song captivates many bird enthusiasts, and many people are drawn to its charm. Some are even willing to spend large sums to own one. Nevertheless, conserving this endemic bird remains an important priority.

The Cucakrawa also has attractive plumage. Its feathers are olive-brown with clean white in some areas, and its build looks sturdy and elegant. These traits have made the bird increasingly popular. To this day, it remains a symbol of Indonesia's natural wealth.

Origins and Natural Habitat

Scientifically, the Cucakrawa is named Pycnonotus zeylanicus. The bird lives only in Indonesia, mainly in Sumatra, Java, and parts of Kalimantan. It inhabits swamps, secondary forest, and humid areas, and therefore depends heavily on a natural, well-preserved environment.

The bird also likes to stay in tall trees. It chooses places that Continue reading

On MPLS Paths, Tunnels and Interfaces

One of my readers attempted to implement a multi-vendor multicast VPN over MPLS but failed. As a good network engineer, he tried various duct tapes but found that the only working one was a GRE tunnel within a VRF, resulting in considerable frustration. In his own words:

How is a GRE tunnel different compared to an MPLS LSP? I feel like conceptually, they kind of do the same thing. They just tunnel traffic by wrapping it with another header (one being IP/GRE, the other being MPLS).

Instead of going down the “how many angels are dancing on this pin” rabbit hole (also known as “Is MPLS tunneling?”), let’s focus on the fundamental differences between GRE/IPsec/VXLAN tunnels and MPLS paths.
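To make the reader's "wrapping it with another header" comparison concrete, here is a minimal Python sketch that packs one MPLS label stack entry (32 bits, laid out as in RFC 3032) and a base GRE header with no optional fields (32 bits, as in RFC 2784), then compares the per-packet overhead. The function names and the sample label value are illustrative assumptions and do not come from the original post. The GRE figure assumes an outer IPv4 header without options.

```python
import struct

IPV4_HEADER_LEN = 20  # outer IPv4 header without options (assumption for this sketch)


def mpls_label_entry(label: int, tc: int = 0, bottom: bool = True, ttl: int = 64) -> bytes:
    """Pack one MPLS label stack entry: label (20 bits) | TC (3) | S (1) | TTL (8)."""
    if not 0 <= label < 2**20:
        raise ValueError("label must fit in 20 bits")
    if not 0 <= tc < 8:
        raise ValueError("traffic class must fit in 3 bits")
    if not 0 <= ttl < 256:
        raise ValueError("TTL must fit in 8 bits")
    word = (label << 12) | (tc << 9) | (int(bottom) << 8) | ttl
    return struct.pack("!I", word)


def gre_base_header(protocol_type: int = 0x0800) -> bytes:
    """Pack a base GRE header: flags and version all zero (16 bits) | protocol type (16)."""
    return struct.pack("!HH", 0, protocol_type)


# Bytes added in front of the payload by each encapsulation.
mpls_overhead = len(mpls_label_entry(16))
gre_overhead = IPV4_HEADER_LEN + len(gre_base_header())

print(f"MPLS (one label): {mpls_overhead} bytes")
print(f"GRE over IPv4:    {gre_overhead} bytes")
```

The sketch only compares header sizes. It says nothing about how the path or tunnel is signaled or where it terminates, which is where the post goes next.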

IP Addresses through 2025

It's time for another annual roundup from the world of IP addresses. Let’s see what has changed in the past 12 months in addressing the Internet and look at how IP address allocation information can inform us of the changing nature of the network itself.

NB558: Microsoft Buys Indulgences for Carbon Sins; Starlink Cleared for 7,500 More Satellites

Take a Network Break! This week we start with follow-up from a perhaps not-so-red Red Alert, and then cover two very-red Red Alerts. On the news front, Ruckus Wireless gets a new parent company, and sources say Extreme Networks may be interested in bringing Ruckus into its fold. AT&T rolls out an IoT management offering,... Read more »

How we mitigated a vulnerability in Cloudflare’s ACME validation logic

On October 13, 2025, security researchers from FearsOff identified and reported a vulnerability in Cloudflare's ACME (Automatic Certificate Management Environment) validation logic that disabled some of the WAF features on specific ACME-related paths. The vulnerability was reported and validated through Cloudflare’s bug bounty program.

The vulnerability was rooted in how our edge network processed requests destined for the ACME HTTP-01 challenge path (/.well-known/acme-challenge/*).

Here, we’ll briefly explain how this protocol works and the action we took to address the vulnerability.

Cloudflare has patched this vulnerability and there is no action necessary for Cloudflare customers. We are not aware of any malicious actor abusing this vulnerability.

ACME is a protocol used to automate the issuance, renewal, and revocation of SSL/TLS certificates. When an HTTP-01 challenge is used to validate domain ownership, a Certificate Authority (CA) will expect to find a validation token at the HTTP path following the format of http://{customer domain}/.well-known/acme-challenge/{token value}.
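The path format described above can be sketched in a few lines of Python. This is only an illustration of the URL shape, not Cloudflare's validation logic; the helper names `http01_challenge_url` and `extract_token`, the sample domain, and the sample token are all hypothetical.

```python
from typing import Optional
from urllib.parse import urlsplit

# Fixed prefix of the HTTP-01 challenge path quoted in the post.
ACME_CHALLENGE_PREFIX = "/.well-known/acme-challenge/"


def http01_challenge_url(domain: str, token: str) -> str:
    """Build the URL at which a CA expects to find the validation token."""
    return f"http://{domain}{ACME_CHALLENGE_PREFIX}{token}"


def extract_token(url: str) -> Optional[str]:
    """Return the token if the URL targets the challenge path, else None."""
    path = urlsplit(url).path
    if not path.startswith(ACME_CHALLENGE_PREFIX):
        return None
    token = path[len(ACME_CHALLENGE_PREFIX):]
    # Treat an empty token or extra path segments as a non-match (a choice made for this sketch).
    if not token or "/" in token:
        return None
    return token


url = http01_challenge_url("example.com", "abc123")
print(url)
print(extract_token(url))
```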

If this challenge is used by a certificate order managed by Cloudflare, then Cloudflare will respond on this path and provide the token provided by the CA to the caller. If the token provided does not Continue reading

How Moving Away from Ansible Made netlab Faster

TL&DR: Of course, the title is clickbait. While the differences are amazing, you won’t notice them in small topologies or when using bloatware that takes minutes to boot.

Let’s start with the background story: due to the (now fixed) suboptimal behavior of bleeding-edge Ansible releases, I decided to generate the device configuration files within netlab (previously, netlab prepared the device data, and the configuration files were rendered in an Ansible playbook).

As we use bash scripts to configure Linux containers, it makes little sense (once the bash scripts are created) to use an Ansible playbook to execute docker exec script or ip netns container exec script. netlab release 26.01 runs the bash scripts to configure Linux, Bird, and dnsmasq containers directly within the netlab initial process.

Now for the juicy part.

RAID 5 with mixed-capacity disks on Linux

Standard RAID solutions waste space when disks have different sizes. Linux software RAID with LVM uses the full capacity of each disk and lets you grow storage by replacing one or two disks at a time.
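As a rough illustration of the wasted space, the following Python sketch computes what a single conventional RAID 5 array makes usable when every member is limited to the size of the smallest disk. The function names and the sample sizes are assumptions for this example, and the sketch does not model the mdadm and LVM layout used in the article.

```python
def raid5_usable(sizes):
    """Usable capacity of one RAID 5 array: (n - 1) times the smallest member."""
    if len(sizes) < 3:
        raise ValueError("RAID 5 needs at least three members")
    return (len(sizes) - 1) * min(sizes)


def raid5_unused(sizes):
    """Raw capacity left outside the array because members are cut to the smallest size."""
    return sum(sizes) - len(sizes) * min(sizes)


equal = [100, 100, 100, 100]   # four equal disks, sizes in MiB
mixed = [100, 100, 200, 200]   # two disks replaced by larger ones

print(raid5_usable(equal), raid5_unused(equal))
print(raid5_usable(mixed), raid5_unused(mixed))
```

With equal disks nothing is left unused. With the mixed set the usable capacity stays the same and the extra space on the larger disks sits idle, which is the problem the article sets out to solve.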

We start with four disks of equal size:

$ lsblk -Mo NAME,TYPE,SIZE
NAME TYPE SIZE
vda  disk 101M
vdb  disk 101M
vdc  disk 101M
vdd  disk 101M

We create one partition on each of them:

$ sgdisk --zap-all --new=0:0:0 -t 0:fd00 /dev/vda
$ sgdisk --zap-all --new=0:0:0 -t 0:fd00 /dev/vdb
$ sgdisk --zap-all --new=0:0:0 -t 0:fd00 /dev/vdc
$ sgdisk --zap-all --new=0:0:0 -t 0:fd00 /dev/vdd
$ lsblk -Mo NAME,TYPE,SIZE
NAME   TYPE SIZE
vda    disk 101M
└─vda1 part 100M
vdb    disk 101M
└─vdb1 part 100M
vdc    disk 101M
└─vdc1 part 100M
vdd    disk 101M
└─vdd1 part 100M

We set up a RAID 5 device by assembling the four partitions:

$ mdadm --create /dev/md0 --level=raid5 --bitmap=internal --raid-devices=4 \
>   /dev/vda1 /dev/vdb1 /dev/vdc1 /dev/vdd1
$ lsblk -Mo NAME,TYPE,SIZE
    NAME   TYPE SIZE
    vda    disk 101M
┌┈▶ └─vda1 part 100M
┆   vdb    disk 101M
├┈▶ └─vdb1 part 100M
┆   Continue reading

Hedge 292: Data Center Costs

The cost of building and maintaining a data center is rising rapidly–and not just in financial terms. George Michaelson joins Tom and Russ to discuss the wider costs of data centers.

TNO053: Ethernet Is Everywhere

Ethernet is everywhere. Today we talk with one of the people responsible for this protocol’s ubiquity. Doug Boom is a veteran of the Ethernet development world. His code has helped landers reach Mars, submarines sail the deep seas, airplanes get to their gates, cars drive around town, and more. Doug walks us through the origins... Read more »

HN810: AI in Network Operations: Pragmatism Over Hype (Sponsored)

Are you an AI skeptic or an enthusiast? Ethan and Drew sit down with Igor Tarasenko, Senior Director of Product Software Architecture and Engineering at Equinix, to break down the reality of AI in the network. In this sponsored episode, Tarasenko discusses why APIs are the new CLI, the critical need for observability in AI,... Read more »

TSMC Has No Choice But To Trust The Sunny AI Forecasts Of Its Customers

If the GenAI expansion runs out of gas, Taiwan Semiconductor Manufacturing Co, the world’s most important foundry for advanced chippery, will be the first to know. …

TSMC Has No Choice But To Trust The Sunny AI Forecasts Of Its Customers was written by Timothy Prickett Morgan at The Next Platform.

Astro is joining Cloudflare

The Astro Technology Company, creators of the Astro web framework, is joining Cloudflare.

Astro is the web framework for building fast, content-driven websites. Over the past few years, we’ve seen an incredibly diverse range of developers and companies use Astro to build for the web. This ranges from established brands like Porsche and IKEA, to fast-growing AI companies like Opencode and OpenAI. Platforms that are built on Cloudflare, like Webflow Cloud and Wix Vibe, have chosen Astro to power the websites their customers build and deploy to their own platforms. At Cloudflare, we use Astro, too — for our developer docs, website, landing pages, and more. Astro is used almost everywhere there is content on the Internet.

By joining forces with the Astro team, we are doubling down on making Astro the best framework for content-driven websites for many years to come. The best version of Astro — Astro 6 — is just around the corner, bringing a redesigned development server powered by Vite. The first public beta release of Astro 6 is now available, with GA coming in the weeks ahead.

We are excited to share this news and even more thrilled for what Continue reading

Infrahub with Damien Garros

Why do we need Infrahub, another network automation tool? What does it bring to the table, who should be using it, and why is it using a graph database internally?

I discussed these questions with Damien Garros, the driving force behind Infrahub, the founder of OpsMill (the company developing it), and a speaker in the ipSpace.net Network Automation course.

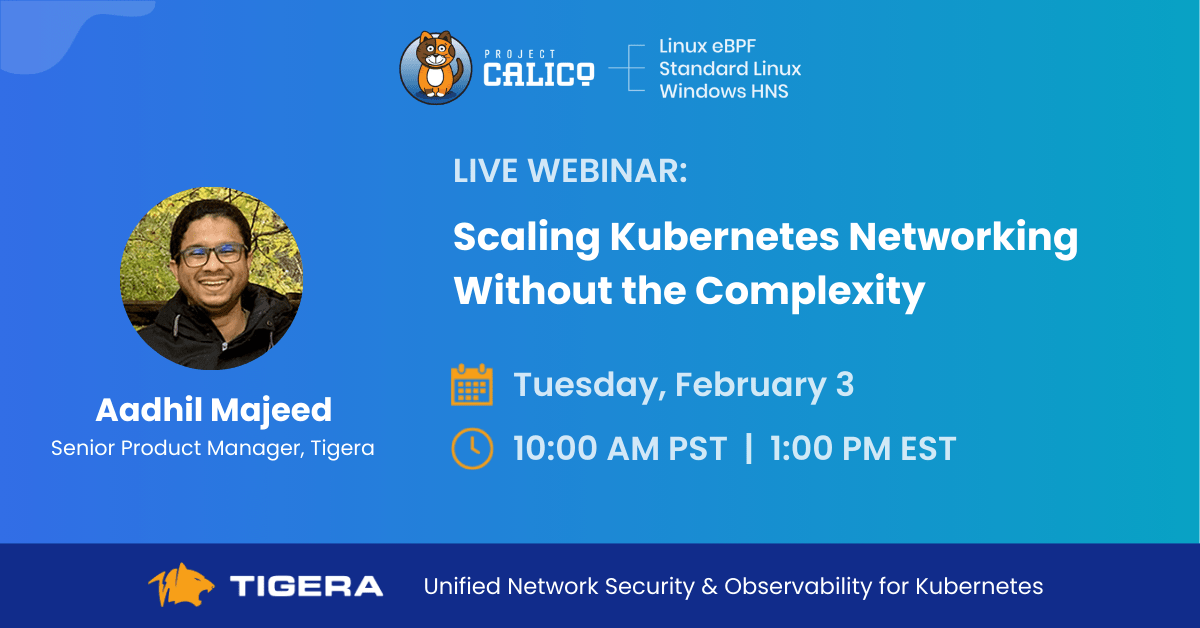

Kubernetes Networking at Scale: From Tool Sprawl to a Unified Solution

As Kubernetes platforms scale, one part of the system consistently resists standardization and predictability: networking. While compute and storage have largely matured into predictable, operationally stable subsystems, networking remains a primary source of complexity and operational risk.

This complexity is not the result of missing features or immature technology. Instead, it stems from how Kubernetes networking capabilities have evolved as a collection of independently delivered components rather than as a cohesive system. As organizations continue to scale Kubernetes across hybrid and multi-environment deployments, this fragmentation increasingly limits agility, reliability, and security.

This post explores how Kubernetes networking arrived at this point, why hybrid environments amplify its operational challenges, and why the industry is moving toward more integrated solutions that bring connectivity, security, and observability into a single operational experience.

The Components of Kubernetes Networking

Kubernetes networking was designed to be flexible and extensible. Rather than prescribing a single implementation, Kubernetes defined a set of primitives and left key responsibilities such as pod connectivity, IP allocation, and policy enforcement to the ecosystem. Over time, these responsibilities were addressed by a growing set of specialized components, each focused on a narrow slice of Continue reading