Why I joined Cloudflare

Customer Service. Business. Growth. While these three make up a large portion of what keeps most enterprise companies operating, they are just the beginning at Cloudflare.

I am excited to share that I have joined Cloudflare as its Chief Customer Officer. Cloudflare has seen explosive growth: we launched only a decade ago and have already amassed nearly 3 million customers and grown from a few hundred enterprise customers to thousands. We are now at a growth inflection point where more companies are choosing to partner with us and use our service. We are fortunate to serve these customers with a consistent, high-quality experience, no matter where their end users are located around the world.

But the flare doesn’t stop at performative success

I took this opportunity because Cloudflare serves the world and does what is right over what is easy. Our customers deliver meals to your doors, provide investment and financial advice, produce GPS devices for navigational assistance, and so much more. Our customers span every vertical and industry, as well as every size. By partnering with them, we have a hand in delighting customers everywhere and helping make the Internet better. I am excited to work with them Continue reading

Worth Reading: Redistributing Your Entire IS-IS Network By Mistake

Here’s an interesting factoid: when using default IS-IS configuration (running L1 + L2 on all routers in your network), every router inserts every IP prefix from anywhere in your network into L2 topology… at least on Junos.

For more details read this article by Chris Parker.

DNS OARC Meeting Notes

In the Internet’s name space the DNS OARC meetings are a case where a concentrated burst of DNS tests the proposition that you just can't have too much DNS! OARC held its latest meeting on 11 August with four presentations. Here are my thoughts on the material presented at that meeting.

Self-hosted external DNS resolver for Kubernetes

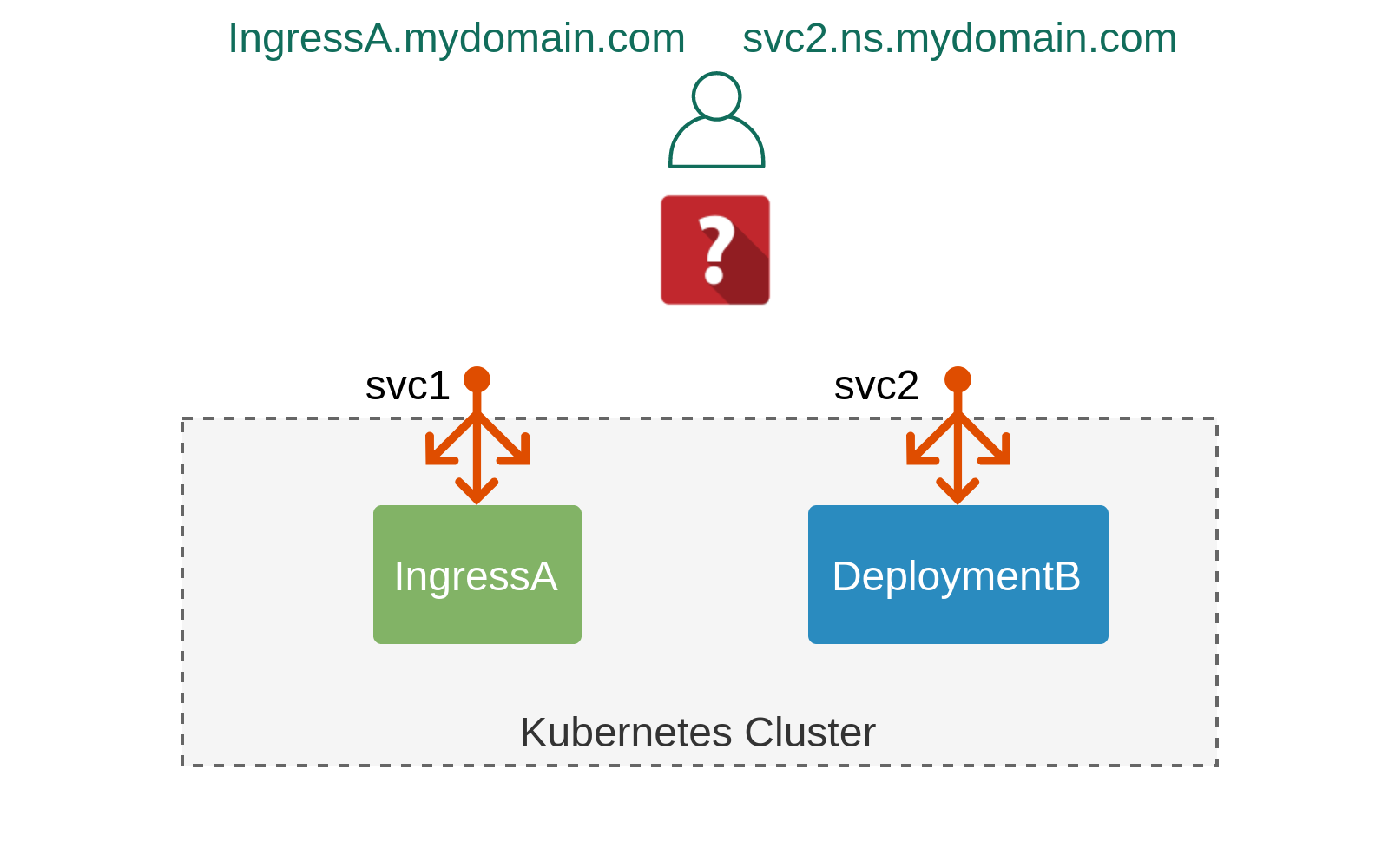

There comes a time in the life of every Kubernetes cluster when internal resources (pods, deployments) need to be exposed to the outside world. Doing so from a pure IP connectivity perspective is relatively easy, as most of the constructs come baked in (e.g. NodePort-type Services) or can be enabled with an off-the-shelf add-on (e.g. Ingress and LoadBalancer controllers). In this post, we’ll focus on one crucial piece of network connectivity that glues together the dynamically allocated external IP with a static customer-defined hostname: DNS. We’ll examine the pros and cons of various ways of implementing external DNS in Kubernetes and introduce a new CoreDNS plugin that can be used for dynamic discovery and resolution of multiple types of external Kubernetes resources.

External Kubernetes Resources

Let’s start by reviewing various types of “external” Kubernetes resources and the level of networking abstraction they provide starting from the lowest all the way to the highest level.

One of the most fundamental building blocks of all things external in Kubernetes is the NodePort service. It works by allocating a unique external port for every service instance and setting up kube-proxy to deliver incoming packets from that port to the one of Continue reading

What I’ve learned about scaling OSPF in Datacenters

I worked at Amazon for 17 years as a network engineer. Now that I’m out of Amazon and looking at the industry, I’m learning new things. One of the things I’ve learned recently is that OSPF shouldn’t be used for Clos leaf-spine networks because of scale. I’ve heard that it can’t...

Speed Matters: How Businesses Can Improve User Experience Using Open Standards

A recent report – Milliseconds make Millions – commissioned by Google and published by Deloitte, has shown that mobile website speed has a direct impact on user experience. Reducing latency and cutting load times by just 0.1 second can positively affect conversion rates, potentially leading to an increase in net earnings.

Over a four-week period, Deloitte’s research team analyzed mobile web data from 37 retail, travel, luxury, and lead generation brands throughout Europe and the U.S. Results showed that by decreasing load time by 0.1s, the average conversion rate grew by 8% for retail sites and by 10% for travel sites. The team also observed an increase in engagement, page views, and the amount of money spent by website visitors when sites loaded faster.
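To make those percentages concrete, here is a minimal sketch of the arithmetic. It assumes the reported figures are relative increases in conversion rate, which the summary above does not state explicitly, and the visitor count and baseline conversion rate are hypothetical values chosen only for illustration.

```python
# Illustrative arithmetic only. The uplift figures come from the report
# summary above and are ASSUMED to be relative increases; the traffic and
# baseline conversion rate below are hypothetical.

UPLIFT = {"retail": 0.08, "travel": 0.10}


def projected_conversions(visitors, baseline_rate, relative_uplift):
    """Return (baseline, improved) conversion counts for a given uplift."""
    baseline = visitors * baseline_rate
    improved = baseline * (1 + relative_uplift)
    return baseline, improved


if __name__ == "__main__":
    visitors = 100_000     # hypothetical monthly mobile visitors
    baseline_rate = 0.02   # hypothetical 2% baseline conversion rate
    for sector, uplift in UPLIFT.items():
        base, improved = projected_conversions(visitors, baseline_rate, uplift)
        print(f"{sector}: {base:.0f} -> {improved:.0f} conversions")
```

Under these assumed inputs, a site with 2,000 baseline conversions would gain roughly 160 (retail) or 200 (travel) additional conversions from a 0.1s improvement.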

Multiple studies have consistently shown that faster page load speeds will result in better conversion rates. Akamai’s 2017 Online Retail Performance Report, for example, showed that a 100-millisecond delay in website load time can reduce conversion rates by 7% and that over half (53%) of mobile site visitors will leave a page that takes longer than three seconds to load.

HTTP/2 and IPv6: Faster and More Available

There’s good news: making some Continue reading

My Journey Towards the Cisco Certified DevNet Specialist – Security by Nick Russo

On 10 August 2020, I took and passed the Automating Cisco Security Solutions (SAUTO) exam on my first attempt. In February of the same year, I passed DEVASC, DEVCOR, and ENAUTO to earn both the CCDevA and CCDevP certifications. You might be wondering why I decided to take another concentration exam. I won’t use this blog to talk about myself too much, but know this: learning is a life-long journey that doesn’t end when you earn your degree, certification, or other victory trinket. I saw SAUTO as an opportunity to challenge myself by leaving my “comfort zone” … and trust me, it was very difficult.

One of the hardest aspects of SAUTO is that it encompasses 12 different APIs spread across an enormous collection of products covering the full spectrum of cyber defense. Learning any new API is difficult, as you’ll have to familiarize yourself with new API documentation, authentication/authorization schemes, request/response formats, and various other product nuances. For that reason alone, the scope of SAUTO when compared to ENAUTO makes it a formidable exam.

Network automation skills are less relevant in this exam than in DEVASC, DEVCOR, or ENAUTO, as they only account for 10% Continue reading

Enforcing Enterprise Security Controls in Kubernetes using Calico Enterprise

Hybrid cloud infrastructures run critical business resources and are subject to some of the strictest network security controls. Irrespective of the industry and resource types, these controls broadly fall into three categories.

- Segmenting environments (Dev, Staging, Prod)

- Enforcing zones (DMZ, Trusted, etc.)

- Compliance requirements (GDPR, PCI DSS)

Workloads (pods) running on Kubernetes are ephemeral in nature, and IP-based controls are no longer effective. The challenge is to enforce the organizational security controls on the workloads and Kubernetes nodes themselves. Customers need the following capabilities:

- Ability to implement security controls both globally and on a per-app basis: Global controls help enforce segmentation across the cluster, and work well when the workloads are classified into different environments and/or zones using labels. As long as the labels are in place, these controls will work for any new workloads.

- Generate alerts if security controls are tampered with: Anyone with valid permissions can make changes to the controls. These controls could be modified without proper authorization, or even with malicious intent to bypass security. Hence, it is important to monitor changes to the policies.

- Produce an audit log showing changes to security controls over time: This is Continue reading

The 2020 Indigenous Connectivity Summit and Trainings: Register Now

People around the world are relying on the Internet to keep them connected to everyday life, but Indigenous communities in North America are being left behind by companies and governments. Lack of connectivity means many are unable to access even basic information and healthcare. And while COVID-19 has hit Indigenous communities especially hard, lack of access means they can’t use services that connected populations consider critical, such as remote learning and teleworking.

We must address this critical gap.

For years, the Internet Society has worked with those very communities, along with network operators, technologists, civil society, academia, and policymakers – bringing them together to discuss what can be done collectively to narrow the digital divide. We do this through our Indigenous Connectivity Summit (ICS) and the pre-Summit Trainings: Community Networks and Policy and Advocacy.

This year, though we can’t meet in person, we’ll hold a virtual event.

We’re excited to announce that registration is now open for the 2020 Indigenous Connectivity Summit.

The Summit will take place October 5-9, 2020, with training sessions beginning the first week of September. Those who register for the Summit before Friday, September 11th will receive a swag bag and materials for hands-on training prior to the Summit. Continue reading

Accelerating the data center with NVIDIA, Mellanox + Cumulus

Today’s modern datacenter and cloud architectures are horizontally scalable, disaggregated distributed systems. Distributed systems have many individual components that work together, creating a powerful cohesive solution. Just as compute is the brains behind a datacenter’s distributed system, the network is the nervous system, responsible for ensuring communication gets to all the individual components. This blog tells you why NVIDIA Mellanox gives NVIDIA a larger footprint in the datacenter. The combination of NVIDIA, Mellanox and Cumulus can provide end-to-end acceleration technologies for the modern disaggregated datacenter.

Accelerating the datacenter

All parties coming together in this acquisition are involved in acceleration technologies in the modern data center:

- NVIDIA is at the center of compute acceleration: its GPUs provide compute acceleration for high-performance computing and infrastructure for the neural networks that power AI-assisted application features.

- Mellanox comes to the table with its dominance in high-performance interconnects, data and network processing acceleration on the host, and hardware for the network fabric.

- Cumulus Networks provides the Linux stack to accelerate the network fabric by enabling networking hardware features, and accelerating deployment, integration and monitoring of the network fabric with Automation and the Linux ecosystem. Cumulus Networks software architecture and DNA Continue reading

Day Two Cloud 061: Using Public Cloud For Disaster Recovery

The Day Two Cloud podcast explores different approaches to using the public cloud for disaster recovery. We examine costs and benefits, discuss recovery times, dive into planning, and more. The show draws on co-host Ned Bellavance's experience working on DR projects for a variety of customers during his VAR days.

The post Day Two Cloud 061: Using Public Cloud For Disaster Recovery appeared first on Packet Pushers.

NTC – Netpalm With Tony Nealon

Open source continues to accelerate in the network domain with projects such as Netmiko, NAPALM, and Nornir, all of which are led by individuals, not large organizations or venture-backed startups. In this episode we sit down with Tony Nealon, creator of Netpalm. Netpalm is a network API platform that can abstract and render structured data, both inbound and outbound, to your network device’s native telnet, SSH, NETCONF or RESTCONF interface, leveraging popular libraries like NAPALM, Netmiko, and ncclient under the hood for network device communication.

Outro Music:

Danger Storm Kevin MacLeod (incompetech.com)

Licensed under Creative Commons: By Attribution 3.0 License

http://creativecommons.org/licenses/by/3.0/

The post NTC – Netpalm With Tony Nealon appeared first on Network Collective.