Internet of Things (IoT) telemetry

The internet of things (IoT) is the network of physical objects—devices, vehicles, buildings and other items—embedded with electronics, software, sensors, and network connectivity that enables these objects to collect and exchange data. - ITU

The recently released Raspberry Pi Zero (costing $5) is an example of the type of embedded low power computer enabling IoT. These small devices are typically wired to one or more sensors (measuring temperature, humidity, location, acceleration, etc.) and embedded in or attached to physical devices.

Collecting real-time telemetry from large numbers of small devices that may be located within many widely dispersed administrative domains poses a number of challenges, for example:

- Discovery - How are newly connected devices discovered?

- Configuration - How can the numerous individual devices be efficiently configured?

- Transport - How efficiently are measurements transported and delivered?

- Latency - How long does it take before measurements are remotely accessible?

The following steps describe how to install the Host sFlow Continue reading

OVS Orbit podcast with Ben Pfaff

OVS Orbit Episode 6 is a wide ranging discussion between Ben Pfaff and Peter Phaal of the industry standard sFlow measurement protocol, implementation of sFlow in Open vSwitch, network analytics use cases and application areas supported by sFlow, including: OpenStack, Open Virtual Network (OVN), DDoS mitigation, ECMP load balancing, Elephant and Mice flows, Docker containers, Network Function Virtualization (NFV), and microservices.

Follow the link to listen to the podcast, read the extensive show notes, follow related links, and subscribe to the podcast.

Raspberry Pi real-time network analytics

The Raspberry Pi model 3b is not much bigger than a credit card, costs $35, runs Linux, and has 1 GB of RAM and a powerful 4 core 64 bit ARM processor. This article will demonstrate how to turn the Raspberry Pi into a Terabit/second real-time network analytics engine capable of monitoring hundreds of switches and thousands of switch ports.

The diagram shows how the sFlow-RT real-time analytics engine receives a continuous telemetry stream from industry standard sFlow instrumentation built into network, server and application infrastructure and delivers analytics through APIs. It can easily be integrated with a wide variety of on-site and cloud, orchestration, DevOps and Software Defined Networking (SDN) tools.

A future article will examine how the Host sFlow agent can be used to efficiently stream measurements from large numbers of inexpensive Raspberry Pi devices ($5 for model Zero) to the sFlow-RT collector to monitor and control the "Internet of Things" (IoT).

The following instructions show how to install sFlow-RT on Raspbian Jessie (the Debian Linux based Raspberry Pi operating system).

wget http://www.inmon.com/products/sFlow-RT/sflow-rt_2.0-1092.deb
sudo dpkg -i --ignore-depends=openjdk-7-jre-headless sflow-rt_2.0-1092.deb

We are ignoring the dependency on openjdk and will use the default Raspbian Java 1.8 version Continue reading

OpenNSL

Open Network Switch Layer (OpenNSL) is a library of network switch APIs that is openly available for programming Broadcom network switch silicon based platforms. These open APIs enable development of networking application software based on Broadcom network switch architecture based platforms.

The recent inclusion of the APIs needed to enable sFlow instrumentation in Broadcom hardware allows open source network operating systems such as OpenSwitch and Open Network Linux to implement the sFlow telemetry standard.

Mininet dashboard

Mininet Dashboard has been released on GitHub, https://github.com/sflow-rt/mininet-dashboard. Follow the steps in Mininet flow analytics to install sFlow-RT and configure sFlow instrumentation in Mininet.

The following steps install the dashboard and start sFlow-RT:

cd sflow-rt
./get-app.sh sflow-rt mininet-dashboard
./start.sh

The dashboard web interface shown in the screen shot should now be accessible. Run a test to see data in the dashboard. The following test created the results shown:

sudo mn --custom extras/sflow.py --link tc,bw=10 --topo tree,depth=2,fanout=2 --test iperf

The dashboard has three time series charts that update every second and show five minutes worth of data. From top to bottom, the charts are:

- Top Flows - Click on a peak in the chart to see the flows that were active at that time.

- Top Ports - Click on a peak in the chart to see the ingress ports that were active at that time.

- Topology Diameter - The diameter of the topology.

Mininet flow analytics

Mininet is free software that creates a realistic virtual network, running real kernel, switch and application code, on a single machine (VM, cloud or native), in seconds. Mininet is useful for development, teaching, and research. Mininet is also a great way to develop, share, and experiment with OpenFlow and Software-Defined Networking systems.

This article shows how standard sFlow instrumentation built into Mininet can be combined with sFlow-RT analytics software to provide real-time traffic visibility for Mininet networks. Augmenting Mininet with sFlow telemetry realistically emulates the instrumentation built into most vendors' switch hardware, provides visibility into Mininet experiments, and opens up new areas of research (e.g. SDN and large flows).

The following papers are a small selection of projects using sFlow-RT:

- Network-Wide Traffic Visibility in OF@TEIN SDN Testbed using sFlow

- OrchSec: An Orchestrator-Based Architecture For Enhancing Network-Security Using Network Monitoring And SDN Control Functions

- Utilizing OpenFlow and sFlow to Detect and Mitigate SYN Flooding Attack

- OpenDaylight Project Proposal "Dynamic Flow Management"

- Large Flows Detection, Marking, and Mitigation based on sFlow Standard in SDN

- An SDN-based Architecture for Network-as-a-Service

- Saving Energy in OpenFlow Computer Networks

- Implementation of Neural Switch using OpenFlow as Load Balancing Method in Data Center

Identifying bad ECMP paths

In the talk Move Fast, Unbreak Things! at the recent DevOps Networking Forum, Petr Lapukhov described how Facebook has tackled the problem of detecting packet loss in Equal Cost Multi-Path (ECMP) networks. At Facebook's scale, there are many parallel paths and actively probing all the paths generates a lot of data. The active tests generate over 1 Terabit/second of measurement data per Facebook data center and a Hadoop cluster with hundreds of compute nodes is required per data center to process the data.

Processing active test data can detect that packets are being lost within approximately 20 seconds, but doesn't provide the precise location where packets are dropped. A custom multi-path traceroute tool (fbtracert) is used to follow up and narrow down the location of the packet loss.

While described as measuring packet loss, the test system is really measuring path loss. For example, if there are 64 ECMP paths in a pod, then the loss of one path would result in a packet loss of approximately 1 in 64 packets in traffic flows that cross the ECMP group.
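The arithmetic behind the 1 in 64 figure can be sketched as follows. This is a minimal illustration that assumes traffic is spread evenly across the ECMP paths and that a failed path drops every packet sent over it; the function name is hypothetical.

```python
def expected_loss_fraction(total_paths: int, failed_paths: int = 1) -> float:
    """Fraction of packets lost when failed_paths out of total_paths drop traffic.

    Assumes flows are hashed evenly over all paths and that a failed
    path loses every packet sent over it.
    """
    if total_paths <= 0:
        raise ValueError("total_paths must be positive")
    if not 0 <= failed_paths <= total_paths:
        raise ValueError("failed_paths must be between 0 and total_paths")
    return failed_paths / total_paths


# One failed path in a 64-way ECMP group: 1/64 of packets are lost.
loss = expected_loss_fraction(64)
print(f"loss fraction: {loss}")
```

Under these assumptions the observed loss rate says how many paths are bad, not how lossy any single path is, which is why the measurement is better described as path loss.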

Black hole detection describes an alternative approach. Industry standard sFlow instrumentation embedded within most vendors' switch hardware provides visibility into the Continue reading

Black hole detection

The Broadcom white paper, Black Hole Detection by BroadView™ Instrumentation Software, describes the challenge of detecting and isolating packet loss caused by inconsistent routing in leaf-spine fabrics. The diagram from the paper provides an example: packets from host H11 to H22 are being forwarded by ToR1 via Spine1 to ToR2 even though the route to H22 has been withdrawn from ToR2. Since ToR2 doesn't have a route to the host, it sends the packet back up to Spine2, which will send the packet back to ToR2, causing the packet to bounce back and forth until the IP time to live (TTL) expires.

The white paper discusses how Broadcom ASICs can be programmed to detect black holes based on packet paths, i.e. packets arriving at a ToR switch from a Spine switch should never be forwarded to another Spine switch.
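The path rule above (a packet that arrives at a ToR switch from a Spine switch should never leave towards a Spine switch) can be expressed as a simple predicate. The sketch below is only an illustration of the rule, not the Broadcom or sFlow-RT implementation; the port names and the set of spine-facing ports are hypothetical.

```python
# Hypothetical uplink ports on a ToR switch that face spine switches.
SPINE_FACING_PORTS = frozenset({"swp1", "swp2"})


def violates_tor_rule(ingress_port: str, egress_port: str,
                      spine_ports=SPINE_FACING_PORTS) -> bool:
    """Return True when a packet enters from a spine and exits to a spine.

    On a ToR switch this pattern indicates a possible black hole or loop,
    because traffic from the spine layer should be delivered to a host port.
    """
    return ingress_port in spine_ports and egress_port in spine_ports


# Spine to spine on a ToR switch: flagged.
print(violates_tor_rule("swp1", "swp2"))
# Spine to host port: normal delivery, not flagged.
print(violates_tor_rule("swp1", "swp3"))
```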

This article will discuss how the industry standard sFlow instrumentation (also included in Broadcom based switches) can be used to provide fabric wide detection of black holes.

The diagram shows a simple test network built using Cumulus VX virtual machines to emulate a four switch leaf-spine fabric like the one described in the Broadcom white paper (this network is Continue reading

sFlow to IPFIX/NetFlow

RESTflow explains how the sFlow architecture shifts the flow cache from devices to external software and describes how the sFlow-RT REST API can be used to program and query flow caches. Exporting events using syslog describes how flow records can be exported using the syslog protocol to Security Information and Event Management (SIEM) tools such as Logstash and Splunk. This article demonstrates how sFlow-RT can be used to define and export the flows using the IP Flow Information eXport (IPFIX) protocol (the IETF standard based on NetFlow version 9).

For example, the following command defines a cache that will maintain flow records for TCP flows on the network, capturing IP source and destination addresses, source and destination port numbers and the bytes transferred and sending flow records to address 10.0.0.162:

curl -H "Content-Type:application/json" -X PUT --data '{"keys":"ipsource,ipdestination,tcpsourceport,tcpdestinationport", "value":"bytes", "ipfixCollectors":["10.0.0.162"]}' http://localhost:8008/flow/tcp/json

Running Wireshark's tshark command line utility on 10.0.0.162 verifies that flows are being received:

# tshark -i eth0 -V udp port 4739

Running as user "root" and group "root". This could be dangerous.

Capturing on lo

Frame 1 (134 bytes on wire, 134 bytes captured)

Arrival Time: Continue reading
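The flow definition sent with curl earlier in this section can also be built programmatically. The following sketch uses only the Python standard library and reuses the URL, keys, value and collector address from the curl command; the helper name build_flow_request is hypothetical, and the request is constructed but not sent so that the example runs without an sFlow-RT instance.

```python
import json
import urllib.request


def build_flow_request(base_url, name, keys, value, ipfix_collectors):
    """Build the HTTP PUT request that defines a flow with IPFIX export."""
    body = {
        "keys": ",".join(keys),
        "value": value,
        "ipfixCollectors": list(ipfix_collectors),
    }
    return urllib.request.Request(
        url=f"{base_url}/flow/{name}/json",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


request = build_flow_request(
    "http://localhost:8008",
    "tcp",
    ["ipsource", "ipdestination", "tcpsourceport", "tcpdestinationport"],
    "bytes",
    ["10.0.0.162"],
)
print(request.get_method(), request.full_url)
# To apply the definition, pass the request to urllib.request.urlopen(request).
```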

Berkeley Packet Filter (BPF)

Linux bridge, macvlan, ipvlan, adapters discusses how industry standard sFlow technology, widely supported by data center switch vendors, has been extended to provide network visibility into the Linux data plane. This article explores how sFlow's lightweight packet sampling mechanism has been implemented on Linux network adapters.

Linux Socket Filtering aka Berkeley Packet Filter (BPF) describes the recently added prandom_u32() function that allows packets to be randomly sampled in the Linux kernel for efficient monitoring of production traffic.

Background: Enhancing Network Intrusion Detection With Integrated Sampling and Filtering, Jose M. Gonzalez and Vern Paxson, International Computer Science Institute Berkeley, discusses the motivation for adding random sampling to BPF, and the email thread [PATCH] filter: added BPF random opcode describes the Linux implementation and includes an interesting discussion of the motivation for the patch.

The following code shows how the open source Host sFlow agent implements random 1-in-256 packet sampling as a BPF program:

ld rand
mod #256
jneq #1, drop
ret #-1
drop: ret #0

A JIT for packet filters discusses the Linux Just In Time (JIT) compiler for BPF programs, delivering native machine code performance for compiled filters.
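The logic of the BPF sampling program can be mirrored in a few lines of Python to see why it selects one packet in 256 on average. This is a user-space simulation for illustration only, not the Host sFlow agent's code; the function names are hypothetical and Python's random module stands in for the kernel's random number source.

```python
import random

SAMPLING_MODULUS = 256  # from "mod #256" in the BPF program


def bpf_verdict(rand_value: int) -> int:
    """Mirror the filter: ld rand; mod #256; jneq #1, drop; ret #-1; drop: ret #0."""
    if rand_value % SAMPLING_MODULUS != 1:
        return 0   # drop: ret #0 (packet is not sampled)
    return -1      # ret #-1 (packet is accepted in full)


def count_sampled(packets: int, seed: int = 0) -> int:
    """Count how many of the simulated packets the filter would accept."""
    rng = random.Random(seed)
    return sum(1 for _ in range(packets)
               if bpf_verdict(rng.getrandbits(32)) == -1)


# With uniformly random 32-bit values, roughly packets/256 are accepted.
print(count_sampled(256_000), "of 256000 simulated packets sampled")
```

Exactly one residue out of 256 (the value 1) leads to acceptance, so for uniformly distributed random values the sampling probability is 1/256.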

Minimizing cost of visibility describes why low overhead monitoring is an Continue reading

Cisco SF250, SG250, SF350, SG350, SG350XG, and SG550XG series switches

Software version 2.1.0 adds sFlow support to Cisco 250 Series Smart Switches, 350 Series Smart Switches and 550X Series Stackable Managed Switches.

Cisco network engineers might not be familiar with the multi-vendor sFlow technology since it is a relatively new addition to Cisco products. The article, Cisco adds sFlow support, describes some of the key features of sFlow and contrasts them to Cisco NetFlow.

Configuring sFlow on the switches is straightforward. For example, the following commands configure a switch to sample packets at 1-in-1024, poll counters every 30 seconds and send sFlow to an analyzer (10.0.0.50) over UDP using the default sFlow port (6343):

sflow receiver 1 10.0.0.50

For each interface:

sflow flow-sampling 1024 1
sflow counter-sampling 30 1

A previous posting discussed the selection of sampling rates. Additional information can be found on the Cisco web site.
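When the same settings need to be applied to many switches, the commands can be generated from a small template. The sketch below only reproduces the three command forms shown in this section; the function name is hypothetical, and it assumes that the trailing 1 on the per-interface commands refers to the receiver index defined by the sflow receiver command.

```python
def render_sflow_config(collector: str, sampling_rate: int,
                        polling_interval: int, receiver_index: int = 1):
    """Return (global_commands, per_interface_commands) as lists of strings."""
    global_commands = [f"sflow receiver {receiver_index} {collector}"]
    per_interface_commands = [
        # Assumption: the last argument is the receiver index.
        f"sflow flow-sampling {sampling_rate} {receiver_index}",
        f"sflow counter-sampling {polling_interval} {receiver_index}",
    ]
    return global_commands, per_interface_commands


global_cmds, interface_cmds = render_sflow_config("10.0.0.50", 1024, 30)
for line in global_cmds + interface_cmds:
    print(line)
```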

Multi-tenant sFlow

This article discusses how real-time sFlow telemetry can be shared with network tenants to provide each tenant with a real-time view of their slice of the shared resources. The diagram shows a simple network with two tenants, Tenant A and Tenant B, each assigned their own subnet, 10.0.0.0/24 and 10.0.1.0/24 respectively.

One option would be to simply replicate the sFlow datagrams and send copies to both tenants. Forwarding using sflowtool describes how sflowtool can be used to replicate and forward sFlow, and sFlow-RT can be configured to forward sFlow using its REST API:

curl -H "Content-Type:application/json" \
-X PUT --data '{"address":"10.0.0.1","port":6343}' \
http://127.0.0.1:8008/forwarding/TenantA/json

However, there are serious problems with this approach:

- Private information about Tenant B's traffic is leaked to Tenant A.

- Information from internal links within the network (i.e. links between s1, s2, s3 and s4) is leaked to Tenant A.

- Duplicate data from each network hop is likely to cause Tenant A to over-estimate their traffic.
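The first problem in the list can be made concrete with a small check of which tenant a flow belongs to. The sketch below is only an illustration using the two subnets from the example network; the function name and the sample addresses are hypothetical, and it is not a description of how sFlow-RT separates tenant data.

```python
import ipaddress

# Tenant subnets from the example network.
TENANT_SUBNETS = {
    "TenantA": ipaddress.ip_network("10.0.0.0/24"),
    "TenantB": ipaddress.ip_network("10.0.1.0/24"),
}


def involves_tenant(tenant: str, source: str, destination: str) -> bool:
    """Return True if either end of the flow is inside the tenant's subnet."""
    subnet = TENANT_SUBNETS[tenant]
    return (ipaddress.ip_address(source) in subnet
            or ipaddress.ip_address(destination) in subnet)


# A flow between two Tenant B hosts does not involve Tenant A, yet simple
# replication of sFlow datagrams would still deliver it to Tenant A.
print(involves_tenant("TenantA", "10.0.1.10", "10.0.1.20"))
print(involves_tenant("TenantB", "10.0.1.10", "10.0.1.20"))
```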

Network visibility with Docker

Microservices describes the critical role that network visibility provides as a common point of reference for monitoring, managing and securing the interactions between the numerous and diverse distributed service instances in a microservices deployment.

Industry standard sFlow is well placed to give network visibility into the Docker infrastructure used to support microservices. The sFlow standard is widely supported by data center switch vendors (Cisco, Arista, Juniper, Dell, HPE, Brocade, Cumulus, etc.), providing a cost effective and scalable method of monitoring the physical network infrastructure. In addition, Linux bridge, macvlan, ipvlan, adapters described how sFlow is also an efficient means of leveraging instrumentation built into the Linux kernel to extend visibility into Docker host networking.

The following commands build the Host sFlow binary package from sources on an Ubuntu 14.04 system:

sudo apt-get update
sudo apt-get install build-essential
sudo apt-get install libpcap-dev
sudo apt-get install wget
wget https://github.com/sflow/host-sflow/archive/v1.29.1.tar.gz
tar -xvzf v1.29.1.tar.gz
cd host-sflow-1.29.1
make DOCKER=yes PCAP=yes deb

The resulting hsflowd_1.29.1-1_amd64.deb package can be copied and installed on all the hosts in the Docker cluster using configuration management tools such as Puppet, Chef, Ansible, etc.

This Continue reading

Lasers!

Cool! You mean that I actually have frickin' switches with frickin' laser beams attached to their frickin' ports?

Dr. Evil is right, lasers are cool! The draft sFlow Optical Interface Structures specification exports metrics gathered from instrumentation built into Small Form-factor Pluggable (SFP) and Quad Small Form-factor Pluggable (QSFP) optics modules. This article provides some background on optical modules and discusses the value of including optical metrics in the sFlow telemetry stream exported from switches and hosts.

Pluggable optical modules are intelligent devices that do more than simply convert between optical and electrical signals. The functional diagram below shows the elements within a pluggable optical module.

The transmit and receive functions are shown in the upper half of the diagram. Incoming optical signals are received on a fiber and amplified as they are converted to electrical signals that can be handled by the switch. Transmit data drives the modulation of a laser diode which transmits the optical signal down a fiber.

The bottom half of the diagram shows the management components. Power, voltage and temperature sensors are monitored and the results are written into registers in an EEPROM that are accessible via a management interface.

The proposed sFlow extension standardizes Continue reading

Minimizing cost of visibility

Visibility allows orchestration systems (OpenDaylight, ONOS, OpenStack Heat, Kubernetes, Docker Swarm, Apache Mesos, etc.) to adapt to changing demand by targeting resources where they are needed to increase efficiency, improve performance, and reduce costs. However, the overhead of monitoring must be low in order to realize the benefits.

An analogous observation that readers may be familiar with is the importance of minimizing costs when investing in order to maximize returns - see Vanguard Principle 3: Minimize cost

Suppose that a 100 server pool is being monitored and visibility will allow the orchestration system to realize a 10% improvement by better workload scheduling and placement - increasing the pool's capacity by 10% without the need to add an additional 10 servers and saving the associated CAPEX/OPEX costs.

The chart shows the impact that measurement overhead has in realizing the potential gains in this example. If the measurement overhead is 0%, then the 10% performance gain is fully realized. However, even a relatively modest 2% measurement overhead reduces the potential improvement to just under 8% (over a 20% drop in the potential gains). A 9% measurement overhead wipes out the potential efficiency gain and measurement overheads greater than 9% result in Continue reading
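The figures quoted for the chart are consistent with a simple multiplicative model in which the capacity gained through visibility is scaled down by the fraction of capacity consumed by measurement. The sketch below is one such model, offered as an assumption that reproduces the quoted numbers rather than as the formula used to draw the chart; the function names are hypothetical.

```python
def net_gain(potential_gain: float, overhead: float) -> float:
    """Net capacity change when monitoring costs `overhead` of all capacity.

    Assumed model: (1 + potential_gain) * (1 - overhead) - 1
    """
    return (1 + potential_gain) * (1 - overhead) - 1


def break_even_overhead(potential_gain: float) -> float:
    """Overhead at which the net gain falls to zero under the assumed model."""
    return potential_gain / (1 + potential_gain)


for overhead in (0.0, 0.02, 0.09, 0.10):
    print(f"overhead {overhead:.0%}: net gain {net_gain(0.10, overhead):+.2%}")
print(f"break-even overhead: {break_even_overhead(0.10):.2%}")
```

Under this model a 2% overhead leaves a net gain of 1.10 × 0.98 − 1 = 7.8%, a 9% overhead leaves about 0.1%, and any overhead above 1/11 (about 9.1%) produces a net loss.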

Docker network visibility demonstration

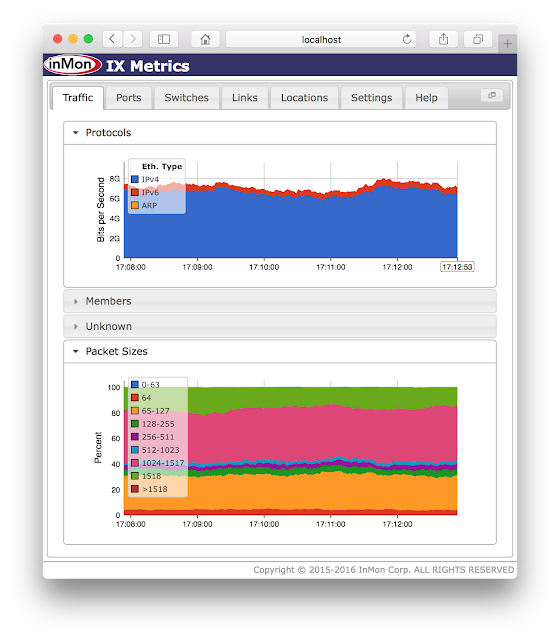

The 2 minute live demonstration shows how the open source Host sFlow agent can be used to efficiently monitor Docker networking in production environments. The demonstration shows real-time tracking of 30Gbit/s traffic flows using less than 1% of a single processor core.

Internet Exchange (IX) Metrics

IX Metrics has been released on GitHub, https://github.com/sflow-rt/ix-metrics. The application provides real-time monitoring of traffic between members in an Internet Exchange (IX).

Close monitoring of exchange traffic is critical to operations:

- Ensure that there is sufficient capacity to accommodate new and existing members.

- Ensure that all traffic sources are accounted for and that there are no unauthorized connections.

- Ensure that only allowed traffic types are present.

- Ensure that non-unicast traffic is strictly controlled.

- Ensure that packet size policies are controlled to avoid loss due to MTU mismatches.

The measurements from the exchange infrastructure are useful to members since they allow them to easily see how much traffic they are exchanging with other members through their peering relationships. This information is easy to collect using the exchange infrastructure, but much harder for members to determine independently.

The sFlow standard has long been a popular method of monitoring exchanges for a number of reasons:

- sFlow Continue reading

Network and system analytics as a Docker microservice

Microservices describes why the industry standard sFlow instrumentation embedded within cloud infrastructure is uniquely able to provide visibility into microservice deployments.

The sFlow-RT analytics engine is well suited to deployment as a Docker microservice since the application is stateless and presents network and system analytics as a RESTful service.

The following steps demonstrate how to create a containerized deployment of sFlow-RT.

First, create a directory for the project and edit the Dockerfile:

mkdir sflow-rt
cd sflow-rt
vi Dockerfile

Add the following contents to Dockerfile:

FROM centos:centos6
RUN yum install -y java-1.7.0-openjdk
RUN rpm -i http://www.inmon.com/products/sFlow-RT/sflow-rt-2.0-1072.noarch.rpm
EXPOSE 8008 6343/udp
CMD /etc/init.d/sflow-rt start && tail -f /dev/null

Build the project:

docker build -t sflow-rt .

Run the service:

docker run -p 8008:8008 -p 6343:6343/udp -d sflow-rt

Access the API at http://docker_host:8008/ to verify that the service is running.

Now configure sFlow agents to send data to the docker_host on port 6343:

- Switch configurations

- Install Host sFlow agents to monitor hosts, hypervisors, Docker, etc.

The diagram shows how new and existing cloud based or locally hosted orchestration, operations, and security tools can leverage sFlow-RT's analytics service to gain real-time visibility. The solution is extremely scalable; a single sFlow-RT instance can monitor thousands of servers and the network devices connecting them.

Microservices

Figure 1: Visibility and the software defined data center

While I genuinely believe that the network will play an immensely strategic role in the microservices world, inspecting and storing billions of API calls on a daily basis will require significant computing and storage resources. In addition, deep packet inspection could be challenging at line rates; so, sampling, at the expense of full visibility, might be an alternative. Finally, network traffic analysis must be combined with service-level telemetry data (that we already collect today) in order to get a comprehensive and in-depth picture of the distributed application.

Sampling isn't just an alternative, sampling is the key to making large scale microservice visibility a reality. Shrink ray describes how sampling acts as a scaling function, reducing the task of monitoring large scale microservice infrastructure from an intractable measurement and big data problem to a lightweight real-time data center wide visibility solution for monitoring, managing, Continue reading

Open vSwitch version 2.5 released

The recent Open vSwitch version 2.5 release includes significant network virtualization enhancements:

- sFlow agent now reports tunnel and MPLS structures.

...

- Add experimental version of OVN. OVN, the Open Virtual Network, is a system to support virtual network abstraction. OVN complements the existing capabilities of OVS to add native support for virtual network abstractions, such as virtual L2 and L3 overlays and security groups.

The sFlow Tunnel Structures specification enhances visibility into network virtualization by capturing encapsulation / decapsulation actions performed by tunnel end points. In many network virtualization implementations VXLAN, GRE, Geneve tunnels are terminated in Open vSwitch and so the new feature has broad application.

The second related feature is the inclusion of the Open Virtual Network (OVN), providing a simple method of building virtual networks for OpenStack and Docker.

The following articles provide additional background: