SC24 Real-time RoCEv2 traffic visibility

The chart shows eight 400Gbits/s RDMA over Converged Ethernet (RoCEv2) flows, of the kind typically seen in AI / ML data centers, totaling 3.2 Tbits/s. The unique challenge in this case is that the flows are being routed from locations scattered around the United States to Atlanta, the location of the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC24). SC24 Network Research Exhibit: The Resilient, Performant Networks and Distributed Processing demonstration aims to explore performance limitations and enablers for high volume bulk data transfers. Maintaining stable 400Gbits/s RoCEv2 connections over a wide area network is challenging since the packets have to traverse multiple links, avoid contention on links, and deal with buffering associated with transmission latency that is orders of magnitude higher than in the data center environments where RoCEv2 is typically deployed. One way latency across the USA is a minimum of 16 milliseconds due to the speed of light, and in practice it is quite a bit larger, whereas latency across a leaf and spine data center fabric is measured in microseconds. During setup it was noticed that total throughput with 8 concurrent flows was only 2.7Tbits/s (instead of the 3Tbits/s plus expected). Examining a Continue reading

SC24 SCinet traffic

The real-time dashboard shows total network traffic at The International Conference for High Performance Computing, Networking, Storage, and Analysis (SC24) conference being held this week in Atlanta. The dashboard shows that 31 Petabytes of data have been transferred already and the conference has just started.

The conference network used in the demonstration, SCinet, is described as the most powerful and advanced network on Earth, connecting the SC community to the world.

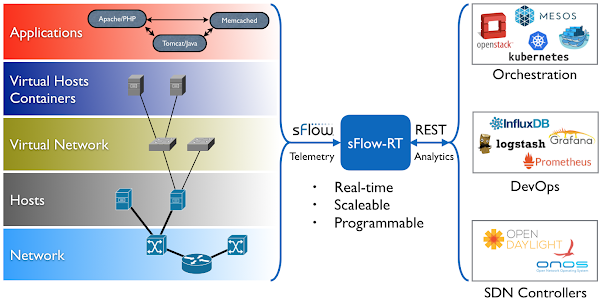

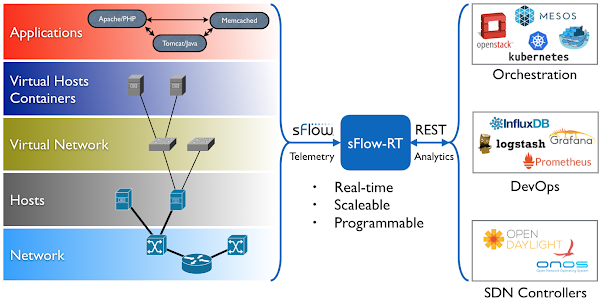

In this example, the sFlow-RT real-time analytics engine receives sFlow telemetry from switches, routers, and servers in the SCinet network and creates metrics to drive the real-time charts in the dashboard. Getting Started provides a quick introduction to deploying and using sFlow-RT for real-time network-wide flow analytics.

Finally, check out the SC24 Dropped packet visibility demonstration to learn about one of the newest developments in sFlow monitoring and see a live demonstration.

NVIDIA Cumulus Linux 5.11 for AI / ML

NVIDIA Cumulus Linux 5.11 includes major upgrades to the sFlow agent that fully expose the advanced instrumentation built into NVIDIA Spectrum-X silicon. The enhanced real-time telemetry is particularly relevant to the AI / machine learning workloads that Spectrum-X is designed to handle.

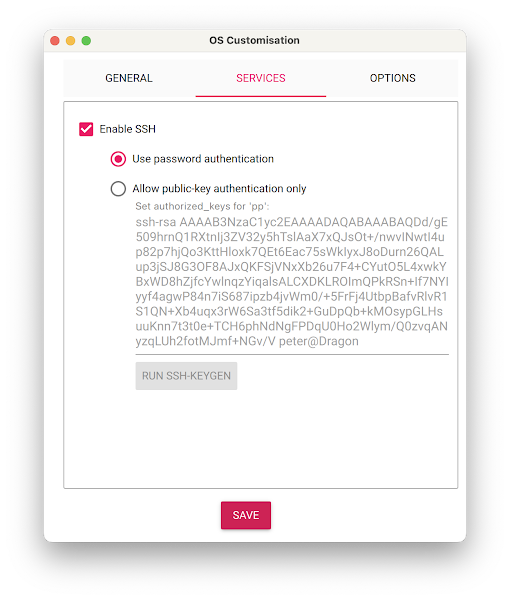

With Cumulus Linux 5.11, the sFlow agent is easily configured using nvue commands (see Monitoring System Statistics and Network Traffic with sFlow):

    nv set system sflow dropmon hw
    nv set system sflow poll-interval 20
    nv set system sflow collector 192.0.2.1
    nv set system sflow state enabled
    nv config apply

Note: In this case, enabling dropmon ensures that every dropped packet is captured, along with ingress port and drop reason (e.g. ttl_exceeded).

The same commands should be applied to every switch in the fabric for comprehensive visibility.
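Since the same commands are applied to every switch, the per-switch command list can be generated rather than typed by hand. The following is a minimal sketch, not a deployment tool: the switch names and the `sflow_commands` helper are hypothetical, and the commands themselves are only the ones shown above. How the commands are delivered to each switch (ssh, an automation framework, and so on) is left open.

```python
# Sketch: build the nvue command list shown above for each switch in a fabric.
# The helper name and the switch names are hypothetical; the commands are the
# ones listed in the article.

def sflow_commands(collector: str, poll_interval: int = 20) -> list[str]:
    """Return the nvue commands that enable sFlow with hardware drop monitoring."""
    return [
        "nv set system sflow dropmon hw",
        f"nv set system sflow poll-interval {poll_interval}",
        f"nv set system sflow collector {collector}",
        "nv set system sflow state enabled",
        "nv config apply",
    ]

# Hypothetical inventory of fabric switches.
switches = ["leaf01", "leaf02", "spine01"]

# Every switch gets the same commands, pointing at the same collector.
plan = {name: sflow_commands("192.0.2.1") for name in switches}

for name, commands in plan.items():
    print(f"# {name}")
    print("\n".join(commands))
```

Keeping the collector address and polling interval as parameters makes it harder for one switch to drift from the rest of the fabric.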

RDMA over Converged Ethernet (RoCE) describes how sFlow provides detailed visibility into RoCE flows used to move data between GPUs in an AI / ML data center fabric. The chart above from the RDMA network visibility demonstration at the SC22 conference shows that sFlow monitoring easily scales to the 400/800G speeds needed for machine learning. In this example, the sFlow-RT real-time analytics engine receives sFlow Continue reading

SC24 Dropped packet visibility demonstration

The real-time dashboard is a joint InMon / Arista demonstration at The International Conference for High Performance Computing, Networking, Storage, and Analysis (SC24) conference being held this week in Atlanta.

The conference network used in the demonstration, SCinet, is described as the most powerful and advanced network on Earth, connecting the SC community to the world.

The sFlow Packet Drop Monitoring In High Performance Networks dashboard combines telemetry from all the Arista switches in the SCinet network to provide a real-time network-wide view of performance. Each of the three charts demonstrates a different type of measurement in the sFlow telemetry stream:

- Counters: Total Traffic shows total traffic calculated from interface counters streamed from all interfaces. Counters provide a useful way of accurately reporting byte, frame, error and discard counters for each network interface. In this case, the chart rolls up data from all interfaces to trend total traffic on the network.

- Samples: Top Flows shows the top 5 largest traffic flows traversing the network. The chart is based on sFlow's random packet sampling mechanism, providing a scalable method of determining the hosts and services responsible for the traffic reported by the counters. Visibility into top flows is essential if one Continue reading

Worldwide deployment of real-time flow analytics

Industry standard sFlow telemetry is widely supported by network equipment vendors and network management platforms. However, the advent of real-time sFlow analytics has opened up a range of new applications for sFlow. The map above shows the proportion of sFlow-RT instances running in each of the over 70 countries in which it is deployed.

The following use cases are driving current deployments:

- Augmenting observability dashboards with real-time network analytics, see Flow metrics with Prometheus and Grafana

- Monitoring Internet Exchange Points, see Internet eXchange Provider (IXP) Metrics

- Automated DDoS mitigation, see DDoS protection quickstart guide

Addressing the challenge of operating AI / ML clusters is the emerging application for sFlow visibility. The high speed (400/800G) data center switches needed to handle machine learning traffic flows include sFlow agents, and real-time analytics are essential to optimize the network so that expensive GPU and compute resources are fully utilized, see Leveraging open technologies to monitor packet drops in AI cluster fabrics.

If you would like to see how real-time network analytics can transform network operations, Getting Started describes how to download and configure sFlow-RT analytics software for use in your network, or how to try it out using an emulator, or pre-captured data.

Leveraging open technologies to monitor packet drops in AI cluster fabrics

In this talk from the recent OCP Global Summit, Aldrin Isaac, eBay, describes the challenge: AI clusters operate most efficiently over lossless networks for optimum job completion times, which can be significantly impacted by dropped packets. Although networks can be designed to minimize packet loss by choosing the right network topology and optimizing network devices and protocols, an effective tool for monitoring and troubleshooting network performance is still required. Such a tool should capture packet drops, raise notifications, identify the various drop reasons, and pinpoint where the drops caused congestion. In turn, it allows the governing management application to tune configurations of relevant infrastructure components, including switches, NICs and GPU servers.

The talk shares the results and best practices of a TAM (Telemetry and Monitoring) solution being prepared for deployment at eBay. It leverages OCP’s SAI and open sFlow drop notification technologies as part of eBay’s ongoing initiatives to adopt open networking hardware and community SONiC for its data centers.

The sFlow Dropped Packet Notification Structures extension mentioned in the talk adds real-time packet drop notifications (including dropped packet header and drop reason) as part of an industry standard sFlow telemetry feed, making the data available to open source and commercial sFlow analytics Continue reading

OCP Global Summit 2024

AI networking is a popular topic at the upcoming OCP Global Summit in San Jose, California, with an entire morning on Wednesday October 16 devoted to the subject. Of particular interest is the talk, Leveraging open technologies to monitor packet drops in AI cluster fabrics, by Aldrin Isaac, eBay, describing the challenge: AI clusters operate most efficiently over lossless networks for optimum job completion times, which can be significantly impacted by dropped packets. Although networks can be designed to minimize packet loss by choosing the right network topology and optimizing network devices and protocols, an effective tool for monitoring and troubleshooting network performance is still required. Such a tool should capture packet drops, raise notifications, identify the various drop reasons, and pinpoint where the drops caused congestion. In turn, it allows the governing management application to tune configurations of relevant infrastructure components, including switches, NICs and GPU servers.

The talk will share the results and best practices of a TAM (Telemetry and Monitoring) solution being prepared for deployment at eBay. It leverages OCP’s SAI and open sFlow drop notification technologies as part of eBay’s ongoing initiatives to adopt open networking hardware and community SONiC for its data centers.

Vector Packet Processor (VPP)

VPP with sFlow - Part 1 and VPP with sFlow - Part 2 describe the journey to add industry standard sFlow instrumentation to the Vector Packet Processor (VPP), an Open Source Terabit Software Dataplane for software routers running on commodity x86 / ARM hardware.

The main conclusions based on testing described in the two VPP blog posts are:

- If sFlow is not enabled on a given interface, there is no regression on other interfaces.

- If sFlow is enabled, copying packets costs 11 CPU cycles on average.

- If sFlow takes a sample, it takes only marginally more CPU time to enqueue.

- No sampling gets 9.88Mpps of IPv4 and 14.3Mpps of L2XC throughput,

- 1:1000 sampling reduces to 9.77Mpps of L3 and 14.05Mpps of L2XC throughput,

- and an overly harsh 1:100 reduces to 9.69Mpps and 13.97Mpps only.
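To put the throughput figures above in proportion, the relative cost of sampling can be worked out directly from the quoted numbers. The sketch below only does that arithmetic; the dictionary layout and the `reduction_percent` helper are illustrative, and the L3 label is assumed to refer to the same IPv4 forwarding test in all three bullets.

```python
# Sketch: relative throughput reduction computed from the Mpps figures quoted above.
# Assumes the "IPv4" and "L3" figures refer to the same forwarding test.

baseline = {"L3": 9.88, "L2XC": 14.3}  # no sampling, in Mpps

sampled = {
    "1:1000": {"L3": 9.77, "L2XC": 14.05},
    "1:100": {"L3": 9.69, "L2XC": 13.97},
}

def reduction_percent(base: float, measured: float) -> float:
    """Percentage drop in throughput relative to the unsampled baseline."""
    return 100.0 * (base - measured) / base

for rate, results in sampled.items():
    for path, mpps in results.items():
        pct = reduction_percent(baseline[path], mpps)
        print(f"{rate} {path}: {mpps} Mpps, {pct:.2f}% below baseline")
```

On these numbers the reduction is roughly 1 to 2 percent at 1:1000 and roughly 2 percent at 1:100.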

The VPP sFlow plugin provides a lightweight method of exporting real-time sFlow telemetry from a VPP based router. Including the plugin with VPP distributions has no impact on performance. Enabling the plugin provides real-time visibility that opens up additional use cases for VPP's programmable dataplane. For example, VPP is well suited to packet filtering use cases where the number of Continue reading

Emulating congestion with Containerlab

The Containerlab dashboard above shows variation in throughput in a leaf and spine network due to large "Elephant" flow collisions in an emulated network, see Leaf and spine traffic engineering using segment routing and SDN for a demonstration of the issue using physical switches.

This article describes the steps needed to emulate realistic network performance problems using Containerlab. First, using the FRRouting (FRR) open source router to build the topology provides a lightweight, high performance routing implementation that can be used to efficiently emulate large numbers of routers using the native Linux dataplane for packet forwarding. Second, the containerlab tools netem set command can be used to introduce packet loss, delay, or jitter, or to restrict the bandwidth of ports.

The netem tool makes use of the Linux tc (traffic control) module. Unfortunately, if you are using Docker desktop, the minimal virtual machine used to run containers does not include the tc module.

    multipass launch docker

Instead, use Multipass as a convenient way to create and start an Ubuntu virtual machine with Docker support on your laptop. If you are already on a Linux system with Docker installed, skip forward to the git clone step.

    multipass ls

List the multipass virtual machines.

Continue reading

Dropped packet metrics with Prometheus and Grafana

Dropped packets due to black hole routes, buffer exhaustion, expired TTLs, MTU mismatches, etc. can result in insidious connection failures that are time consuming and difficult to diagnose. Dropped packet notifications with Arista Networks, VyOS dropped packet notifications and Using sFlow to monitor dropped packets describe implementations of the sFlow Dropped Packet Notification Structures extension for Arista Networks switches, VyOS routers, and Linux servers respectively, providing end to end visibility into packet drop events (including switch port, drop reason and packet header for each dropped packet).

Flow metrics with Prometheus and Grafana describes how to define flow metrics and create dashboards to trend the flow metrics over time. This article describes how the same setup can be used to define and trend metrics based on dropped packet notifications.

    - job_name: sflow-rt-drops
      metrics_path: /app/prometheus/scripts/export.js/flows/ALL/txt
      static_configs:
        - targets: ['sflow-rt:8008']
      params:
        metric: ['dropped_packets']
        key:
          - 'node:inputifindex'
          - 'ifname:inputifindex'
          - 'reason'
          - 'stack'
          - 'macsource'
          - 'macdestination'
          - 'null:vlan:untagged'
          - 'null:[or:ipsource:ip6source]:none'
          - 'null:[or:ipdestination:ip6destination]:none'
          - 'null:[or:icmptype:icmp6type:ipprotocol:ip6nexthdr]:none'
        label:
          - 'switch'
          - 'port'
          - 'reason'
          - 'stack'
          - 'macsource'
          - 'macdestination'
          - 'vlan'
          - 'src'
          - 'dst'
          - 'protocol'
        value: ['frames']
        dropped: ['true']
        maxFlows: ['20']
        minValue: ['0.001']

The Prometheus scrape configuration above is used to Continue reading
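As a rough illustration of what a scrape job like this turns into on the wire: Prometheus sends an HTTP GET to `metrics_path` on each target, with the `params` entries encoded as URL query parameters and list values repeated once per item. The sketch below builds an equivalent URL in Python from a shortened subset of the keys and labels above. It assumes the default `http` scheme, and the order of parameters may differ from what Prometheus itself sends.

```python
# Sketch: the kind of request URL a Prometheus scrape job with params produces.
# Uses a shortened subset of the key/label lists from the configuration above.
from urllib.parse import urlencode

target = "sflow-rt:8008"
metrics_path = "/app/prometheus/scripts/export.js/flows/ALL/txt"

params = {
    "metric": ["dropped_packets"],
    "key": ["node:inputifindex", "ifname:inputifindex", "reason"],
    "label": ["switch", "port", "reason"],
    "value": ["frames"],
    "dropped": ["true"],
    "maxFlows": ["20"],
    "minValue": ["0.001"],
}

# doseq=True repeats a parameter once for every item in its list.
url = f"http://{target}{metrics_path}?{urlencode(params, doseq=True)}"
print(url)
```

Pasting a URL of this shape into a browser or curl is a quick way to check what the exporter returns before pointing Prometheus at it.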

Dropped packet notifications with Arista Networks

Visibility into dropped packets is essential for Artificial Intelligence/Machine Learning (AI/ML) workloads, where a single dropped packet can stall large scale computational tasks, idling millions of dollars worth of GPU/CPU resources, and delaying the completion of business critical workloads. Enabling real-time sFlow telemetry provides the observability into traffic flows and packet drops needed to effectively manage these networks.

The availability of the Arista EOS 4.31.4M maintenance release brings sFlow dropped packet monitoring (previously demonstrated using the 4.30.1F feature release, see SC23 Dropped packet visibility demonstration) to production networks, see EOS Life Cycle Policy.

    sflow sampling 50000
    sflow polling-interval 20
    sflow vrf mgmt destination 203.0.113.100
    sflow vrf mgmt source-interface Management0
    sflow run

The above Arista EOS commands enable sFlow counter polling and packet sampling on all ports, sending the sFlow telemetry to the sFlow analyzer at 203.0.113.100.

    flow tracking mirror-on-drop
       sample limit 100 pps
       !
       tracker SFLOW
          exporter SFLOW
             format sflow
             collector sflow
             local interface Management0
       no shutdown

The above commands add sFlow Dropped Packet Notification Structures to the sFlow telemetry feed using Broadcom Mirror on Drop (MoD) instrumentation. Broadcom implements mirror-on-drop in Jericho 2, Trident 3, and Tomahawk 3, Continue reading

VyOS 1.4 LTS released

[Image: Protectli Vault - 4 Port]

The VyOS 1.4.0 (Sagitta) LTS release announcement is exciting news! VyOS is an open source router operating system based on Linux that can be installed on commodity PC hardware - for optimal performance at least 1GB RAM and 4GB of storage space is recommended.

The new 1.4 LTS release includes a significantly enhanced implementation of industry standard sFlow telemetry based on the open source Host sFlow agent.

    set system sflow interface eth0
    set system sflow interface eth1
    set system sflow interface eth2
    set system sflow interface eth3
    set system sflow polling 30
    set system sflow sampling-rate 1000
    set system sflow drop-monitor-limit 50
    set system sflow server 192.0.2.100

Enter the commands above to enable sFlow monitoring on interfaces eth0, eth1, eth2, and eth3. Interface counters will be exported every 30 seconds, packets will be sampled with probability 1/1000, and up to 50 packet headers (and drop reasons) per second will be collected from packets dropped by the router. The sFlow telemetry stream will be sent to an sFlow collector at 192.0.2.100.
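To make the 1/1000 sampling probability concrete: an sFlow analyzer estimates totals by scaling what it sees in the samples by the sampling rate, so each sampled packet stands in for roughly 1000 packets on the wire. The following is a minimal sketch of that scaling only. The `estimate_totals` helper and the sample sizes are hypothetical, and a real analyzer such as sFlow-RT does considerably more than this.

```python
# Sketch: scaling packet samples by the sampling rate to estimate totals.
# The helper and the example sample sizes are hypothetical.

SAMPLING_RATE = 1000  # matches "sampling-rate 1000" in the configuration above

def estimate_totals(sampled_packet_sizes: list[int],
                    sampling_rate: int = SAMPLING_RATE) -> tuple[int, int]:
    """Return (estimated packets, estimated bytes) for the sampled traffic."""
    est_packets = len(sampled_packet_sizes) * sampling_rate
    est_bytes = sum(sampled_packet_sizes) * sampling_rate
    return est_packets, est_bytes

# Hypothetical sizes, in bytes, of four sampled packets.
samples = [1500, 64, 1500, 9000]
packets, byte_count = estimate_totals(samples)
print(f"estimated packets: {packets}, estimated bytes: {byte_count}")
```

The estimate becomes more accurate as more samples are collected, which is why a fixed sampling rate works well for identifying large flows.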

Running Docker on the sFlow collector makes it easy to run a variety of Continue reading

Raspberry Pi 5 network emulation with Containerlab

The GitHub sflow-rt/containerlab project contains example network topologies for the Containerlab network emulation tool that demonstrate real-time streaming telemetry in realistic data center topologies and network configurations. The examples use the same FRRouting (FRR) engine that is part of SONiC, NVIDIA Cumulus Linux, and DENT network operating systems. Containerlab can be used to experiment before deploying solutions into production. Examples include: tracing ECMP flows in leaf and spine topologies, EVPN visibility, and automated DDoS mitigation using BGP Flowspec and RTBH controls. Raspberry Pi 5 real-time network analytics describes how to install Docker on a Raspberry Pi 5.

    docker run hello-world

Run the hello-world container to verify that Docker is properly installed and running before proceeding.

    git clone https://github.com/sflow-rt/containerlab.git

Download the sflow-rt/containerlab project from GitHub.

    cd containerlab
    ./run-clab

Start Containerlab.

    containerlab deploy -t clos5.yml

Start the 5 stage leaf and spine topology shown at the top of this page. The initial launch may take a couple of minutes as the container images are downloaded for the first time. Once the images are downloaded, the topology deploys in around 10 seconds.

    ./topo.py clab-clos5

Push the topology to the sFlow-RT analytics software. An instance of the sFlow-RT Continue reading

Raspberry Pi 5 real-time network analytics

[Image: CanaKit Raspberry Pi 5 Starter Kit - Aluminum]

    ssh [email protected]

Use ssh to log into the Raspberry Pi (having installed the micro SD card).

    sudo apt-get update && sudo apt-get -y upgrade

Update packages and OS to the latest version.

curl Continue reading

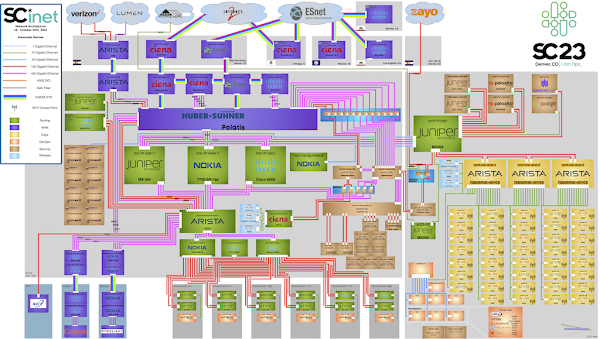

SC23 Over 6 Terabits per Second of WAN Traffic

The world’s fastest temporary internet service gets turned on in Denver for one week only describes the SCinet temporary network built to support The International Conference for High Performance Computing, Networking, Storage, and Analysis (SC23) this week in Denver. The SC23 WAN Stress Test chart demonstrates that the provisioned 6.71 terabits per second capacity was pushed to the limits. SC23 SCinet traffic describes the architecture of the real-time monitoring system used to comprehensively monitor the SCinet network and generate these charts. This chart shows that over 175 Petabytes of data were transferred during the show.

SC23 Dropped packet visibility demonstration describes a joint demonstration by InMon Corp and Arista Networks of one of the newest developments in sFlow telemetry, identifying every dropped packet, the reason it was dropped, and the location it was dropped across all the switches in real-time. SC23 WiFi Traffic Heatmap shows a real-time view of WiFi usage at the conference displayed on a conference floorplan. Finally, SC23 Data Transfer Node TCP Metrics demonstrates how standard metrics maintained by the Linux kernel can be used to augment sFlow telemetry and track the performance of large science data transfers.

SC23 Data Transfer Node TCP Metrics

The dashboard shown above is based on the open source sflow-rt/dtn project. The dashboard shows data captured from The International Conference for High Performance Computing, Networking, Storage, and Analysis (SC23) being held this week in Denver.

The dashboard displays data gathered from open source Host sFlow agents installed on Data Transfer Nodes (DTNs) run by the Caltech High Energy Physics Department and used for handling transfer of large scientific data sets (for example, accessing experiment data from the CERN particle accelerator). Network performance monitoring describes how the Host sFlow agents augment standard sFlow telemetry with measurements that the Linux kernel maintains as part of the normal operation of the TCP protocol stack.

The dashboard shows 5 large flows (greater than 50 Gigabits per Second). For each large flow being tracked, additional TCP performance metrics are displayed:

- RTT: The round trip time observed between DTNs.

- RTT Wait: The amount of time that data waits on the sender before it can be sent.

- RTT Sdev: The standard deviation of the observed RTT. This variation is a measure of jitter.

- Avg. Packet Size: The average packet size used to send data.

- Packets in Flight: The number of unacknowledged packets.
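These metrics are related to each other. As a rule of thumb, the data in flight (packets in flight times average packet size) divided by the round trip time gives an upper estimate of the throughput a TCP connection can sustain. The sketch below applies that standard relationship. The helper name and the input values are hypothetical and are not readings from the SC23 dashboard.

```python
# Sketch: estimating achievable throughput from the TCP metrics listed above.
# The helper name and the example values are hypothetical.

def estimated_throughput_bps(packets_in_flight: int,
                             avg_packet_size_bytes: int,
                             rtt_seconds: float) -> float:
    """Bits in flight divided by round trip time, in bits per second."""
    bits_in_flight = packets_in_flight * avg_packet_size_bytes * 8
    return bits_in_flight / rtt_seconds

# Hypothetical flow: 20,000 unacknowledged 9,000 byte packets, 30 ms RTT.
rate = estimated_throughput_bps(20_000, 9_000, 0.030)
print(f"{rate / 1e9:.1f} Gbit/s")
```

Read the other way round, the same relationship shows why long distance flows need many packets in flight to reach rates above 50 Gigabits per second.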

See Defining Flows for full range of Continue reading

SC23 WiFi Traffic Heatmap

Additional use cases being demonstrated this week include SC23 Dropped packet visibility demonstration and SC23 SCinet traffic.

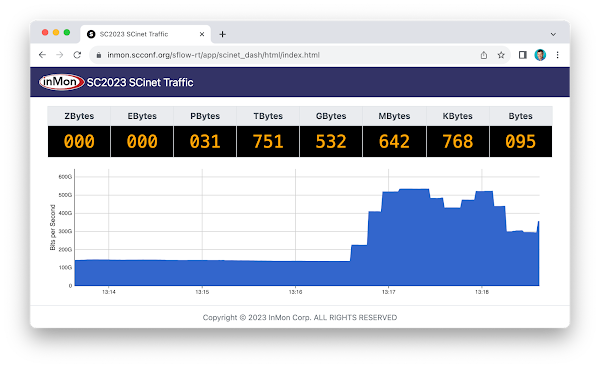

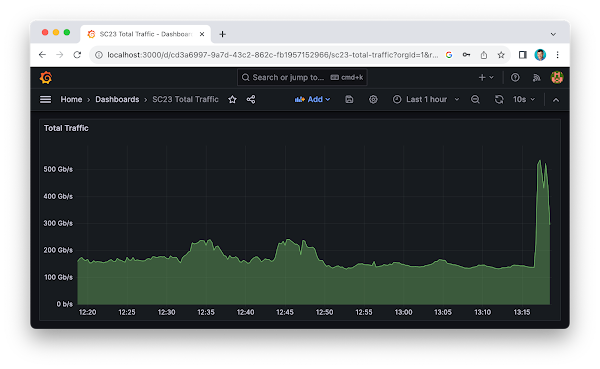

SC23 SCinet traffic

The real-time dashboard shows total network traffic at The International Conference for High Performance Computing, Networking, Storage, and Analysis (SC23) conference being held this week in Denver. The dashboard shows that 31 Petabytes of data have been transferred already and the conference hasn't even started.

The conference network used in the demonstration, SCinet, is described as the most powerful and advanced network on Earth, connecting the SC community to the world.

In this example, the sFlow-RT real-time analytics engine receives sFlow telemetry from switches, routers, and servers in the SCinet network and creates metrics to drive the real-time charts in the dashboard. Getting Started provides a quick introduction to deploying and using sFlow-RT for real-time network-wide flow analytics.

The dashboard above trends SC23 Total Traffic. The dashboard was constructed using the Prometheus time series database to store metrics retrieved from sFlow-RT and Grafana to build the dashboard. Deploy real-time network dashboards using Docker compose demonstrates how to deploy and configure these tools to create custom dashboards like the one shown here.

Finally, check out the SC23 Dropped packet visibility demonstration to learn about one of the newest developments in sFlow monitoring and see a live demonstration.

SC23 Dropped packet visibility demonstration

The real-time dashboard is a joint InMon / Arista Network Research Exhibition, SC23-NRE-026 Standard Packet Drop Monitoring In High Performance Networks, a part of The International Conference for High Performance Computing, Networking, Storage, and Analysis (SC23) conference being held this week in Denver. The conference network used in the demonstration, SCinet, is described as the most powerful and advanced network on Earth, connecting the SC community to the world.

The SC23-NRE-026 Standard Packet Drop Monitoring In High Performance Networks dashboard combines telemetry from all the Arista switches in the SCinet network to provide a real-time network-wide view of performance. Each of the three charts demonstrates a different type of measurement in the sFlow telemetry stream:

- Counters: Total Traffic shows total traffic calculated from interface counters streamed from all interfaces. Counters provide a useful way of accurately reporting byte, frame, error and discard counters for each network interface. In this case, the chart rolls up data from all interfaces to trend total traffic on the network.

- Samples: Top Flows shows the top 5 largest traffic flows traversing the network. The chart is based on sFlow's random packet sampling mechanism, providing a scalable method of determining the hosts and services responsible Continue reading

Internet eXchange Provider (IXP) Metrics

IXP Metrics is available on GitHub. The application provides real-time monitoring of traffic between members of an Internet eXchange Provider (IXP) network.

This article uses Arista switches as an example to illustrate the steps needed to deploy the monitoring solution. However, these steps should work for other network equipment vendors, provided you modify the vendor specific elements in this example.

    git clone https://github.com/sflow-rt/prometheus-grafana.git
    cd prometheus-grafana
    env RT_IMAGE=ixp-metrics ./start.sh

The easiest way to get started is to use Docker, see Deploy real-time network dashboards using Docker compose, and deploy the sflow/ixp-metrics image bundling the IXP Metrics application.

    scrape_configs:
      - job_name: sflow-rt-ixp-metrics
        metrics_path: /app/ixp-metrics/scripts/metrics.js/prometheus/txt
        static_configs:
          - targets: ['sflow-rt:8008']

Follow the directions in the article to add a Prometheus scrape task to retrieve the metrics.

    sflow source-interface management 1
    sflow destination 10.0.0.50
    sflow polling-interval 20
    sflow sample 50000
    sflow run

Enable sFlow on all exchange switches, directing sFlow telemetry to the Docker host (in this case 10.0.0.50).

Use the sFlow-RT Status page to confirm that sFlow is being received from the switches. In this case 286 sFlow datagrams per second are being received from 9 switches. The IX-F Member Export JSON Schema Continue reading