HashiCorp $100M Series D Renders $1.9B Valuation

The company offers open source-based software platforms that allow enterprises to manage distributed application infrastructure. It competes against companies like Puppet and Chef.

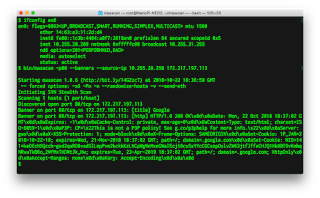

Masscan and massive address lists

I saw this go by on my Twitter feed. I thought I'd blog on how masscan solves the same problem.

"If you do @nmap scanning with big exclusion lists, things are about to get a lot faster. ;)" - Daniel Miller ✝ (@bonsaiviking), November 1, 2018

Both nmap and masscan are port scanners. The difference is that nmap does an intensive scan on a limited range of addresses, whereas masscan does a light scan on a massive range of addresses, including the range of 0.0.0.0 - 255.255.255.255 (all addresses). If you've got a 10-Gbps link to the Internet, masscan can scan the entire Internet in under 10 minutes, from a single desktop-class computer.
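As a rough sanity check on that "under 10 minutes" figure, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope estimate, not masscan's own code. It assumes one probe per address (a single port), that every probe is a minimum-size Ethernet frame occupying 84 bytes on the wire, and that the sender keeps the 10-Gbps link fully saturated. The function names are made up for illustration.

```python
# Back-of-the-envelope estimate of how long a one-port scan of all of IPv4 takes.
ADDRESS_SPACE = 2 ** 32      # every IPv4 address, 0.0.0.0 - 255.255.255.255
LINK_BPS = 10 * 10 ** 9      # 10-Gbps link

# Assumption: each probe is a minimum-size Ethernet frame (64 bytes) plus
# 8 bytes of preamble and a 12-byte inter-frame gap, so 84 bytes on the wire.
WIRE_BYTES_PER_PROBE = 64 + 8 + 12


def max_packets_per_second(link_bps, wire_bytes):
    """Upper bound on probes per second at line rate."""
    return link_bps / (wire_bytes * 8)


def scan_seconds(targets, pps):
    """Time to send one probe to each target at the given packet rate."""
    return targets / pps


pps = max_packets_per_second(LINK_BPS, WIRE_BYTES_PER_PROBE)
seconds = scan_seconds(ADDRESS_SPACE, pps)
print(f"{pps / 1e6:.2f} Mpps, {seconds / 60:.1f} minutes")
# prints "14.88 Mpps, 4.8 minutes"
```

Under those assumptions the theoretical floor is a little under five minutes, so a real scan that does not quite reach line rate still fits inside the ten-minute figure.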

Education Service Center Region 11 Protects Student Data with VMware NSX Data Center

Rory Peacock is the Deputy Executive Director of Technology at Education Service Center Region 11, where he oversees all technology services provided to Region 11 schools.

Region 11 is one of 20 education service centers throughout the State of Texas. In Texas, an education service center manages education programs, delivers technical assistance, and provides professional development to schools within its region. With regard to technology, education service centers assist their schools with hosted services and technical support.

Education Service Center Region 11 serves 70,699 educators and almost 600,000 students across 10 urban and rural counties.

I had the opportunity to talk to Rory about some of his largest technology challenges since he joined Region 11 in 2015.

Day Zero

Region 11 is a long-time VMware customer, introducing VMware vSphere in 2009. Since then, Region 11 has virtualized over 95% of their server environment. They’ve also made the move to virtual desktops utilizing VMware Horizon to support their 200 employees.

On the very day in 2016 that a meeting was set with the VMware NSX Data Center team to demo the product, Region 11 was hit with a zero-day ransomware attack. A legacy system was hit in its demilitarized ...

Edgeworx ioFog Platform Drills Software Down to the Edge

“Our goal is to bring a full software layer down to the lowest edge layer possible and to make building for the edge as easy as building for the cloud,” says CEO Kilton Hopkins.

IoT Tales of Horror (Inspired by Real-Life Events)

Happy Halloween! In some parts of the world, people are celebrating this holiday of horror by dressing up as monsters or other frights and watching scary movies. But sometimes these tales can be just a little boring. Pod people? Headless horsemen? Replicant children? Whatever.

I present the real horror stories of Halloween – and every other day of the year. These tales are inspired by real-life events and are guaranteed to give you a chill. (And not just because your smart thermostat is being controlled by a shapeshifting clown who lives in the sewer!)

I(o)T

In the fall of 2018, a group of kids work together to destroy an evil malware, which infects connected toys and preys on the children of their small town.

Inspired by the terrifying vulnerabilities found in everyday connected toys.

Night of the Living Devices

There’s panic across the Internet as connected devices suddenly begin attacking critical Internet infrastructure. The film follows a group of network operators as they frantically work to protect the Internet from these packet-spewing, infected devices.

Inspired by the harrowing events of the 2016 Dyn attack.

Rosemary’s Baby Monitor

A young family moves into a house billed as the “smart ...

MEF Releases SD-WAN Technical Specification to Its Members

MEF aims for its SD-WAN service standardization to accelerate sales of SD-WAN products and services, as its Carrier Ethernet service standardization did.

Deploying Secure Kubernetes Containers in Production: Thwarting the Threats

Once you have containers in production, certain security measures must be put in place to cover network filtering, container inspection, and host security.

Oracle Cloud Tech Seems Solid. But Is It Too Late?

The company’s got about a year to turn around its Oracle cloud business if it’s going to meet its lofty goals.

Systemd is bad parsing and should feel bad

Systemd has a remotely exploitable bug in its DHCPv6 client. That means anybody on the local network can send you a packet and take control of your computer. The flaw is a typical buffer overflow. Several news stories have pointed out that this client was written from scratch, as if that were the moral failing, instead of reusing existing code. That's not the problem.

Trump Wants a National 5G Spectrum Policy

The president issued a memorandum that is intended to make more spectrum available so that operators can more quickly deploy 5G.

Join Us: Immerse Yourself in Networking and Security at VMworld Europe

We are looking forward to a fantastic show at VMworld 2018 Europe. We have a ton of great content on networking and security to share with you including breakouts, labs, activities, and parties! You’ll have prime opportunities to learn about the Virtual Cloud Network, the latest on the NSX product portfolio, and network with your peers and VMware experts.

To get you started for a packed week of learning and fun, we highly recommend attending our two showcase keynotes:

First, at the NSX Keynote: Building the Network of the Future with the Virtual Cloud Network (Tuesday, 06 November, 14:00 – 15:00), you’ll hear Tom Gillis, the new GM of NSBU, lead discussions on:

- VMware’s vision for networking with the Virtual Cloud Network

- The latest and greatest innovations across the NSX product portfolio

- Joint solutions we are bringing to the cloud with IBM

- A special conversation with key customers about how they are using NSX today

- And, demonstrations showing the entire product portfolio in action including NSX Data Center, NSX Cloud, NSX SD-WAN by VeloCloud, and AppDefense

Next, at the Security Keynote: Transforming Security in a Cloud and Mobile World (Wednesday, 07 November, 14:00 – 15:00), Tom Corn, ...

Check Point Buys Cloud Security Startup Dome9 for $175 Million

Dome9’s platform will add new capabilities — specifically cloud management and policy enforcement — to Check Point’s recently launched CloudGuard product portfolio.

Alibaba Cloud Brings Its Blockchain Service to the Global Stage

The blockchain service is based on the Linux Foundation’s Hyperledger Fabric and the Alibaba Group financial affiliate’s blockchain platform.

Cisco, Nokia, Microsoft Back $85M Team8-Led Security Fund

These investors and other major companies also joined a Team8-led coalition that aims to rethink security by building it into network and cloud infrastructure.

Samsung, NEC Join Forces on 5G

The partnership will provide Samsung with access to the Japanese market and NEC with its first hardware foray outside of its home market.

NSX at VMworld 2018 Europe – The Technical Session Guide

Hola! VMworld 2018 Europe is around the corner and we look forward to connecting with our VMware NSX community in Barcelona. Before we go into session recommendations, let us give you a recap on what we have been up to since VMworld US.

Since VMworld US in August 2018, we have announced general availability for NSX-T Data Center 2.3. NSX-T Data Center 2.3 extends NSX platform support to Bare Metal servers, enhances multi-cloud control in AWS & Azure, advances security with N/S service insertion, and has many more enhancements.

Our friends from vSphere have also released vSphere 6.7 Update 1, which now provides NSX-T N-VDS visualization in vCenter.

For automation fans, we have a Concourse CI pipeline which automates the NSX-T install. This pipeline can be used to stand up entire NSX-T environments on vSphere clusters by filling in a simple parameter file.

NSX-T Concourse Pipeline information

Now, let’s talk about our VMworld Europe line-up. As usual, we have great technical deep-dives, deployment stories, and hands-on labs for you. For the technical enthusiasts who are interested in deep-dives and deployment strategies, here is a set of “geek” sessions to choose ...

Masscan as a lesson in TCP/IP

When learning TCP/IP it may be helpful to look at the masscan port scanning program, because it contains its own network stack. This concept, "contains its own network stack", is so unusual that it'll help resolve some confusion you might have about networking. It'll help challenge some (incorrect) assumptions you may have developed about how networks work.

Fortinet Buys ZoneFox, a Security Startup That Hunts for Insider Threats

The company’s software enables organizations to see where business-critical data is going and if people are doing things with it that they shouldn’t be – either accidentally or maliciously.

Oracle Buys DataFox, Pledges to Embed AI Into Everything

Oracle co-CEO Mark Hurd predicted that by 2025 all cloud apps will include artificial intelligence. And likely because of this AI strategy, the company reached a deal to acquire DataFox and its cloud-based AI data engine.

How to Balance Cloud-Scale Efficiencies Against Business Realities

The widespread deployment of cloud infrastructure has led IT teams to demand freedom of choice, but that might not always be what’s best for the organization as a whole.
