RSA Adds Security Orchestration, Automation to SIEM Platform

RSA today rolled out an orchestration and automation tool for its SIEM product. It also reached a deal to acquire Fortscale, a behavioral analytics startup.

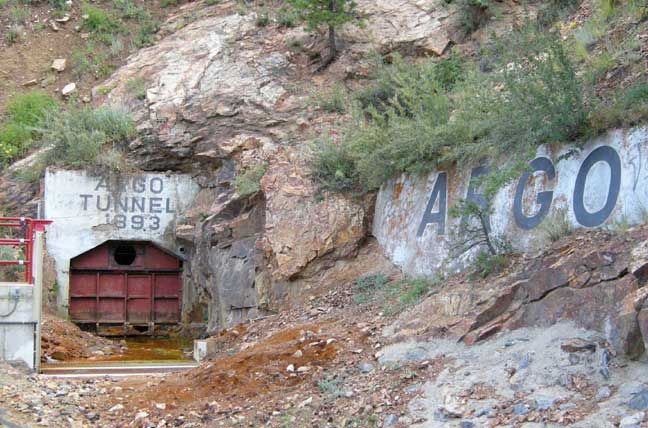

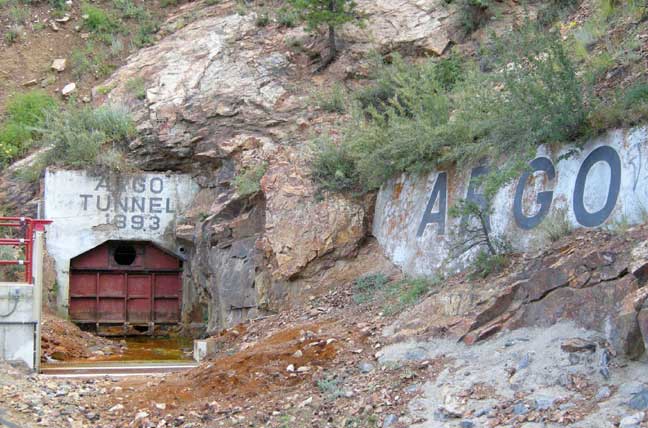

Argo Tunnel: A Private Link to the Public Internet

Photo from Wikimedia Commons

Today we’re introducing Argo Tunnel, a private connection between your web server and Cloudflare. Tunnel makes it so that only traffic that routes through Cloudflare can reach your server.

You can think of Argo Tunnel as a virtual P.O. box. It lets someone send you packets without knowing your real address. In other words, it’s a private link. Only Cloudflare can see the server and communicate with it, and for the rest of the internet, it’s unroutable, as if the server is not even there.

How this used to be done

This type of private deployment used to be accomplished with GRE tunnels. But GRE tunnels are expensive and slow; they don’t really make sense in a 2018 internet.

GRE is a tunneling protocol for sending data between two servers by simulating a physical link. Configuring a GRE tunnel requires coordination between network administrators from both sides of the connection. It is an expensive service that is usually only available for large corporations with dedicated budgets. The GRE protocol encapsulates packets inside other packets, which means that you will have to either lower the MTU of your origin servers, or have your router do Continue reading
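To make the MTU point concrete, here is a minimal sketch of the arithmetic, assuming plain GRE over IPv4 with no optional GRE fields (key, checksum, sequence number) and no IP or TCP options. The function names are illustrative and are not taken from the original post.

```python
# Header sizes in bytes under the stated assumptions.
IPV4_HEADER = 20  # outer IPv4 header without options
GRE_HEADER = 4    # base GRE header without key/checksum/sequence fields
TCP_HEADER = 20   # TCP header without options


def gre_tunnel_mtu(link_mtu: int = 1500) -> int:
    """Largest inner packet that fits in one outer packet on the link."""
    return link_mtu - IPV4_HEADER - GRE_HEADER


def tcp_mss(mtu: int) -> int:
    """Largest TCP payload that fits in a packet of the given MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


if __name__ == "__main__":
    mtu = gre_tunnel_mtu(1500)
    print(f"tunnel MTU: {mtu} bytes, TCP MSS: {tcp_mss(mtu)} bytes")
```

On a standard 1500-byte Ethernet link this leaves 1476 bytes for the encapsulated packet, which is why origin servers behind a GRE tunnel typically need a lower MTU.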

How the Lenca are Restoring the Past to Build their Future

The Internet has the potential to enable Indigenous communities to continue living on remote traditional lands without diminishing their access to services and information. The potential can go a long way towards closing the digital divide and offers new opportunities while preserving Indigenous culture.

In the ongoing debate about what difference the digital makes to the concept of Indigeneity itself, the voices of Indigenous people are what has been missing. It should be left up to community members to be caught up in this age of information and build the future on their own terms.

This is the story of the Lenca people of Azacualpa, an Indigenous community of Yamaranguila in Intibucá, Honduras. In June 2017 they decided to start their relationship with technology by creating Radio Azacualpa, a radio station run by women, with the support of Cultural Survival’s Community Media Grants Project. It was a dream come true.

The radio tagline “La voz de las Mujeres” – the voice of women – says it all. “One of our goals as a radio station is to achieve recognition of our rights as women and to achieve equality,” explains Maria Santos, leader of the Azacualpa community.

In 2018, the Lenca decided Continue reading

Building Your Own Network Monitoring Sensor

You can create a sensor to monitor wired and WiFi networks and gain insight into how networks are performing for remote end users.

VMware NSX Manager Deployment Guide

In this excerpt from "Learning VMware NSX, Second Edition" see the steps involved in setting up the network virtualization software.

Don’t Get Obsessed with REST API

REST API is the way of the world and all network devices should support it, right? Well, Ken Duda (Arista) disagreed with this idea during his Networking Field Day presentation, but unfortunately there wasn’t enough time to go into the details that would totally derail the presentation anyway.

Fixing that omission: should we have REST API on network devices or not?

Read more ...

AWS Puts More Muscle Behind Machine Learning And Database

Amazon Web Services essentially sparked the public cloud race a dozen years ago when it first launched the Elastic Compute Cloud (EC2) service and then in short order the Simple Storage Service (S3), giving enterprises access to the large amount of compute and storage resources that its giant retail business leaned on.

Since that time, AWS has grown rapidly in the number of services it offers, the number of customers it serves, the amount of money it brings in and the number of competitors – including Microsoft, IBM, Google, Alibaba, and Oracle – looking to chip away …

AWS Puts More Muscle Behind Machine Learning And Database was written by Jeffrey Burt at The Next Platform.

VMware Cloud on AWS with Direct Connect: NSX Networking and vMotion to the Cloud with Demo

Check out my prior posts here on the VMware Network Virtualization blog on how NSX is leveraged in VMware Cloud on AWS to provide all the networking and security features. These prior posts provide a foundation that this blog post builds on. In this blog post I discuss how AWS Direct Connect can be leveraged with VMware Cloud on AWS to provide high bandwidth, low latency connectivity to an SDDC deployed in VMware Cloud on AWS. This is one of my favorite features as it provides high bandwidth, low latency connectivity from on-prem directly into the customer’s VMware Cloud on AWS VPC, enabling better and more consistent connectivity and performance while also enabling live migration/vMotion from on-prem to cloud! I want to thank my colleague, Venky Deshpande, who helped with some of the details in this post. Continue reading

Stackery Is Served New Funds, Adds Health Monitor for Serverless Apps

The company maintains close ties to AWS' Lambda serverless platform, but it is working more closely with Microsoft Azure.

Where Is My Feature Request?

Getting a feature request implemented by your vendor can be a long and painful process. In this post we will take a look at some of the reasons feature request processes take so long and what a customer can do to avoid some of the pain and suffering.

Combating Ransomware in Multi-Cloud Environments

The rapidly evolving threat environment, coupled with a more vulnerable enterprise network, signals a paradigm shift in how organizations will protect data residing on multi-cloud systems.

Inside Nvidia’s NVSwitch GPU Interconnect

At the GPU Technology Conference last week, we told you all about the new NVSwitch memory fabric interconnect that Nvidia has created to link multiple “Volta” GPUs together and that is at the heart of the DGX-2 system that the company has created to demonstrate its capabilities and to use on its internal Saturn V supercomputer at some point in the future.

Since the initial announcements, more details have been revealed by Nvidia about NVSwitch, including details of the chip itself and how it helps applications wring a lot more performance from the GPU accelerators.

Our first observation upon looking …

Inside Nvidia’s NVSwitch GPU Interconnect was written by Timothy Prickett Morgan at The Next Platform.

Innaput Actors Utilize Remote Access Trojan Since 2016, Presumably Targeting Victim Files

Overview: ASERT recently identified a campaign targeting commercial manufacturing in the US and potentially Europe in late 2017. The threat actors used phishing and downloader(s) to install a Remote Access Trojan (RAT) ASERT calls InnaputRAT on the target’s machine. The RAT contained a series of […]

Sigfox USA President Says Executive Departures Are Typical of Startups

Can the IoT market support both LPWA and cellular? Yes, says Sigfox, but forming partnerships with other IoT firms may be the best option.
