Video Delivery Drove Comcast Toward Virtualization

It now runs major OpenStack clouds and works with AWS and Microsoft Azure.

It supports AWS, with Rovius Cloud for Google and Microsoft Azure available later this year.

The move was a first for Microsoft as it grows open source support.

If you’ve been working in IT for the past few years, you know how much the security landscape has changed recently. Application infrastructures — once hosted in on-premises data centers — now sit in highly dynamic public and private multicloud environments. With the rise of mobile devices, bring-your-own-device (BYOD) policies, and Internet of Things (IoT), end-user environments are no longer primarily about corporately managed desktops. And attackers are growing more sophisticated by the day.

In such an atmosphere, traditional network perimeter security ceases to provide adequate protection.

That’s where the VMware solutions come in. At the heart of the solutions is a ubiquitous software layer across application infrastructure and endpoints that’s independent of the underlying physical infrastructure or location. To really understand how it works, you need to experience it for yourself. And the Transform Security track at vForum Online Fall 2017 on October 18th is the perfect opportunity. As our largest virtual conference, vForum Online gives IT professionals like yourself the chance to take a deep dive into VMware products with breakout sessions, chats with experts, and hands-on labs — all from the comfort of your own desk.

With this free half-day event just weeks away, it’s time to …

About 70 years ago, the world was introduced to the digital computer revolution, which made computing millions of operations as fast and easy as 1+2. This simplified many time-consuming activities and brought about new applications that amazed the world. Then, about 40 years ago, the advent of networks and internetworks (the Internet) revolutionized the way we work and live by connecting the hundreds of millions of computing devices that have invaded our homes and offices.

Today, we are at the beginning of a new revolution, that of the Internet of Things (IoT), the extent of which might only be limited by our imagination. Internet of Things refers to the rapidly growing network of objects connected through the Internet. The objects can be sensors such as a thermostat or a speed meter, or actuators that open a valve or that turn on/off a light or a motor. These devices are embedded in our everyday home and workplace equipment (refrigerators, machines, cars, road infrastructure, etc.) or even the human body. These devices connected to powerful computers in the “cloud” might change our world in a way that few of us can imagine today. It is estimated that …

For the past decade, the loudest arguments waged regarding the Four (Amazon, Apple, Facebook, and Google) were about which CEO was more Jesus-like or should run for president. These platforms brought down autocrats, were going to cure death and put a man on Mars, because they are just so awesome. Media outlets were coopted into […]

The post Response: The Worm Has Turned Against Tech Companies appeared first on EtherealMind.

The platform now includes support for Microsoft Azure, Google Cloud Platform, IBM Bluemix, and Alibaba Cloud.

Comedy, surely. Microsoft's 15 years of collaboration has been disastrous. Can I say 'SharePoint'?

The post Response: Microsoft Teams is replacing Skype for Business to put more pressure on Slack appeared first on EtherealMind.

Data Hub doesn’t move data around, but processes it where it resides.

Data Box also joins the list of ways to transfer information to the cloud.

I’m not a big user of Apple’s Automator tool, but sometimes it’s very useful. For example, A10 Networks load balancers make it pretty easy for administrators to capture packets without having to remember the syntax and appropriate command flags for a tcpdump command in the shell. Downloading the .pcap file is pretty easy too (especially using the web interface), but what gets downloaded is not a single file; instead, it’s a gzip file containing a tar file, which in turn contains (for the hardware I use) seventeen packet capture files. In this post I’ll explain what these files are, why it’s annoying, and how I work around this in macOS.
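For readers who would rather script the unpacking than build an Automator workflow, here is a minimal Python sketch of that step. It assumes the download is a gzip-compressed tar archive whose capture members end in `.pcap`; the function name `extract_pcaps` and the `.pcap` suffix are assumptions for illustration, not anything A10 documents.

```python
import os
import tarfile


def extract_pcaps(archive_path, dest_dir):
    """Unpack every .pcap member of a .tar.gz archive into dest_dir.

    Returns the sorted list of extracted file names (no directory parts).
    Assumes capture files are named *.pcap (hypothetical convention).
    """
    os.makedirs(dest_dir, exist_ok=True)
    extracted = []
    # Mode "r:gz" strips the gzip layer and reads the tar layer in one step.
    with tarfile.open(archive_path, mode="r:gz") as archive:
        for member in archive.getmembers():
            if not member.isfile() or not member.name.endswith(".pcap"):
                continue
            # Keep only the base name: flattens the archive's folders and
            # prevents a crafted member path from escaping dest_dir.
            name = os.path.basename(member.name)
            source = archive.extractfile(member)
            with open(os.path.join(dest_dir, name), "wb") as out:
                out.write(source.read())
            extracted.append(name)
    return sorted(extracted)
```

Once the per-core files are on disk, Wireshark's `mergecap` can combine them into one chronologically ordered capture, for example `mergecap -w merged.pcap *.pcap`.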

If you’re wondering how one packet capture turned into sixteen PCAP files, that’s perfectly reasonable and the answer is simple in its own way. The hardware I use has sixteen CPU cores, fifteen of which are used by default to process traffic, and inbound flows are spread across those cores. Thus when taking a packet capture, the system actually requests each core to dump the flows matching the filter specification. Each core effectively has awareness of both the client and server sides of any connection, so both …
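For a core to see both the client and server sides of a connection, the flow-to-core mapping has to be symmetric: a packet and its reply must land on the same core. The Python sketch below shows one generic way to get that property, by putting the two endpoints in a canonical order before hashing. The CRC32 hash and the `core_for_flow` name are illustrative assumptions, the core count of 15 comes from the text, and this is not A10's actual distribution algorithm.

```python
import zlib

DATA_CORES = 15  # per the text: 15 of the 16 cores process traffic by default


def core_for_flow(src_ip, src_port, dst_ip, dst_port, proto="tcp"):
    """Pick a core for a flow so that both directions map to the same core."""
    # Sort the endpoints so (A -> B) and (B -> A) build an identical key.
    low, high = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    key = f"{proto}|{low[0]}:{low[1]}|{high[0]}:{high[1]}".encode()
    # zlib.crc32 is stable across runs, unlike Python's salted hash() on str.
    return zlib.crc32(key) % DATA_CORES


client_to_server = core_for_flow("10.0.0.5", 51515, "192.0.2.10", 443)
server_to_client = core_for_flow("192.0.2.10", 443, "10.0.0.5", 51515)
assert client_to_server == server_to_client
```

Because any given flow could be on any core, a capture filter has to be run on every core, which is consistent with the download containing one file per core.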

MapR Technologies has been busy in recent years building out its capabilities as a data platform company that can support a broad range of open-source technologies, from Hadoop and Spark to Hive, and can reach from the data center through the edge and out into the cloud. At the center of its efforts is its Converged Data Platform, which comes with the MapR-FS POSIX file system and includes enterprise-level database and storage designed to handle emerging big data workloads.

At the Strata Data Conference in New York City Sept. 26, company officials are putting their focus …

MapR Bulks Up Database for Modern Apps was written by Nicole Hemsoth at The Next Platform.

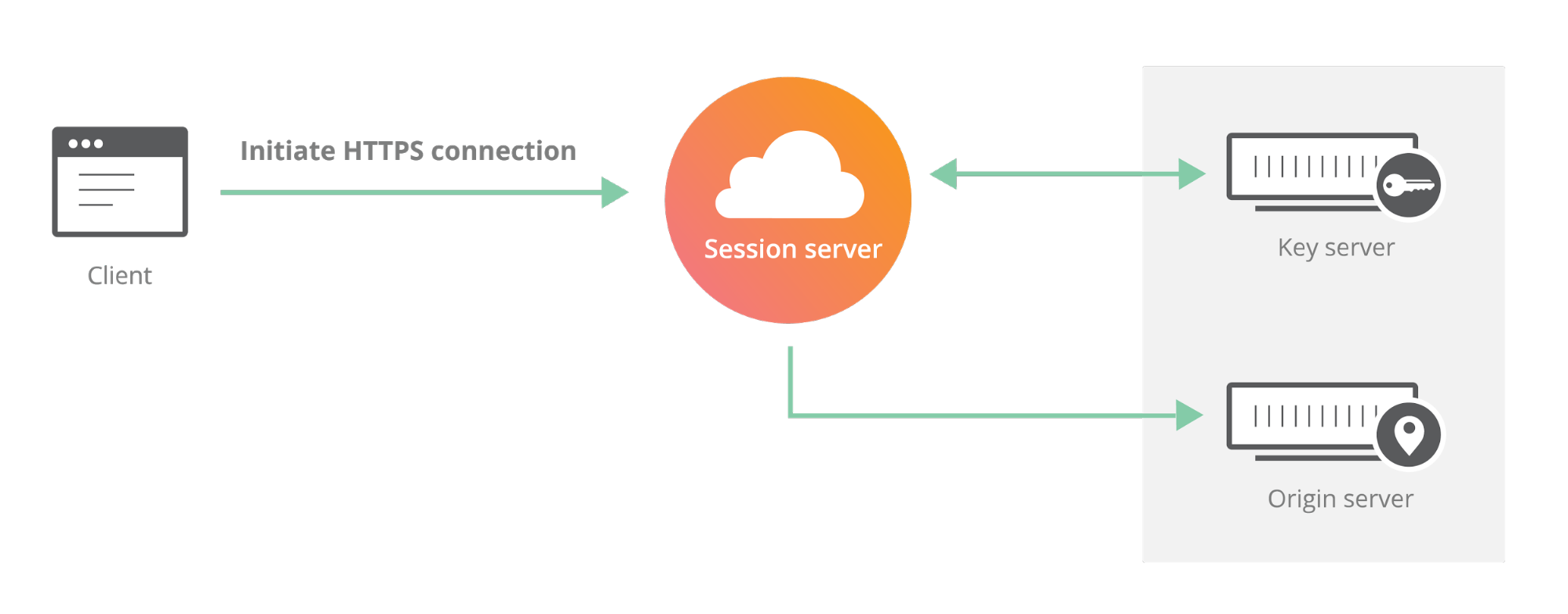

Today we announced Geo Key Manager, a feature that gives customers unprecedented control over where their private keys are stored when uploaded to Cloudflare. This feature builds on a previous Cloudflare innovation called Keyless SSL and a novel cryptographic access control mechanism based on both identity-based encryption and broadcast encryption. In this post we’ll explain the technical details of this feature, the first of its kind in the industry, and how Cloudflare leveraged its existing network and technologies to build it.
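At the policy level, the idea is that a key is tagged with the regions allowed to use it, and only data centers in those regions can obtain it. The Python sketch below models only that policy check, using hypothetical data center names and region labels. It does not implement the identity-based or broadcast encryption the post names; as described there, enforcement is cryptographic rather than a lookup like `permits`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCenter:
    name: str    # hypothetical identifier, e.g. "dc-fra"
    region: str  # hypothetical region label, e.g. "EU"


@dataclass(frozen=True)
class KeyPolicy:
    allowed_regions: frozenset  # regions whose data centers may use the key

    def permits(self, dc: DataCenter) -> bool:
        return dc.region in self.allowed_regions


def authorized_data_centers(policy, data_centers):
    """Return the sorted names of data centers the policy allows."""
    return sorted(dc.name for dc in data_centers if policy.permits(dc))


fleet = [
    DataCenter("dc-fra", "EU"),
    DataCenter("dc-ams", "EU"),
    DataCenter("dc-iad", "US"),
    DataCenter("dc-sin", "APAC"),
]
eu_only = KeyPolicy(frozenset({"EU"}))
print(authorized_data_centers(eu_only, fleet))  # ['dc-ams', 'dc-fra']
```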

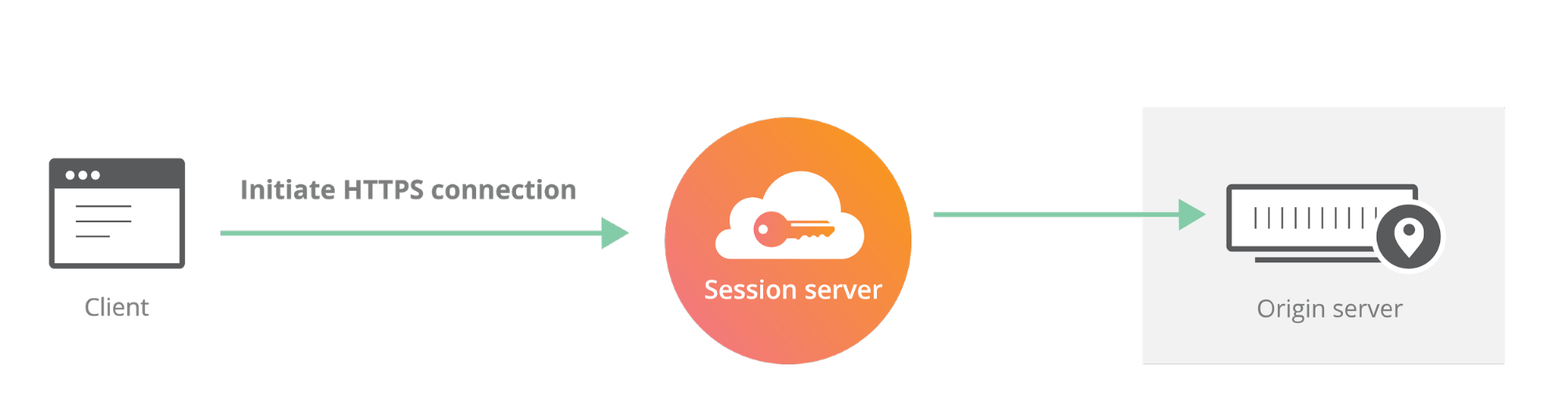

Cloudflare launched Keyless SSL three years ago to wide acclaim. With Keyless SSL, customers are able to take advantage of the full benefits of Cloudflare’s network while keeping their HTTPS private keys inside their own infrastructure. Keyless SSL has been popular with customers in industries with regulations around the control of access to private keys, such as the financial industry. Keyless SSL adoption has been slower outside these regulated industries, partly because it requires customers to run custom software (the key server) inside their infrastructure.
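The essence of Keyless SSL is a split: the edge server runs the TLS handshake but hands the single private-key operation to the customer's key server and gets back only the result. The sketch below illustrates that split with textbook RSA and deliberately tiny primes (p = 61, q = 53). It is insecure, the class names are hypothetical, the direct method call stands in for a network connection, and the real key server protocol is not shown. It needs Python 3.8+ for `pow(e, -1, phi)`.

```python
class KeyServer:
    """Runs in the customer's infrastructure; sole holder of the private exponent."""

    def __init__(self, p, q, e):
        self.n = p * q
        self.e = e
        phi = (p - 1) * (q - 1)
        self._d = pow(e, -1, phi)  # private exponent; never handed to the edge

    def public_key(self):
        return self.n, self.e

    def sign(self, digest):
        # Textbook RSA signature: digest^d mod n (no padding; toy only).
        return pow(digest, self._d, self.n)


class EdgeServer:
    """Runs at the CDN edge; knows only the public key (n, e)."""

    def __init__(self, key_server):
        self.key_server = key_server  # stands in for a network link
        self.n, self.e = key_server.public_key()

    def handshake_signature(self, handshake_bytes):
        # Toy stand-in for hashing the handshake: reduce it below n.
        digest = int.from_bytes(handshake_bytes, "big") % self.n
        signature = self.key_server.sign(digest)  # the remote private-key operation
        # Anyone holding the public key can check the result.
        if pow(signature, self.e, self.n) != digest:
            raise ValueError("key server returned an invalid signature")
        return signature


edge = EdgeServer(KeyServer(p=61, q=53, e=17))
print(edge.handshake_signature(b"ClientHello+ServerHello"))
```

The point of the design is visible in the object graph: `EdgeServer` never stores `_d`, yet it can complete the signing step by asking the key server.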

One of the motivating use cases for Keyless SSL was the expectation that customers may not trust a third party like Cloudflare with their …

Cloudflare’s customers recognize that they need to protect the confidentiality and integrity of communications with their web visitors. The widely accepted solution is to use the SSL/TLS protocol to establish an encrypted HTTPS session over which requests can then be sent securely. This protects against eavesdropping because only those with access to the “private key” can legitimately identify themselves to browsers and decrypt encrypted requests.

Today, more than half of all traffic on the web uses HTTPS, but this was not always the case. In the early days of SSL, the protocol was viewed as slow because each encrypted request required two round trips between the user’s browser and web server. Companies like Cloudflare solved this problem by placing web servers close to end users and using session resumption to eliminate those round trips for all but the very first request.
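The payoff of shorter round trips and session resumption can be made concrete with a little arithmetic. The Python sketch below takes the two round trips per full handshake from the text. Charging a resumed handshake one round trip is an assumption modeled on the TLS 1.2 abbreviated handshake, and the 150 ms and 20 ms RTTs are purely illustrative.

```python
FULL_HANDSHAKE_RTTS = 2     # from the text: two round trips for a full handshake
RESUMED_HANDSHAKE_RTTS = 1  # assumption: TLS 1.2 abbreviated (resumed) handshake


def handshake_cost_ms(rtt_ms, connections, resumption):
    """Total handshake latency for a visitor opening `connections` connections."""
    if connections <= 0:
        return 0
    if not resumption:
        return connections * FULL_HANDSHAKE_RTTS * rtt_ms
    # With resumption, only the first connection pays for a full handshake.
    first = FULL_HANDSHAKE_RTTS * rtt_ms
    rest = (connections - 1) * RESUMED_HANDSHAKE_RTTS * rtt_ms
    return first + rest


# Distant origin without resumption vs. nearby edge with resumption.
print(handshake_cost_ms(150, 5, resumption=False))  # 1500
print(handshake_cost_ms(20, 5, resumption=True))    # 120
```

Both levers matter: proximity shrinks every round trip, and resumption cuts how many full handshakes a visitor pays for.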

As Internet adoption grew around the world, with companies increasingly serving global and more remote audiences, providers like Cloudflare had to continue expanding their physical footprint to keep up with demand. As of the date this blog post was published, Cloudflare has data centers in over 55 countries, and we continue …