It is Always Something (RFC1925, Rule 7)

While those working in the network engineering world are quite familiar with the expression “it is always something!,” defining this (often exasperated) declaration is a little trickier. The wise folks in the IETF, however, have provided a definition in RFC1925. Rule 7, “it is always something,” is quickly followed with a corollary, rule 7a, which says: “Good, Fast, Cheap: Pick any two (you can’t have all three).”

You can either quickly build a network which works well and is therefore expensive, or take your time and build a network that is cheap and still does not work well, or… Well, you get the idea. There are many other instances of these sorts of three-way tradeoffs in the real world, such as the (in)famous CAP theorem, which states a database can be any two of consistent, available, and partitionable (or partitioned), but not all three. Eventual consistency, and problems from microloops to surprise package deliveries (when you thought you ordered one thing, but another was placed in your cart because of a database inconsistency) have resulted. Another form of this three-way tradeoff is the much less famous, but equally true, state, optimization, surface tradeoff trio in network design.

It is possible, however, to build a system Continue reading

Advanced Image Management in Docker Hub

We are excited to announce the latest feature for Docker Pro and Team users, our new Advanced Image Management Dashboard available on Docker Hub. The new dashboard gives developers a new level of access to all of the content they have stored in Docker Hub, with more fine-grained control over removing old content and exploring old versions of pushed images.

Historically in Docker Hub we have had visibility into the latest version of a tag that a user has pushed, but what has been very hard to see or even understand is what happened to all of those old things that you pushed. When you push an image to Docker Hub you are pushing a manifest, a list of all of the layers of your image, and the layers themselves.

When you are updating an existing tag, only the new layers will be pushed along with the new manifest which references these layers. This new manifest will be given the tag you specify when you push, such as bengotch/simplewhale:latest. But this does not mean that all of those old manifests which point at the previous layers that made up your image are removed from Hub. These Continue reading

Browser Isolation for teams of all sizes

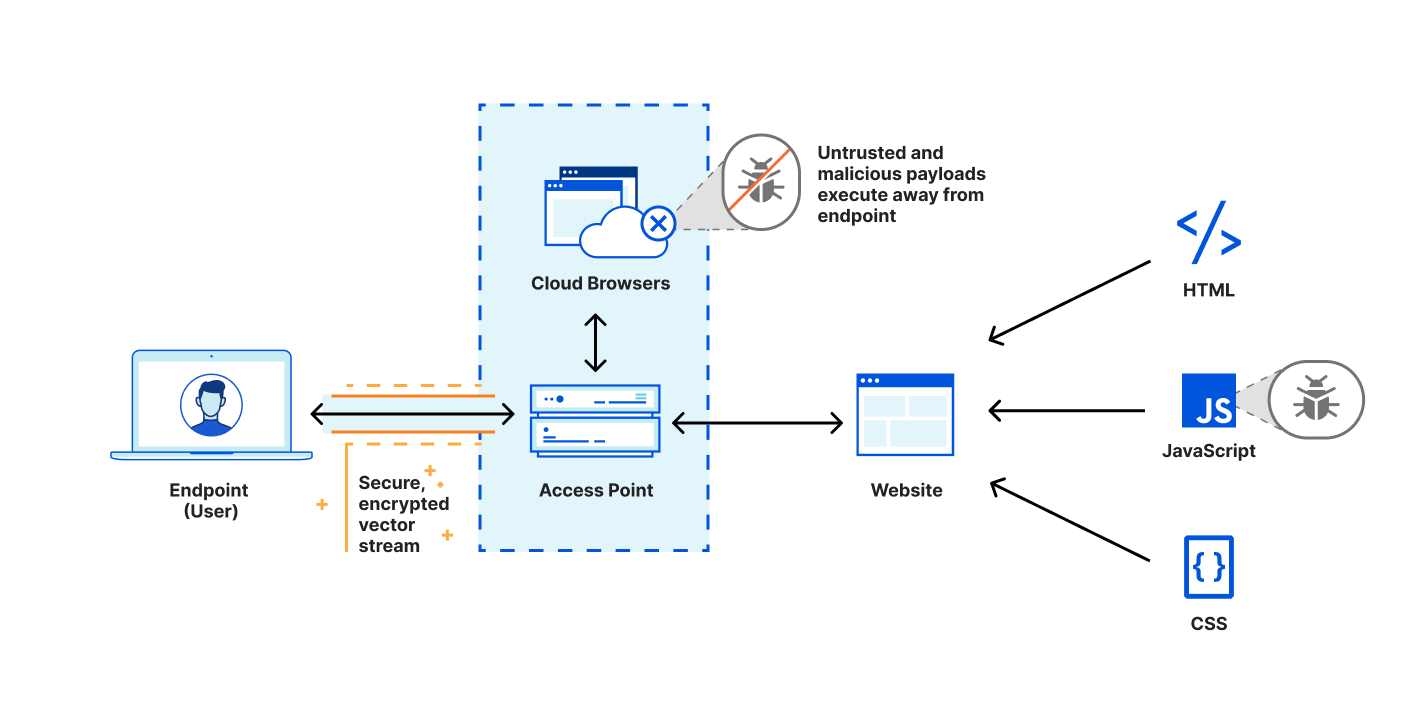

Every Internet-connected organization relies on web browsers to operate: accepting transactions, engaging with customers, or working with sensitive data. The very act of clicking a link triggers your web browser to download and execute a large bundle of unknown code on your local device.

IT organizations have always been on the back foot while defending themselves from security threats. It is not a question of ‘if’, but ‘when’ the next zero-day vulnerability will compromise a web browser. How can IT organizations protect their users and data from unknown threats without over-blocking every potential risk? The solution is to shift the burden of executing untrusted code from the user’s device to a remote isolated browser.

Bringing Remote Browser Isolation to teams of any size

Today we are excited to announce that Cloudflare Browser Isolation is now available as an add-on within the Cloudflare for Teams suite of zero trust security and secure web browsing services. Teams of any size, from startups to large enterprises, can benefit from reliable and safe browsing without changing their preferred web browser or setting up complex network topologies.

Remote Browsers must be reliable

Running sensitive workloads in secure environments is nothing new, and Remote Browser Isolation (RBI) Continue reading

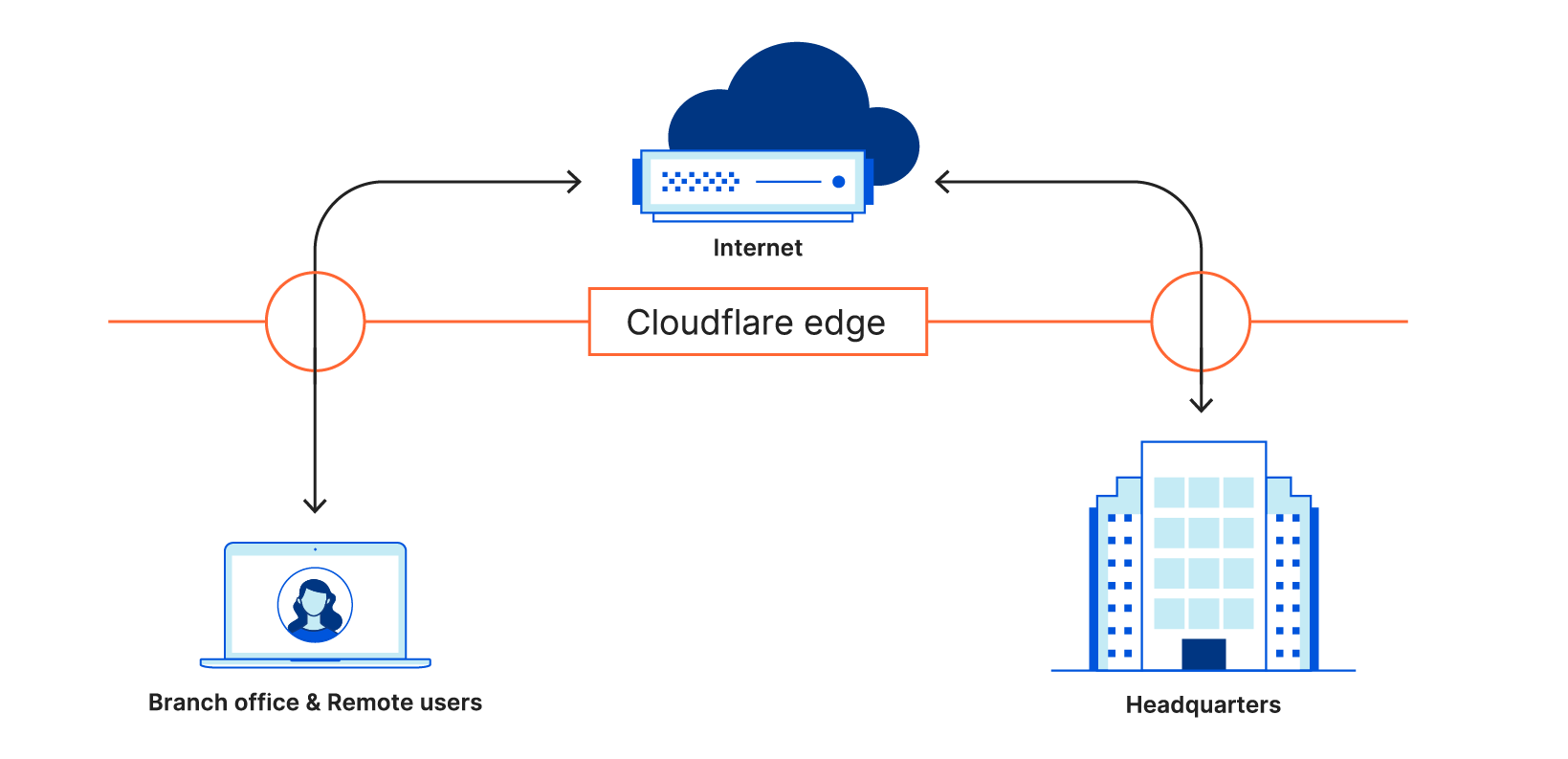

New device security partnerships for Cloudflare One

Last October, we announced Cloudflare One, our comprehensive, cloud-based network-as-a-service solution that is secure, fast, reliable, and defines the future of the corporate network. Cloudflare One consists of two components: network services like Magic WAN and Magic Transit that protect data centers and branch offices and connect them to the Internet, and Cloudflare for Teams, which secures corporate applications, devices, and employees working on the Internet. Today, we are excited to announce new integrations with VMware Carbon Black, CrowdStrike, and SentinelOne to pair with our existing Tanium integration. Cloudflare for Teams customers can now use these integrations to restrict access to their applications based on security signals from their devices.

Protecting applications with Cloudflare for Teams

When the COVID-19 pandemic unfolded, many of us started to work remotely. Employees left the office, but the network and applications they worked with didn’t. VPNs quickly began folding under heavy load from backhauling traffic, and reconfiguring firewalls became an overnight IT nightmare.

This has accelerated many organizations' timelines for adopting a Zero Trust based network architecture. Zero Trust means to mistrust every connection request to a corporate resource, and instead intercept and only grant access if criteria defined by an administrator are Continue reading

Announcing antivirus in Cloudflare Gateway

Today we’re announcing support for malware detection and prevention directly from the Cloudflare edge, giving Gateway users an additional line of defense against security threats.

Cloudflare Gateway protects employees and data from threats on the Internet, and it does so without sacrificing performance for security. Instead of backhauling traffic to a central location, Gateway customers connect to one of Cloudflare’s data centers in 200 cities around the world where our network can apply content and security policies to protect their Internet-bound traffic.

Last year, Gateway expanded from a secure DNS filtering solution to a full Secure Web Gateway capable of protecting every user’s HTTP traffic as well. This enables admins to detect and block not only threats at the DNS layer, but malicious URLs and undesired file types as well. Moreover, admins now have the ability to create high-impact, company-wide policies that protect all users with one click, or they can create more granular rules based on user identity.

Earlier this month, we launched application policies in Cloudflare Gateway to make it easier for administrators to block specific web applications. With this feature, administrators can block those applications commonly used to distribute malware, such as public cloud file storage.

These Continue reading

Anatomy of a Targeted Ransomware Attack

Imagine your most critical systems suddenly stop operating, bringing your entire business to a screeching halt. And then someone demands a ransom to get your systems working again. Or someone launches a DDoS against you and demands ransom to make it stop. That’s the world of ransomware and ransom DDoS.

So what exactly is ransomware? It is malicious software that encrypts files on computers making them useless until they are decrypted. In some cases, ransomware could even corrupt and destroy data. A ransom note is then placed on compromised systems with instructions to pay a ransom in exchange for a decryption utility that can be used to restore encrypted files. Payment is often in the form of Bitcoin or other cryptocurrency.

Recently, Cloudflare onboarded and protected a Fortune 500 customer from a targeted Ransom DDoS (RDDoS) attack -- a different type of extortion attack.

Prior to joining Cloudflare, I responded to and investigated a large number of data breaches and ransomware attacks for clients across various industries, including healthcare, financial, and education, to name a few. I’ve been in the trenches analyzing these types of attacks and working closely with clients to help them recover from the aftermath.

In this Continue reading

Noction launches IRP 3.11, featuring the Remote-triggered Blackholing capability

Noction is pleased to announce that the Intelligent Routing Platform v 3.11 is now generally available. This version brings to the table the

The post Noction launches IRP 3.11, featuring the Remote-triggered Blackholing capability appeared first on Noction.

Unequal-Cost Multipath in Link State Protocols

TL&DR: You get unequal-cost multipath for free with distance-vector routing protocols. Implementing it in link state routing protocols is an order of magnitude more CPU-consuming.

Continuing our exploration of the Unequal-Cost Multipath world, why was it implemented in EIGRP decades ago, but not in OSPF or IS-IS?

Ignoring for the moment the “does it make sense” dilemma: finding downstream paths (paths strictly shorter than the current best path) is a side effect of running distance vector algorithms.
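A minimal sketch of why this comes for free, assuming hypothetical neighbor names and costs: every neighbor already advertises its own distance to the destination, so deciding whether it is downstream is a single comparison against our best-path cost. This mirrors the kind of check EIGRP's feasibility condition makes; it is an illustration, not any vendor's implementation.

```python
def downstream_next_hops(neighbors):
    """Return (best_cost, usable) where usable maps each loop-free
    next hop to the total cost of the path through it.

    neighbors: {name: (link_cost_to_neighbor, distance_neighbor_advertises)}
    """
    totals = {name: link + adv for name, (link, adv) in neighbors.items()}
    best_cost = min(totals.values())
    # A neighbor is downstream when the distance it advertises is strictly
    # lower than our best-path cost, so its path cannot lead back through us.
    usable = {
        name: totals[name]
        for name, (_link, adv) in neighbors.items()
        if adv < best_cost
    }
    return best_cost, usable


# Hypothetical topology: three neighbors toward one destination.
neighbors = {"A": (10, 10), "B": (10, 15), "C": (5, 30)}
best, usable = downstream_next_hops(neighbors)
print(best, usable)
```

Here neighbor C is rejected even though its link is the cheapest, because the distance it advertises (30) is not lower than our best cost (20). A link-state router has no such advertised distance to compare against; it would have to compute each neighbor's distance itself by running SPF from that neighbor's point of view.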

Shape the Internet’s Future with the Early Career Fellowship

Imagine a world where a global roster of Internet champions can stand up against the threats to the Internet.

This ideal was the inspiration for our new flagship program – the Early Career Fellowship!

This groundbreaking fellowship empowers a diverse new generation of Internet thinkers and doers.

The Early Career Fellows will have the opportunity to think, learning from Internet luminaries, today’s leading thinkers and organizations. They’ll explore topics like the Internet Ecosystem, Project Management & Advocacy, and the Internet Way of Thinking with Professor Dr Laura DeNardis of American University, scholars from the Oxford Internet Institute, and experts from the Internet Society, Diplo/GIP, Pyramid Learning and 89up.

The Fellows will also have the opportunity to do, getting direct support to nurture their professional growth. They’ll attend practical modules to help develop their own projects – bringing their ideas to life as they address the real-world challenges facing the future of the Internet.

These components, complemented with discussion, mentorship and leadership tracks, will:

- Increase the capacity of Internet champions through targeted educational and leadership development activities – and expand their expertise to support the development of the Internet

- Empower a cadre of talented early career professionals, giving Continue reading

Measuring ROAs and ROV

In 2020 APNIC Labs set up a measurement system for the validators. What we were trying to provide was a detailed view of where invalid routes were being propagated, and also take a longitudinal view of how things are changing over time. The report is at https://stats.labs.apnic.net/rpki and the description of the measurement is at https://www.potaroo.net/ispcol/2020-06/rov.html. I'd like to update this description with some work we’ve done on this measurement platform in recent months.

Automate Leaf and Spine Deployment – Part6

The 6th post in the ‘Automate Leaf and Spine Deployment’ series goes through the validation of the fabric once deployment has been completed. A desired state validation file is built from the contents of the variable files and compared against the devices’ actual state to determine whether the fabric and all the services that run on top of it comply.
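The comparison step can be sketched roughly as follows. This is a generic illustration, not the tooling used in the series: the function name, the dictionary layout, and the sample BGP and VLAN values are all hypothetical.

```python
def compare_state(desired, actual, path=""):
    """Return a list of mismatches between desired and actual state.

    Only keys present in `desired` are checked; extra keys in `actual`
    are ignored, since the desired state is the source of truth.
    """
    mismatches = []
    for key, want in desired.items():
        where = f"{path}/{key}"
        if key not in actual:
            mismatches.append(f"{where}: missing (expected {want!r})")
        elif isinstance(want, dict) and isinstance(actual[key], dict):
            # Recurse into nested sections such as per-neighbor state.
            mismatches.extend(compare_state(want, actual[key], where))
        elif actual[key] != want:
            mismatches.append(f"{where}: expected {want!r}, got {actual[key]!r}")
    return mismatches


# Hypothetical desired state (from variable files) and actual device state.
desired = {
    "bgp": {"10.0.0.1": "Established", "10.0.0.2": "Established"},
    "vlans": {"10": "active"},
}
actual = {
    "bgp": {"10.0.0.1": "Established", "10.0.0.2": "Idle"},
    "vlans": {},
}
report = compare_state(desired, actual)
for line in report:
    print(line)
```

An empty report means the device complies; any entry names the exact path that deviates from the desired state.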

How to Implement the Principle of Least Privilege With CloudFormation StackSets

This article was originally posted on the Amazon Web Services Security Blog.

AWS CloudFormation is a service that lets you create a collection of related Amazon Web Services and third-party resources and provision them in an orderly and predictable fashion. A typical access control pattern is to delegate permissions for users to interact with CloudFormation and remove or limit their permissions to provision resources directly. You can grant the AWS CloudFormation service permission to create resources by creating a role that the user passes to CloudFormation when a stack or stack set is created. This can be used to ensure that only pre-authorized services and resources are provisioned in your AWS account. In this post, I show you how to conform to the principle of least privilege while still allowing users to use CloudFormation to create the resources they need.
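As a rough sketch of the delegation pattern described above, the snippet below builds an IAM policy document that lets a user call CloudFormation and pass one specific service role to it, and nothing else. The account ID, role name, and choice of CloudFormation actions are hypothetical placeholders, and this is not the policy from the original post.

```python
import json

# Hypothetical account ID and role name, for illustration only.
SERVICE_ROLE_ARN = (
    "arn:aws:iam::123456789012:role/ExampleCloudFormationServiceRole"
)

user_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            # The user may drive CloudFormation itself...
            "Sid": "UseCloudFormation",
            "Effect": "Allow",
            "Action": [
                "cloudformation:CreateStack",
                "cloudformation:DescribeStacks",
            ],
            "Resource": "*",
        },
        {
            # ...but may hand over only this one role, and only to
            # the CloudFormation service.
            "Sid": "PassOnlyTheServiceRoleToCloudFormation",
            "Effect": "Allow",
            "Action": "iam:PassRole",
            "Resource": SERVICE_ROLE_ARN,
            "Condition": {
                "StringEquals": {
                    "iam:PassedToService": "cloudformation.amazonaws.com"
                }
            },
        },
    ],
}

print(json.dumps(user_policy, indent=2))
```

The user holds no permissions to create resources directly; what can actually be provisioned is determined by the permissions attached to the service role.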

Network Break 325: VMware Buys API Security Startup; Gartner Bullish On SONiC Network OS

This week's Network Break podcast discusses VMware's purchase of API security startup Mesh7, looks at a new security option for third-party Web components from Tala Security, and analyzes why Gartner is so bullish on the SONiC network OS. We also speculate on motivations behind Google's real estate spending spree, and hand out a nice selection of virtual donuts.

The post Network Break 325: VMware Buys API Security Startup; Gartner Bullish On SONiC Network OS appeared first on Packet Pushers.

Google Says The SOC Is The New Motherboard

For two decades now, Google has demonstrated perhaps more than any other company that the datacenter is the new computer, what the search engine giant called a “warehouse-scale machine” way back in 2009 with a paper written by Urs Hölzle, who was and still is senior vice president for Technical Infrastructure at Google, and Luiz André Barroso, who is vice president of engineering for the core products at Google and who was a researcher at Digital Equipment and Compaq before that. …

Google Says The SOC Is The New Motherboard was written by Timothy Prickett Morgan at The Next Platform.