
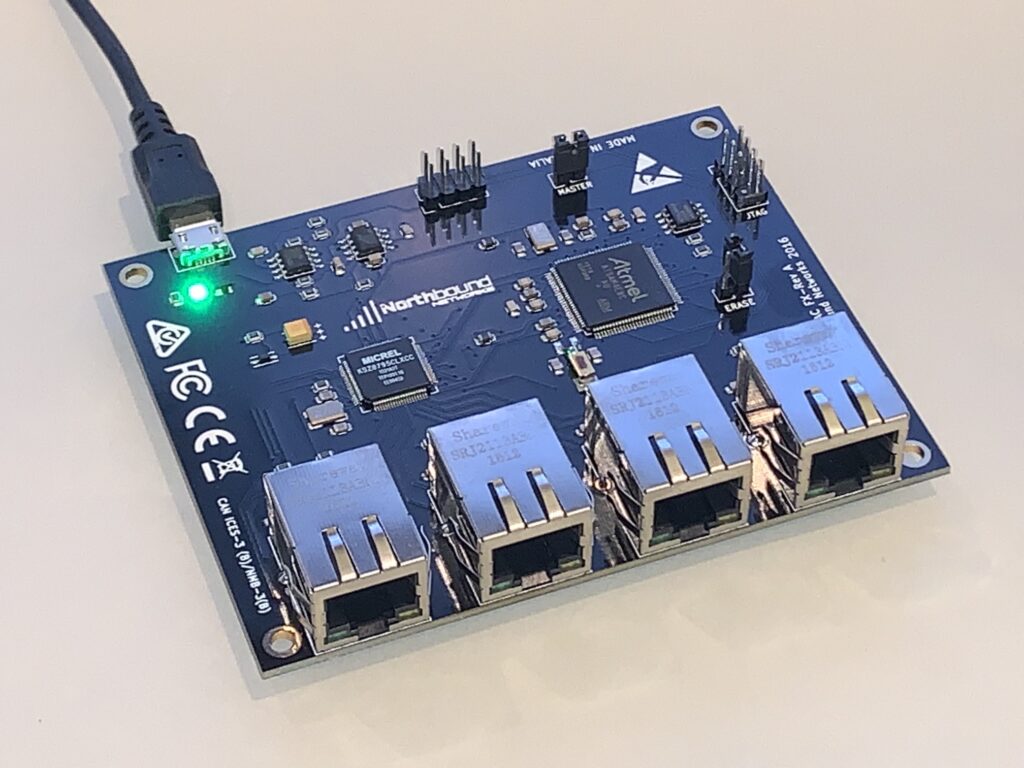

Digging through my office looking for some other piece of technology I had misplaced, I stumbled across a box containing a Northbound Networks Zodiac FX, a small 4-port Fast Ethernet OpenFlow SDN switch which I had picked up after backing a 2015 Kickstarter campaign.

These were a pretty cool idea. At the time, OpenFlow (OF) was the hottest thing around, everything was being SDN-washed, and the idea that a regular user like me could afford actual hardware with OF capabilities to toy with in the home lab was beyond belief. Of course, it was possible to virtualize OF with Mininet, but there’s something about using a real switch that goes beyond that. Even though, as you’ll see in a future post, I ended up wasting that opportunity, I am still honored to have backed it, and my hat is off to Northbound Networks’ founder Paul Zanna for what he has accomplished.

Paying My Respects

With that in mind, I’m sad to note that when I went to the Northbound Networks website, I discovered that sometime around August 2020 the company stopped manufacturing SDN hardware.

Since the original Zodiac FX campaign, Paul had expanded the available products to include an 802.